Abstract

Grading fruit freshness is an innate human ability. However, little work has focused on building a fruit grading system from digital images with deep learning. The algorithm proposed in this article has the potential to reduce fruit waste. In this article, we present a comprehensive analysis of a freshness grading scheme using computer vision and deep learning. Our grading scheme is based on visual analysis of digital images. Several deep learning methods are exploited in this project, including ResNet, VGG, and GoogLeNet. AlexNet is selected as the base network, and YOLO is employed for extracting the region of interest (ROI) from digital images. On this basis, we construct a novel neural network model for fruit detection and freshness grading across multiple classes of fruits. When the fruit images were fed into our model, AlexNet took the leading position in training, whereas VGG performed best in validation.

Introduction

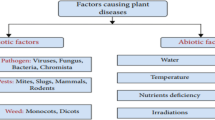

Given the significance of food in our daily lives, fruit grading is crucial but time-consuming. Automatic grading using computerised approaches is regarded as a solution to this problem that will save human labour. There is evidence that, when fruit deteriorates, it goes through a series of biochemical transformations that change its physical condition and chemical composition, e.g., its nutritional content.

Fruit grading methods fall into two categories: non-visual and visual approaches. Non-visual grading approaches mainly concentrate on aroma, chemicals, and tactile impression. Fruit spoilage is by nature a biochemical process in which natural pigments are transformed through various reactions into other chemicals, resulting in changes of colour. Identifying fruit spoilage is an innate ability of the human perception system. Freshness is regarded as the desirability and acceptability of a portion for consumption, and it helps to identify whether the given fruits are edible or not [1].

Research has revealed a strong relationship between microorganisms and fruit spoilage. The organisms involved encompass aerobic psychrotrophic Gram-negative bacteria, which secrete extracellular hydrolytic enzymes that break down plant cell walls, as well as heterofermentative lactobacilli, spore-forming bacteria, yeasts, and moulds. Fruit degeneration is a consequence of biochemical reactions, i.e., the breakdown of a structural acidic heteropolysaccharide found in the cell walls of terrestrial plants and consisting chiefly of galacturonic acid. Starch (amylum) and sugars (i.e., polymeric carbohydrates with the same purposes) are then metabolised, producing lactic acid (i.e., an acid that is a metabolic intermediate and the end product of glycolysis, which releases energy anaerobically) and ethanol [2]. Colonisation and lesions induced by microbe dissemination are frequently observed, and infestation is a primary cause of spoilage in postharvest fruits [3].

Besides, a lack of nutrients results in dark spots, e.g., insufficient calcium leads to apple cork spots [4]. Exposure to oxygen is another determinant: an enzyme known as polyphenol oxidase (PPO) triggers a chain of biochemical reactions involving proteins, pigments, fatty acids, and lipids, which leads to fading of the fruit colours as well as an undesirable taste and smell [5].

The established research evidence shows that, when fruit deteriorates, it goes through a series of biochemical transformations that change its physical condition, e.g., visual features including colour and shape; most of these features can be extracted from digital images. A computer vision-based approach is therefore regarded as the most economical solution.

Previously, the scale-invariant feature transform (SIFT), along with the colour and shape of fruits [21], has been applied to fruit recognition, with K-nearest neighbours (KNN) and support vector machine (SVM) employed for the classification. Despite attaining high accuracy, this approach takes input images of size \(90\times 90\), which is low enough that information might be lost. With low-resolution images, an individual pixel may contribute significantly to the final result, which makes the prediction sensitive to noise. It is well known that KNN and SVM are vulnerable to the curse of dimensionality, where the growth of feature dimensions has a massive impact on performance; meanwhile, high-resolution images are likely to have rich visual features.

Given the advancement of deep learning, fruit grading algorithms are expected to produce satisfactory accuracy in a timely manner [6, 7]. State-of-the-art computer vision work on automatic fruit/vegetable grading falls into several categories [8]: detection of fruit/vegetable diseases and defects caused by foreign biological invasion [10], fruit/vegetable classification for assorted horticultural products [11], estimation of fruit/vegetable nitrogen content [12], real-time tracking of fruit/vegetable objects [13], etc. Most approaches to fruit grading rely on pattern classification. Pertaining to fruit quality grading, the focus is on not only the freshness, but also the overall visual changes. Despite the recent rise in popularity of deep learning, more than half of the surveyed work [14] did not use deep learning methods.

Fruit recognition using computer vision and deep learning is an interesting research field [14]. Golden Delicious apples have been graded using SVM + KNN [19]. However, that project distinguished only two grades, healthy and defective, and considered only one class of fruit. Another project was developed for tomato grading [20] using the texture, colour, and shape extracted from digital images; it likewise proposed a binary classification of the fruits.

Deep learning, which is extremely powerful in image recognition and classification [23], was found useful in identifying conditions of citrus leaves [22]. YOLO [24] was adopted for fruit and vegetable recognition. YOLO is faster than other approaches, achieving 40 fps (frames per second) on videos, which makes it suitable for real-time applications. However, that work is constrained to fruits that remain connected to their biological hosts, e.g., hanging on branches; it does not take into account scenarios where fruits have been picked and are in the ongoing process of decaying. VGG was used for fruit recognition [25], and the result shows that a convolutional neural network can achieve high accuracy when it goes deep. In contrast, a shallow convolutional neural network [26] consisting of four convolution and pooling layers followed by two fully connected layers was suggested. However, the source images in that experiment are simple, and all fruits are placed ideally at a static position against a pure white background.

An automatic grading system was developed for olives using the discrete wavelet transform and statistical texture features [27]. Another work [28] successfully addressed raspberry recognition using deep learning, namely, a nine-layer neural network consisting of three convolutional and pooling layers together with one input, one dense, and one output layer.

The decay of mandarins is affected by the fungus Penicillium digitatum; one contribution [29] is dedicated to early detection of this infection by examining the visual features of decay. The visual elements are captured and processed by a combination of decision trees. However, these experiments were conducted on only one class of fruit. Another problem is that the grading mechanism is a classification model which treats the fruit as being either fresh or rotten/defective. We believe that, since the decay process occurs gradually, the final predictive layer should regress the output rather than perform a classification task.

Motivated by the related work, a novel fruit freshness grading method based on deep learning is proposed in this article. We create a dataset for fruit freshness grading, which comprises frames selected from recorded videos and covers six classes of fruits. After data collection, the images are resized and labelled (regions of interest, object classes, and freshness grades). Four typical augmentations are used: contrast adjustment, sharpening, rotation, and added noise. Our experiments begin with pixel statistics, where the visual appearance of the observed objects is discussed, followed by the implementation of a hierarchical deep learning model, YOLO + Regression-CNN, for fruit freshness grading. The experiments take four base networks into account: VGG [15], AlexNet [16], ResNet [17], and GoogLeNet [18]. The main contributions of this paper are:

- We propose a new approach to grading fruit freshness. Fruit freshness is generally tackled through pattern classification; in this article, CNN-based regression is applied to fruit freshness grading. We detect and classify a given fruit as a visual object for freshness grading.

- We inject noise into fruit images, so that the developed model is capable of resisting the noise introduced in real applications.

The work related to fruit freshness grading has been reviewed in this section. Our dataset is described in Sect. Data Collection, our method is detailed in Sect. Methods, and the experimental results are demonstrated in Sect. Experimental Results. The conclusion of this paper is drawn in Sect. Conclusion.

Data Collection

Different from the existing work, in this article, we propose deep learning algorithms for fruit freshness grading. Deep learning is promising for grading the freshness of multiple classes of fruits, which would significantly reduce human labour. In this section, we describe in detail how we collected visual data and conducted data augmentation before fruit freshness grading. Given the novelty of this research project, suitable fruit data were not available, and thus, we had to collect the data ourselves. We illustrate how we acquired the fruit data and provide empirical evidence on how the dataset represents fruit freshness.

Datasets

The collected dataset consists of six classes of fruits: apple, banana, dragon fruit, orange, pear, and Kiwi fruits. The fruits appear at a wide variety of locations in the images, with various noises, irrelevant adjacent objects, and lighting conditions. We first analyse the relationship between fruit appearance and freshness. Fresh apple peel is low in chlorophyll and carotenoid concentrations [30], and spoilage leads to a gradual degradation of the constituent pigments, which reflect different wavelengths in spectrophotometry. The bright yellow colour of a ripe banana is likely a result of carotenoid accumulation [31]. Excluding water, which represents 60–90% of the weight, the main constituents of orange peel and flesh are pectin, cellulose, and hemicellulose [32, 33]; the pigments are mostly carotenoids and flavonoids, which generate the red appearance of oranges. The exotic exterior look of dragon fruit comes from red-violet betacyanins and yellow betaxanthins [34]. The green colour of Kiwi fruits is a visual manifestation of chlorophylls; their degradation gives rise to pheophytins and pyropheophytins, which render an olive-brown colour to the fruit [35]. The green/yellow peel of a pear is a result of accumulated chlorophylls; once degradation occurs, the chlorophylls break down and blue-black pheophytins and pyropheophytins are produced [35].

In total, we have collected approximately 4,000 images, with around 700 images for each class of fruits. We split the dataset into training and validation sets at the ratio of \(9:1\) (90% for training and 10% for validation). The freshness is graded from 0 to 10.0, with 0 indicating totally rotten (i.e., the fruit colour and smell are stable and will not worsen any further; the fruit is not edible and should be thrown away) and 10.0 indicating complete freshness, as shown in Table 1. In this article, we define the moment when the fruit is harvested as absolute freshness, with the grade 10.0. Total corruption, however, lacks a comparably definitive degree. From the fruit decay experiments, we see that a definitive freshness grade is not available, and that decayed fruits may carry fungus and produce toxins. We therefore treat edibility as the primary condition for a fruit to be recognised.

In this project, we invited ten people to participate in the labelling work. We first sampled a few images (i.e., three images for each class of fruits at different decay stages) and asked the participants to give their grades. We calculated the mean and standard deviation of the proposed freshness grades. For the fruit images with significant grade gaps, e.g., a standard deviation greater than or equal to three, we invited the participants to a second round of grading to narrow down the disagreement. We kept the labels unchanged if the grades proposed by the participants were close to what we had initially labelled, and we adjusted the labels according to the participants’ recommendations if the initially proposed freshness grade was far from the mean. It is assumed that for each sampled image, there is a set of images in which the fruits have a similar freshness grade. We grouped the similar images, and if the freshness grade of a sampled image had to be adjusted, the associated images were adjusted accordingly. Table 1 shows 18 fruit images. Our dataset is available at: https://www.kaggle.com/datasets/dcsyanwq/fuit-freshness.
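The agreement check described above can be sketched in a few lines of Python. This is only an illustration: the function name `review_grades`, the use of the population standard deviation, and the `mean_tolerance` cut-off for "far from the mean" are assumptions, since the article states only the threshold of three for the standard deviation.

```python
from statistics import mean, pstdev


def review_grades(grades, initial_label, std_threshold=3.0, mean_tolerance=1.0):
    """Summarise the participants' grades for one sampled image.

    std_threshold follows the article (standard deviation >= 3 triggers a
    second round); mean_tolerance is a hypothetical cut-off for deciding
    that the initial label is "far from the mean".
    """
    m = mean(grades)
    s = pstdev(grades)  # population standard deviation (an assumption)
    return {
        "mean": m,
        "std": s,
        "second_round": s >= std_threshold,
        "adjust_label": abs(initial_label - m) > mean_tolerance,
    }


# Ten participants who largely agree with the initial label.
consistent = review_grades([7, 7, 8, 8, 7, 8, 7, 8, 7, 8], initial_label=7.0)
# Ten participants who disagree strongly with each other.
disputed = review_grades([1, 9, 1, 9, 1, 9, 1, 9, 1, 9], initial_label=8.0)
```

In this sketch, a sampled image whose result has `second_round` set would go back to the participants, and an image with `adjust_label` set would have its label (and the labels of its grouped images) moved towards the mean.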

Image Quality Enhancement

Many of the source images have low quality, e.g., they are blurred or poorly exposed. Thus, in this article, image enhancement approaches are applied to ensure the quality of the images.

Given a three colour-channel image \(I\left( {x,y,z} \right)\) with pixel \(v\left( {x,y,z} \right)\), there exists a contrast factor \({f}_{\mathrm{contrast}}\) which renders every pixel equal to the average pixel intensity of the whole image when \({f}_{\mathrm{contrast}}=0\), and keeps the intensity unchanged if \({f}_{\mathrm{contrast}}=1\). The intensity variation increases as \({f}_{\mathrm{contrast}}\) rises. Writing \(\bar{v}\) for the average pixel intensity of the image, the relationship between \({f}_{\mathrm{contrast}}\) and the input/output pixel is described as

\[{v}_{\mathrm{out}}\left( {x,y,z} \right)=\bar{v}+{f}_{\mathrm{contrast}}\left(v\left( {x,y,z} \right)-\bar{v}\right)\]

We denote \(v_{\min i}\) as the minimum value and \(v_{\max i}\) as the maximum value in the input image, \(v_{\min o}\) and \(v_{\max o}\) are the minimum and maximum intensity in the output image, respectively, and thus, we have

Here, \({f}_{\mathrm{contrast}}=1.2\) was chosen by human inspection as the degree at which the contrast-adjusted images retain the necessary visual features while being enhanced enough to reveal fine details that may ease neural network training.
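The behaviour of the contrast factor described above (factor 0 yields the mean intensity, factor 1 leaves the image unchanged, larger factors widen the variation) can be illustrated with a minimal Python sketch. It assumes a single-channel image stored as nested lists with 8-bit intensities; the function name `adjust_contrast` and the clamping to the range 0 to 255 are illustrative choices, not details taken from the article.

```python
def adjust_contrast(image, factor):
    """Scale each pixel's distance from the mean intensity by `factor`.

    `image` is a list of rows of grey intensities in [0, 255] (an assumed
    representation; the article works with three colour channels).
    """
    pixels = [v for row in image for v in row]
    mean_v = sum(pixels) / len(pixels)

    def clamp(v):
        # Keep the result inside the 8-bit range (an assumption).
        return max(0.0, min(255.0, v))

    return [[clamp(mean_v + factor * (v - mean_v)) for v in row] for row in image]


sample = [[100, 200], [100, 200]]
flat = adjust_contrast(sample, 0.0)      # every pixel becomes the mean, 150
same = adjust_contrast(sample, 1.0)      # unchanged
enhanced = adjust_contrast(sample, 1.2)  # the factor used in the article
```

With the factor 1.2, the two intensities 100 and 200 move away from their mean of 150, to roughly 90 and 210.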

We evaluated the quality of the contrast-adjusted images subjectively. In this experiment, we found a myriad of photos that are blurry; the blur is reduced through image sharpening. After sharpening, granular details are more evident than in the original image, and we believe that sharpened images render better visual results. Interpolation and extrapolation are utilized in the sharpening [36]. We thus define a filter for image smoothing

Pertaining to any source image \({I}_{\mathrm{source}}\), the convolution result \({I}_{\mathrm{smooth}}\) is expressed as

\[{I}_{\mathrm{smooth}}={I}_{\mathrm{source}}*{\mathrm{kernel}}_{\mathrm{smooth}}\]

where \(*\) denotes the convolution operator. Similar to the contrast process, we define a sharpness factor \({f}_{\mathrm{sharpen}}\), and the derived image \({I}_{\mathrm{blend}}\) is obtained [37] (Table 2) as

\[{I}_{\mathrm{blend}}=\left(1-{f}_{\mathrm{sharpen}}\right){I}_{\mathrm{smooth}}+{f}_{\mathrm{sharpen}}{I}_{\mathrm{source}}\]

where \({f}_{\mathrm{sharpen}}\) controls how the result \({I}_{\mathrm{smooth}}\) is blended with the source image \({I}_{\mathrm{source}}\). In other words, \({f}_{\mathrm{sharpen}}=0\) renders an image completely blurred with \({\mathrm{kernel}}_{\mathrm{smooth}}\), while \({f}_{\mathrm{sharpen}}=1.0\) keeps the image unaltered. Interpolation with \({f}_{\mathrm{sharpen}}\in (0, 1)\) partially blurs the image \({I}_{\mathrm{source}}\), whereas extrapolation with \({f}_{\mathrm{sharpen}}\in (1.0, +\infty )\) inverts the smoothing into sharpening. Since decreasing \({f}_{\mathrm{sharpen}}\) within \((0, 1)\) renders increasingly blurry effects, linear extrapolation with \({f}_{\mathrm{sharpen}}\in (-\infty , 0)\) blurs the image several times more strongly than a single application of \({\mathrm{kernel}}_{\mathrm{smooth}}\).
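The interpolation and extrapolation between a smoothed image and its source can be sketched as follows. This is a hedged illustration only: the smoothing kernel used in the article is not reproduced in this text, so a 3×3 box blur with edge replication stands in for \({\mathrm{kernel}}_{\mathrm{smooth}}\), and the function names `box_blur` and `blend_sharpness` are hypothetical.

```python
def box_blur(image):
    """3x3 mean filter with edge replication (a stand-in for kernel_smooth)."""
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += image[yy][xx]
            row.append(total / 9.0)
        out.append(row)
    return out


def blend_sharpness(image, factor):
    """Blend the blurred and the source image: 0 -> blurred, 1 -> source,
    values above 1 extrapolate towards a sharpened image."""
    smooth = box_blur(image)
    return [
        [max(0.0, min(255.0, (1.0 - factor) * s + factor * v))
         for s, v in zip(smooth_row, source_row)]
        for smooth_row, source_row in zip(smooth, image)
    ]


spot = [[0, 0, 0], [0, 90, 0], [0, 0, 0]]
blurred = blend_sharpness(spot, 0.0)    # centre drops to 10
unchanged = blend_sharpness(spot, 1.0)  # centre stays at 90
sharpened = blend_sharpness(spot, 2.0)  # centre rises to 170
```

The bright centre pixel is flattened by the blur and exaggerated by a factor above 1, which is the inversion of smoothing into sharpening described above.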

Image Augmentation

Image augmentation is a methodology to transform source images into ones with additional information, including scaling, rotating, cropping, and adding random noises. We experimented with a rich assortment of augmentation methods, as shown in Table 3. Based on our observations, we decided on the following augmentation approaches: rotating and adding random noises. All images are rotated by an angle of 120°; we denote an image \(I\) as a 2D matrix with coordinates \((x, y)\) for pixel value \(v\)

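We denote a rotation matrix as \(R\), and thus, we have

\[\left[\begin{array}{c}{x}_{\mathrm{new}}\\ {y}_{\mathrm{new}}\end{array}\right]=R\left[\begin{array}{c}x\\ y\end{array}\right]\]

For any \(\theta\) degree rotation, we have

\[R=\left[\begin{array}{cc}\cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{array}\right]\]

and

\[{x}_{\mathrm{new}}=x\cos\theta -y\sin\theta ,\quad {y}_{\mathrm{new}}=x\sin\theta +y\cos\theta\]

for each new location \(({x}_{\mathrm{new}},{y}_{\mathrm{new}})\) having the same pixel intensity. The new image \({I}_{\mathrm{new}}\) is

\[{I}_{\mathrm{new}}\left({x}_{\mathrm{new}},{y}_{\mathrm{new}}\right)=I\left(x,y\right)\]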

The source images are matrices with three colour channels. For an RGB-encoded image \({I}_{rgb}\) with \(z=3\), the rotation matrix is applied to all three channels. All images are then supplemented with random noise consisting of arbitrary changes of brightness, contrast, and saturation, and erosion of ten image regions. The random noise is added in the following order: random brightness adjustment, random contrast adjustment, and random erosion filtering for the ten image regions. Given an image \(I(x,y,z)\) and each pixel \(v(x, y, z)\), with a brightness factor \({f}_{\mathrm{brightness}}({v}_{\mathrm{add}})\), where

the level of brightness adjustment is proportional to the pixel value, we have \(f\left({v}_{\mathrm{add}}\right)\in [0.9, 1.5]\). Thus

where \({f}_{\mathrm{contrast}}\) denotes the level of contrast of an image. In this article, we set \({f}_{\mathrm{contrast}}\in [0.9, 1.5]\) randomly.
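The rotation of pixel coordinates and the random brightness adjustment can be sketched together in plain Python. Several details are assumptions made for illustration: the rotation is taken as counter-clockwise about the origin, the brightness factor is drawn uniformly from \([0.9, 1.5]\) and multiplies each pixel value, and results are clamped to 255. The helper names `rotate_point` and `random_brightness` are hypothetical.

```python
import math
import random


def rotate_point(x, y, theta_deg):
    """Rotate (x, y) counter-clockwise about the origin by theta_deg degrees."""
    t = math.radians(theta_deg)
    return (x * math.cos(t) - y * math.sin(t),
            x * math.sin(t) + y * math.cos(t))


def random_brightness(image, rng, low=0.9, high=1.5):
    """Multiply every pixel by one factor drawn uniformly from [low, high].

    Returns the adjusted image and the factor that was drawn.
    """
    factor = rng.uniform(low, high)
    adjusted = [[min(255.0, factor * v) for v in row] for row in image]
    return adjusted, factor


# Three successive 120-degree rotations bring a point back to where it started.
px, py = 3.0, 4.0
for _ in range(3):
    px, py = rotate_point(px, py, 120)

rng = random.Random(0)  # seeded only to make the sketch repeatable
brighter, used_factor = random_brightness([[100, 200], [50, 250]], rng)
```

A full image rotation would apply `rotate_point` to every pixel location (usually about the image centre, with interpolation), which libraries such as Pillow or OpenCV handle internally.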

Randomly removing image regions [37] is an image augmentation that addresses generalisation issues. Assume an input image \(I\) with width \({w}_{I}\) and height \({h}_{I}\); we define two integers \({x}_{\mathrm{start}}\in [0, {w}_{I}]\) and \({y}_{\mathrm{start}}\in [0, {h}_{I}]\) as the starting point \(({x}_{\mathrm{start}},{y}_{\mathrm{start}})\). We define the width and height of a removal region by the proportion \({r}_{b}\). In this article, we set \({r}_{b}=0.15\). The two points, namely, the bottom left \(({x}_{\mathrm{start}}, {y}_{\mathrm{start}})\) and the top right \(({x}_{\mathrm{end}}, {y}_{\mathrm{end}})\) of the removed region, are defined as

The random selection process repeats ten times, and the results are shown in Table 3. In summary, the dataset contains six classes of fruits with various decay grades. Data augmentation is used extensively in this article. For each image, there are four variants: contrast-adjusted, sharpened, rotated, and with random noises added. There are two kinds of labels for fruit objects: the fruit freshness grade and the location of the fruit in a given image, stored in the VOC format [38].
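The random removal of ten regions can be sketched as below. This is an illustration under stated assumptions: the region spans \({r}_{b}\) times the image width and height from the starting point and is clipped at the image border, and removed pixels are set to 0. The article's own definition of the end point is not reproduced in this text, and the function name `erase_regions` is hypothetical.

```python
import random


def erase_regions(image, ratio=0.15, repeats=10, fill=0, rng=None):
    """Return a copy of `image` with `repeats` random rectangles set to `fill`."""
    rng = rng or random.Random()
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]              # leave the input untouched
    region_w, region_h = int(ratio * w), int(ratio * h)
    for _ in range(repeats):
        x_start = rng.randint(0, w)              # inclusive, as in [0, w_I]
        y_start = rng.randint(0, h)
        x_end = min(x_start + region_w, w)       # clip at the border (assumed)
        y_end = min(y_start + region_h, h)
        for y in range(y_start, y_end):
            for x in range(x_start, x_end):
                out[y][x] = fill
    return out


white = [[255] * 20 for _ in range(20)]
erased = erase_regions(white, rng=random.Random(1))
removed_pixels = sum(1 for row in erased for v in row if v == 0)
```

On a 20×20 image each region covers at most 3×3 pixels, so ten repetitions remove at most 90 pixels, fewer when regions overlap or touch the border.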

Methods

In this section, we consider a hierarchical deep learning model in which the neural network YOLO performs fruit detection and classification, and its results are fed into a regression CNN for fruit grading. For comparison with the deep learning method, we first examine a linear model based on the texture and colour of the images; this analysis explains why a deep learning approach is needed.

A Linear Proposal

Simple ambient noise refers to an image background with few distractions, usually of plain black or white colour. In such an environment, fruit localisation and freshness grading become easy, as simple pixel-based manipulation can render satisfactory results. The primary advantage of this approach is fast computation for fruit grading.

In this project, we propose a simple solution to locate a fruit in a digital image and automatically grade its freshness based on the texture and appearance of the fruit itself. Since most fruits are visually distinct from a background of plain or pure colour, a simple threshold can be applied to segment a fruit object from an image. Image regions within the thresholds are selected, while the others are masked. The contour of the selected image regions is then traced to determine the bounding boxes for object detection.
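The thresholding and bounding-box steps described above can be illustrated with a small Python sketch. The image is assumed to be a nested list of `(r, g, b)` tuples; the threshold values around crimson and the function names `threshold_mask` and `bounding_box` are hypothetical, and the bounding box is taken directly from the extent of the mask rather than from a traced contour.

```python
def threshold_mask(image, low, high):
    """Mark a pixel with 1 when every channel lies within [low, high]."""
    return [
        [1 if all(lo <= c <= hi for c, lo, hi in zip(pixel, low, high)) else 0
         for pixel in row]
        for row in image
    ]


def bounding_box(mask):
    """Return (x_min, y_min, x_max, y_max) of the selected pixels, or None."""
    coords = [(x, y) for y, row in enumerate(mask) for x, m in enumerate(row) if m]
    if not coords:
        return None
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return (min(xs), min(ys), max(xs), max(ys))


WHITE, CRIMSON = (255, 255, 255), (220, 20, 60)
# A 4x4 white image with a 2x2 crimson patch in the middle.
picture = [[WHITE] * 4 for _ in range(4)]
for yy in (1, 2):
    for xx in (1, 2):
        picture[yy][xx] = CRIMSON

# Hypothetical per-channel thresholds around crimson.
mask = threshold_mask(picture, low=(200, 0, 40), high=(240, 40, 80))
box = bounding_box(mask)
```

On a plain background this is enough to localise the fruit; the same thresholds would also select any background object of similar colour, which is one reason the approach struggles outside controlled scenes.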

Denote an image \(I\) comprising pixels \({v}_{x, y, z}\), where \(x\in [1, \mathrm{width}]\), \(y\in [1, \mathrm{height}]\), and \(z\) indexes the (r, g, b) colour channel; for example, an RGB image has \(z\in [1, 3]\), with 256 intensity levels per channel. We have a binary mask

where the \(\mathrm{threshold}\) is the pixel intensity of a fruit image. For apples, the most frequently observed colours are beige and crimson, with RGB values \((166, 123, 91)\) and \(\left(220, 20, 60\right)\), respectively. Thus, the colour thresholds are defined as

For freshness grading, we treat the brightness and the pixel intensity within a bounding box as the two conditions. We believe that, in general, the number of brown/dark spots grows as a fruit rots. This change in appearance results in an increase of pixel intensity and a decrease of brightness. An entropy for a given image \(I\) with histograms \({h}_{i}\) is

For a given image \(I\) with a pixel \({p}_{i}\), where \(i=0, 1, \dots , n\), \(n\) represents the number of pixels that comprise the image, and we have

The freshness is calculated using Eq. (21)

where \({k}_{e}\) and \({k}_{b}\) are weight adjustment parameters, and \(b\) is the bias. These parameters are determined via linear regression, assuming a regression output \({y}_{i}\) and a data sample \({x}_{i}\), where \({x}_{i}\) consists of \(n\) dimensions, and thus

The loss function for linear regression is

We minimise the loss

Therefore, we have \(\vec{\hat{\beta }} = \left\{ {b, - k_{e} ,k_{b} } \right\}\). We selected a few fruit images with various decay levels and calculated the entropy and the brightness of the detected bounding box, from which \({k}_{e}\) and \({k}_{b}\) are determined. Observing the entropy and brightness, we adjusted \({k}_{e}\) and \({k}_{b}\) so that the weighted sum of the entropy and brightness is close to the corresponding freshness level.
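The linear proposal can be sketched end to end in plain Python: compute the entropy and mean brightness of the pixels inside a bounding box, then fit a bias and two weights by least squares. The sketch makes several assumptions: the entropy is the Shannon entropy of the normalised intensity histogram in bits, the model is written as freshness = \(b + w_{e}\cdot\) entropy \(+ w_{b}\cdot\) brightness (so a negative entropy weight corresponds to \(-{k}_{e}\)), and the normal equations are solved with a small Gauss-Jordan routine. All function names and the sample values are hypothetical.

```python
import math


def entropy(pixels, levels=256):
    """Shannon entropy (bits) of the normalised intensity histogram."""
    counts = [0] * levels
    for p in pixels:
        counts[p] += 1
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts if c)


def brightness(pixels):
    """Mean pixel intensity."""
    return sum(pixels) / len(pixels)


def solve(a, b):
    """Gauss-Jordan elimination with partial pivoting for a small system."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_freshness(samples):
    """Least-squares fit of grade = b + w_e * entropy + w_b * brightness.

    `samples` is a list of (entropy, brightness, grade) triples.
    """
    rows = [(1.0, e, br) for e, br, _ in samples]
    grades = [g for _, _, g in samples]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    aty = [sum(r[i] * g for r, g in zip(rows, grades)) for i in range(3)]
    return tuple(solve(ata, aty))


# Synthetic samples generated from grade = 9 - 2 * entropy + 0.01 * brightness.
synthetic = [(0.0, 100.0, 10.0), (1.0, 100.0, 8.0), (2.0, 150.0, 6.5),
             (3.0, 50.0, 3.5), (1.5, 200.0, 8.0)]
bias, w_entropy, w_brightness = fit_freshness(synthetic)
```

Because the synthetic grades are exactly linear in the two features, the fit recovers a bias near 9, an entropy weight near −2, and a brightness weight near 0.01; real fruit images would not be expected to fit this cleanly.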

A Hierarchical Deep Learning Model

In this section, we propose a hierarchical deep learning model in which a first network detects and classifies the fruit, and its results are fed into a second one (a regression CNN) for freshness grading. YOLO + Regression-CNN is such a hierarchical neural network: the bounding boxes predicted by YOLO are fed to the regression CNN for freshness grading. A regression CNN is trained for each class of fruits; since we work with six classes of fruits, six regression CNNs are trained. YOLO is used to classify the object/fruit and to estimate the bounding box, which locates the visual object in an image. The regression CNN corresponding to the predicted class is then applied for freshness grading. The framework is illustrated in Fig. 1, the regression model is shown in Fig. 2, and the pipeline of this model is shown in Fig. 3.

The source images are fed into YOLO for object recognition, where the central point, width, and height of the bounding box are determined. Given the YOLO prediction, the model selects the regression neural network that matches the predicted class of the detected fruit. The detected object is cropped out of its background and used as the input image to the regression CNN.
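Cropping a detection given its central point, width, and height can be sketched as follows. The sketch assumes pixel coordinates with the origin at the top-left corner, an image stored as nested lists, and a box that is clipped to the image border; the function name `crop_box` is hypothetical.

```python
def crop_box(image, cx, cy, w, h):
    """Cut out the box centred at (cx, cy) with width w and height h.

    Coordinates are in pixels; the box is clipped to the image border.
    """
    height, width = len(image), len(image[0])
    x0 = max(0, int(round(cx - w / 2)))
    y0 = max(0, int(round(cy - h / 2)))
    x1 = min(width, int(round(cx + w / 2)))
    y1 = min(height, int(round(cy + h / 2)))
    return [row[x0:x1] for row in image[y0:y1]]


# A 6x6 test image whose pixel value encodes its position as 10 * y + x.
grid = [[10 * y + x for x in range(6)] for y in range(6)]
centre_patch = crop_box(grid, cx=3, cy=3, w=2, h=2)
corner_patch = crop_box(grid, cx=0, cy=0, w=4, h=4)  # partly outside the image
```

The clipping matters in practice because a predicted box near the image edge can extend past it.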

The YOLO model in this article has the same structure as YOLOv3 [39]. We thus define a set of input data \(D\), in which we have

where \(n\) is the total number of input images, and \({I}_{i}\) is the \(i\)th image, \(i=1, 2, 3, \dots ,n\). Our input images are encoded using RGB channels; thus, each image \({I}_{i}\) is three-dimensional, and all images have the same size. The image \({I}_{i}\) is defined as a 2D matrix

To prevent overfitting, additional random flips are applied. After YOLOv3 takes the source data and starts the computation [39], at the time \(t\) we have a prediction \(\widehat{Y}=\{\widehat{{y}_{1}},\widehat{{y}_{2}}, \dots , \widehat{{y}_{n}}\}\)

For the YOLO model \({\xi }_{YOLO}\) we have a bounding box, the associated object class, and the prediction

According to the predicted class \(\widehat{{c}_{i}}\), the anchored box is denoted as (\(\widehat{{x}_{i}},\widehat{{y}_{i}},\widehat{{w}_{i}},\widehat{{h}_{i}}\)), the source image \({I}_{i}\) is cropped. The new image is

where \(\widehat{{x}_{i}}\) and \(\widehat{{y}_{i}}\) are the central point of the predicted bounding box, the \(\mathrm{crop}({I}_{i},\widehat{{x}_{i}},\widehat{{y}_{i}},\widehat{{w}_{i}},\widehat{{h}_{i}})\) for the \(i\)th image \({I}_{i}\) is expressed as

The cropped image \(\mathrm{new}{I}_{i}\) is fed into a regression neural network. We thus define the regression neural network \({\xi }_{rege}\left(t\right)\) at the training epoch \(t\); the cropped image dataset is \(\mathrm{new}D=\{\mathrm{new}{I}_{1}, \mathrm{new}{I}_{2},\dots ,\mathrm{new}{I}_{m}\}\)

where \(\widehat{R}\) is the result of fruit freshness regression, and we have

Hence, the hierarchical model is expressed as

For each prediction \(\widehat{{y}_{i}}\), we have

In this article, we experimented with four base networks, i.e., AlexNet, VGG, ResNet, and GoogLeNet, for regression on the six classes of fruits. Each class of fruits likely has unique features, distinct from the others, that should be processed by its own regression convolutional neural network.
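The hierarchical flow, in which a detector proposes a class and a box and a class-specific regressor grades the cropped patch, can be sketched with plain Python callables. Everything here is a stand-in: `fake_detector` and `mean_regressor` are hypothetical stubs in place of YOLO and the trained regression CNNs, and clamping the grade to the 0 to 10 scale is an assumption.

```python
def crop_patch(image, cx, cy, w, h):
    """Cut out the box centred at (cx, cy), clipped to the image border."""
    height, width = len(image), len(image[0])
    x0, y0 = max(0, int(round(cx - w / 2))), max(0, int(round(cy - h / 2)))
    x1, y1 = min(width, int(round(cx + w / 2))), min(height, int(round(cy + h / 2)))
    return [row[x0:x1] for row in image[y0:y1]]


def grade_fruits(image, detector, regressors):
    """Run the detector, then route each crop to the regressor of its class.

    `detector(image)` yields (label, cx, cy, w, h) tuples and `regressors`
    maps a class label to a callable returning a freshness grade.
    """
    results = []
    for label, cx, cy, w, h in detector(image):
        patch = crop_patch(image, cx, cy, w, h)
        grade = regressors[label](patch)
        results.append((label, min(10.0, max(0.0, grade))))  # keep within 0..10
    return results


def fake_detector(image):
    # Stub standing in for YOLO: one banana in the top-left 2x2 block.
    return [("banana", 1, 1, 2, 2)]


def mean_regressor(patch):
    # Stub standing in for a regression CNN: brighter patch, higher grade.
    pixels = [v for row in patch for v in row]
    return sum(pixels) / len(pixels) / 25.5


scene = [[255, 255, 0], [255, 255, 0], [0, 0, 0]]
grades = grade_fruits(scene, fake_detector, {"banana": mean_regressor})
```

Keeping one regressor per class in a dictionary mirrors the design in which six separate regression CNNs are trained, one for each class of fruits.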

For the base networks AlexNet, VGG, ResNet, and GoogLeNet, we modified the number of neurons in the fully connected layers to fit our fruit freshness regression, and we designed four additional layers for the fully connected part of the network.

Experimental Results

In this article, our model is constructed hierarchically, consisting of a classification and localisation model (YOLO) and a set of regression CNNs, one for each fruit type. Besides, we make a comparison with the linear model.

The Proposal

We calculate the average brightness and entropy for a video frame. For images with complicated background noise, the fruit location is very hard to find, and the brightness/entropy approach does not converge as expected. The defined freshness function is shown in Eq. (35)

where the freshness does not have a linear relationship with the entropy and brightness of the image. The configurations of the linear regressor are:

- \({k}_{1}\): −2.7701

- \({k}_{2}\): 0.00367

- \(b\): 9.0004

However, this approach is sensitive to background noise: even a minor change of background might result in significant errors. During this experiment, we set up different physical backgrounds while acquiring images of the fruits, varying the lighting conditions and placing adjacent foreign objects.

For fresh fruits such as apples and bananas, we do observe correlations between entropy/brightness levels and decay stages if the background is kept static. However, for other fruits such as Kiwi fruits and oranges, this assumption hardly holds.

This preliminary approach through entropy/brightness computations reveals the complexity of fruit freshness grading. Fruits have their own processes of decaying; for each decay characteristic, there is no apparent relationship between static visual features (i.e., a set of defined rules of pixel statistics) and freshness levels. Based on these findings, we decided to treat each class of fruit individually rather than with a single comprehensive approach.

YOLO + GoogLeNet

With GoogLeNet, the regression accuracy of freshness grading varies across the classes of fruits. Banana is the most accurately predicted class of fruits, while Kiwi fruits are the most difficult. Apple freshness grading appears the most unstable in the validation set; for example, apples appeared with fungus and brown/dark spots, whereas other fruits, e.g., dragon fruits, are usually covered by yellowish dark spots (Table 4).

YOLO + AlexNet

AlexNet behaves similarly to the other base networks in terms of the classes of fruits for which the regression tends to deviate from the ground truth. Apples, Kiwi fruits, and pears are the three most challenging classes to regress, while bananas are the most accurate. Fruits with relatively large errors tend to be less stable in standard deviation during regression. This is evident in both the training and validation sets of all classes of fruits. The average MSE for the six classes of fruits is \(3.500\) for the training dataset and \(4.099\) for the validation dataset. In terms of regression stability, the standard deviation is \(1.480\) for the training set and \(1.248\) for the validation set (Table 5).

YOLO + ResNet

ResNet-152 is the top network in the ResNet family as well as the deepest one. Again, ResNet fails to deliver reliable results for three particular classes of fruits: apples, Kiwi fruits, and pears. The regression error is driven largely by the Kiwi fruit dataset, on both the training and validation sets. For pears, there is a possibility of overfitting, as the validation set shows \(6.057\) while the training set reports \(3.984\). Banana freshness grading is the most accurate. In terms of regression stability, pears are the least stable, while oranges are generally the most stable, judged on both the training and validation sets. On average, the MSE values of the training and validation sets for ResNet-152 are \(3.582\) and \(4.058\), respectively. For stability measurement, the standard deviation is \(1.329\) for the training set and \(1.842\) for the validation set (Table 6).

YOLO + VGG

We tested the VGG-11 model. Again, bananas are graded most accurately in the freshness regression, while apples, Kiwi fruits, and pears are the most difficult. However, VGG-11 tends to suffer less from overfitting, as the gaps between the training and validation results are smaller than those observed with the other base networks. VGG-11 displays high stability in regression: for apples, Kiwi fruits, and pears, both the training and validation sets show robust regression output (as indicated by the standard deviation). The average training and validation MSE values are close to those of the other three base networks, \(3.665\) and \(3.934\), respectively. The standard deviations are \(1.361\) and \(1.266\), respectively (Table 7).

Comparisons

The four deep learning models have similar performance. On the training set, AlexNet shows the lowest MSE, while VGG achieves the lowest error on the validation set. Table 8 summarises the regression performance of the proposed models, measured in MSE.
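For reference, the two kinds of figures reported above can be computed as in the following sketch. The MSE is the mean of the squared differences between predicted and labelled grades. How the standard deviation was computed is not stated in this text, so the sketch assumes it is the population standard deviation of the signed errors; the function names and the sample grades are hypothetical.

```python
from statistics import pstdev


def mean_squared_error(predicted, labelled):
    """Average of squared differences between predictions and labels."""
    return sum((p - t) ** 2 for p, t in zip(predicted, labelled)) / len(labelled)


def error_std(predicted, labelled):
    """Population standard deviation of the signed errors (an assumption)."""
    return pstdev([p - t for p, t in zip(predicted, labelled)])


predicted_grades = [8.0, 6.0]
labelled_grades = [10.0, 5.0]
mse_value = mean_squared_error(predicted_grades, labelled_grades)  # (4 + 1) / 2
std_value = error_std(predicted_grades, labelled_grades)
```

On a 0 to 10 grading scale, an MSE of about 4 corresponds to a typical error of roughly two grade points.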

Conclusion

In this paper, we first constructed a linear regression model to detect fruits and measure their freshness by judging the darkness of the fruit skin and the variations of colour transitions. Accordingly, we affirm that fruit spoilage occurs with biochemical reactions that result in visual fading. We then propounded a deep learning solution.

Deep learning has been used for fruit freshness grading across multiple classes of fruits (i.e., apple, banana, dragon fruit, Kiwi fruit, orange, and pear). We have developed a hierarchical approach in which fruits are detected and classified with real-time object detection, the regions of interest are cropped from the source images and fed into CNN models for regression, and the freshness level is finally graded. We independently trained the convolutional neural network for four renowned models, i.e., GoogLeNet, ResNet, AlexNet, and VGG-11. Our experimental results show an excellent performance of deep learning algorithms in resolving this problem [9, 40, 41].

References

Akinmusire O. Fungal species associated with the spoilage of some edible fruits in Maiduguri Northern Eastern Nigeria. Adv Environ Biol. 2011;5:157–62.

Rawat S. Food spoilage: microorganisms and their prevention. Asian J Plant Sci Res. 2015;5(4):47–56.

Tournas VH, Katsoudas E. Mould and yeast flora in fresh berries, grapes and citrus fruits. Int J Food Microbiol. 2005;105(1):11–7.

Sindhi K, Pandya J, Vegad S. Quality evaluation of apple fruit: a survey. Int J Comput Appl. 2016;975:8887.

Shukla AK. Electron spin resonance in food science. Cambridge: Academic Press; 2016.

Bhargava A, Bansal A. Fruits and vegetables quality evaluation using computer vision: a review. J King Saud Univ-Comput Inf Sci. 2018. https://doi.org/10.1016/j.jksuci.2018.06.002.

Pandey R, Naik S, Marfatia R. Image processing and machine learning for automated fruit grading system: a technical review. Int J Comput Appl. 2013;81(16):29–39.

Cunha JB (2003) Application of image processing techniques in the characterisation of plant leafs. In: IEEE International Symposium on Industrial Electronics, pp 612–616

Fu Y. Fruit freshness grading using deep learning (Master’s Thesis). New Zealand: Auckland University of Technology; 2020.

Koumpouros Y, Mahaman BD, Maliappis M, Passam HC, Sideridis AB, Zorkadis V. Image processing for distance diagnosis in pest management. Comput Electron Agric. 2004;44(2):121–31.

Brosnan T, Sun DW. Inspection and grading of agricultural and food products by computer vision systems—a review. Comput Electron Agric. 2002;36(2–3):193–213.

Tewari VK, Arudra AK, Kumar SP, Pandey V, Chandel NS. Estimation of plant nitrogen content using digital image processing. Agric Eng Int CIGR J. 2013;15(2):78–86.

Ozyildiz E, Krahnstöver N, Sharma R. Adaptive texture and color segmentation for tracking moving objects. Pattern Recogn. 2002;35(10):2013–29.

Tripathi MK, Maktedar DD. A role of computer vision in fruits and vegetables among various horticulture products of agriculture fields: a survey. Inf Process Agric. 2019;7:183.

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556

Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Adv Neural Inf Process Syst. 2012;25:1097–105.

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: IEEE Conference on Computer Vision and Pattern Recognition, pp 770–778.

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Rabinovich A (2015) Going deeper with convolutions. In: IEEE Conference on Computer Vision and Pattern Recognition, pp 1–9.

Moallem P, Serajoddin A, Pourghassem H. Computer vision-based apple grading for golden delicious apples based on surface features. Inf Process Agric. 2017;4(1):33–40.

Arakeri MP. Computer vision based fruit grading system for quality evaluation of tomato in agriculture industry. Procedia Comput Sci. 2016;79:426–33.

Zawbaa HM, Abbass M, Hazman M, Hassenian AE (2014) Automatic fruit image recognition system based on shape and colour features. In: International Conference on Advanced Machine Learning Technologies and Applications, pp 278–290.

Iqbal Z, Khan MA, Sharif M, Shah JH, Rehman MH, Javed K. An automated detection and classification of citrus plant diseases using image processing techniques: a review. Comput Electron Agric. 2018;153:12–32.

Bresilla K, Perulli GD, Boini A, Morandi B, Grappadelli LC, Manfrini L. Single-shot convolution neural networks for real-time fruit detection within the tree. Front Plant Sci. 2019. https://doi.org/10.3389/fpls.2019.00611.

Redmon J, Divvala S, Girshick R, Farhadi A (2016) You only look once: unified, real-time object detection. In: IEEE Conference on Computer Vision and Pattern Recognition, pp 779–788.

Zeng G (2017) Fruit and vegetables classification system using image saliency and convolutional neural network. In: IEEE Information Technology and Mechatronics Engineering Conference (ITOEC), pp 613–617.

Mureşan H, Oltean M. Fruit recognition from images using deep learning. Acta Univ Sapientiae Inf. 2018;10(1):26–42.

Nashat AA, Hassan NH. Automatic segmentation and classification of olive fruits batches based on discrete wavelet transform and visual perceptual texture features. Int J Wavelets Multiresolut Inf Process. 2018;16(01):1850003.

Al-Sarayreha M, Reis M, Yan W, Klette R. Potential of deep learning and snapshot hyperspectral imaging for classification of species in meat. Food Science. 2020. https://doi.org/10.1016/j.foodcont.2020.107332.

Gómez-Sanchis J, Gómez-Chova L, Aleixos N, Camps-Valls G, Montesinos-Herrero C, Moltó E, Blasco J. Hyperspectral system for early detection of rottenness caused by Penicillium digitatum in Mandarins. J Food Eng. 2008;89(1):80–6.

Knee M. Anthocyanin, carotenoid, and chlorophyll changes in the peel of Cox’s Orange Pippin apples during ripening on and off the tree. J Exp Bot. 1972;23:184–96.

Davey MW, Stals E, Ngoh-Newilah G, Tomekpe K, Lusty C, Markham R, Keulemans J. Sampling strategies and variability in fruit pulp micronutrient contents of west and central African bananas and plantains (Musa species). J Agric Food Chem. 2007;55(7):2633–44.

Bampidis VA, Robinson PH. Citrus by-products as ruminant feeds: a review. Anim Feed Sci Technol. 2006;128(3–4):175–217.

Zheng Y, Yu C, Cheng YS, Zhang R, Jenkins B, VanderGheynst JS. Effects of ensilage on storage and enzymatic degradability of sugar beet pulp. Biores Technol. 2011;102(2):1489–95.

Herbach KM, Stintzing FC, Carle R. Betalain stability and degradation—structural and chromatic aspects. J Food Sci. 2006;71(4):R41–50.

Schwartz SJ, Von Elbe JH. Kinetics of chlorophyll degradation to pyropheophytin in vegetables. J Food Sci. 1983;48(4):1303–6.

Haeberli P, Voorhies D. Image processing by linear interpolation and extrapolation. IRIS Univ Mag. 1994;28:8–9.

Zhong Z, Zheng L, Kang G, Li S, Yang Y (2017) Random erasing data augmentation. arXiv:1708.04896

Everingham M, Van Gool L, Williams CK, Winn J, Zisserman A. The pascal visual object classes (VOC) challenge. Int J Comput Vision. 2010;88(2):303–38.

Redmon J, Farhadi A (2018) YOLOv3: an incremental improvement. arXiv:1804.02767.

Karakaya D, Ulucan O, Turkan M (2019) A comparative analysis on fruit freshness classification. In: Innovations in Intelligent Systems and Applications Conference

Yan W. Computational methods for deep learning: theoretic, practice and applications. Berlin: Springer; 2021.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Additional information

This article is part of the topical collection “From Geometry to Vision: The Methods for Solving Visual Problems” guest edited by Wei Qi Yan, Harvey Ho, Minh Nguyen and Zhixun Su.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Fu, Y., Nguyen, M. & Yan, W.Q. Grading Methods for Fruit Freshness Based on Deep Learning. SN COMPUT. SCI. 3, 264 (2022). https://doi.org/10.1007/s42979-022-01152-7
