Abstract

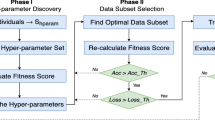

Hyperparameter optimization is one of the most difficult problems in developing deep learning algorithms. In this paper, a genetic algorithm is applied to this problem, with a fitness evaluation that considers both the accuracy and the verification time. The algorithm was evaluated using a simple model that has a single convolution layer and a single fully connected layer. A model with three layers was used. The MNIST dataset and a motor fault diagnosis dataset were used for training. The results show that the method is useful for reducing the training time.
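The idea of a fitness evaluation that weighs accuracy against verification time can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration, not the paper's method: the search space (`num_filters`, `kernel_size`, `hidden_units`), the linear fitness `accuracy - time_weight * verify_time`, the elitist selection scheme, and the `toy_evaluate` stand-in that replaces actual CNN training and timing.

```python
import random
from typing import Callable, Dict, Tuple

# Hypothetical search space; the abstract does not list the tuned hyperparameters.
SEARCH_SPACE = {
    "num_filters": [8, 16, 32, 64],
    "kernel_size": [3, 5, 7],
    "hidden_units": [32, 64, 128, 256],
}

Genome = Dict[str, int]
Evaluator = Callable[[Genome], Tuple[float, float]]  # returns (accuracy, verify_time)


def fitness(accuracy: float, verify_time: float, time_weight: float = 0.1) -> float:
    """Assumed scalarization: reward accuracy, penalize verification time."""
    return accuracy - time_weight * verify_time


def random_genome(rng: random.Random) -> Genome:
    return {name: rng.choice(values) for name, values in SEARCH_SPACE.items()}


def crossover(a: Genome, b: Genome, rng: random.Random) -> Genome:
    # Uniform crossover: each gene is copied from one of the two parents.
    return {name: rng.choice((a[name], b[name])) for name in SEARCH_SPACE}


def mutate(genome: Genome, rng: random.Random, rate: float = 0.2) -> Genome:
    # With probability `rate`, resample a gene from its allowed values.
    return {
        name: rng.choice(SEARCH_SPACE[name]) if rng.random() < rate else value
        for name, value in genome.items()
    }


def run_ga(evaluate: Evaluator, generations: int = 10,
           pop_size: int = 8, seed: int = 0) -> Genome:
    rng = random.Random(seed)
    population = [random_genome(rng) for _ in range(pop_size)]

    def score(genome: Genome) -> float:
        accuracy, verify_time = evaluate(genome)
        return fitness(accuracy, verify_time)

    for _ in range(generations):
        # Keep the fitter half unchanged (elitism) and refill with offspring.
        parents = sorted(population, key=score, reverse=True)[: pop_size // 2]
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(pop_size - len(parents))
        ]
        population = parents + children
    return max(population, key=score)


def toy_evaluate(genome: Genome) -> Tuple[float, float]:
    """Stand-in for training and timing a CNN: larger models score higher
    accuracy with diminishing returns, and take longer to verify."""
    size = genome["num_filters"] * genome["kernel_size"] ** 2 + genome["hidden_units"]
    accuracy = 1.0 - 1.0 / (1.0 + size / 100.0)
    verify_time = size / 1000.0
    return accuracy, verify_time


best = run_ga(toy_evaluate)
print(best)
```

In practice `toy_evaluate` would be replaced by a function that trains the model with the given hyperparameters and measures its accuracy and verification time; the value of `time_weight` controls how strongly slow configurations are penalized.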

Acknowledgements

This research was supported by the Korea Electric Power Corporation (Grant number: R18XA06-23).

About this article

Cite this article

Han, JH., Choi, DJ., Park, SU. et al. Hyperparameter Optimization Using a Genetic Algorithm Considering Verification Time in a Convolutional Neural Network. J. Electr. Eng. Technol. 15, 721–726 (2020). https://doi.org/10.1007/s42835-020-00343-7

DOI: https://doi.org/10.1007/s42835-020-00343-7