Abstract

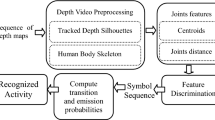

Assessing human behavior during daily routine activities in indoor environments plays a significant role in healthcare services and smart homes for elderly and disabled people. In the proposed system, depth images are first captured with a depth camera, and human silhouettes are segmented using color and intensity variation. Spatiotemporal features are then extracted from the human body color joints and the depth silhouettes. Joint displacement and specific-motion features are obtained from the body color joints, while side-frame differentiation features are computed from the depth data to improve classification performance. Finally, a recognizer engine is used to recognize the different activities. Unlike conventional approaches that were evaluated on a single dataset, our experimental results show state-of-the-art accuracies of 88.9% and 66.70% on two challenging depth datasets. The proposed system should be serviceable, with major contributions to consumer applications such as smart homes, video surveillance, and health monitoring systems.
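The abstract does not define the features precisely, so the following is only a minimal sketch of how joint displacement and a frame-difference cue could be combined into one feature vector. Everything in it is an assumption made for illustration: the function names, the reading of "side-frame differentiation" as simple differencing of consecutive depth frames, the threshold, and the motion-ratio summary are hypothetical and are not taken from the paper.

```python
from math import dist


def joint_displacements(joints_prev, joints_curr):
    """Euclidean displacement of each joint between two consecutive frames.

    Each joint is an (x, y, z) tuple; both lists use the same joint order.
    """
    return [dist(p, c) for p, c in zip(joints_prev, joints_curr)]


def frame_difference(depth_prev, depth_curr, threshold=0):
    """Binary map of pixels whose depth changed by more than `threshold`.

    Assumption: plain consecutive-frame differencing stands in for the
    paper's side-frame differentiation, which the abstract does not define.
    """
    return [
        [1 if abs(c - p) > threshold else 0 for p, c in zip(row_p, row_c)]
        for row_p, row_c in zip(depth_prev, depth_curr)
    ]


def feature_vector(joints_prev, joints_curr, depth_prev, depth_curr):
    """Concatenate per-joint displacements with the fraction of moving pixels."""
    displacements = joint_displacements(joints_prev, joints_curr)
    diff = frame_difference(depth_prev, depth_curr)
    moving = sum(sum(row) for row in diff)
    total = len(diff) * len(diff[0])
    return displacements + [moving / total]
```

A vector like this, computed per frame pair, could then be passed to whatever classifier plays the role of the recognizer engine; the abstract does not specify which one is used.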

Acknowledgements

Project FONDECYT Iniciacion (Grant Number 11160517).

About this article

Cite this article

Jalal, A., Kamal, S. & Azurdia-Meza, C.A. Depth Maps-Based Human Segmentation and Action Recognition Using Full-Body Plus Body Color Cues Via Recognizer Engine. J. Electr. Eng. Technol. 14, 455–461 (2019). https://doi.org/10.1007/s42835-018-00012-w

DOI: https://doi.org/10.1007/s42835-018-00012-w