Abstract

An active area of investigation in the search for quantum advantage is quantum machine learning. Quantum machine learning, and parameterized quantum circuits in a hybrid quantum-classical setup in particular, could bring advancements in accuracy by utilizing the high dimensionality of the Hilbert space as feature space. But is the ability of a quantum circuit to uniformly address the Hilbert space a good indicator of classification accuracy? In our work, we use methods and quantifications from prior art to perform a numerical study that evaluates the level of correlation. We find a moderate to strong correlation between the ability of the circuit to uniformly address the Hilbert space and the achieved classification accuracy for circuits that consist of a single embedding layer followed by 1 or 2 circuit templates. This is based on our study encompassing 19 circuits in both 1- and 2-layer configurations, evaluated on 9 datasets of increasing difficulty. We also evaluate the correlation between entangling capability and classification accuracy in a similar setup, and find a weak correlation. Future work will focus on evaluating whether this holds for different circuit designs.

1 Introduction

Quantum computing has seen a steady growth in interest ever since the quantum supremacy experiment (Arute et al. 2019). The search for quantum advantage, i.e., quantum supremacy for practical applications, is an active area of research (Bravyi et al. 2020). One potential application domain is machine learning (Riste et al. 2017), where quantum computing could bring speedups and improvements in accuracy. One line of reasoning for expecting an improvement in accuracy is as follows. A classical neural network takes the input data and maps it into a higher dimensional feature space. Then, using a combination of learnable linear transformations and static non-linear transformations, it maps the data between various higher dimensional feature spaces. This mapping is repeated until the data points are positioned in such a way that a hyperplane can separate data that belong to different output classes. Given that qubits, the smallest units of information in a quantum computer, can span a larger space due to their quantum mechanical properties, one would expect that, with the same resources, data could be mapped between higher dimensional and larger feature spaces. This would allow a more accurate separation of the data. Or, as a trade-off, one would require fewer resources to address the same space while maintaining the same level of accuracy.

Recently, a framework suited to the shallow-depth circuits of noisy intermediate-scale quantum (NISQ) systems (Preskill 2018) has been developed: the hybrid quantum-classical framework. In this framework, the quantum machine uses parameterized quantum circuits (PQCs) to make predictions and approximations, while the classical machine updates the parameters of the circuit (McClean et al. 2016). Example algorithms are the Variational Quantum Eigensolver (Peruzzo et al. 2014) and the Quantum Approximate Optimization Algorithm (Farhi et al. 2014). Variational Quantum Circuits can also be used for machine learning problems, and bear resemblance to the structure of classical neural networks (Schuld et al. 2018).

Just as in classical neural networks, many circuit architectures exist. An active area of research focuses on determining the power and capabilities of these circuits (Coyle et al. 2020; Sim et al. 2019; Schuld et al. 2020). Following the previous line of reasoning of mapping data into larger feature spaces, one way of quantifying the power of a PQC is by its ability to uniformly reach the full Hilbert space (Sim et al. 2019). Our work investigates these descriptors by performing a numerical analysis of the correlation between them and classification accuracy, in order to guide the choice and design of PQCs and to provide insight into potential limitations.

The remainder of this paper is structured as follows. In Section 2, we will describe our approach. Section 3 will outline our experimental setup and design choices. Our results are presented in Section 4 and discussed in Section 5. We will end this paper with the conclusion in Section 6. Raw experimental data can be found in the Appendices.

2 Approach

We will use descriptors from prior art that quantify the ability of a PQC to explore the Hilbert space. We will perform numerical simulations on various custom datasets of increasing difficulty to quantify the classification performance of these circuits. Each dataset will be split into training, validation, and test sets. The training set will be used for training the PQC and the validation set for evaluating our hyperparameter search. The final evaluation of our model with the best hyperparameters is performed on the test set, which yields the data points that we use in our search for correlation. We will repeat the experiments on the validation set to make sure the outcome is consistent. Finally, we will perform a statistical analysis to evaluate a potential relation between the descriptors and the classification accuracy of the various circuits.

3 Evaluation

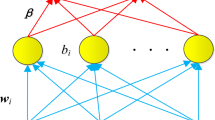

Variational Quantum Circuits applied to machine learning problems bear a resemblance to classical neural networks (NN), which is why they are also sometimes referred to as quantum neural networks (QNNs) (Farhi and Neven 2018). They can be used for classification and regression tasks in a supervised learning approach, or as generative models in an unsupervised setup. We will solely focus on supervised prediction. Both NNs and QNNs embed the data into a higher dimensional representation using an input layer. The input layer of a QNN is called an embedding circuit. The hidden layers of a QNN are referred to as circuit templates, which together constitute a PQC. The variables in these layers, called weights in NNs and parameters or variational angles in QNNs, are initialized by sampling from a probability distribution before training starts. The most notable difference between a NN and a QNN is in the way the output is handled. A NN uses an output layer to directly generate a distribution over the possible output classes using only a single run. A QNN needs to be executed several times, referred to as shots, before a histogram over the output states can be generated, and this histogram still needs to be mapped to the output classes. The predicted output class is compared to the true output class, denoted \(y_{\text{pred}}\) and \(y_{\text{true}}\), respectively, and the error is quantified by the loss function. This quantification of the error guides the optimizer, which iteratively adjusts the parameters until the loss converges. The classification accuracy (Acc), or simply accuracy, is the number of correctly classified samples divided by the total number of samples. In the remainder of this section, we will first discuss the descriptors of PQCs that we will investigate, before going into more depth on the experimental setup that we use to investigate the correlation between the descriptors and the classification accuracy.

3.1 Descriptors of PQCs

Describing the performance of a PQC by the ability of the circuit to uniformly address the Hilbert space has been suggested by Sim et al. (2019). In their theoretical approach, Sim et al. (2019) propose to quantify this by comparing the true distribution of fidelities corresponding to the PQC to the distribution of fidelities from the ensemble of Haar random states. In practice, they approximate the distribution of fidelities of the PQC, where the fidelity is the state overlap \(F = |\langle \psi_{\theta} | \psi_{\phi} \rangle|^2\). They do this by repeatedly sampling two sets of variational angles, simulating their corresponding states, and taking the fidelity of the two resulting states to build up a sample distribution \(\hat {P}_{PQC}(F; {\varTheta })\). The fidelity distribution of the ensemble of Haar random states can be calculated analytically: \(P_{\text{Haar}}(F) = (N - 1)(1 - F)^{N - 2}\), where N is the dimension of the Hilbert space (Życzkowski and Sommers 2005). The measure of expressibility (Expr) is then calculated by taking the Kullback–Leibler divergence (KL divergence) between the estimated fidelity distribution and that of the Haar distributed ensemble:

\[ \text{Expr} = D_{KL}\left( \hat{P}_{PQC}(F; \varTheta) \,\|\, P_{\text{Haar}}(F) \right) \]

A smaller value for the KL divergence indicates a better ability to explore the Hilbert space, and this measure of expressibility is usually inspected on a logarithmic scale. This is where we deviate from the original definition: we build both characteristics into the measure itself. In our work, we evaluate the negative logarithm of the KL divergence between the estimated fidelity distribution of the PQC and that of the ensemble of Haar random states, so that larger values correspond to a better ability to explore the Hilbert space, and correlations are computed on this logarithmic scale. We will refer to this as expressibility’ (Expr’), distinguished by the ’ symbol:

\[ \text{Expr}' = -\log_{10} D_{KL}\left( \hat{P}_{PQC}(F; \varTheta) \,\|\, P_{\text{Haar}}(F) \right) \]
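The histogram estimate behind Expr and Expr’ can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the code of Sim et al. (2019) or of this work: it assumes 75 histogram bins and the natural logarithm inside the KL divergence, and it obtains exact Haar bin probabilities from the Haar cumulative distribution \(1 - (1 - F)^{N-1}\). All function names are hypothetical. Fidelities drawn from the Haar law itself should give a small divergence, while a hypothetical idle circuit that always prepares the same state (every fidelity equal to 1) should give a large one.

```python
import math
import random


def haar_bin_probs(bins, n_dim):
    """Mass of P_Haar(F) = (N-1)(1-F)^(N-2) in each of `bins` equal-width bins.

    The Haar CDF is 1 - (1-F)^(N-1), so bin [a, b) has mass (1-a)^(N-1) - (1-b)^(N-1).
    """
    edges = [i / bins for i in range(bins + 1)]
    return [(1 - a) ** (n_dim - 1) - (1 - b) ** (n_dim - 1)
            for a, b in zip(edges[:-1], edges[1:])]


def expressibility(fidelities, n_dim, bins=75):
    """Histogram estimate of Expr = D_KL(P_PQC(F) || P_Haar(F))."""
    counts = [0] * bins
    for f in fidelities:
        counts[min(int(f * bins), bins - 1)] += 1  # F = 1 falls in the last bin
    total = len(fidelities)
    kl = 0.0
    for count, q in zip(counts, haar_bin_probs(bins, n_dim)):
        if count:  # empty bins contribute 0, since p * log(p) -> 0
            p = count / total
            kl += p * math.log(p / max(q, 1e-300))  # floor guards against underflow
    return kl


def expressibility_prime(fidelities, n_dim, bins=75):
    """Expr' = -log10(Expr): larger values mean closer to Haar random."""
    return -math.log10(expressibility(fidelities, n_dim, bins))


def sample_haar_fidelities(n_samples, n_dim, rng):
    """Inverse-CDF sampling from the Haar fidelity law: F = 1 - (1-u)^(1/(N-1))."""
    return [1 - (1 - rng.random()) ** (1 / (n_dim - 1)) for _ in range(n_samples)]


rng = random.Random(0)
N = 2 ** 4                                   # 4 qubits
haar_like = sample_haar_fidelities(5000, N, rng)
idle = [1.0] * 5000                          # hypothetical circuit that never moves
print(expressibility_prime(haar_like, N))    # large: close to Haar random
print(expressibility_prime(idle, N))         # negative: far from Haar random
```

Replacing `idle` with fidelities sampled from an actual PQC turns this into the estimator described above. For large N the Haar mass in the highest-fidelity bins can underflow to zero, which is why the sketch floors the reference probabilities.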

In the same paper, Sim et al. (2019) define a second descriptor named entangling capability. Based on prior art such as work by Schuld et al. (2018) and Kandala et al. (2017), entanglement is thought to allow a PQC to capture non-trivial correlations in the quantum data. Multiple ways to compute this measure exist, but the Meyer–Wallach entanglement measure (Meyer and Wallach 2002), denoted Q, is chosen due to its scalability and ease of computation. It is defined as

\[ Q(|\psi\rangle) = \frac{4}{n} \sum_{j=1}^{n} D\left( \iota_j(0)|\psi\rangle,\ \iota_j(1)|\psi\rangle \right), \qquad D(|u\rangle, |v\rangle) = \frac{1}{2} \sum_{i,k} \left| u_i v_k - u_k v_i \right|^2 \]

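where \(\iota_j(b)\) represents a linear mapping for a system of n qubits that acts on the computational basis with \(b_j \in \{0, 1\}\):

\[ \iota_j(b)\,|b_1 \cdots b_n\rangle = \delta_{b b_j}\,|b_1 \cdots \hat{b}_j \cdots b_n\rangle \]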

Here, the hat in \(\hat{b}_j\) denotes the absence of qubit j. In practice, this measure of the PQC also needs to be approximated by sampling, so Sim et al. (2019) define the estimate of entangling capability (Ent) of the PQC as

\[ \text{Ent} = \frac{1}{|S|} \sum_{\theta_i \in S} Q(|\psi_{\theta_i}\rangle) \]

where S represents the set of sampled circuit parameter vectors 𝜃.
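The Meyer–Wallach measure and the sampled estimate Ent can be sketched directly on state vectors. This is a minimal sketch in plain Python, not the implementation used by Sim et al. (2019); it assumes that qubit 0 is the most significant bit of the basis-state index, and all function names are hypothetical. As sanity checks, a product state should give Q = 0 and a four-qubit GHZ state Q = 1.

```python
import math


def iota(state, n, j, b):
    """Apply iota_j(b): keep amplitudes whose qubit j equals b, then drop qubit j.

    Assumes qubit 0 is the most significant bit of the basis-state index.
    """
    out = [0j] * (2 ** (n - 1))
    shift = n - 1 - j                       # bit position of qubit j
    for idx, amp in enumerate(state):
        if (idx >> shift) & 1 == b:
            high = idx >> (shift + 1)       # qubits 0 .. j-1
            low = idx & ((1 << shift) - 1)  # qubits j+1 .. n-1
            out[(high << shift) | low] = amp
    return out


def generalized_distance(u, v):
    """D(u, v) = 1/2 * sum_{i,k} |u_i v_k - u_k v_i|^2."""
    size = len(u)
    return 0.5 * sum(abs(u[i] * v[k] - u[k] * v[i]) ** 2
                     for i in range(size) for k in range(size))


def meyer_wallach(state, n):
    """Q(|psi>) = 4/n * sum_j D(iota_j(0)|psi>, iota_j(1)|psi>)."""
    return 4.0 / n * sum(generalized_distance(iota(state, n, j, 0), iota(state, n, j, 1))
                         for j in range(n))


def entangling_capability(states, n):
    """Ent: mean of Q over states prepared from sampled parameter vectors."""
    return sum(meyer_wallach(s, n) for s in states) / len(states)


n = 4
product = [1.0] + [0.0] * 15                 # |0000>
ghz = [0.0] * 16
ghz[0] = ghz[15] = 1 / math.sqrt(2)          # (|0000> + |1111>) / sqrt(2)
print(meyer_wallach(product, n), meyer_wallach(ghz, n))
print(entangling_capability([product, ghz], n))
```

In an actual estimate, `states` would be the simulated outputs of the PQC for each sampled parameter vector in S.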

The quantification of both expressibility and entangling capability for the circuits, as found and provided by Sim et al. (2019), is shown in Table 5 in Appendix 1.

3.2 Datasets

Many datasets for the evaluation of classical machine learning algorithms exist (Goldbloom and Hamner 2020). However, classical machine learning is a more mature field than quantum machine learning, and is currently capable of both processing data at larger scales and predicting more complex distributions. We searched for a set of problems of increasing and varying difficulty, but not of a larger problem size, to benchmark against a wider range of problems. We were unable to find any that satisfied our needs, and therefore created a set of nine datasets for the classifiers to fit, as shown in Fig. 1, labelled numerically in the vertical direction and alphabetically in the horizontal direction. Here, we included datasets where the two classes border one another (1a), require one (1b) or more (1c) bends in a decision boundary, entrap one another (2a, 2b, 2c), or fully encapsulate each other (3a, 3b, 3c). Each dataset contains a total of 1500 data points for training, testing hyperparameters, and validation in a ratio of 3 : 1 : 1. The ratio of data points labelled true versus data points labelled false is 1 : 1, i.e., all datasets are balanced. All data points are cleaned and normalized.
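Since the datasets themselves are not reproduced here, the following hypothetical generator only illustrates the stated properties: 1500 balanced points, normalization, and a 3 : 1 : 1 split. The ring geometry loosely resembles the "fully encapsulating" family (3a to 3c) but is not the authors' data; the radii, the ±1 labels (matching the output classes of Section 3.5), and min-max scaling to [0, 1] as the form of normalization are all assumptions.

```python
import math
import random


def make_ring_dataset(n_points=1500, seed=0):
    """Hypothetical balanced dataset: a disc (label -1) encapsulated by a ring (+1).

    Returns (train, validation, test) lists of (x, y, label) in a 3 : 1 : 1 split.
    """
    rng = random.Random(seed)
    points = []
    for i in range(n_points):
        label = 1 if i % 2 else -1                 # alternating labels -> exactly 1 : 1
        r = rng.uniform(0.0, 0.4) if label == -1 else rng.uniform(0.6, 1.0)
        phi = rng.uniform(0.0, 2 * math.pi)
        points.append((r * math.cos(phi), r * math.sin(phi), label))
    for axis in (0, 1):                            # min-max normalise each feature to [0, 1]
        lo = min(p[axis] for p in points)
        hi = max(p[axis] for p in points)
        points = [tuple((v - lo) / (hi - lo) if k == axis else v for k, v in enumerate(p))
                  for p in points]
    rng.shuffle(points)                            # mix the classes before splitting
    n_train, n_val = 3 * n_points // 5, n_points // 5
    return (points[:n_train],
            points[n_train:n_train + n_val],
            points[n_train + n_val:])


train, validation, test = make_ring_dataset()
print(len(train), len(validation), len(test))
```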

3.3 Embedding the data

Various ways to embed classical data into quantum circuits have been proposed, such as Amplitude Encoding (Schuld and Killoran 2019), Product Encoding (Stoudenmire and Schwab 2016), or Squeezed Vacuum State Encoding (Schuld and Killoran 2019). Important distinguishing characteristics are time complexity, memory complexity, and compatibility with prediction circuits, as some prediction algorithms require data in a particular format. This part of the QNN is also referred to as the state preparation circuit, embedding circuit, or feature embedding circuit. However, the existing circuits do not meet our requirements. We search for an embedding that:

- Has as little expressive power as possible, as we want to observe the expressive power of the PQC
- Embeds the data equally in all computational bases, to ensure that circuit templates whose initial gates act on a particular basis have an equal chance of having an observable effect

For this, we propose a novel embedding that we will refer to as the minimally expressive embedding, denoted W(x) in later calculations and shown in Fig. 2. To make sure this embedding circuit holds as little expressive power as possible, we embed the classical data into a quantum state through a linear mapping into the range (0, 2π], used as the parameter of a Rotational X gate. For a single qubit, this can be visualized as embedding the data along a circle on the Bloch sphere, as shown in Fig. 2b. We then use a Rotational Y and a Rotational Z gate to rotate the circle of embedded data by 45° in both the X-axis and the Y-axis. The result is an embedding that, when projected onto the various computational bases, addresses a similar range in every basis, so that there is no bias in favor of circuits that start with specific (Rotational) Pauli gates that could be off-axis for other embeddings. This can be seen in Fig. 2c and d.
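A single-qubit sketch of the minimally expressive embedding may help make the construction concrete. It assumes that the data are min-max normalized to [0, 1] and mapped to the RX angle 2πx, and it reads the 45° rotations as RY(π/4) followed by RZ(π/4); both are our assumptions rather than the exact circuit of Fig. 2, and the function names are hypothetical. The sketch compares the Bloch-vector components reached with and without the two extra rotations.

```python
import cmath
import math


def matvec(m, v):
    """Multiply a 2x2 matrix by a 2-component state vector."""
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]


def rx(t):
    c, s = math.cos(t / 2), math.sin(t / 2)
    return [[c, -1j * s], [-1j * s, c]]


def ry(t):
    c, s = math.cos(t / 2), math.sin(t / 2)
    return [[c, -s], [s, c]]


def rz(t):
    return [[cmath.exp(-0.5j * t), 0], [0, cmath.exp(0.5j * t)]]


def embed(x, tilt=math.pi / 4):
    """Hypothetical W(x) on one qubit: RX(2*pi*x), then RY(tilt) and RZ(tilt)."""
    state = [1 + 0j, 0j]                               # |0>
    for gate in (rx(2 * math.pi * x), ry(tilt), rz(tilt)):
        state = matvec(gate, state)
    return state


def bloch(state):
    """Bloch vector (<X>, <Y>, <Z>) of a pure single-qubit state."""
    a, b = state
    ab = a.conjugate() * b
    return (2 * ab.real, 2 * ab.imag, abs(a) ** 2 - abs(b) ** 2)


xs = [i / 200 for i in range(200)]
for tilt in (0.0, math.pi / 4):
    points = [bloch(embed(x, tilt)) for x in xs]
    print(tilt, [max(abs(p[axis]) for p in points) for axis in range(3)])
```

With the plain RX embedding (tilt 0) the X component stays at zero for every input, whereas after the two rotations each of the X, Y, and Z components sweeps a comparable range (roughly ±0.87, ±0.87, and ±0.71 under these assumptions), which is the property described above.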

We embed the 2-dimensional data in a replicative fashion: data on the x-axis in Fig. 1 is embedded in qubits 0 and 2, and data on the y-axis in qubits 1 and 3. We do this for two reasons:

- 2-dimensional data can be easily visualized for a better understanding of both the data and the fitted decision boundary
- Input redundancy is suggested to provide an advantage in classification accuracy (Vidal and Theis 2019)

Due to computational restrictions and available nodes for simulation, we restricted the data redundancy to a factor of 2.

3.4 The parameterized quantum circuit

In this paper, we aim to explore the correlation between the previously introduced descriptors and classification accuracy for various circuits and numbers of layers. Every experiment follows the same design: a single embedding circuit followed by 1 or 2 circuit templates. For the circuit templates, denoted U(𝜃) when used later, we use the circuits presented in Sim et al. (2019). In that work, the authors introduce circuits with parameterized gates RX, RY, and RZ, as defined in Nielsen and Chuang (2002). The exact layout of the circuit templates can be found in Fig. 5 in Appendix 2 of our paper. All 19 circuits, except circuit 10, are used in the hyperparameter selection runs. All 19 circuits are used in the validation runs.
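The full forward pass, embedding followed by one or two template layers and a Z-basis readout, can be sketched with a small state-vector simulator. The template below (RX and RZ on every qubit followed by a chain of CNOTs) is a hypothetical stand-in, not a reproduction of any specific circuit from Sim et al. (2019); the embedding assumes an RX angle of 2πx followed by RY(π/4) and RZ(π/4), applied with the replicative qubit assignment of Section 3.3. Qubit 0 is taken as the most significant bit, and all names are hypothetical.

```python
import cmath
import math

N_QUBITS = 4


def rx(t):
    c, s = math.cos(t / 2), math.sin(t / 2)
    return [[c, -1j * s], [-1j * s, c]]


def ry(t):
    c, s = math.cos(t / 2), math.sin(t / 2)
    return [[c, -s], [s, c]]


def rz(t):
    return [[cmath.exp(-0.5j * t), 0], [0, cmath.exp(0.5j * t)]]


def apply_1q(state, gate, q, n=N_QUBITS):
    """Apply a 2x2 gate to qubit q (qubit 0 = most significant bit)."""
    shift = n - 1 - q
    out = list(state)
    for idx in range(len(state)):
        if not (idx >> shift) & 1:       # visit each (bit=0, bit=1) amplitude pair once
            jdx = idx | (1 << shift)
            a0, a1 = state[idx], state[jdx]
            out[idx] = gate[0][0] * a0 + gate[0][1] * a1
            out[jdx] = gate[1][0] * a0 + gate[1][1] * a1
    return out


def apply_cnot(state, control, target, n=N_QUBITS):
    """Flip the target bit of every basis state whose control bit is 1."""
    c_shift, t_shift = n - 1 - control, n - 1 - target
    out = list(state)
    for idx in range(len(state)):
        if (idx >> c_shift) & 1:
            out[idx] = state[idx ^ (1 << t_shift)]
    return out


def embed(state, x, y):
    """Replicative embedding: x on qubits 0 and 2, y on qubits 1 and 3."""
    for q, value in enumerate((x, y, x, y)):
        for gate in (rx(2 * math.pi * value), ry(math.pi / 4), rz(math.pi / 4)):
            state = apply_1q(state, gate, q)
    return state


def template(state, params):
    """Hypothetical template layer: RX and RZ on each qubit, then a CNOT chain."""
    for q in range(N_QUBITS):
        state = apply_1q(state, rx(params[2 * q]), q)
        state = apply_1q(state, rz(params[2 * q + 1]), q)
    for q in range(N_QUBITS - 1):
        state = apply_cnot(state, q, q + 1)
    return state


def forward(x, y, layers):
    """Return the 16 outcome probabilities of measuring Z on every qubit."""
    state = [1 + 0j] + [0j] * (2 ** N_QUBITS - 1)     # |0000>
    state = embed(state, x, y)
    for params in layers:                              # 1 or 2 template layers
        state = template(state, params)
    return [abs(a) ** 2 for a in state]


probs = forward(0.3, 0.8, layers=[[0.1 * k for k in range(8)]])
print([round(p, 3) for p in probs])
```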

3.5 Measurement observable and mapping

The circuit needs to be measured repeatedly, although the number of shots can be significantly lower than previously thought (Sweke et al. 2019). For our observable A, we measure Pauli Z on all four qubits. This results in a total of 16 possible outcome states, of which we map the first four and last four to output class −1 and the remaining eight to output class 1, in line with previous work (Havlicek et al. 2018).
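The readout and class mapping can be sketched as follows, assuming that "first four" and "last four" refer to the basis-state indices 0 to 3 and 12 to 15 in binary order with qubit 0 as the most significant bit. The shot-based score is taken as the empirical mean of the mapped labels, and its sign gives the predicted class; this scoring rule, the tie-breaking rule, and all names are hypothetical.

```python
import random
from collections import Counter


def outcome_class(index):
    """Map a 4-qubit Z-basis outcome (integer 0..15) to an output class.

    Outcomes 0-3 and 12-15 map to class -1, the remaining eight to class +1.
    """
    return -1 if index < 4 or index >= 12 else 1


def predict_from_shots(probs, shots, rng):
    """Sample `shots` outcomes from `probs`; return (score, predicted class)."""
    counts = Counter(rng.choices(range(len(probs)), weights=probs, k=shots))
    score = sum(outcome_class(i) * c for i, c in counts.items()) / shots  # in [-1, 1]
    return score, (1 if score >= 0 else -1)   # ties go to +1 (arbitrary choice)


rng = random.Random(0)
uniform = [1 / 16] * 16
print(predict_from_shots(uniform, 1000, rng))  # score fluctuates around 0
```

Under this ordering, outcomes 0 to 3 and 12 to 15 are exactly those in which qubits 0 and 1 agree, so the expected score equals the expectation value of −Z⊗Z⊗I⊗I. Because each shot contributes a ±1 value, the statistical error of the score is at most 1/√shots.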

3.6 Loss function

Loss functions quantify the errors between the predicted class of the sample and the actual class. This can be done in several ways, which creates different loss landscapes. These loss landscapes have different properties for different loss functions, example properties being plateaus, local minima, and global minima that do not align with the true minima. In our work, we will evaluate the L1 loss and L2 loss.

The L1 loss can be seen as the most straightforward loss function, taking the absolute value of the difference between \(y_{\text{true}}\) and \(y_{\text{pred}}\):

\[ L_1 = \left| y_{\text{true}} - y_{\text{pred}} \right| \]

Here, \(y_{\text{pred}} = \langle 0 | W^{\dagger}(x) U^{\dagger}(\theta) A U(\theta) W(x) | 0 \rangle\), with W(x) and U(𝜃) representing the embedding circuit and the circuit template respectively, as previously defined. Furthermore, \(y_{\text{true}}\) is given as ground truth by the input dataset.

The L2 loss does not use the absolute value but instead ensures non-negative outcomes by squaring the difference, which is easier to differentiate: the square is differentiable everywhere, whereas the absolute value is not differentiable at zero. Squaring also penalizes large errors more heavily than small errors. The L2 loss is defined as follows:

\[ L_2 = \left( y_{\text{true}} - y_{\text{pred}} \right)^2 \]

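If both \(y_{\text{true}}\) and \(y_{\text{pred}}\) are restricted to {0, 1}, the L2 loss and the L1 loss are equal, since every difference is 0 or ±1. This is not the case for continuous values such as the expectation value \(y_{\text{pred}}\) above, nor for labels in {−1, 1}, where a misclassification costs 2 under L1 but 4 under L2.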
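The difference between the two losses is easy to check numerically. The sketch assumes that the per-sample losses are averaged over a batch, which the text does not specify; the function names are hypothetical.

```python
def l1_loss(y_true, y_pred):
    """Mean absolute error over a batch (batch averaging is an assumption)."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def l2_loss(y_true, y_pred):
    """Mean squared error over a batch (batch averaging is an assumption)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# {0, 1} labels and hard predictions: every difference is 0 or +-1, so L1 == L2.
print(l1_loss([0, 1, 1], [0, 0, 1]), l2_loss([0, 1, 1], [0, 0, 1]))
# {-1, 1} labels: one misclassification costs 2 under L1 but 4 under L2.
print(l1_loss([1], [-1]), l2_loss([1], [-1]))
# Continuous prediction, e.g. an expectation value: the losses differ again.
print(l1_loss([1], [0.5]), l2_loss([1], [0.5]))
```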

3.7 Optimizer

The task of the optimizer is to find updates to the parameters based on the outcome of the loss function in such a way that, after repeated runs, the loss is minimized. Many approaches make use of the gradient, computed either analytically (Schuld et al. 2019) or approximately (Sweke et al. 2019). Alternative approaches exist, such as genetic optimization strategies (Mirjalili and Sardroudi 2012). Either way, a balance needs to be struck between making jumps large enough to escape local minima and plateaus, and making updates small enough that the optimization does not degrade into a random walk in the loss landscape. In our work, we evaluate the Adam optimizer and the gradient descent optimizer, due to their popularity in classical frameworks and their availability in our implementation framework.
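As an illustration of the two optimizers, the sketch below minimizes a toy one-parameter cost: the expectation of Z after RX(θ) acts on |0⟩, which equals cos θ. Gradients come from the parameter-shift rule, one analytic approach of the kind cited above. The toy cost, the learning rates, the step counts, and the Adam constants β1 = 0.9, β2 = 0.999, ε = 10⁻⁸ (the commonly used defaults) are assumptions for illustration, not the settings used in our experiments.

```python
import math


def cost(theta):
    """Toy cost: <Z> after RX(theta)|0>, which equals cos(theta)."""
    return math.cos(theta)


def parameter_shift_grad(f, theta):
    """Parameter-shift rule for a gate generated by a Pauli operator, e.g. RX."""
    return 0.5 * (f(theta + math.pi / 2) - f(theta - math.pi / 2))


def gradient_descent(f, theta, lr=0.5, steps=100):
    """Plain gradient descent with a fixed learning rate."""
    for _ in range(steps):
        theta -= lr * parameter_shift_grad(f, theta)
    return theta


def adam(f, theta, lr=0.1, steps=300, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam: gradient steps rescaled by running moment estimates."""
    m = v = 0.0
    for t in range(1, steps + 1):
        g = parameter_shift_grad(f, theta)
        m = beta1 * m + (1 - beta1) * g          # first-moment estimate
        v = beta2 * v + (1 - beta2) * g * g      # second-moment estimate
        m_hat = m / (1 - beta1 ** t)             # bias corrections
        v_hat = v / (1 - beta2 ** t)
        theta -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta


theta_gd = gradient_descent(cost, 0.1)
theta_adam = adam(cost, 0.1)
print(cost(theta_gd), cost(theta_adam))  # both close to the minimum of -1
```

Adam rescales each step by a running estimate of the gradient magnitude, so its early steps are close to the learning rate regardless of how small the gradient is; plain gradient descent instead takes steps proportional to the gradient itself.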

3.8 Implementation framework

We originally implemented our work in Qiskit (Abraham 2019), but switched to Pennylane (Bergholm et al. 2018) because it made our hyperparameter search easier.

3.9 Training setup

We will first perform a hyperparameter search on the loss functions L1 and L2, as well as on the optimizers Adam and gradient descent, all introduced previously in this section. We compare their performance in terms of average classification accuracy on the validation data of our nine datasets. As we evaluate 2 loss functions and 2 optimizers using 19 circuits in both 1- and 2-layer configurations on 9 datasets, we have to train a total of 1368 quantum circuits. We simulate these 1368 circuits classically in our previously mentioned implementation framework, Pennylane (Bergholm et al. 2018). We will report on the difference in accuracy between the layer configurations of each circuit, but as each layer configuration has its own value for the descriptors, we treat each layer configuration as a separate data point in our final analysis. The optimal hyperparameters derived through the validation runs are used as settings for the test runs, during which we gather our final accuracy values for all 19 circuits in both 1- and 2-layer configurations evaluated on 9 datasets each. As a sanity check, we will repeat the final experiment three times, requiring a total of 1026 additional simulations.
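The run counts above follow from simple products, assuming, as the quoted totals do, that all 19 circuits enter every configuration:

```latex
\begin{aligned}
\text{hyperparameter runs} &= \underbrace{2}_{\text{losses}} \times \underbrace{2}_{\text{optimizers}} \times \underbrace{19}_{\text{circuits}} \times \underbrace{2}_{\text{layer configs}} \times \underbrace{9}_{\text{datasets}} = 1368,\\
\text{data points per final run} &= 19 \times 2 \times 9 = 342,\\
\text{repeated final runs} &= 3 \times 342 = 1026.
\end{aligned}
```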

3.10 Defining correlation

To determine the correlation, we considered various indicators. We decided on the Pearson product-moment correlation coefficient (Boddy and Laird Smith 2009), as its assumptions about the underlying data distribution fit our data best. In our threats to validity, we make a brief note on linear versus polynomial fitting. We use the Pearson coefficient to determine the correlation between expressibility’ and classification accuracy, as well as between entangling capability and classification accuracy, on the 342 data points described in the previous section. We use these coefficients to draw conclusions on the level of correlation between the descriptors and the classification accuracy.
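For reference, the coefficient and the per-dataset mean ± standard deviation reported later can be computed as in the following sketch. Whether the reported spread is a population or a sample standard deviation is not stated, so the use of `statistics.pstdev` is an assumption, and the function names are hypothetical. On Python 3.10 and later, `statistics.correlation` should give the same coefficient as `pearson`.

```python
import math
import statistics


def pearson(xs, ys):
    """Pearson product-moment correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    norm_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    norm_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (norm_x * norm_y)


def summarize(per_dataset_r):
    """Mean and population standard deviation of per-dataset coefficients."""
    return statistics.mean(per_dataset_r), statistics.pstdev(per_dataset_r)


print(pearson([1, 2, 3, 4], [2, 4, 6, 8]))   # perfectly linear: 1 up to rounding
print(pearson([1, 2, 3], [3, 2, 1]))         # perfectly anti-linear: -1 up to rounding
```

In the setting above, `xs` would hold the expressibility’ (or entangling capability) values of the 38 circuit configurations for one dataset and `ys` their accuracies; `summarize` then aggregates the per-dataset coefficients.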

3.11 Classical neural network

We will also evaluate our datasets using a classical neural network for comparison and as a sanity check. We implemented both 1- and 2-layer versions, each having 16 weights per layer. The activation function is the Rectified Linear Unit (ReLU), and the network is optimized using the Adam optimizer. Everything is implemented in TensorFlow (Abadi 2015).

4 Results

The classification accuracy for the various hyperparameter settings can be found in Appendix 4. In particular, the results for the Adam optimizer with L1 loss can be found in Table 9 and with L2 loss in Table 10; the results for the gradient descent optimizer with L1 loss are in Table 11 and with L2 loss in Table 12. The average classification accuracy across all datasets for the various hyperparameter settings, as well as the number of layers, is summarized in Table 1. Here, we see that the Adam optimizer combined with L2 loss achieves the best classification accuracy, regardless of the number of layers. Treating each hyperparameter separately using a factorial design (Bose 1947), as shown in Table 2, reconfirmed these settings. The outcome of the three validation runs using the L2 loss and Adam optimizer can be found in Appendix 3, Table 7 for the 1-layer runs and Table 8 for the 2-layer runs. We calculated the mean absolute difference between corresponding data points of the runs (342 per run), and found it to be 0.06 with a standard deviation of 0.36. Given this, we expect the correlation not to vary significantly between the runs, which is also what we observe in Table 3, where we show the Pearson product-moment correlation coefficients between the descriptors and classification accuracy for each dataset individually, as well as the mean and standard deviation. We added an extra row where we exclude dataset 2a for reasons described in the “Discussion” section. The relation between expressibility’ and classification accuracy for every dataset is plotted in Fig. 3, and the relation between entangling capability and classification accuracy is plotted in Fig. 4. The average classification accuracy for the classical NN is shown in Table 6 of Appendix 3.

5 Discussion

5.1 Limitations of the experiment

Before discussing the results of our experiment, we will address the limiting factors to place our findings in the correct perspective.

- Our experiment only includes specific quantum circuit designs. In particular, the system always starts with an embedding circuit, followed by 1 or 2 circuit templates, with rotational gates, parameterized rotational gates, and controlled rotational gates.
- We handcrafted 9 different sets of data points of increasing difficulty that we believe to be realistic and representative problems for current-day quantum machine learning algorithms. However, the sets only contain classical data with 2 features. Data encapsulating more complex patterns, or higher dimensional data, might yield different results.
- The 2D data was embedded in a replicative manner on 4 qubits with our proposed minimally expressive embedding circuit. Different embeddings, additional embeddings, or repetitive embeddings are not evaluated, and neither are ancilla qubits.

Furthermore, different descriptors for expressibility and entangling capability exist, as well as different descriptors for the overall power of a PQC (Coyle et al. 2020; Sim et al. 2019; Schuld et al. 2020).

5.2 Correlation using the best hyperparameter settings

For our experimental setup, we have found an average Pearson correlation coefficient between expressibility’ and classification accuracy of 0.64 ± 0.16, as calculated from run 1 in Table 3. However, the plots in Fig. 3 show that dataset 2a contains 33 out of 38 data points close to or at 100% accuracy. As these accuracies are capped, no meaningful correlation can be expected. For this reason, we mark dataset 2a as faulty and exclude it from our evaluation. This brings us to a final mean Pearson correlation coefficient between expressibility’ and classification accuracy of 0.70 ± 0.05. This indicates a strong correlation (Dancey and Reidy 2007) between classification accuracy and expressibility’, which is within expectations (Sim et al. 2019).

Using the same experimental setup, the mean Pearson correlation coefficient between entangling capability and classification accuracy across all datasets is 0.26 ± 0.20. As this experiment suffers from the same ceiling effect for dataset 2a, as observed in Fig. 4, we also exclude dataset 2a from our evaluation of this coefficient. This brings the final mean Pearson correlation coefficient between entangling capability and classification accuracy to 0.32 ± 0.09. This indicates a weak correlation (Dancey and Reidy 2007) between classification accuracy and entangling capability. This is not as expected by Sim et al. (2019), but in line with recent findings that argue that an excess in entanglement between the visible and hidden units in a quantum neural network can hinder learning (Marrero et al. 2020).

5.4 Correlation using the remaining hyperparameters

As a sanity check on our strong results, we calculated the mean Pearson correlation coefficient and its standard deviation for the different hyperparameter settings on the validation data, all with dataset 2a excluded. This is summarized in Table 4. Here we see that for the Adam optimizer with either L1 or L2, we maintain a value that can be classified as a strong correlation. For gradient descent, the claim is weaker, being classified as a moderate to strong correlation (Dancey and Reidy 2007). Examining the data shows that this is caused by the optimizer not performing optimally. In particular, at least half of the circuits on datasets 2c and 3c cannot fit any data, staying around 50% accuracy, and the majority of the circuits are stuck in a local minimum around 80% for dataset 3b. This is not surprising, as we saw in Table 2 that the average classification accuracy with the gradient descent optimizer is lower than with the Adam optimizer.

5.5 Limitations of the descriptor

The original paper by Sim et al. (2019) contains 19 circuits with 1 to 5 layers. In their paper, they note that expressibility values appear to saturate for all circuits, albeit at different levels. The lowest value of expressibility that they present is 0.0026, which in our measure of expressibility’ corresponds to −log10(0.0026) = 2.59. In our experiments, we tested 6 circuits that have a value of expressibility’ higher than 2, meaning an expressibility smaller than 0.01. The best average accuracy achieved by any of our circuits is 97.7%. Still, this includes a fit of only 92.3% for dataset 3b. In comparison, a classical neural network containing 2 layers with 16 weights is able to achieve an average classification accuracy of 99% without any hyperparameter tuning, as shown in Table 6. The same pattern holds when comparing against state-of-the-art neural network architectures. Most quantum classifiers are still being evaluated on small datasets such as ours (Schuld and Killoran 2019; Havlicek et al. 2018), or on datasets such as MNIST (Deng 2012). Classical machine learning models, on the other hand, are being evaluated on larger and more complex datasets, such as ImageNet (Deng et al. 2009). Although this may be purely due to the infancy of current systems, which limits the size of the input data, it still appears that adding extra layers does not circumvent the saturation. We believe a hint might lie in the following reasoning: it is not only important for a classifier to be able to uniformly address a large Hilbert space; it also requires a repeated non-linear mapping between these spaces. As a thought experiment, consider a circuit that embeds linearly increasing classical data with a single rotational Pauli X gate. In this scenario, one would not expect to be able to find a separating plane between the two classes without remapping the data.

We believe that this is also the reason why, in classical neural networks, the data is repeatedly mapped between feature spaces by repeated layers of linear neurons and non-linear activation functions. We believe that quantum circuits need to be designed and evaluated in such a manner too. Recent research addresses this by alternating layers of embedding and trainable circuit layers (Pérez-Salinas et al. 2020), thereby breaking linearity, although Schuld et al. (2020) make the point that classical systems can achieve a similar effect with similar resources, suggesting that quantum resources are not necessary for ML in this setting. The investigation into the effect of the design on our descriptors is marked as future work.

5.6 Threats to validity

We are aware that different hyperparameters, different values drawn from the probability distribution used to initialize the weights, and different datasets can yield different results. We have aimed to account for this effect by performing a hyperparameter search to find the best hyperparameters for our main result, while still evaluating the remaining hyperparameters to confirm our results. We also accounted for different values drawn from the initialization distribution of the weights by repeating our experiments three times on 9 different datasets of increasing difficulty. For fitting our correlation indicator, we considered 1st- up to 3rd-order polynomials, and did not observe a noticeable difference.

6 Conclusion

In our work, we have found a mean Pearson product-moment correlation coefficient of 0.7 ± 0.05 between classification accuracy and expressibility’, indicating a strong correlation (Dancey and Reidy 2007). This numerically derived outcome is calculated using 342 data points. These data points were generated using 19 circuits, in both 1- and 2-layer configurations, evaluated on 9 custom datasets of increasing difficulty. Similar investigation on the other hyperparameters yielded mean coefficients between 0.46 and 0.7, indicating a moderate to strong positive correlation (Dancey and Reidy 2007). This combination leads us to conclude that, for our experiments, a moderate to strong correlation between classification accuracy and expressibility’ exists. Here, expressibility’ is based on the definition of expressibility proposed by Sim et al. (2019), and is meant to capture the ability of a parameterized quantum circuit in a hybrid quantum-classical framework to uniformly address the Hilbert space. It is calculated by taking the negative log of the Kullback–Leibler divergence between the estimated fidelity distribution of the PQC and that of the ensemble of Haar random states. This outcome is in line with expectations by Sim et al. (2019).

Our experiment is limited to PQCs that follow a specific pattern of concatenating an embedding layer with one or more circuit templates. It has been suggested that this circuit setup limits the classification performance (Schuld et al. 2020). Further investigation into more elaborate designs that break linearity after embedding is required, for example by repeated alternation between embedding layers and trainable layers (Schuld et al. 2020). Such designs would potentially not be captured by the expressibility’ measure, and further investigation into extending the measure is required.

We have also investigated the correlation between entangling capability of a circuit and its classification accuracy, where entangling capability was measured as the Meyer-Wallach entanglement measure (Meyer and Wallach 2002). The outcome was a weak correlation, based on a similar experimental setup that yielded a mean Pearson product-moment correlation of 0.32 ± 0.09 (Dancey and Reidy 2007). This is not as expected by Sim et al. (2019), but in line with recent findings by Marrero et al. (2020).

References

Abadi M (2015) TensorFlow: Large-scale machine learning on heterogeneous systems. http://tensorflow.org/, Software available from tensorflow.org

Abraham H (2019) Qiskit: An open-source framework for quantum computing

Arute F, Arya K, Babbush R, Bacon D, Bardin JC, Barends R, Biswas R, et al. (2019) Quantum supremacy using a programmable superconducting processor. Nature 574 (7779):505–510

Bergholm V, Izaac J, Schuld M, Gogolin C, Blank C, McKiernan K, Killoran N (2018) Pennylane. arXiv:1811.04968

Boddy R, Laird Smith G (2009) Statistical methods in practice: for scientists and technologists. Wiley, Chichester, UK

Bose RC (1947) Mathematical theory of the symmetrical factorial design. Sankhyā: The Indian Journal of Statistics, pp 107–166

Bravyi S, Gosset D, Koenig R, Tomamichel M (2020) Quantum advantage with noisy shallow circuits. Nature Physics 16(10):1040–1045

Coyle B, Mills D, Danos V, Kashefi E (2020) The Born supremacy: quantum advantage and training of an Ising Born machine. npj Quantum Information 6(1):1–11

Dancey CP, Reidy J (2007) Statistics without maths for psychology. Pearson Education

Deng J, Dong W, Socher R, Li L-J, Li K, Fei-Fei L (2009) ImageNet: a large-scale hierarchical image database. In: IEEE Conference on Computer Vision and Pattern Recognition, pp 248–255

Deng L (2012) The MNIST database of handwritten digit images for machine learning research. IEEE Signal Processing Magazine 29(6):141–142

Farhi E, Goldstone J, Gutmann S (2014) A quantum approximate optimization algorithm. arXiv:1411.4028

Farhi E, Neven H (2018) Classification with quantum neural networks on near term processors

Goldbloom A, Hamner B (2020) Kaggle. https://www.kaggle.com/

Havlicek V, Córcoles AD, Temme K, Harrow AW, Kandala A, Chow JM, Gambetta JM (2018) Supervised learning with quantum enhanced feature spaces. Nature 567:209–212. https://doi.org/10.1038/s41586-019-0980-2, arXiv:1804.11326

Kandala A, Mezzacapo A, Temme K, Takita M, Brink M, Chow JM, Gambetta JM (2017) Hardware-efficient variational quantum eigensolver for small molecules and quantum magnets. Nature 549(7671):242–246

Kutin S (2016) qpic. https://github.com/qpic/qpic

Marrero CO, Kieferová M, Wiebe N (2020) Entanglement induced barren plateaus. arXiv:2010.15968

McClean JR, Romero J, Babbush R, Aspuru-Guzik A (2016) The theory of variational hybrid quantum-classical algorithms. New J Phys 18:023023

Meyer DA, Wallach NR (2002) Global entanglement in multiparticle systems. Journal of Mathematical Physics 43(9):4273–4278

Mirjalili SZMH, Sardroudi HM (2012) Stochastic gradient descent for hybrid quantum-classical optimization. Applied Mathematics and Computation 218(22):11125–11137

Nielsen MA, Chuang IL (2002) Quantum computation and quantum information. Cambridge University Press, Cambridge

Peruzzo A, McClean J, Shadbolt P, Yung M-H, Zhou X-Q, Love PJ, Aspuru-Guzik A, O’Brien JL (2014) A variational eigenvalue solver on a photonic quantum processor. Nature Communications 5:4213

Preskill J (2018) Quantum computing in the NISQ era and beyond. Quantum 2:79

Pérez-Salinas A, Cervera-Lierta A, Gil-Fuster E, Latorre JI (2020) Data re-uploading for a universal quantum classifier. Quantum 4:226

Riste D, da Silva MP, Ryan CA, Cross AW, Córcoles AD, Smolin JA, Gambetta JM, Chow JM, Johnson BR (2017) Demonstration of quantum advantage in machine learning. npj Quantum Information 3(1):1–5

Schuld M, Bergholm V, Gogolin C, Izaac J, Killoran N (2019) Evaluating analytic gradients on quantum hardware. Physical Review A 99(3):032331

Schuld M, Bocharov A, Svore K, Wiebe N (2018) Circuit-centric quantum classifiers

Schuld M, Killoran N (2019) Quantum machine learning in feature Hilbert spaces. Physical Review Letters 122(4):040504

Schuld M, Sweke R, Meyer JJ (2020) The effect of data encoding on the expressive power of variational quantum machine learning models. arXiv:2008.08605

Sim S, Johnson PD, Aspuru-Guzik A (2019) Expressibility and entangling capability of parameterized quantum circuits for hybrid quantum-classical algorithms. Advanced Quantum Technologies 2(12):1900070. https://doi.org/10.1002/qute.201900070, arXiv:1905.10876

Stoudenmire EM, Schwab DJ (2016) Supervised learning with quantum-inspired tensor networks. arXiv:1605.05775

Sweke R, Wilde F, Meyer J, Schuld M, Fährmann PK, Meynard-Piganeau B, Eisert J (2019) Stochastic gradient descent for hybrid quantum-classical optimization. arXiv:1910.01155

Vidal JG, Theis DO (2019) Input redundancy for parameterized quantum circuits. arXiv:1901.11434

Życzkowski K, Sommers H-J (2005) Average fidelity between random quantum states. Phys Rev A 71:032313

Acknowledgements

The authors would like to thank Sukin Sim for providing the data points in Table 5 and valuable feedback; Nicola Pancotti and Christoph Segler for providing feedback on our statistical approach; Jonas Haferkamp and Jordi Brugués for discussions on measures of expressibility and t-designs; and Ryan Sweke for discussions on function classes. TH has been supported by the BMWi (PlanQK).

Funding

Open Access funding enabled and organized by Projekt DEAL.


Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1: Expressibility and entangling capability

Appendix 2: Circuits

Circuit templates evaluated in this study. Gates are as defined in Nielsen and Chuang (2002), and all rotational gates are parameterized. Circuit diagrams were generated using qpic (Kutin 2016). Notice regarding the choice of circuit templates and the visualizations of these circuits: Copyright Wiley-VCH GmbH. Reproduced with permission. Source: Sukin Sim, Peter D. Johnson, and Alán Aspuru-Guzik. “Expressibility and Entangling Capability of Parameterized Quantum Circuits for Hybrid Quantum-Classical Algorithms.” Advanced Quantum Technologies 2.12 (2019): 1900070. Page 8.

Appendix 3: Test results

Appendix 4: Hyperparameter search results

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hubregtsen, T., Pichlmeier, J., Stecher, P. et al. Evaluation of parameterized quantum circuits: on the relation between classification accuracy, expressibility, and entangling capability. Quantum Mach. Intell. 3, 9 (2021). https://doi.org/10.1007/s42484-021-00038-w


DOI: https://doi.org/10.1007/s42484-021-00038-w