Abstract

The present study aims to compare SARIMA and Holt–Winters model forecasts of mean monthly flow at the V Aniversario basin, western Cuba. Model selection and model assessment are carried out with a rolling cross-validation scheme using mean monthly flow observations from the period 1971–1990. Model performance is analyzed in one- and two-year forecast lead times, and comparisons are made based on mean squared error, root mean squared error, mean absolute error and the Nash–Sutcliffe efficiency; all these statistics are computed from observed and simulated time series at the outlet of the basin. The major findings show that Holt–Winters models had better performance in reproducing the mean series seasonality when the training observations were insufficient, while for longer training subsets, both models were equally competitive in forecasting one year ahead. SARIMA models were found to be more reliable for longer lead-time forecasts, and their limitations after being trained on short observation periods are due to overfitting problems.

Article Highlights

- Comparison based on rolling cross-validation revealed the sensitivity of the model forecasts to the amount of available observations.

- HW models perform better with limited observations and SARIMA models in longer-horizon forecasting; otherwise, they perform similarly.

- HW models were superior in modeling less variable monthly flows, while SARIMA models better forecast the highly variable periods.


1 Introduction

Streamflow forecasting is of great importance to water resources management and planning. Medium- to long-term forecasting at weekly, monthly, seasonal, or even annual time scales is particularly useful in reservoir operations and irrigation management. For this reason, a large number of time-series models have been developed for streamflow forecasting [1, 2]. According to general modeling theory, streamflow forecasting models are classified into physical/conceptual and data-driven models. Because the former describe the system processes in detail, they are mostly limited by their parameter and process-information requirements, while data-driven models can be more suitable in a data-rich environment. Data-driven models require minimal prior knowledge about catchment hydrological processes, offer rapid fitting and simulation times, and have been found to be accurate in various hydrological forecasting applications [3].

Data-driven or statistical models are mainly divided into stochastic methods and machine learning (ML) algorithms, which correspond to the analytical and algorithmic modeling cultures, respectively, and to some extent also to the linearity and nonlinearity of the data relations to be modelled [4]. Both types of models have been applied in hydrology, with a shorter history for ML methods due to relatively recent advances in computation speed. Several recent studies have evaluated the performance of ML techniques (e.g. gene expression programming, Bayesian networks, multivariate adaptive regression splines), stochastic time-series models (e.g. autoregressive, autoregressive moving average) and their combinations in forecasting monthly flows, concluding that stochastic models perform better than single ML algorithms but that all models are inferior to hybrid stochastic-ML models [5,6,7]. Zhu et al. [3] used a mixture-kernel Gaussian process regression (GPR) approach to forecast seasonal streamflows, obtaining good forecast quality. Elganiny & Eldwer [8] compared a deseasonalized autoregressive moving average model and an artificial neural network (ANN) to predict monthly flows in the River Nile, obtaining better performance for the ML method. Other studies, at various time scales and using different hydrological time series, have shown that ML models are as accurate as stochastic forecasting approaches [4, 9].

In this work, we focus on classical univariate time-series models to test them by a rolling cross-validation scheme. We have selected for their application the most popular automatic forecasting methods: autoregressive integrated moving average (ARIMA) and exponential smoothing models [10]. Developed after Box and Jenkins [11], ARIMA models have evolved to deal with non-stationary series. For this, it is possible to find formulations like the all-in-one seasonal forecasting equation (SARIMA) or ARMA modeling on a deseasonalized series (DARMA) or periodic ARMA model for specific (e.g. each month) periods (PARMA). All these models have applications in hydrology [e.g. 12] and specifically in streamflow forecasting [e.g. 13–18]. On the other hand, exponential smoothing techniques were introduced by Holt in the 1950s and later extended by Winters at the beginning of the 1960s [19]. The triple exponential approach, also known as the Holt–Winters model (HW), allows modeling time-series level, trend, and seasonality by the use of three updating equations and multi-step forecasting with a simple linear combination of these elements. The approach is popular across disciplines, including hydrology, where HW models have been extensively applied. For example, applications include the forecast of the daily water level in a reservoir [20], rainfall and temperature series predictions [21,22,23], and the forecast of sewage inflow into a wastewater treatment plant [24].

Comparative studies of these models can be found in various disciplines and statistical research papers [e.g. 10, 25, 26]. Hyndman et al. [10] concluded that both approaches are equally competitive, and each one has its strengths and weaknesses. They mentioned that exponential smoothing models are all non-stationary by definition, so if a stationary model is required, ARIMA models are better, while time series exhibiting nonlinear characteristics may be better modelled using exponential smoothing models. Hyndman et al. [26] show that exponential smoothing models performed better than the ARIMA models for the seasonal M3 data (real business and economic time series from the M3 Makridakis Competition Dataset), with only the exception of the annual M3 data. In hydrology, recently Papacharalampous et al. [9] investigated the predictability of monthly temperature and precipitation using automatic time-series forecasting methods, and they found that all models (including specific exponential smoothing and ARIMA models) are accurate enough to be used in long-term applications. Papacharalampous et al. [4] found these stochastic models are equally competitive and concluded that the forecast quality is subject to the nature of the examined problem and study design.

In general, most of the above-mentioned studies divide the series observations into two fixed subsets, one for training the model and one for assessing its performance. Usually, the latter period corresponds to the most recent observations and its extension depends on forecasting scales and goals. In James et al. [27], the considerable variability of test errors depending on precisely which observations are included in the training set and in the testing set is presented. In this sense, a cross-validation method can be a more accurate and informative way to estimate the model structure and judge its performance. Cross-validation is a resampling technique that iteratively changes the training and testing samples in order to obtain additional information about the fitted models and their associated test errors [27]. In time-series analysis, the order of observations is important, and they cannot be dropped out randomly. Cross-validation is implemented by varying repeatedly the training and test subsets in a rolling forward scheme. Using this approach, information can be obtained that would not be available from fitting the model only once [27].

The present study aims to compare SARIMA and Holt–Winters model forecasts of mean monthly flow at the V Aniversario basin. Model selection and model assessment are carried out by a rolling cross-validation scheme. The study is also of interest because of the geomorphological peculiarities of the basin, which has a long subterranean cave system that may influence the rainfall–runoff relationships and thus the autocorrelation structure of streamflow. In addition, the study is relevant both at the site-specific and at the global scale, given the traditional lack of information regarding hydrological forecasts in Cuba. Many global hydrological initiatives have found the country to be a “white space” of data and research, such as the recent large-sample hydrology datasets analyzed in Addor et al. [28]. These global initiatives are fundamental to derive robust conclusions on hydrological processes and models [28]. Moreover, the complexity and site-specific dependence of the variables driving streamflow [29] must be taken into account if hydrological studies are to be generalized.

2 Materials and methods

2.1 Case study

The study focuses on monthly streamflows from the V Aniversario basin, Cuyaguateje River, Pinar del Río Province, Cuba. The basin is located between 22°24′6″–22°35′40″N and 83°47′55″–83°56′9″W and has an area of 157 km². Figure 1 illustrates the geography of the study area. Its latitudinal elongation, like that of most large basins in Cuba, favors a runoff generation greater than in other karstic systems in the country. The entire Cuyaguateje River basin is one of the biggest in Cuba, with an area of 723 km², in which tobacco cultivation is extensive, constituting a major resource for the national industry [30]. Most of the irrigation for tobacco plantations depends on the Cuyaguateje River and its tributaries [31].

The region has a subtropical climate, with precipitation occurring mostly during a well-defined wet season from May to October. The mean annual climatic characteristics, based on climatological records from 1965 to 1996, are: precipitation 1766 mm, evaporation 1860 mm (1968–1994), temperature 25.1 °C, and humidity that oscillates between 77% and 82% during the dry and rainy seasons, respectively [30].

The basin geomorphology is characterized by an anticlinal chain of mountains. In the northern part of the basin, the landscape is shaped by steep-sided limestone hills (mogotes) and numerous cultivated valleys. The maximum elevation is around 590 m a.s.l. The basin has undergone very intensive (geological) erosion and lies over karstic rock with an extensive subterranean cave system [32].

2.2 Discharge series

In this study, we use the mean monthly flow (Q) observations measured at the basin outlet (hydrometric station) during the period 1971–1990. Figure 2 shows the monthly flow values and the nonparametric Mann–Kendall trend line [33, 34]. The statistical test indicated no significant trend or shift in the series values (p value = 0.4). The most notable feature of the time series is the seasonal pattern alternating between dry and rainy periods. Figure 3 shows the distribution of monthly flow observations. In this figure, one can observe the average seasonality, with a bimodal distribution during the year that is typical in Cuba. The monthly flows present a maximum peak in September and a secondary peak in June. The mean annual streamflow is 3.3 m³/s, and the maximum recorded monthly flow was 27.8 m³/s.
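As an illustration of the trend test mentioned above, the following is a minimal sketch of the two-sided Mann–Kendall test in Python. The function name `mann_kendall` is hypothetical, the variance of S is computed without a tie correction (an assumption made here for brevity), and the p-value uses the usual normal approximation; the implementation actually used in the study is not specified in the text.

```python
import math

def mann_kendall(series):
    """Return (S, z, p) for the two-sided Mann-Kendall trend test.

    Assumption: no tie correction is applied to the variance of S.
    """
    n = len(series)
    s = 0
    # S counts concordant minus discordant pairs (later value vs. earlier value)
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = series[j] - series[i]
            s += (diff > 0) - (diff < 0)
    var_s = n * (n - 1) * (2 * n + 5) / 18.0
    # continuity-corrected standard normal statistic
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    # two-sided p-value from the standard normal distribution
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return s, z, p
```

A large p-value, such as the 0.4 reported for this series, means the null hypothesis of no monotonic trend is not rejected.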

2.3 Time-series models

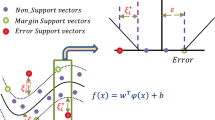

2.3.1 SARIMA model

Non-stationary series can be modeled using the Seasonal Autoregressive Integrated Moving Average (SARIMA) model with orders (p,d,q)(P,D,Q)\(_{s}\). The model uses differencing of the series and includes seasonal and non-seasonal polynomials. The series {\({x}_{t}\)} follows a SARIMA process if the following equation holds, using the terminology of Cowpertwait and Metcalfe [35]:

\[{\Theta }_{P}({B}^{s}){\theta }_{p}(B){(1-{B}^{s})}^{D}{(1-B)}^{d}{x}_{t}={\Phi }_{Q}({B}^{s}){\phi }_{q}(B){w}_{t} \tag{1}\]

where \({\Theta }_{P}\) and \({\theta }_{p}\) are the seasonal and non-seasonal autoregressive polynomials of orders P and p, respectively; \({\Phi }_{Q}\) and \({\phi }_{q}\) are the seasonal and non-seasonal moving average polynomials of orders Q and q, respectively; B is the backshift operator, \(B{x}_{t}={x}_{t-1}\); \({w}_{t}\) is the innovation term; D is the number of seasonal differences; d is the number of regular differences; and s is the season duration.

We use the built-in R functions arima and predict from the stats package for model fitting and multi-step-ahead point forecasts. By default, the arima function uses the conditional sum-of-squares to find starting parameter values and then fits the model by maximum likelihood. In the present work, the selection of the best model orders was based on the Consistent Akaike Information Criterion (CAIC) [36], which was implemented in an iterative function allowing the orders to vary between 0 and 2.

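CAIC is defined by:

\[\mathrm{CAIC}=-2\,\mathrm{ln} L({\widehat{\theta }}_{k})+k(\mathrm{ln}\,n+1) \tag{2}\]

where k is the number of model parameters, \(\mathrm{ln} L({\widehat{\theta }}_{k})\) is the log-likelihood value, and n is the time-series length. The model with the lowest CAIC is preferred.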
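The order search described above can be sketched in Python as follows. This is only an illustration: the names `caic`, `candidate_orders` and `select_by_caic` are hypothetical, the sketch assumes that all six orders (p, d, q, P, D, Q) vary between 0 and 2, and it takes the log-likelihoods as given instead of fitting the models, which in the study is done with R's arima.

```python
import itertools
import math

def caic(log_likelihood, k, n):
    """Consistent AIC: -2 ln L + k (ln n + 1)."""
    return -2.0 * log_likelihood + k * (math.log(n) + 1.0)

def candidate_orders(max_order=2):
    """All (p, d, q, P, D, Q) tuples with each order in 0..max_order."""
    return list(itertools.product(range(max_order + 1), repeat=6))

def select_by_caic(fits, n):
    """Pick the order tuple with the smallest CAIC.

    `fits` maps an order tuple to (log_likelihood, k). Producing it
    requires fitting every candidate, which is outside this sketch.
    """
    return min(fits, key=lambda order: caic(fits[order][0], fits[order][1], n))
```

Under the stated assumption, the grid contains 3^6 = 729 candidate structures. Because the penalty term grows with ln n, CAIC penalizes additional parameters more strongly than AIC for any series of practical length.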

2.3.2 Holt–Winters model

The Holt–Winters or triple exponential smoothing approach [19] uses exponentially weighted moving averages to estimate the local mean, trend, and seasonality. For a series {\({x}_{t}\)} with period p, the updating equations for Holt–Winters’ additive method are:

\[{a}_{t}=\alpha ({x}_{t}-{s}_{t-p})+(1-\alpha )({a}_{t-1}+{b}_{t-1}) \tag{3}\]

\[{b}_{t}=\beta ({a}_{t}-{a}_{t-1})+(1-\beta ){b}_{t-1} \tag{4}\]

\[{s}_{t}=\gamma ({x}_{t}-{a}_{t})+(1-\gamma ){s}_{t-p} \tag{5}\]

where \({a}_{t}\), \({b}_{t}\) and \({s}_{t}\) are the smoothed estimates of level, trend, and seasonality at time t, respectively; α, β and γ are smoothing parameters. These parameters, each between 0 and 1, control the relative importance of recent and older observations; the weights of older observations decay exponentially.

The k-step forecasting equation for \({x}_{n+k}\) at time n is:

\[{\widehat{x}}_{n+k|n}={a}_{n}+k{b}_{n}+{s}_{n+k-p},\quad k\le p \tag{6}\]

where \({a}_{n}\) and \({b}_{n}\) are the estimated level and trend at time n, respectively; \(k{b}_{n}\) is the trend extrapolation; and \({s}_{n+k-p}\) is the appropriate seasonal effect (i.e., in a monthly series with period p = 12, \({s}_{n+k-12}\) corresponds to the estimated seasonal effect for the same month of the previous year).

We use the built-in R functions HoltWinters and predict from the stats package for fitting the Holt–Winters model and for multi-step-ahead point forecasts. Herein, the initial values of level, trend and seasonality are obtained from a simple series decomposition, and the optimal smoothing parameters are obtained by minimizing the sum of squared one-step prediction errors (SS1PE).
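The additive updating and forecasting steps described in this section can be sketched in plain Python as follows. This is an illustration only, not the R implementation used in the study: the function names are hypothetical, the smoothing parameters and initial states are passed in by the caller instead of being optimized or derived from a decomposition, and for lead times beyond one period the sketch simply reuses the most recent seasonal estimates.

```python
def holt_winters_additive(x, p, alpha, beta, gamma, level0, trend0, seasonal0):
    """Run the additive Holt-Winters updates over the series x.

    seasonal0 holds the p initial seasonal effects, ordered so that
    seasonal0[0] applies to x[0]. Returns (level, trend, seasonals),
    where seasonals[0] is the effect for the first step after the series.
    """
    if len(seasonal0) != p:
        raise ValueError("seasonal0 must contain p values")
    a, b = level0, trend0
    s = list(seasonal0)  # s[t % p] stores the latest estimate for that phase
    for t, xt in enumerate(x):
        s_prev = s[t % p]  # seasonal effect estimated one period earlier
        a_new = alpha * (xt - s_prev) + (1 - alpha) * (a + b)
        b = beta * (a_new - a) + (1 - beta) * b
        s[t % p] = gamma * (xt - a_new) + (1 - gamma) * s_prev
        a = a_new
    n = len(x)
    # reorder so the list starts at the phase of the next time step
    seasonals = [s[(n + i) % p] for i in range(p)]
    return a, b, seasonals

def holt_winters_forecast(level, trend, seasonals, k_max):
    """Point forecasts level + k * trend + seasonal effect for k = 1..k_max."""
    p = len(seasonals)
    return [level + k * trend + seasonals[(k - 1) % p]
            for k in range(1, k_max + 1)]
```

With a near-zero trend estimate, forecasts for the second year repeat those of the first year, since the same seasonal effects are added to an almost unchanged level.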

2.4 Model selection and cross-validation

Model selection and model assessment are performed by a rolling cross-validation scheme in which the training and test subsets are systematically changed moving forward in time. The models are always evaluated for lead times of one and two years beyond the training period. The first training subset uses the period between 1971 and 1980; it is then iteratively extended by two years at a time, giving five training subsets, the last of which uses observations from 1971 to 1988. The error measures explained below are used to test the model forecasts and also to select the best model structure from the cross-validation experiment.
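The rolling scheme described above can be sketched as a small Python generator. The function names and the year-based interface are hypothetical and chosen only for illustration; the sketch assumes an expanding training window that always starts in the first year of the record.

```python
def rolling_origin_splits(first_year, first_train_end, last_train_end, step, horizon):
    """Yield ((train_start, train_end), (test_start, test_end)) in years.

    The training window always starts at first_year and grows by `step`
    years; the test window covers the `horizon` years that follow it.
    """
    train_end = first_train_end
    while train_end <= last_train_end:
        yield (first_year, train_end), (train_end + 1, train_end + horizon)
        train_end += step

def monthly_slice(values, series_start_year, first_year, last_year):
    """Return the monthly values from January first_year to December last_year."""
    start = (first_year - series_start_year) * 12
    stop = (last_year - series_start_year + 1) * 12
    return values[start:stop]
```

Calling `rolling_origin_splits(1971, 1980, 1988, 2, 2)` gives the five training periods ending in 1980, 1982, 1984, 1986 and 1988, each followed by a two-year test period; setting `horizon=1` gives the corresponding one-year test periods.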

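The model performance is assessed with the mean squared error (MSE), root mean squared error (RMSE), mean absolute error (MAE) and the Nash–Sutcliffe efficiency (NSE). The latter statistic, developed by Nash and Sutcliffe [37], measures how much of the variability in the observations is explained by the simulations. NSE better represents point-specific closeness as compared to the global deviations of Eqs. 7 and 8 and the mass balance of Eq. 9. Given observed \({x}_{t}\) and estimated \({\widehat{x}}_{t}\) series:

\[\mathrm{MSE}=\frac{1}{n}\sum_{t=1}^{n}{({x}_{t}-{\widehat{x}}_{t})}^{2} \tag{7}\]

\[\mathrm{RMSE}=\sqrt{\frac{1}{n}\sum_{t=1}^{n}{({x}_{t}-{\widehat{x}}_{t})}^{2}} \tag{8}\]

\[\mathrm{MAE}=\frac{1}{n}\sum_{t=1}^{n}\left|{x}_{t}-{\widehat{x}}_{t}\right| \tag{9}\]

\[\mathrm{NSE}=1-\frac{\sum_{t=1}^{n}{({x}_{t}-{\widehat{x}}_{t})}^{2}}{\sum_{t=1}^{n}{({x}_{t}-\overline{x})}^{2}} \tag{10}\]

where n is the number of values in the evaluated period and \(\overline{x}\) is the mean of the observations in that period.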
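The four measures named above can be computed with a few lines of Python. This is a minimal sketch using the standard definitions; the function names are hypothetical, and `obs` and `sim` are assumed to be equally long sequences of observed and forecasted flows.

```python
import math

def mse(obs, sim):
    """Mean squared error."""
    return sum((o - s) ** 2 for o, s in zip(obs, sim)) / len(obs)

def rmse(obs, sim):
    """Root mean squared error, in the units of the observations."""
    return math.sqrt(mse(obs, sim))

def mae(obs, sim):
    """Mean absolute error."""
    return sum(abs(o - s) for o, s in zip(obs, sim)) / len(obs)

def nse(obs, sim):
    """Nash-Sutcliffe efficiency: 1 minus error sum of squares over
    the sum of squared deviations of the observations from their mean."""
    mean_obs = sum(obs) / len(obs)
    num = sum((o - s) ** 2 for o, s in zip(obs, sim))
    den = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - num / den
```

NSE equals 1 for a perfect forecast and 0 for a forecast that is as good as the mean of the observations; a negative value means that the observed mean would have been a better predictor than the model.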

3 Results and discussion

In this section, we present the results of SARIMA and Holt–Winters modelled one- and two-year forecasts. As model structure may change along the cross-validation procedure, we first discuss the results from the model assessment perspective and later from the model selection perspective. Herein, the discussion focuses on finding distinct features between the models and from the cross-validation procedure.

3.1 Model assessment

Table 1 shows the forecasting errors for the SARIMA and HW realizations at one- and two-year lead times. In contrast to the other error metrics used, the Nash–Sutcliffe efficiency should be maximized [4]. The years in the first row of Table 1 are abbreviated to the last two digits, but they all correspond to the 1980s. The last column shows the errors averaged over the test sets.

Looking at the averaged errors (herein used to refer to MSE, RMSE and MAE) and efficiencies, the HW model appears to perform somewhat better than the SARIMA model. This holds for one- and two-year forecasts and for all metrics. The averaged NSE values for SARIMA are even negative, while for HW the values are somewhat better but still show a poor representation of the observed flow variability. Nevertheless, other peculiar features arise when looking at the individual testing subsets.

Inspecting the model accuracy within a rolling cross-validation scheme made it possible to see how both approaches respond to the size of the training data through its impact on the final forecast quality. Note in Table 1 how SARIMA’s errors (efficiencies) decrease (increase) considerably over the test sets, reaching in the last two testing subsets a performance very similar to, or even better than, that of the HW models. The SARIMA models continued to improve in predictability as the training period became longer, while the errors of the HW models appeared randomly distributed. These behaviors are a consequence of the overfitting characteristics of each model structure, which are discussed below. Returning to the evolution of the metrics, the constant improvement of NSE with the increase in training observations in the HW models is particularly clear, which suggests that the model better captures the observed intra- and inter-annual variability as the number of process cycles increases. In conclusion, the HW models have more potential (at least in reproducing climatology) when the univariate time series is ‘short’, while both models are competitive when longer time series are used. In the present analysis, the threshold of sufficient data is around 15 years.

Comparing the averaged statistics between lead times, one can note somewhat better results for the longer (two-year) periods in both models. This can be explained by the fact that the longer the validation period, the higher the chance that a statistical model matches, on average, the mean behavior of the observed series. Nevertheless, when looking at the individual testing sets, some differences arise between the two approaches. SARIMA models always perform better for the longer forecast interval (two years ahead), while the HW model errors show no clear pattern but are predominantly worse for two-year forecasts. On this basis, one can argue that SARIMA models are more reliable when forecasting longer intervals. Compared to HW, SARIMA models are more flexible in fitting highly random systems such as hydrological systems, mainly in long-range forecasting. In contrast, the HW approach resulted in a static replication of the monthly forecasts in the second year.

In order to test for overfitting in the fitted model equations, the SARIMA and HW training errors are presented in Table 2. Comparing the models’ errors on the dependent data with those on the independent data reported in Table 1, each model has peculiarities that should be analyzed separately. In the case of HW models, the model structure has a fixed number of predictors across all training segments. The three model predictors are necessary to model the time-series components present (trend appears less significant, see next sections), and the suitability of this model structure is also supported by the small differences between training and validation errors. The performance of the equation is nearly always worse for the training than for the testing data because of the sequential updating procedure used in fitting HW, which produces high deviations at the beginning of the time series.

For SARIMA models, the situation is different. Here the model orders (predictors) change across the training sets, and the overfitting problems make it possible to judge these structures. The evolution of the CAIC-selected model structures is as follows: the period 1971–1980 has orders (1 1 2)(2 2 1)\(_{12}\), 1971–1982 has orders (0 1 1)(2 2 1)\(_{12}\), 1971–1984 has orders (0 2 2)(2 1 1)\(_{12}\), and the last two periods, 1971–1986 and 1971–1988, have the simplest structure, (0 1 1)(2 1 1)\(_{12}\). The first three structures are overfitted with training errors much higher than the testing errors. However, the last two training sets have errors that are, as expected, slightly better than those on the testing data. This explains the improvement of SARIMA performance across the testing subsets.

In order to test the seasonal capacity of the two models, the forecasting errors by season (wet and dry) are summarized in Table 3. Most of the above-mentioned elements are also evident in Table 3, but some other features emerge from this analysis. Comparing the statistics between seasons, it can be observed that the forecasting errors for both models are larger during the rainy periods, although the magnitude of the errors may be influenced by the magnitude of the streamflow observations. In contrast, the NSE scales these errors by the variability of each period, which resulted in greater NSE values for the wet seasons. Both models learn faster to forecast the dry periods due to the lower inter-annual monthly variability of this season (see Fig. 2), which is appropriate for univariate modeling at large scales. This can be verified by the marked decrease in errors between the second and third testing periods, in contrast to the decaying pattern observed in the wet seasons.

Again, HW models have smaller forecasting errors for the first test subsets, but in the end both approaches are equally effective, with slightly better results for HW with a one-year forecast and for SARIMA with a two-year forecast. Looking at each model seasonally, HW models appear to be consistently, if only to some extent, superior in modeling the low and less variable flows of the dry season for both one- and two-year lead times. At the end of the cross-validation process, however, SARIMA structures perform slightly better in predicting the highly variable flows of the wet season (high stochastic dimension associated with extreme events such as heavy summer rains or hurricanes).

3.2 Best SARIMA model

As already mentioned, the SARIMA structure and its fitted coefficients vary across the training segments. Shorter training periods caused model overparameterization, which is minimized for the last two periods. Based on the reported forecasting errors and the principle of parsimony, we have selected a SARIMA (0 1 1)(2 1 1)\(_{12}\) as the best model.

Figure 4 shows the autocorrelation function (ACF) and partial autocorrelation function (PACF) of the untransformed mean monthly flow series (measured from 1971 to 1988), and Fig. 5 shows the D = 1 and d = 1 differenced series, in which weak stationarity is verified. Table 4 summarizes the fitted model coefficients and their estimation statistics. With p-values always below 0.05, all model parameters appear statistically significant in the fitted SARIMA structure. Nevertheless, some differences in their significance levels and weights are observed: the MA coefficients \(({\phi }_{1},{\Phi }_{1})\) have larger weights than the seasonal AR coefficients \(({\Theta }_{1},{\Theta }_{2})\) in their respective polynomials. Nonetheless, the statistically supported inclusion of the AR coefficients indicates that the differenced series preserves some temporal dependence two periods backward, where the period of the series is s = 12.
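The D = 1, d = 1 differencing mentioned above corresponds to applying the operator (1 − B)(1 − B^12) to the monthly series. A minimal Python sketch follows, with hypothetical function names; it only illustrates the transformation and is not the code used to produce Fig. 5.

```python
def difference(x, lag=1):
    """Apply (1 - B^lag): return x[t] - x[t - lag] for t >= lag."""
    return [x[t] - x[t - lag] for t in range(lag, len(x))]

def seasonal_and_regular_difference(x, s=12):
    """Apply (1 - B)(1 - B^s) to x, i.e. d = 1 and D = 1."""
    return difference(difference(x, lag=s), lag=1)
```

The seasonal difference removes a repeating annual pattern and the regular difference removes a remaining linear drift, at the cost of losing the first s + 1 values of the series.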

Substituting the coefficients into the model equation yields:

where \({x}_{t}\) is the dependent variable, \(B\) is the backshift operator, and \({\mathrm{w}}_{t}\) is the innovation term. Developing the equation for \({x}_{t}\):

After adjusting the equation polynomials, one can observe that the difference between present and previous observations mainly depends on the same differences but 12, 24, 36 months in the past. Even though physical interpretation is hard for SARIMA model equations, in this case, the model structure reveals meaningful univariate dependencies that respond to the inter-annual variability of precipitation in the area.
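The dependence on lags of 12, 24 and 36 months can be made explicit by expanding the polynomials of a SARIMA (0 1 1)(2 1 1)\(_{12}\) structure symbolically. The following is a sketch that keeps the coefficients as symbols instead of using the fitted values of Table 4. It assumes the sign convention \(1-{\Theta }_{1}{B}^{12}-{\Theta }_{2}{B}^{24}\) for the seasonal AR polynomial and \(1+{\phi }_{1}B\), \(1+{\Phi }_{1}{B}^{12}\) for the MA polynomials; with the opposite convention, the corresponding signs flip. Here \({y}_{t}={x}_{t}-{x}_{t-1}\) denotes the regularly differenced series, a symbol introduced only for brevity:

\[
\begin{aligned}
(1-{\Theta }_{1}{B}^{12}-{\Theta }_{2}{B}^{24})(1-{B}^{12}){y}_{t} &= (1+{\phi }_{1}B)(1+{\Phi }_{1}{B}^{12}){w}_{t}\\
{y}_{t} &= (1+{\Theta }_{1}){y}_{t-12}-({\Theta }_{1}-{\Theta }_{2}){y}_{t-24}-{\Theta }_{2}{y}_{t-36}\\
&\quad +{w}_{t}+{\phi }_{1}{w}_{t-1}+{\Phi }_{1}{w}_{t-12}+{\phi }_{1}{\Phi }_{1}{w}_{t-13}
\end{aligned}
\]

Under these assumptions, the current month-to-month difference is a weighted combination of the same differences one, two and three years earlier, plus a short moving-average term in the innovations.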

In the Caribbean region, precipitation is mainly modulated by the influence of the El Niño–Southern Oscillation (ENSO) and the high-pressure systems of the North Atlantic Ocean. ENSO largely determines the large-scale precipitation variability in the region [38]. The United States Climate Prediction Center [39] has referred to ENSO as an erratic but periodic phenomenon, occurring on average once every 3 to 5 years. As this teleconnection is responsible for major anomalies in the precipitation pattern, the model seems to capture its average influence on the temporal variability of streamflows. This would explain why the model includes such a long memory in the autoregressive polynomial, with predictors from three years back.

The graphical analysis of the model residuals is not included in this manuscript, but the residuals appear uncorrelated, with zero mean and constant variance, indicating a good model selection and fit. Both the ACF and PACF show no significant temporal correlation, and the Q-Q analysis shows that the distribution of residuals is very close to a normal distribution, with only some small deviations in the tails.

3.3 Holt–Winters parameters and best model

The HW model predictors do not change across the training sets, but they are smoothed locally at the end of each period, which makes them vary from one set to another. The mean and standard deviation of the smoothing parameters and of the smoothed estimates of level, trend and seasonality, all calibrated within the cross-validation procedure, are shown in Fig. 6. In general, the smoothing parameters do not vary considerably, and they become almost constant for the largest training subsets. The estimated trend is around zero, which corroborates the absence of trend in the series. The estimates of level show some variation over the training sets, but it is the seasonality (specific months) that presents the highest sensitivity to a particular segment. The monthly estimates follow, on average, the characteristic bimodal distribution of monthly flows (see Fig. 2). Here, June and September are also among the most variable months; nevertheless, the high deviation of the estimates for November is quite unexpected given its historical distribution of streamflows. One reason for this could be that the model is capturing, in some training sets, the influence of the late arrival of hurricanes (which strongly affects the mean monthly flow), a rare but possible extreme event. Some other monthly variations are suspicious, such as the estimates associated with February (high) and May (low), which may lead to forecasting errors later on.

Based on the forecasting errors, the best HW model performance corresponds to the last training set (period 1971–1988). Table 5 lists the smoothing parameters and estimates for this model. The smoothing parameter α suggests a rather homogeneous level of the series, giving only 11% of the weight to the local mean values. In contrast, the seasonal smoothing parameter γ assigns similar weights to the present and older observations, indicating an important role of the local variability in the monthly pattern. The latter reflects the inter-annual variability of the series, which is influenced by periodic phenomena.

On the other hand, all the seasonal estimates are close to the mean values reported in Fig. 6 except for S9 and S11, which correspond to September and November, respectively. S9 is estimated as 2.8 times above the mean value (exceeding the standard deviation) and S11 as 1.8 times below the mean value (within one standard deviation). The former may lead to model overprediction, while the latter seems a good estimate given the characteristics of the series. In the next section, a visual inspection of the models’ performances is presented.

3.4 Visual evaluation of the forecasts

Figure 7 shows the observed and forecasted mean monthly flows for the period 1989–1990, which corresponds to the last testing subset used in the cross-validation. In general, it can be observed in this plot that both models mimic the pulse imposed by the rainy season and its maximum in September. The major differences between the model forecasts arise around this peak: HW tends to overestimate the peak values, while SARIMA tends to underestimate the observed peak. On the other hand, the two models wrongly forecast a secondary peak in June, which is present in the climatological behavior of the series (bimodal distribution) but not in these particular years, when the months of June were unusually dry. During the dry season, both models tend to overestimate the flows, with a marked deviation in February and March. Both models have problems in predicting the very low base flows of these months.

For the second year, the realization of the SARIMA model fits the observed values between July and September better, capturing to some extent the narrower pulse of these months in relation to those of 1988. The better fit is reflected in the metric statistics, which would improve if they were computed exclusively for the second year. In contrast, the HW model overestimates all the observations of the same period.

4 Conclusions

In this study, the performance of SARIMA and Holt–Winters models was evaluated for multi-step forecasting of monthly flows in the V Aniversario basin, western Cuba. Model selection and model assessment were carried out with a rolling (moving-forward) cross-validation procedure. The results showed that, in general, both models were insufficiently accurate in forecasting the monthly flows, but each showed specific strengths. HW models performed better in reproducing the mean seasonality of the time series when the training observations were insufficient, while for the longest training subsets both models were equally competitive in forecasting one year ahead. SARIMA models are more reliable when forecasting longer intervals, and their limitations after being trained on short observation periods are due to overfitting problems. Testing the seasonal capacity of these methods, it was found that both models learn faster to forecast the dry periods due to the lower inter-annual monthly variability of this season. For both forecasting intervals, HW models are consistently, if only to some extent, superior in modeling the low and less variable flows of the dry season, while the best SARIMA model performs better in predicting the highly variable flows of the wet season. Looking at the details of the model structures, the forecasting equation of the best SARIMA model reveals meaningful univariate dependencies that reflect the inter-annual variability of precipitation in the region. The erratic but periodic phenomenon that modulates the major inter-annual anomalies in the precipitation patterns is also reflected in the HW seasonal smoothing parameter, which assigns considerable weight to the local variations of the mean monthly flow pattern.

The limited number of monthly flow observations available in this study may have constrained the results. Therefore, future work should extend and update the series of observations and include other case studies in order to obtain more robust conclusions. Furthermore, the forecasting errors obtained could still be reduced by including other modeling techniques in the comparison, such as ML models or the recently developed hybrid models.

Data availability

Data available on request from the authors.

Acknowledgments

We thank the anonymous reviewers, whose comments and suggestions have led to the improvement of this manuscript.

Funding

No funding was received for conducting this study.

Ethics declarations

Conflicts of interest

The authors have no conflicts of interest to declare.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Alonso Brito, G.R., Rivero Villaverde, A., Lau Quan, A. et al. Comparison between SARIMA and Holt–Winters models for forecasting monthly streamflow in the western region of Cuba. SN Appl. Sci. 3, 671 (2021). https://doi.org/10.1007/s42452-021-04667-5

DOI: https://doi.org/10.1007/s42452-021-04667-5