Abstract

We introduce a Python package that provides simple and unified access to a collection of datasets from fundamental physics research (including particle physics, astroparticle physics, and hadron and nuclear physics) for supervised machine learning studies. The datasets contain hadronic top quarks, cosmic-ray-induced air showers, phase transitions in hadronic matter, and generator-level histories. While public datasets from multiple fundamental physics disciplines already exist, the common interface and provided reference models simplify future work on cross-disciplinary machine learning and transfer learning in fundamental physics. We discuss the design and structure and outline how additional datasets can be submitted for inclusion. As a showcase application, we present a simple yet flexible graph-based neural network architecture that can easily be applied to a wide range of supervised learning tasks. We show that our approach reaches performance close to dedicated methods on all datasets. To simplify adaptation to various problems, we provide easy-to-follow instructions on how graph-based representations of data structures relevant for fundamental physics can be constructed, and we provide code implementations for several of them. Implementations are also provided for our proposed method and all reference algorithms.

Introduction

Access to high-quality training data is a known key requirement for progress in machine learning and well-curated open benchmark datasets allow reliable comparisons and promote sustained progress. Well-known benchmarks and competitions such as MNIST [1], CIFAR [2], and ImageNet [3] were pivotal in bringing about the powerful deep learning models for image processing observed today. Research in fundamental physics is no different in this regard. Existing datasets and challenges [4,5,6,7,8,9,10,11] are crucial and enable continuous improvements.

A particularly challenging and specific aspect of data science developments in physics is the abundance of needed data representations and, accordingly, of different architecture designs, which result from different experiment designs and theoretical descriptions. Data might come in the form of high-level observables without additional structure [4], as rectangular [12,13,14,15] or hexagonal [16, 17] images, as sequences [18, 19] or sequences of images [20], as point clouds of vectors in space [21, 22], as graphs [23], as data points distributed on non-Euclidean manifolds [24], or even as a hybrid of different sources [25].

Of course, it would be possible to work with domain- and experiment-specific data representations for each problem. However, such a strategy would be inefficient and wasteful, as different groups and communities would need to replicate very similar developments instead of using ready-made solutions. Therefore, flexible representations and network architectures simultaneously suited for multiple sources of data would be of great potential use and would distribute the gains from deep learning for scientific applications more readily and widely.

While some common benchmark datasets exist in physics, these usually are domain specific. Instead, this contribution, together with the provided pd4ml Python package, unifies data across different domains and from both experiment and theory with minimal barriers to access (Footnote 1). A key difference to existing datasets and collections is that pd4ml provides a simple interface to download and import all included datasets in a consistent ready-to-use way, without the need for additional pre-processing. We will outline a protocol for extending this collection with additional contributions below.

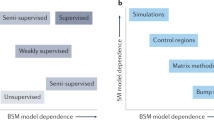

We focus on supervised learning tasks and include examples of both classification and regression problems. While weakly supervised, semi-supervised, and unsupervised learning tasks are of increasing relevance also for physics applications (see, e.g., Ref. [7] for a recent overview), the question of a suitable representation of data can already be answered in a supervised setting. This approach also benefits from easy-to-define and evaluate performance metrics such as accuracy and the area under the ROC curve (AUC) for classification and the resolution for regression tasks.

Another key feature is that all datasets come with a reference implementation of a suitable algorithm representing the state-of-the-art for the specific problem and domain. Specifically included are:

- distinguishing hadronically decaying top quarks from light-quark or gluon jets in simulated collisions in an LHC experiment (Top Tagging Landscape) [6];
- identifying interesting events in the Belle II simulation before detector simulation and reconstruction (Smart Backgrounds) [26, 27];
- identifying the effects of a non-equilibrium deconfinement phase transition in relativistic nuclear collisions (Spinodal or Not) [28];
- assigning the correct nature of phase transitions with additional domain adaptation issues (EOSL) [29];
- and reconstructing the shower maximum from the simulated signal patterns recorded by a cosmic-ray observatory (Air Showers).

These initial datasets already cover aspects of LHC physics, flavor physics, hadron and nuclear physics as well as astroparticle physics (Footnote 2). The different data volumes and structures ensure that models performing well on these datasets will generalize well to other datasets in fundamental physics.

Given the diverse nature of data in physics, finding suitable representations and compatible network architectures that simultaneously capture relevant symmetries while providing sufficient expressiveness is a persistent problem. On one end of the spectrum lie fully connected networks (FCNs). These can be applied to any data, but provide no useful additional symmetries leading to inefficient training and potential overfitting. On the other end are specific architectures designed for a unique problem. These might be simple convolutional operations or even encode specific physical properties [30,31,32]. Their main disadvantage is that they need to be fine-tuned to a specific physics challenge. An intermediate solution—generic enough to be widely applicable, but with sufficient performance—could greatly simplify model development for many applications in physics. Based on the assembled datasets, we investigate if representing data as graphs has the potential to act as such a lingua franca. Previously, graphs were already successfully explored in a range of fundamental physics applications [23, 33,34,35,36,37,38,39,40,41,42,43,44,45,46,47].

Graphs are a versatile data structure, consisting of nodes connected by edges. Broadly speaking, graph networks process information by passing messages between connected nodes. A detailed description is given in “Graph-Based Network”.

When applying graph networks to physics data, it is necessary to decide how to best represent the data as a graph. This might be trivial—for example, if the data are naturally represented as a graph as is the case for shower histories—or require additional processing steps—if the data are embedded in a geometrical space and locality in that space can be used to build a graph structure. Along with each dataset, we present a strategy to build a corresponding graph.

The remainder of this paper is organized as follows: We introduce the different physics tasks and datasets in “Datasets” and discuss the Python interface in “Python Interface”. An example application of developing and comparing fully connected and graph-based common tagging architectures is discussed in “Example Application”. A summary and a further outlook are given in “Outlook and Conclusions”, while specific reference architectures for all tasks are summarized in Appendix A.

Datasets

An overview of the included datasets is given in Table 1. The provided data do not only cover a range of different physics problems but also show the diversity of data regularly encountered in data analysis in fundamental physics. In the following, additional information on the physics challenge, data generation process, and other details are given for all datasets.

Top Tagging Landscape

Distinguishing or tagging different types of initial particles using their measured decay products is a very common problem at the LHC and forms the basis for subsequent analysis of collision events. The identification of hadronically decaying top quarks with a high Lorentz boost is especially relevant as top quarks are a common decay product in many theories predicting physics beyond the Standard Model.

The top tagging reference dataset [48] was initially produced to allow a fair and consistent comparison of different network architectures developed for this specific problem [6]. It is widely used to benchmark classification algorithms in particle physics and its inclusion in the present collection allows a direct comparison of the obtained more general algorithms with the state-of-the-art on this problem.

The dataset consists of light-quark and gluon jets (background) and hadronically decaying top quarks (signal) generated using Pythia [49], with detector simulation applied using Delphes [50]. A particle flow algorithm is used for reconstruction, and jets are formed using the anti-\(k_T\) algorithm [51] with \(R=0.8\) implemented in FastJet [52]. Per event, the 200 constituent four-vectors of the highest transverse momentum jet are stored. Zero-padding is used when fewer constituents are available. Altogether, 1.2M training examples and 400k examples each for testing and validation are provided. Additional details on the dataset and processing are given in Ref. [6].

Smart Backgrounds

Simulation is one of the major computing challenges for experiments like Belle II that take large amounts of data and require correspondingly large amounts of simulated data. The full simulation process consists of a typically fast event generation step, where decay chains from the initially produced particles to stable final state particles are simulated according to measured or predicted decay models, and a more involved detector simulation and reconstruction step. As it is not known a priori which types of background events are relevant for a particular analysis, the background generation has to include all possible decay chains. Event selection criteria are applied, to simulated and measured data alike, to increase the significance of signals. A powerful selection discards a large fraction of background events while keeping the fraction of rejected signal events low. This is in particular important for searches for rare processes that are highly sensitive to new physics. The fraction of retained simulated background events can be of the order \(10^{-7}\) or even below for analyses at Belle II. Therefore, significant computing resources are wasted to produce simulated background events that are then discarded.

This can potentially be improved by applying filters already after the event generation step, which typically takes only a small fraction of computing resources in the whole process. In our case, the fraction of time needed for generation is about 0.1 %. The challenge is to predict which events will pass the final event selection after detector simulation and reconstruction without running it. This classification problem was first successfully approached using CNNs in Ref. [26] and subsequently improved with graph neural network approaches [27].

The Smart Backgrounds dataset consists of simulated \(e^{+}e^{-}\rightarrow \varUpsilon (4S)\rightarrow B^{0}{\bar{B}}^0\) events with subsequent decays at the event generation step, created using the EvtGen [53] event generator in the context of Ref. [26]. The features are the components of the four-momentum of each particle in the decay chain, their production vertex positions and time, the particle type, represented by the PDG [54] identifier, and the index of the mother particle, which allows forming a graph of the decay tree. On average, 40 particles are generated per event. Each event in the dataset is stored with 100 particle slots; in events with fewer than 100 particles in the decay chain, the features of the unused slots are set to 0 and their mother particle indices to -1. PDG identifiers are mapped to an integer number between 1 and 506.
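As an illustration of how the mother indices define a decay-tree graph, the following minimal sketch turns a list of mother indices into a symmetric adjacency matrix. The function name and the toy event are hypothetical, and the sketch assumes that both the root particle and the padded slots carry the index -1; it is not the package's own implementation.

```python
import numpy as np


def decay_tree_adjacency(mother_idx):
    """Build a symmetric adjacency matrix from per-particle mother indices.

    mother_idx[i] is the index of the mother of particle i, or -1 when the
    particle has no mother (assumed here for the root and for padded slots).
    """
    mother_idx = np.asarray(mother_idx)
    n = mother_idx.shape[0]
    adj = np.zeros((n, n), dtype=np.int8)
    daughters = np.flatnonzero(mother_idx >= 0)
    mothers = mother_idx[daughters]
    adj[daughters, mothers] = 1  # daughter -> mother
    adj[mothers, daughters] = 1  # mother -> daughter (undirected graph)
    return adj


# Toy event: particle 0 is the root, 1 and 2 are its daughters,
# 3 is a daughter of 1, and the last two slots are padding.
toy_mothers = [-1, 0, 0, 1, -1, -1]
A = decay_tree_adjacency(toy_mothers)
```

Padded slots end up as isolated nodes, so they exchange no messages with real particles in a graph network.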

The label indicates whether the event passes filtering criteria based on an event reconstruction using the Full Event Interpretation (FEI) [55] algorithm that tries to reconstruct hadronic B decays from the information after detector simulation and reconstruction [56, 57]. The FEI selection is shared by many analyses and, therefore, has a relatively high retention rate of 5 %.

Spinodal or Not

The spinodal dataset [58] is a simulated dataset, created to identify the effects of a non-equilibrium deconfinement phase transition in relativistic nuclear collisions. The underlying physical question is how the strong interaction behaves non-perturbatively in the high baryon density regime. This question is closely related to the understanding of the deconfinement mechanism and consequently the dynamical generation of mass in quantum chromodynamics (QCD). To uncover the properties of dense and hot nuclear matter, the Compressed Baryonic Matter (CBM) [59, 60] experiment is under construction at FAIR (Facility for Antiproton and Ion Research) at GSI Darmstadt. At FAIR, heavy nuclei (mostly lead and/or uranium) are accelerated to beam energies of several GeV per nucleon and brought to collision with a similar target at the CBM experiment. In this way, the nuclear matter is simultaneously heated and compressed, and since large nuclei are used, an equilibrated system of QCD matter at several times the nuclear saturation density and temperatures of up to 100 MeV is created for a very short time. The dynamics that govern this system are determined by the strong interaction, i.e., QCD. Since the dynamic many-body problem of QCD can be solved neither explicitly nor numerically, our understanding of the matter created is based on interpretations of the collected data. This interpretation is done by comparing sophisticated model simulations, based either on relativistic fluid dynamics or on microscopic transport simulations, with experimental observations. The presented dataset is the result of such a model simulation, based on a fluid dynamical simulation of heavy ion collisions in the presence of a first-order deconfinement phase transition [61]. In particular, the dataset was created using two distinct scenarios, one where spinodal decomposition occurs and one where it does not.

Spinodal decomposition is a well-known effect that describes the dynamics of phase separation and leads to the exponential growth of density fluctuations. It is now of particular interest how these density fluctuations influence the observable final particle spectra as measured by the CBM experiment. In addition, since even the theoretical background of these fluctuations in the fast-expanding and small collision systems is not well understood, it is also of interest to understand whether all events show such signals or whether they occur only on rare occasions. Thus, the application of machine learning methods to possibly uncover the effects of the QCD phase transition in measured momentum spectra is of great interest. The task for this effort is to identify those events which have undergone spinodal decomposition. In addition, for the physical interpretation, it is also important to see what a characteristic spinodal event looks like compared to a non-spinodal event. Finally, it is important to achieve high accuracy to see whether all events simulated as spinodal events can also be identified as such, or whether not every event shows the relevant characteristics. More information on the physics background and scientific motivation behind this dataset can be found in Refs. [28, 62].

The data included are a coarse-grained, and hence more difficult, version of these data. To create the spinodal classification dataset, 27,000 central lead-on-lead collision events are generated at a (typical FAIR/GSI) beam energy of \(E_{\mathrm {lab}}=3.5\, A\) GeV for each scenario: spinodal or not. From each event, an “image” is then generated, containing information on the net baryon density distribution in the transverse spatial \(X\)-\(Y\) plane. This corresponds to a 20-by-20 pixel histogram. We renormalized the pictures by their maximum bin value for each event separately, to avoid possible artifacts from one class having a larger density. The histograms are then flattened, so that each event is stored as one row of 400 columns.
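The per-event normalization and flattening can be sketched in a few lines of NumPy. This is a minimal illustration on random toy data, not the code used to produce the dataset; the function name and the guard against empty histograms are assumptions.

```python
import numpy as np


def normalize_and_flatten(images):
    """Scale each 2D histogram by its own maximum bin and flatten it."""
    images = np.asarray(images, dtype=float)
    max_per_event = images.max(axis=(1, 2), keepdims=True)
    # Assumption: leave all-zero histograms untouched instead of dividing by 0.
    safe_max = np.where(max_per_event > 0, max_per_event, 1.0)
    return (images / safe_max).reshape(len(images), -1)


rng = np.random.default_rng(0)
toy_images = rng.random((3, 20, 20)) * 5.0  # three toy 20-by-20 "events"
flat = normalize_and_flatten(toy_images)    # shape (3, 400)
```

A flattened event can be turned back into its image form with `flat[i].reshape(20, 20)`.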

EoS

The EoS dataset is simulated with relativistic hydrodynamics, including an afterburner hadronic cascade, to describe high-energy heavy ion collisions. One of the primary motivations for these collision experiments is to understand the QCD phase structure, to which large international efforts have been devoted. The current beam energy scan project at RHIC (BNL) and the forthcoming program at FAIR (GSI) particularly aim at searching for signals and the location of the critical end point (CEP) in the QCD phase diagram. This CEP separates the crossover transition and the conjectured first-order phase transition from normal hadronic matter to strongly coupled quark–gluon matter. In the laboratory (LHC, RHIC, or future FAIR, NICA), a huge amount of experimental data has been accumulated for heavy ion collisions, making it an excellent testing ground for studying these QCD bulk properties. Conventionally, critical fluctuations in experiments are used to locate the CEP. However, the currently observed signals are too weak to allow firm conclusions. Also, it is rather involved to disentangle the many different physical factors (e.g., initial state effects, transport properties, freeze-out physics) in the collision evolution given only the final-state observables. Thus, we lack a reliable bridge between the QCD bulk matter properties (EoS) produced in the collisions and the experimental observables.

Departing from the conventional strategy, we explored another avenue by formulating this problem as a classification task well suited to deep learning, see Refs. [29, 63,64,65,66]. The EoS dataset has the purpose of using deep learning to investigate the nature of the QCD transition happening during nuclear collisions, which manifests itself in the equation of state (EoS) employed within the hydrodynamic simulation of the collision. Previously, it was demonstrated in Ref. [63] that under pure hydrodynamic evolution (without afterburner hadronic cascade) a deep convolutional neural network (CNN) can identify the nature of the QCD transition encoded in the dynamical evolution with high accuracy from the pion spectra alone. This performance was also shown to be robust against other physical factors. Later, a further generalization [64] toward a simulation closer to experiment was investigated by considering stochastic particlization and a UrQMD hadronic cascade following the hydrodynamic stage, with resonance decay effects also taken into account in this hybrid model. The dataset here is the one used in that study.

Specifically, the iEBE-VISHNU hybrid model was used to perform event-by-event simulations, with the MC-Glauber and also the MC-KLN model for the generation of fluctuating initial conditions. Two different types of EoS are employed in the dynamics: a crossover EoS from a lattice-QCD parametrization and a first-order EoS from a Maxwell construction between a hadron resonance gas and an ideal gas of quarks and gluons with a transition temperature of \(T_c = 165\) MeV. After the hydrodynamic evolution, the fluid cells are converted into particles (hadrons) using the Cooper–Frye formula, and UrQMD then simulates the hadronic cascade. We vary different physical parameters (viscosity, initial condition, switching temperature from hydro to cascade) to generate diverse collision events for the robustness test. This also aims to encourage the network to capture the genuine imprint of the QCD transition on the final-state spectra, instead of being biased by any specific setup of features or uncertain physical properties.

Inside the EoS dataset, the two classes of events are labeled EOSL and EOSQ. Each event can be viewed as an “image” that serves as the input observable of the deep-learning-based algorithm: the two-dimensional histogram of pion spectra with 24 transverse momentum bins and 24 azimuthal angle bins.

Cosmic-Ray-Induced Air Showers

When ultra-high-energy cosmic rays (UHECRs) penetrate the Earth’s atmosphere, they induce large particle cascades called extensive air showers. Using cosmic-ray observatories consisting of ground-based particle detectors, e.g., water-Cherenkov detectors or scintillators, the footprint of air showers can be detected. Here, each detector station measures the time-dependent density of shower particles. This results in signal traces whose shapes encode information on the shower development. By reconstructing the air-shower characteristics, in particular the depth of shower maximum \(X_{\mathrm {max}}\), information on the cosmic-ray mass can be obtained.

So far, the reconstruction of \(X_{\mathrm {max}}\) is confined to observations using fluorescence telescopes. However, in contrast to ground-based particle detectors, telescopes have a much smaller duty cycle, as fluorescence observations are only possible in clear and moonless nights. An accurate and extensive measurement of \(X_{\mathrm {max}}\) using ground-based particle detectors could significantly increase the statistics and thus give new insights into the cosmic-ray composition.

The air-shower dataset [67] consists of cosmic-ray events and enables the reconstruction of \(X_{\mathrm {max}}\) using a simulated ground-based observatory. The observatory is placed at the height of 1400 m above sea level and features a Cartesian detector array with 1500 m spacing. The design is inspired by the Pierre Auger Observatory [68] and the Telescope Array Project [69].

The signal simulation is a fast-simulation approach that uses parameterizations and a simplified shower development. It was introduced in Ref. [70]. To decrease memory consumption, simulated air-shower footprints are reduced to a cutout of \(9\times 9\) stations centered at the station that recorded the largest signal. Thus, the arrangement of detectors can be interpreted as a small 2D image. A third dimension is given by the time-dependent signal measurement at each detector station. The signal trace of each station is shortened by selecting the 80 time steps after the first signals were measured. Each time step is 25 ns wide. Furthermore, an arrival time is given for each detector station: the time at which the first shower particles reach the station, which is also the time at which its signal trace starts.

The goal of this regression task is to predict \(X_{\mathrm {max}}\) from the detector readouts with the best possible resolution, defined as the standard deviation of the distribution given by the difference between the predictions and the actual values of \(X_{\mathrm {max}}\).
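The resolution defined above reduces to a one-line computation. The sketch below uses made-up toy values in arbitrary units and assumes the population standard deviation (NumPy's default, `ddof=0`); the text does not state which estimator is used.

```python
import numpy as np


def resolution(y_pred, y_true):
    """Standard deviation of the residuals (prediction minus truth)."""
    residuals = np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float)
    return float(np.std(residuals))  # assumption: population std (ddof=0)


# Toy values, purely illustrative.
xmax_true = np.array([700.0, 750.0, 800.0, 850.0])
xmax_pred = np.array([710.0, 740.0, 810.0, 840.0])
toy_resolution = resolution(xmax_pred, xmax_true)

# A constant offset is a bias, not a loss of resolution.
biased_resolution = resolution(xmax_true + 5.0, xmax_true)
```

Because the metric is a spread, a systematic bias in the predictions leaves it unchanged and would need to be checked separately.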

Python Interface

To make the datasets conveniently accessible to a wide range of users, we provide a Python interface to make their use as simple as possible. With a few lines of code, it is possible to load any of the five datasets, perform dataset-specific pre-processing, and create a dataset-specific adjacency matrix for graph neural network algorithms. The package is easily extendable by providing additional wrappers to allow the inclusion of other datasets in the same environment.

The essential function is load which allows loading of the training and testing datasets. The dataset features X as well as the labels y are returned as NumPy [71] arrays. We can see below an example of code that loads the train and the test set of the Spinodal dataset:

If the dataset is not found at the provided path, it is automatically downloaded (Footnote 3). A download can also be forced, and an MD5 check is implemented.
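To make the download logic concrete, the following standard-library sketch shows one way such a check could work. The function names, the `force` flag, and the decision to re-download on a checksum mismatch are assumptions for illustration; they are not taken from the package.

```python
import hashlib
import os
import tempfile


def md5sum(path, chunk_size=1 << 20):
    """Compute the MD5 hex digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def needs_download(path, expected_md5, force=False):
    """Return True if the file is missing, corrupted, or a download is forced."""
    if force or not os.path.isfile(path):
        return True
    return md5sum(path) != expected_md5


# Small demonstration on a temporary file.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "toy_dataset.bin")
    with open(demo_path, "wb") as handle:
        handle.write(b"hello")
    good_md5 = hashlib.md5(b"hello").hexdigest()
    present_ok = needs_download(demo_path, good_md5)
    corrupted = needs_download(demo_path, "0" * 32)
    forced = needs_download(demo_path, good_md5, force=True)
    missing = needs_download(os.path.join(tmp, "absent.bin"), good_md5)
```

Reading the file in chunks keeps memory use flat even for large dataset files.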

The package provides an additional loading method called load_data. In this case, the loaded data undergo a pre-processing routine implemented by the dataset providers. One can require the data to be loaded in the form of a graph by setting the appropriate value to the graph argument. The returned adjacency matrices are constructed in the same way described in “Pre-processing and Adjacency Matrix”. An example would be:

The dataset description can be printed out by executing the following command:

The output contains both technical information on the dataset (like the number of events) and references to the papers providing the physics context and the in-detail description of the reference models.

Additionally, the repository (Footnote 4) provides TensorFlow 2.3 [72] implementations of the reference models described in Appendix A and of the common models presented in “Example Application”.

Example Application

To demonstrate the potential added value of such a common interface for transfer learning and similar tasks, we consider two common architectures: FCNs (Fully Connected Network) and GraphNets (“Graph-Based Network”). In both cases, the same model (the only difference being a different number of input layers) is trained on all datasets.

Additionally, a reference model, against which the performance can be compared, is provided for each dataset (Fig. 1). These are described in Appendix A. In the case of well-established datasets (such as the Top Tagging Landscape task), state-of-the-art models are used. For novel datasets, an implementation is provided by the dataset providers as well (Footnote 5).

Fully Connected Network

Networks based on the FCN architecture are a useful and versatile baseline as they require no assumptions about the structure of the data. For this reason, we construct an FCN architecture to be trained and evaluated on all datasets described in “Datasets”. The network has 12 hidden layers, each with 256 nodes and the ReLU activation function (Footnote 6). The output layer, as well as the loss function, depends on the task of the network:

- Classification task: Sigmoid output and Binary Cross Entropy loss;
- Regression task: Linear output and Mean Squared Error loss.

The input data are split into batches of 256 examples and the training runs for up to 300 epochs. To prevent overfitting, early stopping terminates the training when no improvement of the validation loss is observed for more than 15 epochs. We train using the Adam [73] algorithm with an initial learning rate of 0.001. The learning rate is reduced by a factor of 10 if no improvement of the validation loss is observed within 8 epochs from the last best score.
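The interplay of early stopping and learning-rate reduction can be illustrated with a small pure-Python simulation over a list of validation losses. The function name is hypothetical, and the counter semantics (stop once 15 epochs pass without improvement; restart the learning-rate counter after each reduction) follow the usual patience-style callbacks, which is an assumption about how the description above is implemented.

```python
def simulate_schedule(val_losses, lr=1e-3, stop_patience=15,
                      lr_patience=8, factor=0.1, max_epochs=300):
    """Return (last_epoch_run, learning_rate_per_epoch) for given val losses."""
    best = float("inf")
    since_best = 0      # epochs since the last improvement (early stopping)
    since_lr_event = 0  # epochs since the last improvement or LR reduction
    lrs = []
    losses = list(val_losses)[:max_epochs]
    for epoch, loss in enumerate(losses):
        lrs.append(lr)
        if loss < best:
            best = loss
            since_best = 0
            since_lr_event = 0
        else:
            since_best += 1
            since_lr_event += 1
        if since_best >= stop_patience:
            return epoch, lrs   # early stopping triggers
        if since_lr_event >= lr_patience:
            lr *= factor        # reduce the learning rate by a factor of 10
            since_lr_event = 0
    return len(losses) - 1, lrs


# Toy curve: improves for three epochs, then stays flat.
toy_losses = [1.0, 0.9, 0.8] + [0.8] * 30
stop_epoch, lrs = simulate_schedule(toy_losses)
```

In this toy run the learning rate drops once, 8 epochs after the best score, and training stops 15 epochs after the best score, before a second reduction would occur.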

Graph-Based Network

Many types of data can either be naturally represented as a graph or transformed into a graph without loss of information. This is also the case for data in fundamental physics where measurement signals can be represented as nodes in a graph and their relations or the relation of the measurement devices as the edges. This additional information about distances and connectivity between data points is something a simple FCN cannot process.

In the following, we consider undirected graphs with N nodes, meaning that an edge between nodes i and j is equivalent to an edge between j and i. We further assign a vector of features \(n_i \in {\mathbb {R}}^M\) (where M is the dimension of the feature space) to each node. Only unweighted graphs are considered for simplicity, although assigning features to the edges is possible as well. In this case, we can describe the graph representing one input example by two matrices: the feature matrix \(X \in {\mathbb {R}}^{M \times N}\) and the adjacency matrix \(A \in \{0,1\}^{N \times N}\). An entry of 1 in row i and column j of the adjacency matrix denotes a connection between node i and node j; for undirected graphs, A is therefore symmetric. Zeros imply unconnected nodes. A dataset then consists of many such graphs, in general with one feature matrix and one adjacency matrix per data point.
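As a small worked example of this representation, the sketch below builds the two matrices for a toy graph with N = 4 nodes and M = 3 features per node. The helper name and the toy values are illustrative only.

```python
import numpy as np


def adjacency_from_edges(num_nodes, edges):
    """Symmetric 0/1 adjacency matrix of an undirected, unweighted graph."""
    adj = np.zeros((num_nodes, num_nodes), dtype=np.int8)
    for i, j in edges:
        adj[i, j] = 1
        adj[j, i] = 1  # an edge i-j is the same as an edge j-i
    return adj


num_nodes, num_features = 4, 3
# Feature matrix X with shape (M, N): column i holds the features of node i.
X = np.arange(num_features * num_nodes, dtype=float).reshape(num_features, num_nodes)
# Chain graph 0 - 1 - 2 - 3.
A = adjacency_from_edges(num_nodes, [(0, 1), (1, 2), (2, 3)])
```

Each undirected edge contributes two entries to A, so the chain with three edges has six non-zero entries.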

In principle, most types of data can be turned into a graph without losing information. We have outlined a few examples in this paper. For example, data represented as an image can easily be turned into a graph structure by building an adjacency matrix based on each pixel’s neighboring pixels (see “Pre-processing and Adjacency Matrix”). If the absolute pixel position is relevant, it can be added as a per-node feature.

The architecture of our common GraphNet implementation is very similar to the Smart Backgrounds reference model in “Smart Backgrounds”. The input data are split into batches of 32 elements and the training is performed across 400 epochs regulated by an early stopping with patience of 50 epochs. To optimize the training, the Adam algorithm with an initial learning rate of 0.001 is used that is then reduced by a factor of 10 if no improvement of the validation loss is observed within 8 epochs from the last best score.

The main difference in comparison to the FCN is given by the addition of an adjacency matrix that is input to the graph convolutional layers [74]. The architecture consists of three fully connected layers per node with weight-sharing across nodes, followed by three graph convolutional layers, followed by a 1D global average pooling operation, followed by three fully connected layers, and an output layer. Starting from the graph convolutional layers, batch normalization and dropout [75] are applied after each layer (dropout fraction of 0.2 for all layers except the last one, where the fraction is 0.1). All layers employ 256 trainable nodes and PReLU [76] activation functions, except the output layer, which uses a sigmoid or a linear activation function depending on the task (classification or regression). A schematic representation is given in Fig. 2. The same preprocessing steps are performed as for the FCN. Additionally, a dataset-specific step is required to build the adjacency matrices as explained in the following section.
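The role of the adjacency matrix in a graph convolution followed by global average pooling can be sketched in NumPy. The text does not spell out the exact layer definition, so this sketch assumes the widely used propagation rule with self-loops and symmetric degree normalization; activations, batch normalization, and dropout are omitted. Node features are stored here with one row per node, i.e., transposed relative to the \(M \times N\) feature matrix used in the text.

```python
import numpy as np


def normalized_adjacency(adj):
    """Add self-loops and apply symmetric degree normalization."""
    a_hat = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))  # degrees are >= 1
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def graph_conv(node_features, adj, weights):
    """One linear graph convolution: aggregate neighbors, then mix features.

    node_features: (N, F_in), adj: (N, N), weights: (F_in, F_out).
    """
    return normalized_adjacency(adj) @ node_features @ weights


def global_average_pool(node_features):
    """Average over nodes, giving one feature vector per graph."""
    return node_features.mean(axis=0)


rng = np.random.default_rng(0)
A = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)  # chain 0 - 1 - 2
H = rng.normal(size=(3, 4))
W = rng.normal(size=(4, 2))
pooled = global_average_pool(graph_conv(H, A, W))

# Relabeling the nodes must not change the pooled output.
perm = [2, 0, 1]
pooled_perm = global_average_pool(graph_conv(H[perm], A[np.ix_(perm, perm)], W))
```

The pooled output is independent of the node ordering, which is one reason a graph representation suits unordered inputs such as jet constituents.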

Pre-processing and Adjacency Matrix

For each dataset, basic data preprocessing is performed. The dataset-specific preprocessing is closely inspired by its counterpart in the reference models. The Top Tagging Landscape dataset is transformed from four-vectors to four hadronic coordinates: the logarithm of the transverse momentum (\(\log p_{\mathrm {T}}\)), the logarithm of the energy (\(\log E\)), the relative pseudorapidity (\({\Delta \eta }\)), and the relative azimuthal angle (\({\Delta \phi }\)). For the SmartBKG dataset, the particle ID information is one-hot encoded, while the other features are left unchanged. The EoS dataset is standardized and the air-shower dataset is preprocessed by taking the logarithm of the filled signal bins and by normalizing the timing. No preprocessing is performed for the Spinodal or Not dataset.
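The coordinate transformation for jet constituents can be sketched as follows. Several details are assumptions made for illustration: the component ordering (E, px, py, pz), the use of the summed constituent momentum as the reference axis for the relative angles, and the choice to keep zero-padded rows at zero.

```python
import numpy as np


def hadronic_coordinates(constituents, eps=1e-12):
    """Map (E, px, py, pz) rows to (log pT, log E, delta eta, delta phi).

    Zero-padded rows (E == 0) are returned as zeros.
    """
    constituents = np.asarray(constituents, dtype=float)
    energy, px, py, pz = constituents.T
    real = energy > 0

    def pt_eta_phi(px, py, pz):
        pt = np.hypot(px, py)
        eta = np.arcsinh(pz / np.maximum(pt, eps))  # pseudorapidity
        phi = np.arctan2(py, px)
        return pt, eta, phi

    pt, eta, phi = pt_eta_phi(px, py, pz)
    # Assumption: the reference axis is the sum of all constituent momenta.
    _, axis_eta, axis_phi = pt_eta_phi(px.sum(), py.sum(), pz.sum())

    d_eta = eta - axis_eta
    d_phi = (phi - axis_phi + np.pi) % (2 * np.pi) - np.pi  # wrap to [-pi, pi)
    features = np.stack(
        [np.log(np.maximum(pt, eps)), np.log(np.maximum(energy, eps)), d_eta, d_phi],
        axis=1,
    )
    features[~real] = 0.0
    return features


# Two massless toy constituents, symmetric about the x-axis, plus one padded row.
e = np.sqrt(101.0)
toy_jet = np.array([[e, 10.0, 1.0, 0.0], [e, 10.0, -1.0, 0.0], [0.0, 0.0, 0.0, 0.0]])
coords = hadronic_coordinates(toy_jet)
```

Wrapping the angle difference avoids artificial jumps of \(2\pi\) for constituents on either side of the \(\phi = \pm \pi\) boundary.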

Each adjacency matrix has been designed to suit the specific dataset characteristics. For the Top Tagging Landscape dataset (“Top Tagging Landscape”) the adjacency matrix has been built performing a k nearest neighbor clustering using the information of the jet constituents with \(k = 7\). In the case of the SmartBKG dataset, the generator-level particles have been used in combination with the mother–daughter relation. Since the EoS and the Spinodal data come in the form of 2D histograms, we exploit this image-like structure and build the adjacency matrix counting as neighbors the eight (three at corners, five at edges) adjacent bins to each “pixel”. In the air-shower dataset the detectors are arranged in a nine-by-nine rectangular grid, which allows the construction of the adjacency matrix implementing the “eight adjacent bin” technique as well. A summary of these graph-building methods is given in Table 2.
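Two of these graph-building strategies can be sketched compactly. The code below is an illustrative sketch rather than the package implementation: it assumes row-major flattening of the grid, Euclidean distances in whatever coordinates are passed to the k-nearest-neighbor search, and symmetrization of the k-nearest-neighbor relation to obtain an undirected graph.

```python
import numpy as np


def grid_adjacency(n_rows, n_cols):
    """Connect every grid cell to its (up to) eight adjacent cells."""
    n = n_rows * n_cols
    adj = np.zeros((n, n), dtype=np.int8)
    for r in range(n_rows):
        for c in range(n_cols):
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    inside = 0 <= rr < n_rows and 0 <= cc < n_cols
                    if (dr, dc) != (0, 0) and inside:
                        adj[r * n_cols + c, rr * n_cols + cc] = 1
    return adj


def knn_adjacency(points, k):
    """Connect every point to its k nearest neighbors (requires k < len(points))."""
    points = np.asarray(points, dtype=float)
    n = len(points)
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)  # a point is not its own neighbor
    nearest = np.argsort(dist, axis=1)[:, :k]
    adj = np.zeros((n, n), dtype=np.int8)
    adj[np.repeat(np.arange(n), k), nearest.ravel()] = 1
    return np.maximum(adj, adj.T)   # assumption: symmetrize to an undirected graph


grid_A = grid_adjacency(9, 9)        # e.g. the nine-by-nine detector cutout
rng = np.random.default_rng(1)
knn_A = knn_adjacency(rng.normal(size=(20, 2)), k=7)
```

After symmetrization a node can have more than k neighbors, since it may appear in the neighbor lists of points that are not among its own k nearest.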

Performance Evaluation

The performance of all models is evaluated from five trainings that use the same data but different random initializations. The mean and standard deviation of the obtained scores are then calculated. The accuracy (fraction of correctly classified data points) and the area under the curve (AUC) are given for the classification tasks, while for the regression task we use the resolution as described in “Cosmic-Ray-Induced Air Showers”.
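The evaluation protocol can be summarized in a short sketch. The decision threshold of 0.5, the use of the sample standard deviation, and the toy numbers are assumptions made for illustration; they are not results from this study. The pairwise AUC computation is only suitable for small samples.

```python
import numpy as np

def accuracy(y_true, y_score, threshold=0.5):
    """Fraction of correctly classified data points."""
    predicted = np.asarray(y_score) > threshold
    return float(np.mean(predicted == np.asarray(y_true).astype(bool)))

def auc(y_true, y_score):
    """Area under the ROC curve via pairwise comparison.

    Equals the probability that a random positive example receives a
    higher score than a random negative one (ties count one half).
    """
    y_true = np.asarray(y_true).astype(bool)
    y_score = np.asarray(y_score, dtype=float)
    pos, neg = y_score[y_true], y_score[~y_true]
    wins = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((wins + 0.5 * ties) / (len(pos) * len(neg)))

def summarize(scores):
    """Mean and sample standard deviation over repeated trainings."""
    scores = np.asarray(scores, dtype=float)
    return float(scores.mean()), float(scores.std(ddof=1))

# Toy labels and scores (made up for illustration).
y_true = np.array([0, 0, 1, 1])
y_score = np.array([0.1, 0.6, 0.4, 0.9])
print(accuracy(y_true, y_score), auc(y_true, y_score))

# Hypothetical accuracies from five trainings with different seeds.
mean, std = summarize([0.90, 0.92, 0.91, 0.93, 0.89])
print(mean, std)
```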

An overview of the results is given in Table 3 (accuracy) and Table 4 (AUC). A graphical representation of the accuracy relative to the accuracy achieved by the reference model is given in Fig. 3. The GraphNet achieves performance equal or similar to that of the reference model on all classification tasks. The largest gap is observed for the Spinodal dataset, where the graph network underperforms the reference model by 2% in AUC (1% in accuracy). The performance difference between the FCN and the reference model is much larger; here the largest difference is 12% for the EoS dataset. Regression performance on the air-shower dataset (Table 5) shows a larger drop, with a 10% worse resolution for the GraphNet and a 30% worse resolution for the FCN.

Outlook and Conclusions

With the increasing use of machine learning in fundamental physics, a corresponding need for common datasets and algorithms that can be used across various scientific tasks arises. The collected datasets highlight various facets of this challenge and provide data from very different problems and communities in a unified and simple-to-use setup with the extension to more data and learning tasks planned for the future.

The obtained results show that a common graph-based architecture can achieve (close to) state-of-the-art performance with shared hyperparameters across all tasks. This is encouraging, and the proposed architecture (see footnote 7) can serve as a solid starting point, or perhaps even as the algorithm of choice without further optimization, for machine learning tasks in fundamental physics. Similarly, the discussed methods to build graphs for common data structures serve as a template for similar applications.

However, the observed difference in performance for cosmic-ray-induced air-shower data implies that further improvements are needed, especially for highly complex data. Similarly, there is no reason why the reference models should be the upper limit, and a sufficiently advanced common model might outperform the baseline on all tasks. Innovations in architectures are needed to overcome this challenge.

These results are just the first steps in taking machine learning in our fields from innovative developments to industrial-style production. A common baseline architecture makes automated training and optimization on user-provided datasets without further manual intervention possible. Such a service could greatly reduce the turn-around and development time for many standard applications of machine learning in our field. Of course, exotic, novel, or otherwise unique tasks will still require dedicated efforts. Thinking further, one could also consider using similarities in the structure of data representing physical measurements and further improve the performance via transfer learning across datasets.

Data Availability

This manuscript has associated data in a data repository. [Authors Comment: The datasets are available via the pd4ml Python package: https://github.com/erum-data-idt/pd4ml.]

Notes

Datasets and models are available at https://github.com/erum-data-idt/pd4ml.

The authors explicitly welcome further contributions of suitable supervised learning problems from fundamental physics and related domains. These can be made by implementing the binding functions following the structure in https://github.com/erum-data-idt/pd4ml and creating a pull request. Data can be stored at any place that allows automated downloads, such as the Zenodo platform (https://zenodo.org/).

The choice of storage location lies with the dataset providers.

Contributions of additional algorithm implementations via github pull-requests are possible as well.

No extensive tuning of these hyperparameters was performed but we verified that changes in the number of layers, batch size, and learning rate did not significantly alter the results.

Implementation available at https://github.com/erum-data-idt/pd4ml.

References

LeCun Y, Bottou L, Bengio Y, Haffner P (1998) Gradient-based learning applied to document recognition. In: Proceedings of the IEEE, vol 86, issue no 11, pp 2278–2324. http://yann.lecun.com/exdb/mnist/

Krizhevsky A (2009) Learning multiple layers of features from tiny images. https://www.cs.toronto.edu/~kriz/learning-features-2009-TR.pdf. Accessed 1 July 2021

Deng J, Dong W, Socher R, Li LJ, Li K, Fei-Fei L (2009) Imagenet: a large-scale hierarchical image database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition, IEEE, pp 248–255

Adam-Bourdarios C, Cowan G, Germain C, Guyon I, Kégl B, Rousseau D (2015) The Higgs boson machine learning challenge. In: Cowan G, Germain C, Guyon I, Kégl B, Rousseau D (eds) Proceedings of the NIPS 2014 Workshop on High-energy Physics and Machine Learning, Proceedings of Machine Learning Research. PMLR, pp. 19–55

Amrouche S et al (2019) The tracking machine learning challenge: accuracy phase. Lawrence Berkeley, Berkeley. https://doi.org/10.1007/978-3-030-29135-8_9

Butter A et al (2019) The machine learning landscape of top taggers. SciPost Phys. https://doi.org/10.21468/SciPostPhys.7.1.014

Kasieczka G et al (2021) The LHC olympics 2020: a community challenge for anomaly detection in high energy physics. Rep Prog Phys 84:124201

Rousseau D, Ustyuzhanin A (2022) Machine learning scientific competitions and datasets. In: Artificial Intelligence for High Energy Physics, pp 765–812. https://doi.org/10.1142/9789811234033_0020

Nachman B, de Oliveira L, Paganini M (2017) Electromagnetic calorimeter shower images. Mendeley Data. https://doi.org/10.17632/pvn3xc3wy5.1

Brooijmans G et al (2020) Les Houches 2019 Physics at TeV colliders: new physics working group report. arXiv:2002.12220 [hep-ph]

Aarrestad T et al (2022) The dark machines anomaly score challenge: benchmark data and model independent event classification for the large Hadron Collider. SciPost Phys 12:043. https://doi.org/10.21468/SciPostPhys.12.1.043

Almeida LG, Backović M, Cliche M, Lee SJ, Perelstein M (2015) Playing tag with ANN: boosted top identification with pattern recognition. JHEP 07:086. https://doi.org/10.1007/JHEP07(2015)086

de Oliveira L, Kagan M, Mackey L, Nachman B, Schwartzman A (2016) Jet-images: deep learning edition. JHEP 07:069. https://doi.org/10.1007/JHEP07(2016)069

Komiske PT, Metodiev EM, Schwartz MD (2017) Deep learning in color: towards automated quark/gluon jet discrimination. JHEP 01:110. https://doi.org/10.1007/JHEP01(2017)110

Kasieczka G, Plehn T, Russell M, Schell T (2017) Deep-learning top taggers or the end of QCD? JHEP 05:006. https://doi.org/10.1007/JHEP05(2017)006

Shilon I, Kraus M, Büchele M, Egberts K, Fischer T, Holch T, Lohse T, Schwanke U, Steppa C, Funk S (2019) Application of deep learning methods to analysis of imaging atmospheric Cherenkov telescopes data. Astropart Phys 105:44–53. https://doi.org/10.1016/j.astropartphys.2018.10.003

The Pierre Auger Collaboration (2021) Deep-learning based reconstruction of the shower maximum \(X_{\mathrm{max}}\) using the water-Cherenkov detectors of the Pierre Auger Observatory. JINST 16:P07019. https://doi.org/10.48550/arXiv.2101.02946

Egan S, Fedorko W, Lister A, Pearkes J, Gay C (2017) Long Short-Term Memory (LSTM) networks with jet constituents for boosted top tagging at the LHC. arXiv:1711.09059 [hep-ex]

Erdmann J (2020) A tagger for strange jets based on tracking information using long short-term memory. JINST 15(01):P01021. https://doi.org/10.1088/1748-0221/15/01/P01021

Zhou K, Endrődi G, Pang LG, Stöcker H (2019) Regressive and generative neural networks for scalar field theory. Phys Rev D 100:011501. https://doi.org/10.1103/PhysRevD.100.011501

Komiske PT, Metodiev EM, Thaler J (2019) Energy flow networks: deep sets for particle jets. JHEP 01:121. https://doi.org/10.1007/JHEP01(2019)121

Omana Kuttan M, Steinheimer J, Zhou K, Redelbach A, Stoecker H (2020) A fast centrality-meter for heavy-ion collisions at the CBM experiment. Phys Lett B 811:135872. https://doi.org/10.1016/j.physletb.2020.135872

Qu H, Gouskos L (2020) ParticleNet: Jet Tagging via particle clouds. Phys Rev D 101(5):056019. https://doi.org/10.1103/PhysRevD.101.056019

Bister T, Erdmann M, Glombitza J, Langner N, Schulte J, Wirtz M (2021) Identification of patterns in cosmic-ray arrival directions using dynamic graph convolutional neural networks. Astropart Phys 126:102527. https://doi.org/10.1016/j.astropartphys.2020.102527

Bols E, Kieseler J, Verzetti M, Stoye M, Stakia A (2020) Jet flavour classification using DeepJet. J Instrum. https://doi.org/10.1088/1748-0221/15/12/P12012

Kahn JMS (2019) Hadronic tag sensitivity study of B \(\rightarrow\) K(*)\(\nu {\bar{\nu }}\) and selective background Monte Carlo Simulation at Belle II. Ph.D. thesis. http://nbn-resolving.de/urn:nbn:de:bvb:19-240131. https://doi.org/10.5282/edoc.24013. Accessed 11 Aug 2021

Kahn J, Dorigatti E, Lieret K, Lindner A, Kuhr T (2020) Selective background Monte Carlo simulation at Belle II. EPJ Web Conf 245:02028. https://doi.org/10.1051/epjconf/202024502028

Steinheimer J, Pang L, Zhou K, Koch V, Randrup J, Stoecker H (2019) A machine learning study to identify spinodal clumping in high energy nuclear collisions. JHEP 12:122. https://doi.org/10.1007/JHEP12(2019)122

Pang LG, Zhou K, Su N, Petersen H, Stöcker H, Wang XN (2019) Classify QCD phase transition with deep learning. Nucl Phys A 982:867. https://doi.org/10.1016/j.nuclphysa.2018.10.077

Butter A, Kasieczka G, Plehn T, Russell M (2018) Deep-learned top tagging with a Lorentz layer. SciPost Phys 5(3):028. https://doi.org/10.21468/SciPostPhys.5.3.028

Erdmann M, Geiser E, Rath Y, Rieger M (2019) Lorentz boost networks: autonomous physics-inspired feature engineering. JINST 14(06):P06006. https://doi.org/10.1088/1748-0221/14/06/P06006

Bogatskiy A, Anderson B, Offermann JT, Roussi M, Miller DW, Kondor R (2020) Lorentz group equivariant neural network for particle physics. arXiv:2006.04780 [hep-ph]

Moreno EA, Cerri O, Duarte JM, Newman HB, Nguyen TQ, Periwal A, Pierini M, Serikova A, Spiropulu M, Vlimant JR (2020) JEDI-net: a jet identification algorithm based on interaction networks. Eur Phys J 80(1):58. https://doi.org/10.1140/epjc/s10052-020-7608-4

Qasim SR, Kieseler J, Iiyama Y, Pierini M (2019) Learning representations of irregular particle-detector geometry with distance-weighted graph networks. Eur Phys J 79(7):608. https://doi.org/10.1140/epjc/s10052-019-7113-9

Dreyer FA, Qu H (2021) Jet tagging in the Lund plane with graph networks. J High Energy Phys 52. https://doi.org/10.1007/JHEP03(2021)052

Duarte J, Vlimant JR (2022) Graph neural networks for particle tracking and reconstruction. In: Artificial intelligence for high energy physics, pp. 387–436. https://doi.org/10.1142/9789811234033_0012

Heintz A, et al (2020) Accelerated charged particle tracking with graph neural networks on FPGAs. 34th Conference on Neural Information Processing Systems arXiv:2012.01563 [physics.ins-det]

Pata J, Duarte J, Vlimant JR, Pierini M, Spiropulu M (2021) Mlpf: efficient machine-learned particle-flow reconstruction using graph neural networks. Eur Phys J. https://doi.org/10.1140/epjc/s10052-021-09158-w

Kansal R, Duarte J, Orzari B, Tomei T, Pierini M, Touranakou M, Vlimant JR, Gunopoulos D (2020) Graph Generative Adversarial Networks for Sparse Data Generation in High Energy Physics. 34th Conference on Neural Information Processing Systems arXiv:2012.00173 [physics.data-an]

Guo J, Li J, Li T (2021) The boosted Higgs jet reconstruction via graph neural network. Phys Rev D 103:116025. https://doi.org/10.1103/PhysRevD.103.116025

Alonso-Monsalve S, Douqa D, Jesús-Valls C, Lux T, Pina-Otey S, Sánchez F, Sgalaberna D, Whitehead LH (2021) Graph neural network for 3D classification of ambiguities and optical crosstalk in scintillator-based neutrino detectors. Phys Rev D. https://doi.org/10.1103/physrevd.103.032005

Ju X, Nachman B (2020) Supervised jet clustering with graph neural networks for Lorentz boosted bosons. Phys Rev D. https://doi.org/10.1103/physrevd.102.075014

Shlomi J, Battaglia P, Vlimant JR (2021) Graph neural networks in particle physics. Mach Learn Sci Technol 2(2):021001. https://doi.org/10.1088/2632-2153/abbf9a

Choma N, et al (2020) Track seeding and labelling with embedded-space graph neural networks. arXiv:2007.00149 [physics.ins-det]

Bernreuther E, Finke T, Kahlhoefer F, Krämer M, Mück A (2021) Casting a graph net to catch dark showers. SciPost Phys. https://doi.org/10.21468/scipostphys.10.2.046

Ju X et al (2020) Graph neural networks for particle reconstruction in high energy physics detectors. Presented at NeurIPS 2019 Workshop “Machine Learning and the Physical Sciences”. arXiv:2003.11603 [physics.ins-det]

Arjona Martínez J, Cerri O, Pierini M, Spiropulu M, Vlimant JR (2019) Pileup mitigation at the Large Hadron Collider with graph neural networks. Eur Phys J Plus 134(7):333. https://doi.org/10.1140/epjp/i2019-12710-3

Kasieczka G, Plehn T, Thompson J, Russel M (2019) Top quark tagging reference dataset. Zenodo. https://doi.org/10.5281/zenodo.2603256

Sjöstrand T, Ask S, Christiansen JR, Corke R, Desai N, Ilten P, Mrenna S, Prestel S, Rasmussen CO, Skands PZ (2015) An introduction to PYTHIA 8.2. Comput Phys Commun 191:159. https://doi.org/10.1016/j.cpc.2015.01.024

de Favereau J, Delaere C, Demin P, Giammanco A, Lemaître V, Mertens A, Selvaggi M (2014) Delphes 3: a modular framework for fast simulation of a generic collider experiment. J High Energy Phys. https://doi.org/10.1007/jhep02(2014)057

Cacciari M, Salam GP, Soyez G (2008) The anti-\(k_t\) jet clustering algorithm. JHEP 04:063. https://doi.org/10.1088/1126-6708/2008/04/063

Cacciari M, Salam GP, Soyez G (2012) FastJet user manual. Eur Phys J 72:1896. https://doi.org/10.1140/epjc/s10052-012-1896-2

Lange DJ (2001) The EvtGen particle decay simulation package. Nucl Instrum Methods A 462:152. https://doi.org/10.1016/S0168-9002(01)00089-4

Particle Data Group (2020) Review of Particle Physics. PTEP 2020(8):083C01. https://doi.org/10.1093/ptep/ptaa104

Keck T et al (2019) The full event interpretation: an exclusive tagging algorithm for the Belle II experiment. Comput Softw Big Sci 3(1):6. https://doi.org/10.1007/s41781-019-0021-8

Kuhr T, Pulvermacher C, Ritter M, Hauth T, Braun N, Belle II Framework Software Group (2019) The Belle II core software. Comput Softw Big Sci 3(1):1. https://doi.org/10.1007/s41781-018-0017-9

The Belle Collaboration (2021) Belle II analysis software framework (basf2). Comput Softw Big Sci. https://doi.org/10.5281/zenodo.5574115

Steinheimer J (2021) Spinodal dataset for classification. Zenodo. https://doi.org/10.5281/zenodo.5710737

Hohne C et al (2011) CBM experiment. Lect Notes Phys 814:849. https://doi.org/10.1007/978-3-642-13293-3

Senger P, Bratkovskaya E, Andronic A, Averbeck R, Bellwied R, Friese V, Fuchs C, Knoll J, Randrup J, Steinheimer J (2011) Observables and predictions. Lect Notes Phys 814:681. https://doi.org/10.1007/978-3-642-13293-3

Steinheimer J, Randrup J (2012) Spinodal amplification of density fluctuations in fluid-dynamical simulations of relativistic nuclear collisions. Phys Rev Lett 109:212301. https://doi.org/10.1103/PhysRevLett.109.212301

Steinheimer J, Pang LG, Zhou K, Koch V, Randrup J, Stoecker H (2021) A machine learning study on spinodal clumping in heavy ion collisions. Nucl Phys A 1005:121867. https://doi.org/10.1016/j.nuclphysa.2020.121867

Pang LG, Zhou K, Su N, Petersen H, Stöcker H, Wang XN (2018) An equation-of-state-meter of quantum chromodynamics transition from deep learning. Nat Commun 9(1):210. https://doi.org/10.1038/s41467-017-02726-3

Du YL, Zhou K, Steinheimer J, Pang LG, Motornenko A, Zong HS, Wang XN, Stöcker H (2020) Identifying the nature of the QCD transition in relativistic collision of heavy nuclei with deep learning. Eur Phys J 80(6):516. https://doi.org/10.1140/epjc/s10052-020-8030-7

Du YL, Zhou K, Steinheimer J, Pang LG, Motornenko A, Zong HS, Wang XN, Stöcker H (2021) Identifying the nature of the QCD transition in heavy-ion collisions with deep learning. Nucl Phys A 1005:121891. https://doi.org/10.1016/j.nuclphysa.2020.121891

Jiang L, Wang L, Zhou K (2021) Deep learning stochastic processes with QCD phase transition. Phys Rev D 103:116023. https://doi.org/10.1103/PhysRevD.103.116023

Glombitza J (2021) Reconstruction of simulated air shower footprints measured at a hypothetical ground-based cosmic-ray observatory. Zenodo. https://doi.org/10.5281/zenodo.5748080

The Pierre Auger Collaboration (2015) The Pierre Auger cosmic ray observatory. Nucl Instrum Methods Phys Res Sect A 798:172–213. https://doi.org/10.1016/j.nima.2015.06.058

Kawai H et al (2008) Telescope Array Experiment. Nucl Phys B Proc Suppl 175–176:221. https://doi.org/10.1016/j.nuclphysbps.2007.11.002

Erdmann M, Glombitza J, Walz D (2018) A deep learning-based reconstruction of cosmic ray-induced air showers. Astropart Phys 97:46. https://doi.org/10.1016/j.astropartphys.2017.10.006

Harris CR, Millman KJ, van der Walt SJ, Gommers R, Virtanen P, Cournapeau D, Wieser E, Taylor J, Berg S, Smith NJ, Kern R, Picus M, Hoyer S, van Kerkwijk MH, Brett M, Haldane A, del Río JF, Wiebe M, Peterson P, Gérard-Marchant P, Sheppard K, Reddy T, Weckesser W, Abbasi H, Gohlke C, Oliphant TE (2020) Array programming with NumPy. Nature 585(7825):357. https://doi.org/10.1038/s41586-020-2649-2

Abadi M, Agarwal A, Barham P, Brevdo E, Chen Z, Citro C, Corrado GS, Davis A, Dean J, Devin M, Ghemawat S, Goodfellow I, Harp A, Irving G, Isard M, Jia Y, Jozefowicz R, Kaiser L, Kudlur M, Levenberg J, Mané D, Monga R, Moore S, Murray D, Olah C, Schuster M, Shlens J, Steiner B, Sutskever I, Talwar K, Tucker P, Vanhoucke V, Vasudevan V, Viégas F, Vinyals O, Warden P, Wattenberg M, Wicke M, Yu Y, Zheng X (2015) TensorFlow: large-scale machine learning on heterogeneous systems. https://www.tensorflow.org/. Software available from tensorflow.org (Accessed 11 Aug 2021)

Kingma DP, Ba J (2015) Adam: a method for stochastic optimization. arXiv:1412.6980 [cs.LG]

Kipf TN, Welling M (2017) Semi-supervised classification with graph convolutional networks. ICLR 2017. arXiv:1609.02907 [cs.LG]

Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R (2014) Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res 15:1929

He K, Zhang X, Ren S, Sun J (2015) Delving deep into rectifiers: surpassing human-level performance on imagenet classification. CoRR. arXiv:1502.01852

Qu H, Gouskos L (2020) Jet tagging via particle clouds. Phys Rev D. https://doi.org/10.1103/physrevd.101.056019

Wang Y, Sun Y, Liu Z, Sarma SE, Bronstein MM, Solomon JM (2019) Dynamic graph CNN for Learning on point clouds. ACM Trans Graph 38(5). https://doi.org/10.1145/3326362

Simonyan K, Zisserman A (2015) Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556 [cs.CV]

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735. https://doi.org/10.1162/neco.1997.9.8.1735

He K, Zhang X, Ren S, Sun J (2016) Deep Residual Learning for Image Recognition. In: 2016 IEEE conference on computer vision and pattern recognition (CVPR), 2016, pp 770–778. https://doi.org/10.1109/CVPR.2016.90

Ioffe S, Szegedy C (2015) Batch normalization: accelerating deep network training by reducing internal covariate shift. In: Proceedings of the 32nd international conference on international conference on machine learning, (ICML'15), JMLR.org. vol 37, pp 448–456

Acknowledgements

This work would not have been possible without the collaborative project IDT-UM (Innovative Digitale Technologien zur Erforschung von Universum und Materie) funded by the German Federal Ministry of Education and Research BMBF and overseen by Projektträger DESY. We thank all our involved colleagues for the excellent collaboration and stimulating scientific environment. LB, EB, GK and WK acknowledge the support of the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany’s Excellence Strategy—EXC 2121 “Quantum Universe”—390833306. NH and TK acknowledge the support of the Deutsche Forschungsgemeinschaft under Germany’s Excellence Strategy—EXC 2094 “ORIGINS”—390783311. We thank the Belle II data production team for producing the Smart Backgrounds dataset used in this study.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: Reference Models

In the following, a reference model is provided for each dataset. These reference models represent an algorithm specifically designed and optimized for the problem at hand. They serve as a benchmark to judge the performance of the proposed dataset-independent algorithms.

A.1: Top Tagging Landscape

The ParticleNet [77] algorithm is used as reference for the Top Tagging Landscape dataset. This architecture was one of the best models in a comparison study performed earlier on this dataset [6]. The graph convolutional network is constructed by viewing the input data as point clouds, i.e., each jet is considered an unordered set of particles. To build these point clouds, the particles in each jet are ordered by transverse momentum and zero-padded to up to 100 particles per jet. From the particles' four-momenta, seven input features for the network are computed: the logarithm of the transverse momentum (\(\log(p_\mathrm{T})\)), the logarithm of the energy (\(\log(E)\)), the relative pseudorapidity (\(\Delta \eta\)), the relative azimuthal angle (\(\Delta \phi\)), the logarithm of the particle's \(p_\mathrm{T}\) relative to the jet \(p_\mathrm{T}\) (\(\log \frac{p_\mathrm{T}}{p_\mathrm{T}(\mathrm{jet})}\)), the logarithm of the particle's energy relative to the jet energy (\(\log \frac{E}{E(\mathrm{jet})}\)), and the angular separation between the particle and the jet axis (\(\Delta R = \sqrt{(\Delta \eta)^2 + (\Delta \phi)^2}\)). The relative angles are calculated with respect to the jet axis. Based on these inputs, a graph is built for every jet. The edges of this graph are found by a k-nearest-neighbor search for the \(k=7\) closest particles. The architecture itself is built of three EdgeConv layers [78], followed by a global pooling operation over all particles to preserve permutation invariance, followed by two fully connected layers.
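The idea behind an EdgeConv layer [78] and the permutation-invariant pooling can be illustrated with a simplified NumPy sketch. It is not the ParticleNet implementation: the edge function is reduced to a single linear layer with ReLU, neighbors are aggregated by their mean, zero-padded particles are not treated, and the choice of feature columns used for the neighbor search is an assumption.

```python
import numpy as np

def knn_indices(coords, k):
    """Indices of the k nearest neighbors of every point (self excluded)."""
    dist = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)
    return np.argsort(dist, axis=1)[:, :k]

def edge_conv(features, neighbors, weights, bias):
    """Simplified EdgeConv: linear edge function plus mean aggregation.

    For node i and neighbor j the edge input is [x_i, x_j - x_i].
    """
    k = neighbors.shape[1]
    x_i = np.repeat(features[:, None, :], k, axis=1)        # (n, k, d)
    x_j = features[neighbors]                               # (n, k, d)
    edge_in = np.concatenate([x_i, x_j - x_i], axis=-1)     # (n, k, 2d)
    edge_out = np.maximum(edge_in @ weights + bias, 0.0)    # ReLU, (n, k, d_out)
    return edge_out.mean(axis=1)                            # (n, d_out)

rng = np.random.default_rng(2)
n_particles, n_features, n_out, k = 30, 7, 16, 7
x = rng.normal(size=(n_particles, n_features))              # toy stand-in for the seven inputs
w = rng.normal(scale=0.1, size=(2 * n_features, n_out))
b = np.zeros(n_out)

# Neighbor search in two feature columns, assumed here to hold (delta eta, delta phi).
nbrs = knn_indices(x[:, 2:4], k)
out = edge_conv(x, nbrs, w, b)
jet_vector = out.mean(axis=0)                               # global pooling over particles
print(out.shape, jet_vector.shape)
```

Reordering the particles of the toy jet leaves `jet_vector` unchanged up to floating-point precision, which is the permutation invariance that the global pooling is meant to preserve.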

A.2: Smart Backgrounds

The graph structure of the event decay tree is an ideal input for graph neural networks. A structure based on graph convolutional layers [74] provides high classification performance. The PDG identifiers are passed through an embedding layer to produce an eight-dimensional embedding, which is subsequently concatenated with the remaining eight-dimensional feature vector. These combined particle features are fed to three fully connected layers with weights shared across all particles, followed by the ReLU activation function. The next transformation consists of three graph convolutional layers, where the adjacency matrix of the decay graph is constructed from the indices of the mother particles. Furthermore, it is symmetrized to provide mother–daughter in addition to daughter–mother relationships. By taking the average of the resulting vector along the particle dimension, it is reduced to event-level quantities, which are finally transformed by three more fully connected layers, followed by the ReLU activation function. A final fully connected layer, followed by the sigmoid activation function, provides the classification score. All hidden layers, including the graph convolutional layers, contain 128 units.
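The construction of the symmetrized adjacency matrix from mother indices can be sketched as follows. The convention that a negative index marks a particle without a mother, the optional self-loops, and the function name are assumptions of this sketch.

```python
import numpy as np

def decay_tree_adjacency(mother_index, self_loops=False):
    """Symmetric adjacency matrix from per-particle mother indices.

    mother_index[i] is the position of the mother of particle i within
    the event, or a negative value if the particle has no mother
    (assumed convention).
    """
    n = len(mother_index)
    adj = np.zeros((n, n), dtype=int)
    for daughter, mother in enumerate(mother_index):
        if 0 <= mother < n:
            adj[daughter, mother] = 1           # daughter -> mother
    adj = np.maximum(adj, adj.T)                # add mother -> daughter
    if self_loops:
        np.fill_diagonal(adj, 1)
    return adj

# Toy decay: particle 0 -> (1, 2), particle 2 -> (3, 4)
mothers = [-1, 0, 0, 2, 2]
tree_adj = decay_tree_adjacency(mothers)
print(tree_adj)
```

Without the symmetrization step, information would flow only from mothers to daughters (or vice versa) in each graph convolution, so siblings could not exchange information through their common mother.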

A.3: Spinodal or Not

Since the simulated output for the data corresponds to a \(20 \times 20\) histogram, a natural choice for the network structure is that of a convolutional neural network. In this particular case, we employ three convolutional layers with intermediate pooling layers, followed by a single hidden fully connected layer and the output layer. The complete structure of the network is shown in Ref. [28].

A.4: EoS

Inspired by the good performance of CNNs in image recognition tasks, we constructed a VGG-like [79] CNN with three convolutional layers followed by fully connected layers for the EoS binary classification task. The histogram of the pions at mid-rapidity is taken as the image-like input for the CNN. Batch normalization, dropout, and PReLU activation are used to avoid overfitting. Details of the network architecture and training are discussed in Ref. [64]. We also refer to Ref. [63] for more technical explanations.

A.5: Cosmic-Ray-Induced Air Showers

To process the time- and space-dependent air-shower footprints, the model uses a two-part architecture inspired by Refs. [17, 70]. The first, recurrent part analyzes the signal traces recorded by each detector station. To this end, a two-layer subnetwork consisting of long short-term memory (LSTM) layers [80] is shared over each of the \(9 \times 9\) detector stations and extracts ten features from each signal trace.

As the resulting output is image-like (\(9 \times 9 \times 10\)), in the second part the ten feature maps obtained are concatenated with the map of arrival times (\(9 \times 9 \times 1\)) and subsequently analyzed for spatial correlations using convolutional operations. This is performed using two blocks, which are separated by a pooling operation. Each of these blocks holds five residual units [81] with convolutional layers and batch normalization [82]. After the residual blocks, global max pooling and dropout are applied. To speed up the convergence of the model, we use a layer that re-normalizes the outputs, which lie mostly between 0 and 1 at the start of the training, to the scale of the \(X_{\mathrm{max}}\) distribution. For the reference architecture, we preprocessed the data by performing a logarithmic re-scaling of the signal traces to compensate for the very different signal sizes. We further normalized the arrival times with respect to the time measured at the central station (the station with the largest signal). For more details, refer to Ref. [70].
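The preprocessing and the output re-normalization described above can be sketched in NumPy. The exact functional forms are not given in the text, so the \(\log_{10}(1 + S)\) rescaling, the division of the relative times by their spread, the affine form of the output rescaling, the NaN convention for stations without signal, and all names and numbers below are assumptions made for illustration; Ref. [70] describes the actual procedure.

```python
import numpy as np

def preprocess_air_shower(signals, arrival_times):
    """Rescale signal traces logarithmically and reference times to the central station.

    signals:       array of shape (9, 9, n_time_bins), non-negative
    arrival_times: array of shape (9, 9), NaN for stations without signal
                   (assumed convention)
    """
    log_signals = np.log10(1.0 + signals)               # assumed form of the log re-scaling
    total = signals.sum(axis=-1)
    central = np.unravel_index(np.argmax(total), total.shape)   # station with largest signal
    rel_times = arrival_times - arrival_times[central]
    scale = np.nanstd(rel_times)
    if scale > 0:
        rel_times = rel_times / scale                   # unit spread, also an assumption
    return log_signals, np.nan_to_num(rel_times, nan=0.0)

def rescale_output(raw, x_max_mean, x_max_std):
    """Map network outputs of order one to the scale of the X_max distribution."""
    return raw * x_max_std + x_max_mean

# Toy event (random numbers, not simulation output).
rng = np.random.default_rng(3)
signals = rng.exponential(size=(9, 9, 80))
signals[4, 4] *= 50.0                                   # make the central station dominant
times = rng.normal(size=(9, 9))

log_s, rel_t = preprocess_air_shower(signals, times)
print(log_s.shape, rel_t[4, 4])

# Hypothetical mean and spread, chosen only to illustrate the call.
print(rescale_output(0.0, x_max_mean=750.0, x_max_std=70.0))
```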

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Benato, L., Buhmann, E., Erdmann, M. et al. Shared Data and Algorithms for Deep Learning in Fundamental Physics. Comput Softw Big Sci 6, 9 (2022). https://doi.org/10.1007/s41781-022-00082-6

DOI: https://doi.org/10.1007/s41781-022-00082-6