Abstract

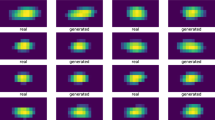

We provide a bridge between generative modeling in the Machine Learning community and simulated physical processes in high energy particle physics by applying a novel Generative Adversarial Network (GAN) architecture to the production of jet images, 2D representations of energy depositions from particles interacting with a calorimeter. We propose a simple architecture, the Location-Aware Generative Adversarial Network, that learns to produce realistic radiation patterns from simulated high energy particle collisions. The pixel intensities of GAN-generated images faithfully span many orders of magnitude and exhibit the desired low-dimensional physical properties (e.g., jet mass and n-subjettiness). We shed light on the limitations of the approach and provide a novel empirical validation of the image quality and validity of GAN-produced simulations of the natural world. This work provides a base for further explorations of GANs for use in faster simulation in high energy particle physics.

Notes

Full simulation can take up to \({\mathcal {O}}(\text {min/event})\).

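While the azimuthal angle \(\phi\) is a real angle, pseudorapidity \(\eta = -\ln \tan (\theta /2)\) is not: it is a monotonic function of the polar angle \(\theta\), and small differences in \(\eta\) approximate (up to sign) small differences in \(\theta\) only near \(\theta = \pi /2\). However, the radiation pattern is nearly symmetric in \(\phi\) and \(\eta\), and so these standard coordinates are used to describe the jet constituent locations.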
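To make the relation between \(\eta\) and \(\theta\) concrete, the sketch below (plain Python, standard library only) evaluates \(\eta = -\ln\tan(\theta/2)\) and its inverse at a few angles, and numerically estimates the local slope \(d\eta/d\theta = -1/\sin\theta\) at \(\theta = 90^\circ\). The function names are illustrative, not taken from the paper's code.

```python
import math

def pseudorapidity(theta):
    """eta = -ln(tan(theta / 2)) for a polar angle theta in (0, pi), in radians."""
    return -math.log(math.tan(theta / 2.0))

def polar_angle(eta):
    """Inverse relation: theta = 2 * atan(exp(-eta))."""
    return 2.0 * math.atan(math.exp(-eta))

# eta is a monotonic (decreasing) function of theta, not an angle itself
for theta_deg in (90, 60, 30, 10, 1):
    eta = pseudorapidity(math.radians(theta_deg))
    print(f"theta = {theta_deg:2d} deg -> eta = {eta:.3f}")

# Central-difference estimate of d(eta)/d(theta) at 90 deg; analytically -1/sin(theta) = -1,
# so small eta differences track small theta differences only near the central region
h = 1e-6
slope = (pseudorapidity(math.pi / 2 + h) - pseudorapidity(math.pi / 2 - h)) / (2 * h)
print(f"d(eta)/d(theta) at 90 deg ~ {slope:.6f}")
```

Away from \(\theta = 90^\circ\) the slope grows as \(1/\sin\theta\), which is why \(\eta\) is a coordinate rather than an angle.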

Bicubic spline interpolation in the rotation process causes a large number of pixels to be interpolated between their original value and zero, the most likely intensity value of neighboring cells. Though a zero-order (nearest-neighbor) interpolation would solve these sparsity problems, we find empirically that preserving sparsity is not worth the resulting loss in jet-observable resolution. A more in-depth discussion can be found in Appendix B.
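The sparsity effect described in this note is easy to reproduce. The sketch below assumes SciPy's `scipy.ndimage.rotate` as the rotation routine (the note does not say which implementation was used) and a hypothetical 25×25 image with three deposits; it counts the pixels that end up with non-negligible intensity after a 30° rotation with a cubic spline (`order=3`) versus nearest-neighbor (`order=0`) interpolation.

```python
import numpy as np
from scipy import ndimage

def rotate_jet_image(image, angle_deg, order):
    """Rotate an image about its centre; `order` is the spline order
    (0 = nearest neighbour, 3 = cubic spline). Pixels mapped from outside the grid get 0."""
    return ndimage.rotate(image, angle_deg, reshape=False, order=order,
                          mode="constant", cval=0.0)

# Hypothetical sparse 25x25 "jet image": three deposits on an otherwise empty grid
image = np.zeros((25, 25))
image[12, 12] = 100.0   # leading subjet at the centre
image[12, 17] = 20.0    # second subjet
image[9, 14] = 1.0      # soft deposit

cubic = rotate_jet_image(image, 30.0, order=3)
nearest = rotate_jet_image(image, 30.0, order=0)

# Count pixels carrying non-negligible intensity after each rotation
n_cubic = int(np.count_nonzero(np.abs(cubic) > 1e-6))
n_nearest = int(np.count_nonzero(nearest))
print(f"non-empty pixels: nearest = {n_nearest}, cubic = {n_cubic}")
```

The cubic spline's prefiltering spreads small tails of alternating sign away from each deposit, so many formerly empty pixels acquire tiny (and sometimes slightly negative) intensities, while nearest-neighbor interpolation only moves the few non-empty pixels around.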

References

Goodfellow IJ, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y (2014) Generative adversarial networks. In: Advances in neural information processing systems, pp 2672–2680

Odena A, Olah C, Shlens J (2016) Conditional image synthesis with auxiliary classifier GANs. arXiv:1610.09585

Chen X, Duan Y, Houthooft R, Schulman J, Sutskever I, Abbeel P (2016) InfoGAN: interpretable representation learning by information maximizing generative adversarial nets. arXiv:1606.03657

Salimans T, Goodfellow IJ, Zaremba W, Cheung V, Radford A, Chen X (2016) Improved techniques for training GANs. arXiv:1606.03498

Mirza M, Osindero S (2014) Conditional generative adversarial nets. arXiv:1411.1784

Odena A (2016) Semi-supervised learning with generative adversarial networks. arXiv:1606.01583

Radford A, Metz L, Chintala S (2015) Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv:1511.06434

Reed SE, Akata Z, Mohan S, Tenka S, Schiele B, Lee H (2016) Learning what and where to draw. arXiv:1610.02454

Reed SE, Akata Z, Yan X, Logeswaran L, Schiele B, Lee H (2016) Generative adversarial text to image synthesis. arXiv:1605.05396

Zhang H, Xu T, Li H, Zhang S, Huang X, Wang X, Metaxas D (2016) StackGAN: text to photo-realistic image synthesis with stacked generative adversarial networks. arXiv:1612.03242

Goodfellow IJ (2014) On distinguishability criteria for estimating generative models. arXiv:1412.6515

Aad G et al (2010) The ATLAS simulation infrastructure. Eur Phys J C 70:823–874

CMS Collaboration (2006) CMS Physics: Technical Design Report Volume 1: Detector Performance and Software, Geneva: CERN, p 521

Agostinelli S et al (2003) GEANT4: a simulation toolkit. Nucl Instrum Methods A506:250–303

Edmonds K, Fleischmann S, Lenz T, Magass C, Mechnich J, Salzburger A (2008) The fast ATLAS track simulation (FATRAS). No. ATL-SOFT-PUB-2008-001

Beckingham M, Duehrssen M, Schmidt E, Shapiro M, Venturi M, Virzi J, Vivarelli I, Werner M, Yamamoto S, Yamanaka T (2010) The simulation principle and performance of the ATLAS fast calorimeter simulation FastCaloSim. Tech. Rep. ATL-PHYS-PUB-2010-013, CERN, Geneva

Abdullin S, Azzi P, Beaudette F, Janot P, Perrotta A (2011) The fast simulation of the CMS detector at LHC. J Phys Conf Ser 331:032049

Childers JT, Uram TD, LeCompte TJ, Papka ME, Benjamin DP (2015) Simulation of LHC events on a millions threads. J Phys Conf Ser 664(9):092006

Cogan J, Kagan M, Strauss E, Schwartzman A (2015) Jet-images: computer vision inspired techniques for jet tagging. JHEP 02:118

de Oliveira L, Kagan M, Mackey L, Nachman B, Schwartzman A (2016) Jet-images—deep learning edition. JHEP 07:069

Almeida LG, Backović M, Cliche M, Lee SJ, Perelstein M (2015) Playing tag with ANN: boosted top identification with pattern recognition. JHEP 07:086

Komiske PT, Metodiev EM, Schwartz MD (2016) Deep learning in color: towards automated quark/gluon jet discrimination. arXiv:1612.01551

Barnard J, Dawe EN, Dolan MJ, Rajcic N (2016) Parton shower uncertainties in jet substructure analyses with deep neural networks. arXiv:1609.00607

Baldi P, Bauer K, Eng C, Sadowski P, Whiteson D (2016) Jet substructure classification in high-energy physics with deep neural networks. Phys Rev D 93(9):094034

Chatrchyan S et al (2008) The CMS experiment at the CERN LHC. JINST 3:S08004

Cacciari M, Salam GP, Soyez G (2008) The catchment area of jets. JHEP 0804:005. doi:10.1088/1126-6708/2008/04/005

Cacciari M, Salam GP, Soyez G (2012) FastJet user manual. Eur Phys J C 72:1896

Krohn D, Thaler J, Wang L-T (2010) Jet trimming. JHEP 1002:084

Sjostrand T, Mrenna S, Skands PZ (2008) A brief introduction to PYTHIA 8.1. Comput Phys Commun 178:852–867

Sjostrand T, Mrenna S, Skands PZ (2006) PYTHIA 6.4 physics and manual. JHEP 0605:026

Nachman B, de Oliveira L, Paganini M (2017) Dataset release—Pythia generated jet images for location aware generative adversarial network training. doi:10.17632/4r4v785rgx.1

de Oliveira L, Paganini M (2017) lukedeo/adversarial-jets: initial code release. doi:10.5281/zenodo.400708

Maas AL, Hannun AY, Ng AY (2013) Rectifier nonlinearities improve neural network acoustic models. In: ICML workshop on deep learning, vol 28

Chintala S (2016) How to train a GAN? In: NIPS, workshop on generative adversarial networks

Maas AL, Hannun AY, Ng AY (2013) Rectifier nonlinearities improve neural network acoustic models. In: Proceedings of the 30th international conference on machine learning, Atlanta, Georgia, USA

Ioffe S, Szegedy C (2015) Batch normalization: accelerating deep network training by reducing internal covariate shift

Kingma DP, Ba J (2014) Adam: a method for stochastic optimization. CoRR. arXiv:1412.6980

Chollet F (2017) Keras. https://github.com/fchollet/keras

Abadi M et al (2015) TensorFlow: large-scale machine learning on heterogeneous systems. https://www.tensorflow.org/

Thaler J, Van Tilburg K (2011) Identifying boosted objects with N-subjettiness. JHEP 1103:015

Larkoski AJ, Neill D, Thaler J (2014) Jet shapes with the broadening axis. JHEP 04:017

Goodfellow IJ et al (2013) Maxout networks. arXiv:1302.4389

Rubner Y, Tomasi C, Guibas LJ (2000) The earth mover’s distance as a metric for image retrieval. Int J Comput Vis 40:99–121

Acknowledgements

The authors would like to thank Ian Goodfellow for insightful deep learning related discussion, and would like to acknowledge Wahid Bhimji, Zach Marshall, Mustafa Mustafa, Chase Shimmin, and Paul Tipton, who helped refine our narrative.

Ethics declarations

Conflict of Interest

The work of Benjamin Nachman and Michela Paganini was supported in part by the Office of High Energy Physics of the U.S. Department of Energy under contracts DE-AC02-05CH11231 and DE-FG02-92ER40704. Luke de Oliveira is founder and CEO at Vai Technologies, LLC.

Appendices

Appendix A: Additional Material

See Figs. 25, 26, 27, 28, 29, 30, 31, 32 and 33.

Appendix B: Image Pre-processing

Reference [20] contains a detailed discussion of the impact of image pre-processing on the information content of the image. For example, it shows that normalizing each image removes a significant amount of information about the jet mass. One important step that was not fully discussed is the use of the rotational symmetry about the jet axis. It was shown in Ref. [20] that a naive rotation about the jet axis in the \(\eta\)-\(\phi\) plane, \(\eta _i\mapsto \cos (\alpha )\eta _i+\sin (\alpha )\phi _i,\ \phi _i\mapsto \cos (\alpha )\phi _i-\sin (\alpha )\eta _i\), where \(\alpha\) is the rotation angle and \(i\) runs over the constituents of the jet, does not preserve the jet mass. One can instead perform a proper rotation about the x-axis (preserving the leading subjet at \(\phi =0\)) via

where

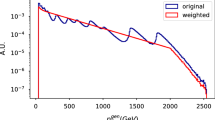
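The mass argument above can be checked numerically. The sketch treats each pixel as a massless particle whose \(p_T\) equals its intensity (an assumption made for illustration), builds four-vectors \((E, p_x, p_y, p_z) = p_T(\cosh\eta, \cos\phi, \sin\phi, \sinh\eta)\), and compares the jet mass before and after each rotation. With this convention a jet centred at \(\eta=\phi=0\) points along x, so a rotation about the x-axis is a rotation about the jet axis. The two-pixel jet and the angle \(\beta = 0.7\) are hypothetical and are not the angle definition used in the paper.

```python
import numpy as np

def four_momenta(pt, eta, phi):
    """Massless four-vectors (E, px, py, pz), one row per pixel."""
    return np.stack([pt * np.cosh(eta), pt * np.cos(phi),
                     pt * np.sin(phi), pt * np.sinh(eta)], axis=-1)

def jet_mass(p4):
    """Invariant mass of the summed four-vector, m = sqrt(E^2 - |p|^2)."""
    e, px, py, pz = p4.sum(axis=0)
    return float(np.sqrt(max(e**2 - px**2 - py**2 - pz**2, 0.0)))

def naive_eta_phi_rotation(eta, phi, alpha):
    """Rotation in the eta-phi plane as written in the text (not mass-preserving)."""
    return (np.cos(alpha) * eta + np.sin(alpha) * phi,
            np.cos(alpha) * phi - np.sin(alpha) * eta)

def rotate_about_x(p4, beta):
    """Proper 3D rotation of each momentum about the x-axis; E and px are unchanged."""
    e, px, py, pz = p4.T
    return np.stack([e, px,
                     np.cos(beta) * py - np.sin(beta) * pz,
                     np.sin(beta) * py + np.cos(beta) * pz], axis=-1)

# Hypothetical two-pixel jet centred at eta = phi = 0
pt = np.array([1.0, 1.0])
eta = np.array([0.0, 0.0])
phi = np.array([0.0, 0.4])

p4 = four_momenta(pt, eta, phi)
m_original = jet_mass(p4)
eta_rot, phi_rot = naive_eta_phi_rotation(eta, phi, np.pi / 2)
m_naive = jet_mass(four_momenta(pt, eta_rot, phi_rot))
m_proper = jet_mass(rotate_about_x(p4, 0.7))
print(f"original {m_original:.5f}  naive eta-phi {m_naive:.5f}  about x {m_proper:.5f}")
```

Here the naive rotation moves the second pixel from \(\Delta\phi = 0.4\) to \(\Delta\eta = 0.4\), changing \(m^2 = 2p_{T,1}p_{T,2}(\cosh\Delta\eta - \cos\Delta\phi)\) from \(2(1-\cos 0.4)\) to \(2(\cosh 0.4 - 1)\), whereas the 3D rotation leaves \(E\) and \(|\vec p|\) of the summed jet unchanged.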

Figure 34 quantifies the information lost in the various preprocessing steps, with particular emphasis on the rotation step. For each variable, a ROC curve is constructed for the task of distinguishing its preprocessed values from its unprocessed values; if the two cannot be distinguished, no information has been lost. Similar plots showing the degradation in signal-versus-background classification performance are shown in Fig. 35. The best fully preprocessed option for all metrics is Pix + Trans + Rotation(Cubic) + Renorm. This option uses the cubic spline interpolation from Ref. [20], but adds a final renormalization step that ensures the sum of the pixel intensities is the same before and after rotation. This is the procedure used throughout the body of the manuscript.
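One way to implement the Renorm step is sketched below, assuming it is a single global rescaling (the text only states that the summed intensity is preserved) and using `scipy.ndimage.rotate` with cubic splines as a stand-in for the interpolation of Ref. [20]. The function name `rotate_and_renormalize` and the toy image are hypothetical.

```python
import numpy as np
from scipy import ndimage

def rotate_and_renormalize(image, angle_deg):
    """Cubic-spline rotation (order=3) followed by a global rescaling that
    restores the total pixel intensity of the input image."""
    rotated = ndimage.rotate(image, angle_deg, reshape=False, order=3,
                             mode="constant", cval=0.0)
    total_after = rotated.sum()
    if total_after == 0.0:
        return rotated  # nothing to rescale
    return rotated * (image.sum() / total_after)

# Hypothetical 25x25 jet image with a leading and a subleading subjet
image = np.zeros((25, 25))
image[12, 12] = 100.0
image[15, 12] = 20.0

raw = ndimage.rotate(image, 45.0, reshape=False, order=3, mode="constant", cval=0.0)
renormalized = rotate_and_renormalize(image, 45.0)
print(f"sum before: {image.sum():.4f}  raw rotation: {raw.sum():.4f}  "
      f"renormalized: {renormalized.sum():.4f}")
```

A global factor keeps the relative pixel intensities produced by the spline intact while fixing the total, which is the quantity the jet \(p_T\) depends on.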

ROC curves for classifying signal versus background based only on the mass (left) or n-subjettiness (right). Note that in some cases, the preprocessing can actually improve discrimination (but it always degrades the information content; see Fig. 34)
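The ROC curves described in this caption come from cutting on a single observable. A minimal NumPy sketch of that construction is shown below, assuming larger values are more signal-like (flip the sign of the observable otherwise) and using toy Gaussian and exponential samples in place of real jets; the function names and sample parameters are hypothetical. The area under the curve also gives a one-number summary: an AUC near 0.5 means a cut on that variable does no better than random guessing.

```python
import numpy as np

def roc_curve_1d(signal, background):
    """Signal and background efficiencies of the cut `x >= t`, scanning t over all observed values."""
    signal, background = np.asarray(signal, float), np.asarray(background, float)
    thresholds = np.unique(np.concatenate([signal, background]))[::-1]  # tightest cut first
    sig_eff = np.array([(signal >= t).mean() for t in thresholds])
    bkg_eff = np.array([(background >= t).mean() for t in thresholds])
    return sig_eff, bkg_eff

def auc(signal, background):
    """Area under the ROC curve: probability that a random signal value exceeds
    a random background value, with ties counted as one half."""
    s = np.asarray(signal, float)[:, None]
    b = np.asarray(background, float)[None, :]
    return float(np.mean((s > b) + 0.5 * (s == b)))

# Hypothetical toy samples of one jet observable (e.g. jet mass in GeV)
rng = np.random.default_rng(0)
sig = rng.normal(80.0, 10.0, size=1000)   # peaked, W-like signal (assumed shape)
bkg = rng.exponential(40.0, size=1000)    # falling, QCD-like background (assumed shape)
sig_eff, bkg_eff = roc_curve_1d(sig, bkg)
print(f"AUC = {auc(sig, bkg):.3f}")
```

Plotting `sig_eff` against `bkg_eff` (or against its inverse, as is common in jet tagging) reproduces the usual ROC presentation.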

About this article

Cite this article

de Oliveira, L., Paganini, M. & Nachman, B. Learning Particle Physics by Example: Location-Aware Generative Adversarial Networks for Physics Synthesis. Comput Softw Big Sci 1, 4 (2017). https://doi.org/10.1007/s41781-017-0004-6
