Abstract

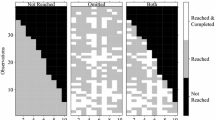

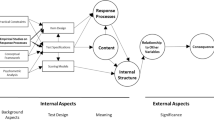

A new response time-based method for coding omitted item responses in computer-based testing is introduced and illustrated with empirical data. The new method is derived from the theory of missing data problems of Rubin and colleagues and embedded in an item response theory framework. Its basic idea is to use item response times to test statistically, for each individual item, whether omitted responses are missing completely at random (MCAR) or missing due to a lack of ability and thus not at random (MNAR), with fixed type-1 and type-2 error levels. If the MCAR hypothesis is retained, omitted responses are coded as not administered (NA); otherwise, they are coded as incorrect (0). The empirical illustration draws on the responses of N = 766 students to 70 items of a computer-based ICT skills test. The new method is compared with the two common deterministic methods of scoring omitted responses as 0 or as NA. Response time thresholds ranging from 18 to 58 s were identified. More omitted responses were recoded as 0 (61%) than as NA (39%). Differences in difficulty were larger when the new method was compared with deterministically scoring omitted responses as NA than when it was compared with scoring them as 0. The variances and reliabilities obtained under the three methods differed only slightly. The paper concludes with a discussion of the practical relevance of the observed effect sizes and with recommendations for using the new method in practice at an early stage of data processing.
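The coding rule stated in the abstract can be illustrated with a minimal Python sketch. It covers only the final recoding step: the per-item MCAR test itself, including how response times and the fixed type-1 and type-2 error levels enter it, is not reproduced here, and each item's test decision is simply passed in as a hypothetical input (`mcar_retained`). The function names `code_omissions` and `recode_shares`, the use of `None` for raw omissions, and the string `"NA"` as the not-administered code are illustrative assumptions, not the authors' implementation.

```python
from typing import Dict, List, Optional, Tuple, Union

# Illustrative coding scheme (assumption): raw scores are 1 (correct),
# 0 (incorrect) or None (omitted); "NA" marks "not administered".
NA = "NA"
Raw = Dict[str, List[Optional[int]]]
Coded = Dict[str, List[Union[int, str]]]


def code_omissions(responses: Raw, mcar_retained: Dict[str, bool]) -> Coded:
    """Recode omitted responses item by item.

    mcar_retained[item] is the (hypothetical) outcome of the per-item test:
    True  -> MCAR retained, omissions coded as not administered (NA);
    False -> MCAR rejected (MNAR), omissions coded as incorrect (0).
    Observed scores (0 or 1) are copied unchanged.
    """
    coded: Coded = {}
    for item, scores in responses.items():
        replacement: Union[int, str] = NA if mcar_retained[item] else 0
        coded[item] = [replacement if s is None else s for s in scores]
    return coded


def recode_shares(raw: Raw, coded: Coded) -> Tuple[float, float]:
    """Return the shares of omitted responses recoded as 0 and as NA."""
    to_zero = to_na = 0
    for item, scores in raw.items():
        for before, after in zip(scores, coded[item]):
            if before is None:  # only originally omitted responses count
                if after == NA:
                    to_na += 1
                else:
                    to_zero += 1
    total = to_zero + to_na
    if total == 0:
        return 0.0, 0.0
    return to_zero / total, to_na / total


if __name__ == "__main__":
    # Invented toy data: two items, five omissions in total.
    raw = {"i1": [1, None, 0, None], "i2": [None, 1, None, None]}
    coded = code_omissions(raw, {"i1": False, "i2": True})
    print(coded)                      # {'i1': [1, 0, 0, 0], 'i2': ['NA', 1, 'NA', 'NA']}
    print(recode_shares(raw, coded))  # (0.4, 0.6)
```

The second helper reports the same kind of summary as the 61% / 39% split given in the abstract; the toy input is invented and unrelated to the study data. Observed responses are never altered, so the sketch only ever changes how omissions enter subsequent IRT scaling.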

Notes

At this point, we discuss missing responses at a general level. A distinction between the types “missing by design”, “not reached”, and “omitted” is introduced later in the text in the section “Types of Missing Item Responses”.

References

Arbuckle JL (1996) Full information estimation in the presence of incomplete data. In: Marcoulides GA, Schumacker RE (eds) Advanced structural equation modeling. Lawrence Erlbaum Publishers, Mahwah, pp 243–277

Champely S (2017) pwr: basic functions for power analysis [software]. R package version 1.2-1

Chen HY, Little RJA (1999) A test of missing completely at random for generalised estimating equations with missing data. Biometrika 86:1–13

R Core Team (2017) R: a language and environment for statistical computing [software]. R Foundation for Statistical Computing, Vienna

De Ayala RJ (2009) The theory and practice of item response theory. The Guilford Press, New York

De Ayala RJ, Plake BS, Impara JC (2001) The impact of omitted responses on the accuracy of ability estimation in item response theory. J Educ Meas 38:213–234

Diggle PJ (1989) Testing for random dropouts in repeated measurement data. Biometrics 45:1255–1258

Enders CK (2010) Applied missing data analysis. Guilford Press, New York

Enders CK, Bandalos DL (2001) The relative performance of full information maximum likelihood estimation for missing data in structural equation models. Struct Equ Model 8:430–457

Engelhardt L, Naumann J, Goldhammer F, Frey A, Wenzel SFC, Hartig K, Horz H (2018) Convergent evidence for validity of a performance-based ICT skills test. Eur J Psychol Assess

Finch H (2008) Estimation of item response theory parameters in the presence of missing data. J Educ Meas 45:225–245

Frey A, Hartig J, Rupp A (2009) Booklet designs in large-scale assessments of student achievement: theory and practice. Educ Meas: Issues Pract 28:39–53

Glas CAW, Pimentel J (2008) Modeling nonignorable missing data in speeded tests. Educ Psychol Measur 68:907–922

Goldhammer F, Kroehne U (2014) Controlling individuals’ time spent on task in speeded performance measures: experimental time limits, posterior time limits, and response time modeling. Appl Psychol Meas 38:255–267

Goldhammer F, Martens T, Lüdtke O (2017) Conditioning factors of test-taking engagement in PIAAC: an exploratory IRT modelling approach considering person and item characteristics. Large-scale Assess Educ 5(1):18

Hedges LV (1981) Distribution theory for Glass’s estimator of effect size and related estimators. J Educ Stat 6:107–128

Holman R, Glas CAW (2005) Modelling non-ignorable missing data mechanisms with item response theory models. Br J Math Stat Psychol 58:1–17

International ICT Literacy Panel (2002) Digital transformation: a framework for ICT literacy. Princeton, NJ. http://www.ets.org/research/policy_research_reports/publications/report/2002/cjik

Kim KH, Bentler PM (2002) Tests of homogeneity of means and covariance matrices for multivariate incomplete data. Psychometrika 67:609–624

Köhler C, Pohl S, Carstensen CH (2015) Taking the missing propensity into account when estimating competence scores: evaluation of item response theory models for nonignorable omissions. Educ Psychol Measur 75:850–874

Kong XJ, Wise SL, Bhola DS (2007) Setting the response time threshold parameter to differentiate solution behavior from rapid-guessing behavior. Educ Psychol Measur 67:606–619

Lee Y-H, Chen H (2011) A review of recent response-time analyses in educational testing. Psychol Test Assess Model 53:359–379

Little RJA (1988) A test of missing completely at random for multivariate data with missing values. J Am Stat Assoc 83:1198–1202

Little RJA, Rubin DB (2002) Statistical analysis with missing data, 2nd edn. Wiley, Hoboken

Lord FM (1974) Estimation of latent ability and item parameters when there are omitted responses. Psychometrika 39(2):247–264

Ludlow LH, O’Leary M (1999) Scoring omitted and not-reached items: practical data analysis implications. Educ Psychol Measur 59:615–630

Mislevy RJ, Wu PK (1996) Missing responses and IRT ability estimation: omits, choice, time limits, and adaptive testing (RR-96-30-ONR). Educational Testing Service, Princeton

Neyman J, Pearson ES (1933) On the testing of statistical hypotheses in relation to probability a priori. Proc Camb Philos Soc 29:492–510

O’Muircheartaigh C, Moustaki I (1999) Symmetric pattern models: a latent variable approach to item non-response in attitude scales. J R Stat Soc, Ser A 162:177–194

OECD (2016) Technical report of the survey of adult skills (PIAAC), 2nd edn. Author, Paris

Robitzsch A (2016) Zu nichtignorierbaren Konsequenzen des (partiellen) Ignorierens fehlender item responses im large-scale assessment [On non-negligible consequences of (partially) ignoring missing item responses in large-scale assessments]. In: Suchań B, Wallner-Paschon C, Schreiner C (eds) PIRLS & TIMSS 2011 – die Kompetenzen in Lesen, Mathematik und Naturwissenschaft am Ende der Volksschule: Österreichischer Expertenbericht. Leykam, Graz, pp 55–64

Robitzsch A, Kiefer T, Wu M (2018) TAM: test analysis modules [software]. R package version 2.9-35

Rose N, von Davier M, Xu X (2010) Modeling non-ignorable missing data with IRT (ETS Research Report No. RR-10-11). Educational Testing Service, Princeton

Rubin DB (1976) Inference and missing data. Biometrika 63:581–592

Rubin DB (1987) Multiple imputation for nonresponse in surveys. Wiley, New York

Rutkowski L, Gonzalez E, von Davier M, Zhou Y (2014) Assessment design for international large-scale assessments. In: Rutkowski L, von Davier M, Rutkowski D (eds) Handbook of international large-scale assessment: background, technical issues, and methods of data analysis. CRC Press, Boca Raton, pp 75–95

Satterthwaite FE (1946) An approximate distribution of estimates of variance components. Biometr Bull 2:110–114. https://doi.org/10.2307/3002019

van der Linden WJ (2009) Conceptual issues in response-time modeling. J Educ Meas 46:247–272

Warm TA (1989) Weighted likelihood estimation of ability in item response theory. Psychometrika 54:427–450

Weeks JP, von Davier M, Yamamoto K (2016) Using response time data to inform the coding of omitted responses. Psychol Test Assess Model 58:671–701

Welch BL (1947) The generalization of “Student’s” problem when several different population variances are involved. Biometrika 34:28–35. https://doi.org/10.1093/biomet/34.1-2.28

Wenzel SFC, Engelhardt L, Hartig K, Kuchta K, Frey A, Goldhammer F, Naumann J, Horz H (2016) Computergestützte, adaptive und verhaltensnahe Erfassung Informations- und Kommunikationstechnologie-bezogener Fertigkeiten (ICT-Skills) [Computerized adaptive and behaviorally oriented measurement of information and communication technology-related skills (ICT skills)]. In: Bundesministerium für Bildung und Forschung (BMBF) Referat Bildungsforschung (ed) Forschungsvorhaben in Ankopplung an Large-Scale Assessments [Research projects linked to large-scale assessments]. Silber Druck, Niestetal, pp 161–180

Wilkinson L, Task Force on Statistical Inference, American Psychological Association, Science Directorate (1999) Statistical methods in psychology journals: guidelines and explanations. Am Psychol 54:594–604. https://doi.org/10.1037/0003-066X.54.8.594

Wise SL, Kingsbury GG (2016) Modeling student test-taking motivation in the context of an adaptive achievement test. J Educ Meas 53:86–105

Wise SL, Ma L (2012) Setting response time thresholds for a CAT item pool: the normative threshold method. Paper presented at the annual meeting of the National Council on Measurement in Education, Vancouver, Canada

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

About this article

Cite this article

Frey, A., Spoden, C., Goldhammer, F. et al. Response time-based treatment of omitted responses in computer-based testing. Behaviormetrika 45, 505–526 (2018). https://doi.org/10.1007/s41237-018-0073-9

DOI: https://doi.org/10.1007/s41237-018-0073-9