Abstract

Multi-objective particle swarm optimization algorithms encounter significant challenges when tackling many-objective optimization problems. This is mainly because of the imbalance between convergence and diversity that occurs when increasing the selection pressure. In this paper, a novel adaptive MOPSO (ANMPSO) algorithm based on R2 contribution and adaptive method is developed to improve the performance of MOPSO. First, a new global best solutions selection mechanism with R2 contribution is introduced to select leaders with better diversity and convergence. Second, to obtain a uniform distribution of particles, an adaptive method is used to guide the flight of particles. Third, a re-initialization strategy is proposed to prevent particles from trapping into local optima. Empirical studies on a large number (64 in total) of problem instances have demonstrated that ANMPSO performs well in terms of inverted generational distance and hyper-volume metrics. Experimental studies on the practical application have also revealed that ANMPSO could effectively solve problems in the real world.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Many-objective optimization problems (MaOPs) are widely applied to many fields in the real world, such as water supply portfolio planning [1], crash safety design of vehicles [2], and car cab design with preference information [3]. Due to the conflicting characteristics of these objectives, it is difficult to find an optimal solution that fits all objectives. Therefore, it is obvious that the key to solve the MaOPs lies in finding a set of trade-off solutions [4, 5].

To tackle MaOPs, many nature-inspired heuristic algorithms are proposed in recent years (NSGA-II [6], NSGA-III [7], MOPSO [8], and MOEA/D [9]). However, the effectiveness of these MOEAs deteriorate dramatically when dealing with MaOPs. Therefore, a number of redesigned MOEAs have sprung up, including RPD-NSGAII [10], NSGAII-SDR [11], RVEA [12], SRA [13], MOMBI-II [14] and IEA [15].

In addition to the MOEAs mentioned above, multi-objective particle swarm optimization (MOPSO) attracts many people because of their highly competitive performance. Moreover, according to the results from [16], MOPSO is able to cover the full Pareto Front of the test functions and converge fast. In addition, the swarm intelligence algorithms like MOPSO are also useful in practical applications. For example, a hybrid discrete artificial bee colony algorithm is presented to solve a blocking lot-streaming flow shop (BLSFS) scheduling problem. This algorithm adopts three initialization strategies to improve the quality of the initial population [17]. An evolutionary multi-objective robust scheduling algorithm is proposed to tackle the new model of BLSFS [18]. The innovation of this algorithm is the suggestion of two novel crossover operations. The experimental studies have shown that the swarm intelligence algorithm is effective in solving practical problems.

However, the balance between convergence and diversity is still a particular issue to be addressed about MOPSOs. The convergence depends on the ability of particles to approach the true Pareto Front. Many algorithms increase selection pressure to improve convergence. Nevertheless, the diversity of solutions may decrease if the focus is only put on improving convergence. Thus, how to balance convergence and diversity for MOPSOs is a challenge. Many MOPSOs have been redesigned to deal with this problem. For example, a competitive mechanism is suggested in CMOPSO to improve diversity [19]. The domination-based strategy and decomposition-based strategy are combined in D2MOPSO, and it deletes the external archive [20]. A balanceable fitness estimation (BFE) method is proposed to improve both convergence and diversity in NMPSO, which updates the external archive by calculating the convergence distance and diversity distance [21]. The multi-group multi-objective collaborative optimization framework proposed by Zhan et al. optimizes each objective function through sub-populations [22]. This algorithm sets up an archive maintenance mechanism based on Pareto to solve MOPs [22]. A “belief space” is developed to adjust the parameters of the velocity formula [23]. The random immigrants’ strategy is first introduced into MOPSOs to increase diversity and to prevent particles from falling into local optima [24]. These algorithms are proposed to balance convergence and diversity by improving diversity or the searching ability, which can increase the robustness of these algorithms. While, only increasing randomness cannot bring a qualitative leap to the overall performance of algorithms. Furthermore, it is difficult to ensure that convergence does not decrease while increasing diversity.

In fact, since the global best solution (gbest) and personal best solution (pbest) guide the evolutionary search. The key of the balance between convergence and divergence lies in the performance of two leaders. According to this, some algorithms build a new mechanism of global best solutions selection. A new parallel coordinates system is proposed in [25]. This algorithm selects leaders by setting a leader group. A hybrid framework of the solution distribution entropy is adopted to select global best solutions in AMOPSO [26]. In addition, an adaptive strategy is used to update the flight parameters in this algorithm. The multi-competition leader selection is developed in a modified competitive mechanism multi-objective particle swarm [27]. The extreme solutions are proposed based on Pareto dominance in f-MOPSO/Div algorithm [28]. A framework of the grid-based ranking scheme is introduced to select leaders, which is designed for MaOPs [29]. These algorithms above mainly use the Pareto-based strategy. However, the disadvantage of these algorithms is that they may be unsuitable for tackling MaOPs. The main reason is that the gbest with poor performance impedes the convergence of the algorithm unavoidably. In light of this dilemma, the indicator-based strategy is suggested into MOPSOs, such as MOPSOhv [30], R2-MOPSO [31], R2SMMOPSO [32], IDMOPSO [33], and so on. The contributions of indicators are applied to select gbest in these algorithms. It is reported that desirable properties make the R2 indicator as a viable candidate to be incorporated into an indicator-based algorithm. There is a defect that some algorithms among them delete the external archive, which may lead to insufficient diversity. Meanwhile, the indicator of hyper-volume (HV) is likely to cause the increase of computational complexity.

Generally, these variant MOPSOs achieve promising results in solving problems with 2–3 objectives because of the outstanding heritages of PSO. There are a few approaches designed for MaOPs, these redesigned MOPSOs may suffer from effectiveness deterioration on the balance between convergence and diversity when solving MaOPs with complex Pareto Front. The poor performance is mainly induced by the traditional gbest selection mechanism. Meanwhile, a uniform distribution of solutions relies on the leaders and the searching ability of particles. Therefore, it is crucial to modify the flight of particles. Besides, most of them ignore the fact that the fast convergence rate may become a disadvantage of MOPSOs if it is not controlled effectively.

Motivated by these problems, this paper introduces the R2 contribution and an adaptive strategy into the novel multi-objective particle swarm (ANMPSO) to balance convergence and diversity. Three improvements are mainly proposed in this paper to respond to the current problems encountered by MOPSOs:

-

1.

The global best selection solutions mechanism that uses the R2 contribution is introduced to select the gbest with better convergence and diversity.

-

2.

The adaptive strategy that uses the population spacing information to adjust parameters is proposed to improve the distribution of particles with appropriate diversity and convergence.

-

3.

The updating of particle age is adopted to prevent particles from trapping into local optima.

The remaining parts of this paper are as follows. Some basic knowledge before this study is briefly described in the next section. The algorithm framework of ANMPSO and the details of each improvement are introduced in the third section. The experimental results compared with other algorithms are depicted in the fourth section. Finally, the conclusion and future works are described in the last section.

Basic knowledge

Many-objective problem

Without loss of generality, MaOP consists of more than three conflicting objectives [34]. A MaOP can be stated as

where m is the number of objectives, x is the decision vector, and fi(x) is the ith objective function.

If a decision vector y is dominated by another decision vector z, referred to as z dominates y or \({\text{z}} \prec {\text{y}}\), which can be expressed as

where i, j = 1, 2, …, m. In MaOP, if a solution is not dominated by any other solution, it can be referred to as a Pareto Optimal Solution. The corresponding objective vector set of the Pareto Set is called Pareto Front (PF).

Many-objective particle swarm optimization

Many-objective particle swarm optimization (MOPSO) is proposed in [8]. In the evolutionary process, pbest represents the personal best solution, and gbest represents the global best solution. A gbest can be found by the whole particle swarm. In this paper, a new velocity updating equal is adopted [21]. In each iteration, the new velocity and the new position is updated by

where t represents the tth iteration in the evolutionary process; \(x_{{{\text{pbest}}_{i} }}\) and \(x_{{{\text{gbest}}_{i} }}\) are the positions of pbest and gbest; ω is the inertia weight; c1, c2, and c3 are learning factors; r1, r2, and r3 are the random values uniformly distributed in [0, 1].

R2 indicator and R2 contribution

R2 indicator and R2 contribution are used to assess the relative quality of two sets of individuals, which are proposed in [35, 36]. The Tchebycheff Scalarizing Function in MOEA/D is employed as the utility function in this paper [9].

Assuming that a standard weighted Tchebycheff function has a particular reference point z*, the indicator can be used to assess the quality of a single individual set (A) against z*:

where W indicates a set of weight vectors. Each weight vector w = (w1, w2,…, wM) ∈ W is placed in the M-dimensional objective space. \(\frac{\text{1}}{\left|\text{W}\right|}\) denotes a probability distribution on W. For MaOPs, the weight vectors are randomly initialized in such a way that the sum of each weight vector is equal to one in the objective space.

The contribution of a solution x ∈ A to the R2 indicator is defined as

Balanceable fitness estimation

The balanceable fitness estimation (BFE) is proposed in [21]. This method is mainly used to define the fitness value of a particle by weights, which has a better performance when updating the external archive and selecting the global best solutions. Thus, this method is followed and combined with the R2 contribution in this paper. The main formula is as follows:

where \(Cd(p_{i} {\text{,}}P)\) denotes the normalized diversity distances and \(Cv(p_{i} {\text{,}}P)\) indicates the convergence distances of \(p_{i}\). α and β are two factors that mitigate the impact of diversity distances and convergence distances. Specific details can refer to [21].

The proposed algorithm framework

The framework of ANMPSO is proposed in this section. Five parts are, respectively, described in the following subsections: select global best solutions, adaptive strategy, reinitialize, update external archive, and the evolutionary search on the external archive. Then, a complete algorithm of ANMPSO is proposed.

Select global best solutions

The gbest selection mechanism is a critical step for the entire algorithm. The convergence, diversity, and effectiveness of the algorithm are affected by the selection of leaders. The BFE method assigns BFE value to each non-dominated solution in the external archive using the weight method. The gbest of this method is chosen equally from the top 10% of individuals in the external archive with better BFE values. To further improve diversity and convergence, R2 contribution is introduced in this paper. R2 contribution is to measure the relative quality of two approximations of the PF based on utility functions that map a vector \(\vec{y} \in \Re ^{k}\) to a scalar value \(u \in \Re\).

The utility function adopted by this paper is the Tchebycheff Function, which can be defined as

where \(\omega = {(\omega _{1} ,...,\omega _{k} )} \in W\) is a weight vector, and z* is an ideal point (an objective vector that is not dominated by any point).

R2 contribution can be calculated using the utility function and the equal (6). According to the BFE value and R2 contribution to select leaders in the external archive. The detailed steps of the algorithm are described as follows:

-

1.

Calculate the BFE values of all the individuals in the external archive, and mark the top 10% of individuals with better BFE values.

-

2.

Calculate the R2 contributions of marked individuals.

-

3.

Select the individuals which have the maximum contribution value of R2 and set as gbest.

The pseudo-code is set in Algorithm 1.

Adaptive strategy

Parameters are essential factors in the flying process of particles. The parameters can directly affect the searching ability of particles by adjusting the flying speed and the directions of the particles. Better distribution is determined by the abilities of exploitation and exploration. Most existing works on MOPSOs show that the larger ω and c1, and the smaller c2 would lead to better global exploration. The opposite situation would promote the ability of local exploitation. To improve the distribution of particles, an adaptive method of adjusting parameters is proposed in this paper to change the flying directions of particles. The distance information between particles is used to build a non-linear function. The value of the distance between the adjacent particles and the ith particle at (t + 1)th iteration is defined as

where li (t + 1) is the minimum Manhattan distance between the ith particle and the other particles, \(\bar{l}(t + 1)\) is the average minimum Manhattan distance of all particles. Here, a function is used to better describe the flight process of particles:

where µ (t + 1) is the non-linear adaptive function of the flight process. Then, the proposed adaptive strategy for flight parameters ω, c1, c2, and c3 can be expressed by

Reinitialize

In this paper, a regular dominance strategy is used to update pbest. pbest could be updated if the current position of particle has dominated the pbest. Otherwise, the pbest keep constantly. Considering that the position of a particle remains unchanged, it may fall into local optima. Thus, a re-initialization strategy is proposed in this paper. The detailed steps are as follows:

-

1.

The definition of a particle’s age is quoted and the initial value is set to 0.

-

2.

The maximum value Ta is set to 2.

-

3.

If the pbest is not updated, the age of a particle would increase by one year. Once the age of a particle is over the value of Ta, the particle may trap into local optima. Equal (15) is used to do re-initialization in this situation:

$${{x}}_{{i}}^{{t}{+1}}{\sim}{N}{\Bigg(}\frac{{{x}}_{{{gbest}}_{{i}}}{-}{{x}}_{{{pbest}}_{{i}}}}{{2}}{,|}{{x}}_{{{gbest}}_{{i}}}{-}{{x}}_{{{pbest}}_{{i}}}{|\Bigg).}$$(15)

Update external archive

The external archive of MOPSO has a threshold value. When the number of non-dominated solutions reaches this threshold value, the external archive must be updated. The BFE method is used in this paper to evaluate the qualities of the solutions by estimating the fitness value of the particles. When the archive needs to be updated, the solution with the smallest BFE value must be deleted.

Evolutionary search on the external archive

After the first few steps, some non-dominated solutions are remained in the external archive as leaders to guide the flight of other particles. To maximize the qualities of the solutions, the simulated binary crossover (SBX) and polynomial-based mutation (PM) are used in ANMPSO to perform an evolutionary search in the external archive. They are widely used in MOEAs to solve MOPs [22] and are expanded for MaOPs in this paper. Two searching modes are conducive to finding better solutions.

The complete algorithm of ANMPSO

The previous five parts contain all the steps of ANMPSO, select global best solutions, adaptive strategy, reinitialize, update external archive, and evolutionary search on the external archive. The detailed pseudo-code of the algorithm is described in Algorithm 3. An initialization process is executed from line 1 to line 6. At the start of the optimization cycle, the velocities of particles are set to 0, and the positions are initialized randomly. Line 8 executes the selection of gbest, with details shown in Algorithm 1. Then, the algorithm steps into the main loop. Lines 12–13 are the processes of updating the velocities and the positions of particles using an adaptive strategy. Lines 14–19 represent the re-initialization process. The main loop includes lines 9–16. After that, the dominance relationship will be checked to update the pbest based on Pareto. Line 20 is the process of updating the external archive using the BFE method. The evolutionary search strategy is applied on A to get a new swarm S, which provided another searching mode. At last the final solutions in A are reported as the Pareto Front.

Experimental results

Test functions

In this section, a specific experimental process is discussed. DTLZ1–DTLZ7 [37] and WFG1–WFG9 [38] test functions with 4, 6, 8, and 10 objectives are used to evaluate the comprehension performance of ANMPSO. For DTLZ1–DTLZ7, the number of decision variables is set as D = m + k−1, where m represents the objective number. As suggested in [37], k = 5 for DTLZ1 and k = 10 for DTLZ2–DTLZ7. For WFG1–WFG9, the number of decision variables D is set as 24. Where the position-related parameters K = 2 × (m − 1), and distance-related parameters L = 20 [13, 21].

Performance metrics

The goal of the MaOPs is to minimize the distance between a set of solutions and true PF. To evaluate the performance of the algorithms under consideration, the comprehensive indicators of inverted generation distance (IGD) [39] and HV [40] are used in this paper. They are widely adopted in evaluating the performance of MOEAs [7, 41].

A smaller IGD value means that the solutions are closer to the PF. The definition of IGD is

where the \(\bar{d}_{i}\) indicates the Euclidean distance between the solution i in Pareto-optimal set to the nearest solution in P. The \(\bar{N}\) is the size of the Pareto-optimal set. Similar to [21], for calculating IGD, 200,000 reference points on the Pareto Front of each test instance are sampled by a systematic approach [39, 42].

The HV indicator measures the volume solutions that are dominated by the approximate set. A larger HV value means a better performance of algorithm. The definition of HV is

where L is the Lebesgue measure, z* is the reference point in the objective space. According to [21], the z* is set as (1.0, 1.0, …, 1.0) for calculating HV. As recommended in [13], all the objectives are normalized using the vector 1.1 × (f1 max, f2 max, …, fm max), where fk max (k = 1, 2, …, m) is the maximum value of the kth objective in the true PF.

Algorithms and parameter settings

In this paper, seven state-of-the-art MOEAs (GrEA [43], RVEA [12], NMPSO [21], SPEA2-SDE [44], Two-Arch2 [45], SRA [13], and RPD-NSGAII [10]) are selected for a comparative experimental study. Each one is detailed as follows:

GrEA [43]: In GrEA, grid dominance that determines the mutual relationship of individuals is proposed to balance convergence and diversity. Numerical studies show the typicality of this algorithm.

RVEA [12]: RVEA uses reference vectors to decompose a multi-objective problem into several single-objective sub-problems. The term angle penalized distance is suggested to balance convergence and diversity. Experimental studies show that RVEA is highly competitive.

NMPSO [21]: NMPSO is a novel approach, which proposes the concept of balanced fitness value to increase the selection pressure and introduces the weighting method. NMPSO has a better performance in solving MaOPs compared with other MOPSOs.

SPEA2-SDE [44]: To improve the convergence, SPEA2-SDE proposes the shifted distance estimation approach. Because of the particularity of this method, SPEA2-SDE is comparable.

Two-Arch2 [45]: To balance the convergence, diversity, and complexity in solving MaOPs, particles are divided into two archives: convergence-related and diversity-related namely. This algorithm could resolve MaOPs (up to 20 objectives) with satisfactory performance.

SRA [13]: SRA adopts the stochastic ranking technique to balance the searching biases of different indicators. It is a typical indicator-based algorithm.

RPD-NSGAII [10]: RPD-NSGAII proposes a novel decomposition-based dominance relation to solve MaOPs, which could cope with MaOPs with up to 20 objectives.

The individual parameter settings for each algorithm in this paper are set according to their respective papers. However, due to the weight vector, the objective numbers are set the same as ANMPSO. As proposed in [40], the number of objectives is 165, 252, 330, and 275. The maximum number of evaluations in this paper is set to 105. These algorithms run 30 times to get the average values of IGD and HV.

Moreover, Wilcoxon’s sum test is implemented on the PlatEMO [46]. The results with a significance level of 0.05 obtained on the PlatEMO are used to analyze results. Where “+”, “-”, and “=” represent that the results obtained by other algorithms, respectively, mean “significantly better than”, “significantly worse than”, and “practically similar to” the one obtained by ANMPSO using this statistical test (Table 1).

Comparisons of ANMPSO with state-of-the-art algorithms

Comparisons results on DTLZ1–DTLZ7

Table 2 shows the IGD comparison results of ANMPSO for seven current MOEAs on DTLZ1–DTLZ7 with 4–10 objectives. As observed from Table 2, the proportion of the proposed ANMPSO algorithm in the test instances outperforms GrEA, RVEA, NMPSO, SPEA2-SDE, Two-Arch2, SRA, and RPD-NSGAII with a respective statistical significance of 12/28, 25/28, 24/28, 14/28, 10/28, 21/28, and 11/28. These results are summarized in the last row of Table 2.

The performances of algorithms vary not only with performance metrics but also with problem categories. For DTLZ1 and DTLZ3 problems, GrEA and RPD-NSGAII achieve the best IGD values, respectively. ANMPSO ranks 3rd, as it outperforms other algorithms. For the DTLZ2 problem, ANMPSO is superior than RVEA, NMPSO, SPEA2-SDE, and SRA, has achieved moderate performance in terms of IGD. For the DTLZ4 problem with large “hill sizes”, the main challenge of maintaining diversity of the population is caused by a non-uniform density of solutions. Many algorithms cannot jump out of the local optima for DTLZ4. ANMPSO performs best for IGD in this problem because that the re-initialization strategy can prevent particles from falling local optima. For the DTLZ5 problem, Two-Arch2 ranks 1st, and SRA is second only to Two-Arch2. Although this problem is degenerate, ANMPSO has the best performance, indicating that ANMPSO can solve problems with complex true PF. For the DTLZ6 problem, ANMPSO beats GrEA, RVEA, and SRA. Its performance is similar to SPEA2-SDEs. For the DTLZ7 problem, ANMPSO also shows a superior performance than other compared algorithms.

In Table 3, the comparative results of ANMPSO and seven state-of-the-art algorithms are listed for DTLZ1–DTLZ7 with 4, 6, 8, and 10 objectives using HV. Similar to the conclusions observed from the IGD comparison results, ANMPSO is the best-performing algorithm. Many compared algorithms cannot obtain the HV values when tackling DTLZ1 and DTLZ3 because they are multi-modal problems. According to HV metric values, it can be observed that ANMPSO performs better than other algorithms on DTLZ2 and DTLZ5 problems.

Figure 1 shows the curves of IGD metric values on DTLZ4 and DTLZ7 with 10 objectives. As can be seen from Fig. 1a, b, even if the curve changes of ANMPSO, NMPSO, and SPEA2-SDE are similar, ANMPSO has an obvious advantage over other algorithms and it obtains the best IGD values. As can be seen from Fig. 1b, although the curve of RVEA shows a rapid downward trend in the early stage, it begins to rise slightly in the later stage. The curve’s trend of Two-Arch2 shows that the convergence speed of this algorithm is slow, and the IGD value is worse than that of ANMPSO. The curves of SPEA2-SDE and NMPSO are barely fluctuated during the later iteration. This situation demonstrates that these algorithms probably fall into local optima. Generally, by comparing the results, ANMPSO has excellent performance compared these MOEAs.

Comparisons results on WFG1–WFG9

Tables 4 and 5 show the results of comparisons between ANMPSO and seven typical MOEAs on WFG1–WFG9 benchmark functions. From the results of Table 4, ANMPSO won 22 times out of 36 comparisons and won 21 times out of 36 comparisons on Table 5.

According to the results in Table 4, regarding the WFG1 with a mixed front, ANMPSO is better than GrEA and SPEA2-SDE. Both RVEA and SRA have worse results. The WFG2 has a disconnect and convex front, ANMPSO outperforms other algorithms. The results of ANMPSO show a strong competitiveness for the WFG3 with a linear and degenerate front. The reason why ANMPSO performs well is that the adaptive strategy maintains the diversity in a high-dimensional space. The WFG4–9 problems have concave fronts. Among these comparative results, ANMPSO obtains the second-best results.

From Table 5, the comparison results of ANMPSO and seven state-of-the-art algorithms are listed for WFG1–WFG9 using HV. Observed from the HV comparison of results, the advantages of ANMPSO are evident. ANMPSO obtains the best results on 21 out of 36 comparisons. While GrEA, NMPSO, SPEA2-SDE, Two-Arch2, and RPD-NSGAII, respectively, perform the best on 1, 1, 2, 3, and 8 comparisons, RVEA and SRA do not have the best results.

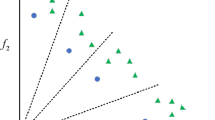

Figures 2 and 3 show parallel coordinates of the solution sets on the WFG3 and WFG5 for 10 objectives with the best IGD values among all the 30 runs and 105 evaluations. In two figures, each polyline in the graph represents a solution, and each vertex on the polyline represents an objective value. The WFG3 has a linear and convex front to detect whether MOEAs have better performances. It can be observed from Fig. 2 that ANMPSO can completely cover the whole true PF with a competitive diversity. ANMPSO is better than SPEA2-SDE, Two-Arch2, SRA, and RPD-NSGAII. Besides, RVEA is relatively better than ANMPSO, since it is more evenly distributed. As observed from Fig. 3, the result of ANMPSO also shows an excellent performance with covering the true PF entirely. This uniform distribution is credit to parameter adjustments and the guidance of gbest. The local exploitation ability and global exploration ability are improved with adaptive strategy.

Application to the crash safety design of vehicles

To verify the availability and practicability of the proposed ANMPSO algorithm, it is applied to optimize the problem of the crash safety design of vehicles. This problem aims to optimize the crash-worthiness of vehicle frontal structure. Considering the lightweight, the mass of the vehicle is set as the first objective. The second objective is to select an integration of collision acceleration in the “full frontal crash”. Considering the most severe mechanical damage, the third objective is set as the toe board intrusion in the “offset-frontal crash”. The nature of the Pareto-optimal Front is complex with holes and the density of its points is variable.

As such, the multi-objective optimization problem is formulated as

where x = (t1, t2, t3, t4, t5)T, \(A_{{{\text{in}}}} = \int_{{t_{1} }}^{{t_{2} }} {a{\text{d}}t}\). The detailed information can be found in [2].

For this problem, all objectives are to be minimized. The maximum number of iterations is set to 1000 and the population size is set to 100. In this problem, the number of decision variables D is 5. To make a fair comparison, all the experiments are run 10 times with different random seeds, and the average IGD and HV values in 10 runs are collected for comparison. The comparison results are presented in Table 6.

From Table 6, it can be observed that ANMPSO, Two-Arch2 and RPD-NSGAII achieve competitive performances. The results of Two-Arch2 and RPD-NSGAII are slightly better than ANMPSO. RVEA, NMPSO, and SRA are significantly worse than ANMPSO, because they do not even find non-dominated solutions. This poor performance is mainly due to the favor of poorly converged individuals in regions where the objective space is less crowded. The reason why ANMPSO outperformed these algorithms is that it is suitable for tackling this problem with disconnect PF. The special gbest selection mechanism can guide particles close to the true PF.

Conclusions

In this paper, ANMPSO is proposed to balance convergence and diversity which has a competitive performance. It can effectively select gbest and obtain a better distribution in the searching space. The proposed algorithm can prevent particles from trapping into local optima. Numerical experimental results prove that the proposed ANMPSO can obtain excellent performances in the two sets of MaOP benchmark functions and the practical crash safety design of vehicles.

Some advantages of ANMPSO can be summarized from the experimental results: (1) By analyzing the comparison results, the comparisons between ANMPSO and GrEA, RVEA, NMPSO, SPEA2-SDE, Two-Arch2, SRA, and RPD-NSGAII evident that ANMPSO obtains the best IGD values and HV values, especially in the DTLZ4, DTLZ7, WFG3, and WFG5 test functions. However, from the HV comparison results on DTLZ1 and DTLZ3 problems, ANMPSO cannot effectively tackle the multi-modal problem. (2) By comparing with the curves of IGD metric values on the DTLZ4 and DTLZ7 with 10 objectives, it can be seen that ANMPSO has higher convergence speed and precision during the whole iteration process. (3) By comparing with the non-dominance solution of these compared algorithms, it can be observed that ANMPSO can fully cover the true PF. (4) By optimizing the practical problem of crash safety design of vehicles, it is found that ANMPSO can efficiently solve this problem.

Although ANMPSO performs well in MaOPs, it has some drawbacks in solving multi-modal problems. In addition, the performance of ANMPSO on other application problems with more objectives in the real world needs to be studied in the future.

References

Praditwong K, Harman M, Yao X (2011) Software module clustering as a multi-objective search problem. IEEE Trans Softw Eng 37:264–282

Liao X, Li Q, Yang X, Zhang W, Li W (2008) Multiobjective optimization for crash safety design of vehicles using stepwise regression model. Struct Multidiscip Optim 35:561–569

Deb K, Gupta S, Daum D, Branke J, Padmanabhan D (2009) Reliability-based optimization using evolutionary algorithms. IEEE Trans Evol Comput 13:1054–1074

Coello CAC, Brambila SG, Gamboa JF, Tapia MGC, Gómez RH (2020) Evolutionary multiobjective optimization: open research areas and some challenges lying ahead. Complex Intell Syst 6:221–236

Gu Q, Wang Q, Xiong NN, Jiang S, Chen L (2021) Surrogate-assisted evolutionary algorithm for expensive constrained multi-objective discrete optimization problems. Complex Intell Syst 1:1–20

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE T Evol Comput 6:182–197

Deb K, Jain H (2013) An evolutionary many-objective optimization algorithm using reference-point-based nondominated sorting approach, part I: solving problems with box constraints. IEEE Trans Evol Comput 18:577–601

Coello CC, Lechuga MS (2002) MOPSO: a proposal for multiple objective particle swarm optimization. In: Paper presented at the Proceedings of the 2002 congress on evolutionary computation, Honolulu, HI, USA, pp 1051–1056

Zhang Q, Li H (2007) MOEA/D: a multiobjective evolutionary algorithm based on decomposition. IEEE Trans Evol Comput 11:712–731

Elarbi M, Bechikh S, Gupta A, Said LB, Ong YS (2018) A new decomposition-based NSGA-II for many-objective optimization. IEEE Trans Syst Man Cybern B 48:1191–1210

Tian Y, Cheng R, Zhang X, Su Y, Jin Y (2018) A strengthened dominance relation considering convergence and diversity for evolutionary many-objective optimization. IEEE Trans Evol Comput 23:331–345

Cheng R, Jin Y, Olhofer M, Sendhoff B (2016) A reference vector guided evolutionary algorithm for many-objective optimization. IEEE Trans Evol Comput 20:773–791

Li B, Tang K, Li J, Yao X (2016) Stochastic ranking algorithm for many-objective optimization based on multiple indicators. IEEE Trans Evol Comput 20:924–938

Hernández Gómez R, Coello Coello CA (2015) Improved metaheuristic based on the R2 indicator for many-objective optimization. In: Paper presented at the Proceedings of the 2015 annual conference on genetic and evolutionary computation, pp 679–686

Gong D, Sun J, Ji X (2013) Evolutionary algorithms with preference polyhedron for interval multi-objective optimization problems. Inf Sci 233:141–161

Coello CAC, Pulido GT, Lechuga MS (2004) Handling multiple objectives with particle swarm optimization. IEEE Trans Evol Comput 8:256–279

Gong D, Han Y, Sun J (2018) A novel hybrid multi-objective artificial bee colony algorithm for blocking lot-streaming flow shop scheduling problems. Knowl Based Syst 148:115–130

Han Y, Gong D, Jin Y, Pan Q (2017) Evolutionary multiobjective blocking lot-streaming flow shop scheduling with machine breakdowns. IEEE Trans Cybern 49:184–197

Xingyi Z, Xiutao Z, Ran C, Jianfeng Q, Yaochu JJ (2018) A competitive mechanism based multi-objective particle swarm optimizer with fast convergence. Inf Sci 427:63–76

Al Moubayed N, Petrovski A, McCall J (2014) D2MOPSO: MOPSO based on decomposition and dominance with archiving using crowding distance in objective and solution spaces. Evol Comput 22:47–77

Lin Q, Liu S, Zhu Q, Tang C, Song R, Chen J, Coello CAC, Wong KC, Zhang J (2016) Particle swarm optimization with a balanceable fitness estimation for many-objective optimization problems. IEEE Trans Evol Comput 22:32–46

Zhan ZH, Li J, Cao J, Zhang J, Chung SH, Shi YH (2013) Multiple populations for multiple objectives: a coevolutionary technique for solving multiobjective optimization problems. IEEE Trans Cybern 43:445–463

Daneshyari M, Yen GG (2011) Cultural-based multiobjective particle swarm optimization. IEEE Trans Syst Man Cybern B 41:553–567

Nal AN, Complex GK (2020) Multi-objective particle swarm optimization with random immigrants. Complex Intell Syst 6:635–650

Hu W, Yen GG, Luo G (2016) Many-objective particle swarm optimization using two-stage strategy and parallel cell coordinate system. IEEE Trans Evol Comput 47:1446–1459

Han H, Lu W, Qiao J (2017) An adaptive multiobjective particle swarm optimization based on multiple adaptive methods. IEEE Trans Cybern 47:2754–2767

Yuen MC, Ng SC, Leung MF (2020) A competitive mechanism multi-objective particle swarm optimization algorithm and its application to signalized traffic problem. Cybern Syst 52:1–32

Rezaei F, Safavi HR (2020) f-MOPSO/Div: an improved extreme-point-based multi-objective PSO algorithm applied to a socio-economic-environmental conjunctive water use problem. Environ Monit Assess 192:767–794

Li L, Li G, Chang L (2020) A many-objective particle swarm optimization with grid dominance ranking and clustering. Appl Soft Comput 96:1–11

Chaman Garcia I, Coello Coello CA, Arias-Montano (2014) MOPSOhv: a new hypervolume-based multi-objective particle swarm optimizer. In: Paper presented at 2014 IEEE congress on evolutionary computation, Beijing, China, pp 266–273

Li F, Liu J, Tan S, Yu X (2015) R2-MOPSO: a multi-objective particle swarm optimizer based on R2-indicator and decomposition. In: Paper presented at 2015 congress on evolutionary computation, Sendai, Japan, pp 3148–3155

Wei L, Li X, Fan R (2019) A new multi-objective particle swarm optimisation algorithm based on R2 indicator selection mechanism. Int J Syst Sci 50:1920–1932

Luo J, Huang X, Yang Y, Li X, Wang Z, Feng J (2020) A many-objective particle swarm optimizer based on indicator and direction vectors for many-objective optimization. Inf Sci 514:166–202

Gu Q, Chen H, Chen L, Li X, Xiong NN (2020) A many-objective evolutionary algorithm with reference points-based strengthened dominance relation. Inf Sci 554:236–255

Brockhoff D, Wagner T, Trautmann H (2015) R2 indicator based multiobjective search. Evol Comput 23:369–395

Suzuki J, Phan DH (2016) R2-IBEA: R2 indicator based evolutionary algorithm for multiobjective optimization. In: Paper presented at 2013 IEEE congress on evolutionary computation, Cancun, Mexico, pp 1836–1845

Deb K, Thiele L, Laumanns M, Zitzler E (2006) Scalable test problems for evolutionary multiobjective optimization. In: Paper presented at evolutionary multiobjective optimization, pp 105–145

Huband S, Barone LC, While L, Hingston PF (2005) A scalable multi-objective test problem toolkit. In: Paper presented at evolutionary multi-criterion optimization, third international conference, EMO 2005, Guanajuato, Mexico, pp 280–295

Bosman PA, Thierens D (2003) The balance between proximity and diversity in multiobjective evolutionary algorithms. IEEE Trans Evol Comput 7:174–188

Zitzler E, Thiele L (1999) Multiobjective evolutionary algorithms: a comparative case study and the strength Pareto approach. IEEE Trans Evol Comput 3:257–271

Ke Li, Kalyanmoy D, Qingfu Z (2014) An evolutionary many-objective optimization algorithm based on dominance and decomposition. IEEE Trans Evol Comput 19:694–716

Das I, Dennis JE (1998) Normal-boundary intersection: a new method for generating the Pareto surface in nonlinear multicriteria optimization problems. Siam J Optim 8:631–657

Yang S, Li M, Liu X, Zheng J (2013) A grid-based evolutionary algorithm for many-objective optimization. IEEE Trans Evol Comput 17:721–736

Li M, Yang S, Liu X (2014) Shift-based density estimation for pareto-based algorithms in many-objective optimization. IEEE Trans Evol Comput 18:348–365

Wang H, Jiao L, Yao X (2015) Two_Arch2: an improved two-archive algorithm for many-objective optimization. IEEE Trans Evol Comput 19:524–541

Tian Y, Cheng R, Zhang X, Jin Y (2017) PlatEMO: a MATLAB platform for evolutionary multi-objective optimization [educational forum]. IEEE Comput Intell Mag 12:73–87

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant no. 51774228 and Grant no. 52074205, in part by Shaanxi province fund for Distinguished Young Scholars under Grant no. 2020JC-44, in part by Joint Fund Project of Shaanxi Province Natural Science Basic Research Program under Grant no. 2019 JLP-16.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that there are no conflicts of interest regarding the publication of this paper, and the comparison results data used to support the findings of this study are available from the corresponding author upon request.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gu, Q., Jiang, M., Jiang, S. et al. Multi-objective particle swarm optimization with R2 indicator and adaptive method. Complex Intell. Syst. 7, 2697–2710 (2021). https://doi.org/10.1007/s40747-021-00445-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40747-021-00445-3