Abstract

In the era of Web 2.0, data are growing immensely and are assisting E-commerce websites in better decision-making. Collaborative filtering, one of the prominent recommendation approaches, performs recommendation by finding similarity. However, this approach fails in managing large-scale datasets. To mitigate this, an efficient map-reduce-based clustering recommendation system is presented. The proposed method uses a novel variant of the whale optimization algorithm, the tournament selection empowered whale optimization algorithm, to attain the optimal clusters. The clustering efficiency of the proposed method is measured on four large-scale datasets in terms of F-measure and computation time. The experimental results are compared with state-of-the-art map-reduce-based clustering methods, namely map-reduce-based K-means, map-reduce-based bat algorithm, map-reduce-based Kmeans particle swarm optimization, map-reduce-based artificial bee colony, and map-reduce-based whale optimization algorithm. Furthermore, the proposed method is tested as a recommendation system on the publicly available MovieLens dataset. The performance is measured in terms of mean absolute error, precision, and recall over different numbers of clusters. The experimental results assert that the proposed method is a promising approach for recommendation over large-scale datasets.

Introduction

Among the various web revolutions, the recommendation system is a prominent tool which is widely used by E-commerce websites to offer more personalized services to users. For example, a movie recommendation method suggests a list of movies that a specific user may prefer based on information retrieved from social media or on ratings made by other similar users [1]. Generally, a recommendation system follows one of two approaches, namely content-based filtering and collaborative filtering. In content-based filtering, each item is associated with a certain set of features which are rated differently by different users. This approach predicts the rating of the items on the basis of the user’s inputs [2, 3]. On the contrary, collaborative filtering works on the similarity among the users or items [4]. The performance of such recommendation systems is highly dependent on the similarity determination. Generally, clustering-based approaches are quite popular in the literature for determining the similarity [5].

K-means, a widely used clustering approach, has been used in a number of engineering domains for this purpose. However, K-means generates biased clusters due to its dependence on parameter settings and initial cluster centres [6]. To remedy this concern, meta-heuristic-based solutions have been widely employed to obtain optimal cluster centroids in the last two decades [7,8,9]. Pal et al. [10] introduced a new clustering algorithm using the enhanced bio-geography algorithm. Furthermore, Mittal et al. [11] presented an intelligent gravitational search algorithm-based method to obtain optimal cluster centroids. Sharma et al. [12] introduced an enhanced grey wolf optimization-based method for the optimal clustering of data. Pal et al. [13] presented a genetic algorithm-based energy-efficient weighted clustering method. Recently, a number of researchers have used meta-heuristic-based clustering solutions for recommendation systems. Chen et al. [14] introduced a collaborative filtering-based recommendation method using evolutionary clustering. Malik et al. [15] introduced a particle swarm-based travel recommendation system. Moreover, Peška et al. [16] performed a detailed study of the applicability of meta-heuristic-based methods to collaborative filtering-based recommendation systems. Kumar et al. [17] introduced an efficient clustering-based model for movie recommendations. Katarya [18] introduced an artificial bee colony-based movie recommendation system. Similarly, Singh et al. [19] introduced a novel movie recommendation system based on efficient clustering of the dataset using a modified cuckoo search method. Suganeshwari et al. [20] performed a survey on clustering-based recommendation systems and concluded that they can be efficiently utilized for recommending products and services, as they find the similarity among user behavior and usage patterns.

Generally, meta-heuristic methods optimize cluster centroids based on the inter-cluster or intra-cluster distances. Unlike K-means, these methods obtain the optimal solution through the collective working of a population, which removes the bias towards the initial clusters. Hence, these methods perform better on the clustering problem. Therefore, this paper presents a novel meta-heuristic-based recommendation system for the big data environment.

Meta-heuristic methods refer to the set of algorithms which leverage the concept of guided random search. These methods define a mathematical model which corresponds to certain natural phenomena and have been used in the literature to obtain optimal solutions for different real-world optimization problems [21,22,23,24,25]. Generally, they use a population-based approach to find the optimal solution through information sharing among the individuals. In contrast, single solution-based methods, such as simulated annealing and hill climbing [26], find the solution with a single individual. However, single solution-based algorithms suffer from premature convergence due to the lack of information sharing. Furthermore, the success of a meta-heuristic algorithm majorly depends on the way in which exploration and exploitation are performed [27, 28]. Exploration controls the diversification of the search agents, whereas the convergence of the individuals is controlled by exploitation. Therefore, each meta-heuristic method tries to attain a balance between exploration and exploitation to achieve a precise solution [29]. Generally, these algorithms are inspired by swarm-based or evolution-based phenomena. Mirjalili et al. [30] developed the multi-verse algorithm based on the notion of cosmology. Sayed et al. [31] introduced a hybrid SA-MFO algorithm for solving engineering design problems. The genetic algorithm, differential evolution, and bio-geography-based optimization are some of the popular examples of the evolutionary concept [32]. Furthermore, swarm-based algorithms behave like a swarm of agents to achieve optimal results. Particle swarm optimization (PSO) is one of the meta-heuristics that has been broadly used for solving problems, and several variants of PSO have also been introduced in the literature [33]. Subsequently, Unal et al. [34] presented multi-objective particle swarm optimization, which uses random immigrants. Lie et al. [35] introduced Levy flight-based ant colony optimization. Moreover, Satapathy [36] presented social group optimization, which mimics the social behavior of humans for solving problems. Furthermore, Tripathi et al. [37] proposed an algorithm inspired by a military dog squad to find the optimal solution. Dragonfly-based optimization is another swarm-based algorithm introduced by Mirjalili et al. [38].

WOA [39] is a popular algorithm which models the behavior of humpback whales. Mathematically, WOA simulates the hunting behavior of whales to find the optimal solution. It includes two phases, namely the encircling phase and the spiral phase, which correspond to exploration and exploitation, respectively. WOA has surpassed other recent algorithms on the benchmark problems [39]. In the last three years, WOA has been applied across a wide set of application areas, such as data clustering, mining, image processing, and others [40]. Moreover, WOA has been improved by several researchers for solving various real-world problems. Mafarja et al. [41] introduced a hybrid WOA and simulated annealing-based method for feature selection. Aziz et al. [42] combined the moth flame algorithm with WOA for multi-level image segmentation. Similarly, Aljarah et al. [43] employed WOA for optimizing the connection weights of a neural network. Furthermore, the whale algorithm has also performed competitively in recommendation systems. Karleka et al. [44] introduced a WOA-based clinical risk assessment and recommendation method for treatment. However, a collaborative filtering-based recommendation method involves clustering of data according to users’ similarity. Moreover, the literature has shown that WOA performs efficiently in clustering-based applications [45]. Therefore, this paper aims at leveraging the strengths of WOA for a collaborative-filtering-based recommendation system.

Generally, WOA discards bad solutions during the position update. However, a whale having bad fitness might be nearer to the global optimum [41]. Therefore, WOA runs the risk of being trapped in local optima [46]. To remedy this, a new variant of WOA, tournament selection empowered WOA (TWOA), is proposed in this paper. The tournament process gives a fair chance to the bad solutions to overcome the local optima during exploitation. Furthermore, the strength of TWOA is utilized for improving the quality of the recommendation system. Although meta-heuristic-based recommendation systems have shown better efficiency than traditional methods, these sequentially executing systems fail to respond in a reasonable amount of time on large-scale datasets [47]. To alleviate this, TWOA is parallelized using the map-reduce architecture for large-scale datasets and is leveraged to obtain optimal clusters for performing recommendations.

The overall contribution of this paper is twofold: (1) a new clustering method, the map-reduce-based tournament empowered whale optimization algorithm (MR-TWOA), is presented for efficient clustering of large-scale datasets and (2) a novel variant of the WOA, the tournament empowered whale optimization algorithm (TWOA), is presented to attain efficient clustering. The clustering efficiency of the proposed map-reduce-based TWOA (MR-TWOA) is tested on four large datasets, namely Replicated Iris, Replicated CMC, Replicated Wine, and Replicated Vowel. The experimental findings are compared with other state-of-the-art map-reduce-based clustering methods, namely map-reduce-based K-means (MR-Kmeans) [7], map-reduce-based bat algorithm (MR-bat) [48], map-reduce-based Kmeans particle swarm optimization (MR-KPSO) [49], map-reduce-based artificial bee colony (MR-ABC) [50], and map-reduce-based whale optimization (MR-WOA). Furthermore, the applicability of the proposed MR-TWOA-based recommendation system is validated using the MovieLens dataset [51]. The results are compared in terms of three parameters, namely mean absolute error (MAE), precision, and recall.

The remaining sections of the paper are as follows. The Preliminaries section briefly reviews data clustering and WOA. The Proposed method section discusses the proposed recommendation system along with the proposed variant (TWOA) and its parallel version (MR-TWOA). The Experimental results section presents the experimental arrangements and results. Finally, the paper is concluded in the last section.

Preliminaries

Clustering

Data clustering is an unsupervised machine learning approach which iteratively groups a set of n data-points into p clusters. Unlike supervised approaches, it does not need any prior training phase. Let \(O=\{\{o_{11},o_{12}, \dots , o_{1t}\}, \{o_{21},o_{22}, \dots , o_{2t}\}, \dots , \{o_{n1},o_{n2}, \dots , o_{nt}\}\}\) be a set of n data-points having t features, where \(o_{ij}\) denotes the \(j\mathrm{th}\) attribute value of the \(i\mathrm{th}\) data-point. Clustering works iteratively to find a set of cluster centroids denoted as \(K=\{\{k_{11},k_{12}, \dots , k_{1t}\}, \{k_{21}, k_{22}, \dots , k_{2t}\}, \dots , \{k_{p1}, k_{p2}, \dots , k_{pt}\}\}\), where \(k_{ij}\) corresponds to the value of the \(j\mathrm{th}\) attribute of the \(i\mathrm{th}\) cluster centroid and \(K_i = \{k_{i1}, k_{i2}, \dots , k_{it}\}\) is the position vector of the \(i\mathrm{th}\) cluster centroid. Generally, the intra-cluster distance is considered as the objective function while performing clustering, and it is defined through the Euclidean distance between \(O_i\) and \(K_l\). Its formulation is depicted in Eq. (1).

where \(O_i\) and \(K_l\) represent \(i\mathrm{th}\) data-point and \(l\mathrm{th}\) cluster, respectively.
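The objective described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function name `intra_cluster_distance` and the toy data are assumptions, and the sketch uses the plain (unsquared) Euclidean distance to the nearest centroid, since the exact form of Eq. (1) is not reproduced in this text.

```python
import math

def intra_cluster_distance(points, centroids):
    """Sum, over all data-points, of the Euclidean distance to the nearest centroid."""
    total = 0.0
    for o in points:
        # Each data-point contributes the distance to its closest centroid.
        total += min(math.dist(o, k) for k in centroids)
    return total

# Toy data (hypothetical): three 2-D data-points and two centroids.
points = [(0.0, 0.0), (0.0, 1.0), (10.0, 10.0)]
centroids = [(0.0, 0.0), (10.0, 10.0)]
print(intra_cluster_distance(points, centroids))  # 1.0
```

A lower value indicates tighter clusters, which is why a meta-heuristic can treat this quantity as the fitness to minimize.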

Whale optimization algorithm (WOA)

The whale optimization algorithm [39] mimics the hunting behavior of humpback whales. Humpback whales hunt small fish close to the surface by generating bubbles in a circular shape. The algorithm works in two phases, namely exploration and exploitation. Furthermore, the exploitation phase is performed through two different strategies, namely shrinking encircling and spiral update. In the shrinking encircling mechanism, the whale moves toward the best whale in a circular manner.

Exploitation phase

To mathematically model the exploitation phase of WOA, the current best solution is represented by the position of the prey, which is assumed to be the solution nearest to the optimum. To exploit the search space, the position of each whale is defined relative to the prey, which simulates the encircling behavior. The current position of each agent is updated in one of two ways, namely spiral formation and encircling of prey. The encircling of prey is given by Eq. (2).

where \(\mathbf {P}(m)\) denotes the position of an agent at iteration m and \(\mathbf {P}_b(m)\) represents the best agent. \(\mathbf {A}\) represents the coefficient vector, which is given in Eq. (3), while \(\mathbf {D}\) denotes the distance from the best agent, which is computed as in Eq. (4).

where \(r \in (0,1)\) is a randomly generated number, a decreases linearly from 2 to 0 over the iterations, and \(\mathbf {C}\) denotes an adjustment factor by which search agents capture the local areas.
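The encircling update can be illustrated with a short sketch. The display equations are not reproduced in this text, so the formulas below follow the commonly published WOA formulation from [39] (\(A = 2ar - a\), \(C = 2r\), \(D = |C P_b - P|\), \(P(m+1) = P_b - A D\)). Drawing fresh random numbers per dimension and the name `encircle_step` are assumptions made for illustration.

```python
import random

def encircle_step(p, p_best, a, rng=random):
    """One shrinking-encircling update of position p relative to p_best."""
    new_p = []
    for x, xb in zip(p, p_best):
        A = 2 * a * rng.random() - a   # coefficient in [-a, a)
        C = 2 * rng.random()           # adjustment factor in [0, 2)
        D = abs(C * xb - x)            # distance to the (scaled) best agent
        new_p.append(xb - A * D)
    return new_p

# As a shrinks toward 0, A shrinks too and the agent collapses onto the best agent.
print(encircle_step([1.0, 2.0], [3.0, 4.0], a=0.0))  # [3.0, 4.0]
```

Because a decreases from 2 to 0, early iterations allow large moves around the best agent, while late iterations confine the agent to its immediate neighbourhood.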

Furthermore, the spiral formation is mathematically modeled as Eq. (6).

where l is a randomly generated number in the range [− 1, 1], the constant b defines the spiral shape, and \(\acute{D}\) represents the distance between the prey and the search agent, as defined in Eq. (7).

The exploitation phase of the WOA is implemented with equal probabilities using Eq. (8).

Here, \(q \in (0,1)\) is a randomly generated number.
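A sketch of how the two exploitation strategies may be combined is given below. It assumes the standard WOA formulation from [39]: the spiral update \(P(m+1) = \acute{D}\, e^{bl} \cos(2\pi l) + P_b(m)\) with \(\acute{D} = |P_b(m) - P(m)|\), and a switch at \(q < 0.5\). The function names and the default \(b = 1\) are illustrative assumptions.

```python
import math
import random

def encircle(p, p_best, a, rng):
    """Shrinking encircling: contract toward the best agent."""
    out = []
    for x, xb in zip(p, p_best):
        A = 2 * a * rng.random() - a
        C = 2 * rng.random()
        out.append(xb - A * abs(C * xb - x))
    return out

def spiral(p, p_best, b, rng):
    """Spiral update: move along a logarithmic spiral around the best agent."""
    l = rng.uniform(-1.0, 1.0)
    factor = math.exp(b * l) * math.cos(2 * math.pi * l)
    return [abs(xb - x) * factor + xb for x, xb in zip(p, p_best)]

def exploit(p, p_best, a, b=1.0, rng=random):
    """Choose encircling or spiral with equal probability."""
    q = rng.random()
    if q < 0.5:
        return encircle(p, p_best, a, rng)
    return spiral(p, p_best, b, rng)

rng = random.Random(0)
print(exploit([1.0, 2.0], [3.0, 4.0], a=1.0, rng=rng))
```

An agent that already sits on the best position stays there under the spiral update, because the distance term is zero.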

Exploration phase

To perform the exploration, each whale updates its position either randomly in the search space or using the best search agent, which depends on vector \(\mathbf {A}\).

For \(|\mathbf {A}| \ge 1\), a random movement is performed by the whales, whereas for \(|\mathbf {A}|<1\), the whales prefer to search locally in the space. The exploration phase is mathematically modelled as Eqs. (9) and (10) at iteration \((m+1)\).

where \(\mathbf {P}_{rand}\) denotes any randomly selected whale. Algorithm 1 details the pseudo-code of the WOA.
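The switch between exploration and exploitation can be sketched as follows, assuming the standard WOA rule from [39] in which the magnitude of A decides the reference agent, and the exploration update \(D = |C P_{rand} - P|\), \(P(m+1) = P_{rand} - A D\). Treating A as one scalar per agent and the helper names `choose_reference` and `move_relative_to` are assumptions for illustration.

```python
import random

def choose_reference(A, p_best, population, rng=random):
    """Random agent when |A| >= 1 (exploration), best agent otherwise."""
    return rng.choice(population) if abs(A) >= 1 else p_best

def move_relative_to(p, ref, A, rng=random):
    """Apply the update P(m+1) = ref - A * |C * ref - P| per dimension."""
    out = []
    for x, xr in zip(p, ref):
        C = 2 * rng.random()
        D = abs(C * xr - x)
        out.append(xr - A * D)
    return out

population = [[1.0, 1.0], [2.0, 2.0], [5.0, 5.0]]
best = population[0]
a = 2.0                                   # early iteration: a is still large
A = 2 * a * random.random() - a           # scalar coefficient in [-a, a)
ref = choose_reference(A, best, population)
print(move_relative_to(population[2], ref, A))
```

Early in the run, a is close to 2, so |A| frequently exceeds 1 and agents are pulled toward randomly chosen peers; once a drops below 1, only the best agent is used.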

Proposed method

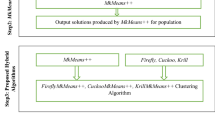

This section details a novel recommendation system, namely map-reduce-based tournament empowered WOA (MR-TWOA), to deal with large-scale data efficiently. The proposed method performs clustering by leveraging the strengths of the map-reduce architecture with TWOA. The workflow of MR-TWOA is depicted in Fig. 1. First, the user-rated dataset is captured. Then, it is processed through the proposed MR-TWOA to obtain optimal clusters in an efficient manner. Here, each whale corresponds to a set of cluster centroids which are defined over d dimensions, where d corresponds to the number of features in the considered dataset. The similarity among the user ratings is considered as the clustering criterion. Finally, recommendations are made to the users based on the obtained clusters. In the following section, the proposed variant (TWOA) is detailed, followed by the parallel version, MR-TWOA, for clustering large-scale datasets.

Tournament empowered WOA

WOA defines the position of the optimal solution according to the current best whale and randomly selected whale. The parameter ‘a’ controls the equilibrium between exploration and exploitation. However, WOA performs exploration using the randomly picked solution, which affects the exploration and exploitation balance. To mitigate the above concern, a novel tournament selection empowered WOA has been introduced. Instead of a random solution in the exploration phase, TWOA uses tournament selection [52] for selecting the \(\mathbf {P}_\mathrm{rand}\) solution in Eqs. (9) and (10). This yields a better possibility of selecting good solutions at the later stage. This results in fast convergence and better exploitation.
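Tournament selection can be sketched in a few lines. The tournament size `k`, the function name `tournament_select`, and the minimization convention are assumptions here, since the text does not state the exact settings used in TWOA.

```python
import random

def tournament_select(population, fitness, k=2, rng=random):
    """Return the fittest of k randomly drawn candidates (lower fitness is better)."""
    contenders = rng.sample(range(len(population)), k)
    winner = min(contenders, key=lambda i: fitness[i])
    return population[winner]

# Hypothetical population of three whales with their fitness values.
whales = ["w0", "w1", "w2"]
fitness = [3.0, 1.0, 2.0]
print(tournament_select(whales, fitness, k=2))
```

In this sketch, the selected whale would take the place of \(\mathbf{P}_\mathrm{rand}\) in the exploration update. With k = 2 the worst whale can never win, yet a mediocre whale still wins whenever the best one is not drawn, which keeps some diversity.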

MR-TWOA-based recommendation method

For clustering using a meta-heuristic algorithm, each iteration involves \(N*K*P\) distance computations, where N denotes the number of data points, K is the number of clusters, and P denotes the population size. Therefore, on large-scale datasets, sequential algorithms fail to respond within reasonable memory and computation time. To remedy this, a parallel model of TWOA, named MR-TWOA, is presented using the MapReduce-based Hadoop architecture. In particular, MR-TWOA runs over a cluster of computers in which the data-points are distributed uniformly across the Hadoop distributed file system (HDFS). The complete architecture of MR-TWOA is presented in Fig. 2. As shown in the figure, the large dataset is first broken into small input splits (64 MB). For the first iteration, the MR-TWOA population is randomly initialized within the search boundaries. Furthermore, the population file is sent to each mapper running on the cluster. In the proposed recommendation system, the computation of the sum of the squared Euclidean distances (fitness value) is required at each iteration, which accounts for the majority of the computation cost. Therefore, this task of fitness calculation is parallelized using the mapper function of MapReduce. The proposed MR-TWOA works in two phases, namely MR-TWOA-Map and MR-TWOA-Reduce. MR-TWOA-Map assigns the data-points to clusters, with the clustering criterion being the least Euclidean distance between the data-point and the corresponding centroid. The pseudo-code of the map phase is detailed in Algorithm 2. As depicted in Algorithm 2, the MR-TWOA-Map phase first retrieves the cluster centers from the population stored in the HDFS. After that, the minimum distance of each data object from the centroids is calculated.
The outcome of this phase is {key:(whaleId,cenId), value:minDistance}, where ‘whaleId’ identifies the whale whose clusters are matched with the data-point and ‘cenId’ identifies the cluster-centroid with the minimum distance from the data-point. ‘minDistance’ is the Euclidean distance between the data-point and the centroid identified by ‘cenId’. After the completion of the Map phase, the output from all the mappers is collected and grouped by the key. Then, the MR-TWOA-Reduce phase processes the distances obtained in the Map phase and calculates the intra-cluster distance for each centroid of each whale. The outcome of this phase is of the form \(\{key:(whaleId, cenId), value:intra-cluster distance\}\). The pseudo-code of the Reduce phase is illustrated in Algorithm 3.
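The map and reduce phases described above can be emulated in plain Python to show the key/value flow. This is only a single-process sketch under stated assumptions: it does not use Hadoop, the names `twoa_map` and `twoa_reduce` are hypothetical, and the toy population is invented for illustration.

```python
import math
from collections import defaultdict

def twoa_map(point, population):
    """Emit ((whale_id, cen_id), min_distance) for every whale in the population."""
    for whale_id, centroids in enumerate(population):
        dists = [math.dist(point, c) for c in centroids]
        cen_id = min(range(len(dists)), key=dists.__getitem__)
        yield (whale_id, cen_id), dists[cen_id]

def twoa_reduce(pairs):
    """Group by (whale_id, cen_id) and sum the distances of each group."""
    totals = defaultdict(float)
    for key, dist in pairs:
        totals[key] += dist
    return dict(totals)

points = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0)]
population = [
    [(0.0, 0.0), (10.0, 0.0)],  # whale 0: two candidate centroids
    [(0.0, 1.0), (5.0, 0.0)],   # whale 1: two candidate centroids
]
pairs = [kv for p in points for kv in twoa_map(p, population)]
result = twoa_reduce(pairs)
print(result)  # {(0, 0): 2.0, (1, 0): 2.0, (0, 1): 0.0, (1, 1): 5.0}
```

Summing the reduced values per whale gives its fitness: 2.0 for whale 0 and 7.0 for whale 1 in this toy case, so whale 0 encodes the better set of centroids.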

Time complexity

The time complexity of the MR-TWOA-based recommendation method is proportional to the number of clusters, the number of data objects, and the number of dimensions in the dataset. In the MR-TWOA-based recommendation method, the optimal centroids are obtained with O\((N \times C \times D \times T)\) operations, where N, C, D, and T denote the total number of data objects, the number of clusters, the number of dimensions in the dataset, and the number of iterations, respectively. Furthermore, for a population of size P, the time complexity of the proposed recommendation system can be represented as O\((P\times N \times C \times D \times T)\).

Experimental results

The performance of MR-TWOA method is analyzed in three sections. First, the efficacy of the proposed TWOA is validated on 23 benchmarks which belong to three different categories, namely uni-modal, multi-modal, and fixed dimensional multi-modal. Second, the clustering efficiency of the parallel version of TWOA (MR-TWOA) has been analyzed on four large-scale datasets. In the third section, the experimental validation of the proposed method (MR-TWOA) as the recommendation system is performed in terms of three parameters, namely mean absolute error (MAE), recall, and precision.

Performance of TWOA on benchmark problems

This section details the experimental analysis of the proposed variant (TWOA) on 23 standard benchmark functions. The simulations are conducted on a computer having an Intel Core i3-4570 processor at 3.20 GHz, 4 GB RAM, and a 500 GB hard disk. The results are compared with four recent meta-heuristic methods, namely whale optimization algorithm (WOA) [39], improved cuckoo search (ICS) [53], enhanced grey-wolf optimizer (EGWO) [12], and salp-swarm algorithm (SSA) [54]. As WOA has already shown superior performance over popular meta-heuristic methods in the literature, such as grey wolf optimizer [55], particle swarm optimization (PSO) [56], dragonfly algorithm [38], and differential evolution [57], the comparison includes only recently proposed meta-heuristic methods. Tables 1, 2, and 3 detail the considered 23 benchmark functions, which are grouped into three categories, namely unimodal, multi-modal, and fixed dimensional multi-modal functions, respectively. Generally, unimodal functions describe the exploitation ability of the considered method, while multi-modal functions validate its exploration ability. Furthermore, each method is executed 30 times for each benchmark function. The best fitness values obtained in the different runs are analyzed in terms of their mean and standard deviation. The parameter settings of each meta-heuristic method are given in Table 4. These values were fixed according to the related literature to make a fair comparison between the selected meta-heuristics [12, 39, 53, 54]. Moreover, the population size and the number of iterations for all algorithms are kept at 30 and 500, respectively.

Table 5 tabulates the average fitness value obtained by the considered meta-heuristic methods on the different benchmark functions, along with the standard deviation. It is evident from the table that TWOA outperforms the other compared methods on four unimodal functions, i.e. \(F_1, F_2, F_5, F_7\). For \(F_3\) and \(F_4\), ICS has shown competitive results, while SSA performed well on \(F_6\). Thus, it may be stated that TWOA has superior local search ability. Moreover, TWOA has surpassed the other methods on more than 80% of the multimodal functions. This indicates that TWOA is robust against trapping in local optima. The superiority of TWOA is due to the inclusion of the tournament selection process, which resulted in a better trade-off between exploration and exploitation. Additionally, the poor solutions also got a fair chance in the early phase of the algorithm, which prevents premature convergence.

Furthermore, to analyze the exploration and exploitation behavior, the convergence trends of the proposed and considered methods on two representative benchmark functions, namely \(F_1\) and \(F_8\), are depicted in Fig. 3. In the figure, the horizontal axis represents the iteration count, and the vertical axis denotes the best fitness value. It is visible from the convergence curves that TWOA smoothly reaches the optimal solution. This shows that the proposed method has a better ability to attain an optimal solution. Therefore, it can be validated from the experimental analysis that TWOA is an efficient method that can be leveraged for clustering large-scale datasets.

Performance analysis of MR-TWOA

To test the clustering efficiency of MR-TWOA, four extremely large datasets are considered, namely replicated CMC, replicated Vowel, replicated Iris, and replicated Wine. The datasets are formed by replicating each sample of the original dataset 1000 times. Table 6 contains the detailed description of the considered datasets. The clustering efficiency of the proposed MR-TWOA is measured in terms of F-measure and computation time. Furthermore, the MR-TWOA clustering results are compared against five recent map-reduce-based clustering methods, namely map-reduce-based K-means (MR-Kmeans), map-reduce-based bat algorithm (MR-BAT), map-reduce-based Kmeans particle swarm optimization (MR-KPSO), map-reduce-based artificial bee colony (MR-ABC), and map-reduce-based whale optimization algorithm (MR-WOA). The parallelization of the clustering methods is achieved through Hadoop 2.6.2 on the Ubuntu 14.04 operating system and implemented in Java 1.8.0. Table 7 presents the F-measure (Fm) and computation time (CT) of the considered methods as mean values obtained over 30 runs on a cluster of 5 computers. It is visible from the table that MR-TWOA has outperformed the compared methods on all datasets. The MR-Kmeans algorithm has recorded the poorest clustering performance among all the considered methods. However, it has given competitive performance in terms of computation time since it works on a single solution-based approach.

Moreover, the parallel computation efficacy of MR-TWOA is validated in terms of speedup which is computed according to Eq. (11).

where \(T_\mathrm{base}\) represents the computation time taken by a method to run on a single machine, and \(T_\mathrm{N}\) refers to the time taken by the same method to run on N number of machines. To study the speedup efficiency of MR-TWOA, two large-scale datasets are considered, namely Replicated Iris and Replicated CMC. Figure 4a and b represent the speedup graphs of MR-TWOA for Replicated Iris and Replicated CMC datasets, respectively. In the speedup graph, Y axis corresponds to the computation time while X axis corresponds to the number of machines (or nodes) in the cluster. From the figures, it is observable that the speedup performance of MR-TWOA running on Replicated Iris dataset is 2.7548 when there are five nodes in the cluster. The speedup performance of MR-TWOA running on Replicated CMC dataset is 2.1561 when there are five nodes in the cluster. This clearly indicates that MR-TWOA is an efficient method and can be used for large-scale clustering datasets.
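Based on the definitions of \(T_\mathrm{base}\) and \(T_\mathrm{N}\), the speedup is assumed here to be the usual ratio \(T_\mathrm{base}/T_\mathrm{N}\), since Eq. (11) itself is not reproduced in this text. The timings in the example are hypothetical and are not taken from the reported experiments.

```python
def speedup(t_base, t_n):
    """Ratio of the single-machine time to the time on N machines."""
    if t_n <= 0:
        raise ValueError("t_n must be positive")
    return t_base / t_n

# Hypothetical timings in seconds: 100 s on one machine, 40 s on five machines.
print(speedup(100.0, 40.0))  # 2.5
```

A value equal to the number of machines would correspond to ideal linear scaling, so a speedup below 5 on five nodes reflects communication and scheduling overhead.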

Analysis of MR-TWOA as recommender system

This section analyzes the applicability of the proposed MR-TWOA for recommendation. To do so, the MovieLens dataset [51] is considered, which is a publicly available dataset consisting of ratings from about 1000 users on about 1700 movies. It contains 100,000 data-points, where each data-point corresponds to a user rating for a movie. Furthermore, this dataset is replicated 1000 times to make it suitable for the Hadoop architecture. To compare the efficacy of MR-TWOA with the considered map-reduce-based clustering methods, three performance measures, namely mean absolute error (MAE), precision, and recall, are considered over different numbers of clusters. Table 8 reports the MAE, precision, and recall of the considered methods. For the visual interpretation of Table 8, Figs. 5, 6, and 7 depict the bar-charts corresponding to mean absolute error, precision, and recall, respectively. The X axis in the figures corresponds to the number of clusters, and the Y axis represents the values of the considered measure. From the table and figures, it is visible that MR-TWOA has reported the least MAE value among WOA, Bat, ABC, and PSO on all the cluster settings, whereas WOA attained the second least MAE on all of them. Furthermore, it can also be observed that MR-TWOA has clearly outperformed all the methods in terms of precision. Again, WOA performed as the second-best method in terms of precision on all the cluster settings. It can also be inferred that MR-TWOA attains the maximum recall among all the considered methods on all the cluster sets except 10 and 15, where MR-BAT and MR-ABC have given competitive results, respectively. Furthermore, WOA has given the second-best result when the number of clusters is set to 15, 20, 25, 30, and 40, while MR-Bat and ABC performed second best on the 5 and 10 cluster sets, respectively. Therefore, it is affirmed from the experimental results that MR-TWOA is scalable and robust for data clustering.
Moreover, it can be leveraged as a powerful alternative for the recommendation system over large-scale datasets.
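The three measures used above can be computed as in the following sketch. It uses the standard definitions of MAE, precision, and recall. The function names and the toy ratings are assumptions, and the way the study derives the recommended and relevant item sets from the clusters is not specified here.

```python
def mean_absolute_error(actual, predicted):
    """Average absolute difference between true and predicted ratings."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def precision_recall(recommended, relevant):
    """Precision and recall of a recommended item set against the relevant set."""
    recommended, relevant = set(recommended), set(relevant)
    hits = len(recommended & relevant)
    precision = hits / len(recommended) if recommended else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall

# Toy example (hypothetical ratings and item ids).
print(mean_absolute_error([4, 3, 5, 2], [3, 3, 4, 2]))   # 0.5
print(precision_recall([1, 2, 3, 4], [2, 4, 6, 8]))      # (0.5, 0.5)
```

Lower MAE means the predicted ratings are closer to the true ones, whereas higher precision and recall mean that more of the recommended items are relevant and more of the relevant items are recommended.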

Conclusion

In this paper, a novel recommendation method, MR-TWOA, is introduced for handling large datasets. The proposed method performs clustering through a novel variant of WOA, termed tournament empowered WOA (TWOA). The performance of TWOA is tested on 23 unimodal and multimodal benchmark functions in terms of the mean and standard deviation of the fitness value. The results are compared against four recent meta-heuristic methods, namely WOA, ICS, EGWO, and SSA. The experimental results demonstrated the superiority of the proposed method over the considered methods on the majority of the benchmark functions, which validates the ability of TWOA to avoid local optima. Furthermore, the clustering accuracy of the proposed MR-TWOA is tested on four massive datasets in terms of F-measure and computation time. The performance is compared with five recent map-reduce algorithms, namely MR-Kmeans, MR-KPSO, MR-ABC, MR-Bat, and MR-WOA. The proposed MR-TWOA outperformed the compared methods on all the datasets, which shows the superior clustering efficiency of the proposed method. Additionally, the performance of MR-TWOA is studied for the parallel environment in terms of speed-up efficiency. To do so, MR-TWOA runs on a cluster with 5 machines for four massive datasets. The experimental results of the proposed MR-TWOA surpassed the other state-of-the-art meta-heuristics-based methods. Furthermore, the recommendation ability of MR-TWOA is validated on the MovieLens dataset in terms of MAE, precision, and recall. It is confirmed from the simulation results that MR-TWOA outperformed the other considered methods in product recommendation, along with the ability to handle massive datasets.

In future, MR-TWOA can be used to solve other real-world problems pertaining to big datasets. The proposed TWOA incorporates tournament selection for choosing better solutions rather than random solutions. Since tournament selection sometimes fails to select the best solutions [58], it may limit the exploration ability of the proposed TWOA, which can be improved by examining other selection methods. Furthermore, other frameworks such as Spark may be used to reduce the computation cost of the proposed method.

References

Fu S, Yan Q, Feng GC (2018) Who will attract you? Similarity effect among users on online purchase intention of movie tickets in the social shopping context. Int J Inf Manag 40:88–102

Pazzani MJ, Billsus D (2007) Content-based recommendation systems. In: The adaptive web, Springer, pp 325–341

Yi S, Liu X (2020) Machine learning based customer sentiment analysis for recommending shoppers, shops based on customers' review. Complex Intell Syst 2020:1–14

Ahmadi A, Mukherjee D, Ruhe G (2019) A recommendation system for emergency mobile applications using context attributes: Remac. In: Proceedings of the 3rd ACM SIGSOFT international workshop on app market analytics, ACM, pp 1–7

Mittal H, Saraswat M (2020) A new fuzzy cluster validity index for hyper-ellipsoid or hyper-spherical shape close clusters with distant centroids. IEEE Trans Fuzzy Syst 2020:1–1. https://doi.org/10.1109/TFUZZ.2020.3016339

Mittal H, Saraswat M (2019) Classification of histopathological images through bag-of-visual-words and gravitational search algorithm. In: Lect. notes of soft computing for problem solving, Springer, pp 231–241

Zhao W, Ma H, He Q (2009) Parallel k-means clustering based on mapreduce. In: Cloud computing, Springer, pp 674–679

Katarya R, Verma OP (2018) Recommender system with grey wolf optimizer and FCM. Neural Comput Appl 30(5):1679–1687

Mittal H, Saraswat M (2018) An image segmentation method using logarithmic kbest gravitational search algorithm based superpixel clustering. Evolut Intell 2018:1–13

Pal R, Pandey HMA, Saraswat M (2016) Beecp: biogeography optimization-based energy efficient clustering protocol for hwsns. In: 2016 ninth international conference on contemporary computing (IC3), IEEE, pp 1–6

Mittal H, Saraswat M (2019) An automatic nuclei segmentation method using intelligent gravitational search algorithm based superpixel clustering. Swarm Evolution Comput 45:15–32

Tripathi AK, Sharma K, Bala M (2018) A novel clustering method using enhanced grey wolf optimizer and mapreduce. Big Data Res 14:93–100

Pal R, Yadav S, Karnwal R et al (2020) Eewc: energy-efficient weighted clustering method based on genetic algorithm for hwsns. Complex Intell Syst 2020:1–10

Chen J, Zhao C, Chen L et al (2019) Collaborative filtering recommendation algorithm based on user correlation and evolutionary clustering. Complex Intell Syst 2019:1–10

Malik S, Kim D (2019) Optimal travel route recommendation mechanism based on neural networks and particle swarm optimization for efficient tourism using tourist vehicular data. Sustainability 11(12):3357

Peška L, Tashu TM, Horváth T (2019) Swarm intelligence techniques in recommender systems—a review of recent research. Swarm Evolution Comput 48:201–219

Kumar MS, Prabhu J (2020) A hybrid model collaborative movie recommendation system using k-means clustering with ant colony optimisation. Int J Internet Technol Secured Trans 10(3):337–354

Katarya R (2018) Movie recommender system with metaheuristic artificial bee. Neural Comput Appl 30(6):1983–1990

Singh SP, Solanki S (2019) A movie recommender system using modified cuckoo search. In: Emerging research in electronics, computer science and technology, Springer, pp 471–482

Suganeshwari G, Ibrahim SS (2016) A survey on collaborative filtering based recommendation system. In: Proceedings of the 3rd international symposium on big data and cloud computing challenges (ISBCC-16), Springer, pp 503–518

Pandey AC, Rajpoot DS, Saraswat M (2017) Twitter sentiment analysis using hybrid cuckoo search method. Inf Process Manag 53:764–779

Pal R, Saraswat M (2019) Histopathological image classification using enhanced bag-of-feature with spiral biogeography-based optimization. Appl Intell 49(9):3406–3424

Mittal H, Saraswat M, Pal R (2020) Histopathological image classification by optimized neural network using igsa. In: International conference on distributed computing and internet technology, Springer, pp 429–436

Gupta V, Singh A, Sharma K, Mittal H (2018) A novel differential evolution test case optimisation (detco) technique for branch coverage fault detection. In: Smart computing and informatics, Springer, pp 245–254

Mittal H, Saraswat M (2018) ckgsa based fuzzy clustering method for image segmentation of rgb-d images. In: Proc. of international conference on contemporary computing, IEEE, pp 1–6

Selim SZ, Alsultan K (1991) A simulated annealing algorithm for the clustering problem. Pattern Recogn 24(10):1003–1008

Jaiswal K, Mittal H, Kukreja S (2017) Randomized grey wolf optimizer (rgwo) with randomly weighted coefficients. In: 2017 tenth international conference on contemporary computing (IC3), IEEE, pp 1–3

Mittal H, Pal R, Kulhari A, Saraswat M (2016) Chaotic kbest gravitational search algorithm (ckgsa). In: 2016 ninth international conference on contemporary computing (IC3), IEEE, pp 1–6

Mittal H, Saraswat M (2018) An optimum multi-level image thresholding segmentation using non-local means 2d histogram and exponential kbest gravitational search algorithm. Eng Appl Artif Intell 71:226–235

Mirjalili S, Mirjalili SM, Hatamlou A (2016) Multi-verse optimizer: a nature-inspired algorithm for global optimization. Neural Comput Appl 27(2):495–513

Sayed GI, Hassanien AE (2018) A hybrid sa-mfo algorithm for function optimization and engineering design problems. Complex Intell Syst 4(3):195–212

Tripathi AK, Sharma K, Bala M (2019) Parallel hybrid bbo search method for twitter sentiment analysis of large scale datasets using mapreduce. Int J Inf Secur Privacy (IJISP) 13(3):106–122

Cheng S, Lu H, Lei X, Shi Y (2018) A quarter century of particle swarm optimization. Complex Intell Syst 4(3):227–239

Ünal AN, Kayakutlu G (2020) Multi-objective particle swarm optimization with random immigrants. Complex Intell Syst 2020:1–16

Liu Y, Cao B, Li H (2020) Improving ant colony optimization algorithm with epsilon greedy and levy flight. JSP 24(25):54

Satapathy S, Naik A (2016) Social group optimization (sgo): a new population evolutionary optimization technique. Complex Intell Syst 2(3):173–203

Tripathi AK, Sharma K, Bala M, Kumar A, Menon VG, Bashir AK (2020) A parallel military dog based algorithm for clustering big data in cognitive industrial internet of things. IEEE Trans Ind Informatics. https://doi.org/10.1109/TII.2020.2995680

Mirjalili S (2016) Dragonfly algorithm: a new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput Appl 27(4):1053–1073

Mirjalili S, Lewis A (2016) The whale optimization algorithm. Adv Eng Softw 95:51–67

Medjahed SA, Saadi TA, Benyettou A, Ouali M (2016) Gray wolf optimizer for hyperspectral band selection. Appl Soft Comput 40:178–186

Mafarja MM, Mirjalili S (2017) Hybrid whale optimization algorithm with simulated annealing for feature selection. Neurocomputing 260:302–312

El Aziz MA, Ewees AA, Hassanien AE (2017) Whale optimization algorithm and moth-flame optimization for multilevel thresholding image segmentation. Expert Syst Appl 83:242–256

Aljarah I, Faris H, Mirjalili S (2018) Optimizing connection weights in neural networks using the whale optimization algorithm. Soft Comput 22(1):1–15

Karlekar NP, Gomathi N (2018) Ow-svm: Ontology and whale optimization-based support vector machine for privacy-preserved medical data classification in cloud. Int J Commun Syst 31(12):e3700

Nasiri J, Khiyabani FM (2018) A whale optimization algorithm (woa) approach for clustering. Cogent Math Stat 5(1):1483565

Ling Y, Zhou Y, Luo Q (2017) Lévy flight trajectory-based whale optimization algorithm for global optimization. IEEE Access 5:6168–6186

Tripathi TA, Sharma K, Bala M (2019) Fake review detection in big data using parallel bbo. Int J Inf Syst Manag Sci 2:2

Ashish T, Kapil S, Manju B (2018) Parallel bat algorithm-based clustering using mapreduce. In: Networking communication and data knowledge engineering, Springer, pp 73–82

Wang J, Yuan D, Jiang M (2012) Parallel k-pso based on mapreduce. In: 2012 IEEE 14th international conference on communication technology, IEEE, pp 1203–1208

Banharnsakun A (2017) A mapreduce-based artificial bee colony for large-scale data clustering. Pattern Recogn Lett 93:78–84

Harper FM, Konstan JA (2015) The movielens datasets: history and context. ACM Trans Interactive Intell Syst (tiis) 5(4):1–19

Hussain A, Muhammad YS (2019) Trade-off between exploration and exploitation with genetic algorithm using a novel selection operator. Complex Intell Syst 2019:1–14

Valian E, Mohanna S, Tavakoli S (2011) Improved cuckoo search algorithm for global optimization. Int J Commun Inf Technol 1(1):31–44

Mirjalili S, Gandomi AH, Mirjalili SZ, Saremi S, Faris H, Mirjalili SM (2017) Salp swarm algorithm: a bio-inspired optimizer for engineering design problems. Adv Eng Softw 114:163–191

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61

Kennedy J, Eberhart R (1995) Particle swarm optimization. Neural Netw 4:1942–1948

Storn R, Price K (1997) Differential evolution-a simple and efficient heuristic for global optimization over continuous spaces. J Global Optim 11:341–359

Alabsi F, Naoum R (2012) Comparison of selection methods and crossover operations using steady state genetic based intrusion detection system. J Emerg Trends Comput Inf Sci 3(7):1053–1058

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tripathi, A.K., Mittal, H., Saxena, P. et al. A new recommendation system using map-reduce-based tournament empowered Whale optimization algorithm. Complex Intell. Syst. 7, 297–309 (2021). https://doi.org/10.1007/s40747-020-00200-0

DOI: https://doi.org/10.1007/s40747-020-00200-0