Abstract

We investigate some geometric properties of the real algebraic variety \(\Delta \) of symmetric matrices with repeated eigenvalues. We explicitly compute the volume of its intersection with the sphere and prove an Eckart–Young–Mirsky-type theorem for the distance function from a generic matrix to points in \(\Delta \). We exhibit connections of our study to real algebraic geometry (computing the Euclidean distance degree of \(\Delta \)) and random matrix theory.


1 Introduction

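In this paper we investigate the geometry of the set \(\Delta \) (called the discriminant below) of real symmetric matrices with repeated eigenvalues and of unit Frobenius norm:

\[\Delta := \{Q\in \text {Sym}(n,\mathbb {R}) : \lambda _i(Q)=\lambda _j(Q) \text { for some } i\ne j\}\cap S^{N-1}.\]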

Here, \(\lambda _1(Q),\ldots ,\lambda _n(Q)\) denote the eigenvalues of Q, the dimension of the space of symmetric matrices is \(N :=\frac{n(n+1)}{2}\) and \(S^{N-1}\) denotes the unit sphere in \(\text {Sym}(n,\mathbb {R})\) endowed with the Frobenius norm \(\Vert Q\Vert :=\sqrt{\text {tr}(Q^2)}\).

This discriminant is a fundamental object and it appears in several areas of mathematics, from mathematical physics to real algebraic geometry, see for instance (Arnold 1972, 1995, 2003, 2011; Teytel 1999; Agrachev 2011; Agrachev and Lerario 2012; Vassiliev 2003). We discover some new properties of this object (Theorems 1.1, 1.4) and exhibit connections and applications of these properties to random matrix theory (Sect. 1.4) and real algebraic geometry (Sect. 1.3).

The set \(\Delta \) is an algebraic subset of \(S^{N-1}\). It is defined by the discriminant polynomial:

\[\text {disc}(Q) := \prod _{1\le i<j\le n}(\lambda _i(Q)-\lambda _j(Q))^2,\]

which is a non-negative homogeneous polynomial of degree \(\text {deg}(\text {disc}) =n(n-1)\) in the entries of Q. Moreover, it is a sum of squares of real polynomials (Ilyushechkin 2005; Parlett 2002) and \(\Delta \) is of codimension two. The set \(\Delta _\text {sm}\) of smooth points of \(\Delta \) is the set of real points of the smooth part of the Zariski closure of \(\Delta \) in \(\text {Sym}(n,\mathbb {C})\) and it consists of matrices with exactly two repeated eigenvalues. In fact, \(\Delta \) is stratified according to the multiplicity sequence of the eigenvalues; see (1.3).
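The sum-of-squares and codimension claims can be seen by hand in the smallest case \(n=2\). The computation below is only a sketch: it assumes the normalization \(\text {disc}(Q)=\prod _{i<j}(\lambda _i(Q)-\lambda _j(Q))^2\), and the entries a, b, c are notation introduced here for illustration.

```latex
\[
Q=\begin{pmatrix} a & b\\ b & c\end{pmatrix},\qquad
\lambda_{1,2}(Q)=\frac{a+c}{2}\pm \frac{1}{2}\sqrt{(a-c)^2+4b^2},\qquad
\text{disc}(Q)=(\lambda_1(Q)-\lambda_2(Q))^2=(a-c)^2+(2b)^2 .
\]
```

In this case disc is a sum of two squares of degree \(2=n(n-1)\), and it vanishes exactly when \(a=c\) and \(b=0\). These are two independent linear conditions, in agreement with the codimension-two statement.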

1.1 The Volume of the Set of Symmetric Matrices with Repeated Eigenvalues

Our first main result concerns the computation of the volume \(|\Delta |\) of the discriminant, which is defined to be the Riemannian volume of the smooth manifold \(\Delta _{\text {sm}}\) endowed with the Riemannian metric induced by the inclusion \(\Delta _\text {sm}\subset S^{N-1}\).

Theorem 1.1

(The volume of the discriminant).

\[\frac{|\Delta |}{|S^{N-3}|} = \binom{n}{2}.\]

Remark 1

Results of this type (the computation of the volume of some relevant algebraic subsets of the space of matrices) have been appearing in the literature since the 1990s (Edelman and Kostlan 1995; Edelman et al. 1994), with a particular emphasis on asymptotic studies and complexity theory, and have been crucial for the theoretical advance of numerical algebraic geometry, especially as concerns the estimation of the so-called condition number of linear problems (Demmel 1988). The very first result gives the volume of the set \(\Sigma \subset \mathbb {R}^{n^2}\) of square matrices with zero determinant and Frobenius norm one; this was computed in Edelman and Kostlan (1995) and Edelman et al. (1994):

For example, this result is used in Edelman and Kostlan (1995, Theorem 6.1) to compute the average number of zeroes of the determinant of a matrix of linear forms. Subsequently this computation was extended to include the volume of the set of \(n\times m\) matrices of given corank in Beltrán (2011) and the volume of the set of symmetric matrices with determinant zero in Lerario and Lundberg (2016), with similar expressions. Recently, in Beltrán and Kozhasov (2018) the above formula and Lerario and Lundberg (2016, Thm. 3) were used to compute the expected condition number of the polynomial eigenvalue problem whose input matrices are taken to be random.

In a related paper (Breiding et al. 2017) we use Theorem 1.1 to count the average number of singularities of a random spectrahedron. Moreover, the proof of Theorem 1.1 requires the evaluation of the expectation of the square of the characteristic polynomial of a GOE(n) matrix (Theorem 1.6 below), which constitutes a result of independent interest.

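Theorem 1.1 combined with Poincaré’s kinematic formula (Howard 1993, p. 17) allows us to compute the average number of symmetric matrices with repeated eigenvalues in a uniformly distributed projective two-plane \(L \subset \mathrm {P}\text {Sym}(n, \mathbb {R})\simeq \mathbb {R}\text {P}^{N-1}\):

\[\mathop {\mathbb {E}}\limits \#(L\cap \text {P}\Delta ) = \frac{|\text {P}\Delta |}{|\mathbb {R}\text {P}^{N-3}|} = \frac{|\Delta |}{|S^{N-3}|} = \binom{n}{2}, \tag{1.1}\]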

where by \(\text {P}\Delta \subset \mathrm {P}\text {Sym}(n, \mathbb {R})\simeq \mathbb {R}\text {P}^{N-1}\) we denote the projectivization of the discriminant. The following optimal bound on the number \(\#(L\cap \text {P}\Delta )\) of symmetric matrices with repeated eigenvalues in a generic projective two-plane \(L\simeq \mathbb {R}\text {P}^2\subset \mathbb {R}\text {P}^{N-1}\) was found in Sanyal et al. (2013, Corollary 15):

Remark 2

Consequence (1.1) combined with (1.2) “violates” a frequent phenomenon in random algebraic geometry, which goes under the name of square root law: for a large class of models of random systems, often related to the so called Edelman–Kostlan–Shub–Smale models (Edelman and Kostlan 1995; Shub and Smale 1993b, a, c; Edelman et al. 1994; Kostlan 2002), the average number of solutions equals (or is comparable to) the square root of the maximum number; here this is not the case. We also observe that, surprisingly enough, the average cut of the discriminant is an integer number (there is no reason to even expect that it should be a rational number!).

More generally one can ask about the expected number of matrices with a multiple eigenvalue in a “random” compact 2-dimensional family. We prove the following.

Theorem 1.2

(Multiplicities in a random family). Let \(F:\Omega \rightarrow \mathrm {Sym}(n, \mathbb {R})\) be a random Gaussian field \(F=(f_1, \ldots , f_N)\) with i.i.d. components and denote by \(\pi :\mathrm {Sym}(n, \mathbb {R})\backslash \{0\}\rightarrow S^{N-1}\) the projection map. Assume that:

1. with probability one the map \(\pi \circ F\) is an embedding and

2. the expected number of solutions of the random system \(\{f_1=f_2=0\}\) is finite.

Then:

where \(\mathscr {C}(\Delta )\subset \text {Sym}(n,\mathbb {R})\) is the cone over \(\Delta \).

Example 1

When each \(f_i\) is a Kostlan polynomial of degree d, then the hypotheses of Theorem 1.2 are verified and \(\mathop {\mathbb {E}}\limits \#\{f_1=f_2=0\}=2d |\Omega |/|S^2|\); when each \(f_i\) is a degree-one Kostlan polynomial and \(\Omega =S^2\), then \(\mathop {\mathbb {E}}\limits \#\{f_1=f_2=0\}=2\) and we recover (1.1).

1.2 An Eckart–Young–Mirsky-Type Theorem

The classical Eckart–Young–Mirsky theorem allows one to find a best low-rank approximation to a given matrix.

For \(r\le m\le n\) let us denote by \(\Sigma _r\) the set of \(m\times n\) complex matrices of rank r. Then for a given \(m\times n\) real or complex matrix A a rank r matrix \({\tilde{A}}\in \Sigma _r\) which is a global minimizer of the distance function

is called a best rank r approximation to A. The Eckart–Young–Mirsky theorem states that if \(A=U^*SV\) is the singular value decomposition of A, i.e., U is an \(m\times m\) real or complex unitary matrix, S is an \(m\times n\) rectangular diagonal matrix with non-negative diagonal entries \(s_1\ge \cdots \ge s_m\ge 0\) and V is an \(n\times n\) real or complex unitary matrix, then \({\tilde{A}} = U^*{\tilde{S}}V\) is a best rank r approximation to A, where \({\tilde{S}}\) denotes the rectangular diagonal matrix with \({\tilde{S}}_{ii} = s_i\) for \(i=1,\ldots ,r\) and \({\tilde{S}}_{jj}=0\) for \(j=r+1,\ldots ,m\). Moreover, a best rank r approximation to a sufficiently generic matrix is actually unique. More generally, one can show that any critical point of the distance function \(\text {dist}_A:\Sigma _r \rightarrow \mathbb {R}\) is of the form \(U^*{\tilde{S}}^I V,\) where \(I\subset \{1,2,\ldots ,m\}\) is a subset of size r and \({\tilde{S}}^I\) is the rectangular diagonal matrix with \({\tilde{S}}^I_{ii} = s_i\) for \(i\in I\) and \({\tilde{S}}^I_{jj} = 0\) for \(j\notin I\). In particular, since I ranges over the r-element subsets of \(\{1,\ldots ,m\}\), the number of critical points of \(\text {dist}_A\) for a generic matrix A is \(\binom{m}{r}\). In Draisma et al. (2016) the authors call this count the Euclidean distance degree of \(\Sigma _r\); see also Sect. 1.3 below.

In the case of real symmetric matrices similar results are obtained by replacing the singular values \(s_1\ge \cdots \ge s_n\) with the absolute values of the eigenvalues \(|\lambda _1|>\cdots >|\lambda _n|\) and the singular value decomposition \(U^*SV\) with the spectral decomposition \(C^T\Lambda C\); see Helmke and Shayman (1995, Thm. 2.2) and Lerario and Lundberg (2016, Sec. 2).

For the distance function from a symmetric matrix to the cone over \(\Delta \) we also have an Eckart–Young–Mirsky-type theorem. We prove this theorem in Sect. 2.

Theorem 1.3

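(Eckart–Young–Mirsky-type theorem). Let \(A\in \text {Sym}(n,\mathbb {R})\) be a generic real symmetric matrix and let \(A=C^T\Lambda C\) be its spectral decomposition with \(\Lambda = \text {diag}(\lambda _1,\ldots ,\lambda _n)\). Any critical point of the distance function

\[\text {d}_A: \mathscr {C}(\Delta _{\text {sm}}) \rightarrow \mathbb {R},\quad B\mapsto \Vert A-B\Vert \]

is of the form \(C^T \Lambda _{i,j} C\), where

\[\Lambda _{i,j} = \text {diag}\Big (\lambda _1,\ldots ,\underbrace{\tfrac{\lambda _i+\lambda _j}{2}}_{i\text {-th}},\ldots ,\underbrace{\tfrac{\lambda _i+\lambda _j}{2}}_{j\text {-th}},\ldots ,\lambda _n\Big ),\quad 1\le i<j\le n.\]

Moreover, the function \(\text {d}_A: \mathscr {C}(\Delta ) \rightarrow \mathbb {R}\) attains its global minimum at exactly one of the critical points \(C^T\Lambda _{i,j}C\in \mathscr {C}(\Delta _{\text {sm}}){\setminus } \{0\}\) and the value of the minimum of \(\text {d}_A\) equals:

\[\min _{B\in \mathscr {C}(\Delta )} \text {d}_A(B) = \min _{1\le i<j\le n} \frac{|\lambda _i-\lambda _j|}{\sqrt{2}}.\]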

Remark 3

Since \(\mathscr {C}(\Delta )\subset \text {Sym}(n,\mathbb {R})\) is the homogeneous cone over \(\Delta \subset S^{N-1}\) the above theorem readily implies an analogous result for the spherical distance function from \(A\in S^{N-1}\) to \(\Delta \). The critical points are \((1-\frac{(\lambda _i-\lambda _j)^2}{2})^{-1/2}\,C^T \Lambda _{i,j} C\) and the global minimum of the spherical distance function \(\text {d}^S\) is \(\min _{B\in \Delta } \text {d}^S(A,B) = \min _{1\le i<j\le n} \arcsin \left( \tfrac{|\lambda _i-\lambda _j|}{\sqrt{2}}\right) \).
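The eigenvalue-level content of Theorem 1.3 can be checked numerically. The following Python sketch works only with the diagonal matrix \(\Lambda \), since conjugation by C preserves Frobenius distances. It projects \(\Lambda \) onto each hyperplane \(\{\lambda _i=\lambda _j\}\) and records the resulting distances, which should equal \(|\lambda _i-\lambda _j|/\sqrt{2}\). The function names and the sample spectrum are illustrative choices and are not taken from the paper.

```python
import math
from itertools import combinations


def project_onto_hyperplane(lams, i, j):
    """Orthogonal projection of diag(lams) onto the hyperplane {lambda_i = lambda_j}."""
    mean = (lams[i] + lams[j]) / 2.0
    proj = list(lams)
    proj[i] = proj[j] = mean
    return proj


def critical_points(lams):
    """Return {(i, j): (projection, distance)} for all index pairs i < j."""
    result = {}
    for i, j in combinations(range(len(lams)), 2):
        proj = project_onto_hyperplane(lams, i, j)
        dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(lams, proj)))
        result[(i, j)] = (proj, dist)
    return result


lams = [3.0, 1.0, 0.0, -2.0]  # sample spectrum with pairwise distinct eigenvalues
points = critical_points(lams)
closest_pair = min(points, key=lambda p: points[p][1])
min_distance = points[closest_pair][1]
```

For this sample spectrum there is one candidate per pair of indices, and the minimum is attained at the pair of closest eigenvalues.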

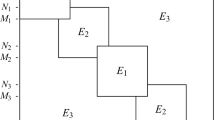

The theorem is a special case of Theorem 1.4 below, which concerns the critical points of the distance function to a fixed stratum of \(\mathscr {C}(\Delta )\). These strata are in bijection with vectors of natural numbers \(w=(w_1,w_2,\ldots ,w_n)\in \mathbb {N}^n\) such that \(\sum _{i=1}^n i \,w_i = n\) as follows: let us denote by \(\mathscr {C}(\Delta )^w\) the smooth semialgebraic submanifold of \(\text {Sym}(n,\mathbb {R})\) consisting of symmetric matrices that for each \(i\ge 1\) have exactly \(w_i\) eigenvalues of multiplicity i. Then, by Shapiro and Vainshtein (1995, Lemma 1), the semialgebraic sets \(\mathscr {C}(\Delta )^w\) with \(w_1<n\) form a stratification of \(\mathscr {C}(\Delta )\):

\[\mathscr {C}(\Delta ) = \bigsqcup _{w\,:\, w_1<n} \mathscr {C}(\Delta )^w. \tag{1.3}\]

In this notation, the complement of \(\mathscr {C}(\Delta )\) can be written \(\mathscr {C}(\Delta )^{(n,0,\ldots ,0)} = \text {Sym}(n,\mathbb {R})\setminus \mathscr {C}(\Delta )\). By Arnold (1972, Lemma 1.1), the codimension of \(\mathscr {C}(\Delta )^w\) in the space \(\text {Sym}(n,\mathbb {R})\) equals

\[\text {codim}\, \mathscr {C}(\Delta )^w = \sum _{i=1}^n \frac{(i-1)(i+2)}{2}\, w_i.\]

Let us denote by \(\hbox {Diag}(n,\mathbb {R})^w:=\text {Diag}(n,\mathbb {R})\cap \mathscr {C}(\Delta )^w\) the set of diagonal matrices in \(\mathscr {C}(\Delta )^w\) and its Euclidean closure by \(\overline{\hbox {Diag}(n,\mathbb {R})^w}\). This closure is an arrangement of \(\frac{n!}{\prod _{i=1}^n (i!)^{w_i}\, w_i!}\) many \((\sum _{i=1}^n w_i)\)-dimensional planes, one for each partition of the index set \(\{1,\ldots ,n\}\) into \(w_i\) blocks of size i. Furthermore, for a sufficiently generic diagonal matrix \(\Lambda =\hbox {diag}(\lambda _1,\ldots ,\lambda _n)\) the distance function

has \(\frac{n!}{\prod _{i=1}^n (i!)^{w_i}\, w_i!}\) critical points, each of which is the orthogonal projection of \(\Lambda \) onto one of the planes in the arrangement \(\overline{\hbox {Diag}(n,\mathbb {R})^w}\), and the distance \(\hbox {d}_{\Lambda }\) attains its unique global minimum at one of these critical points. We will show that an analogous result holds for

the distance function from a general symmetric matrix \(A\in \hbox {Sym}(n,\mathbb {R})\) to the smooth semialgebraic set \(\mathscr {C}(\Delta )^w\). The proof for the following theorem is given in Sect. 2.

Theorem 1.4

(Eckart–Young–Mirsky-type theorem for the strata). Let \(A\in \hbox {Sym}(n,\mathbb {R})\) be a generic real symmetric matrix and let \(A=C^T\Lambda C\) be its spectral decomposition. Then:

1. Any critical point of the distance function \(\hbox {d}_A: \mathscr {C}(\Delta )^w \rightarrow \mathbb {R}\) is of the form \(C^T \tilde{\Lambda } C\), where \(\tilde{\Lambda }\in \hbox {Diag}(n,\mathbb {R})^w\) is the orthogonal projection of \(\Lambda \) onto one of the planes in \(\overline{\hbox {Diag}(n,\mathbb {R})^w}\).

2. The distance function \(\hbox {d}_A: \mathscr {C}(\Delta )^w \rightarrow \mathbb {R}\) has exactly \(\frac{n!}{\prod _{i=1}^n (i!)^{w_i}\, w_i!}\) critical points, one of which is the unique global minimum of \(\hbox {d}_A\).

Remark 4

Note that the manifold \(\mathscr {C}(\Delta )^w\) is not compact and therefore the function \(\hbox {d}_A: \mathscr {C}(\Delta )^w \rightarrow \mathbb {R}\) might not a priori have a minimum.
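On the level of eigenvalues, Theorem 1.4 amounts to a finite enumeration, which the following Python sketch carries out for small n. It lists the set partitions of the index set with \(w_i\) blocks of size i, replaces the eigenvalues in each block by their mean (the orthogonal projection onto the corresponding plane) and records the distance. The number of such partitions is \(n!/\prod _i (i!)^{w_i} w_i!\), which for \(w=(n-2,1,0,\ldots ,0)\) reduces to \(\binom{n}{2}\). All function names are hypothetical helpers introduced for this illustration.

```python
import math


def set_partitions(items):
    """Yield all partitions of the list `items` into non-empty blocks."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for partition in set_partitions(rest):
        # Put `first` into each existing block in turn ...
        for k in range(len(partition)):
            yield partition[:k] + [[first] + partition[k]] + partition[k + 1:]
        # ... or into a new singleton block.
        yield [[first]] + partition


def has_type(partition, w):
    """True if the partition has exactly w[i-1] blocks of size i for every i."""
    counts = [0] * len(w)
    for block in partition:
        counts[len(block) - 1] += 1
    return counts == list(w)


def critical_points(lams, w):
    """Project diag(lams) onto every plane of the arrangement of type w."""
    n = len(lams)
    points = []
    for partition in set_partitions(list(range(n))):
        if not has_type(partition, w):
            continue
        proj = [0.0] * n
        for block in partition:
            mean = sum(lams[i] for i in block) / len(block)
            for i in block:
                proj[i] = mean
        dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(lams, proj)))
        points.append((proj, dist))
    return points


def plane_count(w):
    """Number of set partitions of {1,...,n} with w[i-1] blocks of size i."""
    n = sum(i * wi for i, wi in enumerate(w, start=1))
    denom = 1
    for i, wi in enumerate(w, start=1):
        denom *= math.factorial(i) ** wi * math.factorial(wi)
    return math.factorial(n) // denom


lams = [3.0, 1.0, 0.0, -2.0]  # sample spectrum, n = 4
types = [(2, 1, 0, 0), (0, 2, 0, 0), (1, 0, 1, 0)]
counts = {w: len(critical_points(lams, w)) for w in types}
```

The enumeration is exponential in n and is meant only as a sanity check for small examples; for a generic spectrum the block means are pairwise distinct, so each projection lies in the stratum itself and not only in its closure.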

1.3 Euclidean Distance Degree

Let \(X\subset \mathbb {R}^m\) be a real algebraic variety and let \(X^\mathbb {C}\subset \mathbb {C}^m\) denote its Zariski closure. The number \(\#\{x\in X_{\text {sm}}: u-x\perp T_x X_{\text {sm}}\}\) of critical points of the distance to the smooth locus \(X_{\text {sm}}\) of X from a generic point \(u\in \mathbb {R}^m\) can be estimated by the number \(\text {EDdeg}(X):=\#\{x\in X_{\text {sm}}^{\mathbb {C}} : u-x \perp T_x X_{\text {sm}}^{\mathbb {C}}\}\) of “complex critical points”. Here, \(v\perp w\) is orthogonality with respect to the bilinear form \((v,w)\mapsto v^Tw\). The quantity \(\text {EDdeg}(X)\) does not depend on the choice of the generic point \(u\in \mathbb {R}^m\) and is called the Euclidean distance degree of X (Draisma et al. 2016). Also, solutions \(x\in X^{\mathbb {C}}_{\text {sm}}\) to \(u-x\perp T_xX_{\text {sm}}^{\mathbb {C}}\) are called ED critical points of u with respect to X (Drusvyatskiy et al. 2017). In the following theorem we compute the Euclidean distance degree of the variety \(\mathscr {C}(\Delta )\subset \text {Sym}(n,\mathbb {R})\) and show that all ED critical points are actually real (this result is an analogue of Drusvyatskiy et al. (2017, Cor. 5.1) for the space of symmetric matrices and the variety \(\mathscr {C}(\Delta )\)).

Theorem 1.5

Let \(A\in \text {Sym}(n,\mathbb {R})\) be a sufficiently generic symmetric matrix. Then the \({n \atopwithdelims ()2}\) real critical points of \(\text {d}_A: \mathscr {C}(\Delta _{\text {sm}}) \rightarrow \mathbb {R}\) from Theorem 1.3 are the only ED critical points of A with respect to \(\mathscr {C}(\Delta )\) and the Euclidean distance degree of \(\mathscr {C}(\Delta )\) equals \(\text {EDdeg}(\mathscr {C}(\Delta )) = {n \atopwithdelims ()2}\).

Remark 5

An analogous result holds for the closure of any other stratum \(\mathscr {C}(\Delta )^w\). Namely, \(\text {EDdeg}(\overline{\mathscr {C}(\Delta )^w})=\frac{n!}{\prod _{i=1}^n (i!)^{w_i}\, w_i!}\) and for a generic real symmetric matrix \(A\in \text {Sym}(n,\mathbb {R})\) the ED critical points are real and given in Theorem 1.4.

1.4 Random Matrix Theory

The proof of Theorem 1.1 eventually arrives at Eq. (3.7), which reduces our study to the evaluation of a special integral over the Gaussian Orthogonal Ensemble (\(\mathrm {GOE}\)) (Mehta 2004; Tao 2012). The connection between the volume of \(\Delta \) and random symmetric matrices comes from the fact that, in a sense, the geometry in the Euclidean space of symmetric matrices with the Frobenius norm and the random \(\mathrm {GOE}\) matrix model can be seen as the same object under two different points of view.

The integral in (3.7) is the second moment of the characteristic polynomial of a \(\mathrm {GOE}\) matrix. In Mehta (2004) Mehta gives a general formula for all moments of the characteristic polynomial of a \(\mathrm {GOE}\) matrix. However, we were unable to locate an exact evaluation of the formula for the second moment in the literature. For this reason we added Proposition 4.2, in which we compute the second moment, to this article. We use it in Sect. 4 to prove the following theorem.

Theorem 1.6

For a fixed positive integer k we have

An interesting remark in this direction is that some geometric properties of \(\Delta \) can be stated using the language of random matrix theory. For instance, the estimate on the volume of a tube around \(\Delta \) allows one to estimate the probability that two eigenvalues of a GOE(n) matrix are close: for \(\epsilon >0\) small enough

The interest of this estimate is that it provides a non-asymptotic result in random matrix theory (as opposed to studies in the limit \(n\rightarrow \infty \); see Ben Arous and Bourgade 2013; Nguyen et al. 2017). It would be interesting to provide an estimate of the implied constant in (1.4); however, this might be difficult using our approach as it probably involves estimating higher curvature integrals of \(\Delta \).

2 Critical Points of the Distance to the Discriminant

In this section we prove Theorems 1.3, 1.4 and 1.5. Since Theorem 1.3 is a special case of Theorem 1.4, we start by proving the latter.

2.1 Proof of Theorem 1.4

Let us denote by \(\overline{\mathscr {C}(\Delta )^w}\subset \text {Sym}(n,\mathbb {R})\) the Euclidean closure of \(\mathscr {C}(\Delta )^w\). Note that \(\overline{\mathscr {C}(\Delta )^w}\) is a (real) algebraic variety, the smooth locus of \(\overline{\mathscr {C}(\Delta )^w}\) is \(\mathscr {C}(\Delta )^w\) and the boundary \(\overline{\mathscr {C}(\Delta )^w}{\setminus } \mathscr {C}(\Delta )^w\) is a union of some strata \(\mathscr {C}(\Delta )^{w^\prime }\) of greater codimension.

The following result is an adaptation of Bik and Draisma (2017, Thm. 3) to the space \(\text {Sym}(n,\mathbb {R})\) of real symmetric matrices, its subspace \(\text {Diag}(n,\mathbb {R})\) of diagonal matrices and the action \(C\in O(n), A\in \text {Sym}(n,\mathbb {R})\mapsto C^TAC\in \text {Sym}(n,\mathbb {R})\). Note that the assumptions of Bik and Draisma (2017, Thm. 3) are satisfied in this case as shown in Bik and Draisma (2017, Sec. 3).

Lemma 2.1

Let \(X\subset \text {Sym}(n,\mathbb {R})\) be an O(n)-invariant subvariety. Then for a sufficiently generic \(\Lambda \in \text {Diag}(n,\mathbb {R})\) the set of critical points of the distance \(\text {d}_{\Lambda }: X_{\text {sm}} \rightarrow \mathbb {R}\) is contained in \(X\cap \text {Diag}(n,\mathbb {R})\).

Let now \(X=\overline{\mathscr {C}(\Delta )^w}\), let \(A\in \text {Sym}(n,\mathbb {R})\) be a sufficiently generic symmetric matrix and fix its spectral decomposition \(A=C^T\Lambda C\). By Lemma 2.1 the critical points of \(\text {d}_{\Lambda }: \mathscr {C}(\Delta )^w \rightarrow \mathbb {R}\) are all diagonal. Since the intersection \(X\cap \text {Diag}(n,\mathbb {R})=\overline{\text {Diag}(n,\mathbb {R})^w}\) is an arrangement of \(\frac{n!}{\prod _{i=1}^n (i!)^{w_i}\, w_i!}\) planes, the critical points of \(\text {d}_{\Lambda }\) are the orthogonal projections of \(\Lambda \) onto each of the components of the plane arrangement. Moreover, one of these points is the (unique) closest point on \(\overline{\text {Diag}(n,\mathbb {R})^w}\) to \(\Lambda \). The critical points of the distance \(\text {d}_A: \mathscr {C}(\Delta )^w \rightarrow \mathbb {R}\) from \(A=C^T\Lambda C\) are obtained via conjugation of the critical points of \(\text {d}_\Lambda \) by \(C\in O(n)\). Both claims follow. \(\square \)

2.2 Proof of Theorem 1.3

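Let \(w=(n-2,1,0,\ldots ,0)\) and for a given symmetric matrix \(A\in \text {Sym}(n,\mathbb {R})\) let us fix a spectral decomposition \(A=C^T\Lambda C, \Lambda =\text {diag}(\lambda _1,\ldots ,\lambda _n)\). From Theorem 1.4 we know that the critical points of the distance function \(\text {d}_A: \mathscr {C}(\Delta _{\text {sm}}){\setminus } \{0\} \rightarrow \mathbb {R}\) are of the form \(C^T\Lambda _{i,j}C\), \(1\le i<j\le n\), where \(\Lambda _{i,j}\) is the orthogonal projection of \(\Lambda \) onto the hyperplane \(\{\lambda _i=\lambda _j\}\subset \overline{\text {Diag}(n,\mathbb {R})^w}\). It is straightforward to check that

\[\Lambda _{i,j} = \text {diag}\Big (\lambda _1,\ldots ,\underbrace{\tfrac{\lambda _i+\lambda _j}{2}}_{i\text {-th}},\ldots ,\underbrace{\tfrac{\lambda _i+\lambda _j}{2}}_{j\text {-th}},\ldots ,\lambda _n\Big ).\]

From this, it is immediate that the distance between \(\Lambda \) and \(\Lambda _{i,j}\) equals

\[\Vert \Lambda -\Lambda _{i,j}\Vert = \sqrt{\Big (\tfrac{\lambda _i-\lambda _j}{2}\Big )^2+\Big (\tfrac{\lambda _j-\lambda _i}{2}\Big )^2} = \frac{|\lambda _i-\lambda _j|}{\sqrt{2}}.\]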

This finishes the proof. \(\square \)

2.3 Proof of Theorem 1.5

In the proof of Theorem 1.3 we showed that there are \(\binom{n}{2}\) real ED critical points of the distance function from a general real symmetric matrix A to \(\mathscr {C}(\Delta )\). In this subsection we argue that there are no other (complex) ED critical points in this case. The argument is based on the Main Theorem of Bik and Draisma (2017), which is stated first.

Theorem 2.2

(Main Theorem from Bik and Draisma (2017)). Let V be a finite-dimensional complex vector space equipped with a non-degenerate symmetric bilinear form, let \(G^{\mathbb {C}}\) be a complex algebraic group and let \(G^{\mathbb {C}}\rightarrow O(V)\) be an orthogonal representation. Suppose that \(V_0\subset V\) is a linear subspace such that, for sufficiently generic \(v_0\in V_0\), the space V is the orthogonal direct sum of \(V_0\) and the tangent space \(T_{v_0}G^{\mathbb {C}}v_0\) at \(v_0\) to its \(G^{\mathbb {C}}\)-orbit. Let \(X^\mathbb {C}\) be a \(G^{\mathbb {C}}\)-invariant closed subvariety of V. Set \(X^\mathbb {C}_0=X^\mathbb {C}\cap V_0\) and suppose that \(G^{\mathbb {C}}X^\mathbb {C}_0\) is dense in \(X^\mathbb {C}\). Then the \(\text {ED}\) degree of \(X^\mathbb {C}\) in V equals the \(\text {ED}\) degree of \(X^\mathbb {C}_0\) in \(V_0\).

We will apply this theorem to the space of complex symmetric matrices \(V=\text {Sym}(n,\mathbb {C})\) endowed with the complexified Frobenius inner product, the subspace of complex diagonal matrices \(V_0=\text {Diag}(n,\mathbb {C})\), the complex orthogonal group \(G^{\mathbb {C}} = \{C\in M(n,\mathbb {C}): C^TC = {\mathbb {1}}\}\) acting on V via conjugation and the Zariski closure \(X^\mathbb {C}\subset \text {Sym}(n,\mathbb {C})\) of \(\mathscr {C}(\Delta )\subset \text {Sym}(n,\mathbb {R})\).

Let us denote by \(G=O(n)\) the orthogonal group. Since \(\mathscr {C}(\Delta )\subset \text {Sym}(n,\mathbb {R})\) is G-invariant, by Drusvyatskiy et al. (2017, Lemma 2.1), the complex variety \(X^\mathbb {C}\subset \text {Sym}(n,\mathbb {C})\) is also G-invariant. Using the same argument as in Drusvyatskiy et al. (2017, Thm. 2.2) we now show that \(X^\mathbb {C}\) is actually \(G^{\mathbb {C}}\)-invariant. Indeed, for a fixed point \(A\in X^\mathbb {C}\) the map

\[\gamma _A: G^{\mathbb {C}} \rightarrow \text {Sym}(n,\mathbb {C}),\quad C\mapsto C^TAC\]

is continuous and hence the set \(\gamma _A^{-1}(X^\mathbb {C})\subset G^{\mathbb {C}}\) is closed. Since by the above \(G\subset \gamma _A^{-1}(X^\mathbb {C})\) and since \(G^{\mathbb {C}}\) is the Zariski closure of G we must have \(\gamma _A^{-1}(X^\mathbb {C}) = G^{\mathbb {C}}\).

Now for any diagonal matrix \(\Lambda \in \text {Diag}(n,\mathbb {C})\) with pairwise distinct diagonal entries the tangent space at \(\Lambda \) to the orbit \(G^{\mathbb {C}}\Lambda = \{C^T\Lambda C: C\in G^{\mathbb {C}}\}\) consists of complex symmetric matrices with zeros on the diagonal:

\[T_{\Lambda }(G^{\mathbb {C}}\Lambda ) = \{A\in \text {Sym}(n,\mathbb {C}) : a_{11}=\cdots =a_{nn}=0\}.\]

In particular,

\[\text {Sym}(n,\mathbb {C}) = \text {Diag}(n,\mathbb {C})\oplus T_{\Lambda }(G^{\mathbb {C}}\Lambda )\]

is a direct sum that is orthogonal with respect to the complexified Frobenius inner product \((A_1,A_2)\mapsto \text {tr}(A_1^TA_2)\).
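The description of the tangent space can be verified by differentiating a curve in the orbit through \(\Lambda \). The short computation below is a sketch; the complex skew-symmetric matrix R and the curve C(t) are notation introduced here for illustration.

```latex
\[
C(t)=e^{tR},\quad R^T=-R,\qquad
\frac{d}{dt}\Big|_{t=0} C(t)^T\Lambda\, C(t) = \Lambda R - R\Lambda ,\qquad
(\Lambda R-R\Lambda)_{ij} = (\lambda_i-\lambda_j)\,R_{ij}.
\]
```

The diagonal entries vanish, and because the \(\lambda _i\) are pairwise distinct, every symmetric matrix with zero diagonal arises from a suitable R. The pairing \(\text {tr}(D^TA)=\sum _i d_{ii}a_{ii}\) of a diagonal matrix D with such a matrix A is zero, which gives the orthogonality.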

As any real symmetric matrix can be diagonalized by some orthogonal matrix we have

This, together with the inclusion \(\mathscr {C}(\Delta ) \subset G^{\mathbb {C}}(X^\mathbb {C}\cap \text {Diag}(n,\mathbb {C}))\), implies that the set \(G^{\mathbb {C}}(X^\mathbb {C}\cap \text {Diag}(n,\mathbb {C}))\) is Zariski dense in \(X^\mathbb {C}\). Applying Theorem 2.2 we obtain that the ED degree of \(X^\mathbb {C}\) in \(\text {Sym}(n,\mathbb {C})\) equals the ED degree of \(X^\mathbb {C}\cap \text {Diag}(n,\mathbb {C})\) in \(\text {Diag}(n,\mathbb {C})\). Since \(X^\mathbb {C}\cap \text {Diag}(n,\mathbb {C})= \{\Lambda \in \text {Diag}(n,\mathbb {C}): \lambda _i=\lambda _j \text { for some } i\ne j\}\) is the union of \({n \atopwithdelims ()2}\) hyperplanes, the ED critical points of a generic \(\Lambda \in \text {Diag}(n,\mathbb {C})\) are the orthogonal projections of \(\Lambda \) onto each of the hyperplanes (as in the proof of Theorem 1.3). In particular, \(\text {EDdeg}(X^\mathbb {C}) = \text {EDdeg}(X^\mathbb {C}\cap \text {Diag}(n,\mathbb {C})) = {n\atopwithdelims ()2}\) and if \(\Lambda \in \text {Diag}(n,\mathbb {R})\) is a generic real diagonal matrix, the ED critical points are all real. Finally, for a general symmetric matrix \(A=C^T\Lambda C\) all ED critical points are obtained from the ones for \(\Lambda \in \text {Diag}(n,\mathbb {R})\) via conjugation by \(C\in O(n)\).

The proof of the statement in Remark 5 is similar. Each plane in the plane arrangement \(\overline{\text {Diag}(n,\mathbb {R})^w}\) yields one critical point and there are \(\frac{n!}{\prod _{i=1}^n (i!)^{w_i}\, w_i!}\) many such planes. \(\square \)

3 The Volume of the Discriminant

The goal of this section is to prove Theorems 1.1 and 1.2. As was mentioned in the introduction, we reduce the computation of the volume to an integral over the \(\mathrm {GOE}\)-ensemble. This is why, before starting the proof, in the next subsection we recall some preliminary concepts and facts from random matrix theory that will be used in the sequel.

3.1 The \(\text {GOE}(n)\) Model for Random Matrices

The material we present here is from Mehta (2004).

The \(\text {GOE}(n)\) probability measure of any Lebesgue measurable subset \(U\subset \text {Sym}(n,\mathbb {R})\) is defined as follows:

where \(dA=\prod _{1\le i\le j\le n} dA_{ij}\) is the Lebesgue measure on the space of symmetric matrices \(\text {Sym}(n,\mathbb {R})\) and, as before, \(\Vert A\Vert = \sqrt{ \text {tr}(A^2)}\) is the Frobenius norm.
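For readers who wish to experiment, a matrix from this ensemble can be sampled entrywise. The sketch below assumes that the \(\text {GOE}(n)\) density is proportional to \(e^{-\Vert A\Vert ^2/2}\) with \(\Vert A\Vert ^2=\text {tr}(A^2)\). Under that assumption, since \(\text {tr}(A^2)=\sum _i A_{ii}^2+2\sum _{i<j}A_{ij}^2\), the diagonal entries have variance 1 and the off-diagonal entries have variance 1/2. The names `sample_goe` and `frobenius_norm` are hypothetical helpers, not functions from any library.

```python
import math
import random


def sample_goe(n, rng=random):
    """Sample a symmetric n x n matrix with density proportional to exp(-tr(A^2)/2)."""
    a = [[0.0] * n for _ in range(n)]
    for i in range(n):
        a[i][i] = rng.gauss(0.0, 1.0)  # variance 1 on the diagonal
        for j in range(i + 1, n):
            # variance 1/2 off the diagonal, mirrored to keep A symmetric
            a[i][j] = a[j][i] = rng.gauss(0.0, math.sqrt(0.5))
    return a


def frobenius_norm(a):
    """Frobenius norm sqrt(tr(A^2)) of a symmetric matrix given as nested lists."""
    return math.sqrt(sum(x * x for row in a for x in row))


rng = random.Random(0)  # fixed seed so the example is reproducible
A = sample_goe(4, rng)
norm = frobenius_norm(A)
Q = [[x / norm for x in row] for row in A]  # the corresponding point on S^{N-1}
```

In the orthonormal coordinates \((A_{ii}, \sqrt{2}A_{ij})\) this is a standard Gaussian vector in \(\mathbb {R}^N\), so the normalized matrix Q is uniformly distributed on the unit sphere \(S^{N-1}\).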

By Mehta (2004, Sec. 3.1), the joint density of the eigenvalues of a \(\mathrm {GOE}(n)\) matrix A is given by the measure \( \tfrac{1}{Z_n} \int _V e^{-\frac{\Vert \lambda \Vert ^2}{2}} |\Delta (\lambda )|\, d\lambda ,\) where \(d\lambda =\prod _{i=1}^n d\lambda _i\) is the Lebesgue measure on \(\mathbb {R}^n\), \(V\subset \mathbb {R}^n\) is a measurable subset, \(\Vert \lambda \Vert ^2 = \lambda _1^2+\cdots +\lambda _n^2\) is the Euclidean norm, \(\Delta (\lambda ):=\prod _{1\le i<j\le n}(\lambda _j-\lambda _i)\) is the Vandermonde determinant and \(Z_n\) is the normalization constant whose value is given by the formula

see Mehta (2004, Eq. (17.6.7)) with \(\gamma =a=\tfrac{1}{2}\). In particular, for an integrable function \(f:\text {Sym}(n,\mathbb {R}) \rightarrow \mathbb {R}\) that depends only on the eigenvalues of \(A\in \text {Sym}(n,\mathbb {R})\), the following identity holds

3.2 Proof of Theorem 1.1

In what follows we endow the orthogonal group O(n) with the left-invariant metric defined on the Lie algebra \(T_{{\mathbb {1}}}O(n)\) by

\[\langle u,v\rangle := \tfrac{1}{2}\,\text {tr}(u^Tv),\quad u,v\in T_{{\mathbb {1}}}O(n).\]

The following formula for the volume of O(n) can be found in Muirhead (1982, Corollary 2.1.16):

Recall that by definition the volume of \(\Delta \) equals the volume of the smooth part \(\Delta _\mathrm {sm}\subset S^{N-1}\) that consists of symmetric matrices of unit norm with exactly two repeated eigenvalues. Let us denote by \((S^{n-2})_*\) the dense open subset of the \((n-2)\)-sphere consisting of points with pairwise distinct coordinates. We consider the following parametrization of \(\Delta _{\text {sm}}\subset S^{N-1}\):

where \(\lambda _1,\ldots ,\lambda _n\) are defined as

In Lemma 3.1 below we show that p is a submersion. Applying to it the smooth coarea formula (see, e.g., Bürgisser and Cucker 2013, Theorem 17.8) we have

Here \(\mathrm {NJ}_{(C,\mu )}p\) denotes the normal Jacobian of p at \((C,\mu )\) and we compute its value in the following lemma.

Lemma 3.1

The parametrization \(p: O(n)\times (S^{n-2})_* \rightarrow \Delta _{\text {sm}}\) is a submersion and its normal Jacobian at \((C,\mu )\in O(n)\times (S^{n-2})_*\) is given by the formula

Proof

Recall that for a smooth submersion \(f: M\rightarrow N\) between two Riemannian manifolds the normal Jacobian of f at \(x\in M\) is the absolute value of the determinant of the restriction of the differential \(D_{x}f: T_x M \rightarrow T_{f(x)}N\) of f at x to the orthogonal complement of its kernel. We now show that the parametrization \(p: O(n)\times (S^{n-2})_* \rightarrow \Delta _{\text {sm}}\) is a submersion and compute its normal Jacobian.

Note that p is equivariant with respect to the right action of O(n) on itself and its action on \(\Delta _{\text {sm}}\) via conjugation, i.e., for all \(C, \tilde{C}\in O(n)\) and \(\mu \in S^{n-2}\) we have \(p(C\tilde{C},\mu ) = \tilde{C}^Tp(C,\mu )\tilde{C}\). Therefore, \(D_{(C,\mu )}p = C^TD_{({\mathbb {1}},\mu )}p\, C\) and, consequently, \(\mathrm {NJ}_{(C,\mu )}p = \mathrm {NJ}_{({\mathbb {1}},\mu )}p\). We compute the latter. The differential of p at \(({\mathbb {1}},\mu )\) is the map

where \(\dot{\lambda }_i = \dot{\mu }_i\) for \(1\le i\le n-2\) and \(\dot{\lambda }_{n-1} = \dot{\lambda }_n = \tfrac{\dot{\mu }_{n-1}}{\sqrt{2}}\). The Lie algebra \(T_{{\mathbb {1}}}O(n)\) consists of skew-symmetric matrices:

\[T_{{\mathbb {1}}}O(n) = \{R\in \mathbb {R}^{n\times n} : R^T=-R\}.\]

Let \(E_{i,j}\) be the matrix that has zeros everywhere except for the entry (i, j) where it equals 1. Then \(\{E_{i,j} - E_{j,i} : 1\le i < j\le n\}\) is an orthonormal basis for \(T_{{\mathbb {1}}}O(n)\). One verifies that

This implies that p is a submersion and

Combining this with the fact that the restriction of \(D_{({\mathbb {1}},\mu )}p\) to \(T_{\mu }(S^{n-2})_*\) is an isometry we obtain

which finishes the proof. \(\square \)

We now compute the volume of the fiber \(p^{-1}(A), A\in \Delta _{\text {sm}}\) that appears in (3.5).

Lemma 3.2

The volume of the fiber over \(A\in \Delta _{\text {sm}}\) equals \(\vert p^{-1}(A)\vert = 2^{n}\pi \,(n-2)! .\)

Proof

Let \(A= p(C,\mu ) \in \Delta _\mathrm {sm}\). The last coordinate \(\mu _{n-1}\) is always mapped to the double eigenvalue \(\lambda _{n-1}=\lambda _n\) of A, whereas there are \((n-2)!\) possibilities to arrange \(\mu _1,\ldots ,\mu _{n-2}\). For a fixed choice of \(\mu \) there are \(|O(1)|^{n-2}|O(2)|\) ways to choose \(C\in O(n)\). Therefore, by (3.3) we obtain \( \vert p^{-1}(A)\vert = \vert O(1)\vert ^{n-2} \vert O(2)\vert \,(n-2)!=2^{n-2}\cdot 2^2\pi \cdot (n-2)! = 2^{n} \pi (n-2)!\). \(\square \)

Combining (3.5) with Lemmas 3.1 and 3.2 we write for the normalized volume of \(\Delta \):

where \(\Delta (\mu _1,\ldots ,\mu _{n-2})=\prod _{1\le i<j\le n-2}(\mu _i-\mu _j)\).

The function being integrated is independent of \(C\in O(n)\). Thus, using Fubini’s theorem we can perform the integration over the orthogonal group. Furthermore, the integrand is a homogeneous function of degree \(\tfrac{(n-2)(n+1)}{2}\). Passing from spherical coordinates to spatial coordinates and extending the domain of integration to the measure-zero set of points with repeated coordinates we obtain

where

Let us write \(u:=\tfrac{\mu _{n-1}}{\sqrt{2}}\) for the double eigenvalue and make a change of variables from \(\mu _{n-1}\) to u. Considering the eigenvalues \(\mu _1,\ldots ,\mu _{n-2}\) as the eigenvalues of a symmetric \((n-2)\times (n-2)\) matrix Q, by (3.2) we have

Using formulas (3.1) and (3.3) for \(Z_{n-2}\) and \(\vert O(n)\vert \) respectively we write

where in the last step the duplication formula for the Gamma function, \(\Gamma (\tfrac{n}{2})\Gamma (\tfrac{n-1}{2}) = 2^{2-n} \sqrt{\pi }\,(n-2)!\), has been used. Let us recall the formula for the volume of the \((N-3)\)-dimensional unit sphere: \(|S^{N-3}| = 2\pi ^\frac{N-2}{2} /\Gamma (\tfrac{N-2}{2})\). Recalling that \(N=\frac{n(n+1)}{2}\) we simplify the constant in (3.6)

Plugging this into (3.6) we have

Combining the last formula with Theorem 1.6, whose proof is given in Sect. 4, we finally derive the claim of Theorem 1.1: \( \tfrac{|\Delta |}{|S^{N-3}|} = \binom{n}{2}.\)

Remark 6

The proof can be generalized to subsets of \(\Delta \) that are defined by an eigenvalue configuration given by a measurable subset of \((S^{n-2})_*\). Such a configuration only adjusts the domain of integration in (3.7). For instance, consider the subset

It is an open semialgebraic subset of \((S^{n-2})_*\) and \(\Delta _1:=p(O(n) \times (S^{n-2})_1)\) is the smooth part of the matrices whose two smallest eigenvalues coincide. Following the proof until (3.7), we get

where \(\mathbf {1}_{\{Q \succ u{\mathbb {1}}\}}\) is the indicator function of \(Q-u{\mathbb {1}}\) being positive definite.

3.3 Multiplicities in a Random Family

In this subsection we prove Theorem 1.2.

The proof is based on the integral geometry formula from Howard (1993, p. 17). We state it here for the case of submanifolds of the sphere \(S^{N-1}\). If \(A, B\subset S^{N-1}\) are smooth submanifolds of dimensions a and b respectively and \(a+b\ge N-1\), then

Note that the intersection \(A\cap gB\) is transverse for a full measure set of \(g\in O(N)\) and hence its volume is almost surely defined.

Denote \(\widehat{F}=\pi \circ F:\Omega \rightarrow S^{N-1}\). Then, by assumption, with probability one we have:

Observe also that, since the list \((f_1, \ldots , f_N)\) consists of i.i.d. random Gaussian fields, for every \(g\in O(N)\) the random maps \(\widehat{F}\) and \(g\circ \widehat{F}\) have the same distribution and

In this derivation we have applied (3.8) to \(\Delta _{\text {sm}}, \widehat{F}(\Omega ) \subset S^{N-1}\) (for almost any \(g\in O(N)\) the embedded surface \(g(\widehat{F}(\Omega ))\) intersects \(\Delta \) only along \(\Delta _{\text {sm}}\)). Let us denote by \(L=\{x_1=x_2=0\}\) the codimension-two subspace of \(\mathrm {Sym}(n, \mathbb {R})\) given by the vanishing of the first two coordinates (in fact: any two coordinates). The conclusion follows by applying (3.8) again:

This finishes the proof. \(\square \)

4 The Second Moment of the Characteristic Polynomial of a GOE Matrix

In this section we give a proof of Theorem 1.6. Let us first recall some ingredients and prove some auxiliary results.

Lemma 4.1

Let \(P_m=2^{1-m^2}\sqrt{\pi }^{m}\prod _{i=0}^m (2i)!\) and let \(Z_{2m}\) be the normalization constant from (3.1). Then \(P_m=2^{1-2m}\,Z_{2m}.\)

Proof

The formula (3.1) for \(Z_{2m}\) reads

Using the formula \(\Gamma (z)\Gamma (z+\tfrac{1}{2}) = \sqrt{\pi }2^{1-2z} \Gamma (2z)\) (Spanier et al. 2000, 43:5:7) with \(z=i+1/2\) we obtain

This proves the claim. \(\square \)
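The identity of Lemma 4.1 can be confirmed numerically for small \(m\). Formula (3.1) is not reproduced in this section, so the sketch below assumes that it is the classical value of the Mehta integral, \(Z_n=(2\pi )^{n/2}\prod _{i=1}^n \Gamma (1+\tfrac{i}{2})/\Gamma (\tfrac{3}{2})\); this form is an assumption of the sketch, and the function names `Z` and `P` are ours.

```python
import math

def Z(n: int) -> float:
    """Assumed form of (3.1): (2*pi)^(n/2) * prod_{i=1}^n Gamma(1 + i/2) / Gamma(3/2)."""
    value = (2.0 * math.pi) ** (n / 2)
    for i in range(1, n + 1):
        value *= math.gamma(1 + i / 2) / math.gamma(1.5)
    return value

def P(m: int) -> float:
    """P_m = 2^(1 - m^2) * sqrt(pi)^m * prod_{i=0}^m (2i)!, as in Lemma 4.1."""
    value = 2.0 ** (1 - m * m) * math.pi ** (m / 2)
    for i in range(m + 1):
        value *= math.factorial(2 * i)
    return value

# Lemma 4.1: P_m = 2^(1 - 2m) * Z_{2m}.
for m in range(0, 7):
    assert math.isclose(P(m), 2.0 ** (1 - 2 * m) * Z(2 * m))
```

For instance, \(m=1\) gives \(P_1=2\sqrt{\pi }\) and \(Z_2=4\sqrt{\pi }\) under the assumed form of (3.1).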

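Recall now that the (physicist’s) Hermite polynomials \(H_i(x),\, i=0,1,2, \ldots \) form a family of orthogonal polynomials on the real line with respect to the measure \(e^{-x^2}dx\). They are defined by

$$\begin{aligned} H_i(x) := (-1)^i e^{x^2} \frac{d^i}{dx^i} e^{-x^2} \end{aligned}$$

and satisfy

$$\begin{aligned} \int _{\mathbb {R}} H_i(x) H_j(x)\, e^{-x^2}\, dx = 2^i\, i!\, \sqrt{\pi }\, \delta _{ij}. \qquad (4.1) \end{aligned}$$

A Hermite polynomial is an odd function if its degree is odd and an even function if its degree is even:

$$\begin{aligned} H_i(-x) = (-1)^i H_i(x), \qquad (4.2) \end{aligned}$$

and its derivative satisfies

$$\begin{aligned} H_i'(x) = 2i\, H_{i-1}(x); \qquad (4.3) \end{aligned}$$

see Spanier et al. (2000, (24:5:1)) and Gradshteyn and Ryzhik (2015, (8.952.1)) for these properties.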
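The three properties just recalled (orthogonality with respect to \(e^{-x^2}dx\), parity, and the derivative rule) can be verified exactly for small degrees. The sketch below is only an illustration: it builds \(H_i\) from the standard three-term recurrence \(H_{i+1}(x)=2xH_i(x)-2iH_{i-1}(x)\), which is not stated in the text, and integrates polynomials against \(e^{-x^2}\) using the Gaussian moments \(\int x^{2k}e^{-x^2}dx=\sqrt{\pi }\,(2k-1)!!/2^k\). All function names are ours.

```python
from fractions import Fraction
import math

def hermite(i):
    """Integer coefficients (lowest degree first) of the physicist's H_i."""
    prev, cur = [0], [1]  # H_{-1} := 0, H_0 = 1
    for k in range(i):
        # Three-term recurrence: H_{k+1}(x) = 2x H_k(x) - 2k H_{k-1}(x)
        nxt = [0] + [2 * c for c in cur]
        for d, c in enumerate(prev):
            nxt[d] -= 2 * k * c
        prev, cur = cur, nxt
    return cur

def derivative(p):
    return [d * c for d, c in enumerate(p)][1:] or [0]

def multiply(p, q):
    out = [0] * (len(p) + len(q) - 1)
    for a, x in enumerate(p):
        for b, y in enumerate(q):
            out[a + b] += x * y
    return out

def weighted_integral(p):
    """(1/sqrt(pi)) * integral over R of p(x) exp(-x^2) dx, as an exact Fraction."""
    total = Fraction(0)
    for d, c in enumerate(p):
        if d % 2 == 0:  # odd powers integrate to zero
            k = d // 2
            total += Fraction(c * math.prod(range(1, 2 * k, 2)), 2 ** k)
    return total

for i in range(7):
    H = hermite(i)
    # Parity: only powers of the same parity as i occur in H_i.
    assert all(c == 0 for d, c in enumerate(H) if (d - i) % 2)
    # Derivative rule: H_i' = 2 i H_{i-1}.
    if i >= 1:
        assert derivative(H) == [2 * i * c for c in hermite(i - 1)]
    # Orthogonality: the integral of H_i H_j exp(-x^2) is 2^i i! sqrt(pi) if i = j, else 0.
    for j in range(7):
        expected = 2 ** i * math.factorial(i) if i == j else 0
        assert weighted_integral(multiply(H, hermite(j))) == expected
```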

The following proposition is crucial for the proof of Theorem 1.6.

Proposition 4.2

(Second moment of the characteristic polynomial). For a fixed positive integer k and a fixed \(u\in \mathbb {R}\) the following holds.

1. If \(k=2m\) is even, then

$$\begin{aligned} \mathop {\mathbb {E}}\limits _{Q\sim \mathrm {GOE}(k)}\det (Q-u{\mathbb {1}})^2 = \frac{(2m)!}{2^{2m}}\,\sum _{j=0}^m \frac{2^{-2j-1}}{(2j)!}\, \det X_j(u), \end{aligned}$$

where

$$\begin{aligned} X_j(u)=\begin{pmatrix} H_{2j}(u) & H_{2j}'(u) \\ H_{2j+1}(u)-H_{2j}'(u) & H_{2j+1}'(u)-H_{2j}''(u) \end{pmatrix}. \end{aligned}$$

2. If \(k=2m+1\) is odd, then

$$\begin{aligned} \mathop {\mathbb {E}}\limits _{Q\sim \mathrm {GOE}(k)}\det (Q-u{\mathbb {1}})^2 =\frac{\sqrt{\pi }(2m+1)!}{2^{4m+2}\,\Gamma (m+\tfrac{3}{2})} \sum _{j=0}^m \frac{2^{-2j-2}}{(2j)!}\, \det Y_j(u), \end{aligned}$$

where

$$\begin{aligned} Y_j(u)=\begin{pmatrix} \frac{(2j)!}{j!} & H_{2j}(u) & H_{2j}'(u) \\ 0 & H_{2j+1}(u)-H_{2j}'(u) & H_{2j+1}'(u)-H_{2j}''(u)\\ \tfrac{(2m+2)!}{(m+1)!} & H_{2m+2}(u) & H_{2m+2}'(u) \end{pmatrix}. \end{aligned}$$
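As a plausibility check of the even case, one can evaluate the right-hand side for \(k=2\) with exact polynomial arithmetic and compare it with a direct computation. The sketch below assumes the GOE normalization with density proportional to \(e^{-\Vert Q\Vert ^2/2}\), so that diagonal entries have variance 1 and the off-diagonal entry has variance \(\tfrac{1}{2}\); under this assumption, expanding \(\left( (a-u)(c-u)-b^2\right) ^2\) and taking expectations gives \(u^4+u^2+\tfrac{7}{4}\). The helper names and the recurrence used to generate \(H_i\) are ours.

```python
from fractions import Fraction
import math

def hermite(i):
    """Integer coefficients (lowest degree first) of the physicist's H_i."""
    prev, cur = [0], [1]  # H_{-1} := 0, H_0 = 1
    for k in range(i):
        # Three-term recurrence: H_{k+1}(x) = 2x H_k(x) - 2k H_{k-1}(x)
        nxt = [0] + [2 * c for c in cur]
        for d, c in enumerate(prev):
            nxt[d] -= 2 * k * c
        prev, cur = cur, nxt
    return cur

def derivative(p):
    return [d * c for d, c in enumerate(p)][1:] or [0]

def combine(p, q, sign=1):
    """Return p + sign * q for coefficient lists of possibly different length."""
    n = max(len(p), len(q))
    p = list(p) + [0] * (n - len(p))
    q = list(q) + [0] * (n - len(q))
    return [a + sign * b for a, b in zip(p, q)]

def multiply(p, q):
    out = [0] * (len(p) + len(q) - 1)
    for a, x in enumerate(p):
        for b, y in enumerate(q):
            out[a + b] += x * y
    return out

def trim(p):
    """Drop trailing zero coefficients."""
    while len(p) > 1 and p[-1] == 0:
        p = p[:-1]
    return p

def det_X(j):
    """det X_j(u) from Proposition 4.2 (1), as a polynomial in u."""
    h_even, h_odd = hermite(2 * j), hermite(2 * j + 1)
    d_even = derivative(h_even)
    lower_left = combine(h_odd, d_even, -1)  # H_{2j+1} - H_{2j}'
    lower_right = combine(derivative(h_odd), derivative(d_even), -1)  # H_{2j+1}' - H_{2j}''
    return combine(multiply(h_even, lower_right), multiply(d_even, lower_left), -1)

def second_moment_even(m):
    """Right-hand side of Proposition 4.2 (1) for k = 2m, as a polynomial in u."""
    total = [Fraction(0)]
    for j in range(m + 1):
        weight = Fraction(1, 2 ** (2 * j + 1) * math.factorial(2 * j))
        total = combine(total, [weight * c for c in det_X(j)])
    prefactor = Fraction(math.factorial(2 * m), 2 ** (2 * m))
    return trim([prefactor * c for c in total])

# k = 2: the formula reproduces u^4 + u^2 + 7/4 (coefficients listed lowest degree first).
assert second_moment_even(1) == [Fraction(7, 4), 0, 1, 0, 1]
```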

Proof

In Section 22 of Mehta (2004) one finds two different formulas for the even \(k=2m\) and odd \(k=2m+1\) cases. We evaluate both separately.

If \(k=2m\), we have by Mehta (2004, (22.2.38)) that

where \(P_m=2^{1-m^2}\sqrt{\pi }^{m}\prod _{i=0}^m (2i)!\) is as in Lemma 4.1, \(Z_{2m}\) is the normalization constant (3.1) and where \(R_{2j}(u)=2^{-2j} H_{2j}(u)\) and \(R_{2j+1}(u)=2^{-(2j+1)}(H_{2j+1}(u)-H_{2j}'(u))\). Using the multilinearity of the determinant we get

By Lemma 4.1 we have \(\tfrac{P_m}{Z_{2m}}= 2^{1-2m}\). Putting everything together yields the first claim.

In the case \(k=2m+1\) we get from Mehta (2004, (22.2.39)) that

where \(P_m\), \(R_{2j}(u), R_{2j+1}(u)\) are as above and

By (4.2), the Hermite polynomial \(H_{2j+1}(u)\) is an odd function. Hence, we have \(g_{2j+1}=0\). For even indices we use Gradshteyn and Ryzhik (2015, (7.373.2)) to get \(g_{2j}=2^{-2j}\sqrt{2\pi } \tfrac{(2j)!}{j!}\). By the multilinearity of the determinant:

From (3.1) one obtains \(Z_{2m+1} = 2 \sqrt{2}\, \Gamma (m+\tfrac{3}{2})\,Z_{2m}\), which together with Lemma 4.1 implies

Plugging this into (4.4) we conclude that

\(\square \)
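Two ingredients of the odd case can be checked independently of Mehta's formula. The sketch below assumes that \(g_i\) denotes \(\int _{\mathbb {R}} R_i(u)\,e^{-u^2/2}\,du\), which is consistent with the stated value \(g_{2j}=2^{-2j}\sqrt{2\pi }\,\tfrac{(2j)!}{j!}\), and that (3.1) is the classical Mehta integral value \(Z_n=(2\pi )^{n/2}\prod _{i=1}^n \Gamma (1+\tfrac{i}{2})/\Gamma (\tfrac{3}{2})\). Both are assumptions of the sketch, since neither definition is reproduced here. It verifies \(\int H_{2j}(u)e^{-u^2/2}du=\sqrt{2\pi }\,\tfrac{(2j)!}{j!}\), the vanishing of the odd integrals, and the relation \(Z_{2m+1} = 2 \sqrt{2}\, \Gamma (m+\tfrac{3}{2})\,Z_{2m}\).

```python
import math

def hermite(i):
    """Integer coefficients (lowest degree first) of the physicist's H_i."""
    prev, cur = [0], [1]  # H_{-1} := 0, H_0 = 1
    for k in range(i):
        # Three-term recurrence: H_{k+1}(x) = 2x H_k(x) - 2k H_{k-1}(x)
        nxt = [0] + [2 * c for c in cur]
        for d, c in enumerate(prev):
            nxt[d] -= 2 * k * c
        prev, cur = cur, nxt
    return cur

def derivative(p):
    return [d * c for d, c in enumerate(p)][1:] or [0]

def integral_over_sqrt_2pi(p):
    """(1/sqrt(2*pi)) * integral over R of p(u) exp(-u^2/2) du for integer coefficients.

    Uses the moments: the integral of u^(2k) exp(-u^2/2) is sqrt(2*pi) * (2k-1)!!,
    and odd powers integrate to zero.
    """
    return sum(c * math.prod(range(1, 2 * (d // 2), 2))
               for d, c in enumerate(p) if d % 2 == 0)

def Z(n):
    """Assumed form of (3.1): (2*pi)^(n/2) * prod_{i=1}^n Gamma(1 + i/2) / Gamma(3/2)."""
    value = (2.0 * math.pi) ** (n / 2)
    for i in range(1, n + 1):
        value *= math.gamma(1 + i / 2) / math.gamma(1.5)
    return value

for j in range(6):
    # Even index: the integral equals sqrt(2*pi) * (2j)! / j!.
    assert integral_over_sqrt_2pi(hermite(2 * j)) == math.factorial(2 * j) // math.factorial(j)
    # Odd index: both H_{2j+1} and H_{2j}' are odd functions, so R_{2j+1} integrates to zero.
    assert integral_over_sqrt_2pi(hermite(2 * j + 1)) == 0
    assert integral_over_sqrt_2pi(derivative(hermite(2 * j))) == 0

for m in range(6):
    assert math.isclose(Z(2 * m + 1), 2 * math.sqrt(2) * math.gamma(m + 1.5) * Z(2 * m))
```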

Everything is now ready for the proof of Theorem 1.6.

Proof of Theorem 1.6

Since Proposition 4.2 treats even and odd k separately, this proof also requires a case distinction.

In the case \(k=2m\) we use the formula from Proposition 4.2 (1) to write

By (4.3) we have \(H_i'(u)=2iH_{i-1}(u)\). Hence, \(X_j(u)\) can be written as

From (4.1) we can deduce that

From this we see that

and hence,

Plugging back in \(m=\tfrac{k}{2}\) finishes the proof of the case \(k=2m\).

In the case \(k=2m+1\) we use the formula from Proposition 4.2 (2) to see that

Note that the top right \(2\times 2\)-submatrix of \(Y_j(u)\) is \(X_j(u)\), so that \(\det Y_j(u)\) is equal to

Because taking derivatives of Hermite polynomials decreases the index by one (4.3) and because the integral over a product of two Hermite polynomials is non-vanishing only if their indices agree, the integral of the determinant in (4.7) is non-vanishing only for \(j=m\), in which case it is equal to

We find that

where we used (4.6) in the second step. It follows that

It is not difficult to verify that the last term is \(2^{-2m-2} \sqrt{\pi }\,(2m+3)!\). Substituting \(2m+1=k\) shows the assertion in this case. \(\square \)
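The final constant can be cross-checked for the two smallest values of \(k\). The statement of Theorem 1.6 is not reproduced in this section, so the sketch below assumes that it reads \(\int _{\mathbb {R}} \mathbb {E}_{Q\sim \mathrm {GOE}(k)}\det (Q-u{\mathbb {1}})^2\, e^{-u^2}\,du = \sqrt{\pi }\,\tfrac{(k+2)!}{2^{k+1}}\), which agrees with the expression \(2^{-2m-2} \sqrt{\pi }\,(2m+3)!\) above for \(k=2m+1\). It also assumes the GOE normalization with density proportional to \(e^{-\Vert Q\Vert ^2/2}\), for which direct computation gives the second moments \(u^2+1\) for \(k=1\) and \(u^4+u^2+\tfrac{7}{4}\) for \(k=2\). All names are ours.

```python
from fractions import Fraction
import math

def moment_over_sqrt_pi(d: int) -> Fraction:
    """(1/sqrt(pi)) * integral over R of u^d exp(-u^2) du, exactly."""
    if d % 2:
        return Fraction(0)  # odd moments vanish
    k = d // 2
    return Fraction(math.prod(range(1, 2 * k, 2)), 2 ** k)  # (2k-1)!! / 2^k

def integrate(p) -> Fraction:
    """(1/sqrt(pi)) * integral of the polynomial p (lowest degree first) against exp(-u^2)."""
    return sum((c * moment_over_sqrt_pi(d) for d, c in enumerate(p)), Fraction(0))

def assumed_theorem_value(k: int) -> Fraction:
    """(k+2)! / 2^(k+1), i.e. the assumed right-hand side divided by sqrt(pi)."""
    return Fraction(math.factorial(k + 2), 2 ** (k + 1))

# Second moments E det(Q - u)^2 for k = 1, 2 under the assumed normalization.
SECOND_MOMENTS = {
    1: [1, 0, 1],                    # u^2 + 1
    2: [Fraction(7, 4), 0, 1, 0, 1]  # u^4 + u^2 + 7/4
}

for k, poly in SECOND_MOMENTS.items():
    assert integrate(poly) == assumed_theorem_value(k)
```

Both sides equal \(\tfrac{3}{2}\sqrt{\pi }\) for \(k=1\) and \(3\sqrt{\pi }\) for \(k=2\).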

References

Agrachev, A.A.: Spaces of symmetric operators with multiple ground states. Funktsional. Anal. i Prilozhen. 45(4), 1–15 (2011)

Agrachev, A.A., Lerario, A.: Systems of quadratic inequalities. Proc. Lond. Math. Soc. (3) 105(3), 622–660 (2012)

Arnold, V.I.: Topological properties of eigen oscillations in mathematical physics. Tr. Mat. Inst. Steklova 273(Sovremennye Problemy Matematiki), 30–40 (2011)

Arnold, V.I.: Modes and quasimodes. Funkcional. Anal. i Priložen. 6(2), 12–20 (1972)

Arnold, V.I.: Remarks on eigenvalues and eigenvectors of Hermitian matrices, Berry phase, adiabatic connections and quantum Hall effect. Sel. Math. (N.S.) 1(1), 1–19 (1995)

Arnold, V.I.: Frequent representations. Mosc. Math. J. 3(4), 1209–1221 (2003)

Beltrán, C., Kozhasov, Kh.: The real polynomial eigenvalue problem is well conditioned on the average (2018). arXiv:1802.07493 [math.NA]

Beltrán, C.: Estimates on the condition number of random rank-deficient matrices. IMA J. Numer. Anal. 31(1), 25–39 (2011)

Ben Arous, G., Bourgade, P.: Extreme gaps between eigenvalues of random matrices. Ann. Probab. 41(4), 2648–2681 (2013)

Bik, A., Draisma, J.: A note on ED degrees of group-stable subvarieties in polar representations (2017). arXiv:1708.07696 [math.AG]

Breiding, P., Kozhasov, Kh., Lerario, A.: Random spectrahedra (2017). arXiv:1711.08253 [math.AG]

Bürgisser, P., Cucker, F.: Condition: The Geometry of Numerical Algorithms, Grundlehren der Mathematischen Wissenschaften, vol. 349. Springer, Heidelberg (2013)

Demmel, J.W.: The probability that a numerical analysis problem is difficult. Math. Comput. 50(182), 449–480 (1988)

Draisma, J., Horobet, E., Ottaviani, G., Sturmfels, B., Thomas, R.R.: The Euclidean distance degree of an algebraic variety. Found. Comput. Math. 16(1), 99–149 (2016)

Drusvyatskiy, D., Lee, H.-L., Ottaviani, G., Thomas, R.R.: The Euclidean distance degree of orthogonally invariant matrix varieties. Isr. J. Math. 221(1), 291–316 (2017)

Edelman, A., Kostlan, E.: How many zeros of a random polynomial are real? Bull. Am. Math. Soc. (N.S.) 32(1), 1–37 (1995)

Edelman, A., Kostlan, E., Shub, M.: How many eigenvalues of a random matrix are real? J. Am. Math. Soc. 7(1), 247–267 (1994)

Gradshteyn, I., Ryzhik, I.: Table of integrals, series, and products. Elsevier/Academic Press, Amsterdam (2015)

Helmke, U., Shayman, M.A.: Critical points of matrix least squares distance functions. Linear Algebra Appl. 215, 1–19 (1995)

Howard, R.: The kinematic formula in Riemannian homogeneous spaces. Mem. Am. Math. Soc. 106(509), vi+69 (1993)

Ilyushechkin, N.V.: Some identities for elements of a symmetric matrix. J. Math. Sci. 129(4), 3994–4008 (2005)

Kostlan, E.: On the expected number of real roots of a system of random polynomial equations. In: Foundations of computational mathematics (Hong Kong, 2000), pp. 149–188. World Sci. Publ., River Edge (2002)

Lerario, A., Lundberg, E.: Gap probabilities and Betti numbers of a random intersection of quadrics. Discrete Comput. Geom. 55(2), 462–496 (2016)

Mehta, M.L.: Random matrices, 3rd edn. Elsevier, Amsterdam (2004)

Muirhead, R.J.: Aspects of Multivariate Statistical Theory. Wiley Series in Probability and Mathematical Statistics. Wiley, New York (1982)

Nguyen, H., Tao, T., Vu, V.: Random matrices: tail bounds for gaps between eigenvalues. Probab. Theory Relat. Fields 167(3–4), 777–816 (2017)

Parlett, B.N.: The (matrix) discriminant as a determinant. Linear Algebra Appl. 355(1), 85–101 (2002)

Sanyal, R., Sturmfels, B., Vinzant, C.: The entropic discriminant. Adv. Math. 244, 678–707 (2013)

Shapiro, M., Vainshtein, A.: Stratification of Hermitian matrices and the Alexander mapping. C. R. Acad. Sci. 321(12), 1599–1604 (1995)

Shub, M., Smale, S.: Complexity of Bezout’s theorem. III. Condition number and packing. J. Complex. 9(1), 4–14 (1993) (Festschrift for Joseph F. Traub, Part I. (1993))

Shub, M., Smale, S.: Complexity of Bezout’s theorem. II. Volumes and probabilities. In: Computational algebraic geometry (Nice, 1992), Progr. Math., vol. 109, pp. 267–285. Birkhäuser Boston, Boston (1993)

Shub, M., Smale, S.: Complexity of Bézout’s theorem. I. Geometric aspects. J. Am. Math. Soc. 6(2), 459–501 (1993)

Spanier, J., Oldham, K.B., Myland, J.: An atlas of functions. Springer, Berlin (2000)

Tao, T.: Topics in random matrix theory, Graduate Studies in Mathematics, vol. 132. American Mathematical Society, Providence (2012)

Teytel, M.: How rare are multiple eigenvalues? Commun. Pure Appl. Math. 52(8), 917–934 (1999)

Vassiliev, V.A.: Spaces of Hermitian operators with simple spectra and their finite-order cohomology. Mosc. Math. J. 3(3), 1145–1165, 1202 (2003). (Dedicated to Vladimir Igorevich Arnold on the occasion of his 65th birthday)

Acknowledgements

Open access funding provided by Max Planck Society. The authors wish to thank A. Agrachev, P. Bürgisser, A. Maiorana for helpful suggestions and remarks on the paper and B. Sturmfels for pointing out reference (Sanyal et al. 2013) for (1.2).

Open Access

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.



About this article

Cite this article

Breiding, P., Kozhasov, K. & Lerario, A. On the Geometry of the Set of Symmetric Matrices with Repeated Eigenvalues. Arnold Math J. 4, 423–443 (2018). https://doi.org/10.1007/s40598-018-0095-0


DOI: https://doi.org/10.1007/s40598-018-0095-0