Abstract

Computer-based analysis of preservice teachers’ written reflections could enable educational scholars to design personalized and scalable intervention measures to support reflective writing. Algorithms and technologies from artificial intelligence research have been found useful for many tasks in reflective writing analytics, such as the classification of text segments. However, mostly shallow learning algorithms have been employed so far. This study explores to what extent deep learning approaches can improve classification performance for segments of written reflections. To do so, a pretrained language model (BERT) was used to classify segments of preservice physics teachers’ written reflections according to the elements of a reflection-supporting model. Since BERT has been found to advance performance in many tasks, we hypothesized that it would enhance classification performance for written reflections as well. We also compared the performance of BERT with other deep learning architectures and examined conditions for best performance. BERT outperformed the other deep learning architectures as well as previously reported performances of shallow learning algorithms for classifying segments of reflective writing. BERT starts to outperform the other models when trained on about 20 to 30% of the training data. Furthermore, attribution analyses of the inputs yielded insights into features that are important for BERT’s classification decisions. Our study indicates that pretrained language models such as BERT can boost performance for language-related tasks, such as classification, in educational contexts.

Motivation

In their profession, teachers are required to make complex decisions under uncertainty (Grossman et al., 2009). Professional growth for teachers is therefore related to coping with uncertainty and learning from one’s experiences (Clarke & Hollingsworth, 2002; Kolb, 1984). It has been argued that reflection is an important link between personal experiences and theoretical knowledge that helps teachers to understand and cope with uncertain situations (Korthagen & Kessels, 1999). Explicit instruction in reflection was found to be a key ingredient of effective university-based teacher education programs (Darling-Hammond et al., 2017). A goal for university-based teacher education programs is thus to support teachers in becoming reflective practitioners: professionals who are able to capitalize on practical teaching experiences to grow professionally (Schön, 1987; Korthagen, 1999; Zeichner, 2010).

To assist preservice teachers in reflecting on essential aspects of their teaching experiences, instructors often require them to write about these experiences in a structured way guided by reflection-supporting models (Lai & Calandra, 2010; Poldner et al., 2014; Korthagen & Kessels, 1999). However, the analysis of written reflections is underdeveloped, and expectations for what a quality reflection entails vary from context to context (Buckingham Shum et al., 2017). Moreover, instructors’ feedback on written reflections was found to be holistic rather than analytic (Poldner et al., 2014). This is partly because content analysis of written reflections is a labor-intensive process (Ullmann, 2019).

Computerized methods from artificial intelligence research can help to analyze written reflections (Wulff et al., 2020). For example, supervised machine learning methods can categorize written reflections with reference to reflection-supporting models (Ullmann, 2019). Recent artificial intelligence research has found that advances in deep neural network architectures, such as transformer-based pretrained language models, can further improve classification performance for various language-related tasks (Devlin et al., 2018). These architectures might well be applicable to reflective writing analytics and could hence provide education researchers with novel tools for designing applications that enable accurate reflective writing analytics (Buckingham Shum et al., 2017).

The purpose of this study is to explore the possibilities of using transformer-based pretrained language models for the analysis of written reflections. To do so, we used a pretrained language model to analyze preservice physics teachers’ written reflections that are grounded in a theoretically derived reflection-supporting model. Written reflections were collected over several years in a teaching placement and labeled by human raters according to the reflection-supporting model. The labeled texts formed the training data for a transformer-based pretrained language model called Bidirectional Encoder Representations from Transformers (BERT) (Devlin et al., 2018). To assess the performance of the pretrained language model, several other widely used deep learning architectures for modeling language were fit to the data, and their performance in classifying segments in the held-out test data was compared with BERT’s. To better understand optimal conditions for training the models, we compared hyperparameters across the deep learning architectures and examined how classification performance depends on the size of the training data. Finally, we explored which input features were important for BERT’s classification decisions.

Reflective Writing in Teacher Education

Reflective thinking has been conceptualized as the antidote to intuitive, fast thinking (Dewey, 1933; Kahneman, 2012). It has been argued to be a naturally (yet rarely) occurring thinking process that relates to meaning-making from experience and can be characterized as systematic, rigorous, and disciplined (Clarà, 2015; Dewey, 1933; Rodgers, 2002). Reflection “involves actively monitoring, evaluating, and modifying one’s thinking” (Lin et al., 1999, p. 43). In the context of reflection-supporting teacher education, Korthagen (2001, p. 58) defines reflection as “the mental process of trying to structure or restructure an experience, a problem, or existing knowledge or insights.” Along these lines, reflective thinking has been characterized as including (1) a process with specific thinking activities (e.g., describing, analyzing, evaluating), (2) target content (e.g., teaching experience, personal theory, assumptions), and (3) specific goals and reasons for engaging in this kind of thinking (e.g., to think differently or more clearly, or to justify one’s stance) (Aeppli & Lötscher, 2016).

Given the complexity and uncertainty of the teaching profession, and the challenges of transferring formal knowledge into practical teaching knowledge, teachers oftentimes develop intuitive responses to classroom events that can result in blind routines (Fenstermacher, 1994; Grossman et al., 2009; Korthagen, 1999; Neuweg, 2007). Unmitigated exposure to complex and uncertain teaching situations over an extended period of time can furthermore result in the development of control strategies and transmissive views of learning (Hascher, 2005; Korthagen, 2005; Loughran & Corrigan, 1995). Reflecting on one’s teaching actions and professional development can help to integrate formal knowledge with more practical teaching knowledge (Carlson et al., 2019). However, preservice teachers who have not had opportunities to explicitly reflect on their teaching actions and learning have no scripts for reflective thinking, e.g., for how to write a reflection (Buckingham Shum et al., 2017; Loughran & Corrigan, 1995). Korthagen (1999) noted that preservice teachers have difficulties in adequately understanding the problem that a certain experience exposes. Preservice teachers also tend to be rather self-centered, with comparatively fewer concerns for the students’ thinking (Chan et al., 2021; Levin et al., 2009). Expert teachers have developed much more flexibility in their classroom behavior, which relates to more fully developed reflective competencies (Berliner, 2001). Expert teachers are particularly able to reflect while teaching and to use their experiences for self-directed professional growth (Berliner, 2001; Korthagen, 1999; Schön, 1983).

Reflective university-based teacher education can help preservice teachers to expose their knowledge and integrate it with their practical teaching experiences (Abels, 2011; Darling-Hammond et al., 2017; Lin et al., 1999). Enacting reflection-supporting structures in university-based teacher education requires instructors to create spaces where preservice teachers can gain authentic, scaffolded teaching experiences (Grossman et al., 2009). School placements have been argued to enable preservice teachers to act in authentic classroom situations that provide opportunities for reflection (Grossman et al., 2009; Korthagen, 2005; Zeichner, 2010). Reflection-supporting structures in university-based teacher education also entail providing prompts and reflection-supporting models that help preservice teachers to engage in reflective thinking (Lin et al., 1999; Mena-Marcos et al., 2013).

Prompts and reflection-supporting models in university-based teacher education programs are often implemented in the context of written reflections, on which expert feedback is provided afterwards. Common ways of eliciting reflective thinking include assignments such as logbooks (Korthagen, 1999), reflective journals (Bain et al., 2002), or dialogical and response journals (Roe & Stallman, 1994; Paterson, 1995), oftentimes in the context of school placements. In these approaches, students start a written conversation in the form of a student-centered communication in which significant experiences of the learner are explored (Paterson, 1995). Writing offers several advantages as a medium for reflection: teachers can think carefully about what they write, rethink and rework ideas, and establish a clear position statement that can be discussed with others (Poldner et al., 2014).

However, despite the widespread implementation of reflective writing, little quantifiable knowledge has been gathered on the genre of written reflections. What is the prototypical composition of a written reflection? Which aspects of reflective thinking are most prevalent within a large collection of written reflections? We argue that answering these questions requires a principled and systematic way of analyzing written reflections (Buckingham Shum et al., 2017). Moreover, instructors’ feedback on written reflections often focuses on overall quality rather than addressing the specific contents of the reflection (Poldner et al., 2014). These problems can be partly attributed to the large number of written reflections that require feedback and to the lack of conceptual precision about the content of a reflection (Aeppli & Lötscher, 2016; Rodgers, 2002).

Methods from artificial intelligence research such as natural language processing (NLP) and machine learning (ML) can provide principled and systematic ways of analyzing preservice teachers’ written reflections (Ullmann, 2019) because, among other things, they incentivize researchers to scrutinize the assumptions and models informing the generation of written reflections (Breiman, 2001; Kovanović et al., 2018; Ullmann, 2019).

Automated Analysis of Reflective Writing

NLP and ML methods have been used to analyze reflective writing in various domains such as engineering, business, health, or teacher education (Buckingham Shum et al., 2017; Luo & Litman, 2015; Ullmann, 2019; Wulff et al., 2020). Commonly, the analyses use a reflection-supporting model as a framework (Ullmann, 2019) to define the categories that ML algorithms are to identify. To this end, the raw texts are commonly preprocessed with NLP methods such as (1) removing redundant and uninformative words (e.g., stopwords), (2) identifying the linguistic role of words (part-of-speech annotation) (Gibson et al., 2016; Ullmann et al., 2012), or (3) reducing words to their (morphological) base forms (stemming, lemmatization) (Ullmann et al., 2012). Besides these general preprocessing techniques, more reflection-specific extraction methods have been devised as well. For example, Ullmann et al. (2012) identified self-references such as personal pronouns, as well as reflective verbs (e.g., “rethink”, “mull over”), to annotate segments of reflective texts based on predefined dictionaries.
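To make these preprocessing steps concrete, the following minimal Python sketch tokenizes a sentence, removes stopwords, and flags self-references and reflective verbs by dictionary lookup. The word lists (`STOPWORDS`, `SELF_REFERENCES`, `REFLECTIVE_VERBS`) are tiny, hypothetical stand-ins chosen for illustration; they are not the dictionaries used by Ullmann et al. (2012). Lemmatization is omitted because it requires a full NLP library.

```python
import re

# Hypothetical, deliberately tiny word lists for illustration only.
STOPWORDS = {"a", "an", "the", "and", "or", "to", "of", "in", "on", "that", "this", "was", "is", "i"}
SELF_REFERENCES = {"i", "me", "my", "myself"}
REFLECTIVE_VERBS = {"rethink", "reconsider", "realize", "mull over"}


def tokenize(sentence: str) -> list[str]:
    """Lowercase the sentence and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+(?:'[a-z]+)?", sentence.lower())


def remove_stopwords(tokens: list[str]) -> list[str]:
    """Drop tokens that appear in the stopword list."""
    return [t for t in tokens if t not in STOPWORDS]


def annotate(sentence: str) -> dict[str, bool]:
    """Flag whether a sentence contains a self-reference or a reflective verb.

    Multi-word entries such as "mull over" are matched against the
    space-joined token sequence. Annotation runs on the unfiltered tokens.
    """
    tokens = tokenize(sentence)
    joined = " " + " ".join(tokens) + " "
    return {
        "self_reference": any(t in SELF_REFERENCES for t in tokens),
        "reflective_verb": any(f" {verb} " in joined for verb in REFLECTIVE_VERBS),
    }


print(annotate("I need to rethink my planning."))
```

Annotation deliberately runs before stopword removal. Generic stopword lists often contain pronouns such as “I”, which are exactly the self-references a reflection-specific dictionary looks for. Inflected forms such as “rethought” are not matched; lemmatization would address this.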

The preprocessed texts were then fed into ML models or if-then rules to classify segments (typically sentences) of reflective writing. For classification, supervised ML methods were typically applied (Carpenter et al., 2020; Wulff et al., 2020; Ullmann, 2019). In this context, Buckingham Shum et al. (2017) used hand-crafted rules to distinguish between reflective and unreflective sentences. They used a total of 30 annotated texts (382 sentences) to train their system. The best performance for this system was a Cohen’s kappa of .43 (as calculated by Ullmann, 2019). Gibson et al. (2016) used 6,090 student reflections and categorized them as showing weak or strong metacognitive activity (related to reflection). They used part-of-speech tagging and dictionary-based methods to represent the texts as features. As calculated by Ullmann (2019), their best performing model reached a Cohen’s kappa of .48. Furthermore, Ullmann (2019) distinguished between reflective depth (reflective versus descriptive text) and reflective breadth (eight categories such as awareness of a problem and future intentions). He used shallow learning techniques in ML to classify sentences for reflective breadth. Cohen’s kappa values for the individual categories ranged from .53 to .85. Finally, Cheng (2017) used latent semantic analysis to classify reflective entries in an e-portfolio system according to the A-S-E-R model (A: analysis, reformulation and future application; S: strategy application, i.e., analyzing the effectiveness of language learning strategies; E: external influences; R: report of an event or experience). Cohen’s kappa values for classification performance ranged from .60 to .73.
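Because the studies above all report Cohen’s kappa, a short sketch of how the statistic is computed may help. Kappa corrects the observed agreement between two raters, or between a human rater and a classifier, for the agreement expected by chance from each rater’s label frequencies. The function name and the example labels are illustrative assumptions.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's kappa for two raters who labeled the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and
    p_e is the agreement expected by chance from each rater's label marginals.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same, non-empty set of items")
    n = len(rater_a)
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / n**2
    if p_e == 1.0:
        # Both raters used one identical label throughout; kappa is undefined,
        # and this sketch returns 1.0 by convention.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


human = ["description", "description", "evaluation", "evaluation"]
model = ["description", "evaluation", "evaluation", "evaluation"]
print(cohens_kappa(human, model))  # p_o = .75, p_e = .50, kappa = .50
```

In practice, scikit-learn’s `sklearn.metrics.cohen_kappa_score` computes the same unweighted statistic.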

A potential advancement in this research was presented by Carpenter et al. (2020), who used word embeddings to represent students’ reflections. Word embeddings are high-dimensional vector representations of words that can capture semantic and syntactic relations between words and help to address issues such as synonymy or polysemy (Taher Pilehvar & Camacho-Collados, 2020). Carpenter et al. (2020) used the reflection model by Ullmann (2017) to annotate students’ responses in a game-based science environment, where students played microbiologists who had to diagnose the outbreak of a disease. First, the authors ascertained that the reflective depth of students’ responses was predictive of their post-test scores. They further showed that using word embeddings (ELMo) with an ML algorithm (support vector machine) performed better than models that used only count-based representations of input features.
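The following sketch illustrates two ideas behind word embeddings: semantically related words receive similar vectors, measured by cosine similarity, and a segment can be turned into one fixed-size feature vector, here by averaging, that a classifier such as a support vector machine can consume. The three-dimensional vectors are made up for illustration. Real embeddings are learned from large corpora and have hundreds of dimensions, and ELMo’s vectors are context-dependent rather than a fixed lookup table. Averaging is one common pooling choice, assumed here and not necessarily the one Carpenter et al. (2020) used.

```python
import math

# Hypothetical toy embeddings; real (e.g., ELMo) vectors are learned and much larger.
EMBEDDINGS = {
    "teacher": [0.90, 0.10, 0.00],
    "instructor": [0.85, 0.15, 0.05],
    "pulley": [0.00, 0.20, 0.95],
}


def cosine(u, v):
    """Cosine similarity: 1.0 for vectors pointing in the same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def segment_vector(tokens, embeddings=EMBEDDINGS):
    """Average the vectors of known tokens into one fixed-size segment vector."""
    known = [embeddings[t] for t in tokens if t in embeddings]
    if not known:
        raise ValueError("segment contains no known tokens")
    dim = len(known[0])
    return [sum(vec[i] for vec in known) / len(known) for i in range(dim)]


print(cosine(EMBEDDINGS["teacher"], EMBEDDINGS["instructor"]))  # close to 1
print(cosine(EMBEDDINGS["teacher"], EMBEDDINGS["pulley"]))      # close to 0
print(segment_vector(["teacher", "pulley"]))
```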

Most applications for classifying segments of written reflections according to reflection-supporting models have used rather shallow learning models such as logistic regression or naïve Bayes classification. However, language data have intricacies, such as long-range dependencies, that these models cannot capture. Deep learning architectures have been found to be able to model these intricacies. Furthermore, deep learning architectures have the advantage of automatically finding efficient representations for textual data, thus relieving researchers of tasks such as lemmatization or stopword removal (Goodfellow et al., 2016).

Deep Learning Models for Language Modeling

Modeling textual data has generally become more performant with the advent and application of deep neural network architectures (Goldberg, 2017; LeCun et al., 2015). Deep neural network architectures mitigated shortcomings of previously employed bag-of-words language models, which make the simplifying assumption that the order in which words appear in a segment is irrelevant (Jurafsky & Martin, 2014). Subsequently, modeling context dependence through word embeddings, and word order through dynamic embeddings, was a major driver of performance gains in language modeling (Taher Pilehvar & Camacho-Collados, 2020).
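A two-line example shows what the bag-of-words assumption discards. In this minimal sketch, which uses whitespace tokenization for simplicity, two segments that assign opposite roles to teacher and student receive identical representations.

```python
from collections import Counter


def bag_of_words(segment: str) -> Counter:
    """Map a segment to word counts, discarding word order."""
    return Counter(segment.lower().split())


a = bag_of_words("the teacher helped the student")
b = bag_of_words("the student helped the teacher")
print(a == b)  # True: both segments receive the same representation
```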

On the downside, increasingly sophisticated deep neural network architectures also required more training data because the number of parameters in the models increased substantially (Footnote 1). To cope with these requirements, methods of transfer learning were developed in which researchers reuse large deep learning models that were previously trained on massive language corpora such as web crawls or Wikipedia. Analogous to computer vision, where image classification can be boosted by starting from pretrained model weights rather than random initializations (Pratt & Thrun, 1997), pretrained weights in language models can form a solid backbone that provides structure for downstream tasks (Devlin et al., 2018).

Based on these encouraging findings in ML and NLP research, we postulate that the application of pretrained language models can also advance performance for segment classification in preservice teachers’ written reflections. However, we are not aware of studies that have used deep learning architectures for classifying reflective writing. Carpenter et al. (2020) noted: “Another direction for future work [in reflective writing analytics] is to investigate alternative machine learning techniques for modeling the depth of student reflections, including deep neural architectures (e.g., recurrent neural networks)” (p. 76).

In line with this suggestion, we employed a pretrained language model named Bidirectional Encoder Representations from Transformers (BERT), which has been found to excel in many language-related tasks that are not specific to written reflections (Devlin et al., 2018). The following overarching research question guided the present study: To what extent can a pretrained language model (BERT) be used in the context of preservice teachers’ written reflections to classify segments according to the elements of a given reflection-supporting model? More specifically, the following research questions will be answered:

1. To what extent does a fine-tuned pretrained language model (BERT) outperform other deep learning architectures in classification performance of segments in preservice teachers’ written reflections according to the elements of a reflection-supporting model?
2. To what extent is the size of the training data related to the classification performance of the deep learning language models?
3. What features can best explain the classifiers’ decisions?

Method

Preservice Physics Teachers’ Written Reflections

To instruct preservice teachers to write a reflection on their teaching experiences, a reflection-supporting model was devised based on an existing reflection-supporting model (Korthagen & Kessels, 1999). Reflection-supporting models that serve as scaffolds for written reflections commonly differentiate between several functional zones (Swales, 1990) of writing that should be addressed to elicit appropriate reflection-related thinking processes (Aeppli & Lötscher, 2016; Bain et al., 1999; Korthagen & Kessels, 1999; Poldner et al., 2014; Ullmann, 2019). According to many models, reflection-related thinking processes start with a recapitulation and description of the teaching situation. This allows preservice teachers to establish evidence on a problem that they noticed (Hatton & Smith, 1995; van Es & Sherin, 2002). Another important category is the evaluation and analysis of the teaching situation (Korthagen & Kessels, 1999), in which preservice teachers judge the students’ actions and their own actions. Finally, preservice teachers are instructed to devise alternatives for their actions and conceive consequences for their own professional development. Alternatives and consequences are important aspects of a critical reflection (Hatton & Smith, 1995; Korthagen & Kessels, 1999) because, in contrast to mere analysis, reflection aims at transforming the individual teacher’s experience in a way that helps her or his future professional development.

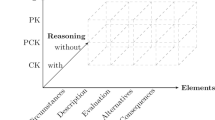

In the present study, we used a reflection-supporting model that was developed in prior studies (Nowak et al., 2019). In this model, the essentials of reflective thinking outlined above are captured in elements that, based on experiential learning theory, are constitutive of reflective writing. The model builds on the ALACT model by Korthagen and Kessels (1999). In brief, the ALACT model outlines a cyclical process of reflection that entails performing the teaching, looking back on the experience, becoming aware of its essential aspects, creating alternative methods of action, and trying them out. Nowak et al. (2019) adapted this model for written reflections in a school placement within university-based teacher education (see also Wulff et al., 2020). The model by Nowak et al. (2019) defines a reflection as including five elements: circumstances of the teaching situation, description of the teaching situation, evaluation of the students’ and the teacher’s actions, devising of alternatives, and derivation of consequences.

This model was employed in the present study. Preservice teachers were instructed to write a reflection either on a lesson they had taught themselves in their final teaching placement or on a lesson observed in a video vignette. The teachers were instructed to 1) describe the circumstances of the lesson, followed by 2) a detailed description of the events to be reflected on. They were then to 3) evaluate the described event(s) and 4) anticipate alternative actions. Finally, they were instructed to 5) outline personal consequences for their professional development based on their evaluations. This instruction allowed us to give teachers a scaffold for reflection that may help to compensate for the often-criticized lack of clear expectations for what reflection actually entails (Buckingham Shum et al., 2017). The simplicity of the model makes it accessible to teachers at various levels of expertise: all are given a framework to structure their writing.

All written reflections were collected from preservice physics teachers at two mid-sized German universities. Restricting the study to physics teachers was deemed useful because teaching expertise is considered domain-specific (Berliner, 2001). Physics can be viewed as a knowledge-rich domain (Schoenfeld, 2014). Current classroom instruction in physics tends to be dominated by teacher-centered pedagogy rather than more constructivist, student-centered learning approaches (Fischer et al., 2010). Developing attention to students’ ideas and noticing physics-specific learning problems through experiential learning, as facilitated by reflection, can be expected to help physics teachers implement more student-centered instruction.

Written self-reflections were collected over the course of three years. Overall, N = 270 reflections were authored by 92 preservice physics teachers in the final teaching placement of their university-based teacher education program. The preservice teachers were new to the reflection-supporting model; consequently, the instruction was explicit about what was expected of them (see below). The teaching placement lasted approximately 15 weeks. Preservice physics teachers who reflected on their own lessons wrote on average 8.9, 4.5, and 3.8 reflections in the three consecutive teaching placements in which written reflections were collected. We noticed early in the study that 8.9 written reflections were too many for a teaching placement lasting only 15 weeks. Consequently, preservice physics teachers in the following teaching placements were instructed to write only about six reflections. The overall number of reflections that a teacher had to write was possibly one reason why some reflections were very short. However, we did not expect the overall number of words to pose problems for the classification task at hand. Even though multiple reflections by the same preservice teachers raise issues of intra-individual dependence, we considered the increased size of the training data more relevant given the purpose of our study. Teachers participated in one of two arrangements: in the first, they wrote reflections on their own teaching; in the second, they reflected on others’ teaching as observed in a video vignette. Preservice teachers in the video vignette arrangement received written instruction on the reflection-supporting model, whereas preservice teachers in the school placement received verbal and written instruction in the accompanying seminar.

In the video vignette arrangement, all preservice physics teachers reflected only once, on the same 16-minute video clip. The average length of all written reflections was 667 words (SD = 433), with a minimum of 72 words and a maximum of 2,808 words. The vocabulary consisted of 12,518 unique words.

The goal of this study was to train and evaluate a model that classifies segments of preservice physics teachers’ written reflections according to the elements of the reflection-supporting model, namely 1) circumstances, 2) description, 3) evaluation, 4) alternatives, and 5) consequences. A segment, the elementary coding unit, was defined as a passage of text that expressed a single idea (e.g., the description of an action). In reflective writing analytics, smaller coding units such as sentences were found to be more suitable for classifying written reflections than larger units such as whole texts (Ullmann, 2019). Segments were on average 1.6 sentences long (SD = 1.2), with a minimum of 1 and a maximum of 18 sentences. A sample coded text looked as follows:

[The purpose of the experiment was to observe different forces and determine their points of contact.][Code: Circumstances] ...

[The experimental situation began with unfavourable conditions, as due to a shift of the lesson to another time slot, the experiments had to be carried out in a room that was not a Physics room. However, since they were mechanics experiments, it was still possible to carry them out. However, the room in which the lesson took place is much smaller than the room that was originally planned. Therefore, the experimental situation was somewhat cramped.][Code: Circumstances] ...

[After the assignment was announced, some students remained seated because not all students could experiment at the same time.][Code: Description] ...

[One of the experiments involved a trolley that was connected to a weight by a thread and a pulley. This experiment was partially modified by the students, contrary to the instructions. However, as these experiments were still in line with the aim of the experiment, no intervention was made.][Code: Description] ...

[The experiment was well received by the students.][Code: Description] ...

[The students worked very purposefully, this went better than expected.][Code: Evaluation] ...

[Alternatively, the students’ learning time could have been used more effectively by carrying out the experiments as demo experiments. However, this would have eliminated one of the learning objectives of the lesson, which was in the area of knowledge acquisition.][Code: Alternatives] ...

[As a consequence for me as a teacher, it is noticeable that the planning must be more detailed. Furthermore, reserves must be created in case an experiment has to be cancelled due to a room change or something similar.][Code: Consequences] ...
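To show how coded texts like the sample above can become (segment, label) training pairs for a classifier, here is a hypothetical parsing helper. It assumes the bracket notation `[segment][Code: Label]` used in the sample. This is an illustrative display format, not necessarily how the annotations were stored. The helper also assumes that segment text contains no square brackets, which holds for the sample but not in general.

```python
import re

# Matches "[segment text][Code: Label]" as displayed in the sample above.
SEGMENT_PATTERN = re.compile(r"\[([^\[\]]+)\]\[Code:\s*([A-Za-z]+)\]")

# The five elements of the reflection-supporting model.
ELEMENTS = ("Circumstances", "Description", "Evaluation", "Alternatives", "Consequences")


def parse_coded_text(text: str) -> list[tuple[str, str]]:
    """Extract (segment, element) pairs from a coded reflection (hypothetical helper)."""
    pairs = []
    for segment, label in SEGMENT_PATTERN.findall(text):
        if label not in ELEMENTS:
            raise ValueError(f"unknown element: {label}")
        pairs.append((segment.strip(), label))
    return pairs


sample = (
    "[The experiment was well received by the students.][Code: Description] ... "
    "[The students worked very purposefully, this went better than expected.][Code: Evaluation] ..."
)
for segment, label in parse_coded_text(sample):
    print(label, "<-", segment)
```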

A rater was trained to manually label the written reflections according to the elements of the reflection-supporting model (elements 1 to 5). The rater first segmented the written reflections. The minimal segmentation unit was mainly the sentence, with some exceptions, e.g., when sentences were not meaningful on their own or when they were strongly connected. In prior studies, we found that loosening the sentence-boundary constraint yielded noticeably better interrater agreement (Wulff et al., 2020). The rater then labeled each segment with one of the five elements according to the definitions outlined above. Interrater agreement was ascertained by having an independent second rater recode a subset of the texts (N = 8 written reflections) using the first rater’s segments. Given the comparatively simple classification problem and the promising interrater agreement for this classification problem in prior studies (Nowak et al., 2018), determining interrater agreement on such a small subset was considered sufficient in this context. Cohen’s kappa values were above .73 for all elements (Wulff et al., 2020). Given this substantial agreement, the first rater labeled the remaining texts. In the final labeled data, the elements were distributed as follows: circumstances (26%), description (36%), evaluation (23%), alternatives (8%), and consequences (7%).

Deep Learning Models

The goal of this study was to use a transformer-based pretrained language model (BERT) to classify segments in preservice physics teachers’ written reflections and to compare its performance with that of other deep learning architectures. Before describing the pretrained language model in detail, we elaborate on the other deep learning architectures that were considered and outline some of their potential strengths and weaknesses with regard to language analytics. These alternative architectures were feed-forward neural networks (FFNN) and long short-term memory networks (LSTM), which are widely employed in NLP research (Goldberg, 2017) and may have particular advantages in classifying segments of written reflections.

FFNNs are among the simpler deep learning architectures. In their simplest form, they consist of an input layer, a hidden layer, and an output layer, which are fully connected. The hidden layer makes FFNNs more expressive than models such as logistic regression. In fact, FFNNs with a single hidden layer and non-linear activation functions can approximate any continuous function to arbitrary precision, given enough hidden units (i.e., they are universal function approximators) (Goodfellow et al., 2016; Jurafsky & Martin, 2014). One shortcoming of simple FFNNs is that information flows only from one layer to the next higher layer, with no information flowing back or sideways within a layer. We expect FFNNs to be capable of classifying segments in the written reflections because they are similar to shallower ML algorithms (Wulff et al., 2020). However, they should not be expected to be the most performant deep learning architecture because of their strong assumptions about the information flow. Furthermore, FFNNs do not account for the ordering of the input. Important hyperparameters of FFNNs include the size of the hidden layer; in this study, we varied only its width, not the number of hidden layers (depth). In addition, inputs to FFNNs are often represented through embedding vectors, whose dimensionality can be varied.
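The data flow of such a network can be sketched in plain Python. The sketch embeds the tokens of a segment, pools them, passes the result through one fully connected ReLU hidden layer, and outputs a probability for each of the five elements. Mean pooling of the embeddings is one common way to feed variable-length segments into an FFNN. It is assumed here for illustration and makes the order-insensitivity described above explicit. The weights are random and untrained, and the sizes (`embed_dim`, `hidden_dim`) are arbitrary placeholders, not the configuration used in this study.

```python
import math
import random


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


class TinyFFNN:
    """Embedding lookup -> mean pooling -> one ReLU hidden layer -> softmax.

    Weights are random and untrained; the class only illustrates the data flow.
    """

    def __init__(self, vocab, embed_dim=8, hidden_dim=16, n_classes=5, seed=0):
        rng = random.Random(seed)
        self.index = {word: i for i, word in enumerate(vocab)}
        self.emb = [[rng.gauss(0, 0.5) for _ in range(embed_dim)] for _ in vocab]
        self.w1 = [[rng.gauss(0, 0.5) for _ in range(embed_dim)] for _ in range(hidden_dim)]
        self.b1 = [0.0] * hidden_dim
        self.w2 = [[rng.gauss(0, 0.5) for _ in range(hidden_dim)] for _ in range(n_classes)]
        self.b2 = [0.0] * n_classes

    def forward(self, tokens):
        vectors = [self.emb[self.index[t]] for t in tokens if t in self.index]
        if not vectors:
            raise ValueError("segment contains no known tokens")
        # Mean pooling: the segment vector is the same for any word order.
        x = [sum(col) / len(vectors) for col in zip(*vectors)]
        # Fully connected hidden layer with ReLU non-linearity.
        h = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        # Output layer: one score per element of the reflection-supporting model.
        logits = [sum(w * hi for w, hi in zip(row, h)) + b
                  for row, b in zip(self.w2, self.b2)]
        return softmax(logits)


model = TinyFFNN(["the", "students", "worked", "purposefully"])
print(model.forward(["the", "students", "worked", "purposefully"]))
```

Reversing the token order yields the same probabilities, up to floating-point rounding. This is the limitation that motivates sequence models such as LSTMs.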

LSTMs overcome the assumption that input order is irrelevant. LSTMs are based on recurrent neural networks (RNNs). RNNs excel at capturing patterns in sequential inputs because they allow the output for a previous input to flow into the next prediction (Goldberg, 2017). RNNs can provide a fixed-size representation of a sequence of any length, which can be beneficial in tasks where, say, word order matters (Goldberg, 2017). RNNs can make predictions for the next word that depend on all previous input words. As such, they can be used to encode input into a meaningful representation. A known shortcoming of RNNs is that the influence of distant inputs fades, which makes long-distance relations hard to learn (Jurafsky & Martin, 2014). Therefore, RNNs are often employed in more complex, gated architectures such as LSTMs, which better encode statistical regularities and long-distance relationships in the input sequence (Goldberg, 2017). In tasks such as segment classification, the resulting representations from the LSTM are further fed into an FFNN that maps the representation to a label or category (Goldberg, 2017; Jurafsky & Martin, 2014). We expect LSTMs to perform better at classifying segments of the written reflections because we conjecture that word order in the input sequence is an important additional feature compared to mere word occurrence. Hyperparameters for tuning the LSTM include the hidden dimensionality and the input embedding dimensionality, among others. We used a bidirectional LSTM model, which encodes the input sequence in both reading directions.
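The gating mechanism can be illustrated with a deliberately minimal, single-unit LSTM over scalar inputs. A forget gate decides how much of the old cell state to keep, an input gate decides how much new content to add, and an output gate decides how much of the cell state to expose as the hidden state. For brevity, all four gates share the same weights (`w`, `u`, `b`; fixed, hypothetical values). A real LSTM learns separate weight matrices per gate and uses vector-valued states, and a real bidirectional LSTM uses separate parameters for each direction.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_final_state(xs, w=1.0, u=0.5, b=0.0):
    """Run a single-unit LSTM over scalar inputs and return the final hidden state.

    All gates share the weights w (input), u (recurrent) and b (bias) to keep
    the sketch short; a real LSTM learns separate parameters per gate.
    """
    h, c = 0.0, 0.0
    for x in xs:
        z = w * x + u * h + b
        i = f = o = sigmoid(z)  # input, forget and output gates
        g = math.tanh(z)        # candidate cell content
        c = f * c + i * g       # keep part of the old memory, add new content
        h = o * math.tanh(c)    # expose a gated view of the cell state
    return h


def bilstm_encoding(xs):
    """Concatenate the final states of a forward and a backward pass."""
    return [lstm_final_state(xs), lstm_final_state(list(reversed(xs)))]


# Same inputs, different order: the LSTM produces different final states.
print(lstm_final_state([1.0, 0.0, 0.0]), lstm_final_state([0.0, 0.0, 1.0]))
print(bilstm_encoding([1.0, 0.0, 0.0]))
```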

Transformer-based Pretrained Language Model: BERT

More recently, transformer models have been introduced to natural language processing and were found to outperform LSTMs (Devlin et al., 2018; Vaswani et al., 2017). At the heart of transformer models is a so-called attention mechanism, which computes a query, a key, and a value vector for each input token. Transformers have the advantage over RNN architectures that they can better attend to distant parts of a sentence, without a locality bias (Taher Pilehvar & Camacho-Collados, 2020). Researchers have demonstrated that natural language can be characterized by long-range dependencies (Ebeling & Neiman, 1995; Zanette, 2014), which makes transformer architectures promising models for language-related tasks. A more technical advantage is that the computations are parallelizable (Taher Pilehvar & Camacho-Collados, 2020). Hence, even on a personal computer, training can be accelerated by offloading computations to the graphics processing unit (GPU), which is built for such parallel computations. A powerful implementation of a transformer-based language model is BERT (Devlin et al., 2018). BERT outperformed previous models in standardized NLP tasks such as question answering, textual entailment, natural language inference, and document classification (Conneau et al., 2019; Devlin et al., 2018).
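The attention mechanism can be illustrated with a single-head, scaled dot-product attention in plain Python. In a real transformer, the query, key, and value vectors are produced by learned linear projections of the token embeddings; here they are given directly as hypothetical values. Note that the attention weight between two tokens depends on their vectors, not on their distance, and that each query row is computed independently, which is what makes the computation parallelizable.

```python
import math

def softmax(v):
    m = max(v)
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V, one row per token."""
    d_k = len(K[0])
    outputs, weights = [], []
    for q in Q:  # each query row is independent of the others
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)  # how strongly this token attends to every token, near or far
        weights.append(w)
        outputs.append([sum(wj * v[d] for wj, v in zip(w, V)) for d in range(len(V[0]))])
    return outputs, weights

# Hypothetical query, key, and value vectors for a 3-token input
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
out, w = attention(Q, K, V)
for row in w:
    print([round(x, 3) for x in row])
```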

Besides being a transformer architecture, BERT also makes use of transfer learning. The idea of transfer learning is to learn a general language representation from large language datasets. In the pretraining phase, BERT uses widely available, unlabeled data from the Internet (Devlin et al., 2018). The learning protocol behind BERT is based on masking randomly chosen input tokens and trying to predict them, similar to the cloze task (Taylor, 1953). Hence, the model can use context from both sides of a masked token to predict it. BERT is also trained with a next-sentence-prediction task, which enables the model to encode relationships between sentences. For this task, each input sequence is prefixed with a special token (“[CLS]”) that is added to the vocabulary and whose final representation encodes the input sequence as a whole. Next-sentence prediction is mainly beneficial for tasks such as natural language inference and question answering (Taher Pilehvar & Camacho-Collados, 2020). Overall, pretraining BERT involves minimizing a loss function that compares the model’s predictions with the correct masked words and the correct next sentences. Conditioned on the training data, embeddings are thus learned that can be utilized for further tasks such as segment classification (Devlin et al., 2018). The architecture of BERT base (as opposed to BERT large) comprises 12 encoder layers with a hidden size of 768 units (Devlin et al., 2018; Vaswani et al., 2017). These architectural hyperparameters are fixed once a researcher decides to use a particular pretrained BERT model.
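The masking step of pretraining can be sketched as follows. The 80/10/10 replacement rule (replace with “[MASK]”, replace with a random token, or keep unchanged) follows the description in Devlin et al. (2018); selecting each token independently with probability 0.15, and treating whole words as tokens, are simplifications assumed here. The German example segment and the small vocabulary are hypothetical, and the masking probability is raised to 0.5 in the usage example only so that masking is visible.

```python
import random

SPECIAL = {"[CLS]", "[SEP]"}

def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """Return (inputs, labels) for masked language modeling.

    Each non-special token is selected with probability mask_prob; a selected
    token is replaced by [MASK] 80% of the time, by a random vocabulary token
    10% of the time, and left unchanged 10% of the time. labels holds the
    original token at selected positions and None elsewhere (no prediction)."""
    inputs, labels = list(tokens), [None] * len(tokens)
    for pos, tok in enumerate(tokens):
        if tok in SPECIAL or rng.random() >= mask_prob:
            continue
        labels[pos] = tok  # the model must recover this token from context on both sides
        r = rng.random()
        if r < 0.8:
            inputs[pos] = "[MASK]"
        elif r < 0.9:
            inputs[pos] = rng.choice(vocab)
    return inputs, labels

tokens = "[CLS] die schüler haben das experiment selbst durchgeführt [SEP]".split()
vocab = ["die", "schüler", "haben", "das", "experiment", "selbst", "durchgeführt", "stunde"]
inputs, labels = mask_tokens(tokens, vocab, random.Random(3), mask_prob=0.5)
print(inputs)
print(labels)
```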

After pretraining, the BERT model can be used in a fine-tuning phase (see Fig. 1). In the fine-tuning phase, the pretrained model weights are adjusted to the given task. Because the model starts from pretrained weights rather than from scratch, less adjustment is necessary. In addition, the embedding that the BERT model produces for a segment is fed into a simple classification layer that is added on top of the pretrained model (Devlin et al., 2018; Gnehm & Clematide, 2020).
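A minimal sketch of such a classification layer, assuming that the segment embedding is BERT’s final “[CLS]” vector: a linear map to one score per element, a softmax, and an argmax. The element names are those of the reflection-supporting model as referred to in this paper; the 4-dimensional vector and the weights are hypothetical (BERT base produces 768 dimensions). During fine-tuning, `W`, `b`, and the BERT weights would all be updated together.

```python
import math

# The five elements as named in this paper; the order is an arbitrary choice
ELEMENTS = ["circumstances", "description", "evaluation", "alternatives", "consequences"]

def classify_cls(h_cls, W, b):
    """Linear layer on the [CLS] vector (logits = W @ h + b), then softmax and argmax."""
    logits = [sum(w * x for w, x in zip(row, h_cls)) + bi for row, bi in zip(W, b)]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)
    return ELEMENTS[best], probs

# Hypothetical 4-dimensional [CLS] vector and weights
h_cls = [0.5, -1.0, 2.0, 0.0]
W = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1], [-1, 0, 0, 0]]
b = [0.0] * 5
label, probs = classify_cls(h_cls, W, b)
print(label)  # evaluation
```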

In the present study, we adopted a pretrained German language model that was trained by deepset AI (Footnote 2). These model weights showed better performance than the multilingual BERT models on shared language tasks (Ostendorff et al., 2019). The model has a vocabulary of 30,000 tokens, which is used to represent all words that occur in the new learning context (occasionally, unknown tokens are mapped to a special token, “[UNK]”). This vocabulary size yields good performance on tasks while keeping memory consumption and computational resources feasible for a personal computer (Ostendorff et al., 2019).
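How a fixed vocabulary represents arbitrary words can be illustrated with greedy longest-match-first subword splitting, the procedure commonly used for BERT’s WordPiece tokenization: a word is cut into the longest vocabulary pieces available, continuation pieces carry a “##” prefix, and a word that cannot be covered at all becomes “[UNK]”. The toy vocabulary is hypothetical, and a real tokenizer additionally performs pre-tokenization (e.g., splitting on whitespace and punctuation).

```python
def wordpiece(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first subword split; continuation pieces carry a '##' prefix."""
    pieces, start = [], 0
    while start < len(word):
        end, piece = len(word), None
        while end > start:  # try the longest remaining substring first
            cand = word[start:end] if start == 0 else "##" + word[start:end]
            if cand in vocab:
                piece = cand
                break
            end -= 1
        if piece is None:
            return [unk]  # no piece matches: the whole word is unknown
        pieces.append(piece)
        start = end
    return pieces

# Hypothetical toy vocabulary
VOCAB = {"ex", "##periment", "lehr", "##er", "##in", "klasse"}
for w in ["experiment", "lehrerin", "klasse", "xyz"]:
    print(w, wordpiece(w, VOCAB))
```

With a vocabulary that lacks a word as a whole, the word is split into pieces such as “ex” and “##periment”, which is the kind of split discussed for Table 3 and in the limitations.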

Cross-validation and Technical Implementation

In this study, we used two cross-validation strategies: (1) simple hold-out cross-validation to assess the predictive performance of the trained models, and (2) iterated k-fold cross-validation to account for the small sample and better assess generalizability. (1) In the simple hold-out cross-validation, 60% of the data (randomly chosen) was used as training data, 20% as validation data, and the remaining 20% as held-out test data. The same splits were used for all trained models. (2) Given the comparably small size of our datasets for deep learning applications, we additionally used iterated k-fold cross-validation, because it enables evaluating deep learning models on comparably small datasets as precisely as possible (Chollet, 2018). Iterated k-fold cross-validation repeatedly shuffles the data and splits it into k folds, each of which serves once as validation data while the remaining folds are used for training. We therefore first concatenated our original training and validation data (but not the held-out test data) and then performed iterated k-fold cross-validation on this combined dataset. In our case, the combined dataset was split into ten folds, and model performance was assessed for each fold. This procedure was iterated ten times, and the results were averaged to obtain a final score. We performed this procedure with the most performant models, i.e., after tuning the hyperparameters.
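Both strategies can be sketched in a few lines of plain Python. The 60/20/20 proportions and k = 10 with ten iterations follow the description above; the 100 hypothetical items stand in for segments, and assigning every k-th shuffled item to a fold is one of several equivalent ways to form folds.

```python
import random

def holdout_split(items, rng, train=0.6, val=0.2):
    """Shuffle once and cut into training (60%), validation (20%), and test (20%) sets."""
    items = list(items)
    rng.shuffle(items)
    n_train, n_val = round(train * len(items)), round(val * len(items))
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]

def iterated_kfold(items, k, iterations, rng):
    """Yield (train, validation) pairs: k folds per iteration, reshuffled every iteration."""
    items = list(items)
    for _ in range(iterations):
        rng.shuffle(items)
        for fold in range(k):
            val = items[fold::k]  # every k-th item forms this fold
            train = [x for i, x in enumerate(items) if i % k != fold]
            yield train, val

rng = random.Random(42)
train, val, test = holdout_split(range(100), rng)  # hypothetical 100 segments
pool = train + val  # the held-out test set never enters cross-validation
splits = list(iterated_kfold(pool, k=10, iterations=10, rng=rng))
print(len(train), len(val), len(test), len(splits))  # 60 20 20 100
```

Each of the 100 (train, validation) pairs would be used to fit and score one model; the final score is the mean over all pairs, and the spread across pairs gives the reported standard deviations.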

All experiments (especially the time measurements) were performed on an Intel Core i9-10900K CPU with 3.70 GHz and 32 GB RAM. Measurements on the GPU were performed on a GeForce RTX 3080 with 10 GB of memory. We used Python 3.8 (Python Software Foundation, 2020). To train and evaluate the deep learning models, we used the Python library PyTorch (Paszke et al., 2019). In particular, the Adam optimizer was used in combination with binary cross-entropy loss, both of which are implemented in PyTorch. The pretrained German BERT model was accessed through the huggingface library (Wolf et al., 2020).

Comparing BERT with other Deep Learning Architectures (RQ1)

In order to compare BERT with the other deep learning architectures, we fit the considered models (FFNN, LSTM, and BERT) to the training data and evaluated their performance on the validation data. Furthermore, we deleted 11 of BERT’s 12 encoder layers and fitted a reduced BERT model (called BERT (1)). This indicates how important the number of encoder layers in the BERT architecture is for classification performance in this task. The task was to classify the segments of the written reflections according to the five elements of the reflection-supporting model. After the most performant model had been identified through hyperparameter optimization, it was evaluated on the held-out test data in order to assess generalizability.

Performance evaluation of the models for the classification task includes precision (p), recall (r), and the F1 score (henceforth abbreviated as p/r/F1). These metrics capture whether a classifier correctly detects the labels in the human-labeled data: precision is the proportion of a classifier’s predictions for an element that are correct, and recall is the proportion of human labels for an element that the classifier detects. This is why both focus on detecting true positives (Jurafsky & Martin, 2014); the F1 score is their harmonic mean. For a multiclass classifier as in the present case, p/r/F1 are reported for each element as well as averaged. Averages are reported as micro (i.e., p/r/F1 calculated globally from the pooled counts over all elements), macro (i.e., p/r/F1 averaged over elements, unweighted by the support of each element), and weighted (i.e., p/r/F1 averaged over elements, weighted by the support of each element). We also calculated Cohen’s kappa in order to compare the classification performance with a widely used metric in educational research.
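The three averaging schemes and Cohen’s kappa can be computed from label lists as sketched below. The six hypothetical segments are made up for illustration; the same averaging options are offered by scikit-learn’s `precision_recall_fscore_support` (`average="micro"`, `"macro"`, `"weighted"`). With single-label multiclass data, micro-averaged F1 equals accuracy.

```python
from collections import Counter

def prf(tp, fp, fn):
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1

def report(y_true, y_pred):
    """Per-element p/r/F1 plus micro, macro, and weighted averages."""
    labels = sorted(set(y_true) | set(y_pred))
    per_class, support = {}, Counter(y_true)
    tot_tp = tot_fp = tot_fn = 0
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        per_class[c] = prf(tp, fp, fn)
        tot_tp, tot_fp, tot_fn = tot_tp + tp, tot_fp + fp, tot_fn + fn
    n = len(y_true)
    micro = prf(tot_tp, tot_fp, tot_fn)  # pooled counts over all elements
    macro = tuple(sum(m[i] for m in per_class.values()) / len(labels) for i in range(3))
    weighted = tuple(sum(per_class[c][i] * support[c] for c in labels) / n for i in range(3))
    return per_class, micro, macro, weighted

def cohens_kappa(y_true, y_pred):
    """Agreement corrected for the agreement expected by chance."""
    n = len(y_true)
    p_o = sum(t == p for t, p in zip(y_true, y_pred)) / n
    ct, cp = Counter(y_true), Counter(y_pred)
    p_e = sum(ct[c] * cp[c] for c in ct) / n ** 2
    return (p_o - p_e) / (1 - p_e)

# Hypothetical human labels and predictions for six segments
y_true = ["description", "description", "description", "evaluation", "evaluation", "alternatives"]
y_pred = ["description", "description", "evaluation", "evaluation", "alternatives", "alternatives"]
per_class, micro, macro, weighted = report(y_true, y_pred)
print(round(micro[2], 3), round(macro[2], 3), round(weighted[2], 3))
print(round(cohens_kappa(y_true, y_pred), 3))
```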

To find the most performant models, we conducted a grid search through a hyperparameter space. For all models, we varied the number of epochs (from 3 to 200), batch size (from 3 to 50), learning rate (from 10⁻³ to 10⁻⁵), and hidden size (from 100 to 10,000). Appropriate step sizes were chosen for all hyperparameters. The number of epochs indicates how many times the procedure of adjusting the model weights to the training data is performed, i.e., how many passes are made through the training data. Generally, more than one pass is needed. However, after too many epochs, the model adapts too closely to the training data (overfitting), and classification performance on unseen data suffers. Batch size indicates the number of training samples that are processed together before the model weights are updated. Smaller batch sizes allow more updates and faster convergence, whereas larger batch sizes provide better estimates of the corpus-wide gradients (Goldberg, 2017). The learning rate determines the step size of the optimization algorithm and needs to be tuned: values that are too large can prevent the model weights from converging, and values that are too small can make convergence very slow. Finally, the size of the hidden layer relates to representational capacity; however, larger values are not necessarily better. Since BERT uses a specific vocabulary of 30,000 tokens, FFNNs and LSTMs were fit both with the BERT vocabulary (named FFNN and LSTM) and with the vocabulary of the training data (named FFNN∗ and LSTM∗). The reasoning was that the BERT vocabulary might be a generally advantageous representation of language data.
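A grid search simply evaluates every combination of the candidate values and keeps the best one. In the sketch below, the grid values lie inside the reported ranges, but the actual step sizes are not reported, so the grid is hypothetical; `toy_evaluate` is a made-up stand-in for “train a model with this configuration and return its weighted F1 on the validation data”.

```python
import itertools
import math

def grid_search(grid, evaluate):
    """Evaluate every combination in the grid; return the best configuration and its score."""
    keys = list(grid)
    best_cfg, best_score = None, float("-inf")
    for values in itertools.product(*(grid[k] for k in keys)):
        cfg = dict(zip(keys, values))
        score = evaluate(cfg)  # e.g., weighted F1 on the validation data
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score

# Hypothetical grid inside the reported ranges
grid = {
    "epochs": [3, 10, 50, 200],
    "batch_size": [3, 16, 50],
    "learning_rate": [1e-3, 1e-4, 1e-5],
    "hidden_size": [100, 1000, 10000],
}

def toy_evaluate(cfg):
    """Hypothetical stand-in for training and validating a model."""
    return -abs(math.log10(cfg["learning_rate"]) + 4) - abs(cfg["batch_size"] - 16) / 100

best, score = grid_search(grid, toy_evaluate)
print(best)
```

Even this small grid has 4 × 3 × 3 × 3 = 108 configurations, and each would require a full training run, which is why the number of candidate values per hyperparameter is usually kept small.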

Dependence on Size of Training Data (RQ2)

A rule of thumb in image classification states that approximately 1,000 instances of a class (e.g., cat or dog) are needed to achieve acceptable classification performance (Mitchell, 2020). In the context of science education assessment, Ha et al. (2011), who used software for scoring students’ evolution explanations (Mayfield and Rose, 2010), found that approximately 500 samples were sufficient to classify responses under certain circumstances. However, we are not aware of analyses of how classification performance for written reflections depends on the size of the training data. In particular, it is not clear if and when classification performance for deep learning architectures will converge, given the comparably small datasets that are commonly used in discipline-based educational research. Hence, we performed sensitivity analyses of classification performance for the present data. To do so, random samples of a predefined proportion, ranging from 0.05 to 1.00, were drawn from the training data. The analysis was performed based on the most performant models from RQ1. Classification performance was assessed on the test dataset.
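Drawing the training subsets can be sketched as follows. The proportions 0.05 to 1.00 follow the text; the step of 0.05, the 200 hypothetical training items, and the fixed seed are assumptions for illustration. One draw is made per proportion, matching the single-draw design described in the results.

```python
import random

def subsample(train, proportions, seed=0):
    """One random draw (without replacement) from the training data per proportion."""
    rng = random.Random(seed)
    return {p: rng.sample(train, max(1, round(p * len(train)))) for p in proportions}

proportions = [round(0.05 * i, 2) for i in range(1, 21)]  # 0.05, 0.10, ..., 1.00
train = list(range(200))  # hypothetical stand-in for 200 training segments
subsets = subsample(train, proportions)
for p in (0.05, 0.25, 1.0):
    print(p, len(subsets[p]))
```

Each subset would be used to train one model, which is then scored on the same test set, so that differences in performance reflect only the amount of training data.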

Interpreting BERT’s Classification Decisions (RQ3)

Interpretability of deep learning models is important in research contexts in order to advance our understanding of the functioning of written reflections (Rose, 2017). Sundararajan et al. (2017) proposed integrated gradients to attribute importance to input tokens for classification tasks in a deep learning architecture. Integrated gradients assign each input feature an attribution that quantifies its contribution to the prediction. They are calculated relative to a neutral baseline input, such as a blank image in image recognition or a “meaningless” word in NLP applications, by accumulating the gradients of the model output along a straight path from the baseline to the actual input. Gradients can be regarded as the deep-network analog of the coefficients of a linear model, so that the product of gradient and feature value is analogous to the product of coefficient and feature value.
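For a feature i, integrated gradients are defined as IG_i(x) = (x_i − x′_i) · ∫₀¹ ∂F(x′ + α(x − x′))/∂x_i dα, where x′ is the baseline. The sketch below approximates the integral with a right Riemann sum over `steps` points (50, as in this study) for a hypothetical toy model whose gradient is known analytically. Libraries such as Captum compute the gradients with automatic differentiation and offer several approximation methods, so this is an illustration of the technique rather than of Captum’s internals. The attributions should approximately sum to F(x) − F(x′) (the completeness property).

```python
import math

# Hypothetical toy model: F(x) = sigmoid(w . x + bias), with an analytic gradient
w, bias = [0.8, -0.4, 1.5], 0.1

def F(x):
    z = sum(wi * xi for wi, xi in zip(w, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def grad_F(x):
    s = F(x)
    return [s * (1.0 - s) * wi for wi in w]  # dF/dx_i = sigmoid'(z) * w_i

def integrated_gradients(x, baseline, steps=50):
    """Right Riemann-sum approximation of
    IG_i(x) = (x_i - x'_i) * integral_0^1 dF/dx_i(x' + a * (x - x')) da."""
    total = [0.0] * len(x)
    for k in range(1, steps + 1):
        a = k / steps  # position along the straight path from baseline to input
        point = [bl + a * (xi - bl) for xi, bl in zip(x, baseline)]
        total = [t + g for t, g in zip(total, grad_F(point))]
    return [(xi - bl) * t / steps for xi, bl, t in zip(x, baseline, total)]

x, baseline = [1.0, 2.0, -0.5], [0.0, 0.0, 0.0]
attr = integrated_gradients(x, baseline, steps=50)
# Completeness: the attributions approximately sum to F(x) - F(baseline)
print([round(a, 3) for a in attr])
print(round(sum(attr), 3), round(F(x) - F(baseline), 3))
```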

For language models, the input tokens that are defined by the vocabulary (more precisely, the token embedding vectors) are assigned attributions through integrated gradients. The attribution values allow researchers to estimate the importance of certain tokens for a classification category. The baseline token is chosen to be a neutral token that ideally yields a neutral prediction score. Important hyperparameters include the length of the input vector, which affects memory consumption. In the present study, the longest segment was unduly long, so the input length was set to a more reasonable value (the 98th percentile of sentence length), which included almost all segments and kept memory consumption reasonable for a personal computer. Another hyperparameter that largely affects memory allocation is the number of steps used to approximate the integrated gradients. We chose 50 steps, which seemed large enough to prevent inaccurate approximations yet small enough to be feasible on a personal computer. These calculations were performed with the Python library Captum, which implements integrated gradients (Sundararajan et al., 2017).
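Capping the input length at a percentile can be sketched as below. The study does not state which percentile definition was used, so the nearest-rank definition is an assumption, as are the hypothetical segment lengths and the padding id. The example shows how a single unusually long segment would otherwise dictate the input size for all segments.

```python
import math

def max_len_from_percentile(lengths, q=98):
    """Nearest-rank percentile: the smallest length that covers at least q% of segments."""
    ordered = sorted(lengths)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]

def pad_or_truncate(token_ids, max_len, pad_id=0):
    """Cut long segments and pad short ones so that every input has length max_len."""
    return token_ids[:max_len] + [pad_id] * max(0, max_len - len(token_ids))

# Hypothetical segment lengths: 49 segments of 10 tokens and one outlier of 500 tokens
lengths = [10] * 49 + [500]
max_len = max_len_from_percentile(lengths)  # the outlier no longer dictates the input size
print(max_len)  # 10
print(pad_or_truncate([5, 6, 7], max_len))
```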

Results

Contrasting BERT with other Deep Learning Language Models to Classify Segments in the Written Reflections (RQ1)

Our first RQ was: “To what extent does a fine-tuned pretrained language model (BERT) outperform other deep learning models in classification of segments in preservice teachers’ written reflections according to the elements of a reflection-supporting model?” To answer this RQ, we analyzed to what extent fine-tuned BERT outperformed FFNNs and LSTMs. Table 1 depicts the classification performance of the best models that resulted from the grid search. FFNNs performed worst, with weighted F1 averages of .62 and .64 (FFNN and FFNN∗, respectively). FFNNs were followed by LSTMs, with a weighted F1 average of .72 for both LSTM and LSTM∗. Furthermore, BERT (1) performed .05 points lower than BERT in weighted F1 average. Overall, BERT was the most performant model for classifying segments in the written reflections according to the reflection-supporting model, with a weighted F1 average of .82. The Cohen’s kappa values for the classification performance were: FFNN: 0.48, FFNN∗: 0.48, LSTM: 0.56, LSTM∗: 0.58, BERT (1): 0.66, BERT: 0.75.

The most performant models were then evaluated through iterated k-fold cross-validation to obtain more robust estimates of generalizability. Note that the results in Table 1 are based on simple hold-out cross-validation and are therefore likely to be inflated. The weighted F1 averages (standard deviations) for iterated k-fold cross-validation were as follows: FFNN: 0.60 (0.07), FFNN∗: 0.57 (0.17), LSTM: 0.71 (0.02), LSTM∗: 0.71 (0.02), BERT (1): 0.76 (0.02), BERT: 0.81 (0.02). For the FFNN models, performance decreased when averaged over the folds, and the standard deviations were comparatively large. For the LSTM models, the iterated k-fold cross-validation values were close to the simple hold-out values, and the standard deviations were smaller than for the FFNN models. Similarly, the values for the BERT models were close to the simple hold-out values, again with comparatively small standard deviations. Hence, LSTM and BERT models seem to generalize better than FFNN models. Again, BERT outperformed all other models.

The final hyperparameters for the best models in the training phase, the overall number of parameters of these models, and the training times can be seen in Table 2. All architectures have millions of parameters. The LSTMs had the lowest number of overall parameters and also had reasonable training times. The full BERT model had the largest number of parameters. Consequently, saving the model requires the most disk storage (> 400 MB). As expected, training time for BERT could be reduced by a factor of 54, to a more manageable 2 minutes, by offloading training to the GPU.

Finally, the best overall model was evaluated on the held-out test data. We chose the full fine-tuned BERT model, because it was the most performant classification model. On the test data, BERT reached a weighted F1 average of .81 and a Cohen’s kappa of .74.

Classification Performance in Reference to Size of Training Data (RQ2)

Our RQ2 was “To what extent is the size of the training data related to the classification performance of the deep learning language models?” To analyze this RQ, we systematically varied the size of the training data. For each proportion, a single random draw from the training data was used. Although multiple draws would have increased the precision of the performance estimates, we expected single draws to be sufficient for indicating global trends in performance. Figure 2 shows the classification performance (as measured through the weighted F1 average) with respect to the size of the training data (depicted as a proportion of the whole training data). We also fit a BERT model on inputs whose token order was randomly shuffled, because BERT also encodes word position. This allowed us to evaluate to what extent word order was important for classification performance in the given task of classifying segments according to elements of the reflection-supporting model.
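The random word-order control can be sketched as a permutation of the tokens within each segment. Whether the study shuffled words before tokenization or subword tokens after it, and how special tokens were handled, is not specified; keeping “[CLS]” and “[SEP]” at the boundaries and shuffling at the token level are assumptions here, and the German example segment is hypothetical.

```python
import random

def shuffle_tokens(tokens, rng):
    """Randomly permute the tokens of a segment, keeping the special boundary tokens in place."""
    inner = tokens[1:-1]  # copy without [CLS] and [SEP]
    rng.shuffle(inner)
    return [tokens[0]] + inner + [tokens[-1]]

tokens = "[CLS] die stunde lief insgesamt gut [SEP]".split()
print(shuffle_tokens(tokens, random.Random(7)))
```

The shuffled segment contains exactly the same tokens, so any drop in performance can be attributed to the loss of word order rather than to a change in word occurrence.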

Figure 2 indicates that BERT with non-random word order performs worse than FFNN and LSTM for small training data sizes, but outperforms all other models from about 20 to 30% of the size of the entire training data onward. BERT with random word order performs worse than BERT with non-random word order and settles at about the performance of the LSTM. Notably, all curves flatten as the size of the training data increases.

Explaining the Classifier Decisions (RQ3)

Finally, our RQ3 was “What features can best explain the classifiers’ decisions?” Here, only the BERT model is considered. Layer-integrated gradients were calculated for all inputs in the test data. They assign an attribution score to each word/token in the input according to the contribution of this word/token to the prediction for the segment. Because attributions are obtained for every dimension of the embedding vectors, the attribution scores across all embedding dimensions were summed to obtain a final attribution score for each word/token. To extract the most important words for each element of the reflection-supporting model, we selected the segments whose predicted label matched the human label. For each word, we then averaged all its attributions.
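The aggregation described above can be sketched as follows. In Captum, per-dimension attributions for the embedding layer would come from `LayerIntegratedGradients`; here they are given as hypothetical nested lists (tokens × embedding dimensions, with 2 dimensions instead of BERT base’s 768). The dictionary keys of each segment are hypothetical names chosen for this sketch.

```python
from collections import defaultdict

def token_scores(attributions):
    """Sum a (tokens x embedding dims) attribution matrix over the embedding dimensions."""
    return [sum(row) for row in attributions]

def average_word_attributions(segments):
    """Average token scores per (element, token) over correctly classified segments."""
    sums, counts = defaultdict(float), defaultdict(int)
    for seg in segments:
        if seg["true"] != seg["pred"]:
            continue  # keep only segments where prediction and human label agree
        for tok, score in zip(seg["tokens"], token_scores(seg["attributions"])):
            sums[(seg["true"], tok)] += score
            counts[(seg["true"], tok)] += 1
    return {key: sums[key] / counts[key] for key in sums}

# Hypothetical segments with 2-dimensional attributions
segments = [
    {"tokens": ["gut", "lief"], "attributions": [[0.2, 0.3], [0.0, 0.1]],
     "true": "evaluation", "pred": "evaluation"},
    {"tokens": ["gut"], "attributions": [[0.1, 0.2]],
     "true": "evaluation", "pred": "evaluation"},
    {"tokens": ["gut"], "attributions": [[5.0, 5.0]],
     "true": "evaluation", "pred": "description"},  # misclassified: ignored
]
scores = average_word_attributions(segments)
print(scores)
```

Sorting the resulting scores per element in descending order would yield ranked word lists of the kind shown in Table 3.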

Table 3 depicts the most important nouns, verbs, and adjectives for each of the elements. Note that some tokens are marked with hashes (##), which the BERT tokenizer uses for subword pieces (i.e., WordPiece tokenization). This occurs when a word as a whole is not in the vocabulary; the tokenizer then splits it into tokens that exist in the BERT vocabulary. Apart from these split tokens, most words are readily interpretable in terms of the element of the reflection-supporting model to which they belong. Note, for example, the subjunctive mood of the verbs in alternatives. Furthermore, there are words of appraisal such as “good” in evaluation. The nouns in circumstances are also somewhat prototypical of that element, given that the preservice teachers were meant to describe the “class”, state their “goals”, and characterize the “lesson”. Other words are unexpected. For example, “independent” is not necessarily related to circumstances. However, the preservice teachers sometimes noted that this was their first lesson “independent” of their mentors or similar.

Discussion

Reflective writing analytics uses ML and NLP to extract content from products of reflective thinking such as written reflections. The presented study advances research in reflective writing analytics by applying a pretrained language model (BERT) to boost classification performance for the identification of elements of a reflection-supporting model in the segments of written reflections.

In this study, segments in preservice physics teachers’ written reflections were identified by human raters according to a reflection-supporting model (Nowak et al., 2019). A pretrained language model (BERT) was then trained to classify segments based on the human annotations. Training refers to the adjustment of the model weights in the pretrained language model to reproduce input-output mappings. The quality of this mapping is calculated with a loss function, and the model weights are adjusted through an optimization algorithm. The classification performance of the pretrained language model on the validation data was then compared to that of widely used deep learning architectures (FFNN, LSTM). The full BERT model yielded the best performance (weighted F1 average of .82). Afterwards, BERT was applied to the held-out test data and achieved a weighted F1 average of .81, corresponding to a Cohen’s kappa of .74. This finding is in line with recent research in NLP indicating that transformer-based language models such as BERT can boost classification performance in a multitude of language-related tasks and reliably generalize to unseen data (Devlin et al., 2018; Taher Pilehvar and Camacho-Collados, 2020). We furthermore showed that the advantage of the full BERT model becomes apparent from about 20 to 30% of the training data onward. This resonates with the observation that pretrained language models reduce the amount of training data needed to achieve good classification performance (Devlin et al., 2018). These findings suggest that pretrained language models such as BERT might be applicable to a range of tasks in reflective writing analytics and beyond where only modest amounts of data are available. Furthermore, the word order in the segments appears to be relevant for the BERT model to outperform LSTMs. This might be because pretraining in BERT involves masking words in the inputs and predicting the masked words from their context. Also, BERT adds positional embeddings to the input token embeddings, which might raise the importance of word order in the input sequence (Taher Pilehvar & Camacho-Collados, 2020).

Finally, we sought to interpret the classification decisions of the full BERT model. To this end, layer-integrated gradients were used to calculate attributions indicating to what extent a token in the input contributed to the classification. The retrieved words with high attributions were readily interpretable in terms of the respective elements of the reflection-supporting model. Integrated gradients thus seem to provide a valuable tool to open the black box of deep learning models and better understand model decisions. For example, mostly positive words in the element of evaluation could indicate that preservice teachers tend to appraise their own teaching, at the risk of reifying their own prior beliefs, which has been reported in prior research (Mena-Marcos et al., 2013). As such, integrated gradients could provide researchers with analytical tools for written reflections.

Limitations and Improvements

Several implementation details of the model training and data analysis limit the generalizability of our findings. For example, we chose specific implementations of data representation, optimization procedure, and loss function. These choices were partly based on prior research (Devlin et al., 2018; Wulff et al., 2020). However, we cannot exclude the possibility that alternative implementations of data representation (e.g., stemming or stopword removal), optimization procedure (e.g., stochastic gradient descent), or loss function (e.g., mean squared error) would have resulted in better classification performance. Furthermore, the fine-tuned BERT model was used with a fixed vocabulary of 30,000 tokens. Consequently, some words were artificially split into meaningless tokens (e.g., “experiment” into “ex” and “##periment”). Yet, the word “experiment” is important in physics-related written reflections. New pretrained models that incorporate the vocabulary present in the training dataset could be advantageous, because more attention could be given to the actual word boundaries and structure of the written reflections. This would require these important words to be included in the vocabulary on which BERT is pretrained. Recent advances in the huggingface library make it easier to pretrain transformer-based language models.

Another limitation relates to the segmentation of the written reflections. The segmentation was done mainly on the basis of sentences, as in previous studies (Carpenter et al., 2020; Ferreira et al., 2013; Wulff et al., 2020). A stronger theoretical foundation such as discourse theory might improve the segmentation (Stede, 2016). For example, the theory of discourse segmentation can help to split segments based on principled decisions rather than relatedness or sentence boundaries. Segmentation can have a large impact on classification performance (Rosé et al., 2008). Even though methods such as sentence segmentation yielded acceptable results in past applications (Wulff et al., 2020), a more solid grounding of the segmentation would allow models to better encode dependencies within the language vis-à-vis the elements of the reflection-supporting model. However, it is also worth noting that sentence-based segmentation can nowadays be automated with high accuracy, which motivates its further use, because unseen data can be segmented without human intervention. At the same time, sentence segmentation assumes that all information relevant to classifying a segment into an element lies within the sentence. This assumption does not always hold, given the reviewed research on long-range dependencies that characterize language (Ebeling & Neiman, 1995). One potential way to address this issue is to use multiple models that attend to different aspects of the written reflections.

Finally, it is important to recognize the limits of language and human communication via texts. Assumptions and certain amounts of world knowledge are rarely mentioned explicitly in texts; rather, a certain amount of common world knowledge is assumed on the part of the reader (Jurafsky & Martin, 2014; McNamara et al., 1996). Implicit and unstated knowledge poses problems to algorithms that take the input at face value, because only explicitly mentioned facts are used for the classification of segments. Furthermore, human raters may resolve ambiguities or coreferences that are not explicitly stated in the texts, which the computer algorithm cannot do. Consequently, human-computer agreement is likely hampered in certain contexts, and validation methods that rely on human-computer agreement are biased.

Implications

With these limitations in mind, our findings have implications for educational research related to written reflections and for the implementation of educational tools that make use of automated analysis of written reflections. Our findings suggest that pretrained language models can improve classification performance for segments of written reflections. On the basis of improved classification performance, various applications for automated analysis are conceivable.

Future research could further probe the features of the BERT model architecture and training regime. For example, Ostendorff et al. (2019) employed metadata for classification tasks with BERT. It could be helpful to include author-related covariates such as course grades, years of teaching experience, etc., to boost classification performance or support further assessment. Also, additional text features of coded segments could be considered for classification. For example, the position of the segment within the written reflection and text length are two meaningful covariates that might increase classification performance. In addition, generative language models such as GPT-3 have been found to be capable of learning new tasks from only a few examples (Brown et al., 2020). These capabilities could be utilized in the context of written reflection analytics. For example, generative language models could be used to generate consequences given an evaluation of a teaching situation. Certainly, a consequence should attend to the description and evaluation of the teaching situation. Advancing this line of research could eventually enable the modeling of complex processes for generating written reflections. The capacity to model complex processes has been described as a major benefit of algorithmic modeling approaches such as ML compared with more traditional data models (Breiman, 2001).

An important caveat in the context of reflective writing analytics is the missing link between quality reflection and improved classroom performance (Clarà, 2015). In terms of the pedagogical value of written reflections, the external validity of the reflection-supporting model needs to be investigated. We propose that the trained models in this study can help evaluate the effectiveness of written reflections in teacher education programs. With the help of the trained models (BERT in particular), preservice physics teachers’ reflections can be analyzed at scale with regard to the completeness, frequency, and structuredness of the elements of the reflection-supporting model. On the basis of a large-scale implementation, relationships between reflective writing and variables such as students’ knowledge gains or similar metrics can be quantitatively explored.

Finally, the presented deep learning methods can help to build reliable and analytical feedback tools that provide instantaneous feedback on a written reflection. Given the requirement for preservice teachers to learn from their teaching experiences (Korthagen, 1999), as well as the limitations and cost of human resources in university-based teacher education (e.g., Nehm & Härtig, 2012), such systems are greatly needed, especially given their effectiveness for improving learning outcomes (Aleven et al., 2016; Chirikov et al., 2020; VanLehn, 2011). Pretrained language models can play an important role in the development of such feedback tools (Chirikov et al., 2020). BERT could be used to automatically classify segments in preservice physics teachers’ written reflections and report descriptive statistics to preservice teachers and instructors. Descriptive statistics could address questions such as whether all elements of the reflection-supporting model were addressed in the text and to what extent. Self-reflection is also an important element in intelligent tutoring systems such as AutoTutor or Crystal Island (Carpenter et al., 2020; Graesser et al., 2005) that use natural language as a means to facilitate learning (Nye et al., 2014). Carpenter et al. (2020) successfully used deep contextualized word representations (ELMo) to represent and predict students’ reflective depth. The reflection-supporting model and the trained BERT model in our study could complement these findings by also conceptualizing and predicting the reflective breadth of students’ writing in systems such as Crystal Island.

Transferring intelligent tutoring systems to the context of preservice teacher education would hold great potential to improve experiential learning. Reflective depth and breadth could be assessed with pretrained language models (ELMo, BERT). Prompts and hints that were found to be effective in prior studies (Lai and Calandra, 2010) could be provided to preservice teachers. These would relate to the teachers’ own teaching experiences and provide analytical feedback. For example, it is known in reflective writing analytics that teachers tend to be evaluative in their description of a teaching situation (Mann et al., 2007). A computer-based tutoring system based on the trained BERT model (or the ELMo representations) could identify evaluative sentences within a descriptive paragraph and provide hints for rewriting.

Code Availability

Please contact the first author.

Change history

14 February 2023

A Correction to this paper has been published: https://doi.org/10.1007/s40593-023-00330-9

Notes

Note that the GPT-3 generative language model developed by OpenAI comprises 175 billion parameters (Brown et al., 2020).

Details on German BERT training procedure: https://deepset.ai/german-bert (accessed: 18 Dec 2020).

References

Abels, S. (2011). LehrerInnen als ‘Reflective Practitioner’: Reflexionskompetenz für einen demokratieförderlichen Naturwissenschaftsunterricht (1st ed.). Wiesbaden: VS Verlag für Sozialwissenschaften.

Aeppli, J., & Lötscher, HL (2016). EDAMA - Ein Rahmenmodell für Reflexion. Beiträge zur Lehrerinnen- und Lehrerbildung, 34(1), 78–97.

Aleven, V., McLaughlin, E.A., Glenn, R.A., & Koedinger, K.R. (2016). Instruction based on adaptive learning technologies. In R.E. Mayer & P.A. Alexander (Eds.) Handbook of Research on Learning and Instruction, Educational Psychology Handbook (pp. 522–560). Florence: Taylor and Francis.

Bain, J.D., Ballantyne, R., Packer, J., & Mills, C. (1999). Using journal writing to enhance student teachers’ reflectivity during field experience placements. Teachers and Teaching, 5(1), 51–73.

Bain, J.D., Mills, C., Ballantyne, R., & Packer, J. (2002). Developing reflection on practice through journal writing: Impacts of variations in the focus and level of feedback. Teachers and Teaching, 8(2), 171–196.

Berliner, D.C. (2001). Learning about and learning from expert teachers. International Journal of Educational Research, 35, 463–482.

Breiman, L. (2001). Statistical modeling: The two cultures. Statistical Science, 16(3), 199–231.

Brown, T.B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., ..., & Amodei, D. (2020). Language models are few-shot learners. arXiv:2005.14165.

Buckingham Shum, S., Sándor, Á., Goldsmith, R., Bass, R., & McWilliams, M. (2017). Towards reflective writing analytics: Rationale, methodology and preliminary results. Journal of Learning Analytics, 4(1), 58–84.

Carlson, J., Daehler, K., Alonzo, A., Barendsen, E., Berry, A., Borowski, A., ..., & Wilson, C.D. (2019). The refined consensus model of pedagogical content knowledge. In A. Hume, R. Cooper, & A. Borowski (Eds.) Repositioning pedagogical content knowledge in teachers’ professional knowledge. Singapore: Springer.

Carpenter, D., Geden, M., Rowe, J., Azevedo, R., & Lester, J. (2020). Automated analysis of middle school students’ written reflections during game-based learning. In I.I. Bittencourt, M. Cukurova, K. Muldner, R. Luckin, & E. Millán (Eds.) Artificial intelligence in education (pp. 67–78). Cham: Springer International Publishing.

Chan, K.K.H., Xu, L., Cooper, R., Berry, A., & van Driel, J.H. (2021). Teacher noticing in science education: do you see what I see? Studies in Science Education, 57(1), 1–44.

Cheng, G. (2017). Towards an automatic classification system for supporting the development of critical reflective skills in L2 learning. Australasian Journal of Educational Technology, 33(4), 1–21.

Chirikov, I., Semenova, T., Maloshonok, N., Bettinger, E., & Kizilcec, R.F. (2020). Online education platforms scale college STEM instruction with equivalent learning outcomes at lower cost. Science Advances, 6.

Chollet, F. (2018). Deep learning with Python. Shelter Island, NY: Manning. Retrieved from http://proquest.safaribooksonline.com/9781617294433.

Clarà, M. (2015). What is reflection? Looking for clarity in an ambiguous notion. Journal of Teacher Education, 66(3), 261–271.

Clarke, D., & Hollingsworth, H. (2002). Elaborating a model of teacher professional growth. Teaching and Teacher Education, 18(8), 947–967.

Conneau, A., Khandelwal, K., Goyal, N., Chaudhary, V., Wenzek, G., Guzmán, F., ..., & Stoyanov, V. (2019). Unsupervised cross-lingual representation learning at scale. arXiv:1911.02116.

Darling-Hammond, L., Hammerness, K., Grossman, P.L., Rust, F., & Shulman, L.S. (2017). The design of teacher education programs. In L. Darling-Hammond & J. Bransford (Eds.) Preparing teachers for a changing world. New York: John Wiley & Sons.

Devlin, J., Chang, M.-W., Lee, K., & Toutanova, K. (2018). BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv:1810.04805.

Dewey, J. (1933). How we think: A restatement of the relation of reflective thinking to the educative process (New ed.). Boston: Heath.

Ebeling, W., & Neiman, A. (1995). Long-range correlations between letters and sentences in texts. Physica A: Statistical Mechanics and its Applications, 215(3), 233–241.

Fenstermacher, G. (1994). Chapter 1: The Knower and the Known: The Nature of Knowledge in Research on Teaching. Review of Research in Education, 20.

Ferreira, R., de Souza Cabral, L., Lins, R.D., Pereira e Silva, G., Freitas, F., Cavalcanti, G.D., ..., & Favaro, L. (2013). Assessing sentence scoring techniques for extractive text summarization. Expert Systems with Applications, 40(14), 5755–5764.

Fischer, H.E., Borowski, A., Kauertz, A., & Neumann, K. (2010). Fachdidaktische Unterrichtsforschung: Unterrichtsmodelle und die Analyse von Physikunterricht. Zeitschrift für Didaktik der Naturwissenschaften, 16, 59–75.

Gibson, A., Kitto, K., & Bruza, P. (2016). Towards the discovery of learner metacognition from reflective writing. Journal of Learning Analytics, 3(2), 22–36.

Gnehm, A.-S., & Clematide, S. (2020). Text zoning and classification for job advertisements in German, French and English. In Proceedings of the Fourth Workshop on Natural Language Processing and Computational Social Science. ACL. Retrieved from https://www.aclweb.org/anthology/2020.nlpcss-1.10.pdf.

Goldberg, Y. (2017). Neural network methods for natural language processing. Morgan and Claypool.

Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep learning. Cambridge, Massachusetts and London, England: MIT Press. http://www.deeplearningbook.org/.

Graesser, A.C., Chipman, P., Haynes, B.C., & Olney, A. (2005). AutoTutor: An intelligent tutoring system with mixed-initiative dialogue. IEEE Transactions on Education, 48(4), 612–618.

Grossman, P.L., Compton, C., Igra, D., Ronfeldt, M., Shahan, E., & Williamson, P.W. (2009). Teaching practice: A cross-professional perspective. Teachers College Record, 111(9), 2055–2100.

Ha, M., Nehm, R.H., Urban-Lurain, M., & Merrill, J.E. (2011). Applying computerized-scoring models of written biological explanations across courses and colleges: prospects and limitations. CBE Life Sciences Education, 10(4), 379–393.

Hascher, T. (2005). Die Erfahrungsfalle. Journal für Lehrerinnen- und Lehrerbildung, 5(1), 39–45.

Hatton, N., & Smith, D. (1995). Reflection in teacher education: Towards definition and implementation. Teaching and Teacher Education, 11(1), 33–49.

Jurafsky, D., & Martin, J.H. (2014). Speech and language processing (2nd ed., Pearson new international ed.). Harlow: Pearson Education.

Kahneman, D. (2012). Schnelles Denken, langsames Denken. Siedler Verlag.

Kolb, D. (1984). Experiential learning: Experience as the source of learning and development. Englewood Cliffs, NJ: Prentice Hall.

Korthagen, F.A. (1999). Linking reflection and technical competence: The logbook as an instrument in teacher education. European Journal of Teacher Education, 22(2-3), 191–207.

Korthagen, F.A. (2001). Linking practice and theory: The pedagogy of realistic teacher education. Mahwah, NJ: Erlbaum. http://www.loc.gov/catdir/enhancements/fy0634/00057273-d.html.

Korthagen, F.A. (2005). Levels in reflection: core reflection as a means to enhance professional growth. Teachers and Teaching, 11(1), 47–71.

Korthagen, F.A., & Kessels, J. (1999). Linking theory and practice: Changing the pedagogy of teacher education. Educational Researcher, 28(4), 4–17.

Kovanović, V., Joksimović, S., Mirriahi, N., Blaine, E., Gašević, D., Siemens, G., & Dawson, S. (2018). Understand students’ self-reflections through learning analytics. In LAK ’18: Proceedings of the 8th International Conference on Learning Analytics and Knowledge, March 7–9, 2018, Sydney, NSW, Australia (pp. 389–398).

Lai, G., & Calandra, B. (2010). Examining the effects of computer-based scaffolds on novice teachers’ reflective journal writing. Educational Technology Research and Development, 58(4), 421–437.

LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436–444.

Levin, D.M., Hammer, D., & Coffey, J.E. (2009). Novice teachers’ attention to student thinking. Journal of Teacher Education, 60(2), 142–154.

Lin, X., Hmelo, C.E., Kinzer, C., & Secules, T. (1999). Designing technology to support reflection. Educational Technology Research and Development, 47(3), 43–62.

Loughran, J., & Corrigan, D. (1995). Teaching portfolios: A strategy for developing learning and teaching in preservice education. Teaching and Teacher Education, 11(6), 565–577.

Luo, W., & Litman, D. (2015). Summarizing student responses to reflection prompts. Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, 1955–1960.

Mann, K., Gordon, J., & MacLeod, A. (2007). Reflection and reflective practice in health professions education: a systematic review. Advances in Health Sciences Education, 14(4), 595.

Mayfield, E., & Rose, C.P. (2010). An interactive tool for supporting error analysis for text mining. In Proceedings of the NAACL HLT 2010 Demonstration Session (pp. 25–28), Los Angeles, CA, June 2010.

McNamara, D., Kintsch, E., Butler Songer, N., & Kintsch, W. (1996). Are good texts always better? Interactions of text coherence, background knowledge, and levels of understanding in learning from text. Cognition and Instruction, 14(1), 1–43.

Mena-Marcos, J., García-Rodríguez, M.-L., & Tillema, H. (2013). Student teacher reflective writing: What does it reveal? European Journal of Teacher Education, 36(2), 147–163.

Mitchell, M. (2020). Artificial Intelligence: A guide for thinking humans. Pelican Books.

Nehm, R.H., & Härtig, H. (2012). Human vs. computer diagnosis of students’ natural selection knowledge: Testing the efficacy of text analytic software. Journal of Science Education and Technology, 21(1), 56–73.

Neuweg, G.H. (2007). Wie grau ist alle Theorie, wie grün des Lebens goldner Baum? LehrerInnenbildung im Spannungsfeld von Theorie und Praxis. bwpat 12.

Nowak, A., Kempin, M., Kulgemeyer, C., & Borowski, A. (2019). Reflexion von Physikunterricht [Reflection of physics lessons]. In C. Maurer (Ed.) Naturwissenschaftliche Bildung als Grundlage für berufliche und gesellschaftliche Teilhabe: Jahrestagung in Kiel 2018 (p. 838). Regensburg: Gesellschaft für Didaktik der Chemie und Physik.

Nowak, A., Liepertz, S., & Borowski, A. (2018). Reflexionskompetenz von Praxissemesterstudierenden im Fach Physik. In C. Maurer (Ed.) Qualitätsvoller Chemie- und Physikunterricht – normative und empirische Dimensionen: Jahrestagung in Regensburg 2017. Universität Regensburg.

Nye, B.D., Graesser, A.C., & Hu, X. (2014). AutoTutor and family: A review of 17 years of natural language tutoring. International Journal of Artificial Intelligence in Education, 24(4), 427–469.

Ostendorff, M., Bourgonje, P., Berger, M., Moreno-Schneider, J., Rehm, G., & Gipp, B. (2019). Enriching BERT with knowledge graph embeddings for document classification. arXiv:1909.08402v1.

Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G., ..., & Chintala, S. (2019). PyTorch: An imperative style, high-performance deep learning library. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d’Alché-Buc, E. Fox, & R. Garnett (Eds.) Advances in neural information processing systems 32 (pp. 8024–8035). Curran Associates, Inc. http://papers.neurips.cc/paper/9015-pytorch-an-imperative-style-high-performance-deep-learning-library.pdf.

Paterson, B.L. (1995). Developing and maintaining reflection in clinical journals. Nurse Education Today, 15(3), 211–220.

Poldner, E., van der Schaaf, M., Simons, P.R.-J., van Tartwijk, J., & Wijngaards, G. (2014). Assessing student teachers’ reflective writing through quantitative content analysis. European Journal of Teacher Education, 37(3), 348–373.

Pratt, L., & Thrun, S. (1997). Machine Learning, 28(5).

Python Software Foundation. (2020). Python Language Reference: version 3.8. http://www.python.org.

Rodgers, C. (2002). Defining Reflection: Another look at John Dewey and reflective thinking. Teachers College Record, 104(4), 842–866.

Roe, M.F., & Stallman, A.C. (1994). A comparative study of dialogue and response journals. Teaching and Teacher Education, 10(6), 579–588.

Rosé, C., Wang, Y.-C., Cui, Y., Arguello, J., Stegmann, K., Weinberger, A., & Fischer, F. (2008). Analyzing collaborative learning processes automatically: Exploiting the advances of computational linguistics in computer-supported collaborative learning. International Journal of Computer-Supported Collaborative Learning, 3(3), 237–271.

Rose, C.P. (2017). A social spin on language analysis. Nature, 545, 166–167.

Schoenfeld, A.H. (2014). What makes for powerful classrooms, and how can we support teachers in creating them? A story of research and practice, productively intertwined. Educational Researcher, 43(8), 404–412.

Schön, D.A. (1983). The reflective practitioner: How professionals think in action. New York: Basic Books. http://www.loc.gov/catdir/enhancements/fy0832/82070855-d.html.

Schön, D.A. (1987). Educating the reflective practitioner: Toward a new design for teaching and learning in the professions (1st ed.). San Francisco, CA: Jossey-Bass.

Stede, M. (Ed.) (2016). Handbuch Textannotation: Potsdamer Kommentarkorpus 2.0. Potsdam: Universitätsverlag Potsdam.

Sundararajan, M., Taly, A., & Yan, Q. (2017). Axiomatic attribution for deep networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, PMLR 70.

Swales, J.M. (1990). Genre analysis: English in academic and research settings. Cambridge: Cambridge Univ. Press.

Taher Pilehvar, M., & Camacho-Collados, J. (2020). Embeddings in natural language processing: Theory and advances in vector representation of meaning. Morgan and Claypool.

Taylor, W.L. (1953). “Cloze Procedure”: A new tool for measuring readability. Journalism Quarterly.