Abstract

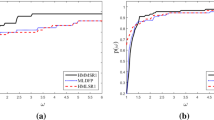

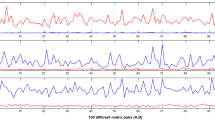

To guarantee that positive definiteness is inherited under the popular Wolfe line search conditions, a modification is made to the symmetric rank–one updating formula, a simple quasi–Newton approximation of the (inverse) Hessian of the objective function of an unconstrained optimization problem. A scaling approach is then applied to a memoryless version of the proposed formula, leading to an iterative method that is suitable for solving large–scale problems. Based on an eigenvalue analysis, it is shown that the self–scaling parameter proposed by Oren and Spedicato is optimal for the proposed updating formula in the sense of minimizing the condition number. A sufficient descent property is also established for the method, together with a global convergence analysis for uniformly convex objective functions. Numerical experiments demonstrate the computational efficiency of the proposed method with the self–scaling parameter proposed by Oren and Spedicato.
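As background for why a modification is needed, the following minimal sketch applies the classical (unmodified) symmetric rank–one update to an inverse Hessian approximation. It is not the modified formula proposed in the article. The function name `sr1_inverse_update`, the skipping tolerance `tol`, and the vectors `s`, `y`, `g` are illustrative assumptions. The example shows that the classical update can satisfy the secant equation and the curvature condition `s^T y > 0`, which the Wolfe conditions ensure, and still produce an indefinite matrix whose search direction is not a descent direction.

```python
def dot(u, v):
    """Euclidean inner product of two vectors stored as lists."""
    return sum(a * b for a, b in zip(u, v))


def matvec(H, v):
    """Product of a dense matrix (list of rows) with a vector."""
    return [dot(row, v) for row in H]


def sr1_inverse_update(H, s, y, tol=1e-8):
    """Classical SR1 update of an inverse Hessian approximation H.

    Returns H + r r^T / (r^T y) with r = s - H y, so that the result
    maps y to s (secant equation). The update is skipped when the
    denominator is tiny, a common safeguard (assumed here).
    """
    r = [si - hi for si, hi in zip(s, matvec(H, y))]
    denom = dot(r, y)
    if abs(denom) <= tol * dot(r, r) ** 0.5 * dot(y, y) ** 0.5:
        return [row[:] for row in H]
    n = len(s)
    return [[H[i][j] + r[i] * r[j] / denom for j in range(n)]
            for i in range(n)]


# Illustrative data (hypothetical): H0 = I, with s^T y > 0.
H0 = [[1.0, 0.0], [0.0, 1.0]]
s = [1.0, 0.0]
y = [0.5, 1.0]

H1 = sr1_inverse_update(H0, s, y)

curvature = dot(s, y)  # positive, as the Wolfe conditions would ensure
secant_residual = max(abs(a - b) for a, b in zip(matvec(H1, y), s))

# For a gradient g, the quasi-Newton direction is d = -H1 g.
g = [0.0, 1.0]
d = [-v for v in matvec(H1, g)]
slope = dot(g, d)  # positive here, so d is NOT a descent direction

print("curvature s^T y:", curvature)
print("secant residual:", secant_residual)
print("g^T d:", slope)
```

In this example the denominator `r^T y` is negative, which is what makes the updated matrix indefinite; positive curvature alone does not rule this out for the classical formula.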

References

Andrei, N.: Accelerated scaled memoryless BFGS preconditioned conjugate gradient algorithm for unconstrained optimization. Eur. J. Oper. Res. 204(3), 410–420 (2010)

Arguillère, S.: Approximation of sequences of symmetric matrices with the symmetric rank-one algorithm and applications. SIAM J. Matrix Anal. Appl. 36(1), 329–347 (2015)

Babaie-Kafaki, S.: A quadratic hybridization of Polak-Ribière-Polyak and Fletcher-Reeves conjugate gradient methods. J. Optim. Theory Appl. 154(3), 916–932 (2012)

Babaie-Kafaki, S.: On optimality of the parameters of self-scaling memoryless quasi-Newton updating formulae. J. Optim. Theory Appl. 167, 91–101 (2015)

Babaie-Kafaki, S., Ghanbari, R., Mahdavi-Amiri, N.: Two new conjugate gradient methods based on modified secant equations. J. Comput. Appl. Math. 234(5), 1374–1386 (2010)

Barzilai, J., Borwein, J.M.: Two-point stepsize gradient methods. IMA J. Numer. Anal. 8(1), 141–148 (1988)

Conn, A.R., Gould, N.I.M., Toint, Ph.L.: Convergence of quasi-Newton matrices generated by the symmetric rank-one update. Math. Program. 50(2, Ser. A), 177–195 (1991)

Dai, Y.H., Han, J.Y., Liu, G.H., Sun, D.F., Yin, H.X., Yuan, Y.X.: Convergence properties of nonlinear conjugate gradient methods. SIAM J. Optim. 10(2), 348–358 (1999)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2, Ser. A), 201–213 (2002)

Gould, N.I.M., Orban, D., Toint, Ph.L.: CUTEr: a constrained and unconstrained testing environment, revisited. ACM Trans. Math. Softw. 29(4), 373–394 (2003)

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, New York (2006)

Oren, S.S.: Self-scaling variable metric (SSVM) algorithms. II. Implementation and experiments. Manag. Sci. 20(5), 863–874 (1974)

Oren, S.S., Luenberger, D.G.: Self-scaling variable metric (SSVM) algorithms. I. Criteria and sufficient conditions for scaling a class of algorithms. Manag. Sci. 20(5), 845–862 (1973/74)

Oren, S.S., Spedicato, E.: Optimal conditioning of self-scaling variable metric algorithms. Math. Program. 10(1), 70–90 (1976)

Sugiki, K., Narushima, Y., Yabe, H.: Globally convergent three-term conjugate gradient methods that use secant conditions and generate descent search directions for unconstrained optimization. J. Optim. Theory Appl. 153(3), 733–757 (2012)

Sun, W., Yuan, Y.X.: Optimization Theory and Methods: Nonlinear Programming. Springer, New York (2006)

Watkins, D.S.: Fundamentals of Matrix Computations. John Wiley and Sons, New York (2002)

Xu, C., Zhang, J.Z.: A survey of quasi-Newton equations and quasi-Newton methods for optimization. Ann. Oper. Res. 103(1–4), 213–234 (2001)

Acknowledgements

This research was supported by the Research Council of Semnan University. The author is grateful to Professor Michael Navon for providing the line search code. He also thanks the anonymous reviewers for their valuable comments and suggestions, which helped to improve the quality of this work.

About this article

Cite this article

Babaie–Kafaki, S. A modified scaled memoryless symmetric rank–one method. Boll Unione Mat Ital 13, 369–379 (2020). https://doi.org/10.1007/s40574-020-00231-y

DOI: https://doi.org/10.1007/s40574-020-00231-y

Keywords

- Unconstrained optimization

- Large–scale optimization

- Memoryless quasi–Newton method

- Symmetric rank–one update

- Eigenvalue

- Condition number