Abstract

The last decade has introduced a new era of epidemiologic studies of low-dose radiation. Electronic record linkage and the pooling of cohorts now allow more direct and powerful assessments of cancer and other stochastic effects at doses below 100 mGy. Such studies have provided additional evidence on the risks of cancer, particularly leukemia, associated with lower-dose radiation exposures from medical, environmental, and occupational sources, and have called into question previous conclusions about possible thresholds for cardiovascular disease and cataracts. Integrated analysis of next-generation genomic and epigenetic sequencing of germline and somatic tissues could soon advance our understanding of disease risk thresholds, the radiosensitivity of population subgroups and individuals, and the mechanisms of radiation carcinogenesis. These advances in low-dose radiation epidemiology are critical to understanding chronic disease risks from the burgeoning use of newer and emerging medical imaging technologies and from the continued potential threat of nuclear power plant accidents or other radiological emergencies.


Introduction

The characterization of ionizing radiation as an important human carcinogen, capable of causing cancer in most organs, was a major achievement of epidemiological and experimental radiation studies in the twentieth century. Although most national and international committees that have reviewed the epidemiological and biological data conclude that the evidence supports the linear no-threshold model for radiation protection, the evidence does not prove it directly [1–3]. The linear no-threshold model assumes that risk rises in proportion to dose and that there is no dose below which the cancer risk is zero. Through extensive research, the dose at which there is considered to be direct evidence of an increased cancer risk has gradually been lowered to about 50–100 mGy [4]. In addition, there is emerging evidence that the thresholds for other stochastic late effects may be lower than previously thought [5]. Many have questioned whether radiation epidemiology has reached its limits in characterizing risks in the lower dose range and have assumed that further major advances were unlikely. In the last decade, however, changing patterns of exposure and technological advances have supported a new era of large-scale epidemiology studies of medically, environmentally, and occupationally exposed populations, and it is those studies and advances that we highlight in this review.

We focus our review on key epidemiologic studies (see Tables 1 and 2 for details) identified from PubMed and published since the most recent major national/international reports, such as BEIR VII phase 2 [1] and the UNSCEAR 2006 Report [2]. The studies highlighted in this review were selected based on the contributions that they have made to the following fundamental questions:

- Is the linear no-threshold assumption reasonable?
- Can low doses of radiation cause stochastic effects other than tumors, including circulatory diseases and cataracts?
- What is the potential public health impact of the changing patterns of low-dose radiation exposure?
- How could next-generation genomic and epigenetic sequencing of germline and somatic tissues produce a paradigm shift in the field?

From Environmental to Medical Radiation Exposure and Back Again

In the early 1980s, natural background radiation, primarily from indoor radon, was estimated to be the predominant source of exposure for the US population, and the estimated per capita annual dose was 3.6 mSv. By 2006, the estimated per capita dose had nearly doubled to 6.2 mSv per year [6] (Fig. 1). The increase was entirely due to the revolution in medical imaging, particularly computed tomography (CT), for which the number of scans in the USA rose from 3 million to 70 million per year over that period. CT scans save lives and reduce unnecessary medical procedures, but the associated radiation dose is an order of magnitude higher than that from a conventional X-ray. The greatest concerns were raised about overuse of CT in children, because of their greater radiosensitivity [1] and because exposure settings were not optimized for their smaller body size [7]. These concerns prompted a series of retrospective cohort studies in Europe, Australia, Israel, and North America to assess the potential cancer risks directly [8•, 9–11]. Other evolving, higher-dose diagnostic procedures, such as nuclear medicine and interventional procedures, have also increased over the same period and now account for 26 and 14 %, respectively, of the collective effective dose from medical sources in the US [5]. Unlike CT, these procedures entail substantial occupational radiation exposure for the physicians and technologists who perform them [12, 13]. Concerns about these higher exposures, and the resulting risks of cancer and other radiation-related diseases among medical workers, have led to new retrospective cohort studies of groups such as cardiologists and radiologic technologists who perform these procedures [12, 14].

Fig. 1 Effective doses to the United States population in the early 1980s and in 2006 by source of ionizing radiation exposure [6] (reprinted with permission of the National Council on Radiation Protection and Measurements, http://NCRPpublications.org)

In 2011, the Fukushima nuclear accident in Japan returned the spotlight to environmental radiation exposure. This event not only prompted an immediate need to assess potential risks to the exposed Japanese population [15], but also served as an important reminder of the possible risks to populations surrounding every nuclear power plant. Epidemiological studies based on the Chernobyl accident have been reinvigorated as they can provide information used to estimate the long-term impact of internal radiation exposure from Fukushima and potential future accidents [16].

Exposure Assessment

Accurate estimation of organ or tissue doses from exposure to ionizing radiation, and assessment of the uncertainties in those estimates, are critical for quantifying radiation-associated health risks in epidemiologic studies. The key measure of dose in epidemiologic studies is absorbed dose, the energy imparted by radiation per unit mass of tissue; averaged over the mass of an organ it is termed the “organ dose” and is measured in gray (Gy; 1 Gy = 1 J/kg). Biologic effects of ionizing radiation derive primarily from damage to DNA and differ by radiation type (e.g., photons, electrons, protons, neutrons, or alpha particles) and energy. Equivalent (radiation-weighted) dose accounts for these differences by multiplying the absorbed dose by a radiation weighting factor, which places the effects of different types of radiation on a common scale measured in sieverts (Sv).
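The weighting step can be written as H_T = Σ_R w_R · D_T,R, the sum over radiation types R of the mean absorbed dose to tissue T from each type multiplied by its weighting factor. A minimal Python sketch follows, assuming the ICRP Publication 103 weighting factors for photons and electrons (1), protons (2), and alpha particles (20); neutrons are left out because their factor is a continuous function of energy. The function name and the example doses are hypothetical.

```python
# Radiation weighting factors w_R from ICRP Publication 103 (2007) for a few
# radiation types. Neutrons are omitted because their w_R varies with energy.
W_R = {
    "photon": 1.0,
    "electron": 1.0,
    "proton": 2.0,
    "alpha": 20.0,
}


def equivalent_dose_sv(absorbed_gy_by_type: dict[str, float]) -> float:
    """Return the equivalent dose H_T (Sv) to one organ or tissue.

    absorbed_gy_by_type maps each radiation type to the mean absorbed dose
    D_T,R (Gy) it delivers to the organ; H_T = sum over R of w_R * D_T,R.
    """
    total = 0.0
    for radiation, dose_gy in absorbed_gy_by_type.items():
        if radiation not in W_R:
            raise KeyError(f"no weighting factor listed for {radiation!r}")
        total += W_R[radiation] * dose_gy
    return total


# Hypothetical organ: 10 mGy from photons plus 1 mGy from alpha particles.
h = equivalent_dose_sv({"photon": 0.010, "alpha": 0.001})
print(f"{h * 1000:.0f} mSv")  # 10 mSv + 20 x 1 mSv = 30 mSv
```

The example shows why the distinction matters: although the alpha particles deliver only a tenth of the absorbed dose, they contribute two thirds of the equivalent dose.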

Notable improvements in individual dose estimates for epidemiological studies have come from a more sophisticated appreciation of the need to assess radiation type and energy, exposure conditions (e.g., external vs internal exposure, exposure geometry, and anatomic site), and individual characteristics [17–19]. It is also important to capture temporal characteristics (e.g., age and time since first exposure), all sources of individual exposure, and biologically relevant latency periods, and to incorporate sources of uncertainty for external [20–22] and internal [23] radiation exposures. Because epidemiologic studies are usually launched years to decades after the initial exposure, radiation doses of exposed individuals must be reconstructed for the relevant time period(s) [24–28].

Methods for validation of estimated doses for external irradiation include assessment of chromosomal translocations in lymphocytes and electron paramagnetic resonance of tooth enamel or fingernails [29]. For internal radiation, direct bioassays measure radioactivity in the whole body or specific organs [18].

Limitations of exposure assessment and potential sources of uncertainty in epidemiologic studies of low-dose or low-dose-rate exposures [21–23, 30, 31] include the lack of individual-level measurements (single or repeated), inaccurate or incomplete monitoring, and limited information about shielding, individual radiation protection, or behaviors and activities that could influence doses. Failure to identify the many sources of uncertainty, to account for each of them, and to handle shared and unshared errors appropriately can seriously distort both individual exposure estimates and disease risk estimates.
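Different kinds of dose error bias risk estimates in different ways. The minimal simulation below assumes a simple linear dose–response with normally distributed, unshared errors; all parameter values and the function names are hypothetical. Classical error, where the recorded dose scatters around the true dose, attenuates the fitted slope by roughly var(X) / [var(X) + var(U)], which is 0.5 with these settings. Berkson error, where individuals are assigned a group-level dose around which their true doses scatter, leaves a linear slope essentially unbiased but less precise. Shared errors, which shift the doses of many people at once, are not modeled here.

```python
import random


def ols_slope(x: list[float], y: list[float]) -> float:
    """Ordinary least-squares slope of y regressed on x."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def simulate(n: int = 50_000, beta: float = 2.0, seed: int = 1) -> dict[str, float]:
    """Fit the same linear model with true, classical-error and Berkson-error doses."""
    rng = random.Random(seed)

    # Classical error: recorded dose W = true dose X + independent noise U.
    x_true = [rng.gauss(1.0, 0.5) for _ in range(n)]
    w_recorded = [x + rng.gauss(0.0, 0.5) for x in x_true]
    y = [beta * x + rng.gauss(0.0, 1.0) for x in x_true]

    # Berkson error: each person gets an assigned dose Z (e.g., an area mean),
    # and the true dose scatters around it, X = Z + independent noise.
    z_assigned = [rng.gauss(1.0, 0.5) for _ in range(n)]
    x_berkson = [z + rng.gauss(0.0, 0.5) for z in z_assigned]
    y_berkson = [beta * x + rng.gauss(0.0, 1.0) for x in x_berkson]

    return {
        "true": ols_slope(x_true, y),
        "classical": ols_slope(w_recorded, y),
        "berkson": ols_slope(z_assigned, y_berkson),
    }


print(simulate())
```

With these settings, the three fitted slopes should come out close to 2.0, 1.0, and 2.0, respectively: only the classical error halves the apparent dose–response.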

The Linear No Threshold Assumption and Cancer Risk

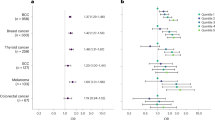

The Life Span Study (LSS) of survivors of the 1945 atomic bombings of Hiroshima and Nagasaki has been foundational for radiation epidemiology because it includes a large population exposed at all ages to a single acute dose, with long-term follow-up and well-characterized doses ranging from very low to very high. Its power to assess cancer risk from very low-dose exposure is limited, however, and it cannot address protracted exposure, which is the type of exposure most of the general population is likely to receive. As reviewed below, recent studies that directly evaluated cancer risks after relatively low-dose or low-dose-rate exposure to ionizing radiation have overcome some limitations of the LSS by assembling larger study populations of the most radiosensitive individuals, incorporating more accurate dose assessments, and assessing cancer types that are relatively uncommon in Japan. In the LSS and other studies with detailed individual dose information, radiation risk is often expressed as the excess relative risk (ERR), the amount by which the relative risk (RR) exceeds the null value of 1 (ERR = RR − 1), usually reported per unit dose (e.g., ERR/Gy).
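The ERR definition and the linear model usually fitted to it can be illustrated with a short Python sketch. It assumes the simple linear ERR model RR(D) = 1 + β·(D − D_ref) and estimates β from a single exposed category compared with a reference category; published analyses instead fit Poisson or conditional logistic regression across all dose categories. The function names and numbers are hypothetical.

```python
def excess_relative_risk(rr: float) -> float:
    """ERR = RR - 1: the part of the relative risk above the null value of 1."""
    return rr - 1.0


def err_per_gy(rr: float, dose_gy: float, ref_dose_gy: float = 0.0) -> float:
    """Slope beta of the linear ERR model RR(D) = 1 + beta * (D - D_ref),
    estimated crudely from one exposure category versus a reference."""
    if dose_gy <= ref_dose_gy:
        raise ValueError("dose must exceed the reference dose")
    return excess_relative_risk(rr) / (dose_gy - ref_dose_gy)


def relative_risk(beta_per_gy: float, dose_gy: float) -> float:
    """Relative risk predicted by the linear ERR model at a given dose."""
    return 1.0 + beta_per_gy * dose_gy


# Hypothetical: RR = 1.5 at 1 Gy versus an unexposed reference group.
beta = err_per_gy(1.5, 1.0)
print(beta, relative_risk(beta, 0.1))
```

Here the ERR is 0.5, so the estimated ERR/Gy is 0.5, and under linearity the model predicts an RR of about 1.05 at 0.1 Gy. Extrapolating that slope to low doses is exactly the step the linear no-threshold assumption licenses.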

Medical Exposures

Recent studies of medical radiation exposures, particularly from diagnostic X-rays and CT scans in utero and during childhood or adolescence, have greatly improved the assessment of both exposure and disease through medical record abstraction and electronic record linkage. Pearce and colleagues estimated absorbed doses to the red bone marrow (RBM) and brain from CT scans performed before age 22 years, using data abstracted from the medical records of more than 175,000 patients, machine parameters imputed from UK-wide surveys (1989–2003), and a series of hybrid computational human phantoms with Monte Carlo radiation transport techniques [8•, 27]. Linear dose–response relationships were observed between increasing RBM dose and leukemia (n = 74 cases) and between increasing brain dose and brain tumors (n = 135 cases). The risks of both cancers were approximately tripled at mean organ doses of 50–60 mGy compared with doses <5 mGy. A subsequent Australian study of cancer risks after CT exposure before age 20 similarly linked medical record information on CT scans with subsequent cancer registrations [9] and found increased risks of several cancer types with increasing numbers of CT scans. Direct comparison of the Australian findings with the LSS or the UK CT study will be difficult until the organ-specific dose–response analyses now under way are published. Additional work is ongoing in both cohorts to evaluate the influence of underlying cancer-predisposing conditions and of the clinical indications for the CT scans. Within the next 5 years, results should be available from retrospective pediatric CT cohorts including approximately two million children [32].

In the US Scoliosis Cohort Study, fractionated exposure to diagnostic X-rays in childhood and adolescence, assessed from individual patient records, was associated with a non-significant increased risk of breast cancer (n = 78 cases, ERR/Gy = 2.86, 95 % CI −0.07, 8.62) [33]. Data from the United Kingdom Childhood Cancer Study showed that any (versus no) in utero exposure to diagnostic X-rays was associated with a non-significant increased risk of childhood cancer (n = 2690 cases, OR = 1.14, 95 % CI 0.90, 1.45), driven largely by a non-significant positive association with leukemia, specifically acute myeloid leukemia (OR = 1.36, 95 % CI 0.91, 2.02) [34]. No differences were observed by trimester of first exposure. In the US Radiologic Technologists Study, dose reconstruction for self-reported diagnostic medical procedures is in progress and will allow assessment of dose–response relationships for thyroid, breast, and other radiosensitive cancers in this unique setting (http://www.radtechstudy.nci.nih.gov).

Environmental Exposures

Large-scale record linkage has also substantially improved the ability to assess cancer risks from background radiation exposure. All previous studies of this question were ecological or underpowered [35–40]. In a UK case–control study of childhood cancer in Great Britain, natural background exposure from cosmic rays and radon in the home was estimated for the residence at birth of 27,447 childhood cancer cases and controls, using County District mean gamma-ray dose rates and a predictive map of radon based on domestic measurements [41•]. The authors found a significant dose–response relationship between cumulative RBM dose from gamma radiation and childhood leukemia, driven largely by the most common leukemia subgroup, lymphoid leukemia (mean cumulative equivalent RBM dose in controls = 4.0 mSv, range 0–31 mSv). These associations were in reasonable agreement with risk predictions based on BEIR VII and UNSCEAR models [1, 2]. No significant associations were observed between gamma-ray or radon exposure and other childhood cancers. The key limitations of the study are that exposure was based on residence at birth and that information on potential confounders (e.g., ionizing radiation from other sources such as medical examinations, predisposing genetic syndromes, and birth weight) was not available, apart from socioeconomic status based on postcode. More detailed exposure assessment and expansion of the study are in progress. A recent Swiss study with a design similar to the UK study found increased risks of total cancer, leukemia, lymphoma, and central nervous system tumors associated with terrestrial and cosmic radiation estimated from place of residence [42], and no association between domestic radon exposure and childhood cancer [43].

The Techa River Cohort, composed of individuals exposed to a wide range of radionuclides after the Mayak Radiochemical Plant released radioactive waste into the Techa River between 1949 and 1956, is one of the few general-population studies of protracted environmental radiation exposure with long-term follow-up for cancer. Exposure to strontium has been of particular interest for understanding leukemia risk because strontium concentrates in bone. Using an updated dosimetry system, increased risks consistent with linearity were observed for solid cancer mortality [44] and for all leukemias, leukemia excluding chronic lymphocytic leukemia (CLL), chronic myeloid leukemia (CML), and acute/subacute leukemias through 2007, but no evidence was found of an increased risk of CLL [45].

Occupational Exposures

Updated analyses from the 15-Country Study of nuclear workers, which includes some data from all of the cohorts in the National Registry for Radiation Workers-3 (NRRW-3) and in the 3-Country Study except Rocky Flats, focused particularly on risks of leukemia, leukemia excluding CLL, and cause-specific cancer mortality following chronic, low-dose occupational exposure [46]. Although little information was available on potential confounders, particularly lifestyle factors such as cigarette smoking, this source of bias would be expected to influence risk estimates for leukemia less than those for solid cancers. The study, with a mean cumulative dose of 19.4 mSv, showed a non-significant linear association between radiation exposure and mortality from leukemia excluding CLL. A large but only borderline increased risk was observed for CML mortality (ERR/Sv = 10.1, 90 % CI −0.86, 40.2), but no associations were observed for mortality from CLL, acute lymphoblastic leukemia (ALL), or acute myeloid leukemia (AML). Significantly elevated risks were also observed for all-cause, all-cancer, and lung cancer mortality. However, the excess risk for solid cancer was three times higher than that observed in the LSS and was driven largely by data from the earliest workers in the Atomic Energy of Canada Limited cohort [47, 48]; Zablotska et al. concluded that the findings for these earliest workers are more likely attributable to missing dose information than to a true effect, and that excluding them from the 15-Country Study would have substantially attenuated the risks observed for all cancer excluding leukemia [47]. In the NRRW-3, occupational radiation dose was significantly positively associated with the incidence of and mortality from cancer (excluding leukemia) and leukemia (excluding CLL) [49•]. A large-scale pooled study of cancer mortality (INWORKS), which includes the NRRW-3, French, and US cohorts, is in progress, with results expected later in 2015.

Updated analyses of cohorts of clean-up workers after the 1986 Chernobyl nuclear power plant accident in Ukraine [50] and in Belarus, Russia, and the Baltic countries [51] have yielded new insights into risks of leukemia and leukemia subtypes from low-dose protracted exposure (mainly gamma and beta radiation). A significant linear dose–response association between protracted exposure (mean bone marrow dose = 132.3 mGy for cases and 81.8 mGy for controls) and leukemia risk was observed in the Ukrainian cohort [50]. Leukemia risk was similarly elevated, but not statistically significantly so, in the cohort of workers from Belarus, Russia, and the Baltic countries [51]. Both risk estimates were consistent with those from the LSS, despite the lower mean estimated cumulative doses of the clean-up workers compared with the atomic bomb survivors. In the Ukrainian cohort, significant positive associations of similar magnitude were observed for CLL and non-CLL leukemia (ERR/Gy = 2.58 [95 % CI 0.02, 8.43] and 2.21 [95 % CI 0.05, 7.61], respectively). Apart from the most recent LSS report, which included just 12 cases [52], the Ukrainian clean-up worker cohort is one of the first to report a positive association between radiation exposure and CLL risk. However, CLL incidence is very low in Japan compared with western populations [52]. Moreover, other studies of protracted, low-dose exposure, including the Techa River cohort [44] and the 15-Country Study [46, 53], have so far shown no association with CLL risk. Because doses were estimated retrospectively and relied on data from in-person interviews, the results of the clean-up worker studies may be biased upward by differential recall between cases and controls. Assessment of dose uncertainty and recall bias is ongoing.

Individual and collaborative studies of uranium miners have provided consistent evidence linking greater exposure to radon and its decay products, including exposure at lower levels, with an increased risk of lung cancer [54–57]. Mean exposures in individual miner studies have ranged from about 20 to 800 working-level months (WLM; one WLM corresponds to 170 h of exposure to air containing radon decay products at a concentration of one working level, WL) [57]. The positive exposure–response relationship between radon and lung cancer has been confirmed in studies of residential radon exposure in the general population at much lower exposure levels (concentrations about one hundredth to one tenth of those found in underground mines [54]), with very similar risks observed per unit radon concentration [55]. A pooled analysis of 13 European studies, with mean measured radon levels of 104 Bq/m³ among lung cancer cases and 97 Bq/m³ among controls, showed an excess risk of lung cancer of 8 % (95 % CI 3–16 %) per 100 Bq/m³, which increased to 16 % per 100 Bq/m³ (95 % CI 5–31 %) after correction for random uncertainties in the radon measurements [58]. UNSCEAR (2006) estimated that the ERR per 100 Bq/m³ in miners is 0.12 (95 % CI 0.04, 0.2), assuming an ERR per 100 WLM of 0.59 (95 % CI 0.35, 1.0) [55]. Studies of uranium miners have also provided important evidence on age- and time-related modifiers, including a decline in risk with increasing time since exposure and, to a lesser extent, with attained age. Several miner studies have shown an inverse exposure-rate effect (a higher risk per WLM at lower exposure rates), but this effect was not observed at lower levels of cumulative exposure [54, 59, 60]. Cigarette smoking is another potentially important effect modifier; however, smoking data have generally been limited in uranium miner studies.
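Relating residential radon concentrations to the miners' WLM scale requires a few conversion assumptions. The Python sketch below is a rough illustration under assumed values that are not taken from the studies above: 1 WL corresponds to an equilibrium-equivalent radon concentration of about 3,700 Bq/m³ (100 pCi/L), an indoor equilibrium factor of 0.4, and about 7,000 h spent indoors per year. It then applies a linear relative-risk model using the pooled European excess risk of 8 % per 100 Bq/m³ (16 % after measurement-error correction). The function names are hypothetical.

```python
# Approximate conversion constants (assumptions, see the lead-in above).
BQ_PER_M3_PER_WL = 3700.0  # equilibrium-equivalent radon conc. of ~1 WL
HOURS_PER_WLM = 170.0      # 1 WLM = 170 h at a concentration of 1 WL


def radon_to_wlm(radon_bq_m3: float, years: float,
                 equilibrium_factor: float = 0.4,
                 hours_per_year: float = 7000.0) -> float:
    """Cumulative exposure (WLM) from a constant indoor radon gas concentration."""
    eec_bq_m3 = radon_bq_m3 * equilibrium_factor  # equilibrium-equivalent conc.
    working_levels = eec_bq_m3 / BQ_PER_M3_PER_WL
    return working_levels * hours_per_year * years / HOURS_PER_WLM


def residential_rr(radon_bq_m3: float, err_per_100_bq: float = 0.08) -> float:
    """Linear relative risk: RR = 1 + (ERR per 100 Bq/m^3) * C / 100."""
    return 1.0 + err_per_100_bq * radon_bq_m3 / 100.0


print(f"{radon_to_wlm(100, 1):.2f} WLM per year at 100 Bq/m^3")
print(f"RR at 200 Bq/m^3: {residential_rr(200):.2f} (uncorrected), "
      f"{residential_rr(200, 0.16):.2f} (error-corrected)")
```

Under these assumptions, a home at 100 Bq/m³ delivers roughly 0.45 WLM per year, so even decades of residential exposure stay far below the mean exposures of most miner cohorts; the linear model gives an RR of 1.16 at 200 Bq/m³ without correction and 1.32 with it.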
In 1999, the BEIR VI report presented results based on six miner studies having partial smoking information, which supported a sub-multiplicative interaction between smoking and radon on lung cancer [54]. A similar but non-significant sub-multiplicative interaction was observed in a recent collaborative analysis of three case–control studies in Europe in which smoking information was constructed based on self-administered questionnaires and occupational medical archives [60]. The ERR/WLM was 0.010 (95 % CI 0.002, 0.078) for never smokers and 0.005 (95 % CI 0.002, 0.13) for ever smokers (P interaction = 0.42). This study also confirmed that the association between radon exposure and lung cancer death persisted after adjustment for smoking (ERR/WLM = 0.008, 95 % CI 0.004, 0.014).
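The meaning of a sub-multiplicative interaction can be made concrete with the stratum-specific point estimates quoted above (ERR/WLM of 0.010 in never smokers and 0.005 in ever smokers). The Python sketch below compares the joint relative risk for an exposed smoker under additive and multiplicative models with the risk implied by the smaller ERR/WLM in smokers. The smoking relative risk of 10 and the exposure of 50 WLM are hypothetical, the function names are illustrative, and the observed interaction was not statistically significant.

```python
def joint_rr_additive(rr_smoking: float, err_per_wlm: float, wlm: float) -> float:
    """Additive model: excess relative risks from smoking and radon simply add."""
    return 1.0 + (rr_smoking - 1.0) + err_per_wlm * wlm


def joint_rr_multiplicative(rr_smoking: float, err_per_wlm: float, wlm: float) -> float:
    """Multiplicative model: smoking multiplies the radon RR (1 + ERR/WLM x WLM)."""
    return rr_smoking * (1.0 + err_per_wlm * wlm)


ERR_NEVER = 0.010   # ERR/WLM in never smokers (point estimate quoted above)
ERR_EVER = 0.005    # ERR/WLM in ever smokers (point estimate quoted above)
RR_SMOKING = 10.0   # hypothetical RR for ever versus never smoking
WLM = 50.0          # hypothetical cumulative radon exposure

additive = joint_rr_additive(RR_SMOKING, ERR_NEVER, WLM)              # 1 + 9 + 0.5
multiplicative = joint_rr_multiplicative(RR_SMOKING, ERR_NEVER, WLM)  # 10 x 1.5
# Applying the smaller ERR/WLM estimated among smokers:
stratified = joint_rr_multiplicative(RR_SMOKING, ERR_EVER, WLM)       # 10 x 1.25
print(additive, multiplicative, stratified)
```

The stratified value (12.5) falls below the multiplicative prediction (15.0) but above the additive one (10.5), which is what "sub-multiplicative" means in this context.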

Can Low Dose Radiation Exposure Cause Circulatory Disease?

Until the 1990s, cancer was the only established stochastic effect of ionizing radiation exposure. Beginning in the late 1990s, evidence emerged that very high cardiac doses from radiotherapy were related to increased cardiovascular mortality [61], and doses above 0.5 Gy also appeared to increase risk in the LSS [62]. Whether doses below 0.5 Gy influence the risk of circulatory diseases has remained uncertain, in part because of a lack of information on possible biological mechanisms. Both BEIR VII and UNSCEAR concluded that the data were insufficient to establish an association between lower-dose ionizing radiation and an increased risk of circulatory disease [1, 2]. Since those reviews, a number of occupational cohorts have reported possible increased risks of ischemic heart disease and stroke at lower doses, including studies of Mayak workers [63, 64], some data from the NRRW-3 study of nuclear industry workers [49•], and a cohort of male employees of British Nuclear Fuels Public Limited Company (which contributed some data to NRRW-3) [65]. However, no association was observed for circulatory disease mortality in the 15-Country Study, which included data from multiple cohorts of nuclear facility workers, including the NRRW [66]. A systematic review and meta-analysis of studies based on LSS data and occupational cohorts, published between 1990 and 2010, on low-to-moderate whole-body ionizing radiation and circulatory disease found elevated ERRs/Sv for four broad groups of circulatory diseases: ischemic heart disease (0.10, 95 % CI 0.05, 0.15), non-ischemic heart disease (0.12, 95 % CI −0.01, 0.25), cerebrovascular disease (0.20, 95 % CI 0.14, 0.25), and circulatory diseases other than ischemic heart and cerebrovascular disease (0.10, 95 % CI 0.05, 0.14) [67•]. There was, however, significant heterogeneity between studies, most likely due to differences in the quality of dose estimates and in the classification of endpoints.
Although the excess relative risk is much lower for circulatory disease than for cancer, the much higher baseline rates of circulatory disease mean that, if the low-dose risk is confirmed in future studies, the absolute excess risks would be similar in magnitude to those for cancer [67•].
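The arithmetic behind this point is that, under a linear ERR model, excess cases ≈ ERR/Sv × dose × baseline rate × person-years. The Python sketch below uses the ischemic heart disease ERR/Sv of 0.10 from the meta-analysis above; the cancer ERR/Sv of 0.5, both baseline rates, the dose, and the person-years are hypothetical values chosen only for illustration.

```python
def excess_cases(err_per_sv: float, dose_sv: float,
                 baseline_rate_per_py: float, person_years: float) -> float:
    """Expected excess cases under a linear ERR model:
    ERR(D) x baseline rate x person-years, with ERR(D) = ERR/Sv x D."""
    return err_per_sv * dose_sv * baseline_rate_per_py * person_years


DOSE_SV = 0.1
PERSON_YEARS = 100_000

# ERR/Sv 0.10 (quoted above); baseline of 400 per 100,000 PY is hypothetical.
circulatory = excess_cases(0.10, DOSE_SV, 400 / 100_000, PERSON_YEARS)
# ERR/Sv 0.5 and baseline of 80 per 100,000 PY are both hypothetical.
cancer = excess_cases(0.5, DOSE_SV, 80 / 100_000, PERSON_YEARS)
print(round(circulatory, 2), round(cancer, 2))
```

With these inputs both outcomes yield about 4 excess cases: a fivefold smaller ERR is offset by a fivefold higher baseline rate.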

A major concern in studies of low-dose ionizing radiation exposure and circulatory disease risks is the potential for confounding by smoking and other lifestyle-related factors, particularly in the nuclear worker cohorts, which lack this information. Of the published studies to date, data on lifestyle risk factors for circulatory diseases were only collected in the LSS [68] and Mayak worker [63, 64] cohorts exposed generally to low-to-moderate doses of radiation. Associations observed for radiation exposure and ischemic heart disease and cerebrovascular disease in these cohorts did not differ materially after adjustment for these additional risk factors, including hypertension, body mass index, cigarette smoking, alcohol intake, and history of diabetes [63, 64, 68]. Furthermore, the NRRW-3 cohort, which did not collect information on potential confounders, showed significant excess risks for all circulatory diseases combined that were slightly stronger than, but nonetheless compatible with, findings from LSS [49•]. The US Radiologic Technologists cohort provides a unique opportunity to assess low-dose radiation and cardiovascular and stroke risk with adjustment for potential confounders from detailed questionnaire information. Results are expected in the next couple of years.

In the largest study to date of patients exposed to fractionated low-to-moderate doses (mean cumulative lung dose = 0.79 Gy, range 0–11.60 Gy), Zablotska and colleagues found that exposure to multiple fluoroscopic examinations to monitor tuberculosis treatment was associated with a significantly increased risk of mortality from ischemic heart disease overall, after adjustment for dose fractionation, as well as a significant inverse dose-fractionation association, with the highest risks observed for patients who underwent the fewest fluoroscopic procedures per year [69•]. Ischemic heart disease risk declined with increasing time since first exposure and with increasing age at first exposure. These results, while informative for radiation-exposed patient populations, require replication.

Risks of Cataracts

Recent studies have challenged previous conclusions [70, 71] that only high radiation doses to the lens of the eye (>2 Gy for acute and >5 Gy for fractionated or protracted exposures) increase the subsequent risk of cataracts. A large prospective study of US radiologic technologists found a positive but non-significant ERR/Gy (1.98, 95 % CI −0.69, 4.65) for the association between occupational radiation exposure and cataract risk [72]. Increased risks of borderline statistical significance were observed for workers in the highest (mean = 60 mGy) versus lowest (mean = 5 mGy) category of occupational lens dose (HR = 1.18, 95 % CI 0.99, 1.40). In addition, having personally received three or more diagnostic X-rays to the face and/or neck was associated with a 25 % increased risk of cataracts (HR = 1.25, 95 % CI 1.06, 1.47) after adjustment for occupational dose and other covariates [72]. A study of Chernobyl liquidators, 94 % of whom received lens doses <400 mGy, found a suggestive dose–response association for stage 2–5 cataracts at doses above 200–400 mGy, and early, precataractous changes at lens doses under 400 mGy [73]. These findings are consistent with an LSS study showing increased risks of cataracts at low-to-moderate doses, with dose thresholds well below 1 Gy [74], and they have important implications for radiation safety regulations. As a result, in 2011 the International Commission on Radiological Protection (ICRP) issued a statement lowering the threshold in absorbed dose to the lens of the eye to 0.5 Gy [5].
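Whether a threshold exists can be framed as a choice between two dose–response shapes. The Python sketch below contrasts a linear no-threshold ERR with a linear-threshold ERR that is zero until the dose exceeds a threshold. The slope of 1.0 per Gy is hypothetical, and 0.5 Gy is used only because it is the lens threshold in the ICRP statement cited above; real analyses estimate the slope and any threshold jointly from the data.

```python
def err_lnt(dose_gy: float, beta_per_gy: float) -> float:
    """Linear no-threshold: excess relative risk proportional to dose."""
    return beta_per_gy * dose_gy


def err_threshold(dose_gy: float, beta_per_gy: float, threshold_gy: float) -> float:
    """Linear-threshold: no excess risk until dose exceeds the threshold."""
    return beta_per_gy * max(0.0, dose_gy - threshold_gy)


BETA = 1.0       # hypothetical ERR/Gy
THRESHOLD = 0.5  # Gy, value taken from the 2011 ICRP lens statement
for dose in (0.1, 0.3, 0.5, 1.0, 2.0):
    print(f"{dose:.1f} Gy: LNT ERR = {err_lnt(dose, BETA):.2f}, "
          f"threshold ERR = {err_threshold(dose, BETA, THRESHOLD):.2f}")
```

The two models diverge most in the range below the threshold, which is why studies with many subjects at lens doses of a few hundred mGy or less carry so much weight in this debate.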

Next Generation Sequencing

The notion that some individuals are more sensitive to the effects of radiation than others has long been supported by the increased sensitivity of individuals with certain rare hereditary disorders (e.g., ataxia telangiectasia and Nijmegen breakage syndrome) [75, 76]. However, these cancer susceptibility syndromes affect only a small proportion of the general population. Because multiple genetic pathways (including DNA damage repair, radiation fibrogenesis, oxidative stress, and endothelial cell damage) have been implicated in radiosensitivity, at least part of the genetic contribution to radiation susceptibility is thought to follow a polygenic model, in which elevated risk results from inheriting several low-penetrance risk alleles (the “common-variant–common-disease” model) [77].
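A polygenic model of this kind is often formalized as log-additive: each risk allele multiplies the odds by a modest per-allele odds ratio, and the effects of independent variants combine multiplicatively. The Python sketch below assumes that model; the ten variants, their per-allele odds ratio of 1.1, and the genotype are hypothetical.

```python
import math


def polygenic_odds_ratio(risk_allele_counts: list[int],
                         per_allele_or: list[float]) -> float:
    """Odds ratio relative to a person carrying no risk alleles, assuming each
    variant acts independently and multiplicatively (log-additive model)."""
    if len(risk_allele_counts) != len(per_allele_or):
        raise ValueError("one odds ratio is needed per variant")
    log_or = 0.0
    for count, odds_ratio in zip(risk_allele_counts, per_allele_or):
        if count not in (0, 1, 2):
            raise ValueError("allele counts must be 0, 1 or 2")
        log_or += count * math.log(odds_ratio)
    return math.exp(log_or)


# Ten hypothetical variants, each with a modest per-allele OR of 1.1.
ors = [1.1] * 10
carrier = [2, 2, 1, 1, 1, 1, 1, 1, 1, 1]  # 12 risk alleles in total
print(round(polygenic_odds_ratio(carrier, ors), 2))
```

Although no single variant raises the odds by more than 10 %, carrying 12 such alleles gives an odds ratio of about 3.14 (1.1 to the 12th power), which illustrates how common low-penetrance variants could add up to meaningful differences in susceptibility.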

The BEIR VII section on biological effects of radiation focused on the cellular level, since epidemiological data addressing genetic susceptibility to radiation effects were scant at the time [1]. Since then, a number of population-based epidemiological studies have examined genetic susceptibility to radiation-related cancer risk using the “candidate-SNP” approach, which assumes prior knowledge of one or more functional single nucleotide polymorphisms (SNPs). Suggestive interactions have been observed between DNA repair SNPs and ionizing radiation for glioma [78, 79], as well as between ionizing radiation and common variants in genes involved in DNA damage repair, apoptosis, and proliferation in a series of nested case–control studies of breast cancer in US radiologic technologists [80–83] and in a small hospital-based study of breast cancer [84]. However, none of these results has been convincingly replicated to date. A slightly different approach has been to examine breast cancer risk associated with diagnostic X-ray or mammogram exposure in high-risk individuals (carriers of BRCA1 and BRCA2 mutations). Most [85–87], but not all [88], studies of chest X-rays have reported a slightly elevated risk of breast cancer in at least one exposure subgroup. For mammograms, the association has generally been null [89–91]. Interpretation is difficult because all of these studies are subject to one or more of the following biases: self-reported exposure, possible confounding by indication, lack of a consistent dose–response association, subgroup findings that could be due to chance, and overlapping study populations. The observation of statistically significant associations with X-rays but not with mammograms points strongly to recall bias in the X-ray findings, given that self-reported mammogram use is highly accurate [92].

While earlier genetic studies focused on a handful of candidate genes, it is now possible to examine comprehensively the approximately 25,000 coding genes and associated functional elements, thanks to high-throughput technologies that can analyze thousands of genetic markers simultaneously at relatively low cost, the mapping of linkage disequilibrium between common SNPs across the genome [93], and the definition of functional elements critical for regulation and genomic stability [94]. The genome-wide association study (GWAS) approach has successfully identified hundreds of risk loci in germline DNA for various cancers [95]. However, assessing gene–environment interaction for many known environmental carcinogens, including radiation, has remained elusive. GWAS of adult contralateral breast cancer in the WECARE study [96] and of subsequent malignancies in the Childhood Cancer Survivor Study [97] (both of which have detailed radiation doses from radiotherapy) have been undertaken, but results have yet to be published.
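Linkage disequilibrium is what lets a GWAS genotype a subset of SNPs and still capture signals at nearby, untyped variants. The standard pairwise measures are D = p_AB − p_A·p_B and r² = D² / [p_A(1 − p_A)·p_B(1 − p_B)], where p_AB is the frequency of the haplotype carrying allele A at one SNP and allele B at the other. A minimal Python sketch with hypothetical frequencies follows; the function name is illustrative.

```python
def linkage_disequilibrium(p_ab: float, p_a: float, p_b: float) -> tuple[float, float]:
    """Return (D, r^2) for two biallelic SNPs.

    p_ab: frequency of haplotypes carrying allele A at SNP 1 and allele B at SNP 2
    p_a, p_b: population frequencies of alleles A and B
    """
    for p in (p_a, p_b):
        if not 0.0 < p < 1.0:
            raise ValueError("both SNPs must be polymorphic (0 < p < 1)")
    d = p_ab - p_a * p_b
    r2 = d * d / (p_a * (1 - p_a) * p_b * (1 - p_b))
    return d, r2


# Hypothetical allele and haplotype frequencies.
print(linkage_disequilibrium(0.25, 0.3, 0.3))
```

Here D = 0.16 and r² ≈ 0.58; an r² near 1 would mean that genotyping either SNP effectively captures the other, while r² near 0 means the two vary independently in the population.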

Huge advances in DNA sequencing technology have also yielded path-breaking insights into somatic mutations. The Cancer Genome Atlas (TCGA), launched in 2005, and the International Cancer Genome Consortium (ICGC), launched in 2008, have been the two main projects driving a comprehensive understanding of cancer genetics. These projects characterize not only the genome but also aspects of the transcriptome and epigenome, giving a fuller picture of how genes contribute to tumorigenesis. To date, more than 30 distinct human tumor types have been analyzed through large-scale genome sequencing and integrated multi-dimensional analyses, yielding insights into individual cancer types and across cancers, particularly for the accurate molecular classification of tumors [98]. Most of these discoveries concern the genome itself rather than associated environmental factors. However, a recent landmark paper examining 4,938,362 mutations from 7042 cancers identified strong mutational signatures in tumor tissue that mark exposure to tobacco carcinogens and ultraviolet radiation [99]. The tobacco signature was most evident in cancers of the lung, head and neck, and liver, and the ultraviolet signature was observed mainly in malignant melanoma and squamous carcinoma of the head and neck. Studies to look for a similar tumor tissue signature of ionizing radiation are being planned in populations environmentally exposed to low doses of ionizing radiation.
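One step in signature analysis is to compare a tumor's mutation spectrum with reference signatures, commonly by cosine similarity. The landmark analysis extracted signatures with non-negative matrix factorization over 96 trinucleotide-context classes; the Python sketch below does not reproduce that. It collapses mutations to the six single-base substitution classes and only scores a tumor against predefined profiles. Both reference profiles and the tumor counts are invented for illustration, loosely reflecting the C>A enrichment associated with tobacco and the C>T enrichment associated with ultraviolet light.

```python
import math

# The six single-base substitution classes (pyrimidine reference base).
CLASSES = ("C>A", "C>G", "C>T", "T>A", "T>C", "T>G")

# Hypothetical reference profiles, ordered as CLASSES (illustration only).
REFERENCE_SIGNATURES = {
    "tobacco-like": (0.60, 0.10, 0.10, 0.10, 0.05, 0.05),
    "UV-like": (0.05, 0.05, 0.80, 0.03, 0.04, 0.03),
}


def cosine_similarity(u, v) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def best_matching_signature(mutation_counts):
    """Return the closest reference signature and all similarity scores."""
    if len(mutation_counts) != len(CLASSES):
        raise ValueError("expected one count per substitution class")
    scores = {name: cosine_similarity(mutation_counts, profile)
              for name, profile in REFERENCE_SIGNATURES.items()}
    best = max(scores, key=scores.get)
    return best, scores


tumor = (120, 15, 30, 10, 12, 8)  # hypothetical counts, ordered as CLASSES
name, scores = best_matching_signature(tumor)
print(name, {k: round(s, 2) for k, s in scores.items()})
```

This hypothetical tumor, dominated by C>A changes, matches the tobacco-like profile (similarity about 0.99) far better than the UV-like one (about 0.31). A radiation signature, if one exists, would be sought in the same way once a reference profile has been established.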

Certainly, the new era of low-dose radiation epidemiologic studies of cancer and other serious disease risks will continue to feature a search for common genetic markers that can identify individuals susceptible to the effects of radiation, and for “signatures” that can identify radiation exposure as a causal factor for a particular tumor. While these studies face several challenges (including the need for large sample sizes, high-quality assessment of exposure to both radiation and potential confounding factors, and meaningful replication sets), the integrated characterization of germline and somatic alterations, as genotyping and analysis methods evolve rapidly over the next few decades, promises to open exciting new avenues of research. If a radiation “signature” could be identified in individuals who received low-dose exposures, this would represent a paradigm shift in the debate over the linear no-threshold model.
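The need for large sample sizes can be quantified with a standard two-proportion calculation. The sketch below rests on simplifying assumptions of our own: a single binary marker, equal numbers of cases and controls, a hypothetical carrier frequency of 30% in cases versus 20% in controls, and a Bonferroni-style genome-wide threshold of 5 × 10⁻⁸ (0.05 divided by roughly one million independent tests). It addresses a main effect only; detecting a gene–radiation interaction of similar magnitude generally requires considerably larger samples. The function name `cases_per_group` is hypothetical.

```python
import math
from statistics import NormalDist


def cases_per_group(p_cases: float, p_controls: float,
                    alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate number of cases (and an equal number of controls) needed to
    detect a difference between the marker frequency in cases (p_cases) and in
    controls (p_controls) with a two-sided test at level alpha.

    Normal-approximation formula for two proportions, no continuity correction.
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)
    z_beta = std_normal.inv_cdf(power)
    p_bar = (p_cases + p_controls) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_cases * (1 - p_cases) + p_controls * (1 - p_controls))
    ) ** 2
    return math.ceil(numerator / (p_cases - p_controls) ** 2)


# Hypothetical marker carried by 30% of cases and 20% of controls
single_test = cases_per_group(0.30, 0.20, alpha=0.05)
# Genome-wide threshold: 0.05 / 1e6 = 5e-8
genome_wide = cases_per_group(0.30, 0.20, alpha=5e-8)
print(single_test, genome_wide)
```

With these inputs a single pre-specified test needs 294 cases and 294 controls, while the genome-wide threshold raises the requirement roughly five-fold, before any allowance for an independent replication set.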

Conclusions

The last decade has introduced a new era of low-dose radiation epidemiology. Record linkage studies have suggested for the first time that pediatric CT scans may increase cancer risk and that natural background radiation may contribute to childhood leukemia. Large pooling projects of occupational cohorts have provided additional insights into the risks from protracted radiation exposure, and have also raised questions about the risk of other stochastic effects after low-dose exposures, including cardiovascular disease and cataracts. There are potential sources of bias in all of these populations, but the case for causality is strengthened by the evidence of a dose–response relationship and by consistency with the existing evidence at higher doses. In the next decade, integrated characterization of both germline and somatic alterations (including inherited mutations and somatic and epigenetic changes) in populations with well-characterized exposure to ionizing radiation could further advance our understanding of thresholds, the radiosensitivity of population subgroups and individuals, and the mechanisms of radiation carcinogenesis. These developments will be keenly followed as medical imaging technologies continue to advance and spread, and as nuclear power plant accidents and other radiological emergencies remain a threat to populations around the world.

References

Papers of particular interest, published recently, have been highlighted as: • Of importance

BEIR VII Phase 2. Health risks from exposure to low levels of ionizing radiation. Washington DC: National Research Council, 2006.

United Nations Scientific Committee on the Effects of Atomic Radiation (UNSCEAR). Effects of Ionizing Radiation. Volume I: Report to the General Assembly, with Scientific Annexes. New York: United Nations, 2006.

National Radiological Protection Board. Estimates of late radiation risks to the United Kingdom population. Chapter 6: Irradiation in utero. Documents of the NRPB, vol. 4, 1993.

Brenner DJ, Doll R, Goodhead DT, et al. Cancer risks attributable to low doses of ionizing radiation: assessing what we really know. Proc Natl Acad Sci U S A. 2003;100(24):13761–6.

International Commission on Radiological Protection (ICRP). ICRP statement on tissue reactions/early and late effects of radiation in normal tissues and organs—threshold doses for tissue reactions in a radiation protection context. ICRP publication 118. Ann ICRP. 2012;41(1–2):1–322.

National Council on Radiation Protection and Measurements (NCRP). Ionizing radiation exposure of the population of the United States. NCRP Report No. 160. NCRP: Bethesda, MD, 2009.

Frush DP, Donnelly LF, Bisset 3rd GS. Effect of scan delay on hepatic enhancement for pediatric abdominal multislice helical CT. AJR Am J Roentgenol. 2001;176(6):1559–61.

Pearce MS, Salotti JA, Little MP, et al. Radiation exposure from CT scans in childhood and subsequent risk of leukaemia and brain tumours: a retrospective cohort study. Lancet. 2012;380(9840):499–505. This was the first study to directly examine cancer risks in patients who had undergone CT scans, and the observed risks were broadly consistent with those in the Japanese atomic bomb survivors.

Mathews JD, Forsythe AV, Brady Z, et al. Cancer risk in 680 000 people exposed to computed tomography scans in childhood or adolescence: data linkage study of 11 million Australians. BMJ. 2013;346:f2360.

Meulepas JM, Ronckers CM, Smets AM, et al. Leukemia and brain tumors among children after radiation exposure from CT scans: design and methodological opportunities of the Dutch Pediatric CT Study. Eur J Epidemiol. 2014;29(4):293–301.

Krille L, Dreger S, Schindel R, et al. Risk of cancer incidence before the age of 15 years after exposure to ionising radiation from computed tomography: results from a German cohort study. Radiat Environ Biophys. 2015;54(1):1–12.

Drozdovitch V, Brill AB, Mettler Jr FA, et al. Nuclear medicine practices in the 1950s through the mid-1970s and occupational radiation doses to technologists from diagnostic radioisotope procedures. Health Phys. 2014;107(4):300–10.

Kim KP, Miller DL, Berrington de Gonzalez A. Occupational radiation doses to operators performing fluoroscopically-guided procedures. Health Phys. 2012;103(1):80–99.

Jacob S, Boveda S, Bar O, et al. Interventional cardiologists and risk of radiation-induced cataract: results of a French multicenter observational study. Int J Cardiol. 2013;167(5):1843–7.

United Nations Scientific Committee on the Effects of Atomic Radiation (UNSCEAR). Sources, effects, and risks of ionizing radiation. Report to the General Assembly, with Scientific Annexes. New York: United Nations, 2013.

International Agency for Research on Cancer [Internet]. ARCH: Agenda for Research on Chernobyl Health; 2015 [cited 2015 March 2]. Available from arch.iarc.fr.

National Academies/National Research Council. Radiation dose reconstruction for epidemiologic uses. Washington DC: National Academy Press; 1995.

International Commission on Radiation Units and Measurements (ICRU). Retrospective assessment of exposure to ionizing radiation. ICRU Report 68, Volume 2, No. 2, 2002.

National Council on Radiation Protection and Measurements (NCRP). Radiation dose reconstruction: principles and practices. NCRP Report No. 163. NCRP: Bethesda, MD, 2009.

Gilbert ES. Accounting for errors in dose estimates used in studies of workers exposed to external radiation. Health Phys. 1998;74:22–9.

National Council on Radiation Protection and Measurements (NCRP). Uncertainties in the measurement and dosimetry of external radiation. NCRP Report No. 158. NCRP: Bethesda, MD, 2007.

National Council on Radiation Protection and Measurements (NCRP). Uncertainties in the estimation of radiation risks and probability of disease causation. NCRP Report No. 171. NCRP: Bethesda, MD, 2012.

National Council on Radiation Protection and Measurements (NCRP). Uncertainties in internal radiation dose assessment. NCRP Report No. 164. NCRP: Bethesda, MD, 2009.

Gilbert ES, Thierry-Chef I, Cardis E, et al. External dose estimation for nuclear worker studies. Radiat Res. 2006;166(1 Pt 2):168–73.

Beck HL, Anspaugh LR, Bouville A, et al. Review of methods of dose estimation for epidemiological studies of the radiological impact of Nevada test site and global fallout. Radiat Res. 2006;166(1 Pt 2):209–18.

Thierry-Chef I, Marshall M, Fix JJ, et al. The 15-country collaborative study of cancer risk among radiation workers in the nuclear industry: study of errors in dosimetry. Radiat Res. 2007;167(4):380–95.

Kim KP, Berrington de Gonzalez A, Pearce MS. Development of a database of organ doses for paediatric and young adult CT scans in the United Kingdom. Radiat Prot Dosimetry. 2012;150(4):415–26.

Simon SL, Preston DL, Linet MS, et al. Radiation organ doses received in a nationwide cohort of U.S. radiologic technologists: methods and findings. Radiat Res. 2014;182(5):507–28.

Simon SL, Bouville A, Kleinerman R. Current use and future needs of biodosimetry in studies of long-term health risk following radiation exposure. Health Phys. 2010;98(2):109–17.

National Academies/National Research Council. A review of the dose reconstruction program of the Defense Threat Reduction Agency. Washington DC: National Academy Press; 2003.

United Nations Scientific Committee on the Effects of Atomic Radiation (UNSCEAR). Report to the General Assembly, Volume 1: Effects of Ionizing Radiation with Scientific Annexes A and B. New York: United Nations, 2008.

Einstein AJ. Beyond the bombs: cancer risks of low-dose medical radiation. Lancet. 2012;380(9840):455–7.

Ronckers CM, Doody MM, Lonstein JE, et al. Multiple diagnostic X-rays for spine deformities and risk of breast cancer. Cancer Epidemiol Biomarkers Prev. 2008;17(3):605–13.

Rajaraman P, Simpson J, Neta G, et al. Early life exposure to diagnostic radiation and ultrasound scans and risk of childhood cancer: case–control study. BMJ. 2011;342:d472.

Kohli S, Noorlind Brage H, Lofman O. Childhood leukaemia in areas with different radon levels: a spatial and temporal analysis using GIS. J Epidemiol Community Health. 2000;54(11):822–6.

Raaschou-Nielsen O, Andersen CE, Andersen HP, et al. Domestic radon and childhood cancer in Denmark. Epidemiology. 2008;19(4):536–43.

UK Childhood Cancer Study Investigators. The United Kingdom Childhood Cancer Study of exposure to domestic sources of ionising radiation: 1: radon gas. Br J Cancer. 2002;86(11):1721–6.

UK Childhood Cancer Study Investigators. The United Kingdom Childhood Cancer Study of exposure to domestic sources of ionising radiation: 2: gamma radiation. Br J Cancer. 2002;86(11):1727–31.

Richardson S, Monfort C, Green M, et al. Spatial variation of natural radiation and childhood leukaemia incidence in Great Britain. Stat Med. 1995;14(21–22):2487–501.

Little MP, Wakeford R, Lubin JH, et al. The statistical power of epidemiological studies analyzing the relationship between exposure to ionizing radiation and cancer, with special reference to childhood leukemia and natural background radiation. Radiat Res. 2010;174(3):387–402.

Kendall GM, Little MP, Wakeford R, et al. A record-based case–control study of natural background radiation and the incidence of childhood leukaemia and other cancers in Great Britain during 1980–2006. Leukemia. 2013;27(1):3–9. This study was one of the first (and one of the largest) to provide direct evidence of an association between natural background radiation exposure and childhood leukemia risk.

Spycher BD, Lupatsch JE, Zwahlen M, et al. Background ionizing radiation and the risk of childhood cancer: a census-based nationwide cohort study. Environ Health Perspect. 2015 [Epub ahead of print 2015 Feb 23].

Hauri D, Spycher B, Huss A, et al. Domestic radon exposure and risk of childhood cancer: a prospective census-based cohort study. Environ Health Perspect. 2013;121(10):1239–44.

Schonfeld SJ, Krestinina LY, Epifanova S, et al. Solid cancer mortality in the Techa River cohort (1950–2007). Radiat Res. 2013;179(2):183–9.

Krestinina LY, Davis FG, Schonfeld S, et al. Leukaemia incidence in the Techa River Cohort: 1953–2007. Br J Cancer. 2013;109(11):2886–93.

Cardis E, Vrijheid M, Blettner M, et al. The 15-country collaborative study of cancer risk among radiation workers in the nuclear industry: estimates of radiation-related cancer risks. Radiat Res. 2007;167(4):396–416.

Zablotska LB, Lane RS, Thompson PA. A reanalysis of cancer mortality in Canadian nuclear workers (1956–1994) based on revised exposure and cohort data. Br J Cancer. 2014;110(1):214–23.

Wakeford R. Nuclear worker studies: promise and pitfalls. Br J Cancer. 2014;110(1):1–3.

Muirhead CR, O’Hagan JA, Haylock RG, et al. Mortality and cancer incidence following occupational radiation exposure: third analysis of the National Registry for Radiation Workers. Br J Cancer. 2009;100(1):206–12. This large, high quality, occupational cohort found increased cancer risks from low-dose, protracted radiation exposure.

Zablotska LB, Bazyka D, Lubin JH, et al. Radiation and the risk of chronic lymphocytic and other leukemias among Chornobyl cleanup workers. Environ Health Perspect. 2013;121(1):59–65.

Kesminiene A, Evrard AS, Ivanov VK, et al. Risk of hematological malignancies among Chernobyl liquidators. Radiat Res. 2008;170(6):721–35.

Hsu WL, Preston DL, Soda M, et al. The incidence of leukemia, lymphoma and multiple myeloma among atomic bomb survivors: 1950–2001. Radiat Res. 2013;179(3):361–82.

Vrijheid M, Cardis E, Ashmore P, et al. Ionizing radiation and risk of chronic lymphocytic leukemia in the 15-country study of nuclear workers. Radiat Res. 2008;170(5):661–5.

BEIR VI Report. Committee on Health Risks of Exposure to Radon, Board on Radiation Effects Research. Health effects of exposure to radon. Washington DC: National Research Council; 1999.

United Nations Scientific Committee on the Effects of Atomic Radiation (UNSCEAR). Volume II: scientific annex E. sources-to-effects assessment for radon in homes and workplaces. New York: United Nations; 2006.

International Commission on Radiological Protection (ICRP). Lung cancer risk from radon and progeny and statement on radon. ICRP Publication 115. Ann ICRP. 2010;40(1):1–64.

Tirmarche M, Harrison J, Laurier D, et al. Risk of lung cancer from radon exposure: contribution of recently published studies of uranium miners. Ann ICRP. 2012;41(3–4):368–77.

Darby S, Hill D, Auvinen A, et al. Radon in homes and risk of lung cancer: collaborative analysis of individual data from 13 European case–control studies. BMJ. 2005;330(7485):223.

Tomasek L, Rogel A, Tirmarche M, et al. Lung cancer in French and Czech uranium miners: radon-associated risk at low exposure rates and modifying effects of time since exposure and age at exposure. Radiat Res. 2008;169(2):125–37.

Leuraud K, Schnelzer M, Tomasek L, et al. Radon, smoking and lung cancer risk: results of a joint analysis of three European case–control studies among uranium miners. Radiat Res. 2011;176(3):375–87.

Darby SC, Ewertz M, McGale P, et al. Risk of ischemic heart disease in women after radiotherapy for breast cancer. N Engl J Med. 2013;368(11):987–98.

Shimizu Y, Pierce DA, Preston DL, et al. Studies of the mortality of atomic bomb survivors. Report 12, part II. Noncancer mortality: 1950–1990. Radiat Res. 1999;152(4):374–89.

Azizova TV, Haylock RG, Moseeva MB, et al. Cerebrovascular diseases incidence and mortality in an extended Mayak Worker Cohort 1948–1982. Radiat Res. 2014;182(5):529–44.

Moseeva MB, Azizova TV, Grigoryeva ES, et al. Risks of circulatory diseases among Mayak PA workers with radiation doses estimated using the improved Mayak Worker Dosimetry System 2008. Radiat Environ Biophys. 2014;53(2):469–77.

McGeoghegan D, Binks K, Gillies M, et al. The non-cancer mortality experience of male workers at British Nuclear Fuels plc, 1946–2005. Int J Epidemiol. 2008;37(3):506–18.

Vrijheid M, Cardis E, Ashmore P, et al. Mortality from diseases other than cancer following low doses of ionizing radiation: results from the 15-Country Study of nuclear industry workers. Int J Epidemiol. 2007;36(5):1126–35.

Little MP, Azizova TV, Bazyka D, et al. Systematic review and meta-analysis of circulatory disease from exposure to low-level ionizing radiation and estimates of potential population mortality risks. Environ Health Perspect. 2012;120(11):1503–11. This review supported an overall positive association between radiation exposure and risk of circulatory diseases, and predicted that current estimates vastly underestimate mortality attributable to radiation.

Shimizu Y, Kodama K, Nishi N, et al. Radiation exposure and circulatory disease risk: Hiroshima and Nagasaki atomic bomb survivor data, 1950–2003. BMJ. 2010;340:b5349.

Zablotska LB, Little MP, Cornett RJ. Potential increased risk of ischemic heart disease mortality with significant dose fractionation in the Canadian Fluoroscopy Cohort Study. Am J Epidemiol. 2014;179(1):120–31. This study of tuberculosis patients provides evidence that moderate-dose fractionated radiation exposure increases the risk of ischemic heart disease.

International Commission on Radiological Protection (ICRP). 1990 Recommendations of the International Commission on Radiological Protection. ICRP Publication 60. Ann ICRP. 1991;21(1–3):1–201.

National Council on Radiation Protection and Measurements (NCRP). Limitation of Exposure to Ionizing Radiation. NCRP Report No. 116. NCRP: Bethesda, MD, 1993.

Chodick G, Bekiroglu N, Hauptmann M, et al. Risk of cataract after exposure to low doses of ionizing radiation: a 20-year prospective cohort study among US radiologic technologists. Am J Epidemiol. 2008;168(6):620–31.

Worgul BV, Kundiyev YI, Sergiyenko NM, et al. Cataracts among Chernobyl clean-up workers: implications regarding permissible eye exposures. Radiat Res. 2007;167(2):233–43.

Neriishi K, Nakashima E, Minamoto A, et al. Postoperative cataract cases among atomic bomb survivors: radiation dose response and threshold. Radiat Res. 2007;168(4):404–8.

Taylor AM, Harnden DG, Arlett CF, et al. Ataxia telangiectasia: a human mutation with abnormal radiation sensitivity. Nature. 1975;258(5534):427–9.

Taalman RD, Jaspers NG, Scheres JM, et al. Hypersensitivity to ionizing radiation, in vitro, in a new chromosomal breakage disorder, the Nijmegen Breakage Syndrome. Mutat Res. 1983;112(1):23–32.

Barnett GC, West CM, Dunning AM, et al. Normal tissue reactions to radiotherapy: towards tailoring treatment dose by genotype. Nat Rev Cancer. 2009;9(2):134–42.

Liu Y, Shete S, Wang LE, et al. Gamma-radiation sensitivity and polymorphisms in RAD51L1 modulate glioma risk. Carcinogenesis. 2010;31(10):1762–9.

Bondy ML, Wang LE, El-Zein R, et al. Gamma-radiation sensitivity and risk of glioma. J Natl Cancer Inst. 2001;93(20):1553–7.

Bhatti P, Struewing JP, Alexander BH, et al. Polymorphisms in DNA repair genes, ionizing radiation exposure and risk of breast cancer in U.S. Radiologic technologists. Int J Cancer. 2008;122(1):177–82.

Rajaraman P, Bhatti P, Doody MM, et al. Nucleotide excision repair polymorphisms may modify ionizing radiation-related breast cancer risk in US radiologic technologists. Int J Cancer. 2008;123(11):2713–6.

Bhatti P, Doody MM, Rajaraman P, et al. Novel breast cancer risk alleles and interaction with ionizing radiation among U.S. radiologic technologists. Radiat Res. 2010;173(2):214–24.

Sigurdson AJ, Bhatti P, Doody MM, et al. Polymorphisms in apoptosis- and proliferation-related genes, ionizing radiation exposure, and risk of breast cancer among U.S. Radiologic Technologists. Cancer Epidemiol Biomarkers Prev. 2007;16(10):2000–7.

Hu JJ, Smith TR, Miller MS, et al. Genetic regulation of ionizing radiation sensitivity and breast cancer risk. Environ Mol Mutagen. 2002;39(2–3):208–15.

Andrieu N, Easton DF, Chang-Claude J, et al. Effect of chest X-rays on the risk of breast cancer among BRCA1/2 mutation carriers in the international BRCA1/2 carrier cohort study: a report from the EMBRACE, GENEPSO, GEO-HEBON, and IBCCS Collaborators’ Group. J Clin Oncol. 2006;24(21):3361–6.

Lecarpentier J, Nogues C, Mouret-Fourme E, et al. Variation in breast cancer risk with mutation position, smoking, alcohol, and chest X-ray history, in the French National BRCA1/2 carrier cohort (GENEPSO). Breast Cancer Res Treat. 2011;130(3):927–38.

Gronwald J, Pijpe A, Byrski T, et al. Early radiation exposures and BRCA1-associated breast cancer in young women from Poland. Breast Cancer Res Treat. 2008;112(3):581–4.

John EM, McGuire V, Thomas D, et al. Diagnostic chest X-rays and breast cancer risk before age 50 years for BRCA1 and BRCA2 mutation carriers. Cancer Epidemiol Biomarkers Prev. 2013;22(9):1547–56.

Giannakeas V, Lubinski J, Gronwald J, et al. Mammography screening and the risk of breast cancer in BRCA1 and BRCA2 mutation carriers: a prospective study. Breast Cancer Res Treat. 2014;147(1):113–8.

Narod SA, Lubinski J, Ghadirian P, et al. Screening mammography and risk of breast cancer in BRCA1 and BRCA2 mutation carriers: a case–control study. Lancet Oncol. 2006;7(5):402–6.

Goldfrank D, Chuai S, Bernstein JL, et al. Effect of mammography on breast cancer risk in women with mutations in BRCA1 or BRCA2. Cancer Epidemiol Biomarkers Prev. 2006;15(11):2311–3.

Walker MJ, Chiarelli AM, Mirea L, et al. Accuracy of self-reported screening mammography use: examining recall among female relatives from the Ontario Site of the Breast Cancer Family Registry. ISRN Oncol. 2013;2013:810573.

International HapMap 3 Consortium, Altshuler DM, Gibbs RA. Integrating common and rare genetic variation in diverse human populations. Nature. 2010;467(7311):52–8.

ENCODE Project Consortium. An integrated encyclopedia of DNA elements in the human genome. Nature. 2012;489(7414):57–74.

Chung CC, Chanock SJ. Current status of genome-wide association studies in cancer. Hum Genet. 2011;130(1):59–78.

Bernstein JL, Langholz B, Haile RW, et al. Study design: evaluating gene-environment interactions in the etiology of breast cancer - the WECARE study. Breast Cancer Res. 2004;6(3):R199–214.

Robison LL, Armstrong GT, Boice JD, et al. The Childhood Cancer Survivor Study: a National Cancer Institute-supported resource for outcome and intervention research. J Clin Oncol. 2009;27(14):2308–18.

Chin L, Andersen JN, Futreal PA. Cancer genomics: from discovery science to personalized medicine. Nat Med. 2011;17(3):297–303.

Alexandrov LB, Nik-Zainal S, Wedge DC, et al. Signatures of mutational processes in human cancer. Nature. 2013;500(7463):415–21.

Acknowledgments

This work was supported by the Intramural Research Program of the National Cancer Institute, National Institutes of Health.

Compliance with Ethics Guidelines


Conflict of Interest

The authors declare that they have no competing interest.

Human and Animal Rights and Informed Consent

This article does not contain any studies with human or animal subjects performed by any of the authors.


Additional information

This article is part of the Topical Collection on Global Environmental Health and Sustainability


Cite this article

Kitahara, C.M., Linet, M.S., Rajaraman, P. et al. A New Era of Low-Dose Radiation Epidemiology. Curr Envir Health Rpt 2, 236–249 (2015). https://doi.org/10.1007/s40572-015-0055-y


DOI: https://doi.org/10.1007/s40572-015-0055-y