Abstract

State-based peridynamics is a non-local reformulation of solid mechanics that replaces the force density of the divergence of stress with an integral of the action of force states on bonds local to a given position, which precludes differentiation with the aim of modeling strong discontinuities effortlessly. A popular implementation is a meshfree formulation where the integral is discretized by quadrature points, which results in a series of unknowns at the points under the strong-form collocation framework. In this work, the meshfree discretization of state-based peridynamics under the correspondence principle is examined and compared to traditional meshfree methods based on the classical local formulation of solid mechanics. It is first shown that the way in which the peridynamic formulation approximates differentiation can be unified with the implicit gradient approximation, and this is termed the reproducing kernel peridynamic approximation. This allows the construction of non-local deformation gradients with arbitrary-order accuracy, as well as non-local approximations of higher-order derivatives. A high-order accurate non-local divergence of stress is then proposed to replace the force density in the original state-based peridynamics, in order to obtain global arbitrary-order accuracy in the numerical solution. The use of these two operators in conjunction with one another is termed the reproducing kernel peridynamic method. The strong-form collocation version of the method is tested against benchmark solutions to examine and verify the high-order accuracy and convergence properties of the method. The method is shown to exhibit superconvergent behavior in the nodal collocation setting.

1 Introduction

Meshfree methods for continuum mechanics can generally be cast under two formulations: Galerkin meshfree methods and collocation meshfree methods [1]. Among these, the governing equations solved under these frameworks can also be different. On one branch, there are meshfree discretizations of the classical local model, namely the differential equation for linear momentum. Using the Galerkin formulation with a meshfree discretization of this equation results in methods such as the diffuse element method [2], the element free Galerkin method [3], the reproducing kernel particle method [4, 5], and the many methods that ensued thereafter [6,7,8,9,10,11,12,13]. On the other hand, due to the global smoothness that can easily be attained with meshfree approximations, collocation of the strong form is straightforward, and several methods using this technique have been proposed starting with radial basis functions [14, 15], and later with the reproducing kernel and moving least squares approximations [16,17,18], among others.

Recently, a non-local reformulation of continuum mechanics called peridynamics has been proposed [19, 20] in order to circumvent difficulties in treating discontinuities in the local models, first under a bond-based framework [19], and later under the so-called state-based framework [20]. This theory replaces the differentiation in classical continuum mechanics, i.e., the force density of the divergence of stress, with an integral of the actions of bonds to a given position, and admits discontinuities by precluding derivatives in the governing equations. In addition, the action is considered over a finite distance, embedding a length scale in the governing equations, resulting in a formulation which is non-local in nature. While the peridynamic equations can be solved under the Galerkin formulation [21, 22], this approach requires evaluation of a double (six-dimensional) integral [23], which results in considerable computational expense. Therefore, for practical applications, the strong form version is often employed. Due to the simplicity of its implementation and relatively low computational cost, the peridynamic strong form equations are generally solved by a node-based meshfree approach [24], which is based on nodal collocation and nodal discretization of the integral terms in the peridynamic equations. In this meshfree approach, the unknowns are therefore associated with the nodal points, which are both collocation and quadrature points, similar to smoothed particle hydrodynamics [25, 26]. In this paper, discussions are focused on the collocation meshfree implementation of the state-based version under the correspondence principle [20], which constructs a non-local deformation gradient to facilitate the use of classical constitutive models, and is most closely related to meshfree discretizations of local models as first shown in [27] for uniform discretizations and infinite domains.
In particular, the main focus of this study is the accuracy and convergence properties of the state-based peridynamic method, and enhancement thereof.

Theoretical analysis of the accuracy and convergence of local meshfree methods is well established, for instance see [28,29,30,31] for Galerkin analysis, and [18, 32,33,34,35] for collocation analysis. Sufficiently smooth problems solved with monomial basis vectors exhibit algebraic convergence in both strong formulations [35], and weak formulations [29], while global approximations such as radial basis functions show exponential convergence [33]. In particular, Galerkin meshfree methods with nth monomial completeness exhibit a rate of n + 1 in the displacement solution. On the other hand, meshfree collocation approaches exhibit a rate of n + 1 for a least-squares formulation using more collocation points than source points (approximation functions) [18], while using an equal number of source and collocation points exhibits an odd–even phenomenon where the rate of n is obtained for even orders, and n − 1 for odd orders [17, 36,37,38], which has also been observed in isogeometric collocation [39, 40]. A recursive gradient formulation [37] has recently been developed that exhibits superconvergence, that is, rates of n and n + 1 for even and odd orders of approximations, respectively. Notably, this allows a linear basis to converge in collocation analysis, in contrast to direct gradients [18]. Finally, it should be noted that the accuracy of numerical integration in the weak-form based versions can heavily influence theoretical rates [41], although several approaches are available to rectify this situation (the interested reader is referred to [1] and references therein for details).

For peridynamics, the concept of convergence can be understood in several ways; see [42, 43] for details: (1) N-convergence, when the non-locality of the continuous peridynamic problem is kept fixed while the discretization is refined (convergence to the non-local continuum solution); (2) δ-convergence, when the nonlocality is reduced for a fixed discretization (convergence to the discrete local solution); or (3) N–δ convergence, when both discretization and nonlocality approach the vanishing limit simultaneously (convergence to the continuum local solution). In this work, the third type of convergence is studied. It has been noted that this type of convergence is linked to the concept of asymptotic compatibility, with discretizations being asymptotically compatible if they converge to the correct local model and solution associated with the nonlocal model [43, 44].

The accuracy and convergence properties of state-based peridynamics have been studied in several works. Material models play an important role in convergence studies of peridynamics, since the results using different models converge to different solutions [45]. In [45], three different material models were tested to attempt to reproduce the solution of static linear elasticity. Among these, only the deformation gradient-based model [20] gave a convergent solution to all problems tested, with a first-order convergence rate in the L2 error norm.

The effect of numerical integration in the non-local integrals has also been a focus in studies, as it may also have a strong effect on convergence in peridynamics [46,47,48,49,50]. In the first meshfree implementation of peridynamics [24], the peridynamic equation of motion was discretized by nodal integration with the full physical nodal volume as the integration weight, resulting in the so-called full volume (FV) integration. The FV integration shows erratic convergence behavior, both converging and diverging with refinement [46, 51]. Several studies suggest this issue is due to rough approximation of the integration weights near the edges of the integration domains in the peridynamic equations [46,47,48,49]. So-called partial volume (PV) integration schemes have been proposed, including approximate PV [47, 48] and analytical PV [49], to more accurately compute the partial volumes intersecting with neighborhoods that serve as integration weights for particles. Partial volume schemes have been shown to improve the accuracy and yield more consistent convergence rates compared with the FV integration [46]. Influence functions that smoothly decay to zero at the boundary of the horizon have also been investigated under the FV integration framework, and it has been shown that this enhances the accuracy and can also give more consistent convergence behavior [46]. The idea behind smoothly decaying influence functions is to reduce the influence of the particles near the neighborhood boundary, mitigating the error due to numerical integration. These techniques exhibit first-order convergence in displacements [46]. The limitation of first-order convergence was attributed in [50] to the piece-wise constant nature of the approximation employed, where the reproducing kernel approximation was introduced in the peridynamic displacement field to increase the convergence rate. 
However, high-order Gauss integration was employed to achieve the integration accuracy necessary to avoid the aforementioned oscillatory convergence behavior, resulting in an increased computational cost. A high-order non-local deformation gradient was proposed in [52] for stability reasons, but was limited to uniform discretizations, and meanwhile the convergence properties of this method were not tested.

Taking another point of view, the non-local deformation gradient and force density in state-based peridynamics under the correspondence principle can be viewed as mathematical operators with certain approximation properties. In [27, 53] it was shown that for uniform discretizations away from the boundary, the accuracy in the non-local deformation gradient is second-order. This is confirmed by other studies, where it has been further shown that near the boundary the accuracy will be first-order [52, 54].

The precise relationship between the meshfree peridynamic method and the classical meshfree methods discussed has not been made clear. So far, one effort [27] has attempted to examine the relationship between the peridynamic approximation of derivatives via the non-local deformation gradient and the traditional meshfree approximation of derivatives. There it was shown that the state-based peridynamic formulation based on correspondence is equivalent to employing a second-order accurate implicit gradient reproducing kernel approximation [55], for both the deformation gradient operation on displacement, and force density operation on the stress, but this equivalence was established only for uniform discretizations, away from a boundary. However, this relationship also implies that these operations are both second-order accurate, at least in uniform discretizations, and away from the influence of a boundary.

In this paper, the precise relationship between meshfree methods for local models and non-local peridynamic meshfree discretizations under the correspondence principle is introduced, for general non-uniform discretizations, and finite domains. A generalized approximation which unifies these approaches is introduced termed the reproducing kernel peridynamic approximation, under both continuous (integral form) and discrete frameworks. It is shown that this approximation can yield four distinct cases: implicit gradients, the traditional non-local deformation gradient, as well as an arbitrary-order accurate non-local deformation gradient, and arbitrary-order accurate non-local higher-order derivatives. A formulation is then proposed called the reproducing kernel peridynamic (RKPD) method, consisting of the high-order accurate non-local deformation gradients, in conjunction with a high-order accurate force density, which results in an arbitrary-order accurate state-based peridynamic method. The formulation is tested under the node-based collocation framework, although a weak formulation is also possible. In contrast to the original formulation, the method is shown to exhibit convergent solutions with and without ghost boundary nodes, under both uniform and nonuniform discretizations, with superconvergent solutions for odd orders of accuracy.

The remainder of this paper is organized as follows. In Sect. 2, the governing equations for classical local methods and state-based peridynamics are briefly reviewed. The integral forms for the reproducing kernel and implicit gradient approximation are given in Sect. 3, and compared with the integral forms of the state-based peridynamic equations. In addition, the equivalence of implicit gradients and the peridynamic differential operator [56] is established. These formulations are then compared and contrasted, and the orders of accuracy are assessed. In Sect. 4, the continuous reproducing kernel peridynamic approximation is given, which unifies the two formulations, and provides arbitrary-order accurate non-local deformation gradients, and arbitrary-order accurate higher-order non-local derivative approximations. The discrete implicit gradient and peridynamic approximations are then discussed and compared in Sect. 5, with the order of accuracy in the discrete case assessed. Section 6 introduces the discrete reproducing kernel peridynamic approximation. The collocation implementation of the proposed formulation, the reproducing kernel peridynamic method, is then summarized in Sect. 7, and numerical examples are given in Sect. 8. Conclusions, and discussions on implications and possible future work are given in Sect. 9.

2 Governing equations

In this section, the governing equations for classical continuum mechanics and state-based peridynamics under the correspondence principle are briefly reviewed.

2.1 Classical continuum mechanics

The equation of motion for finite-strain continuum mechanics problems stated in the reference configuration \( \Omega \) at material position \( {\mathbf{X}} \) at time \( t \) is

\[ \rho {\ddot{\mathbf{u}}}({\mathbf{X}},t) = \nabla \cdot {\varvec{\upsigma}}^{\text{T}} ({\mathbf{X}},t) + {\mathbf{b}}({\mathbf{X}},t) \tag{1} \]

where \( {\ddot{\mathbf{u}}} \equiv \text{D}{\mathbf{v}} /\text{D}t \) is the material time derivative of the velocity \( {\mathbf{v}} \), \( \rho \) is the material density in the undeformed configuration, \( {\varvec{\upsigma}} \) is the 1st Piola–Kirchhoff (PK) stress tensor (\( {\varvec{\upsigma}}^{\text{T}} \) is the nominal stress), \( \nabla \) denotes the del operator with respect to the undeformed configuration, and \( {\mathbf{b}} \) is the body force in the undeformed configuration. In this work a Lagrangian description is adopted, as state-based peridynamics under correspondence relates the 1st PK stress to the force density in the governing equations [20].

Given a strain energy density function \( W({\mathbf{F}}) \), kinetic variables such as the 1st PK stress \( {\varvec{\upsigma}} \) can be obtained as \( {\varvec{\upsigma}} = {\partial }W({\mathbf{F}}) /{\partial }{\mathbf{F}} \). In state-based peridynamics, an analogous relationship exists between the kinematic and kinetic entities, as described in the next section.

2.2 State-based peridynamics

In order to deal with discontinuities, peridynamics [19, 20] has been introduced which precludes the differentiation involved in the governing equations for classical continuum mechanics (1). In state-based peridynamics, the force density of the divergence of nominal stress in (1) is replaced by an integral of force states \( \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{{\mathbf{T} }} \) [20]:

\[ \rho {\ddot{\mathbf{u}}}({\mathbf{X}},t) = \int_{{{\mathcal{H}}_{{\mathbf{X}}} }} {\left\{ {\underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{{\mathbf{T} }}[{\mathbf{X}},t]\langle {\mathbf{X}}^{\prime} - {\mathbf{X}}\rangle - \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{{\mathbf{T} }}[{\mathbf{X}}^{\prime},t]\langle {\mathbf{X}} - {\mathbf{X}}^{\prime}\rangle } \right\}{\text{d}}{\mathbf{X}}^{\prime}} + {\mathbf{b}}({\mathbf{X}},t) \tag{2} \]

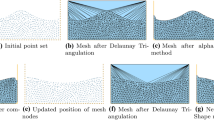

where \( {\mathcal{H}}_{{\mathbf{X}}} \) is the so-called neighborhood of the particle \( {\mathbf{X}} \), which is often defined by a sphere encompassing the point \( {\mathbf{X}} \) with radius \( \delta \) called the horizon, as shown in Fig. 1. The notation employed for the mathematical entity of states is that angle brackets denote the operation on that variable, while square brackets denote the dependence on the variable.

A fundamental kinetic entity is the force state \( \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{{\mathbf{T} }}\langle \cdot \rangle \), rather than, for instance, the 1st PK stress in the Lagrangian formulation of classical solid mechanics. The quantity \( {\mathbf{X}}^{\prime} - {\mathbf{X}} \) is said to be a “bond” of the points \( {\mathbf{X}}^{\prime} \) and \( {\mathbf{X}} \). Thus, it can be seen when comparing (1) to (2) that the force density of the divergence of stress is replaced by an integral of the action of states \( \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{{\mathbf{T} }} \) on bonds local to \( {\mathbf{X}} \).

A fundamental kinematic entity in peridynamics is the deformation state \( \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{{\mathbf{Y} }}\langle \cdot \rangle \) which maps (possibly nonlinearly and discontinuously) a bond in the undeformed configuration \( {\mathbf{X}}^{\prime} - {\mathbf{X}} \), to a bond in the current configuration \( {\mathbf{x}}^{\prime} - {\mathbf{x}} \), as shown in Fig. 1:

\[ \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{{\mathbf{Y} }}\langle {\mathbf{X}}^{\prime} - {\mathbf{X}}\rangle = {\mathbf{x}}^{\prime} - {\mathbf{x}} \tag{3} \]

Analogous to the dependence of stress measures on strain measures in classical mechanics, the force state \( \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{{\mathbf{T} }} \) depends on the deformation state. On the other hand, in order to facilitate the use of constitutive models in the local theory, a non-local deformation gradient \( {\boldsymbol{{\mathcal{F}}}} \) can be obtained through a principle called reduction [20], which is briefly reviewed in Sect. 3.5. The 1st PK stress can be obtained via \( {\boldsymbol{{\mathcal{F}}}} \), and can then be related to the force state \( \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{{\mathbf{T} }} \) by means of energy principles.

3 Continuous reproducing kernel and peridynamic approximations

As will be demonstrated, the implicit gradient counterpart [36, 55, 57, 58] to the reproducing kernel (RK) approximation [4, 5] is most closely related to the non-local deformation gradient employed in peridynamics. In this section, the continuous (integral) forms of the reproducing kernel and peridynamic approximations are analyzed and compared. A brief review of both is given. The continuous versions must be discretized in practice; the discrete versions will be analyzed and compared in Sect. 5. Remarkably, if the quadrature and discretizations are consistent with one another, the main results of these analyses are the same, with minor exceptions.

3.1 Continuous reproducing kernel approximation

The continuous RK approximation of a function \( u({\mathbf{x}}) \) on a domain \( {\Omega} \subset {\mathbb{R}}^{d} \) is constructed by the product of a kernel function \( \varPhi_{a} \) with compact support, and a correction function composed of a linear combination of basis functions in the following form [4, 5]:

\[ u({\mathbf{x}}) \simeq {\mathcal{R}}_{[n]} \{ u({\mathbf{x}})\} = \int_{\Omega } {{\mathbf{H}}^{\text{T}} ({\mathbf{x}}^{\prime} - {\mathbf{x}}){\mathbf{b}}({\mathbf{x}})\varPhi_{a} ({\mathbf{x}}^{\prime} - {\mathbf{x}})u({\mathbf{x}}^{\prime}){\text{d}}{\mathbf{x}}^{\prime}} \tag{4} \]

where \( {\mathbf{H}}({\mathbf{x}}) \) is a column vector of complete nth order monomials (although other bases could be employed), and \( {\mathbf{b}}({\mathbf{x}}) \) is a column vector of associated coefficients to be determined. The dependence of these vectors in the RK approximation on the free parameter n is to be understood herein for notational simplicity.

It should be noted that, in much of the literature, the shifted basis term \( {\mathbf{x}} - {\mathbf{x}}^{\prime} \) is employed, while here the basis using \( {{\mathbf{x}}}^{\prime} - {\mathbf{x}} \) is employed in order to unify the reproducing kernel approximation and peridynamic derivative approximation later in the text. The choice is arbitrary, and only results in sign differences in gradient reproducing conditions.

The kernel function \( \varPhi_{a} \) has compact support with measure a, and the smoothness of the approximation is inherited from the kernel. For example, using C2 kernels yields C2 continuity of the approximation. In this work, the cubic B-spline kernel is employed for kernel functions and influence functions, which play the analogous role in peridynamics as discussed in Sect. 3.5. The one-dimensional cubic B-spline kernel shown in Fig. 2 is constructed as:

\[ \varPhi_{a} (x^{\prime} - x) = \begin{cases} \frac{2}{3} - 4z^{2} + 4z^{3} & \text{for } 0 \le z \le \frac{1}{2} \\ \frac{4}{3} - 4z + 4z^{2} - \frac{4}{3}z^{3} & \text{for } \frac{1}{2} \le z \le 1 \\ 0 & \text{otherwise} \end{cases} \quad \text{with } z = \frac{|x^{\prime} - x|}{a} \tag{5} \]

In multiple dimensions, one may construct a kernel by tensor product yielding a box or cuboid support:

\[ \varPhi_{a} ({\mathbf{x}}^{\prime} - {\mathbf{x}}) = \prod\limits_{i = 1}^{d} {\varPhi_{{a_{i} }} (x_{i}^{\prime} - x_{i} )} \tag{6} \]

or by defining \( \varPhi_{a} ({\mathbf{x}}^{\prime} - {\mathbf{x}}) = \varPhi_{a} (z) \) with \( z = |{\mathbf{x}}^{\prime} - {\mathbf{x}}|/a \), yielding a spherical support.
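
The two multi-dimensional constructions above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the piecewise coefficients assume the cubic B-spline normalization commonly used in the reproducing kernel literature (value 2/3 at the origin), and the function names `cubic_bspline`, `kernel_box`, and `kernel_sphere` are hypothetical.

```python
def cubic_bspline(z):
    """Cubic B-spline as a function of the normalized distance z = |x' - x| / a.

    Assumed normalization: value 2/3 at z = 0, zero for z >= 1.
    """
    z = abs(z)
    if z <= 0.5:
        return 2.0 / 3.0 - 4.0 * z**2 + 4.0 * z**3
    if z <= 1.0:
        return 4.0 / 3.0 - 4.0 * z + 4.0 * z**2 - 4.0 / 3.0 * z**3
    return 0.0


def kernel_box(x_prime, x, a):
    """Tensor-product kernel (box support); a holds one support size per direction."""
    value = 1.0
    for xp_i, x_i, a_i in zip(x_prime, x, a):
        value *= cubic_bspline((xp_i - x_i) / a_i)
    return value


def kernel_sphere(x_prime, x, a):
    """Radial kernel (spherical support) with a single support size a."""
    r = sum((xp_i - x_i) ** 2 for xp_i, x_i in zip(x_prime, x)) ** 0.5
    return cubic_bspline(r / a)
```

The two branches of `cubic_bspline` agree at z = 1/2, so the kernel is continuous there; the box and spherical variants differ only in how the normalized distance is formed.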

When monomials are employed for \( {\mathbf{H}}({\mathbf{x}}) \), the coefficients \( {\mathbf{b}}({\mathbf{x}}) \) are determined by enforcing nth order accuracy of the approximation in (4). This can be achieved by directly enforcing the so-called reproducing conditions (discussed later), or by using a Taylor expansion. In this work, the latter approach is employed in order to fully illustrate the meaning and construction of an implicit gradient. The Taylor series expansion of \( u({\mathbf{x}}^{\prime}) \) around \( {\mathbf{x}} \) truncated to order n is:

\[ u({\mathbf{x}}^{\prime}) \simeq \sum\limits_{|\alpha | = 0}^{n} {\frac{1}{\alpha !}({\mathbf{x}}^{\prime} - {\mathbf{x}})^{\alpha } \partial^{\alpha } u({\mathbf{x}})} \tag{7} \]

where \( \alpha = (\alpha_{\text{1}} , \ldots ,\alpha_{d} ) \) is a multi-index in \( {\mathbb{R}}^{d} \) of non-negative integers equipped with the notation \( \left| \alpha \right| = \alpha_{\text{1}} + \cdots + \alpha_{d} \), \( \alpha ! = \alpha_{\text{1}} ! \cdots \alpha_{d} ! \), \( {\mathbf{x}}^{\alpha } = x_{\text{1}}^{{\alpha_{\text{1}} }} \ldots x_{d}^{{\alpha_{d} }} \), and \( \partial^{\alpha } = \partial^{{\alpha_{\text{1}} }} \ldots \partial^{{\alpha_{d} }} /\partial x_{\text{1}}^{{\alpha_{\text{1}} }} \ldots \partial x_{d}^{{\alpha_{d} }} \).
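
To make the multi-index bookkeeping concrete, the following Python sketch enumerates the multi-indices with \( |\alpha| \le n \) and evaluates the truncated Taylor expansion term by term. It is an illustration under stated assumptions: the helper names are hypothetical, and the ordering of the multi-indices (by \( |\alpha| \), then by decreasing leading exponent) is one arbitrary but fixed choice.

```python
from itertools import product
from math import factorial, prod


def multi_indices(d, n):
    """All multi-indices alpha in d dimensions with |alpha| <= n, sorted by |alpha|."""
    found = [a for a in product(range(n + 1), repeat=d) if sum(a) <= n]
    return sorted(found, key=lambda a: (sum(a), tuple(-a_i for a_i in a)))


def multi_factorial(alpha):
    """alpha! = alpha_1! * ... * alpha_d!"""
    return prod(factorial(a_i) for a_i in alpha)


def monomial(x, alpha):
    """x^alpha = x_1^alpha_1 * ... * x_d^alpha_d"""
    return prod(x_i**a_i for x_i, a_i in zip(x, alpha))


def taylor(derivs, x, x_prime, n):
    """Truncated Taylor expansion of u(x') about x.

    derivs maps a multi-index alpha to the value of the derivative of u of
    that order at x; entries that are missing are treated as zero.
    """
    xi = [xp_i - x_i for xp_i, x_i in zip(x_prime, x)]
    return sum(
        derivs.get(alpha, 0.0) * monomial(xi, alpha) / multi_factorial(alpha)
        for alpha in multi_indices(len(x), n)
    )
```

For example, for \( u = x_1^2 + 3x_1 x_2 \) expanded about (1, 2), passing the six derivative values up to second order reproduces \( u(2, 4) = 28 \) exactly, since the function is itself a second-order polynomial.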

In matrix form (7) can be expressed as:

\[ u({\mathbf{x}}^{\prime}) \simeq {\mathbf{D}}({\mathbf{x}}){\mathbf{J}}{\mathbf{H}}({\mathbf{x}}^{\prime} - {\mathbf{x}}) \tag{8} \]

where \( {\mathbf{D}}({\mathbf{x}}) \) is a row vector of \( {\{ }\partial^{\beta } u({\mathbf{x}}{)\} }_{|\beta | = 0}^{n} \) and \( {\mathbf{J}} \) is a diagonal matrix with entries \( {\{ }1/\beta {!\} }_{\left| \beta \right| = 0}^{n} \). Substituting (8) into (4) yields

\[ {\mathcal{R}}_{[n]} \{ u({\mathbf{x}})\} \simeq {\mathbf{D}}({\mathbf{x}}){\mathbf{J}}\left\{ {\int_{\Omega } {{\mathbf{H}}({\mathbf{x}}^{\prime} - {\mathbf{x}}){\mathbf{H}}^{\text{T}} ({\mathbf{x}}^{\prime} - {\mathbf{x}})\varPhi_{a} ({\mathbf{x}}^{\prime} - {\mathbf{x}}){\text{d}}{\mathbf{x}}^{\prime}} } \right\}{\mathbf{b}}({\mathbf{x}}) \tag{9} \]

The nth order accuracy of the approximation requires that \( {\mathcal{R}}_{[n]} \{ u\left( {\mathbf{x}} \right)\} = u\left( {\mathbf{x}} \right) \) in the above. Examining (9), this can be phrased as the following vanishing moment conditions:

\[ \left\{ {\int_{\Omega } {{\mathbf{H}}({\mathbf{x}}^{\prime} - {\mathbf{x}}){\mathbf{H}}^{\text{T}} ({\mathbf{x}}^{\prime} - {\mathbf{x}})\varPhi_{a} ({\mathbf{x}}^{\prime} - {\mathbf{x}}){\text{d}}{\mathbf{x}}^{\prime}} } \right\}{\mathbf{b}}({\mathbf{x}}) = {\mathbf{H}}({\mathbf{0}}) \tag{10} \]

where the fact that \( {\{ }1 /\beta {!\} |}_{\left| \beta \right| = 0} = 1 \) has been employed. Solving for \( {\mathbf{b}}\left( {\mathbf{x}} \right) \) from (10), the continuous RK approximation is obtained as

\[ {\mathcal{R}}_{[n]} \{ u({\mathbf{x}})\} = \int_{\Omega } {\varPsi ({\mathbf{x}};{\mathbf{x}}^{\prime} - {\mathbf{x}})u({\mathbf{x}}^{\prime}){\text{d}}{\mathbf{x}}^{\prime}} ,\quad \varPsi ({\mathbf{x}};{\mathbf{x}}^{\prime} - {\mathbf{x}}) = {\mathbf{H}}^{\text{T}} ({\mathbf{0}}){\mathbf{M}}^{ - 1} ({\mathbf{x}}){\mathbf{H}}({\mathbf{x}}^{\prime} - {\mathbf{x}})\varPhi_{a} ({\mathbf{x}}^{\prime} - {\mathbf{x}}) \tag{11} \]

where \( \varPsi \left( {{\mathbf{x}}\text{;}{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) \) is the nth order continuous reproducing kernel function with the dependency on a and n implied, and

\[ {\mathbf{M}}({\mathbf{x}}) = \int_{\Omega } {{\mathbf{H}}({\mathbf{x}}^{\prime} - {\mathbf{x}}){\mathbf{H}}^{\text{T}} ({\mathbf{x}}^{\prime} - {\mathbf{x}})\varPhi_{a} ({\mathbf{x}}^{\prime} - {\mathbf{x}}){\text{d}}{\mathbf{x}}^{\prime}} \tag{12} \]

is the so-called moment matrix. In arriving at (11) the symmetry of \( {\mathbf{M}} \) was employed, although it is not necessary to construct the approximation.

In addition to nth order accuracy, the approximation can be shown to satisfy the following equivalent reproducing conditions:

\[ \int_{\Omega } {\varPsi ({\mathbf{x}};{\mathbf{x}}^{\prime} - {\mathbf{x}}){\mathbf{H}}({\mathbf{x}}^{\prime}){\text{d}}{\mathbf{x}}^{\prime}} = {\mathbf{H}}({\mathbf{x}}) \tag{13} \]

which is often employed as the condition (for better conditioning of the moment matrix):

\[ \int_{\Omega } {\varPsi ({\mathbf{x}};{\mathbf{x}}^{\prime} - {\mathbf{x}}){\mathbf{H}}({\mathbf{x}}^{\prime} - {\mathbf{x}}){\text{d}}{\mathbf{x}}^{\prime}} = {\mathbf{H}}({\mathbf{0}}) \tag{14} \]

As previously mentioned, it can be seen that the reproducing kernel approximation (11) can also be obtained by directly imposing the reproducing conditions (14) with the construction in (4).
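
The construction of this section can be checked numerically with a short one-dimensional Python sketch. It is not the implementation used in this work: the integrals are replaced by a midpoint rule on [0, 1] (an assumption made for brevity), the kernel is the cubic B-spline in its commonly used normalization, and the names `rk_approximation` and `solve` are hypothetical. Because the moment matrix and the approximation share the same quadrature, polynomials up to order n are reproduced to round-off, including at the domain boundary.

```python
def cubic_bspline(z):
    """Cubic B-spline of the normalized distance z (assumed normalization)."""
    z = abs(z)
    if z <= 0.5:
        return 2.0 / 3.0 - 4.0 * z**2 + 4.0 * z**3
    if z <= 1.0:
        return 4.0 / 3.0 * (1.0 - z) ** 3
    return 0.0


def solve(A, rhs):
    """Solve A x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [r] for row, r in zip(A, rhs)]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(aug[i][k]))
        aug[k], aug[p] = aug[p], aug[k]
        for i in range(k + 1, n):
            f = aug[i][k] / aug[k][k]
            for j in range(k, n + 1):
                aug[i][j] -= f * aug[k][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x


def rk_approximation(u, x, a, n, lo=0.0, hi=1.0, cells=1000):
    """Midpoint-rule evaluation of the RK approximation of u at x on [lo, hi]."""
    h = (hi - lo) / cells
    pts = []  # (x', x' - x, kernel value times quadrature weight)
    for k in range(cells):
        xp = lo + (k + 0.5) * h
        w = cubic_bspline((xp - x) / a) * h
        if w > 0.0:
            pts.append((xp, xp - x, w))

    def basis(s):
        # H(s) = [1, s, ..., s^n]
        return [s**i for i in range(n + 1)]

    # moment matrix: M(x) = int H(x'-x) H^T(x'-x) Phi_a(x'-x) dx'
    M = [[0.0] * (n + 1) for _ in range(n + 1)]
    for _, xi, w in pts:
        H = basis(xi)
        for i in range(n + 1):
            for j in range(n + 1):
                M[i][j] += H[i] * H[j] * w
    b = solve(M, basis(0.0))  # M(x) b(x) = H(0)
    # R{u}(x) = int H^T(x'-x) b(x) Phi_a(x'-x) u(x') dx'
    return sum(
        sum(H_i * b_i for H_i, b_i in zip(basis(xi), b)) * w * u(xp)
        for xp, xi, w in pts
    )
```

Calling `rk_approximation(lambda s: 1 + 2*s - 3*s**2, 0.3, 0.25, 2)` returns the exact value of the quadratic at 0.3 up to round-off; for a non-polynomial function the result is only an approximation whose error depends on n and a.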

3.2 Continuous implicit gradient

An implicit gradient directly approximates the derivative \( \partial^{\alpha } u\left( {\mathbf{x}} \right) \) of a function \( u\left( {\mathbf{x}} \right) \) for some fixed \( \alpha \) in the same form of the reproducing kernel [55, 57]:

\[ \partial^{\alpha } u({\mathbf{x}}) \simeq {\mathcal{D}}_{[n]}^{{(\alpha )}} \{ u({\mathbf{x}})\} = \int_{\Omega } {{\mathbf{H}}^{\text{T}} ({\mathbf{x}}^{\prime} - {\mathbf{x}}){\mathbf{b}}^{{(\alpha )}} ({\mathbf{x}})\varPhi_{a} ({\mathbf{x}}^{\prime} - {\mathbf{x}})u({\mathbf{x}}^{\prime}){\text{d}}{\mathbf{x}}^{\prime}} \tag{15} \]

where the notation of multi-indices with parenthesis \( (\alpha ) \) is introduced to indicate evaluation with a fixed value of \( \alpha \) and to distinguish between terms of the form \( {\mathbf{x}}^{\alpha } \). Substitution of the Taylor series expansion in (8) into (15) yields

\[ {\mathcal{D}}_{[n]}^{{(\alpha )}} \{ u({\mathbf{x}})\} \simeq {\mathbf{D}}({\mathbf{x}}){\mathbf{J}}{\mathbf{M}}({\mathbf{x}}){\mathbf{b}}^{{(\alpha )}} ({\mathbf{x}}) \tag{16} \]

The condition to reproduce the gradient \( \partial^{\alpha } u \) up to nth order accuracy can be expressed as all moments vanishing except the moment corresponding to \( \alpha \), that is, requiring \( {\mathcal{D}}_{[n]}^{{(\alpha )}} \left\{ {u\left( {\mathbf{x}} \right)} \right\} = \partial^{\alpha } u({\mathbf{x}}) \) for some given \( \alpha \), which can be expressed as:

\[ {\mathbf{M}}({\mathbf{x}}){\mathbf{b}}^{{(\alpha )}} ({\mathbf{x}}) = {\mathbf{H}}^{{(\alpha )}} \tag{17} \]

where \( {\mathbf{H}}^{{(\alpha )}} \) is a column vector of \( \{ \alpha !\delta_{\alpha \beta } \}_{|\beta | = 0}^{n} \) (emanating from \( {\mathbf{J}}^{ - 1} \)):

\[ {\mathbf{H}}^{{(\alpha )}} = [0, \ldots ,0,\alpha !,0, \ldots ,0]^{\text{T}} ,\quad \text{with } \alpha ! \text{ in the entry corresponding to } \beta = \alpha \tag{18} \]

To make the multi-index notation clear, consider \( {\mathbf{H}}\left( {\mathbf{x}} \right) \) and \( {\mathbf{H}}^{{(\alpha )}} \) in the construction of \( {\mathcal{D}}_{[n]}^{{(\alpha )}} \{ u({\mathbf{x}})\} \) in two dimensions with n = 2, and \( \alpha = {(1,\,0)} \), that is, to approximate first order derivatives with respect to \( x_{1} \) with second-order accuracy:

\[ {\mathbf{H}}({\mathbf{x}}) = [1,\;x_{1} ,\;x_{2} ,\;x_{1}^{2} ,\;x_{1} x_{2} ,\;x_{2}^{2} ]^{\text{T}} ,\quad {\mathbf{H}}^{(1,0)} = [0,\;1,\;0,\;0,\;0,\;0]^{\text{T}} \tag{19} \]

Solving for \( {\mathbf{b}}^{{(\alpha )}} \) from (17), the continuous implicit gradient approximation for \( \partial^{\alpha } u({\mathbf{x}}) \) is obtained as

\[ {\mathcal{D}}_{[n]}^{{(\alpha )}} \{ u({\mathbf{x}})\} = \int_{\Omega } {\varPsi^{(\alpha )} ({\mathbf{x}};{\mathbf{x}}^{\prime} - {\mathbf{x}})u({\mathbf{x}}^{\prime}){\text{d}}{\mathbf{x}}^{\prime}} ,\quad \varPsi^{(\alpha )} ({\mathbf{x}};{\mathbf{x}}^{\prime} - {\mathbf{x}}) = [{\mathbf{H}}^{{(\alpha )}} ]^{\text{T}} {\mathbf{M}}^{ - 1} ({\mathbf{x}}){\mathbf{H}}({\mathbf{x}}^{\prime} - {\mathbf{x}})\varPhi_{a} ({\mathbf{x}}^{\prime} - {\mathbf{x}}) \tag{20} \]

where again the symmetry of \( {\mathbf{M}} \) has been employed. From the above, it can be seen that the RK approximation (11) can be considered a special case of (20) with \( |\alpha | = 0 \), i.e., \( \varPsi^{(0,0,0)} = \varPsi \) which has been observed in the early history of meshfree methods [57, 59]. This also demonstrates that since a simple change of \( \mathbf{H}\left( {\mathbf{0}} \right) \) to \( {\mathbf{H}}^{{(\alpha )}} \) can approximate derivatives, the matrix \( {\mathbf{M}}\left( {\mathbf{x}} \right) \) contains information about derivatives as well as the function itself, and provides an efficient way to obtain derivative approximations rather than direct differentiation of (11), for which the cost is not trivial [35]. This fact has been leveraged for solving partial differential equations more efficiently than using direct differentiation [36, 60].

In addition, the implicit gradient approximation has been utilized for strain regularization to avoid ambiguous boundary conditions [55] and to avoid differentiation in stabilization for convection-dominated problems [58], among other applications [60]. Historically, the implicit gradient has in fact been widely used (in its discrete form) to solve partial differential equations: the generalized finite difference method [61], synchronized derivatives [57], as well as the pioneering work of the diffuse element method [2] all utilize approximations which are essentially coincident with the implicit gradient (for details, see [1]). Finally, as will be discussed in Sect. 3.3, the so-called peridynamic differential operator [56] is also the implicit gradient approximation with the selection of the same bases and weighting functions.

Analogous to the RK approximation, the implicit gradient can be shown to satisfy the following gradient reproducing conditions [55]:

\[ \int_{\Omega } {\varPsi^{(\alpha )} ({\mathbf{x}};{\mathbf{x}}^{\prime} - {\mathbf{x}}){\mathbf{H}}({\mathbf{x}}^{\prime}){\text{d}}{\mathbf{x}}^{\prime}} = \partial^{\alpha } {\mathbf{H}}({\mathbf{x}}) \tag{21} \]

Or equivalently,

\[ \int_{\Omega } {\varPsi^{(\alpha )} ({\mathbf{x}};{\mathbf{x}}^{\prime} - {\mathbf{x}}){\mathbf{H}}({\mathbf{x}}^{\prime} - {\mathbf{x}}){\text{d}}{\mathbf{x}}^{\prime}} = {\mathbf{H}}^{{(\alpha )}} \tag{22} \]

Similar to the reproducing kernel approximation, it can be seen that the implicit gradient approximation (20) can be also obtained by directly imposing (22) on the approximation (15).
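
The statement that derivatives follow from a simple change of the right-hand side can be illustrated with a one-dimensional Python sketch. As before, this is a hedged illustration and not the authors' code: the integrals are approximated by a midpoint rule on [0, 1], the cubic B-spline normalization is assumed, and the name `implicit_gradient` is hypothetical. The only difference from the plain RK approximation is that the system is solved with \( {\mathbf{H}}^{(\alpha)} \) on the right-hand side.

```python
from math import factorial


def cubic_bspline(z):
    """Cubic B-spline of the normalized distance z (assumed normalization)."""
    z = abs(z)
    if z <= 0.5:
        return 2.0 / 3.0 - 4.0 * z**2 + 4.0 * z**3
    if z <= 1.0:
        return 4.0 / 3.0 * (1.0 - z) ** 3
    return 0.0


def solve(A, rhs):
    """Solve A x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [r] for row, r in zip(A, rhs)]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(aug[i][k]))
        aug[k], aug[p] = aug[p], aug[k]
        for i in range(k + 1, n):
            f = aug[i][k] / aug[k][k]
            for j in range(k, n + 1):
                aug[i][j] -= f * aug[k][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x


def implicit_gradient(u, x, a, n, alpha, lo=0.0, hi=1.0, cells=1000):
    """Midpoint-rule implicit gradient of order alpha (1D) with nth order basis."""
    h = (hi - lo) / cells
    pts = []  # (x', x' - x, kernel value times quadrature weight)
    for k in range(cells):
        xp = lo + (k + 0.5) * h
        w = cubic_bspline((xp - x) / a) * h
        if w > 0.0:
            pts.append((xp, xp - x, w))
    size = n + 1
    # moment matrix entries: int (x'-x)^(i+j) Phi_a(x'-x) dx'
    M = [[sum(xi ** (i + j) * w for _, xi, w in pts) for j in range(size)]
         for i in range(size)]
    # H^(alpha): alpha! in the entry for alpha, zero elsewhere
    H_alpha = [float(factorial(alpha)) if i == alpha else 0.0 for i in range(size)]
    b = solve(M, H_alpha)  # M(x) b^(alpha)(x) = H^(alpha)
    return sum(
        sum(b[i] * xi**i for i in range(size)) * w * u(xp) for xp, xi, w in pts
    )
```

With `alpha=0` the function reduces to the RK approximation itself; with `alpha=1` or `alpha=2` and `n=2` it returns the first or second derivative of a quadratic exactly (up to round-off), without differentiating the kernel.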

3.3 Equivalence of the peridynamic differential operator and the implicit gradient

In this section, the implicit gradient approximation reviewed in Sect. 3.2, and the peridynamic differential operator introduced in [56] are compared.

Let us start by recasting the implicit gradient from (15) as:

\[ {\mathcal{D}}_{[n]}^{{(\alpha )}} \{ u({\mathbf{x}})\} = \int_{{H_{a} }} {\hat{\Phi }_{a} ({\mathbf{x}};{\mathbf{x}}^{\prime} - {\mathbf{x}})u({\mathbf{x}}^{\prime}){\text{d}}{\mathbf{x}}^{\prime}} \tag{23} \]

where \( \hat{\Phi }_{a} \) represents the corrected kernel function:

\[ \hat{\Phi }_{a} ({\mathbf{x}};{\mathbf{x}}^{\prime} - {\mathbf{x}}) = {\hat{\mathbf{H}}}^{\text{T}} ({\mathbf{x}}^{\prime} - {\mathbf{x}}){\mathbf{b}}^{{(\alpha )}} ({\mathbf{x}})\Phi_{a} ({\mathbf{x}}^{\prime} - {\mathbf{x}}) \tag{24} \]

and \( H_{a} \) is the kernel support, i.e., the portion of the domain where \( \Phi_{a} ({\mathbf{x^{\prime}}} - {\mathbf{x}}) \) is non-zero. Note that \( {\hat{\mathbf{H}}} = {\mathbf{H}} \) in (15), that is, monomial bases of order n are employed, while here \( {\hat{\mathbf{H}}} \) is used to indicate that a generic basis vector can be employed.

Directly imposing the condition of gradient reproduction (22) on (23) can be written as

\[ \int_{{H_{a} }} {\hat{\Phi }_{a} ({\mathbf{x}};{\mathbf{x}}^{\prime} - {\mathbf{x}}){\mathbf{H}}({\mathbf{x}}^{\prime} - {\mathbf{x}}){\text{d}}{\mathbf{x}}^{\prime}} = {\mathbf{H}}^{{(\alpha )}} \tag{25} \]

which is, for \( 0 \le \left| \beta \right| \le n \),

\[ \int_{{H_{a} }} {\hat{\Phi }_{a} ({\mathbf{x}};{\mathbf{x}}^{\prime} - {\mathbf{x}})({\mathbf{x}}^{\prime} - {\mathbf{x}})^{\beta } {\text{d}}{\mathbf{x}}^{\prime}} = \alpha !\delta_{\alpha \beta } \tag{26} \]

Also, from (26) we get the following system:

\[ \left\{ {\int_{{H_{a} }} {{\mathbf{H}}({\mathbf{x}}^{\prime} - {\mathbf{x}}){\hat{\mathbf{H}}}^{\text{T}} ({\mathbf{x}}^{\prime} - {\mathbf{x}})\Phi_{a} ({\mathbf{x}}^{\prime} - {\mathbf{x}}){\text{d}}{\mathbf{x}}^{\prime}} } \right\}{\mathbf{b}}^{{(\alpha )}} ({\mathbf{x}}) = {\mathbf{H}}^{{(\alpha )}} \tag{27} \]

or

\[ {\hat{\mathbf{M}}}({\mathbf{x}}){\mathbf{b}}^{{(\alpha )}} ({\mathbf{x}}) = {\mathbf{H}}^{{(\alpha )}} \tag{28} \]

where \( {\hat{\mathbf{M}}}({\mathbf{x}}) = \int_{{H_{\text{a}} }} {{\mathbf{H}}({\mathbf{x}}^{\prime} - {\mathbf{x}}){\hat{\mathbf{H}}}^{\text{T}} ({\mathbf{x}}^{\prime} - {\mathbf{x}})\Phi_{a} ({\mathbf{x}}^{\prime} - {\mathbf{x}}){\text{d}}{\mathbf{x}}^{\prime}}, \) which, when we select the basis vector \( {\hat{\mathbf{H}}} \) to be \( {\mathbf{H}} \), becomes the moment matrix employed in Sects. 3.1 and 3.2, and leads to the construction in (20).

Consider now the peridynamic differential operator introduced in [56]. A Taylor expansion of a function \( f({\mathbf{x}}) \) is first considered:

\[ f({\mathbf{x}} + {\varvec{\upxi}}) = \sum\limits_{{n_{1} = 0}}^{n} {\sum\limits_{{n_{2} = 0}}^{{n - n_{1} }} { \cdots \sum\limits_{{n_{d} = 0}}^{{n - n_{1} - \cdots - n_{d - 1} }} {\frac{1}{{n_{1} !n_{2} ! \cdots n_{d} !}}\xi_{1}^{{n_{1} }} \xi_{2}^{{n_{2} }} \cdots \xi_{d}^{{n_{d} }} \frac{{\partial^{{n_{1} + n_{2} + \cdots + n_{d} }} f({\mathbf{x}})}}{{\partial x_{1}^{{n_{1} }} \partial x_{2}^{{n_{2} }} \cdots \partial x_{d}^{{n_{d} }} }}} } } + R(n,{\mathbf{x}}) \tag{29} \]

where \( {\varvec{\upxi}} = {\mathbf{x}}^{\prime} - {\mathbf{x}} \) and the remainder \( R(n,{\mathbf{x}}) \) is considered negligible.

The main idea of the peridynamic differential operator is to define orthogonal functions \( g_{n}^{{p_{1} p_{2} \ldots p_{d} }} \left( {\varvec{\upxi}} \right) \), where \( p_{i} \) is akin to \( \alpha_{i} \) (i.e., \( p \) and \( \alpha \) are the same) and represents the order of differentiation with respect to \( x_{i} \), with \( i = 1, \ldots ,d \), such that the following gradient reproducing conditions are imposed:

\[ \partial^{p} f({\mathbf{x}}) = \int_{{H_{{\mathbf{x}}} }} {f({\mathbf{x}} + {\varvec{\upxi}})g_{n}^{{p_{1} p_{2} \ldots p_{d} }} ({\varvec{\upxi}}){\text{d}}{\varvec{\upxi}}} \tag{30} \]

where \( \partial^{p} f = \frac{{\partial^{{p_{1} p_{2} \ldots p_{d} }} f\left( {\mathbf{x}} \right)}}{{\partial x_{1}^{{p_{1} }} \partial x_{2}^{{p_{2} }} \ldots \partial x_{d}^{{p_{d} }} }} \), for some fixed \( p = \left( {p_{1} ,p_{2} , \ldots ,p_{d} } \right) \), and \( H_{{\mathbf{x}}} \) is the peridynamic neighbourhood of \( {\mathbf{x}} \).

By comparing (23) of the implicit gradient and (30) of the peridynamic differential operator we see that they both aim at reproducing derivatives of a function of \({\mathbf{x}}\) through a convolution by finding an appropriate kernel function. In fact:

\( H_{{\mathbf{x}}} \) in (30) is the same as \( H_{a} \) in (23), as long as the RK kernel support and peridynamic neighbourhood coincide

\( f({\mathbf{x}}) \) in (30) is \( u({\mathbf{x}}) \) in (23): they both represent a generic function of \( {\mathbf{x}} \)

\( g_{n}^{{p_{1} p_{2} \ldots p_{d} }} ({\mathbf{x}}^{\prime} - {\mathbf{x}}) \) in (30) plays the role of \( \hat{\Phi }_{a} ({\mathbf{x}};{\mathbf{x}}^{\prime} - {\mathbf{x}}) \) in (23).

The functions \( g_{n}^{{p_{1} p_{2} \ldots p_{d} }} ({\varvec{\upxi}}) \) are found by imposing satisfaction of the following orthogonality property:

\[ \frac{1}{{n_{1} !n_{2} ! \cdots n_{d} !}}\int_{{H_{{\mathbf{x}}} }} {\xi_{1}^{{n_{1} }} \xi_{2}^{{n_{2} }} \cdots \xi_{d}^{{n_{d} }} g_{n}^{{p_{1} p_{2} \ldots p_{d} }} ({\varvec{\upxi}}){\text{d}}{\varvec{\upxi}}} = \delta_{{n_{1} p_{1} }} \delta_{{n_{2} p_{2} }} \cdots \delta_{{n_{d} p_{d} }} \tag{31} \]

that is, using the notation introduced in Sect. 3.2,

\[ \int_{{H_{{\mathbf{x}}} }} {{\varvec{\upxi}}^{{\tilde{n}}} g_{n}^{p} ({\varvec{\upxi}}){\text{d}}{\varvec{\upxi}}} = \tilde{n}!\delta_{{\tilde{n}p}} ,\quad 0 \le |\tilde{n}| \le n \tag{32} \]

where \( \tilde{n} = (n_{1} ,n_{2} , \ldots ,n_{d} ). \) This is the same condition that is imposed on the corrected kernel \( {\hat{\Phi }}_{a} ({\mathbf{x}};{\mathbf{x}}^{\prime} - {\mathbf{x}}) \) of the RK implicit gradient (26). Therefore, if \( {\hat{\Phi }}_{a} \left( {{\mathbf{x}};{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) = g_{n}^{{p_{1} p_{2} \ldots p_{d} }} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) \) then the reproducing kernel implicit gradient and the peridynamic differential operator coincide. In [56] \( g_{n}^{p} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) \) is defined as:

\[ g_{n}^{p} ({\mathbf{x}}^{\prime} - {\mathbf{x}}) = \sum\limits_{|q| = 0}^{n} {a_{q}^{{(p)}} w_{q} (|{\mathbf{x}}^{\prime} - {\mathbf{x}}|)({\mathbf{x}}^{\prime} - {\mathbf{x}})^{q} } \tag{33} \]

which can be rewritten as:

\[ g_{n}^{p} ({\mathbf{x}}^{\prime} - {\mathbf{x}}) = {\tilde{\mathbf{H}}}^{\text{T}} ({\mathbf{x}}^{\prime} - {\mathbf{x}})\varvec{a}^{\left( p \right)} \tag{34} \]

where \( {\tilde{\mathbf{H}}}\left( {{\mathbf{x^{\prime}}} - {\mathbf{x}}} \right) \) is a column vector of \( {\{ }w_{q} ({\mathbf{x}}^{\prime} - {\mathbf{x}})^{q} {\} }_{\left| q \right| = 0}^{n} \) and, for a given \( p \), \( \varvec{a}^{\left( p \right)} \) is a column vector of unknown coefficients \( {\{ }a_{q}^{{(p)}} {\} }_{\left| q \right| = 0}^{n} \). For example, in two dimensions (\( d = 2 \)):

By comparing (34) and (24) we notice that the definitions of \( {\hat{\Phi }}_{a} \left( {{\mathbf{x}};{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) \) and \( g_{n}^{{p_{1} p_{2} \ldots p_{d} }} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) \) are analogous: both are the product of a weighted basis vector (\( {\hat{\mathbf{H}}}^{\text{T}} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right)\Phi_{a} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) \) in (24) and \( {\tilde{\mathbf{H}}}^{\text{T}} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) \) in (34), respectively) and unknown coefficients to be determined (i.e., \( {\mathbf{b}}^{{(\alpha )}} \left( {\mathbf{x}} \right) \) and \( \varvec{a}^{\left( p \right)} \)). It is therefore clear that, if the same weighted basis vectors are selected, the two are the same, meaning that the RK implicit gradient and the peridynamic differential operator are the same operator. For example, to select the same weighted basis vectors:

Due to the arbitrariness of the choice of basis, one can select \( {\hat{\mathbf{H}}}^{\text{T}} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) \) and \( \Phi _{a} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) \) in the RK implicit gradient so that \( {\hat{\mathbf{H}}}^{\text{T}} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right)\Phi _{a} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) = {\tilde{\mathbf{H}}}^{\text{T}} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) \)

If \( w_{{q_{1} q_{2} \ldots q_{d} }} \left( {\left| {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right|} \right) \) is chosen so that \( w_{{q_{1} q_{2} \ldots q_{d} }} \left( {\left| {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right|} \right) = w\left( {\left| {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right|} \right) \) [56], then \( {\tilde{\mathbf{H}}}^{\text{T}} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) = w\left( {\left| {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right|} \right){\mathbf{H}}^{\text{T}} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) \). Therefore, by selecting \( {\hat{\mathbf{H}}}^{\text{T}} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) \) = \( {\mathbf{H}}^{\text{T}} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) \) as in Sect. 3.2, and choosing \( \Phi_{a} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) = w\left( {\left| {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right|} \right) \), the same weighted basis vector is employed.

Now, the unknown coefficients of the peridynamic differential operator are found by substituting the definition of \( g_{n}^{p} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) \) [see (34)] into (32):

which, for some given \( p \) and for \( 0 \le \left| {\tilde{n}} \right| \le n \), leads to

or

where \( {\tilde{\mathbf{b}}}_{n}^{p} \) is a column vector \( \left\{ {\tilde{n}!\delta_{{\tilde{n}p}} } \right\}_{{\left| {\tilde{n}} \right| = 0}}^{n} \) and \( {\mathbf{A}} = \mathop \int \nolimits_{{H_{{\mathbf{x}}} }} {\mathbf{H}}\left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right){\tilde{\mathbf{H}}}^{\text{T}} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right){\text{d}}{\mathbf{x}}^{\prime} \) [56]. Again, we can see that for a given gradient to be reproduced, if the same weighted basis vector is chosen (i.e., \( {\hat{\mathbf{H}}}^{\text{T}} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right)\Phi_{a} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) = {\tilde{\mathbf{H}}}^{\text{T}} \left( {{\mathbf{x}}^{\prime} - {\mathbf{x}}} \right) \)), then (38) and (39) coincide with (27) and (28), respectively, and this leads to \( {\mathbf{b}}^{{(\alpha )}} \left( {\mathbf{x}} \right) = {\hat{\mathbf{M}}}^{ - 1} \left( {\mathbf{x}} \right){\mathbf{H}}^{{(\alpha )}} = {\mathbf{A}}^{ - 1} {\tilde{\mathbf{b}}}_{n}^{p} = \varvec{a}^{\left( p \right)} \) when \( \alpha = p \). Now, (39) is for reproducing the gradient \( \partial^{p} f \) for a given \( p \). Since \( {\mathbf{A}} \) is independent of \( p \), the equations associated with reproducing gradients \( \partial^{p} f \) for \( 0 \le \left| p \right| \le n \) can be combined [56]. For example, in the two-dimensional case we can write

where

and

In conclusion, we have shown that, even though the peridynamic differential operator represents arbitrary derivatives of functions through a convolution over the peridynamic neighbourhood while the RK implicit gradient performs the convolution over the RK kernel support, the two operators and the idea behind them, i.e., correcting a convolution operator using a weighted basis vector to obtain gradients, are the same.
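As a concrete check of this equivalence, consider a minimal one-dimensional sketch. The setting is an illustrative assumption rather than an example taken from [56]: \( d = 1 \), \( n = 1 \), a constant weight \( w = 1 \), and a symmetric neighbourhood \( H_{x} = [x - \delta ,x + \delta ] \), so that \( {\mathbf{H}}(\xi ) = {\tilde{\mathbf{H}}}(\xi ) = [1,\xi ]^{\text{T}} \) with \( \xi = x^{\prime} - x \). For \( p = 1 \):

$$ {\mathbf{A}} = \int_{ - \delta }^{\delta } {\begin{bmatrix} 1 & \xi \\ \xi & {\xi^{2} } \end{bmatrix} {\text{d}}\xi } = \begin{bmatrix} {2\delta } & 0 \\ 0 & {2\delta^{3} /3} \end{bmatrix},\quad {\tilde{\mathbf{b}}}_{1}^{1} = \begin{bmatrix} 0 \\ 1 \end{bmatrix},\quad \varvec{a}^{(1)} = {\mathbf{A}}^{ - 1} {\tilde{\mathbf{b}}}_{1}^{1} = \begin{bmatrix} 0 \\ {3/(2\delta^{3} )} \end{bmatrix} $$

$$ g_{1}^{1} (\xi ) = {\tilde{\mathbf{H}}}^{\text{T}} (\xi )\varvec{a}^{(1)} = \frac{3\xi }{{2\delta^{3} }},\quad \int_{ - \delta }^{\delta } {g_{1}^{1} (\xi ){\text{d}}\xi } = 0,\quad \int_{ - \delta }^{\delta } {\xi g_{1}^{1} (\xi ){\text{d}}\xi } = 1 $$

Under these assumptions, choosing \( \Phi_{a} = w \) and \( {\hat{\mathbf{H}}} = {\mathbf{H}} \) in the RK implicit gradient gives the same moment matrix and the same right-hand side \( [0,1]^{\text{T}} \), and hence the same corrected kernel \( 3\xi /(2\delta^{3} ) \).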

3.4 Deformation gradient under continuous implicit gradients

We now return to the analysis and comparison of the implicit gradient and the way in which state-based peridynamics constructs the non-local deformation gradient. In the Lagrangian RK approximation [62], shape functions are constructed with reference to the material coordinate \( {\mathbf{X}} \), and the approximation to displacement \( {\mathbf{u}}^{h} \) is constructed as:

For simplicity, the dependence of these constructions on \( t \) will be implied in other expressions. The deformation gradient \( {\mathbf{F}} \) is the gradient of the motion of the body \( {\mathbf{x}} = \varphi ({\mathbf{X}}\text{,}t) \) and is constructed as

where \( {\mathbf{I}} \) is the identity tensor. If implicit gradients (20) are employed under the Lagrangian formulation, the deformation gradient is approximated in the material coordinate as

where \( {\mathbf{H}}_{j}^{\nabla } = [0,\delta_{1j} ,\;\delta_{2j} ,\;\delta_{3j} ,0, \ldots ,0]\; \) corresponds to the case of \( {\mathbf{H}}^{{(\alpha )}} \) with \( |\alpha | = 1 \), for approximating first order derivatives with respect to \( X_{j} \), i.e.:

From the derivation by the Taylor expansion in Sect. 3.2, it can be inferred (or directly shown) that the deformation gradient constructed by implicit gradients possesses nth order accuracy (or nth order consistency) without additional analysis needed.

3.5 Deformation gradient under continuous peridynamics

To relate the theory of peridynamics to classical continuum mechanics and provide the ability to employ conventional constitutive models, a principle called reduction can be employed [20] to relate the kinematic entity of the state \( \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{{\mathbf{Y} }} \) to a non-local version of a deformation gradient \( {\boldsymbol{{\mathcal{F}}}} \), which yields

where \( {\mathbf{K}} \) is the reference shape tensor

that describes the undeformed configuration around the point \( {\mathbf{X}} \), and \( {\mathbf{S}} \) is the deformed shape tensor

which describes the deformed configuration around the point \( {\mathbf{X}} \). In the above, the function \( w_{\delta } ({\mathbf{X}}^{\prime} - {\mathbf{X}}) \) is called the influence function, which has compact support with measure \( \delta \). Thus the influence function in the non-local deformation gradient plays a closely analogous role to the kernel function in the construction of the deformation gradient by the implicit gradient (45), since both control the locality of the approximation of deformation.

With \( {\boldsymbol{{\mathcal{F}}}} \) in hand, the associated stress is calculated as in classical continuum mechanics, and is then related to the force state \( \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{{\mathbf{T} }} \), which will be discussed in Sect. 4.3.

3.6 Analysis of the continuous peridynamic deformation gradient

We next examine the properties of the nonlocal deformation gradient \( {\boldsymbol{{\mathcal{F}}}} \). First, considering that the displacement \( {\mathbf{u}} = {\mathbf{x}} - {\mathbf{X}} \), using the definition of the deformation state \( \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{{\mathbf{Y} }}\left\langle {{\mathbf{X}}^{\prime} - {\mathbf{X}}} \right\rangle = {\mathbf{x}}^{\prime} - {\mathbf{x}} \) and the reference position state \( \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{{\mathbf{X} }}\left\langle {{\mathbf{X}}^{\prime} - {\mathbf{X}}} \right\rangle = {\mathbf{X}}^{\prime} - {\mathbf{X}} \), the deformed shape tensor \( {\mathbf{S}} \) can be expressed as:

Using (50), the non-local deformation gradient (47) can then be expressed as

In component form, the above is,

A Taylor expansion on \( u_{i} ({\mathbf{X}}^{{\prime}}) \) gives, after some algebra,

Since the fourth term is an even function about \( {\mathbf{X}} \) for symmetric functions \( w_{\delta } \), we have the following truncation error for the continuous non-local deformation gradient:

When the neighborhood \( {\mathcal{H}}_{{\mathbf{X}}} \) and influence function \( w_{\delta } ({\mathbf{X}}^{\prime} - {\mathbf{X}}) \) are centred around \( {\mathbf{X}} \) and symmetric about each axis (as in the case of spherically-shaped influence functions that are purely a function of \( \left| {{\mathbf{X}}^{\prime} - {\mathbf{X}}} \right| \)), away from the influence of the boundary of the domain (maintaining a perfectly spherical neighborhood) the third term on the right-hand-side vanishes when integrated, since it is then an odd function centred around \( {\mathbf{X}} \) and thus

and the continuous form of the non-local deformation gradient in peridynamics is second-order accurate.

Near the boundary of the domain, or in the case that the shape of \( {\mathcal{H}}_{{\mathbf{X}}} \) or the influence function \( w_{\delta } ({\mathbf{X}}^{\prime} - {\mathbf{X}}) \) is not symmetric about each axis, the third term does not vanish, and we have:

and the continuous non-local deformation gradient is first-order accurate. Thus in finite domains where the neighborhood is not symmetric near the boundary, and in a general case of arbitrary influence functions and neighborhood definitions, the continuous non-local deformation gradient is globally first-order accurate.
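The two accuracy regimes can be checked numerically. The following is a minimal one-dimensional sketch, assuming a constant influence function \( w_{\delta } = 1 \), a midpoint-rule evaluation of the integrals, and a hypothetical test field \( u = X^{3} \); the function names are illustrative and not part of the original formulation. Halving \( \delta \) should reduce the error by a factor of about 4 for a symmetric neighbourhood (second order) and about 2 for a one-sided neighbourhood, as occurs at a boundary (first order).

```python
def nonlocal_gradient_1d(u, X, delta, one_sided=False, nq=2000):
    """Midpoint-rule estimate of int(xi*(u(X+xi)-u(X))) / int(xi^2), with w = 1."""
    lo = 0.0 if one_sided else -delta   # one-sided mimics a neighbourhood cut by a boundary
    h = (delta - lo) / nq
    S = 0.0  # displacement part of the deformed shape tensor (a scalar in 1D)
    K = 0.0  # reference shape tensor (a scalar in 1D)
    for k in range(nq):
        xi = lo + (k + 0.5) * h
        S += xi * (u(X + xi) - u(X)) * h
        K += xi * xi * h
    return S / K


def error_ratio(u, du, X, delta, one_sided):
    """Error at horizon delta divided by error at horizon delta/2."""
    e1 = abs(nonlocal_gradient_1d(u, X, delta, one_sided) - du(X))
    e2 = abs(nonlocal_gradient_1d(u, X, delta / 2.0, one_sided) - du(X))
    return e1 / e2


def cubic(x):
    return x ** 3


def dcubic(x):
    return 3.0 * x ** 2


interior_ratio = error_ratio(cubic, dcubic, 1.0, 0.1, one_sided=False)
boundary_ratio = error_ratio(cubic, dcubic, 1.0, 0.1, one_sided=True)
print(interior_ratio, boundary_ratio)
```

Only the gradient of the displacement is estimated here; adding the identity gives the corresponding one-dimensional deformation gradient.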

3.7 Comparison between continuous implicit gradients and peridynamics

In [27], it was shown that in the interior of a domain (away from the boundary, or in an infinite domain) with uniformly discretized state-based peridynamics using the correspondence principle, the discretized non-local deformation gradient (47) is equivalent to employing a local deformation gradient by the discretized form of implicit gradients (45). However, it will be demonstrated that in the general case, this is true for neither the discretized form nor the continuous form.

In order to facilitate a more general comparison between the non-local deformation gradient by peridynamics and the RK approximation, we first express the shape tensors (49) in matrix form:

where \( {\mathbf{P}}({\mathbf{X}}) \equiv [\begin{array}{*{20}c} {X_{1} } & {X_{2} } & {X_{3} } \\ \end{array} ]^{\text{T}} \) can be considered a type of “basis vector” consisting of monomials of order one. When the shape tensor is expressed this way, it is immediately apparent that it is coincident with the Lagrangian RK moment matrix with \( a = \delta \), \( \varPhi_{a} \left( {{\mathbf{X}} - {\mathbf{X}}^{\prime}} \right) = w_{\delta } \left( {{\mathbf{X}} - {\mathbf{X}}^{\prime}} \right) \), and linear basis, but omitting the unity term in the RK basis vector \( {\mathbf{H}}({\mathbf{X}}) \). More discussion on this point will follow later in the text.

The deformed shape tensor can also be expressed in matrix form as

Noting that \( {\mathbf{P}}({\mathbf{x}}({\mathbf{X}}^{{\prime}}) - {\mathbf{x}}({\mathbf{X}})) = {\mathbf{P}}({\mathbf{X}}^{\prime} - {\mathbf{X}}) + \left( {{\mathbf{u}}({\mathbf{X}}^{{\prime}}) - {\mathbf{u}}({\mathbf{X}})} \right) \) we have

so that

Introducing a vector \( {\mathbf{P}}_{j}^{\nabla } = [\delta_{1j} ,\;\delta_{2j} ,\;\delta_{3j} ]\; \) and using the symmetry of \( {\mathbf{K}} \), the expression (60) can be recast in indicial notation as:

For comparison, the local deformation gradient \( F_{ij} \) calculated by implicit gradients (45) with \( a = \delta \), and \( \varPhi_{a} \left( {{\mathbf{X}} - {\mathbf{X}}^{\prime}} \right) = w_{\delta } \left( {{\mathbf{X}} - {\mathbf{X}}^{\prime}} \right) \) can be expressed as:

Thus, the implicit gradient can be viewed as a type of non-local operation with length-scale \( a \), which is not surprising since it approximates differentiation by integration, just like the non-local deformation gradient in peridynamics (see [27] for additional discussions). Two key differences can be observed, however. One is that the “basis” in peridynamics \( {\mathbf{P}}({\mathbf{X}}) \) omits the unity term in \( {\mathbf{H}}({\mathbf{X}}) \). If \( {\mathbf{P}}({\mathbf{X}}) \) were employed in the implicit gradient approximation (20), the partition of nullity [the completeness condition for 0th order accuracy in (13)] could not be satisfied. This fact, however, seems to be “compensated for” in the peridynamic gradient by the convolution with \( u_{i} ({\mathbf{X}}^{{\prime}}) - u_{i} ({\mathbf{X}}) \) rather than \( u_{i} ({\mathbf{X}}^{{\prime}}) \) alone. That is, if \( {\mathbf{u}} \) is constant, then the non-local deformation gradient (61) yields the correct result of \( \mathcal{F}_{ij} = I_{ij} \) (0th order accuracy). Thus it can be seen that the form (61) is inherently first-order accurate and, in special cases, as has been demonstrated, second-order accurate.

Another interesting point is that in examining (61), the non-local peridynamic calculation of a gradient uses values of \( {\mathbf{u}} \) near \( {\mathbf{X}} \), except the actual value at \( {\mathbf{X}} \), while the implicit gradient still uses the value of \( {\mathbf{u}} \) at \( {\mathbf{X}} \). Thus one could interpret the peridynamic operation as “more non-local” than the implicit gradient approximation. Indeed, when \( {\mathbf{X}}^{\prime} = {\mathbf{X}} \) in (61), the peridynamic “kernel” in the convolution also vanishes since \( {\mathbf{P}} \) only contains first-order monomials.

Finally, it should be emphasised that the deformation gradient by implicit gradients, and the non-local deformation gradient by peridynamics, are clearly not the same. In [27] however, an equivalence was established in the special case of a uniform discretization, and away from the influence of the boundary.

4 Continuous reproducing kernel peridynamic approximation

In this section, the continuous reproducing kernel peridynamic approximation is presented, which unifies the way in which state-based peridynamics under correspondence approximates gradients, and the implicit gradient approximation. The unification also provides two other distinct cases which will be discussed.

4.1 Continuous reproducing kernel peridynamic approximation

The convolution operations for the approximation of the gradient of a function in (61) and (62) can be unified as follows. First, consider a kernel estimate of the type (15) with a basis of monomials from order m to n to estimate gradients of a scalar field \( u\left( {\mathbf{X}} \right) \), with the convolution of \( u({\mathbf{X}}^{{\prime}}) - u({\mathbf{X}}) \) rather than \( u({\mathbf{X}}^{\prime}) \) as in (15), and a general weighting function \( \omega_{l} \) with measure \( l \):

where \( {\mathbf{Q}}_{[m,n]} ({\mathbf{X}}) \) is a column vector of the set of monomials \( \{ {\mathbf{X}}^{\beta } \}_{|\beta | = m}^{n} \), and the dependency of the operator on \( m \) and \( l \) is implied for notational simplicity. To facilitate nth order accuracy in this approximation, taking the Taylor expansion on \( u({\mathbf{X}}^{\prime}) \) in (7) yields:

where \( {\bar{\mathbf{D}}}({\mathbf{X}}) \) is a row vector of \( {\{ }\partial^{\beta } u({\mathbf{X}}{)\} }_{|\beta | = 1}^{n} \) and \( {\bar{\mathbf{J}}} \) is a diagonal matrix with entries \( {\{ }1/\beta {!\} }_{\left| \beta \right| = 1}^{n} \). Examining (64), it is apparent that in order to reproduce gradients \( {\bar{\mathcal{D}}}_{[n]}^{{(\alpha )}} \{ u({\mathbf{X}})\} = \partial^{\alpha } u({\mathbf{X}}) \) up to nth order accuracy, we have the following vanishing moment conditions:

where \( {\mathbf{Q}}_{[m,n]}^{{(\alpha )}} \) is a column vector of \( \{ \alpha !\delta_{\alpha \beta } \}_{|\beta | = m}^{n} \):

If m = 1, then the system in (65) has a unique solution. Alternatively, if m = 0 and n > 0 the system is underdetermined and an additional condition is required for determining \( {\bar{\mathbf{b}}}^{(\alpha )} \).

Consider also imposing the partition of nullity on (63) in the case of m = 0 and n > 0, that is, in addition to (65), the following is imposed:

The system (65) can then be recast with (67) in hand to yield a determined system for all free variables:

where

A unified approximation is finally obtained by solving for \( {\bar{\mathbf{b}}}^{(\alpha )} \) from (68) and substituting into (63):

The approximation in (70) for derivatives is termed the continuous reproducing kernel peridynamic approximation herein. Particular selections of \( \omega_{l} \), \( |\alpha | \), n and m yield the implicit gradient approximation, as well as the manner in which the deformation gradient is approximated by peridynamics. In addition, two more approximations can be obtained, which are termed the continuous nth order non-local deformation gradient and the continuous nth order non-local higher-order derivatives. The four cases are enumerated as follows:

1. When \( |\alpha | > 0 \), m = 0, \( \omega_{l} = \varPhi_{a} \) and n is a free variable, (70) yields the implicit gradient approximation (20), since \( {\mathbf{Q}}_{[0,n]} ({\mathbf{x}}) = {\mathbf{H}}({\mathbf{x}}) \) and \( {\mathbf{Q}}_{[0,n]}^{{(\alpha )}} ({\mathbf{x}}) = {\mathbf{H}}^{{(\alpha )}} \). Furthermore, since m = 0, the partition of nullity is satisfied by the imposition of (67) in (68), and the approximation (70) yields:

$$ \begin{aligned} {\bar{\mathcal{D}}}_{[n]}^{{(\alpha )}} \left\{ {u\left( {\mathbf{X}} \right)} \right\} & = \int\limits_{\Omega } {\bar{\varPsi }_{[0,n]}^{{(\alpha )}} \left( {{\mathbf{X}}\text{;}{\mathbf{X}}^{\prime} - {\mathbf{X}}} \right)\left( {u\left( {{\mathbf{X}}^{\prime}} \right) - u\left( {\mathbf{X}} \right)} \right)\text{d}{\mathbf{X}}^{\prime}} \\ & = \int\limits_{\Omega } {\bar{\varPsi }_{[0,n]}^{{(\alpha )}} \left( {{\mathbf{X}}\text{;}{\mathbf{X}}^{\prime} - {\mathbf{X}}} \right)u\left( {{\mathbf{X}}^{\prime}} \right)\text{d}{\mathbf{X}}^{\prime}} - u\left( {\mathbf{X}} \right)\underbrace {{\int\limits_{\Omega } {\bar{\varPsi }_{[0,n]}^{{(\alpha )}} \left( {{\mathbf{X}}\text{;}{\mathbf{X}}^{\prime} - {\mathbf{X}}} \right)\text{d}{\mathbf{X}}^{\prime}} }}_{0} \\ & = \int\limits_{\Omega } {\bar{\varPsi }_{[0,n]}^{{(\alpha )}} \left( {{\mathbf{X}}\text{;}{\mathbf{X}}^{\prime} - {\mathbf{X}}} \right)u\left( {{\mathbf{X}}^{\prime}} \right){{\rm d}}{\mathbf{X}}^{\prime}} \\ & = {\mathcal{D}}_{[n]}^{{(\alpha )}} \left\{ {u\left( {\mathbf{X}} \right)} \right\} \\ \end{aligned} $$(71)

2. When m = 1, n = 1, and \( |\alpha | = 1 \) (approximating first order derivatives only), and choosing \( \omega_{l} = w_{\delta } \), (70) yields the peridynamic deformation gradient (61) when approximating the derivative of \( u_{i} \), since in this case \( {\mathbf{Q}}_{[1,1]} ({\mathbf{X}}) = {\mathbf{P}}({\mathbf{X}}) \), \( {\bar{\mathbf{M}}}_{[1,1]} ({\mathbf{X}}) = {\mathbf{K}}({\mathbf{X}}) \), and when taking the derivative with respect to \( X_{j} \) we have \( {\mathbf{Q}}_{[m,n]}^{{(\alpha )}} = {\mathbf{Q}}_{[1,1]}^{{(\delta_{j1} {\text{,}}\delta_{j2} {\text{,}}\delta_{j3} )}} = {\mathbf{P}}_{j}^{\nabla } \), and

$$ \begin{aligned} \frac{{\partial x_{i} }}{{\partial X_{j} }} & \simeq I_{ij} + {\bar{\mathcal{D}}}_{[1]}^{{(\delta_{j1} {\text{,}}\delta_{j2} {\text{,}}\delta_{j3} )}} \left\{ {u_{i} ({\mathbf{X}})} \right\} \\ & = I_{ij} + \int\limits_{{{\mathcal{H}}_{{\mathbf{X}}} }} {\left( {{\mathbf{P}}_{j}^{\nabla } } \right)^{\text{T}} {\mathbf{K}}^{{ - \text{1}}} ({\mathbf{X}}){\mathbf{P}}({\mathbf{X}}^{\prime} - {\mathbf{X}}) \times w_{\delta } ({\mathbf{X}}^{\prime} - {\mathbf{X}})\left( {u_{i} ({\mathbf{X}}^{{\prime}}) - u_{i} ({\mathbf{X}})} \right){{\rm d}}{\mathbf{X}}^{\prime}} \\ & = {\mathcal{F}}_{ij}({\mathbf{X}}) \\ \end{aligned} $$(72)

3. When m = 1, \( |\alpha | = 1 \), \( \omega_{l} = w_{\delta } \), and n > 1 is a free parameter, when taking the gradient of the displacement \( u_{i} \) with respect to \( X_{j} \), (70) yields an nth order accurate non-local deformation gradient, which is denoted \( {\boldsymbol{{\mathcal{F}}}}_{[n]} \) herein:

$$ \frac{{\partial x_{i} }}{{\partial X_{j} }}{ \simeq }\left( {{\boldsymbol{{\mathcal{F}}}}_{[n]} } \right)_{ij} ({\mathbf{X}}) = I_{ij} + {\bar{\mathcal{D}}}_{[n]}^{{(\delta_{j1} {\text{,}}\delta_{j2} {\text{,}}\delta_{j3} )}} \left\{ {u_{i} ({\mathbf{X}})} \right\} $$(73)

where

$$ \begin{aligned} & {\bar{\mathcal{D}}}_{[n]}^{{(\delta_{j1} {\text{,}}\delta_{j2} {\text{,}}\delta_{j3} )}} \left\{ {u\left( {\mathbf{X}} \right)} \right\} \\ & \quad = \int\limits_{{{\mathcal{H}}_{{\mathbf{X}}} }} {\left( {{\mathbf{Q}}_{j}^{\nabla } } \right)^{\text{T}} {\bar{\mathbf{M}}}_{[1,n]}^{ - 1} \left( {\mathbf{X}} \right){\mathbf{Q}}_{[1,n]} \left( {{\mathbf{X}}^{\prime} - {\mathbf{X}}} \right)w_{\delta } \left( {{\mathbf{X}}^{\prime} - {\mathbf{X}}} \right)\left( {u\left( {{\mathbf{X}}^{\prime}} \right) - u\left( {\mathbf{X}} \right)} \right)\text{d}{\mathbf{X}}^{\prime}} \\ \end{aligned} $$(74)

and

$$ \begin{array}{*{20}c} {{\mathbf{Q}}_{j}^{\nabla } \equiv {\mathbf{Q}}_{[1,n]}^{{(\delta_{j1} {\text{,}}\delta_{j2} {\text{,}}\delta_{j3} )}} = } & {\text{[0,}} & {{ \ldots ,}} & {\text{0,}} & {1\text{,}} & {\text{0,}} & {{ \ldots ,}} & {\text{0]}^{\text{T}} .} \\ {} & {} & {} & {} & \uparrow & {} & {} & {} \\ {} & {} & {} & {} & {jth\;\text{entry}} & {} & {} & {} \\ \end{array} $$(75)

In the above, \( {\bar{\mathbf{M}}}_{[1,n]} \) can be interpreted as a high-order reference shape tensor, while \( {\mathbf{Q}}_{[1,n]} \) can also be understood in the context of states. The arbitrarily high-order versions of non-local deformation gradients can also be understood in terms of reduction and expansion of states to tensors, and tensors to states, respectively. Details are given in “Appendix A”.

4. When m = 1, \( |\alpha | > 1 \), and n is a free variable, choosing \( \omega_{l} = w_{\delta } \) in (70) yields nth order accurate non-local higher order derivatives:

$$ {\bar{\mathcal{D}}}_{[n]}^{{(\alpha )}} \left\{ {u\left( {\mathbf{X}} \right)} \right\} = \int\limits_{{{\mathcal{H}}_{{\mathbf{X}}} }} {\left( {{\mathbf{Q}}_{[1,n]}^{{(\alpha )}} } \right)^{\text{T}} {\bar{\mathbf{M}}}_{[1,n]}^{ - 1} \left( {\mathbf{X}} \right){\mathbf{Q}}_{[1,n]} \left( {{\mathbf{X}}^{\prime} - {\mathbf{X}}} \right) \times w_{\delta } \left( {{\mathbf{X}}^{\prime} - {\mathbf{X}}} \right)\left( {u\left( {{\mathbf{X}}^{\prime}} \right) - u\left( {\mathbf{X}} \right)} \right)\text{d}{\mathbf{X}}^{\prime}} ,\quad |\alpha | > 1 $$(76)

The terminology adopted herein is that when m = 1 and \( \omega_{l} = w_{\delta } \), the derivative approximations are termed non-local or peridynamic since they approximate derivatives in the same manner as the non-local deformation gradient, they embed the non-local length scale \( \delta \), and also perform differentiation by integration. The generalization occurs with \( |\alpha | > 1 \) and/or n > 1. That is, the original non-local deformation gradient is recovered in the non-local derivatives when \( |\alpha | = 1 \) and \( n = 1 \). Thus the general expression for nth order accurate non-local derivatives in the continuous case is

Therefore, the present formulation can be regarded as a generalization of the way in which state-based peridynamics approximates derivatives using the non-local technique.
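A minimal one-dimensional sketch of the m = 1 case may help to fix ideas. It assumes a quadrature-based evaluation of the integrals over a handful of hypothetical neighbour points, a hat-shaped influence function, and the monomial vector \( {\mathbf{Q}}_{[1,n]} (\xi ) = [\xi ,\xi^{2} , \ldots ,\xi^{n} ]^{\text{T}} \); the names `rkpd_derivative` and `solve` are illustrative. With n = 3 the sketch reproduces first and second derivatives of a cubic even for a one-sided neighbourhood, whereas n = 1 (the original non-local gradient) does not.

```python
import math


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def rkpd_derivative(u, X, neighbours, volumes, delta, n, alpha):
    """Non-local estimate of the alpha-th derivative of u at X with basis orders 1..n."""
    orders = list(range(1, n + 1))                    # m = 1: no unity term
    w = lambda xi: max(0.0, 1.0 - abs(xi) / delta)    # assumed hat influence function
    size = len(orders)
    M = [[0.0] * size for _ in range(size)]           # high-order reference shape tensor
    for Xp, V in zip(neighbours, volumes):
        xi = Xp - X
        q = [xi ** k for k in orders]
        for i in range(size):
            for j in range(size):
                M[i][j] += q[i] * q[j] * w(xi) * V
    rhs = [float(math.factorial(alpha)) if k == alpha else 0.0 for k in orders]
    b = solve(M, rhs)                                 # b = M^{-1} Q^(alpha)
    total = 0.0
    for Xp, V in zip(neighbours, volumes):
        xi = Xp - X
        kernel = sum(bk * xi ** k for bk, k in zip(b, orders)) * w(xi)
        total += kernel * (u(Xp) - u(X)) * V          # convolution with u(X') - u(X)
    return total


def cubic(x):
    return x ** 3


# One-sided, non-uniform neighbourhood of X = 1 (as would occur at a boundary).
nbrs = [1.05, 1.11, 1.18, 1.24, 1.29]
vols = [0.05, 0.06, 0.07, 0.06, 0.05]
d1 = rkpd_derivative(cubic, 1.0, nbrs, vols, 0.3, n=3, alpha=1)
d2 = rkpd_derivative(cubic, 1.0, nbrs, vols, 0.3, n=3, alpha=2)
d1_linear = rkpd_derivative(cubic, 1.0, nbrs, vols, 0.3, n=1, alpha=1)
print(d1, d2, d1_linear)
```

Because the same quadrature is used for the moment matrix and for the convolution, the reproduction of polynomials up to order n holds for any positive weights in this sketch.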

4.2 Continuous nth order non-local gradient and divergence operations

High-order non-local gradient and divergence operations can be derived from the general formulation (70) with m = 1, \( \omega_{l} = w_{\delta } \) as in (77). Examining (70) with \( |\alpha | = 1 \) (for first order derivatives) and casting it as an operator to approximate a non-local derivative of a function \( u \) with respect to \( X_{j} \), one obtains:

where \( {\mathbf{Q}}_{j}^{\nabla } \) is the same vector in (75).

In vector form, (78) can be cast as

where

and here \( \nabla u \) is a column vector. Thus the nth order non-local gradient operation on a vector field \( {\mathbf{f}} \) can be expressed as:

where on the right hand side, \( {\mathbf{f}} \) is represented as a column vector. Likewise, the nth order non-local divergence on a vector field \( {\mathbf{f}} \) can be expressed as:
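In discretized form, these gradient and divergence operations can be sketched for the linear case (n = 1) in two dimensions. The sketch assumes nodal quadrature over a small hypothetical point cloud, a hat-shaped influence function, and the convention `grad[i][j]` \( \approx \partial f_{i} /\partial X_{j} \); none of these choices is prescribed by the formulation. For a linear field, both operations are reproduced exactly on irregular points.

```python
def nonlocal_div_grad(f, X, neighbours, volumes, delta):
    """Linear (n = 1) non-local divergence and gradient of a 2D vector field f at X."""
    def weight(x1, x2):
        r = (x1 * x1 + x2 * x2) ** 0.5
        return max(0.0, 1.0 - r / delta)   # assumed hat influence function

    # Moment matrix M = sum xi xi^T w V (the reference shape tensor for n = 1).
    m11 = m12 = m22 = 0.0
    for Xp, V in zip(neighbours, volumes):
        x1, x2 = Xp[0] - X[0], Xp[1] - X[1]
        wt = weight(x1, x2) * V
        m11 += x1 * x1 * wt
        m12 += x1 * x2 * wt
        m22 += x2 * x2 * wt
    det = m11 * m22 - m12 * m12
    inv = ((m22 / det, -m12 / det), (-m12 / det, m11 / det))

    fX = f(X)
    grad = [[0.0, 0.0], [0.0, 0.0]]
    for Xp, V in zip(neighbours, volumes):
        x1, x2 = Xp[0] - X[0], Xp[1] - X[1]
        wt = weight(x1, x2) * V
        g = (inv[0][0] * x1 + inv[0][1] * x2,      # M^{-1} xi
             inv[1][0] * x1 + inv[1][1] * x2)
        fp = f(Xp)
        for i in range(2):
            for j in range(2):
                grad[i][j] += g[j] * wt * (fp[i] - fX[i])
    div = grad[0][0] + grad[1][1]                  # trace of the gradient
    return div, grad


def linear_field(X):
    # Hypothetical test field with gradient [[2, 3], [-1, 5]] and divergence 7.
    return (2.0 * X[0] + 3.0 * X[1] + 1.0, -1.0 * X[0] + 5.0 * X[1] - 2.0)


centre = (0.2, 0.1)
cloud = [(0.5, 0.1), (0.3, 0.4), (0.0, 0.3), (0.1, -0.2), (0.45, -0.1)]
div, grad = nonlocal_div_grad(linear_field, centre, cloud, [0.04] * 5, 0.5)
print(div, grad)
```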

4.3 High-order force density via non-local divergence of stress: continuous case

According to the correspondence principle [20], the force state \( \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{{\mathbf{T} }} \) can be calculated from the 1st PK stress \( {\varvec{\upsigma}} \) as a function of the non-local deformation gradient (61) for state-based peridynamics as:

In the above, and in the following text, \( {\varvec{\upsigma}} \) denotes the matrix form of the 1st PK stress according to the context.

Comparing (1), (2) and (83), the integration of the force state as a function of stress can be interpreted as a type of non-local divergence operation on the nominal stress \( {\varvec{\upsigma}}^{\text{T}} \) as:

where the fact that \( w_{\delta } = w_{\delta } \left( {\left| {{\mathbf{X}} - {\mathbf{X}}^{\prime}} \right|} \right) \) was employed. A force state consistent with the high order non-local deformation gradient (73) can be obtained using the same correspondence principle as (see “Appendix B” for the derivation):

The corresponding non-local divergence operation that results from this force state can be expressed as:

However, despite being derived from the high-order accurate deformation gradient, numerical testing shows that the discretized form of the force density (86), viewed as a mathematical operator on \( {\varvec{\upsigma}}^{\text{T}} \), does not guarantee even 0th order accuracy in the general case. That is, when the nominal stress \( {\varvec{\upsigma}}^{\text{T}} \) is constant, the total force density contribution to a point \( {\mathbf{X}} \) may be non-zero. Notably, this is also true of (84): under a constant state of stress, the corresponding force density could be non-zero, so the original formulation using (84) also does not possess even 0th order accuracy in the general case. Thus, whatever accuracy the non-local deformation gradient possesses (linear accuracy with the original formulation (47), or nth order accuracy with the high-order formulation (73)), the final solution computed using either (84) or (86) may not even have 0th order accuracy because of the operation of computing the force density. This assertion is verified numerically in Sect. 6.5.

Accordingly, a non-local divergence of the nominal stress \( {\varvec{\upsigma}}^{\text{T}} \) is proposed, which can be computed from the generalization of (82) from vectors to tensors as

For the linear case (n = 1), the force density computed by the proposed non-local divergence can be expressed as:

where the fact that \( {\mathbf{P}}\left( {{\mathbf{X}}^{\prime} - {\mathbf{X}}} \right) = - {\mathbf{P}}\left( {{\mathbf{X}} - {\mathbf{X}}^{\prime}} \right) \) was employed. Comparing to the standard force density by peridynamics (84), which can also be simplified as

it can be seen that constant and linear accuracy can be introduced easily into state-based peridynamics via a small modification of (89) to (88). In Sect. 6.5, it will be demonstrated that any order of accuracy desired is maintained in the discrete case.
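The loss of 0th order accuracy near a boundary can be illustrated with a small one-dimensional sketch. It rests on stated assumptions: the standard force density is taken in the commonly used correspondence form, a sum over neighbours of \( w\,\xi \,(\sigma_{I} /K_{I} + \sigma_{J} /K_{J} )V_{J} \), while the proposed linear form is taken as a sum of \( w\,\xi \,K_{I}^{ - 1} (\sigma_{J} - \sigma_{I} )V_{J} \), with \( w = 1 \), uniform nodes, and nodal volumes equal to the spacing. These are one-dimensional readings of the operators discussed above, not a transcription of (88) and (89).

```python
def force_densities(sigma, n_nodes=11, h=0.1, delta=0.25):
    """Return (standard, proposed) 1D force densities at every node for a stress field sigma."""
    X = [i * h for i in range(n_nodes)]
    s = [sigma(x) for x in X]

    def neighbours(i):
        return [j for j in range(n_nodes) if j != i and abs(X[j] - X[i]) <= delta]

    # Reference shape tensor (a scalar in 1D), with w = 1 and nodal volume V = h.
    K = [sum((X[j] - X[i]) ** 2 * h for j in neighbours(i)) for i in range(n_nodes)]

    standard, proposed = [], []
    for i in range(n_nodes):
        f_std = 0.0
        f_new = 0.0
        for j in neighbours(i):
            xi = X[j] - X[i]
            f_std += xi * (s[i] / K[i] + s[j] / K[j]) * h   # assumed standard form
            f_new += xi / K[i] * (s[j] - s[i]) * h          # assumed proposed form, n = 1
        standard.append(f_std)
        proposed.append(f_new)
    return standard, proposed


std_const, new_const = force_densities(lambda x: 1.0)          # constant stress
std_lin, new_lin = force_densities(lambda x: 2.0 * x + 1.0)    # linear stress, exact divergence 2
print(std_const[0], std_const[5], new_const[0], new_lin[0])
```

Under these assumptions the standard sum vanishes for constant stress at an interior node but not at the boundary node, while the difference-based form returns zero for constant stress and the exact derivative for linear stress at every node.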

5 Discrete reproducing kernel approximation and peridynamic deformation gradient

In this section, the discretized versions of the continuous approximations discussed in Sect. 3 are analysed and compared.

5.1 Discrete reproducing kernel approximation

A discrete version of the reproducing kernel approximation (4) can be obtained by performing numerical integration on both (11) and (12) at a set of \( NP \) nodes \( \left\{ {{\mathbf{x}}_{J} |{\mathbf{x}}_{J} \in {\Omega }} \right\}_{J = 1}^{NP} \) that discretize a domain \( {\Omega} \):

where \( u_{J} \equiv u({\mathbf{x}}_{J} ) \) are nodal coefficients, \( \varPsi_{J} ({\mathbf{x}}) \) is the reproducing kernel shape function, \( V_{J} \) is the nodal quadrature weight for point \( {\mathbf{x}}_{J} \), and \( \mathbf{M}({\mathbf{x}}) \) is the discrete moment matrix. For notational simplicity, it should be understood that \( \mathbf{M}({\mathbf{x}}) \) and other quantities denote the discrete or continuous counterparts depending on the context in which they are employed.

Unlike the continuous case, the moment matrix (91) is conditionally invertible, which requires \( (n + d\text{)!}/(n\text{!}d\text{!)} \) nodal kernels covering \( {\mathbf{x}} \) which are non-collinear (in 2D) or non-coplanar (in 3D) [29]. The selection of a kernel support measure of \( a = h(n\text{ + 1)} \), with h the nodal spacing, generally suffices as a rule of thumb.
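For quick reference, the count and the rule of thumb above can be evaluated with a few lines; the helper names are hypothetical.

```python
import math


def required_kernels(n, d):
    """Number of covering nodal kernels required: (n + d)! / (n! d!)."""
    return math.factorial(n + d) // (math.factorial(n) * math.factorial(d))


def rule_of_thumb_support(h, n):
    """Kernel support measure a = h (n + 1) for nodal spacing h and basis order n."""
    return h * (n + 1)


print(required_kernels(1, 2), required_kernels(2, 3), rule_of_thumb_support(0.1, 2))
```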

It is important to note that when the quadrature in (90) is the same as in (91), nth order accuracy is maintained [5]. The construction in (90)–(91) can also be derived by using the Taylor expansion procedure in Sect. 3 (the derivation is omitted here), or can be derived by enforcing the reproducing conditions directly on \( \varPsi_{J} \), both of which can also demonstrate that the discretized form possesses nth order accuracy. The interested reader is referred to the literature for these procedures, e.g., [1], and for discussions on quadrature, see [5].

The set of shape functions \( \varPsi_{J} \left( {\mathbf{x}} \right) \) satisfies the so-called nth order reproducing conditions, i.e., possesses nth order completeness:

or equivalently, as it is often expressed and employed for better conditioning of the moment matrix:

As an alternative to the construction in (90)–(91), determination of quadrature weights may be avoided by constructing the so-called discrete RK approximation [62], which directly imposes (92) on a corrected kernel function.
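The reproducing conditions can be verified with a short one-dimensional sketch. It assumes a linear basis \( {\mathbf{H}}(\xi ) = [1,\xi ]^{\text{T}} \) with \( \xi = x_{J} - x \), a cubic B-spline kernel, uniform nodes with spacing h = 0.1, and \( a = h(n + 1) = 0.2 \); the shape function is taken in the commonly used form \( {\mathbf{H}}^{\text{T}} ({\mathbf{0}}){\mathbf{M}}^{ - 1} (x){\mathbf{H}}(x_{J} - x)\varPhi_{a} (x_{J} - x)V_{J} \), which is an assumption about the layout of (90)–(91).

```python
def cubic_bspline(z):
    """Cubic B-spline kernel on the normalized distance z = |xi| / a."""
    z = abs(z)
    if z <= 0.5:
        return 2.0 / 3.0 - 4.0 * z * z + 4.0 * z ** 3
    if z <= 1.0:
        return 4.0 / 3.0 - 4.0 * z + 4.0 * z * z - 4.0 / 3.0 * z ** 3
    return 0.0


def rk_shape_functions(x, nodes, volumes, a):
    """Linear-basis RK shape functions Psi_J(x) for all nodes (1D)."""
    m11 = m12 = m22 = 0.0                      # entries of the 2x2 discrete moment matrix
    for xJ, V in zip(nodes, volumes):
        xi = xJ - x
        wt = cubic_bspline(xi / a) * V
        m11 += wt
        m12 += xi * wt
        m22 += xi * xi * wt
    det = m11 * m22 - m12 * m12
    b0, b1 = m22 / det, -m12 / det             # b = M^{-1} H(0), with H(0) = [1, 0]^T
    return [(b0 + b1 * (xJ - x)) * cubic_bspline((xJ - x) / a) * V
            for xJ, V in zip(nodes, volumes)]


nodes = [0.1 * J for J in range(11)]
volumes = [0.1] * 11
psi = rk_shape_functions(0.37, nodes, volumes, a=0.2)
unity = sum(psi)                                        # 0th order reproduction
linear = sum(p * xJ for p, xJ in zip(psi, nodes))       # 1st order reproduction
print(unity, linear)
```

Since the same nodal weights enter both the moment matrix and the shape functions, the conditions hold at any evaluation point where the moment matrix is invertible.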

5.2 Discrete implicit gradient approximation

Analogous to the case of the discrete RK approximation (90), a discrete implicit gradient approximation can be obtained by employing quadrature on (20) at nodal locations:

where \( {\mathbf{M}}({\mathbf{x}}) \) is the same discrete moment matrix in (91). It is apparent that as in the continuous case, as a special case of (94) with \( |\alpha | = 0 \) we obtain (90), that is \( \varPsi_{J}^{{\text{(0,0,0)}}} \left( {\mathbf{x}} \right) = \varPsi_{J} \left( {\mathbf{x}} \right) \).

So long as the moment matrix is discretized with the same quadrature in (94), the gradients also enjoy nth order accuracy, which can be confirmed by deriving (94) from a Taylor expansion point of view (again the derivation is omitted here), as in the RK approximation, or by directly enforcing gradient reproducing conditions on \( \varPsi_{J}^{(\alpha )} \).

It can be shown that the derivative approximations (94) enjoy gradient completeness [55]:

Or again, analogous to (22),

If desired, quadrature weights in implicit gradients may also be avoided by employing a discrete implicit gradient approximation [55], which directly imposes (95) on a corrected kernel.

One final point to note, which will be revisited, is that given a discrete set of scattered data \( {\{ }u_{J} {\} }_{J = 1}^{NP} \), an approximation to derivatives \( \partial^{\alpha } u \) can be obtained at any given point \( {\mathbf{x}} \) of interest using the implicit gradient approximation, providing a smooth field of derivative estimates in the entire domain.
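This use of the implicit gradient on scattered data can be sketched in one dimension. The sketch assumes a quadratic basis (n = 2) in the shifted coordinate \( \xi = x_{J} - x \), a cubic B-spline kernel, unit nodal weights, and the vector \( {\mathbf{H}}^{(\alpha )} \) with the single non-zero entry \( \alpha ! \); the data and function names are hypothetical.

```python
import math


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def cubic_bspline(z):
    z = abs(z)
    if z <= 0.5:
        return 2.0 / 3.0 - 4.0 * z * z + 4.0 * z ** 3
    if z <= 1.0:
        return 4.0 / 3.0 - 4.0 * z + 4.0 * z * z - 4.0 / 3.0 * z ** 3
    return 0.0


def implicit_gradient(x, alpha, nodes, values, volumes, a, n=2):
    """Estimate the alpha-th derivative at x from scattered nodal values (1D)."""
    size = n + 1
    M = [[0.0] * size for _ in range(size)]          # discrete moment matrix
    for xJ, V in zip(nodes, volumes):
        xi = xJ - x
        H = [xi ** k for k in range(size)]
        wt = cubic_bspline(xi / a) * V
        for i in range(size):
            for j in range(size):
                M[i][j] += H[i] * H[j] * wt
    H_alpha = [float(math.factorial(alpha)) if k == alpha else 0.0 for k in range(size)]
    b = solve(M, H_alpha)
    total = 0.0
    for xJ, uJ, V in zip(nodes, values, volumes):
        xi = xJ - x
        psi = sum(b[k] * xi ** k for k in range(size)) * cubic_bspline(xi / a) * V
        total += psi * uJ                             # gradient shape function times datum
    return total


nodes = [0.0, 0.12, 0.2, 0.33, 0.4, 0.52, 0.6, 0.71, 0.8, 0.9, 1.0]   # non-uniform
values = [xJ ** 2 for xJ in nodes]                                    # samples of u = x^2
weights = [1.0] * len(nodes)
du = implicit_gradient(0.37, 1, nodes, values, weights, a=0.3)
d2u = implicit_gradient(0.37, 2, nodes, values, weights, a=0.3)
print(du, d2u)
```

The evaluation point 0.37 is not a node, which illustrates that derivative estimates are available anywhere the moment matrix is invertible.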

5.3 Deformation gradient under discrete implicit gradient approximation

The discretization of the deformation gradient under the implicit gradient approximation (45) is calculated in the Lagrangian coordinates in a similar fashion as the continuous case:

where \( {\mathbf{H}}_{j}^{\nabla } \) is the same vector in (46).

Since the deformation gradient is constructed by discrete implicit gradients with nth order accuracy (or nth order consistency), it can again be directly inferred that the discrete deformation gradient (97) possesses nth order accuracy, without the need for additional analysis.

5.4 The discrete deformation gradient under peridynamics

To discretize (47)–(49), nodal quadrature at NP nodes is employed in the meshfree implementation [24], similar to the discrete RK approximation and discrete implicit gradient approximation:

The discretized non-local deformation gradient is calculated from the above quantities as

Again, for notational simplicity, depending on the context it should be understood whether \( {\mathbf{K}} \), \( {\mathbf{S}} \) or \( {\boldsymbol{{\mathcal{F}}}} \) is the continuous or discrete form.
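A minimal two-dimensional sketch of the nodal quadrature described above is given below. It assumes the standard correspondence forms \( {\mathbf{K}} = \sum_{J} w_{\delta } \,{\boldsymbol{\xi }}_{J} \otimes {\boldsymbol{\xi }}_{J} V_{J} \), \( {\mathbf{S}} = \sum_{J} w_{\delta } ({\mathbf{x}}_{J} - {\mathbf{x}}) \otimes {\boldsymbol{\xi }}_{J} V_{J} \) with \( {\boldsymbol{\xi }}_{J} = {\mathbf{X}}_{J} - {\mathbf{X}} \), and \( {\boldsymbol{{\mathcal{F}}}} = {\mathbf{S}}{\mathbf{K}}^{ - 1} \), which is consistent with (115). The conical influence function, the perturbed grid, the uniform nodal volumes, and the function names are illustrative assumptions.

```python
from math import sin, cos, hypot

def influence(r, delta):
    """Conical influence function w_delta (an assumed choice)."""
    return max(0.0, 1.0 - r / delta)

def deformation_gradient(I, X, x, V, delta):
    """Non-local deformation gradient F = S K^{-1} at node I (2D)."""
    K = [[0.0, 0.0], [0.0, 0.0]]  # undeformed shape tensor
    S = [[0.0, 0.0], [0.0, 0.0]]  # deformed shape tensor
    for XJ, xJ, VJ in zip(X, x, V):
        dX = (XJ[0] - X[I][0], XJ[1] - X[I][1])  # reference bond
        dx = (xJ[0] - x[I][0], xJ[1] - x[I][1])  # deformed bond
        w = influence(hypot(dX[0], dX[1]), delta) * VJ
        for i in range(2):
            for j in range(2):
                K[i][j] += w * dX[i] * dX[j]
                S[i][j] += w * dx[i] * dX[j]
    det = K[0][0] * K[1][1] - K[0][1] * K[1][0]
    Kinv = [[K[1][1] / det, -K[0][1] / det], [-K[1][0] / det, K[0][0] / det]]
    return [[S[i][0] * Kinv[0][j] + S[i][1] * Kinv[1][j] for j in range(2)]
            for i in range(2)]

# Perturbed 6 x 6 grid of reference positions (illustrative, non-uniform).
X = [(0.1 * i + 0.03 * sin(7 * i + 3 * j), 0.1 * j + 0.03 * cos(5 * i + 11 * j))
     for i in range(6) for j in range(6)]
V = [0.01] * len(X)

# Homogeneous deformation x = A X + c.
A = [[1.10, 0.20], [-0.05, 0.95]]
x = [(A[0][0] * p[0] + A[0][1] * p[1] + 0.3,
      A[1][0] * p[0] + A[1][1] * p[1] - 0.1) for p in X]

F = deformation_gradient(21, X, x, V, delta=0.25)  # node 21 is interior
max_err = max(abs(F[i][j] - A[i][j]) for i in range(2) for j in range(2))
print(F, max_err)
```

For a homogeneous deformation \( {\mathbf{S}} = {\mathbf{A}}{\mathbf{K}} \), so the sketch recovers \( {\mathbf{A}} \) to round-off on an irregular point set, which is the first-order (linear) exactness discussed in the analysis that follows.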

5.5 Analysis of the discrete deformation gradient under peridynamics

Following the procedures in the continuous case in Sect. 3.6, a Taylor expansion on the displacement in (98)–(99) obtained from \( {\mathbf{x}}({\mathbf{X}}) = {\mathbf{X}} + {\mathbf{u}}({\mathbf{X}}) \) yields the discrete expression:

In order to interpret the implications of (100), first consider the case of a uniform discretization, away from the boundary, with symmetric influence functions. The fourth term on the right hand side is an even function (centred around \( {\mathbf{X}} \)) and will be non-zero due to the symmetry and constant nodal weights. Under these conditions, the following is obtained:

In the same situation, the third term disappears, but this time only at nodal locations due to the discrete quadrature, as the terms being summed will only cancel when they are “anti-symmetric” (which only occurs at nodal locations):

This also indicates that, in the general case, the third term will be non-zero unless the combination of influence functions, nodal quadrature weights, and so on is carefully selected, as was done in [52]; such a selection is difficult to generalize to non-uniform discretizations.

Finally, in the case of a non-uniform discretization, or even in a uniform discretization near the boundary or away from nodal locations, the third term in (100) will not disappear and the expression reduces to

Thus the discrete and continuous forms share a similar order of accuracy and similar behavior: in the best case both are second-order accurate, and in the general case both are first-order accurate.

In summary, the same order of accuracy is attained for both continuous and discrete versions of implicit gradients, yet the continuous and discrete versions of the non-local deformation gradient in peridynamics slightly differ. That is, the accuracy is second-order in the best case (away from the boundary, uniform discretizations, symmetric influence functions), but in the discrete case second-order accuracy can only be obtained at the nodes in this situation. In the general case, the constructions are first-order accurate, for both integral and discrete forms. These situations can be rectified with the proposed generalized discrete formulation given in Sect. 6.1.
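The three situations summarized above can be checked with a small one-dimensional experiment. The sketch assumes the 1D form of the discrete peridynamic gradient, \( \sum_{J} w_{\delta } \xi_{J} [u(X_{J} ) - u(X)]V_{J} /\sum_{J} w_{\delta } \xi_{J}^{2} V_{J} \) with \( \xi_{J} = X_{J} - X \), a conical influence function, a horizon of \( \delta = 3h \), and uniform weights \( V_{J} = h \). These choices and the function names are illustrative only.

```python
from math import log2

def influence(xi, delta):
    """Conical influence function, symmetric in xi (an assumed choice)."""
    return max(0.0, 1.0 - abs(xi) / delta)

def pd_derivative(X, u, nodes, V, delta):
    """Discrete peridynamic estimate of du/dX at X (1D); needs u(X) itself."""
    K = S = 0.0
    for XJ, VJ in zip(nodes, V):
        xi = XJ - X
        w = influence(xi, delta) * VJ
        K += w * xi * xi
        S += w * xi * (u(XJ) - u(X))
    return S / K

def errors(h):
    """Errors on a uniform grid of spacing h over [0, 1] with delta = 3h."""
    N = round(1.0 / h)
    nodes = [J * h for J in range(N + 1)]
    V = [h] * (N + 1)
    delta = 3.0 * h
    quad, dquad = (lambda X: X**2), (lambda X: 2.0 * X)
    cubic, dcubic = (lambda X: X**3), (lambda X: 3.0 * X**2)
    err = lambda X, u, du: abs(pd_derivative(X, u, nodes, V, delta) - du(X))
    return {
        "interior node, quadratic": err(0.5, quad, dquad),
        "off-node, quadratic": err(0.5 + 0.3 * h, quad, dquad),
        "boundary node, quadratic": err(1.0, quad, dquad),
        "interior node, cubic": err(0.5, cubic, dcubic),
    }

e1, e2 = errors(0.05), errors(0.025)
rate_boundary = log2(e1["boundary node, quadratic"] / e2["boundary node, quadratic"])
rate_interior = log2(e1["interior node, cubic"] / e2["interior node, cubic"])
for name in e1:
    print(name, e1[name], e2[name])
print("observed rates:", rate_boundary, rate_interior)
```

In this setting the quadratic field is differentiated exactly at an interior node, because the odd moment \( \sum_{J} w_{\delta } \xi_{J}^{3} V_{J} \) cancels there. The same field produces a non-zero error between nodes and at the boundary, where the observed rate is one. The cubic field at the interior node gives a rate of two.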

5.6 Comparison between discrete implicit gradients and peridynamics

In order to facilitate a comparison between the discrete non-local deformation gradient by peridynamics and the discrete RK approximation, we first express the discrete shape tensors (98) in matrix form:

As before, the undeformed shape tensor is coincident with the Lagrangian RK discrete moment matrix (91) with \( a = \delta \), \( \varPhi_{a} = w_{\delta } \), and linear basis, but omitting the unity term in the vector \( {\mathbf{H}}({\mathbf{X}}) \).

Following the procedures in Sect. 3.7, the discrete non-local deformation gradient can be rearranged and expressed as:

For comparison, the local deformation gradient \( F_{ij} \) calculated by discrete implicit gradients (94) with \( a = \delta \), and \( \varPhi_{a} = w_{\delta } \) can be expressed as:

It can be seen that in the discrete case, if \( {\mathbf{P}}({\mathbf{X}}) \) were employed in the implicit gradient approximation, the partition of nullity could again not be satisfied. This fact is again “compensated for” in the discrete peridynamic gradient by the summation with \( \left[ {u_{i} ({\mathbf{X}}_{J} ) - u_{i} ({\mathbf{X}})} \right] \) rather than \( u_{i} ({\mathbf{X}}_{J} ) \) alone. That is, if \( {\mathbf{u}} \) is constant, then the discrete non-local deformation gradient still yields the correct result of \( \mathcal{F}_{ij} = I_{ij} \).
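The role of the difference \( u_{i} ({\mathbf{X}}_{J} ) - u_{i} ({\mathbf{X}}) \) can be seen in a short one-dimensional sketch. It assumes the 1D weights \( \varPsi_{J} = \xi_{J} w_{\delta } (\xi_{J} )V_{J} /K \), that is, a linear basis without the unity term, on a non-uniform node set. The node positions, the conical influence function, and the variable names are illustrative.

```python
def influence(xi, delta):
    """Conical influence function (an assumed choice)."""
    return max(0.0, 1.0 - abs(xi) / delta)

# Non-uniform nodes; the gradient is evaluated at node I.
nodes = [0.0, 0.08, 0.21, 0.3, 0.44, 0.5, 0.61, 0.7, 0.83, 0.9, 1.0]
V = [0.1] * len(nodes)
delta, I = 0.25, 5
u = [5.0] * len(nodes)  # constant field, so the exact gradient is zero

xi = [XJ - nodes[I] for XJ in nodes]
w = [influence(z, delta) * VJ for z, VJ in zip(xi, V)]
K = sum(wJ * z * z for wJ, z in zip(w, xi))       # 1D shape tensor
psi = [z * wJ / K for z, wJ in zip(xi, w)]        # gradient weights, no unity term

sum_psi = sum(psi)                                          # partition of nullity?
grad_plain = sum(p * uJ for p, uJ in zip(psi, u))           # uses u_J alone
grad_difference = sum(p * (uJ - u[I]) for p, uJ in zip(psi, u))  # uses u_J - u_I
print(sum_psi, grad_plain, grad_difference)
```

On this node set the weights do not sum to zero, so summing with \( u_{J} \) alone returns a spurious non-zero gradient for the constant field, whereas the difference form returns zero.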

Another interesting point is that the gradient approximation (105) does not allow a continuous gradient field to be represented from a discrete set of nodal data: when the quantity is computed away from the discrete points \( {\mathbf{X}}_{J} \) with known solutions \( u_{i} ({\mathbf{X}}_{J} ) \) (e.g., from scattered data or a PDE), the value \( u_{i} ({\mathbf{X}}) \) is unknown. This is a serious drawback of the approximation, as it can only yield gradient estimates at the scattered data points themselves and does not provide an interpolation function for the data. Thus, formally, the peridynamic approximation cannot provide a smooth field at all points \( {\mathbf{X}} \) in the domain given a finite set of nodal coefficients \( {\{ }u_{J} {\} }_{J = 1}^{NP} \). It can, however, provide an estimate of derivatives given a function \( u({\mathbf{X}}) \) defined in the entire domain. Finally, it can be noted that it would be possible to interpolate the derivative estimates at the nodes, although this introduces some additional complexity.

6 Reproducing kernel peridynamic approximation

In this section, the discrete form of the reproducing kernel peridynamic approximation is given. High-order non-local discrete deformation gradients and non-local divergence operations are derived, as well as several other discrete approximations. The order of accuracy of these operators is also verified numerically.

6.1 Reproducing kernel peridynamic approximation

Similar to the continuous case, the operations for the discrete approximation of the gradient by peridynamics and implicit gradients can be unified as follows. First, consider a discrete approximation of the type (94) with a basis of monomials from order m to n to estimate gradients of a scalar field \( u\left( {\mathbf{X}} \right) \), with use of \( u({\mathbf{X}}_{J} ) - u({\mathbf{X}}) \) rather than \( u({\mathbf{X}}_{J} ) \) as in (105):

where \( {\mathbf{Q}}_{[m,n]} ({\mathbf{X}}) \) is the same column vector of the set of monomials \( \{ {\mathbf{X}}^{\beta } \}_{|\beta | = m}^{n} \) as the continuous case. To facilitate nth order accuracy in this approximation, taking the Taylor expansion on \( u\left( {{\mathbf{X}}_{J} } \right) \) in (7) yields:

where \( {\bar{\mathbf{D}}}({\mathbf{X}}) \) and \( {\bar{\mathbf{J}}} \) are the same vectors and matrices in the continuous case (64). Examining (108), it is apparent that in order to reproduce gradients up to nth order accuracy, we have the following discrete vanishing moment conditions:

where \( {\mathbf{Q}}_{[m,n]}^{{(\alpha )}} \) is again a column vector of \( \{ \alpha !\delta_{\alpha \beta } \}_{|\beta | = m}^{n} \). As before, when m = 0 and n > 0 the system is underdetermined and an additional condition is required for determining \( {\bar{\mathbf{b}}}^{(\alpha )} \). Imposing the partition of nullity on (107) in the case of m = 0 and n > 0:

the system (109) can then be recast with (110) in hand to yield a determined system for all free variables:

where

A unified discrete approximation is obtained by solving for \( {\bar{\mathbf{b}}}^{(\alpha )} \) from (111) and substituting into (107):

The approximation in (113) for derivatives is termed the reproducing kernel peridynamic approximation herein. Particular selections of the values of \( \omega_{l} \), \( |\alpha | \), n, and m yield both the discrete implicit gradient approximation and the manner in which the deformation gradient is approximated by the discretized version of peridynamics. As before, two additional approximations can also be obtained, which are termed the nth order non-local deformation gradient and the nth order non-local higher order derivatives herein:

1. When \( |\alpha | > 0 \), m = 0, \( \omega_{l} = \varPhi_{a} \), and n is a free variable, (113) yields the discrete implicit gradient approximation (94), since \( {\mathbf{Q}}_{[0,n]} ({\mathbf{x}}) = {\mathbf{H}}({\mathbf{x}}) \) and \( {\mathbf{Q}}_{[0,n]}^{{(\alpha )}} ({\mathbf{x}}) = {\mathbf{H}}^{{(\alpha )}} \). Further, since m = 0, the discrete partition of nullity is satisfied by the imposition of (110), and the approximation yields:

$$ \begin{aligned} \left[ {{\bar{\mathcal{D}}}_{[n]}^{h} } \right]^{{(\alpha )}} \left\{ {u\left( {\mathbf{X}} \right)} \right\} & = \sum\limits_{J = 1}^{NP} {\bar{\varPsi }_{[0,n]J}^{{(\alpha )}} \left( {\mathbf{x}} \right)\left[ {u\left( {{\mathbf{X}}_{J} } \right) - u\left( {\mathbf{X}} \right)} \right]} \\ & = \sum\limits_{J = 1}^{NP} {\bar{\varPsi }_{[0,n]J}^{{(\alpha )}} \left( {\mathbf{x}} \right)u\left( {{\mathbf{X}}_{J} } \right)} - u\left( {\mathbf{X}} \right)\underbrace {{\sum\limits_{J = 1}^{NP} {\bar{\varPsi }_{[0,n]J}^{{(\alpha )}} \left( {\mathbf{x}} \right)} }}_{0} \\ & = \sum\limits_{J = 1}^{NP} {\bar{\varPsi }_{[0,n]J}^{{(\alpha )}} \left( {\mathbf{x}} \right)u\left( {{\mathbf{X}}_{J} } \right)} \\ & = \left[ {{\mathcal{D}}_{[n]}^{h} } \right]^{{(\alpha )}} \left\{ {u\left( {\mathbf{x}} \right)} \right\} \\ \end{aligned} $$ (114)

2. When m = 1, n = 1, \( \omega_{l} = w_{\delta } \), and \( |\alpha | = 1 \) (approximating first order derivatives only), (113) yields the discrete peridynamic deformation gradient (105) when approximating the derivative of \( u_{i} \), since \( {\mathbf{Q}}_{[1,1]} ({\mathbf{X}}) = {\mathbf{P}}({\mathbf{X}}) \), \( {\bar{\mathbf{M}}}_{[1,1]} ({\mathbf{X}}) = {\mathbf{K}}({\mathbf{X}}) \), and when taking the derivative with respect to \( X_{j} \) we have \( {\mathbf{Q}}_{[1,1]}^{{(\delta_{j1} {\text{,}}\delta_{j2} {\text{,}}\delta_{j3} )}} = {\mathbf{P}}_{j}^{\nabla } \), and thus

$$ \begin{aligned} F_{ij} ({\mathbf{X}}) & \simeq I_{ij} + \left[ {{\bar{\mathcal{D}}}_{[1]}^{h} } \right]^{{(\delta_{j1} {\text{,}}\delta_{j2} {\text{,}}\delta_{j3} )}} \left\{ {u_{i} ({\mathbf{X}})} \right\} \\ & = I_{ij} + \sum\limits_{J = 1}^{NP} {\left[ {{\mathbf{P}}_{j}^{\nabla } } \right]^{\text{T}} {\mathbf{K}}^{{ - \text{1}}} ({\mathbf{X}}){\mathbf{P}}({\mathbf{X}}_{J} - {\mathbf{X}}) \times w_{\delta } ({\mathbf{X}}_{J} - {\mathbf{X}})\left[ {u_{i} ({\mathbf{X}}_{J} ) - u_{i} ({\mathbf{X}})} \right]V_{J} } \\ & = {\mathcal{F}}_{ij} ({\mathbf{X}}) \\ \end{aligned} $$ (115)

3. When m = 1, \( |\alpha | = 1 \), \( \omega_{l} = w_{\delta } \), and n > 1 is a free parameter, when taking the gradient of the displacement \( u_{i} \), (113) yields an nth order accurate non-local deformation gradient denoted \( {\boldsymbol{{\mathcal{F}}}}_{[n]} \) herein:

$$ \left[ {{\boldsymbol{{\mathcal{F}}}}_{[n]} } \right]_{ij} ({\mathbf{X}}) = I_{ij} + \left[ {{\bar{\mathcal{D}}}_{[n]}^{h} } \right]^{{(\delta_{j1} {\text{,}}\delta_{j2} {\text{,}}\delta_{j3} )}} \left\{ {u_{i} ({\mathbf{X}})} \right\} $$ (116)

where

$$ \begin{aligned} \left[ {{\bar{\mathcal{D}}}_{[n]}^{h} } \right]^{{(\delta_{j1} {\text{,}}\delta_{j2} {\text{,}}\delta_{j3} )}} \left\{ {u_{i} ({\mathbf{X}})} \right\} & = \sum\limits_{J = 1}^{NP} \left[ {{\mathbf{Q}}_{j}^{\nabla } } \right]^{\text{T}} {\bar{\mathbf{M}}}_{[1,n]}^{ - 1} \left( {\mathbf{X}} \right) \\ & \quad \times {\mathbf{Q}}_{[1,n]} \left( {{\mathbf{X}}_{J} - {\mathbf{X}}} \right)w_{\delta } \left( {{\mathbf{X}}_{J} - {\mathbf{X}}} \right)\left[ {u_{i} \left( {{\mathbf{X}}_{J} } \right) - u_{i} \left( {\mathbf{X}} \right)} \right]V_{J} \end{aligned} $$ (117)

and \( {\mathbf{Q}}_{j}^{\nabla } \) is the vector in (75).

4. When m = 1, \( |\alpha | > 1 \), and n is a free variable, choosing \( \omega_{l} = w_{\delta } \), (113) yields nth order accurate non-local higher order derivatives:

$$ \begin{aligned} \left[ {{\bar{\mathcal{D}}}_{[n]}^{h} } \right]^{{(\alpha )}} \left\{ {u\left( {\mathbf{X}} \right)} \right\} & = \sum\limits_{J = 1}^{NP} \left( {{\mathbf{Q}}_{[1,n]}^{{(\alpha )}} } \right)^{\text{T}} {\bar{\mathbf{M}}}_{[1,n]}^{ - 1} \left( {\mathbf{X}} \right) \\ & \quad \times {\mathbf{Q}}_{[1,n]} \left( {{\mathbf{X}}_{J} - {\mathbf{X}}} \right)w_{\delta } \left( {{\mathbf{X}}_{J} - {\mathbf{X}}} \right)\left[ {u\left( {{\mathbf{X}}_{J} } \right) - u\left( {\mathbf{X}} \right)} \right]V_{J} ,\;\;\;|\alpha | > 1 \end{aligned} $$ (118)

As before, a general expression for discrete nth order accurate non-local derivatives can be found by setting m = 1 and \( \omega_{l} = w_{\delta } \) in (113):

$$ \begin{aligned} \left[ {{\bar{\mathcal{D}}}_{[n]}^{h} } \right]^{{(\alpha )}} \left\{ {u\left( {\mathbf{X}} \right)} \right\} & = \sum\limits_{J = 1}^{NP} \left( {{\mathbf{Q}}_{[1,n]}^{{(\alpha )}} } \right)^{\text{T}} {\bar{\mathbf{M}}}_{[1,n]}^{ - 1} \left( {\mathbf{X}} \right) \\ & \quad \times {\mathbf{Q}}_{[1,n]} \left( {{\mathbf{X}}_{J} - {\mathbf{X}}} \right)w_{\delta } \left( {{\mathbf{X}}_{J} - {\mathbf{X}}} \right)\left[ {u\left( {{\mathbf{X}}_{J} } \right) - u\left( {\mathbf{X}} \right)} \right]V_{J} . \end{aligned} $$ (119)

It should be noted that, similar to the discrete deformation gradient in (99), this approximation cannot yield a continuous field of derivative estimates from a set of data \( {\{ }u_{J} {\} }_{J = 1}^{NP} \), since \( u\left( {\mathbf{X}} \right) \) would be required at points other than the nodal positions. This again places a limitation on the approximation. However, derivative approximations can be obtained at the nodal positions \( {\mathbf{X}}_{I} \) themselves as:

$$ \begin{aligned} \left. {\left[ {{\bar{\mathcal{D}}}_{[n]}^{h} } \right]^{{(\alpha )}} \left\{ {u\left( {\mathbf{X}} \right)} \right\}} \right|_{{{\mathbf{X}} = {\mathbf{X}}_{I} }} & = \sum\limits_{J = 1}^{NP} \left( {{\mathbf{Q}}_{[1,n]}^{{(\alpha )}} } \right)^{\text{T}} {\bar{\mathbf{M}}}_{[1,n]}^{ - 1} \left( {{\mathbf{X}}_{I} } \right) \\ & \quad \times {\mathbf{Q}}_{[1,n]} \left( {{\mathbf{X}}_{J} - {\mathbf{X}}_{I} } \right)w_{\delta } \left( {{\mathbf{X}}_{J} - {\mathbf{X}}_{I} } \right)\left[ {u_{J} - u_{I} } \right]V_{J} \end{aligned} $$ (120)

with \( u_{J} \equiv u\left( {{\mathbf{X}}_{J} } \right) \).

In contrast, the implicit gradient approximation (94), as well as the direct derivative of the RK approximation (90), can yield an approximation to derivatives of a field at every point, constructed from a set of scattered nodal data \( {\{ }u_{J} {\} }_{J = 1}^{NP} \). For the construction in (119), a form of post-processing or reinterpolation would be involved.
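The nodal formula (120) can be sketched in one dimension as follows. The sketch assumes that \( {\bar{\mathbf{M}}}_{[m,n]} ({\mathbf{X}}_{I} ) = \sum_{J} {\mathbf{Q}}_{[m,n]} (\xi_{J} ){\mathbf{Q}}_{[m,n]}^{\text{T}} (\xi_{J} )\omega_{l} (\xi_{J} )V_{J} \) with \( \xi_{J} = X_{J} - X_{I} \), which is the moment matrix implied by the conditions stated above rather than a form quoted from (112). The conical influence function, the node set, and the names `rkpd_shapes` and `rkpd_derivative` are illustrative assumptions.

```python
from math import factorial

def influence(xi, delta):
    """Conical influence function (an assumed choice)."""
    return max(0.0, 1.0 - abs(xi) / delta)

def solve(A, rhs):
    """Solve A y = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(A[i]) + [rhs[i]] for i in range(n)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        for r in range(c + 1, n):
            f = aug[r][c] / aug[c][c]
            for k in range(c, n + 1):
                aug[r][k] -= f * aug[c][k]
    y = [0.0] * n
    for r in reversed(range(n)):
        s = sum(aug[r][k] * y[k] for k in range(r + 1, n))
        y[r] = (aug[r][n] - s) / aug[r][r]
    return y

def rkpd_shapes(I, nodes, V, delta, n, alpha, m=1):
    """Nodal weights multiplying (u_J - u_I) in (120), 1D, monomials of order m..n."""
    Q = lambda xi: [xi**k for k in range(m, n + 1)]
    size = n - m + 1
    bonds = [(XJ - nodes[I], influence(XJ - nodes[I], delta) * VJ)
             for XJ, VJ in zip(nodes, V)]
    M = [[0.0] * size for _ in range(size)]
    for xi, w in bonds:
        q = Q(xi)
        for i in range(size):
            for j in range(size):
                M[i][j] += q[i] * q[j] * w
    Q_alpha = [float(factorial(alpha)) if k == alpha else 0.0 for k in range(m, n + 1)]
    b = solve(M, Q_alpha)  # M is symmetric, so b^T = Q_alpha^T M^{-1}
    return [sum(bk * qk for bk, qk in zip(b, Q(xi))) * w for xi, w in bonds]

def rkpd_derivative(I, nodes, u, V, delta, n, alpha, m=1):
    """Estimate of the alpha-th derivative at node I from nodal data u."""
    psi = rkpd_shapes(I, nodes, V, delta, n, alpha, m)
    return sum(p * (uJ - u[I]) for p, uJ in zip(psi, u))

nodes = [0.0, 0.08, 0.21, 0.3, 0.44, 0.5, 0.61, 0.7, 0.83, 0.9, 1.0]
V = [0.1] * len(nodes)
delta, I = 0.35, 5
u = [X**3 - 2.0 * X**2 for X in nodes]   # u' = 3X^2 - 4X, u'' = 6X - 4

d1_n1 = rkpd_derivative(I, nodes, u, V, delta, n=1, alpha=1)  # case 2
d1_n3 = rkpd_derivative(I, nodes, u, V, delta, n=3, alpha=1)  # case 3
d2_n3 = rkpd_derivative(I, nodes, u, V, delta, n=3, alpha=2)  # case 4

# Reference: 1D peridynamic gradient sum(w xi (u_J - u_I) V_J) / K.
xi = [XJ - nodes[I] for XJ in nodes]
w = [influence(z, delta) * VJ for z, VJ in zip(xi, V)]
pd_reference = (sum(wJ * z * (uJ - u[I]) for wJ, z, uJ in zip(w, xi, u))
                / sum(wJ * z * z for wJ, z in zip(w, xi)))

# Case 1 (m = 0): the weights sum to zero, so u_J - u_I may be replaced by u_J.
nullity_m0 = sum(rkpd_shapes(I, nodes, V, delta, n=2, alpha=1, m=0))
print(d1_n1, d1_n3, d2_n3, pd_reference, nullity_m0)
```

With n = 1 the sketch coincides with the peridynamic gradient and is inexact for the cubic field on this non-uniform node set, while n = 3 recovers the exact first and second derivatives (−1.25 and −1 at X = 0.5) to round-off. The monomials are left unscaled for clarity; scaling \( \xi_{J} \) by \( \delta \) would be a natural way to improve the conditioning of the moment matrix for larger n.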