Abstract

Acoustic signals play an essential role in machine state monitoring. Efficient processing of real-time machine acoustic signals improves production quality. However, generating semantically useful information from sound signals is an ill-defined problem that exhibits a highly non-linear relationship between sound and subjective perceptions. This paper outlines two neural network models to analyze and classify acoustic signals emanating from machines: (i) a backpropagation neural network (BP-NN); and (ii) a convolutional neural network (CNN). Microphones are used to collect acoustic data for training models from a computer numeric control (CNC) lathe. Numerical experiments demonstrate that CNN performs better than the BP-NN.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Auscultation is the act of listening to the internal sounds of organs such as the heart and lungs for diagnosing pathological disorders. Health professionals (doctors and nurses) develop pronounced auscultation skills through substantial clinical practice and can easily link body sounds with specific pathological disorders. This paper outlines the concept of machine auscultation, analogous to auscultation, which identifies abnormal sounds emanating from machines with a time resolution better than one second just as a human health professional can recognize abnormal sounds using a stethoscope.

Generating semantically useful information from sound signals is an ill-defined problem that exhibits a highly non-linear relationship between sound and subjective perception [1]. There are several challenges to develop appropriate automated sound recognition based on reliable machine perception. The biggest challenge in deciphering insightful information from sound bites is that time-structure sound signals are masked by time dilation and compression. In other words, the duration of the same sound pattern such as machine chatter can vary by a factor of ten or more. If the focus is on classification, in some cases, the sound signal properties of subclasses in a particular class are similar to the subclasses in other classes while being different from subclasses in its own class. This creates interclass similarity and increases the complexity of the problem [2]. This problem is further exacerbated by the fact that real-world environments such as shop floor induce noise because of the presence of multiple non-target sound sources [3]. Noise and flat spectrum features always influence the accuracy ratio of the final result. In addition, the number and complexity of sound classes, including the amount of training data, also have a direct influence on the performance of sound recognition systems.

As an illustrative example of the machine auscultation concept, we focus on the task of chatter detection. Chatter, which is caused by machine vibration, is the relative movement between a workpiece and a cutting tool. This can occur during multiple machine processes such as milling, drilling, and grinding. Chatter can significantly deteriorate surface quality and reduce tool life in the computer numeric control (CNC) machines [4]. It is caused because of process nonlinearities [5]. Therefore, chatter identification, detection, and prevention are the most important tasks in the manufacturing process [6]. In addition, our focus is on determining machining parameters such as cut depth, feed rate, and spindle speed that produce chatter. Understanding the non-linear relationships between machining parameters and chatter can help in the proper selection of machining parameters to avoid chatter.

This paper outlines the application of neural networks (NNs) in chatter detection from a large-scale audio dataset. Instead of relying on approaches based on existing heuristics and hand-coded features to address the above-mentioned challenges in enabling machine perception from sound, a data-driven approach that learns features of features to identify semantic audio events such as chatter from spectrogram based on raw audio data pre-processing is the core focus of this paper. The contributions of this paper include the following: (i) a novel framework using backpropagation neural network (BP-NN) and convolutional neural network (CNN) to help understand artificial sounds for machine diagnostic purposes; (ii) training the developed NN learning models using large-scale audio datasets of sound recorded from a machine shop CNC machine termed CNCAudioNet database; (iii) very high classification accuracy of our learned models in recognizing audio events such as chatter and manufacturing parameters including feed rate, spindle speed, and cut depth, drastically outperforming state-of-art methods. The code and dataset related to the outlined work is located at Ref. [7].

Our contributions are motivated by the idea that the application of machine learning techniques to manufacturing processes can serve as the backbone of a digital manufacturing paradigm, thereby leading to increased productivity and product quality. In addition, the created dataset enables the examination of the application of deep-learning frameworks with large audio datasets in the manufacturing domain, which helps in exploiting ideas from performance improvements reported in the field of image classification.

This paper is organized as follows. Section 2 presents a discussion on related studies. Section 3 provides an overview of the main components of the paper. Section 4 introduces an acoustic signal collection method. Section 5 outlines sound/figure pre-processing methods that convert recorded raw acoustic signals to processable data for the BP-NN and CNN, separately. Section 6 explains the architectures of two different machine learning models. Section 7 describes the classification performance of the two presented models and possible future work.

2 Related work

2.1 Chatter detection

Chatter identification has been the focus of reported research in the manufacturing domain. In the last couple of decades, chatter detection has been executed via in-process real-time sensor measurements such as force, displacement, and off-line machined surface characterization [8]. Several sensors such as accelerometers, dynamometers, ammeters, or multi-sensor based approaches have been pursued [9,10,11,12]. Displacement, velocity, and acceleration transducers have a common problem of signal bandwidth alteration because of either the sensor’s frequency response, signal filtering, and sensitivity (as a consequence of remote placement) or the fusion of data from multiple sensors. Unlike our study, which primarily focuses on a single modality such as sound, these techniques either focus on multi-modal signals or completely different modalities.

Sound signals can easily overcome the problem of signal bandwidth alteration and are easier to implement, induce less cost, and can accurately analyze chatter. Delio et al. [13] were the first to report the use of audio signals for chatter detection. However, the study reported in Ref. [13] is limited to a very small dataset and cannot be scaled to a large dataset. Moreover, the results were demonstrated with “clean” datasets in which noise from the background was absent. In addition, the method can detect chatter in only fully developed stages and not at the onset of chatter. Our method addresses all such shortcomings.

Several machine learning algorithms have been used for chatter detection such as artificial neural network (ANN) [14], support vector machine (SVM) [15, 16], fuzzy logic [17], and hidden Markov model (HMM) [18]. All these techniques require feature engineering, which involves the development of handcrafted feature vectors to enable chatter detection. For instance, the use of Hilbert–Huang transform (HHT) as a feature vector was proposed [19]. Albeit, because there are only two features, the mean value and standard deviation, in the HHT spectrum, the proposed method cannot effectively describe the complex characteristics of signals, thereby limiting model accuracy.

Several feature vectors based on spectral analysis [20], s-transform [21], total power and dispersion of the dominant forced and chatter vibration modes [22], coarse-grained entropy rate [23], mutual information, [24] and maximum likelihood (ML) estimation [25] have been developed to process different data types for enabling chatter detection. Feature engineering is an arduous and expensive (in terms of time and expertise) process of creating features that encapsulate the complexity of data, making it easier for machine learning algorithms to detect patterns.

Our method utilizes deep learning algorithms that automatically learn high-level features from data and help improve the accuracy of prediction. In addition, the non-linear relationship between manufacturing parameters such as cut depth, feed rate, and spindle speed also plays a critical role in the onset of chatter.

2.2 CNN

In 1998, Lécun proposed LeNet [26], paving the way for modern CNN. His network comprising of five layers, including convolution layers and pooling layers, could efficiently recognize large image datasets. Restricted by the performance of CPU and GPU, CNN became irrelevant for ten years. After 2012, with advances in GPU performance, AlexNet was proposed by Krizhevsky et al. [27]. They used ReLU as an activation function and added a dropout layer that greatly enhanced the accuracy and speed of CNN. In the next few years, deeper networks were proposed such as VGG [28] and GoogLeNet [29]. All these networks enhanced the performance of object classification. Meanwhile, object detection problems became a common topic with the surge in online databases and repositories. Girshick et al. [30] proposed a R-CNN to detect and recognize features in images. Following this, fast R-CNN [31] and faster R-CNN [32] improved the efficiency and accuracy of structure making object detection problems [33].

CNN-based architectures have also been used for sound and speech recognition. Piczak [34] successfully applied CNN for environmental sound classification tasks. The results presented in the paper indicated that CNN was indeed, a suitable approach for sound classification but demonstrated relatively low accuracy. Boddapatia and Petef [35] utilized two leading CNN architectures for image recognition (AlexNet and GoogLeNet) on the same dataset as Karol on three different sound images: spectrogram; mel-frequency cepstrum coefficients (MFCCs); and cross recurrence plot (CRP). Although the test accuracy of GoogLeNet was shown to be close to 93%, its 22-layer architecture was too complex for sound identification and required further simplification. Fu et al. [36] applied an 11-layer CNN model for monitoring machining vibration states using a vibration-time image without traditional feature engineering. The performances among SVM, NN, and CNN models were compared and CNN exhibited the highest accuracy and lowest overfitting phenomenon. These initial studies demonstrated that CNN was a powerful approach for sound signal classification tasks. However, to the best of our knowledge, CNN-based approaches for chatter detection and proper selection of machining parameters did not exist in the literature.

3 Overview

Our goal in this paper is to demonstrate that machine auscultation can be achieved using a large-scale machine audio dataset. The data acquisition process for enabling machine auscultation is first discussed (see Fig. 1a). Because the acquired data are noisy, a data pre-processing method is utilized for noise attenuation (see Fig. 1b). Data pre-processing, if needed, includes the conversion of raw data into a format such as Mel-spectrogram (Section CNN). Two different machine learning algorithms (i) a BP-NN (see Fig. 1 c1), and (ii) a CNN (see Fig. 1 c2) was trained to enable machine perception. Finally, the test dataset (a subset of acquired data) was used to obtain an objective comparison of the performance of BP and CNN-based machine learning approaches.

4 Acoustic data collection

All experiments were performed on the Bridgeport Romi EZ Path CNC Lathe (see Fig. 2). The CNC lathe was programmed to perform turning operations on round stock alloy steel. The acoustic signals generated during the turning operation in all experiments were recorded using the Behringer microphone (ECM8000). The microphone was connected directly to a laptop, allowing sound samples to be saved by the voice recorder App installed on the laptop. The acoustic signal acquisition was performed in two phases. The first phase focused on the acquisition of a chatter dataset (dataset 1), whereas, the second phase recorded acoustic signals from various work situations, resulting in different combinations of machining parameters (dataset 2).

For chatter dataset generation, the CNC lathe was programmed to settings that intentionally induced chatter during the turning of the round stock. For the machining parameter variation dataset, a latin hypercube sampling (LHS) based design of experiment (DoE) approach was used to generate different combinatorial settings of speed, feed rate, and cut depth. LHS is a statistical method of generating a near-random sample of parameter values from a multidimensional distribution [37].

The range of parameters used for the LHS DoE setting generated for dataset 2 is outlined in Table 1. However, because of material and time limitations, CNC lathe was programmed as a subset of the LHS generated parameter settings (32 samples) to generate acoustic signals during a turning operation. The selected parameters are displayed in Fig. 3. The time for recording chatter sound sample is about 80 min (dataset 1). The recording time for speed, feed rate, and cut depth is 3 h (dataset 2). Each sound sample in dataset 2 includes three features at any given time. The data were stored in *.wav file format for signal pre-processing.

5 Signal pre-processing

5.1 Pre-emphasis

The raw acoustic signals obtained from the CNC lathe are marred by environmental noise, inhibiting the signals from being used directly as input data to the machine learning techniques. In our case, during the data collection process, the noise in the recorded acoustic signals is produced from other CNC machines that are simultaneously operating in conjunction with the CNC lathe from which the acoustic signals were recorded. By pre-processing the raw data, it is possible to generate “cleaner” acoustic signals that can be used as inputs to different machine learning techniques.

The low-frequency component of the sound signal normally has more energy than the high-frequency component. The discriminator output noise power spectral density increases as the square of the frequency (low-frequency noise is small, high-frequency noise is large), results in high signal-to-noise ratio across low frequencies and low signal-to-noise ratio across high frequencies. This makes high-frequency transmission difficult. Pre-emphasis is an effective acoustic signal pre-processing method for addressing this issue [38]. Pre-emphasis essentially is a first-order high-pass digital filter designed to increase the magnitude of higher frequencies with respect to the magnitude of lower frequencies. The high-pass filter makes the overall signal-to-noise ratio more consistent across all sound frequencies and minimizes the adverse effects of phenomena such as attenuation distortion in subsequent parts of the processing pipeline. The transfer function underlying the pre-emphasis high-pass filter used in the outlined work is defined as follows

where a is pre-emphasis coefficient and y is the sound signal.

In the outlined work, the value of a is set to 0.98. As shown in Fig. 4, the application of pre-emphasis based pre-processing methods relatively increases the amplitude of high frequencies as compared to the lower frequencies, thereby enhancing consistency across the entire spectrum.

5.2 BP-NN feature extraction

BP-NN is a technique that is dependent on the extraction of characteristic features from the raw data. Characteristic feature extraction compresses raw data through the use of dimensionality reduction and helps in the detection of the most effective data attributes that make sound signal identification tasks easier to execute.

Feature vectors are usually obtained from segmented sound signals to be used for classification [39]. Feature vectors utilize amplitude, frequency, and energy information of the sound signals. Peak intensity and average amplitude values directly correspond to sound characteristics. Different frequency peaks are obvious features for the classification system to use for prediction [40, 41]. Feature extraction algorithms based on wavelet packet decomposition were proposed in Refs. [42, 43]

In the field of acoustics, amplitude refers to the maximum value that a vibration quantity can acquire in a specific time period and is the most intuitive feature when distinguishing different sounds. In this paper, maximum and average amplitudes are chosen as features.

Frequency is another important feature of sound signal and is defined as the number of acoustic vibrations per second. Major peaks in the frequency and the harmonics are the two features used to predict the working condition of the CNC machines in the outlined work. In this paper, the fast Fourier transform (FFT) method is applied to the original sound image to obtain a spectrogram that extracts useful sound classification features. Based on the approach in Ref. [41], five different types of frequency parameters are selected as frequency feature vectors to train neural network models. These parameters include: P1 is the maximum frequency peak in the time period; P2 is the second harmonic peak; P3 is the third harmonic peak; P4 = P1 + P2; P5 = P1 + P2 + P3.

In addition to amplitude and frequency, energy is another valuable feature. Energy features can be attributed to the recent developments in wavelet transform theory. The traditional theory of signal analysis is primarily based on the Fourier transform (FT). However, the FT suffers from the following disadvantages: (i) limitations of localization analysis; and (ii) inability to analyze non-stationary signals. To address these limitations, researchers are improving application methods by short time Fourier transforms (STFT). Unfortunately, the sliding window function of STFT is fixed. Fixed sliding window causes the frequency resolution to be bounded during the signal analysis process. A wavelet transform can overcome this problem. Wavelet analysis is an emerging branch of mathematics that is a perfect addition to Fourier analysis, harmonic analysis, and numerical analysis. In the fields of signal processing, image processing, speech processing, and nonlinear science, wavelet transform is considered to be one of the most effective time-frequency analysis methods following Fourier analysis. Compared with Fourier transforms, wavelet transforms are localized time and frequency domain transforms that can effectively extract valuable information. Wavelet transform solves many of the difficulties plaguing Fourier transforms.

Wavelet transforms can be used to decompose a signal using wavelets, e.g., a family of orthogonal functions such as

where a is the scale factor of a basic wavelet \(\phi \left( t \right)\) and \(\tau\) is a translation variable.

In the outlined work, a 5 dB wavelet transform is applied to enable the multi-scale 5 layers wavelet decomposition. Figure 5 denotes the low-frequency coefficient of layer 5 as A5 and the high-frequency coefficients in the 5 different layers as D1, D2, D3, D4, and D5. All frequency coefficients are calculated simultaneously. The quadratic sum of each layer is calculated and sent as input data for the BP-NN.

Thirteen feature vectors were extracted from the amplitude, frequency, and energy information of the sound dataset to detect chatter. Of the 13 features, two features were extracted from amplitude information; five features were extracted from frequency information; and six features were extracted from energy information. All 13 features are listed in Table 2.

5.3 CNN image processing

Mel-spectrogram image is used as an input to the CNN. Each Mel-spectrogram image is constructed from a sound sample of 0.2 s. Dividing the overall data into shorter time intervals leads to easier feature extraction. FFT is the first step in creating the Mel-spectrogram image. Simply stated, the FFT converts waveform data in the time domain into a frequency domain. The FFT achieves this by breaking down the original time-based waveform into a series of sinusoidal terms, each with a unique magnitude, frequency, and phase. Plotting the amplitude of each sinusoidal term versus its frequency creates a sound spectrum as shown in Fig. 6.

A spectrogram image is obtained after extracting the amplitude-frequency information. A spectrogram image comprises two axes: the first axis represents time; the second axis represents frequency. A Mel-spectrogram is a specific spectrogram that expresses frequency in Mel scale. The Mel scale is a perceptual scale of pitches determined by listeners to be equal in distance from one another. The reference point between this scale and the normal frequency measurement is defined by assigning a perceptual pitch of 1 000 Mels to a 1 000 Hz tone, 40 dB above the listener’s threshold. The frequency (f) hertz is converted into Mels (m) using [44]

Figure 7 illustrates an example of a Mel-spectrogram image obtained from the collected sound dataset.

Two of the most popular image/hand-writing recognition projects in the CNN domains are Cifar-10 [45] and MNIST [26]. The size of the input images is set to 32 × 32 pixels and 28 × 28 pixels, respectively. The small pixel size of input images requires smaller memory allocation and reduces the time to train the overall CNN network. However, smaller pixel resolution leads to a degradation of the defining features. The degradation of features adversely affects the accuracy of the trained model. To obtain the best tradeoff between image size and accuracy for our problem, multiple image sizes were tested in the presented work. When the sound image size was smaller than 100 × 100 pixels, the CNN loss function was unable to converge, thereby resulting in poor training accuracy. Moreover, because of the limitations imposed by the computer configuration, a larger image will result in a memory exhaust problem. Finally, a 150 × 150 pixels Mel-spectrogram is selected in the reported work.

6 Model architecture

6.1 BP-NN

The learning rule for the BP-NN utilizes the steepest descent optimization algorithm while back propagating through the network to continuously adjust the interconnecting weights and thresholds in an attempt to minimize the sum of squared error in the network. BP-NN comprises an input layer, hidden layer, and an output layer. The layers are updated using two main steps: information propagation and error backpropagation steps. Input layer neurons are responsible for receiving feature vectors as input data and transmitting them to the middle hidden layer neurons. The middle hidden layer is the information processing layer and is responsible for information transformation. The most popular transfer function used in BP-NN is the sigmoid logistic, which is expressed as

According to the complexity of the classification task, the middle layer can be designed to be either a single-layer or multi-layers. After information is transferred from the middle hidden layer to the output layer, the information propagation operation is completed. When the actual output is different from the desired output, the error BP process begins. The BP error calculated by the NN is defined as

where \(o_{\text{d}}^{'}\) is the actual output of the sample and \(o_{\text{d}}\) is the desired output. According to error gradient descent methodology, the system adjusts the weights of each layer starting from the output layer and consecutively works back to the input layer. The positive cycle of information dissemination and error back propagation to constantly adjust the weight values in each layer is referred to as the NN training process. The training process continues until the network output error is reduced to an acceptable level or achieves a preset number of learning iterations. Errors decrease during each iteration of the training process. Weight change during each iteration can be computed using the following equation

where w is the weight, t the iteration time, r the learning rate, and \(m^{\prime}\) the momentum factor. For the BP-NN, the number of input nodes is equal to the number of features, i.e., 13 and the output layer has only one node. To build the BP-NN for the detection of chatter, speed, cut depth, and feed rate, 6 architectures with different nodes and hidden layers (13-5-1,13-10-1,13-20-1,13-10-10-1,13-20-20-1,13-30-30-1) are tested. The classification accuracy for these architectures is outlined in the result section. A general BP-NN construction is shown in Fig. 8.

6.2 CNN

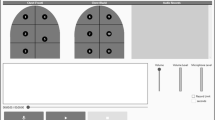

A CNN consists of one input layer, one output layer, and several hidden layers. The hidden layers include a convolution layer, a max-pooling layer, and a fully connected layer. Figure 9 presents the architecture of a CNN model with each layer consisting of a specific name and function in which the input data are depicted in grayscale in the Mel-spectrogram.

The first hidden layer is the convolutional layer. Outputs from the convolution operation are processed by an activation function called rectified linear unit (ReLU). The ReLU function is expressed as

Following the first convolution layer is the down-sampling layer which is also referred to as a max-pooling layer. Every feature value in the max pooling layer is the maximum value obtained from an observed set of neurons in the preceding convolution layer. The controlling parameters for the max pooling layer are filter size and spatial stride. The filter size and spatial stride are both set to 2 for the CNN architecture used in the presented work.

Following the first convolutional and max-pooling layer group, there is another group of convolutional and max-pooling layers in the proposed CNN architecture.

Two fully connected layers separate the second max-pooling layer and a final output layer. Each neuron obtains a value by ReLU with inputs, weights, and bias.

The output layers of a CNN consist of n outputs, each representing a single class. In the 4 different classification tasks, n is equal to the following: 2 (chatter case); 4 (feed rate and cut depth case); and 7 (speed case). The output is computed using a softmax activation function. The output softmax function used in the outlined work is defined as

where \(Z_{i}\) can be expressed as \(w_{i} x + b_{i}\) and is the linear combination of all the weights \(w_{i}\) from penultimate layer output(x) and a bias term \(b_{i}\). \({\text{e}}^{{z_{i} }}\) is the standard exponential function to each \(Z_{i}\) and K indicates the total class number. The output of the softmax function can be used to denote a probability distribution over several different possible outcomes.

The components of CNN for Mel-spectrogram are the same as the first CNN architecture, which includes a convolutional layer, a max pooling layer, and a fully connected layer. However, parameters such as kernel size and feature map quantity require modifications based on different data. In addition, because of some overfitting problems during the training process, the network has low generalization ability. Overfitting occurs when the network learns all the details and noise associated with the training data that will influence the performance of the model on new data. The most intuitionistic measure of overfitting is when the test accuracy is much lower than the training and validation accuracies. A drop out layer is added to the network to address the problems associated with overfitting. A fundamental idea is to randomly drop units (along with their connections) from the NN during training, which prevents units from co-adapting too much [46]. In this paper, for each machining parameter, four models were applied to test the classification results. The details concerning the models’ chatter, feed rate, speed, and cut depth are listed in Tables 3–6, respectively.

7 Results and discussion

7.1 Classification tasks

Data collection was focused on two primary categories of datasets (dataset 1 and dataset 2, details were provided in the data collection section). The first classification task only focused on chatter detection (presence or absence of chatter). For chatter detection, only dataset 1 was used, and it comprised of two classes. The second classification task focused on the classification of machining parameters (speed, feed rate, and cut depth) into three different levels (low, medium, and high). The classification task in dataset 2 was defined by the parameter values listed in Table 7. Each process parameter was divided into three classes (3-3-3 classification task). For training and testing purposes, each dataset (dataset 1 and dataset 2) was further subdivided into three subgroups: training data, validation data, and test data. The numbers of data points in each of these subgroups are listed in Table 8. The allocation of a data point to each of these subgroups was performed using uniform random distribution.

7.2 BP-NN

The results of the application of six different BP-NNs for the classification task related to datasets 1 and 2 (with 3-3-3 classes) are outlined in Table 9. The performance of 13-20-20-1 (two hidden layers with 20 nodes) model is the best compared with other models. Figure 10 depicts the confusion matrix of 13-20-20-1 model for each task. Each row of the matrix denotes instances in a predicted class while each column denotes the instances in an actual class. BP-NN has a relatively high classification accuracy. For each classification task, the accuracy achieved by BP-NN is greater than 90%.

An additional numerical experiment was devised to test the performance of BP-NN in the presence of a higher number of classes. In particular, for dataset 2, the number of speed classes was increased from three to seven. The number of classes for feed rate and cut depth parameters was increased to four. The modified classification task and related parameters are outlined in Table 10 (dataset 2, 7-4-4 classification task). The classification accuracy of BP-NN on dataset 2 (7-4-4 classification task) is listed in Table 11. It can be observed that as the number of classes in the dataset increases, the classification accuracy of BP-NN decreases. Declining accuracy can be mitigated by deeper NNs or the addition of more accurate human-designed features that can be fed into the BP-NN.

7.3 CNN

The CNN-based classification framework was only applied to dataset 1 and dataset 2 (7-4-4) classification tasks. The overall dataset was split into three different subgroups: training, testing, and validation. The numbers of samples for each subgroup are shown in Table 12. The CNN was trained by the training dataset and validated by the validation dataset. Parameters controlling the CNN architecture include the learning rate and batch size. Learning rate is a decreasing function of time and was set to 0.001. Batch size defines the number of samples that are propagated through the network. Batch size was set to 20. The testing dataset is used to check the trained system adaption for new to the network data. The classification results for all 16 models are listed in Table 12 (column 2–5). The best models for chatter, feed rate, speed, and cut depth are model 3, model 1, model 3, and model 1, respectively. The test classification accuracy values are 0.97, 0.92, 0.93, and 0.90, respectively.

Confusion matrices of the four best models are shown in Fig. 11. Each row of the matrix indicates instances in a predicted class, while each column indicates the instances in an actual class. Even with a higher number of classes, the CNN-based classification framework exhibits high classification accuracy (more than 90% in each task).

8 Conclusions

This paper outlined the concept of machine auscultation. It was demonstrated that different machining states can be recognized only from sound signals. Two different machine learning models, BP-NN and CNN, were used to enable artificial sound understanding for machine diagnostics purposes. By comparing the results of the two machine learning models, it was concluded that CNN performed better than the BP-NN architecture for most classification tasks. In addition, the CNN model based on Mel-spectrum image was less demanding and more general in terms of feature engineering in comparison with the BP-NN model, explicitly based on extracted and selected features.

In short, the findings of this paper demonstrate the utility of CNN-based approaches to machine health state monitoring. In spite of the successes of the presented CNN model, the precision of machine parameter determination for practical applications is limited. Advancements in this field are required to improve its practicability. In the future, various types of input sound images, such as asynchronously sampled signal images and MFCC images, can be used as inputs to train the overall CNN model. Asynchronously sampled signal image and MFCC-based sound image inputs may result in improved performance. In addition to the BP-NN and CNN, other models such as a recurrent neural network (RNN) and HMM have been used in image classification and may prove to be useful in sound imaging too. RNN or HMM-based techniques for developing machine state diagnostics is an avenue for future research.

References

Huang HB, Huang XR, Li RX et al (2016) Sound quality prediction of vehicle interior noise using deep belief networks. Appl Acoust 113:149–161

Sharan RV, Moir TJ (2016) An overview of applications and advancements in automatic sound recognition. Neurocomputing 200:22–34

Kumon M, Yoshihiro ITO, Nakashima T et al (2007) Sound source classification using support vector machine. IFAC Proc Vol 40(13):465–470

Thaler T, Potočnik P, Bric I et al (2014) Chatter detection in band sawing based on discriminant analysis of sound features. Appl Acoust 77:114–121

Dombovari Z, Barton DAW, Wilson RE et al (2011) On the global dynamics of chatter in the orthogonal cuttingmodel. Int J Non-lin Mech 46(1):330–338

Pan G, Xu H, Kwan CM et al (1996) Modeling and intellligent chatter control strategies for a lathe machine. Control Eng Pract 4(12):1647–1658

https://github.com/ruoyuyang1991/machine-auscultation-classification. Accessed 10 April 2019

Singh KK, Singh R, Kartik V (2015) Comparative study of chatter detection methods for high-speed micromilling of Ti6Al4V. Proc Manufacturing 1(1):593–606

Li XQ, Wong YS, Nee AYC (1997) Tool wear and chatter detection using the coherence function of two crossed accelerations. Int J Mach Tools Manuf 37(4):425–435

Kuljanic E, Sortino M, Totis G (2008) Multisensor approaches for chatter detection in milling. J Sound Vib 312(4–5):672–693

Toh CK (2004) Vibration analysis in high speed rough and finish milling hardened steel. J Sound Vib 278(1):101–115

Soliman E, Ismail F (1997) Chatter detection by monitoring spindle drive current. Int J Adv Manuf Technol 13(1):27–34

Delio T, Tlusty J, Smith S (1992) Use of audio signals for chatter detection and control. J Manuf Sci Eng 114(2):146–157

Li XQ, Wong YS, Nee AYC (1988) A comprehensive identification of tool failure and chatter using a parallel multi-art2 neural network. J Manuf Sci Eng 120(2):433–442

Jiang AY, Zhang C (2006) Hybrid HMM/SVM method for predicting cutting chatter. In: Proceedings of SPIE–the international society for optical engineering 6280:62801Q-8

Yao ZH, Mei DQ, Chen ZC (2010) On-line chatter detection and identification based on wavelet and support vector machine. J Mater Process Technol 210(5):713–719

Bediaga I, Munoa J, Hernández J et al (2009) An automatic spindle speed selection strategy to obtain stability in high-speed milling. Int J Mach Tools Manuf 49(5):384–394

Zhang CL, Yue X, Jiang YT (2010) A hybrid approach of ann and hmm for cutting chatter monitoring. Adv Mater Res 97:3225–3232

Cao HR, Lei YG, He ZJ (2013) Chatter identification in end milling process using wavelet packets and hilbert–huang transform. Int J Mach Tools Manuf 69:11–19

Kondo E, Ota H, Kawai T (1997) A new method to detect regenerative chatter using spectral analysis, part 1: Basic study on criteria for detection of chatter. J Manuf Sci Eng 119(4A):461–466

Tansel IN, Wang X, Chen P et al (2006) Transformations in machining. Part 2: evaluation of machining quality and detection of chatter in turning by using s-transformation. Int J Mach Tools Manuf 46(1):43–50

Cho DW, Eman KF (1988) Pattern recognition for on- line chatter detection. Mech Syst Signal Process 2(3):279–290

Grabec I, Gradišek J, Govekar E (1999) A new method for chatter detection in turning. CIRP Ann Manuf Technol 48(1):29–32

Berger B, Belai C, Anand D (2003) Chatter identification with mutual information. J Sound Vib 267(1):178–186

Choi T, Shin YC (2003) On-line chatter detection using wavelet-based parameter estimation. Trans-Am Soc Mech Eng J Manuf Sci Eng 125(1):21–28

Lécun Y, Bottou L, Bengio Y et al (1998) Gradient-based learning applied to document recognition. Proc IEEE 86(11):2278–2324

Krizhevsky A, Sutskever I, Hinton GE (2012) Imagenet classification with deep convolutional neural networks. Adv Neural Inf Process Syst 1:1097–1105

Simonyan K, Zisserman A (2014) Very deep convo- lutional networks for large-scale image recognition. arXiv preprint arXiv:1409–1556

Szegedy C, Liu W, Jia YQ er al (2015) Going deeper with convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1–9

Girshick R, Donahue J, Darrell T (2014) Rich feature hierarchies for accurate object detection and semantic segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 580–587

Girshick R (2015) Fast R-CNN. In: Proceedings of the IEEE international conference on computer vision, pp 1440–1448

Ren SQ, He KM, Girshick R et al (2015) Faster R-CNN: Towards real-time object detection with region proposal networks. Adv Neural Inform Process Syst 1:91–99

Lécun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444

Piczak KJ (2015) Environmental sound classification with convolutional neural networks. In: IEEE 25th International workshop on machine learning for signal processing (MLSP), 2015, pp 1–6

Boddapatia V, Petef A, Rasmusson J et al (2017) Classifying environmental sounds using image recognition networks. Proc Comput Sci 112:2048–2056

Fu Y, Zhang Y, Gao Y et al (2017) Machining vibration states monitoring based on image representation using convolutional neural networks. Eng Appl Artif Intell 65:240–251

Loh WL (1996) On latin hypercube sampling. Ann Stat 24(5):2058–2080

Vaseghi SV (2008) Advanced digital signal processing and noise reduction. Wiley, New York

Gupta CN, Palaniappan R, Swaminathan S et al (2007) Neural network classification of homomorphic segmented heart sounds. Appl Soft Comput 7(1):286–297

Kotani M, Katsura M, Ozawa S (2004) Detection of gas leakage sound using modular neural networks for unknown environments. Neurocomputing 62:427–440

Potočnik P, Thaler T, Govekar E (2013) Multisensory chatter detection in band sawing. Proc CIRP 8:469–474

Liang HY, Nartimo I (1998) A feature extraction algorithm based on wavelet packet decomposition for heart sound signals. In: Proceedings of the IEEE-SP international symposium, pp 93–96

Babaei S, Geranmayeh A (2009) Heart sound reproduction based on neural network classification of cardiac valve disorders using wavelet transforms of pcg signals. Comput Biol Med 39(1):8–15

O’shaughnessy D (1987) Speech communication: human and machine. Universities Press, Hyderabad

Krizhevsky A, Hinton G (2009) Learning multiple layers of features from tiny images. Technical report, University of Toronto 1(4):7

Srivastava N, Hinton G, Krizhevsky A et al (2014) Dropout: A simple way to prevent neural networks from overfitting. J Mach Learn Res 15(1):1929–1958

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Yang, RY., Rai, R. Machine auscultation: enabling machine diagnostics using convolutional neural networks and large-scale machine audio data. Adv. Manuf. 7, 174–187 (2019). https://doi.org/10.1007/s40436-019-00254-5

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40436-019-00254-5