Abstract

Purpose

An increasing number of laparoscopic surgical procedures are performed with robotic platforms, which have even become the standard for some indications. While these systems provide the surgeon with great surgical dexterity, they do not improve surgical decision making. With its unique detection capabilities and the plurality of tracers available, radioguidance could fulfill a crucial role in this pursuit of precision surgery. The robotic setting, however, imposes specific restrictions and limitations but also offers great potential, requiring a redesign of traditional modalities.

Methods

This narrative review provides an overview of the challenges encountered during robotic laparoscopic surgery and the engineering steps that have been taken toward full integration of radioguidance and hybrid guidance modalities (i.e., combined radio and fluorescence detection).

Results

First steps have been made toward full integration. Current tethered DROP-IN probes successfully bring radioguidance to the robotic platform, as evaluated in sentinel node surgery (i.e., urology and gynecology) as well as in tumor-targeted surgery (i.e., PSMA-targeted primary and salvage surgery). Although technically challenging, preclinical steps are being made toward even further miniaturization and integration, optimizing surgical logistics and improving surgical abilities. Mixed-reality visualizations also show great potential to fully incorporate the feedback of image-guided surgery modalities within the surgical robotic console.

Conclusion

Robotic radioguidance procedures provide specific challenges, but at the same time create a significant growth potential for both image-guided surgery and interventional nuclear medicine.


Introduction

Technology plays an ever-increasing role as an enabler in modern healthcare. As technological capabilities have grown, new patient management strategies have emerged, not least in the field of surgery. A major technical milestone in surgery was the rise of minimally invasive keyhole, or laparoscopic, surgery during the twentieth century. Although initially received with great skepticism, this technology-enabled intervention has since proven its success in reducing surgical trauma for patients [1]. More recent developments have focused on robot-assisted laparoscopic surgery using telemanipulator systems. The main advantages these robotic systems offer compared with traditional laparoscopic surgery are more precise movements, extended maneuverability, and improved ergonomics.

The idea of using such robotic telemanipulators for surgical applications originates from the concept of telesurgery, whereby an expert surgeon could provide immediate, high-level surgical care from a remote location. This led to the first preclinical evaluations of prototype telesurgery systems in the 1990s [2]. Several industry parties took this concept further, performing the first in-human surgery in 1997 [3]. Since then, several new systems and system iterations have followed, shifting the focus from long-distance surgery toward delivering an intuitive robot-assisted laparoscopic surgery platform. As such, the first da Vinci system by Intuitive Inc. was cleared for a limited number of surgical indications as early as 2000 [4]. Having gradually come to dominate the market for robot-assisted laparoscopic surgery, these systems today have an installed base exceeding 7500 [5], and more than 10 million procedures have been performed with them [6]. In recent years, competition has been growing, with several other telemanipulator-based robotic surgery systems becoming available on the clinical market, such as the Hugo (Medtronic Inc.), Versius (CMR Ltd.), Senhance (Asensus Surgical Inc.), Avatera (Avatera Medical GmbH), Hinotori (Medicaroid Corp.), and Revo-i (Meerecompany Inc.) [7].

As an early adopter, urology has become the leading surgical discipline in pioneering robot-assisted laparoscopic interventions. In fact, robotic prostate cancer surgery has been so successful that robotic approaches now set the standard in many countries [8], perhaps even rendering non-robotic surgery a peculiarity for this indication. In addition, prostate-cancer-related lymphatic dissections (for both primary and recurrent cancer), bladder interventions (e.g., cystectomy), and kidney interventions (e.g., partial nephrectomy) are frequently performed in the robotic surgery setting. Over the years, robotic surgery has spread to other surgical disciplines, including gynecology, thoracic surgery, and general surgery [9]. Remarkably, these laparoscopic robotic platforms are not confined to abdominal and thoracic interventions, but are even used for some head and neck surgery indications [10].

While the robot’s precise, steerable instruments enhance the surgeon’s dexterity, they do not improve surgical decision making. Image-guided surgery can play a crucial role in improving the latter, more specifically through intraoperative target definition via molecular characterization of, for example, cancerous tissue. With the integration of the Firefly fluorescence laparoscope within the da Vinci robotic platform, such intra-operative molecular guidance has become widely available. While clearly providing value through real-time, high-resolution visual guidance, the severe attenuation of fluorescent light by tissue restricts this technique to superficial applications, allowing target visualization up to roughly 1 cm deep [11]. In addition, such fluorescence guidance is currently limited by the clinical availability of Firefly-compatible tracers (fluorescence excitation around 800 nm, e.g., the tracer ICG [12]). The need for in-depth image guidance and for a wider range of usable tracers thus creates a demand for complementary or alternative image-guidance methodologies. Radioguided surgery is one of the earliest and still most used methods of molecular image guidance [13]. This technique benefits from: (1) the ability to identify radiotracers located deep within tissue, and (2) the broad availability of clinically applied radiopharmaceuticals. The first is inherent to the use of gamma-rays (penetration depth > 10 cm [14]), while the second results from decades of radiochemical research [13, 15]. If radioguidance can be transitioned from open to robotic surgery, a wealth of new opportunities opens up.
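The depth argument can be made concrete with the Beer–Lambert law, I(x) = I₀·exp(−μx). The sketch below estimates the fraction of unscattered 140 keV photons from 99mTc that survive a given depth of soft tissue. It assumes a narrow beam, water-equivalent tissue, and an approximate linear attenuation coefficient of μ ≈ 0.15 cm⁻¹ (an assumed value; reference tables such as NIST XCOM give exact coefficients), and it ignores scatter build-up.

```python
import math

# Assumed, approximate narrow-beam linear attenuation coefficient of
# water-equivalent soft tissue at 140 keV (99mTc photopeak), in 1/cm.
MU_TISSUE_140KEV = 0.15


def transmitted_fraction(depth_cm: float, mu_per_cm: float = MU_TISSUE_140KEV) -> float:
    """Beer-Lambert law: fraction of unscattered photons surviving depth_cm of tissue."""
    if depth_cm < 0:
        raise ValueError("depth must be non-negative")
    return math.exp(-mu_per_cm * depth_cm)


if __name__ == "__main__":
    for depth in (1, 5, 10):
        print(f"{depth:>2} cm of tissue: {transmitted_fraction(depth):.0%} of 140 keV photons unattenuated")
```

Under these assumptions, roughly 86%, 47%, and 22% of the primary photons remain after 1, 5, and 10 cm of tissue, which is why a gamma probe can still register tracer deposits far beyond the ~1 cm reach of fluorescence imaging.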

In recent years, dedicated radioguidance equipment, as well as hybrid radio and fluorescence guidance equipment, has emerged in the pursuit of fully integrating image-guided surgery in the robotic era. This requires hardware adaptations that overcome restrictions in accessibility and that are compatible with steerable laparoscopic instruments offering a high number of degrees of freedom (DOF) in movement. In this review, we provide an overview of these developments, focusing specifically on the engineering aspects and presenting the first clinical applications.

Limitations and potentials for integrating image guidance in robotic surgery

Today’s laparoscopic robotic surgery systems use a master–slave principle. In such a setup, the surgeon sits behind a distant surgical console, controlling the sterile-draped robot that is placed at the surgical bedside (see Fig. 1). This robot consists of multiple arms, either joined on a single device that bends over the patient or mounted on separate single-arm trolleys placed around the bedside. One robotic arm holds the laparoscopic camera. The others (often three) operate multi-wristed, steerable laparoscopic instruments, enabling surgical actions with high dexterity. Both the instruments and the laparoscopic camera are under the full control of the surgeon at the surgical console (see Fig. 1). This allows for an ergonomic setup in which the surgeon can switch between the different instruments at hand, which are held in place even when the surgeon leaves the console. High-definition, zoomed-in, stereo camera images of the surgical field are displayed either in a closed 3D viewer that the surgeon looks into, or on an open 3D screen viewed with accompanying 3D glasses. As such, the surgical console acts as a true ‘surgical cockpit’ from which the intervention is performed.

Fig. 1 Overview of a typical surgical robot setup. The central vision cart connects both the robotic arms and the surgical console. From behind the surgical console, the surgeon has an HD 3D view inside the patient and operates the robotic instruments. The robotic arms guide the steerable and highly maneuverable robotic instruments inside the patient via 4 trocars (green zoom-in). One additional trocar is often placed for an assistant to support the surgeon during the procedure.

All instruments enter the patient through strategically placed instrument portals (also called trocars; see Fig. 1). Note that there is often one additional assistant port, not used by the robot, through which the bedside assistant can support the operating surgeon with, e.g., tissue retrieval, tissue fixation, and suction/irrigation of fluids. The internal size of these instrument portals determines the size of the instruments and modalities that can be inserted into the abdomen, typically requiring a diameter of 12 mm or smaller. Critically, image-guided surgery modalities can only access the surgical field when they are either part of the robot or introduced by the bedside assistant through the assistant port. In the latter case, the surgeon has to verbally instruct the bedside assistant to insert and position, e.g., laparoscopes or laparoscopic gamma detectors. When a rigid instrument is inserted through the assistant trocar, the portal becomes a fixed rotation point in the patient, typically limiting the DOF from 6 (as in open surgery) to 4 (see Fig. 2). This limited maneuverability means that some anatomical locations simply cannot be reached [16]. To partially compensate for these restrictions, laparoscopic modalities sometimes incorporate an angled detection window (e.g., a laparoscopic gamma probe with a 45° or 90° detector window [17]).
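The fulcrum effect can be illustrated with a little geometry. For a rigid, straight (0°) probe pivoting about a fixed trocar, the four remaining DOF are two pivot angles, insertion depth, and roll about the shaft; roll does not change where a straight probe looks. Consequently, to aim at a given target, the probe axis must lie on the line from trocar to target. The sketch below, using hypothetical coordinates in cm, computes that forced viewing direction and how far it deviates from a viewing direction one would prefer (for example, one pointing away from a nearby high-uptake background). It models only the straight-probe case, not angled detector windows.

```python
import math
from typing import Sequence, Tuple

Vec3 = Sequence[float]


def unit(v: Vec3) -> Tuple[float, ...]:
    """Normalize a 3D vector."""
    n = math.sqrt(sum(c * c for c in v))
    if n == 0:
        raise ValueError("zero-length vector")
    return tuple(c / n for c in v)


def forced_view_direction(trocar: Vec3, target: Vec3) -> Tuple[float, ...]:
    """A straight, rigid probe pivoting about a fixed trocar can only aim at
    `target` along the trocar->target line: its viewing direction is dictated
    by the port placement, not chosen by the surgeon."""
    return unit([b - a for a, b in zip(trocar, target)])


def approach_mismatch_deg(trocar: Vec3, target: Vec3, desired_direction: Vec3) -> float:
    """Angle (degrees) between the forced viewing direction and the direction
    in which one would prefer the probe to look at the target."""
    forced = forced_view_direction(trocar, target)
    desired = unit(desired_direction)
    cos_angle = sum(f * d for f, d in zip(forced, desired))
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding
    return math.degrees(math.acos(cos_angle))


if __name__ == "__main__":
    # Hypothetical geometry in cm: trocar at the origin, target 10 cm across and 5 cm deep,
    # preferred view looking back toward the trocar side (-x direction).
    mismatch = approach_mismatch_deg((0.0, 0.0, 0.0), (10.0, 0.0, -5.0), (-1.0, 0.0, 0.0))
    print(f"required change in viewing direction: {mismatch:.0f} degrees")
```

In this example, the preferred view deviates by about 153° from the only direction the straight probe can use, so it is unreachable; this is the kind of situation in which a probe held by wristed robotic instruments, rather than pivoting about the assistant trocar, offers an advantage.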

Fig. 2 Developments in robotic radioguidance. What started with the traditional non-integrated laparoscopic gamma probe (left) [13] was followed up by the DROP-IN gamma probe (middle), providing improved maneuverability and autonomous control for the surgeon [19, 23]. Preclinical efforts show that an even more miniaturized Click-On gamma probe (right) quantitatively improves surgical logistics even further [24].

When applying image-guided surgery modalities, the robotic setup, thus, comes with critical limitations, especially when the modalities are not integrated but rather introduced as a third-party device. On the other hand, great potential awaits when integration is pursued (see Table 1).

Engineering integrated image-guidance modalities

Tethered radioguidance modalities

Handheld gamma detection probes are the staple of radioguided surgery, providing acoustic and numerical feedback upon detection of a radiopharmaceutical within the tissues encountered during surgery. The most used radioactive isotope in radioguided surgery is 99mTc. Given the problems experienced when applying traditional laparoscopic gamma probes during robotic surgery (i.e., restricted maneuverability and control), combined with the success of tethered ultrasound probes [18], a tethered DROP-IN gamma probe design was pursued [19]. Being tethered, these miniaturized detectors fully enter the abdomen, where the surgeon grasps them with the steerable robotic instruments, optimally benefiting from their rotational freedom (i.e., 6 DOF; see Fig. 2). This feature supports the identification of low-tracer-uptake lesions in the vicinity of high-tracer-uptake background tissues, the so-called ‘seeing a bird in front of the sun’ effect [19,20,21,22]. Compared with rigid laparoscopic gamma probes, the tethered design enhances anatomical coverage, and thus detection, especially for areas located on the same side of the patient as the assistant trocar [16].

The rotational coverage of the DROP-IN designs is influenced by the gripping ergonomics. Initial studies indicated that, when used with typical robotic instruments, a 0° grip provided an effective scan coverage around the instrument of 0–111°, a 45° grip a coverage of 0–140°, and a 180° grip a coverage of 0–180° [19]. As the 45° grip was rated most intuitive for probe pickup, this option provided the best balance between intuitive operation and effective scanning range. Independent of the grip version, the surgeon needs either two instruments to pick up the probe, or one instrument if the bedside assistant helps. To ensure reproducible probe pickup (a feature that is important for tracking and navigation approaches; see the section below), complex multi-axis CNC milling was used to produce a grip in the probe housing that perfectly matches the robotic instrument of choice (i.e., the ProGrasp instrument) and repeatedly guides the instrument to the exact same location (Fig. 3 top, bottom). To be compatible with common trocar sizes, the initial DROP-IN prototypes had a 12 mm diameter, while one of the current CE-marked systems has reduced this further to 10 mm (Fig. 3 bottom). Since sterile draping may tear when handled with surgical instruments, the probes are fully sterilized before surgical use (e.g., by plasma sterilization).
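The grip trade-off can be encoded as a small lookup. In the sketch below, the coverage ranges are the values reported above [19]; the preference order is an assumption that reflects the 45° grip being rated most intuitive, with the order of the remaining grips a hypothetical tie-break. Because the 0–140° range of the 45° grip contains the 0–111° range of the 0° grip, the latter is never selected, which mirrors the conclusion that the 45° grip offers the best balance.

```python
from typing import List, Optional

# Effective scan coverage around the instrument (degrees) per DROP-IN grip
# version, as reported for the early prototypes [19].
GRIP_COVERAGE_DEG = {0: (0.0, 111.0), 45: (0.0, 140.0), 180: (0.0, 180.0)}

# Assumed preference: 45° first (rated most intuitive for pickup); the order of
# the remaining grips is a hypothetical tie-break.
GRIP_PREFERENCE = (45, 180, 0)


def grips_covering(angle_deg: float) -> List[int]:
    """All grip versions whose coverage range includes the required scan angle."""
    return [grip for grip, (lo, hi) in GRIP_COVERAGE_DEG.items() if lo <= angle_deg <= hi]


def choose_grip(angle_deg: float) -> Optional[int]:
    """Most preferred grip that still reaches `angle_deg`, or None if none does."""
    candidates = grips_covering(angle_deg)
    for grip in GRIP_PREFERENCE:
        if grip in candidates:
            return grip
    return None


if __name__ == "__main__":
    for angle in (90, 150, 200):
        print(angle, "->", choose_grip(angle))
```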

To facilitate the detection of 99mTc-labeled tracers (140 keV), a Eurorad DROP-IN gamma probe was prototyped as early as 2014 [19]. Following technological evaluation in phantoms, pigs, and ex vivo tissue samples, the first in-human application with a re-sterilizable clinical prototype was reported in 2017 for the prostate cancer sentinel node procedure [16, 23]. These pioneering studies indicated that the DROP-IN gamma probe design: (1) is clinically feasible and safe; (2) integrates radioguided surgery into the robotic setup, allowing surgeons to apply the radioguidance themselves; and (3) makes optimal use of the maneuverability of the steerable robotic instruments, providing improved anatomical coverage. In these studies, this resulted in a superior detection rate for the DROP-IN compared with the traditional laparoscopic gamma probe (100% vs 76%). Interestingly, superiority was also shown relative to fluorescence imaging (100% vs 91%). Subsequently, this prototype was used during PSMA-targeted salvage surgery in prostate cancer [25, 26], showing a sensitivity of 86% and a specificity of 100% when compared with diagnostic PSMA-PET imaging [25]. Additionally, in the primary prostate cancer setting, the DROP-IN was even shown to find lesions not seen on diagnostic PSMA-PET imaging (11 additional lesions in 12 patients), as well as lymph node metastases as small as 0.1 mm [27]. Combined in vivo and ex vivo measurements yielded a sensitivity of 76% and a specificity of 96% when compared with pathology, with almost all missed lesions being micrometastases smaller than 3 mm. Since then, the DROP-IN design has been adopted by other companies. As of 2019, this has resulted in CE-marked products from Crystal Photonics (sterilizable Drop-In Probe CXS-OP-DP) and LightPoint Medical (disposable Sensei Drop-In probe). These probes differ clearly in design and material choices (see Fig. 3).
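The detection rates, sensitivities, and specificities quoted above follow the standard confusion-matrix definitions: sensitivity = TP / (TP + FN) and specificity = TN / (TN + FP), where the reference standard (diagnostic PSMA-PET or pathology) determines what counts as a true positive, so figures obtained against different references are not directly comparable. The helper below is a generic sketch; the counts in the usage line are purely illustrative and are not taken from any of the cited studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # flagged by the probe and confirmed by the reference standard
    fp: int  # flagged by the probe but not confirmed
    tn: int  # not flagged and confirmed negative
    fn: int  # not flagged but confirmed positive (missed lesion)

    def sensitivity(self) -> float:
        """True-positive rate, TP / (TP + FN)."""
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        """True-negative rate, TN / (TN + FP)."""
        return self.tn / (self.tn + self.fp)


def detection_rate(detected: int, total: int) -> float:
    """Fraction of targets (e.g., sentinel nodes) localized intra-operatively."""
    return detected / total


if __name__ == "__main__":
    counts = ConfusionCounts(tp=8, fp=5, tn=45, fn=2)  # hypothetical counts
    print(f"sensitivity {counts.sensitivity():.0%}, specificity {counts.specificity():.0%}")
```

With these hypothetical counts, the sensitivity is 80% and the specificity is 90%.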
The sterilizable Crystal Photonics probe is based on a GAGG(Ce) scintillation crystal (resistant to high temperatures) and tungsten (alloy) collimation, housed in a 10 mm diameter probe. Building on the company's established line of gamma probes, this detector is compatible with 25–600 keV gamma-rays, including, for example, 99mTc, 111In, 125I, and 131I. It has demonstrated clinical utility in cervical cancer and endometrial cancer sentinel node procedures [28], as well as in PSMA-targeted prostate cancer surgery (i.e., primary [29] and salvage [30] settings). Similar to the studies with the Eurorad prototype, the DROP-IN showed superior detection (100%) over traditional laparoscopic gamma probes (93%) and fluorescence imaging (86%) in sentinel node studies, as well as comparable sensitivity and specificity in PSMA-targeted studies (67% and 100%, respectively, in the primary setting). Interestingly, ongoing studies with this CE-marked product indicate that the design is also compatible with traditional laparoscopic instruments (Olympus) and steerable LaproFlex instruments (DEAM B.V.) [28]. The disposable LightPoint probe is based on a more basic cesium iodide scintillation crystal with tungsten (alloy) collimation, housed in a 12 mm diameter probe. This DROP-IN probe has been used for cervical [31] and prostate cancer [32, 33] sentinel node (SLN) procedures, again indicating superiority over the traditional laparoscopic gamma probe (100% vs 89.5%).

While the DROP-IN probe has truly translated radioguidance to the realm of robotic surgery, providing autonomy and improved detection through its high maneuverability, it still interrupts the surgical workflow, as it must be ‘picked up’ and ‘put down’ whenever guidance is desired. To address this, a next-generation Click-On gamma probe showed that further integration of the modality with the wristed robotic instruments quantitatively improves surgical logistics [24], integrating radioguided surgery even more closely with the robotic platform (see Fig. 2). However, these developments are currently still in the preclinical stage.

With the plurality of clinically approved radiopharmaceuticals available in nuclear medicine, there has always been interest in radioguidance applications beyond those that use 99mTc. Low-to-mid-energy gamma-emitting isotopes (e.g., 99mTc, 111In, 123I, 125I, 131I) are successfully applied in the laparoscopic setting. To date, the need for ‘heavy’ collimation has made laparoscopic radioguidance impossible for high-energy gamma-emitting isotopes (e.g., 18F and 68Ga) [13]. Modern beta probe designs, however, accommodate the detection of beta+ particles (e.g., 18F and 68Ga) or even beta- particles (e.g., 90Y) [34]. As beta detector materials are mostly transparent to (high-energy) gamma photons, they require little shielding and can be produced from disposable or sterilizable plastics [35], bringing miniaturization within reach. Building on the original Eurorad design (see Fig. 3), this resulted in a prototype DROP-IN beta probe. Ex vivo evaluations on tissue specimens in 2019 indicated that such a device can detect both primary tumor margins and lymph node metastases during back-table analysis in primary prostate cancer using 68Ga-PSMA [36]. To investigate the translation of these results to the in vivo setting, a clinical prototype was produced, which entered first in-human trials for robotic prostate cancer surgery in 2022 [37].
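The collimation problem can be quantified with the exponential attenuation law applied to the shielding material. The sketch below estimates the tungsten thickness needed to stop 99% of incident photons at 140 keV (99mTc) and at 511 keV (annihilation photons from positron emitters such as 18F and 68Ga). The mass attenuation coefficients (≈1.9 and ≈0.14 cm²/g) and the narrow-beam geometry are assumptions for illustration; actual collimator design relies on tabulated data (e.g., NIST XCOM) and accounts for scatter and build-up.

```python
import math

TUNGSTEN_DENSITY_G_CM3 = 19.3

# Assumed approximate mass attenuation coefficients of tungsten (cm^2/g);
# verify against a reference table before relying on them.
TUNGSTEN_MU_RHO = {
    140: 1.9,   # 99mTc photopeak
    511: 0.14,  # annihilation photons from 18F / 68Ga positron emitters
}


def shield_thickness_mm(energy_kev: int, suppression: float = 0.99) -> float:
    """Narrow-beam tungsten thickness (mm) that removes `suppression` of incident photons.

    Solves exp(-mu * t) = 1 - suppression for t, with mu = (mu/rho) * density.
    """
    if not 0 < suppression < 1:
        raise ValueError("suppression must be between 0 and 1")
    mu_per_cm = TUNGSTEN_MU_RHO[energy_kev] * TUNGSTEN_DENSITY_G_CM3
    return 10 * math.log(1 / (1 - suppression)) / mu_per_cm


if __name__ == "__main__":
    for energy in (140, 511):
        print(f"{energy} keV: {shield_thickness_mm(energy):.1f} mm of tungsten for 99% suppression")
```

Under these assumptions, about 1.3 mm of tungsten suffices at 140 keV, whereas roughly 17 mm is needed at 511 keV, more than the entire 10–12 mm diameter of current DROP-IN probes.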

Integrating fluorescence laparoscopic video

Over the last decade, fluorescence imaging has seen a revival in surgical applications, where dedicated camera systems make tissue structures ‘light up’ upon the detection of a fluorophore. Of such fluorophores (also called fluorescent dyes), ICG is currently the most used. Whereas the first clinical report of ICG fluorescence imaging during robot-assisted surgery relied on an auxiliary ‘third-party’ STORZ laparoscope handled by the bedside assistant through the assistant trocar [38], current da Vinci platforms support fluorescence imaging with full integration in the robotic laparoscopic camera via their Firefly camera system [39]. The da Vinci Si systems facilitated this in a more conventional setup (i.e., a CCD camera located outside the patient, coupled to a laparoscopic rod-lens optic that enters the abdomen through a trocar), whereas the X and Xi systems both use a miniaturized CMOS chip-on-a-tip camera that fully enters the abdomen [12, 40]. Uniquely, these robotic systems not only enable detection of the fluorescent dye ICG (820 nm) but, with minor modifications, also support the detection of fluorescein (515 nm; [41]) and even Cy5 (660 nm; [42]). Other companies are now following suit. For example, the Hugo robotic platform features a fully integrated KARL STORZ laparoscopic camera system, from a company that has proven its worth in (laparoscopic) fluorescence guidance with various fluorophores (e.g., ICG, fluorescein, Cy5, Methylene Blue, PpIX/5-ALA; [43]), and the Senhance robot claims to be an open platform, compatible with multiple laparoscopic fluorescence systems available on the market today. The possibility of imaging multiple fluorescent dyes with a single camera system opens the door to multispectral applications, in which multiple tracers visualize complementary tissue structures or processes during surgery [43].
As technical feasibility still accounts for the majority of multispectral fluorescence guidance efforts [12, 41, 44], this approach has yet to demonstrate patient value. Current studies in robotic surgery mainly focus on reducing patient complications by visualizing targeted and non-targeted lymphatics in two different colors (see Fig. 4) [45].

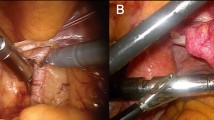

Fig. 4 Hybrid radio and fluorescence guidance in robotic surgery. A–B SPECT/CT slice and volume render showing the surgical target (i.e., sentinel lymph node) in anatomical context to plan the surgical procedure. C–D DROP-IN gamma probe in-depth guidance and fluorescence visual guidance (in green) toward the surgical target [16]. E–F Multispectral fluorescence guidance using complementary dyes (blue and yellow/green) might be used to distinguish between different tissue structures (e.g., different lymph drainage patterns) [44, 45].

While fluorescence camera systems are currently used mostly for ICG-based (lymph) angiography, the concept of bimodal, dual-modality, or hybrid tracers has connected this modality with radioguided surgery (see Fig. 4). Robotic sentinel node studies, performed with ICG-99mTc-nanocolloid since 2009, have demonstrated that integrating radio and fluorescence guidance provides a ‘best of both worlds’ scenario [38]: the in-depth detection capabilities of radioguided surgery are complemented by the real-time, high-resolution visualization of fluorescence imaging. Clinical evidence indicates that this hybrid approach improves the detection of tumor-positive lymph nodes, potentially lowering recurrence rates without compromising patient safety [46, 47], and also improves positive predictive value and reduces histopathology workload [48]. That said, relying on fluorescence guidance alone in these procedures was shown to greatly reduce successful intra-operative target localization (i.e., lesions were missed in about 50% of the patients), underlining that the combination with radioguidance is crucial to reach 100% localization [49]. Following these extensive validations during robotic prostate sentinel node procedures (N > 350; [46]), the hybrid concept was later also translated to robotic tumor-targeted procedures, with a proof-of-concept study in renal cancer (CAIX targeting with non-integrated fluorescence camera systems; [50]) and a case series in prostate cancer (PSMA targeting with integrated fluorescence camera systems; [51]).

Following the development of the DROP-IN and Click-On gamma probes, Click-On modalities have also been evaluated preclinically for fluorescence and hybrid (i.e., radio and fluorescence) detection. Uniquely, these concepts explore highly maneuverable, miniaturized detectors that can provide a continuous read-out of the presence of radio and/or fluorescence tracers [52, 53].

Augmentation of the surgical experience

At the center of the robotic platform stands the surgical cockpit, the control area where the surgeon receives all feedback from the robot and takes the appropriate surgical actions. This makes it an ideal platform for integrating additional image-guided surgery data streams [54]. In its simplest form, this entails displaying data next to the surgical video feed (i.e., a ‘split-screen’ visualization), such as DROP-IN gamma probe counts [25, 31], the video feed from a DROP-IN ultrasound probe [18] or a non-integrated fluorescence camera [44], or a 2D/3D render of the pre-operative patient scans [55], using, for example, the da Vinci TilePro function (see Fig. 5). To optimally visualize these data streams with respect to the surgical anatomy observed by the surgeon, initial steps have been taken to move from split-screen visualization to augmented reality (AR) visualization, in which the data are positioned directly as an overlay on top of the surgical feed. In such a setting, the AR render often consists of a scan-based organ segmentation that is manually aligned with the organ of interest by a dedicated person in the OR (e.g., primary tumor visualization in kidney and prostate cancer [56, 57]). Furthermore, by using a tool tracking method to determine the position and orientation (pose) of the instruments within the surgical view, even the video feed of a DROP-IN ultrasound probe could be integrated directly within the surgical view, displaying the ultrasound images as if they emerge from the DROP-IN instrument itself (Fig. 5; [58]).
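Overlaying data on a tracked instrument combines the estimated pose with a camera model. The sketch below assumes an ideal, distortion-free pinhole camera: a point defined in the instrument's own frame (for example, a corner of the ultrasound image plane) is first mapped into the camera frame with the tracked rotation R and translation t, then projected to pixel coordinates with focal lengths (fx, fy) and principal point (cx, cy). All numeric values are hypothetical; a real system would calibrate the intrinsics and lens distortion, and a stereo console would render one projection per eye.

```python
from typing import Sequence, Tuple

Vec3 = Sequence[float]
Matrix3 = Sequence[Sequence[float]]


def to_camera_frame(rotation: Matrix3, translation: Vec3, point: Vec3) -> Tuple[float, float, float]:
    """Apply the tracked instrument pose: p_cam = R @ p_instrument + t."""
    x, y, z = (
        sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
        for r in range(3)
    )
    return (x, y, z)


def project_pinhole(p_cam: Vec3, fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Ideal pinhole projection (no lens distortion) of a camera-frame point to pixels."""
    x, y, z = p_cam
    if z <= 0:
        raise ValueError("point lies behind the camera")
    return (fx * x / z + cx, fy * y / z + cy)


if __name__ == "__main__":
    identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    # Hypothetical pose: instrument origin 50 mm in front of the camera.
    corner = to_camera_frame(identity, (0.0, 0.0, 50.0), (5.0, 0.0, 0.0))
    print(project_pinhole(corner, fx=800.0, fy=800.0, cx=640.0, cy=360.0))
```

With an identity rotation, the instrument 50 mm in front of the camera, fx = fy = 800 px, and principal point (640, 360), the instrument-frame point (5, 0, 0) mm lands at pixel (720, 360).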

Fig. 5 Augmentation of the surgical experience. A Multiple data streams can be visualized next to the surgical view in the surgical console, such as a virtual model of the renal tumor, the video feed of the DROP-IN ultrasound, and a manual augmented reality overlay [56]. B Using a tool tracking method, it becomes possible to visualize the video feed of a DROP-IN ultrasound directly on top of the surgical view [58]. C–E Using pre- or intra-operative SPECT scans to navigate a fluorescence camera with augmented reality toward the targeted tissues during hybrid radioguided surgery [65]. F–H Navigation of a DROP-IN gamma probe toward tissue targets using a multispectral tool tracking method [67].

Integrating guidance data directly within the surgical video feed gradually moves us into the territory of surgical navigation, which uses ‘GPS’-like guidance to direct the surgeon within a map of the patient’s anatomy [59]. To accomplish this, three basic components are needed: (1) a map of the patient’s anatomy (often formed with pre- or intra-operative imaging); (2) a tracking system that continuously determines the position and orientation (pose) of the patient’s anatomy within the operating room, as well as those of the navigated surgical instruments; and (3) a computer system that uses this information to calculate and visualize the current distance to the surgical targets [60]. Within radioguided surgery, various such systems have been proposed, clinically evaluated, and, in some cases, even commercialized; all direct the surgeon toward surgical targets defined on PET- or SPECT-based imaging using either a stand-alone virtual reality (VR) visualization or an integrated AR visualization (e.g., in sentinel lymph node procedures, radioguided occult lesion localization procedures, or tumor-targeted procedures in sarcoma, parathyroid, neuroendocrine, and prostate cancer [61]). While none of these have yet been applied in humans during robotic surgery, a considerable number have been used during in-human laparoscopic surgery and in preclinical robotic applications. Unfortunately, mostly due to soft-tissue deformations, incorrect registration of the patient maps can quickly deprive the navigation workflow of its accuracy [59], a problem present in almost all laparoscopic applications. One method to compensate for such inaccuracies is to navigate an intra-operative detection modality itself, which allows the target location to be corrected once it comes within the detection range of the modality (e.g., a gamma probe [62] or fluorescence camera [63,64,65]; see Fig. 5).
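The three navigation components can be sketched in a few lines. In this hypothetical example, (1) the map is a target position in image (e.g., SPECT) coordinates, (2) the tracking system supplies a rigid registration from image to tracker coordinates plus the tracked tool-tip position, and (3) the computation transforms the target and reports the Euclidean distance. The rigid registration is an assumption, and it is exactly the assumption that soft-tissue deformation violates: any tissue shift after registration goes straight into the displayed distance without the software noticing.

```python
import math
from typing import Sequence, Tuple

Vec3 = Sequence[float]
Rotation = Sequence[Sequence[float]]


def apply_rigid(rotation: Rotation, translation: Vec3, point: Vec3) -> Tuple[float, ...]:
    """Map a point from image to tracker coordinates: p' = R @ p + t."""
    return tuple(
        sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
        for r in range(3)
    )


def distance_to_target_mm(
    target_image: Vec3,
    rotation: Rotation,
    translation: Vec3,
    tool_tip_tracker: Vec3,
) -> float:
    """(1) map: target in image coordinates; (2) tracking: registration and tool tip;
    (3) computation: Euclidean distance displayed to the surgeon."""
    target_tracker = apply_rigid(rotation, translation, target_image)
    return math.dist(target_tracker, tool_tip_tracker)


if __name__ == "__main__":
    identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    # Hypothetical values in mm: target 30 mm deep in the scan, scan offset 10 mm along x.
    d = distance_to_target_mm((0.0, 0.0, 30.0), identity, (10.0, 0.0, 0.0), (10.0, 0.0, 0.0))
    print(f"distance to target: {d:.1f} mm")
```

Here the displayed distance is 30 mm; if the tissue shifted by 5 mm after registration, this value could be off by up to 5 mm, which is why re-localizing the target with the navigated detection modality itself is attractive.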
In the preclinical robotic setting, navigation of radio- or fluorescence-guided modalities has shown great potential. Here, AR navigation of the robot-integrated Firefly fluorescence camera might compensate for its superficial detection depth by allowing navigation toward deeper-lying targets not immediately detectable with fluorescence [64]. Furthermore, tracking of the DROP-IN gamma probe might simplify the target localization process through AR cues on the probe viewing direction [66] or even AR navigation toward the location of preoperatively defined targets (see Fig. 5; [67]).
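An AR cue on the probe viewing direction essentially reports how far the probe's axis points away from the target. A minimal sketch, assuming a tracked probe tip and viewing axis and a target expressed in the same coordinate frame; the 15° acceptance half-angle is a hypothetical threshold, not a property of any specific DROP-IN probe.

```python
import math
from typing import Sequence, Tuple

Vec3 = Sequence[float]


def _unit(v: Vec3) -> Tuple[float, ...]:
    """Normalize a 3D vector."""
    n = math.sqrt(sum(c * c for c in v))
    if n == 0:
        raise ValueError("zero-length vector")
    return tuple(c / n for c in v)


def pointing_error_deg(probe_tip: Vec3, probe_axis: Vec3, target: Vec3) -> float:
    """Angle between the probe's viewing axis and the line from its tip to the target."""
    to_target = _unit([t - p for t, p in zip(target, probe_tip)])
    axis = _unit(probe_axis)
    cos_angle = max(-1.0, min(1.0, sum(a * b for a, b in zip(axis, to_target))))
    return math.degrees(math.acos(cos_angle))


def is_on_target(probe_tip: Vec3, probe_axis: Vec3, target: Vec3,
                 acceptance_half_angle_deg: float = 15.0) -> bool:
    """Hypothetical AR cue: True when the target lies inside the assumed acceptance cone."""
    return pointing_error_deg(probe_tip, probe_axis, target) <= acceptance_half_angle_deg


if __name__ == "__main__":
    tip, axis = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)
    for target in [(1.0, 0.0, 10.0), (10.0, 0.0, 10.0)]:
        err = pointing_error_deg(tip, axis, target)
        print(f"target {target}: {err:.1f} deg off-axis, on target: {is_on_target(tip, axis, target)}")
```

An AR cue could, for instance, render an arrow whose length scales with this angle and switch color once the target enters the acceptance cone.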

Another method to compensate for deformation-based inaccuracies could be the inclusion of an intra-operative patient scan (i.e., allowing for an update of the patient navigation map). Combining the tool tracking systems used for navigation with the read-out of an intra-operative detection modality (e.g., gamma probe, beta probe, or fluorescence probe) allows a small field-of-view patient scan to be generated. Within radioguided surgery, this has led to freehand SPECT imaging and navigation systems that can generate a new intra-operative scan within 5 min [68]. Preclinically, steps are being made toward other modalities (i.e., freehand beta [69, 70], freehand fluorescence [71], and freehand magnetic particle imaging [72]), as well as toward the first robotic freehand scans using a prototype DROP-IN gamma probe [73]. Although technically still challenging, this paves the way toward robotic intra-abdominal 3D patient scans.
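The principle of a freehand scan, combining tracked detector poses with count read-outs, can be sketched in 2D. The example below is a deliberately simplified stand-in, not the reconstruction used by clinical freehand SPECT systems (which rely on more elaborate, typically iterative, methods). It uses a hypothetical detector response (a cos⁴ angular falloff times inverse-square distance), simulates noise-free counts from a single point source for a hypothetical sweep of probe poses, and then applies a normalized backprojection, score_j = Σ_i y_i·w_ij / ‖w_j‖. By the Cauchy–Schwarz inequality, this score peaks at the source voxel for noise-free single-source data, provided no other voxel has a response vector proportional to that of the source, which holds for this sweep.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Pose = Tuple[Point, Point]  # (detector position, viewing direction), in cm

# Hypothetical 6 x 6 cm field of view sampled at 1 cm voxel centres.
GRID: List[Point] = [(float(x), float(y)) for x in range(6) for y in range(6)]


def sensitivity(pose: Pose, voxel: Point, sharpness: int = 4) -> float:
    """Hypothetical detector response to unit activity at `voxel`:
    cos^k falloff around the viewing axis times inverse-square distance."""
    (px, py), (dx, dy) = pose
    vx, vy = voxel[0] - px, voxel[1] - py
    r2 = vx * vx + vy * vy
    if r2 == 0:
        raise ValueError("voxel coincides with the detector position")
    cos_theta = (vx * dx + vy * dy) / (math.sqrt(r2) * math.hypot(dx, dy))
    return max(0.0, cos_theta) ** sharpness / r2


def example_sweep() -> List[Pose]:
    """Hypothetical freehand sweep: four probe positions around the field,
    each with one view aimed at the centre and four diagonal views."""
    cx, cy = 2.5, 2.5
    poses: List[Pose] = []
    for px, py in [(-3.0, 2.5), (8.0, 2.5), (2.5, -3.0), (2.5, 8.0)]:
        for direction in [(cx - px, cy - py), (1.0, 1.0), (1.0, -1.0), (-1.0, 1.0), (-1.0, -1.0)]:
            poses.append(((px, py), direction))
    return poses


def simulate_counts(poses: Sequence[Pose], source: Point, activity: float) -> List[float]:
    """Noise-free read-outs of a single point source, one per tracked pose."""
    return [activity * sensitivity(pose, source) for pose in poses]


def normalized_backprojection(
    poses: Sequence[Pose], counts: Sequence[float], grid: Sequence[Point]
) -> List[float]:
    """score_j = sum_i y_i * w_ij / ||w_j||  (0 for voxels no pose can see)."""
    scores = []
    for voxel in grid:
        weights = [sensitivity(pose, voxel) for pose in poses]
        norm = math.sqrt(sum(w * w for w in weights))
        score = sum(y * w for y, w in zip(counts, weights)) / norm if norm > 0 else 0.0
        scores.append(score)
    return scores


if __name__ == "__main__":
    poses = example_sweep()
    counts = simulate_counts(poses, source=(3.0, 2.0), activity=1000.0)
    scores = normalized_backprojection(poses, counts, GRID)
    hottest = max(range(len(GRID)), key=scores.__getitem__)
    print("hottest voxel (cm):", GRID[hottest])
```

With real, noisy count data and multiple sources, this simple scoring would blur and bias the result, which is one reason practical freehand systems use iterative reconstruction instead.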

Future outlook

As we have seen with technological advances such as laparoscopic and robotic surgery, full integration of image-guided surgery into robotic platforms seems only a matter of time. Radioguidance could play a paramount role in this, given its unique (in-depth) detection possibilities and the plurality of tracers available. With the introduction of the DROP-IN gamma probe, the field of urology has once again been at the forefront, showing the success of robotic radioguided surgery in both sentinel node and tumor-targeted (i.e., PSMA-targeted) procedures. However, considering the vast number of radiotracers that have been used in radioguided clinical trials, we have likely seen only the tip of the robotic radioguided surgery iceberg [13].

With the concept of DROP-IN detectors successfully introduced in ultrasound-guided procedures and translated to radioguided surgery (both gamma and beta detection), we will likely see an expansion to even more detection techniques, which could fulfill an alternative or complementary role (e.g., Raman spectrometry [74], mass spectrometry [75], and electrical bio-impedance [76]). Although technically challenging, the quantified improvement in surgical logistics of Click-On probe designs over DROP-IN designs [24] suggests that further miniaturization and integration will improve robotic image-guided surgery procedures even further. As seen with the Click-On gamma and fluorescence probes, such detectors can remain attached during the procedure while still allowing the surgical instruments to perform their grasping functions [24, 52], promoting more frequent use of the modalities. Furthermore, since the surgeon already works from behind a console, surgical robots seem an ideal platform for fully integrating the feedback delivered by image-guidance modalities using mixed-reality visualizations (i.e., AR and VR), or even navigated workflows [54].

Taking all this into account, robotic platforms should soon support not only great surgical dexterity but also great surgical decision making. By making robotic platforms increasingly intelligent in this way, first steps may even be taken toward fully autonomous surgical systems.

Conclusion

Surgical robotic platforms are here to stay. Robotic radioguidance procedures pose specific challenges, but at the same time create significant growth potential for the fields of image-guided surgery and interventional nuclear medicine. First steps have been made toward the full integration of radioguidance and hybrid radio/fluorescence guidance, providing the operating surgeon with highly maneuverable sensing, augmented visualizations, and improved surgical abilities.

References

Alkatout I, Mechler U, Mettler L et al (2021) The development of laparoscopy—a historical overview. Front Surg. https://doi.org/10.3389/fsurg.2021.799442

George EI, Brand CTC, Marescaux J (2018) Origins of robotic surgery: from skepticism to standard of care. JSLS J Soc Laparoendosc Surg 22:4

Cheah W, Lee B, Lenzi J et al (2000) Telesurgical laparoscopic cholecystectomy between two countries. Surg Endosc 14(11):1085–1085. https://doi.org/10.1007/s004649900788

Yates DR, Vaessen C, Roupret M (2011) From Leonardo to da Vinci: the history of robot-assisted surgery in urology. BJU Int 108(11):1708–1713. https://doi.org/10.1111/j.1464-410X.2011.10576.x

Intuitive (2023) Intuitive announces fourth quarter earnings. Available from: https://isrg.intuitive.com/news-releases/news-release-details/intuitive-announces-fourth-quarter-earnings-2

Intuitive (2021) Intuitive reaches 10 million procedures performed using da Vinci Surgical Systems. Available from: https://isrg.intuitive.com/news-releases/news-release-details/intuitive-reaches-10-million-procedures-performed-using-da-vinci/

Koukourikis P, Rha KH (2021) Robotic surgical systems in urology: what is currently available? Investig Clin Urol 62:1

Montorsi F, Wilson TG, Rosen RC et al (2012) Best practices in robot-assisted radical prostatectomy: recommendations of the Pasadena Consensus Panel. Eur Urol 62(3):368–381. https://doi.org/10.1016/j.eururo.2012.05.057

Sheetz KH, Claflin J, Dimick JB (2020) Trends in the adoption of robotic surgery for common surgical procedures. JAMA Netw Open 3(1):e1918911–e1918911. https://doi.org/10.1001/jamanetworkopen.2019.18911

Tamaki A, Rocco JW, Ozer E (2020) The future of robotic surgery in otolaryngology–head and neck surgery. Oral Oncol. https://doi.org/10.1016/j.oraloncology.2019.104510

Stoffels I, Dissemond J, Pöppel T et al (2015) Intraoperative fluorescence imaging for sentinel lymph node detection: prospective clinical trial to compare the usefulness of indocyanine green vs technetium Tc 99m for identification of sentinel lymph nodes. JAMA Surg 150(7):617–623. https://doi.org/10.1001/jamasurg.2014.3502

Meershoek P, KleinJan GH, van Willigen DM et al (2021) Multi-wavelength fluorescence imaging with a da Vinci Firefly—a technical look behind the scenes. J Robot Surg 15:751–760. https://doi.org/10.1007/s11701-020-01170-8

Van Oosterom MN, Rietbergen DD, Welling MM et al (2019) Recent advances in nuclear and hybrid detection modalities for image-guided surgery. Expert Rev Med Devices 16(8):711–734. https://doi.org/10.1080/17434440.2019.1642104

Heller S, Zanzonico P (2011) Nuclear probes and intraoperative gamma cameras. Semin Nucl Med. https://doi.org/10.1053/j.semnuclmed.2010.12.004

Povoski SP, Neff RL, Mojzisik CM et al (2009) A comprehensive overview of radioguided surgery using gamma detection probe technology. World J Surg Oncol 7:1–63. https://doi.org/10.1186/1477-7819-7-11

Dell’Oglio P, Meershoek P, Maurer T et al (2021) A DROP-IN gamma probe for robot-assisted radioguided surgery of lymph nodes during radical prostatectomy. Eur Urol 79(1):124–132. https://doi.org/10.1016/j.eururo.2020.10.031

Acar C, Kleinjan GH, van den Berg NS et al (2015) Advances in sentinel node dissection in prostate cancer from a technical perspective. Int J Urol 22(10):898–909. https://doi.org/10.1111/iju.12863

Alenezi AN, Karim O (2015) Role of intra-operative contrast-enhanced ultrasound (CEUS) in robotic-assisted nephron-sparing surgery. J Robot Surg 9:1–10. https://doi.org/10.1007/s11701-015-0496-1

van Oosterom MN, Simon H, Mengus L et al (2016) Revolutionizing (robot-assisted) laparoscopic gamma tracing using a drop-in gamma probe technology. Am J Nucl Med Mol Imag 6(1):1

Kitagawa Y, Kitajima M (2002) Gastrointestinal cancer and sentinel node navigation surgery. J Surg Oncol 79(3):188–193. https://doi.org/10.1002/jso.10065

Kitagawa Y, Kitano S, Kubota T et al (2005) Minimally invasive surgery for gastric cancer—toward a confluence of two major streams: a review. Gastric Cancer 8:103–110. https://doi.org/10.1007/s10120-005-0326-7

Vermeeren L, Valdés Olmos R, Meinhardt W et al (2009) Intraoperative radioguidance with a portable gamma camera: a novel technique for laparoscopic sentinel node localisation in urological malignancies. Eur J Nucl Med Mol Imaging 36:1029–1036

Meershoek P, van Oosterom MN, Simon H et al (2019) Robot-assisted laparoscopic surgery using DROP-IN radioguidance: first-in-human translation. Eur J Nucl Med Mol Imaging 46:49–53. https://doi.org/10.1007/s00259-018-4095-z

Azargoshasb S, van Alphen S, Slof LJ et al (2021) The Click-On gamma probe, a second-generation tethered robotic gamma probe that improves dexterity and surgical decision-making. Eur J Nucl Med Mol Imaging 48:4142–4151. https://doi.org/10.1007/s00259-021-05387-z

de Barros HA, van Oosterom MN, Donswijk ML et al (2022) Robot-assisted prostate-specific membrane antigen–radioguided salvage surgery in recurrent prostate cancer using a DROP-IN gamma probe: The first prospective feasibility study. Eur Urol 82(1):97–105. https://doi.org/10.1016/j.eururo.2022.03.002

van Leeuwen FW, van Oosterom MN, Meershoek P et al (2019) Minimal-invasive robot-assisted image-guided resection of prostate-specific membrane antigen–positive lymph nodes in recurrent prostate cancer. Clin Nucl Med 44(7):580–581. https://doi.org/10.1097/RLU.0000000000002600

Gondoputro W, Scheltema MJ, Blazevski A et al (2022) Robot-Assisted Prostate-Specific Membrane Antigen-Radioguided Surgery in Primary Diagnosed Prostate Cancer. J Nucl Med 63(11):1659–1664. https://doi.org/10.2967/jnumed.121.263743

Vidal-Sicart S, Diaz-Feijoo B, Glickman A et al (2022) Evaluation of a drop-in gamma probe during sentinel lymph node dissection in gynaecological malignancies: comparing use with ridged and steerable laparoscopic instruments. Eur J Nucl Med Mol Imaging

Gandaglia G, Mazzone E, Stabile A et al (2022) Prostate-specific membrane antigen radioguided surgery to detect nodal metastases in primary prostate cancer patients undergoing robot-assisted radical prostatectomy and extended pelvic lymph node dissection: Results of a planned interim analysis of a prospective phase 2 study. Eur Urol 82(4):411–418. https://doi.org/10.1016/j.eururo.2022.06.002

Falkenbach F, Budaeus L, Graefen M et al (2022) Salvage robot-assisted PSMA-radioguided surgery in recurrent prostate cancer using a novel DROP-IN gamma probe. Eur J Nucl Med Mol Imaging

Baeten IG, Hoogendam JP, Braat AJ et al (2022) Feasibility of a drop-in γ-probe for radioguided sentinel lymph detection in early-stage cervical cancer. EJNMMI Res 12(1):36. https://doi.org/10.1186/s13550-022-00907-w

Junquera JMA, Harke NN, Walz JC et al (2023) A drop-in gamma probe for minimally invasive sentinel lymph node dissection in prostate cancer: preclinical evaluation and interim results from a multicenter clinical trial. Clin Nucl Med 48(3):213–220. https://doi.org/10.1097/RLU.0000000000004557

Junquera JMA, Mestre-Fusco A, Grootendorst MR et al (2022) Sentinel lymph node biopsy in prostate cancer using the SENSEI® drop-in gamma probe. Clin Nucl Med 47(1):86–87. https://doi.org/10.1097/RLU.0000000000003830

Collamati F, van Oosterom MN, Hadaschik BA et al (2021) Beta radioguided surgery: towards routine implementation? Q J Nucl Med Mol Imaging 65(3):229–243

Camillocci ES, Baroni G, Bellini F et al (2014) A novel radioguided surgery technique exploiting β−decays. Sci Rep 4(1):4401. https://doi.org/10.1038/srep04401

Collamati F, van Oosterom MN, De Simoni M et al (2020) A DROP-IN beta probe for robot-assisted 68Ga-PSMA radioguided surgery: first ex vivo technology evaluation using prostate cancer specimens. EJNMMI Res 10(1):1–10. https://doi.org/10.1186/s13550-020-00682-6

Collamati F, Florit A, Bizzarri N et al (2022) Radioguided surgery with direct beta detection: a feasibility study in cervical cancer with 18F-FDG. Eur J Nucl Med Mol Imaging

van der Poel HG, Buckle T, Brouwer OR et al (2011) Intraoperative laparoscopic fluorescence guidance to the sentinel lymph node in prostate cancer patients: clinical proof of concept of an integrated functional imaging approach using a multimodal tracer. Eur Urol 60(4):826–833. https://doi.org/10.1016/j.eururo.2011.03.024

Esposito C, Settimi A, Del Conte F et al (2020) Image-guided pediatric surgery using indocyanine green (ICG) fluorescence in laparoscopic and robotic surgery. Front Pediatr 8:314. https://doi.org/10.3389/fped.2020.00314

Lee Y-J, van den Berg NS, Orosco RK et al (2021) A narrative review of fluorescence imaging in robotic-assisted surgery. Lapar Surg 5:23

Meershoek P, KleinJan GH, van Oosterom MN et al (2018) Multispectral-fluorescence imaging as a tool to separate healthy from disease-related lymphatic anatomy during robot-assisted laparoscopy. J Nucl Med 59(11):1757–1760. https://doi.org/10.2967/jnumed.118.211888

Schottelius M, Wurzer A, Wissmiller K et al (2019) Synthesis and preclinical characterization of the PSMA-targeted hybrid tracer PSMA-I&F for nuclear and fluorescence imaging of prostate cancer. J Nucl Med 60(1):71–78. https://doi.org/10.2967/jnumed.118.212720

van Beurden F, van Willigen DM, Vojnovic B et al (2020) Multi-wavelength fluorescence in image-guided surgery, clinical feasibility and future perspectives. Mol Imaging 19:1536012120962333. https://doi.org/10.1177/1536012120962333

van den Berg NS, Buckle T, KleinJan GH et al (2017) Multispectral fluorescence imaging during robot-assisted laparoscopic sentinel node biopsy: a first step towards a fluorescence-based anatomic roadmap. Eur Urol 72(1):110–117. https://doi.org/10.1016/j.eururo.2016.06.012

Berrens A-C, van Oosterom MN, Slof LJ et al (2023) Three-way multiplexing in prostate cancer patients—combining a bimodal sentinel node tracer with multicolor fluorescence imaging. Eur J Nucl Med Mol Imaging 50(4):1262–1263. https://doi.org/10.1007/s00259-022-06034-x

Mazzone E, Dell’Oglio P, Grivas N et al (2021) Diagnostic value, oncologic outcomes, and safety profile of image-guided surgery technologies during robot-assisted lymph node dissection with sentinel node biopsy for prostate cancer. J Nucl Med 62(10):1363–1371. https://doi.org/10.2967/jnumed.120.259788

Wit EM, Acar C, Grivas N et al (2017) Sentinel node procedure in prostate cancer: a systematic review to assess diagnostic accuracy. Eur Urol 71(4):596–605. https://doi.org/10.1016/j.eururo.2016.09.007

Wit EM et al. A hybrid radioactive and fluorescence approach is more than the sum of its parts; outcome of a phase II randomized sentinel node trial in prostate cancer patients. Eur J Nucl Med Mol Imaging (accepted)

Meershoek P, Buckle T, van Oosterom MN et al (2020) Can intraoperative fluorescence imaging identify all lesions while the road map created by preoperative nuclear imaging is masked? J Nucl Med 61(6):834–841. https://doi.org/10.2967/jnumed.119.235234

Hekman MC, Rijpkema M, Muselaers CH et al (2018) Tumor-targeted dual-modality imaging to improve intraoperative visualization of clear cell renal cell carcinoma: a first in man study. Theranostics 8(8):2161. https://doi.org/10.7150/thno.23335

Eder A-C, Omrane MA, Stadlbauer S et al (2021) The PSMA-11-derived hybrid molecule PSMA-914 specifically identifies prostate cancer by preoperative PET/CT and intraoperative fluorescence imaging. Eur J Nucl Med Mol Imaging 48:2057–2058. https://doi.org/10.1007/s00259-020-05184-0

van Oosterom MN, van Leeuwen SI, Mazzone E et al (2023) Click-on fluorescence detectors: using robotic surgical instruments to characterize molecular tissue aspects. J Robot Surg 17(1):131–140. https://doi.org/10.1007/s11701-022-01382-0

van Oosterom MN, Slof LJ, van Leeuwen SI et al (2023) Steerable detector for robot-assisted surgery: a hybrid modality for simultaneous radio- and fluorescence-guidance. European Molecular Imaging Meeting, Salzburg, Austria

Wendler T, van Leeuwen FW, Navab N et al (2021) How molecular imaging will enable robotic precision surgery: the role of artificial intelligence, augmented reality, and navigation. Eur J Nucl Med Mol Imaging 48(13):4201–4224. https://doi.org/10.1007/s00259-021-05445-6

Hughes-Hallett A, Pratt P, Mayer E et al (2014) Image guidance for all—TilePro display of 3-dimensionally reconstructed images in robotic partial nephrectomy. Urology 84(1):237–243. https://doi.org/10.1016/j.urology.2014.02.051

Buffi NM, Saita A, Lughezzani G et al (2020) Robot-assisted partial nephrectomy for complex (PADUA score ≥ 10) tumors: techniques and results from a multicenter experience at four high-volume centers. Eur Urol 77(1):95–100. https://doi.org/10.1016/j.eururo.2019.03.006

Porpiglia F, Checcucci E, Amparore D et al (2019) Three-dimensional elastic augmented-reality robot-assisted radical prostatectomy using hyperaccuracy three-dimensional reconstruction technology: a step further in the identification of capsular involvement. Eur Urol 76(4):505–514. https://doi.org/10.1016/j.eururo.2019.03.037

Hughes-Hallett A, Pratt P, Mayer E et al (2013) Intraoperative ultrasound overlay in robot-assisted partial nephrectomy: first clinical experience. Eur Urol. https://doi.org/10.1016/j.eururo.2013.11.001

van Oosterom MN, van der Poel HG, Navab N et al (2018) Computer-assisted surgery: virtual-and augmented-reality displays for navigation during urological interventions. Curr Opin Urol 28(2):205–213. https://doi.org/10.1097/MOU.0000000000000478

Waelkens P, van Oosterom MN, van den Berg NS et al (2016) Surgical navigation: an overview of the state-of-the-art clinical applications. Radio Surg Curr Appl Inno Direct Clin Pract. https://doi.org/10.1007/978-3-319-26051-8_4

Boekestijn I, Azargoshasb S, Schilling C et al (2021) PET- and SPECT-based navigation strategies to advance procedural accuracy in interventional radiology and image-guided surgery. Q J Nucl Med Mol Imaging 65(3):244–260

Brouwer OR, van den Berg NS, Mathéron HM et al (2014) Feasibility of intraoperative navigation to the sentinel node in the groin using preoperatively acquired single photon emission computerized tomography data: transferring functional imaging to the operating room. J Urol 192(6):1810–1816. https://doi.org/10.1016/j.juro.2014.03.127

KleinJan GH, van den Berg NS, van Oosterom MN et al (2016) Toward (hybrid) navigation of a fluorescence camera in an open surgery setting. J Nucl Med 57(10):1650–1653. https://doi.org/10.2967/jnumed.115.171645

van Oosterom MN, Engelen MA, van den Berg NS et al (2016) Navigation of a robot-integrated fluorescence laparoscope in preoperative SPECT/CT and intraoperative freehand SPECT imaging data: a phantom study. J Biomed Opt 21(8):086008–086008. https://doi.org/10.1117/1.JBO.21.8.086008

van Oosterom MN, Meershoek P, KleinJan GH et al (2018) Navigation of fluorescence cameras during soft tissue surgery—is it possible to use a single navigation setup for various open and laparoscopic urological surgery applications? J Urol 199(4):1061–1068. https://doi.org/10.1016/j.juro.2017.09.160

Huang B, Tsai Y-Y, Cartucho J et al (2020) Tracking and visualization of the sensing area for a tethered laparoscopic gamma probe. Int J Comput Assist Radiol Surg 15(8):1389–1397. https://doi.org/10.1007/s11548-020-02205-z

Azargoshasb S, Houwing KH, Roos PR et al (2021) Optical Navigation of the Drop-In γ-Probe as a Means to Strengthen the Connection Between Robot-Assisted and Radioguided Surgery. J Nucl Med 62(9):1314–1317. https://doi.org/10.2967/jnumed.120.259796

Wendler T, Herrmann K, Schnelzer A et al (2010) First demonstration of 3-D lymphatic mapping in breast cancer using freehand SPECT. Eur J Nucl Med Mol Imaging 37:1452–1461. https://doi.org/10.1007/s00259-010-1430-4

Monge F, Shakir DI, Lejeune F et al (2017) Acquisition models in intraoperative positron surface imaging. Int J Comput Assist Radiol Surg 12:691–703. https://doi.org/10.1007/s11548-016-1487-z

Wendler T, Traub J, Ziegler SI et al (2006) Navigated three dimensional beta probe for optimal cancer resection. In: Medical Image Computing and Computer-Assisted Intervention–MICCAI 2006, Copenhagen, Denmark, October 1–6, 2006, Proceedings, Part I. Springer. https://doi.org/10.1007/11866565_69

van Oosterom MN, Meershoek P, Welling MM et al (2019) Extending the hybrid surgical guidance concept with freehand fluorescence tomography. IEEE Trans Med Imaging 39(1):226–235. https://doi.org/10.1109/TMI.2019.2924254

Azargoshasb S, Molenaar L, Rosiello G et al (2022) Advancing intraoperative magnetic tracing using 3D freehand magnetic particle imaging. Int J Comput Assist Radiol Surg 17:211–218. https://doi.org/10.1007/s11548-021-02458-2

Fuerst B, Sprung J, Pinto F et al (2015) First robotic SPECT for minimally invasive sentinel lymph node mapping. IEEE Trans Med Imaging 35(3):830–838. https://doi.org/10.1109/TMI.2015.2498125

Pinto M, Zorn KC, Tremblay J-P et al (2019) Integration of a Raman spectroscopy system to a robotic-assisted surgical system for real-time tissue characterization during radical prostatectomy procedures. J Biomed Opt 24(2):025001–025001. https://doi.org/10.1117/1.JBO.24.2.025001

Keating MF, Zhang J, Feider CL et al (2020) Integrating the MasSpec Pen to the da Vinci surgical system for in vivo tissue analysis during a robotic assisted porcine surgery. Anal Chem 92(17):11535–11542. https://doi.org/10.1021/acs.analchem.0c02037

Zhu G, Zhou L, Wang S et al (2020) Design of a drop-in ebi sensor probe for abnormal tissue detection in minimally invasive surgery. J Elect Bioimp 11(1):87–95. https://doi.org/10.2478/joeb-2020-0013

Funding

This review was supported by a grant from the Dutch research funding organization ‘Nederlandse Organisatie voor Wetenschappelijk Onderzoek’ (NWO; TTW-VICI grant number: TTW 16141).

Ethics declarations

Conflict of interest

MvO and FvL have patents on Click-On detectors and surgical tracking concepts. There is no further conflict of interest to disclose.

Ethical approval

This article does not contain any novel clinical studies with human participants or animals. As such, ethical approval was covered by the original studies.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

van Oosterom, M.N., Azargoshasb, S., Slof, L.J. et al. Robotic radioguided surgery: toward full integration of radio- and hybrid-detection modalities. Clin Transl Imaging 11, 533–544 (2023). https://doi.org/10.1007/s40336-023-00560-w

DOI: https://doi.org/10.1007/s40336-023-00560-w