Abstract

This paper considers a bilevel multiobjective programming problem in which the lower level is a convex parametric multiobjective program. Using the KKT optimality conditions of the lower level problem, we transform this problem into an equivalent one-level nonsmooth multiobjective optimization problem. We then introduce a sequence of smooth multiobjective problems that progressively approximate the nonsmooth multiobjective problem. We show that the Pareto optimal solutions (stationary points) of the approximate problems converge to a Pareto optimal solution (stationary point) of the original bilevel multiobjective programming problem. Numerical results showing the viability of the smoothing approach are reported.



1 Introduction

Bilevel programming, which is characterized by the existence of two optimization problems in which the constraint region of the first-level problem is implicitly determined by another optimization problem, has been increasingly addressed in the literature, from both the theoretical and the computational points of view (see the monographs of Dempe [7] and Bard [2] and the bibliographic reviews by Vicente and Calamai [22], Dempe [8], and Colson et al. [4]). In the last two decades, many papers on bilevel optimization have been published; however, few of them deal with bilevel multiobjective programming problems [24].

Shi and Xia [19, 20] presented an interactive algorithm, based on the concepts of satisfactoriness and direction vectors, for nonlinear bilevel multiobjective problems. Abo-Sinna [1] and Osman et al. [18] presented approaches based on fuzzy set theory for solving bilevel and multilevel multiobjective problems, and Teng et al. [21] and Deb and Sinha [5, 6] proposed evolutionary algorithms for certain bilevel multiobjective programming problems. In addition, Bonnel and Morgan [3] and Zheng and Wan [25] considered the so-called semivectorial bilevel optimization problem and proposed solution methods based on a penalty approach. A recent study by Eichfelder [12] suggested a refinement-based strategy in which the algorithm starts with a uniformly distributed set of points for the upper level variable. The difficulty with such a technique is that, if the upper level variable is high-dimensional, generating uniformly spread upper level points and refining them is computationally expensive.

In this paper, inspired by the smoothing method for MPEC problems given in [13], we present a new method for solving a class of bilevel multiobjective programs and study its convergence properties. We also develop necessary optimality conditions that are directly relevant to the algorithm. Our strategy can be outlined as follows. Using the weighted sum scalarization approach and the KKT optimality conditions, we reformulate a class of bilevel multiobjective programs as a one-level nonsmoothly constrained multiobjective optimization problem. Then, to avoid the difficulty caused by the nonsmooth constraints, we solve a sequence of smooth multiobjective programming problems that progressively approximate the nonsmooth multiobjective program. We prove that every accumulation point of the sequence of points so generated is a solution of the bilevel multiobjective program. Finally, we give some numerical examples to illustrate the algorithm. The results obtained here can also be generalized to bilevel multiobjective programming problems in which the upper level is a multiobjective optimization problem and the lower level is a scalar optimization problem.

The remainder of the paper is organized as follows. In the next section, we give the mathematical model of bilevel multiobjective programs and present some assumptions under which these programs can be reformulated as an equivalent one-level nonsmoothly constrained multiobjective optimization problem. In Sect. 3, we introduce a smoothing approach by which the nonsmooth multiobjective program is approximated by a sequence of smooth multiobjective optimization problems. Optimality conditions, which play an important role in designing the algorithm, are studied in Sect. 4. In Sect. 5, we propose the algorithm and report numerical results. Finally, we conclude the paper with some remarks.

2 Problem Formulation and Preliminaries

We consider the following bilevel multiobjective programming problem:

where \(S(x)\) denotes the efficiency set of solutions to the lower level problem:

and \(x\in {{\mathbb{R}}^{n}},y\in {{\mathbb{R}}^{m}}\), and \(F:{{\mathbb{R}}^{n+m}}\rightarrow {{\mathbb{R}}^{p}},f:{{\mathbb{R}}^{n+m}}\rightarrow {{\mathbb{R}}^{q}},g:{{\mathbb{R}}^{n+m}}\rightarrow {{\mathbb{R}}^{l}}\) are continuously differentiable functions.

Definition 2.1

A point \((x,y)\) is feasible for problem (2.1) if \(y\in S(x)\) and \(g(x,y)\leqslant 0\); a feasible point \(({x^{*}},{y^{*}})\) is a local Pareto optimal solution to problem (2.1) if there exists no other feasible point \((x,y)\) in a neighborhood of \(({x^{*}},{y^{*}})\) such that \(F(x,y)\leqslant F({x^{*}},{y^{*}})\) and \(F(x,y)\ne F(x^{*},y^{*})\).
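Definition 2.1 relies on the componentwise (Pareto) order on upper level objective vectors. The minimal Python sketch below, with function names of our own choosing, tests that relation and filters a finite list of objective vectors of feasible points down to its nondominated members. It ignores the neighborhood restriction, so it illustrates the dominance test rather than local optimality.

```python
from typing import List, Sequence


def dominates(fa: Sequence[float], fb: Sequence[float]) -> bool:
    """True if fa <= fb componentwise and fa != fb (minimization), as in Definition 2.1."""
    return (all(a <= b for a, b in zip(fa, fb))
            and any(a < b for a, b in zip(fa, fb)))


def nondominated(points: List[Sequence[float]]) -> List[Sequence[float]]:
    """Keep the objective vectors that no other listed vector dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


pts = [(1.0, 4.0), (2.0, 2.0), (3.0, 3.0), (4.0, 1.0)]
print(nondominated(pts))  # [(1.0, 4.0), (2.0, 2.0), (4.0, 1.0)]
```

Here \((3.0,3.0)\) is discarded because \((2.0,2.0)\) dominates it; the other three vectors are mutually nondominated.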

Remark 2.2

Note that in problem (2.1) the upper level objective function is minimized with respect to both \(x\) and \(y\); that is, in this work we adopt the optimistic approach to the bilevel multiobjective programming problem [12].

Let \(S=\{(x,y)|g(x,y)\leqslant 0\}\) denote the constraint region of problem (2.1), \(\bar{S}=\{y\in {{\mathbb{R}}^{m}}|g(x,y)\leqslant 0\}\) the feasible set of the lower level problem for a given \(x\), and \(\Pi _{x}=\{x\in {{\mathbb{R}}^{n}}|\exists y,g(x,y)\leqslant 0\}\) the projection of \(S\) onto the leader’s decision space. We denote by \(I(x,y)\) the set of active constraints, i.e., \(I(x,y)=\{i\in \{1,\cdots ,l\}\,|\,g_{i}(x,y)=0\}\).

For problem (2.1), we make the following assumptions:

- \((A_{1})\): The constraint region \(S\) is nonempty and compact.

- \((A_{2})\): At each \(x\in {\Pi _{x}}\) and \(y\in \bar{S}\), the lower level problem \(({P_{x}})\) is a convex parametric multiobjective program, and the partial gradients \(\nabla _{y}g_{i}(x,y)\), \(i\in I(x,y)\), are linearly independent.
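Both the active index set \(I(x,y)\) and the linear independence requirement in \((A_{2})\) can be checked numerically at a given point. The sketch below is a hypothetical illustration: the toy constraint \(g(x,y)=(y_{1}+y_{2}-x,-y_{1},-y_{2})\), the tolerance, and all function names are our assumptions, and the rank is computed by plain Gaussian elimination to keep the example dependency-free.

```python
from typing import List, Sequence


def active_set(g_vals: Sequence[float], tol: float = 1e-8) -> List[int]:
    """Indices i with g_i(x, y) = 0 up to tol, i.e. the active set I(x, y)."""
    return [i for i, gi in enumerate(g_vals) if abs(gi) <= tol]


def rank(rows: List[Sequence[float]], tol: float = 1e-10) -> int:
    """Row rank via Gaussian elimination with partial pivoting."""
    a = [list(map(float, r)) for r in rows]
    r = 0
    ncols = len(a[0]) if a else 0
    for c in range(ncols):
        if r == len(a):
            break
        piv = max(range(r, len(a)), key=lambda i: abs(a[i][c]))
        if abs(a[piv][c]) <= tol:
            continue  # no usable pivot in this column
        a[r], a[piv] = a[piv], a[r]
        for i in range(r + 1, len(a)):
            f = a[i][c] / a[r][c]
            a[i] = [x - f * y for x, y in zip(a[i], a[r])]
        r += 1
    return r


def lower_level_licq(g_vals: Sequence[float], grad_y_g: Sequence[Sequence[float]],
                     tol: float = 1e-8) -> bool:
    """(A2)-type check: the gradients grad_y g_i, i in I(x, y), are linearly independent."""
    rows = [grad_y_g[i] for i in active_set(g_vals, tol)]
    return rank(rows) == len(rows)


# Toy data: g(x, y) = (y1 + y2 - x, -y1, -y2), so grad_y g is constant.
grad_y_g = [[1.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
print(lower_level_licq([0.0, 0.0, -1.0], grad_y_g))  # x = 1, y = (0, 1): True
print(lower_level_licq([0.0, 0.0, 0.0], grad_y_g))   # x = 0, y = (0, 0): False
```

At \(x=0\), \(y=(0,0)\) all three constraints are active, but three gradients in \({\mathbb{R}}^{2}\) cannot be linearly independent, so \((A_{2})\) fails there.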

Remark 2.3

Assumption \((A_{2})\) plays a key role in the method of replacing the lower level problem with its KKT optimality conditions [9]. Under \((A_{2})\), we can replace the lower level problem with its KKT optimality conditions and thereby transform the bilevel program into a single-level programming problem.

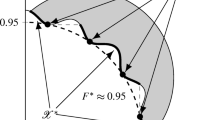

One way to transform the lower level problem \((P_{x})\) into a usual one-level optimization problem is the so-called scalarization technique, which consists of solving the following further parameterized problem,

where the new parameter vector \(\lambda \) is a nonnegative point of the unit simplex (the unit sphere of the \(\ell _{1}\)-norm), i.e., \(\lambda \) belongs to \(\Omega =\left\{\lambda |\lambda \in {\mathbb{R}}_{+}^{q},\sum \limits _{i=1}^{q}\lambda _{i}=1\right\}\). Since it is difficult to choose the best point \(y(x)\) on the Pareto front of the lower level problem for a given upper level variable \(x\), our approach in this paper treats \(\lambda \) as an additional upper level variable and the set \(\Omega \) as a new constraint set for the upper level problem. This approach is also used in [10] for the semivectorial bilevel optimization problem.
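To see what the weighted-sum scalarization does, consider a hypothetical lower level of our own choosing with a scalar \(y\) and no constraints: \(f(x,y)=((y-x)^{2},(y-1)^{2})\). For fixed \(x\), its efficient set is the segment between \(1\) and \(x\), and for this toy problem every efficient point is the minimizer of \(\lambda ^{T}f(x,\cdot )\) for some \(\lambda \in \Omega \). The sketch checks \(\lambda \in \Omega \) and uses the closed-form minimizer.

```python
from typing import Sequence


def in_omega(lam: Sequence[float], tol: float = 1e-12) -> bool:
    """lam >= 0 componentwise and sum(lam) = 1, i.e. lam lies in Omega."""
    return all(v >= -tol for v in lam) and abs(sum(lam) - 1.0) <= tol


def weighted_sum_solution(x: float, lam: Sequence[float]) -> float:
    """Minimizer over y of lam[0]*(y - x)**2 + lam[1]*(y - 1)**2 (toy problem)."""
    if not in_omega(lam):
        raise ValueError("lam must lie in Omega")
    # Setting the derivative 2*lam[0]*(y - x) + 2*lam[1]*(y - 1) to zero and
    # using lam[0] + lam[1] = 1 gives y = lam[0]*x + lam[1].
    return lam[0] * x + lam[1]


# Sweeping lam over Omega traces the efficient set [1, x] of the toy lower level.
x = 3.0
print([weighted_sum_solution(x, (t, 1.0 - t)) for t in (0.0, 0.25, 0.5, 0.75, 1.0)])
# [1.0, 1.5, 2.0, 2.5, 3.0]
```

This is why making \(\lambda \) an upper level variable lets the leader reach every efficient response of such a convex lower level.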

Hence, problem (2.1) can be replaced by the following bilevel multiobjective programming problem,

Let \(\gamma (x,\lambda )\) denote the solution set of problem (2.2). The link between problems (2.1) and (2.3) is formalized in the following proposition.

Proposition 2.4

Let \((\bar{x},\bar{y})\) be a Pareto optimal solution to problem (2.1). Then, for every \(\bar{\lambda }\in \Omega \) with \(\bar{y}\in \gamma (\bar{x},\bar{\lambda })\), the point \((\bar{x},\bar{y},\bar{\lambda })\) is a Pareto optimal solution to problem (2.3). Conversely, if \((\bar{x},\bar{y},\bar{\lambda })\) is a Pareto optimal solution to problem (2.3), then \((\bar{x},\bar{y})\) is a Pareto optimal solution to problem (2.1).

Remark 2.5

Proposition 2.4 is adapted from Proposition 3.1 in [10], and its proof is similar to that of Proposition 3.1; for brevity, we omit it.

Following assumption \((A_{2})\), we can replace the lower level problem in problem (2.3) with its KKT optimality conditions and get the following multiobjective programming problem,

where \(u\in {{\mathbb{R}}^{l}}\) is the vector of Lagrange multipliers.
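Assuming that problem (2.4) uses the standard KKT system of the weighted-sum lower level (stationarity \(\nabla _{y}(\lambda ^{T}f)(x,y)+\sum _{i}u_{i}\nabla _{y}g_{i}(x,y)=0\), primal feasibility \(g(x,y)\leqslant 0\), dual feasibility \(u\geqslant 0\), and complementarity \(u_{i}g_{i}(x,y)=0\)), the following sketch measures how far a candidate \((x,y,\lambda ,u)\) is from satisfying it. The toy lower level and the helper name `kkt_residual` are hypothetical.

```python
from typing import Sequence


def kkt_residual(grad_f: Sequence[Sequence[float]], lam: Sequence[float],
                 grad_g: Sequence[Sequence[float]], g_vals: Sequence[float],
                 u: Sequence[float]) -> float:
    """Largest violation of the standard KKT system of min_y lam^T f s.t. g <= 0.

    grad_f[k] = grad_y f_k(x, y) (q rows); grad_g[i] = grad_y g_i(x, y) (l rows).
    """
    m = len(grad_f[0])
    # Stationarity: sum_k lam_k grad_y f_k + sum_i u_i grad_y g_i = 0.
    stat = [sum(lk * gf[j] for lk, gf in zip(lam, grad_f))
            + sum(ui * gg[j] for ui, gg in zip(u, grad_g)) for j in range(m)]
    viol = [abs(s) for s in stat]
    viol += [max(gi, 0.0) for gi in g_vals]              # primal feasibility g <= 0
    viol += [max(-ui, 0.0) for ui in u]                  # dual feasibility u >= 0
    viol += [abs(ui * gi) for ui, gi in zip(u, g_vals)]  # complementarity u_i g_i = 0
    return max(viol)


# Toy lower level: min_y lam1*(y - x)^2 + lam2*(y - 1)^2 s.t. -y <= 0.
# At x = -3, lam = (0.5, 0.5) the solution is y = 0 with multiplier u = 2.
x, y, lam = -3.0, 0.0, (0.5, 0.5)
grad_f = [[2.0 * (y - x)], [2.0 * (y - 1.0)]]
print(kkt_residual(grad_f, lam, [[-1.0]], [-y], [2.0]))  # 0.0
print(kkt_residual(grad_f, lam, [[-1.0]], [-y], [1.0]))  # 1.0
```

Because the lower level is convex under \((A_{2})\), a zero residual certifies that \(y\) is a global solution for the given \((x,\lambda )\), which is the fact used in the proof of Proposition 2.6.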

Proposition 2.6

Let \((\bar{x},\bar{y},\bar{\lambda })\) be a Pareto optimal solution of problem (2.3) on a neighborhood \(U(\bar{x},\bar{y},\bar{\lambda })\). Then there exists \(\bar{u}\in {{\mathbb{R}}^{l}}\) such that \((\bar{x},\bar{y},\bar{\lambda },\bar{u})\) is a local Pareto optimal solution of problem (2.4). Conversely, if \((\bar{x},\bar{y},\bar{\lambda },\bar{u})\) is a local Pareto optimal solution to problem (2.4), then \((\bar{x},\bar{y},\bar{\lambda })\) is a local Pareto optimal solution of problem (2.3).

Proof

Let \((\bar{x},\bar{y},\bar{\lambda })\) be a Pareto optimal solution to problem (2.3) restricted to \(U(\bar{x},\bar{y},\bar{\lambda })\). Since \((\bar{x},\bar{y},\bar{\lambda })\) is feasible for problem (2.3), for fixed \((\bar{x},\bar{\lambda })\) the point \(\bar{y}\) is a global optimal solution to the lower level problem of problem (2.3), and by assumptions \((A_{1})\) and \((A_{2})\) the KKT optimality conditions hold at \(\bar{y}\) with some multiplier \(\bar{u}\). Hence, \((\bar{x},\bar{y},\bar{\lambda },\bar{u})\) is a feasible solution to problem (2.4). Suppose that \((\bar{x},\bar{y},\bar{\lambda },\bar{u})\) is not a local Pareto optimal solution to problem (2.4). Then there exists a feasible point \((x,y,\lambda ,u)\) of problem (2.4) in \(U(\bar{x},\bar{y},\bar{\lambda })\times {\mathbb {R}^{l}}\) such that

\[F(x,y)\leqslant F(\bar{x},\bar{y}),\quad F(x,y)\ne F(\bar{x},\bar{y}).\tag{2.5}\]

Since the lower level problem of problem (2.3) is convex under \((A_{2})\), the KKT conditions are also sufficient for optimality; hence, for fixed \((x,\lambda )\), \(y\) is a global solution to the lower level problem of problem (2.3), and \((x,y,\lambda )\) is a feasible solution to problem (2.3). Then (2.5) contradicts the fact that \((\bar{x},\bar{y},\bar{\lambda })\) is a Pareto optimal solution to problem (2.3) on \(U(\bar{x},\bar{y},\bar{\lambda })\).

Conversely, suppose that \((\bar{x},\bar{y},\bar{\lambda },\bar{u})\) is a local Pareto optimal solution to problem (2.4) on \(U(\bar{x},\bar{y},\bar{\lambda })\times {\mathbb {R}^{l}}\). Then there is no other feasible solution \((x,y,\lambda ,u)\) in \(U(\bar{x},\bar{y},\bar{\lambda })\times {\mathbb {R}^{l}}\) such that

\[F(x,y)\leqslant F(\bar{x},\bar{y}),\quad F(x,y)\ne F(\bar{x},\bar{y}).\tag{2.6}\]

We now show by contradiction that \((\bar{x},\bar{y},\bar{\lambda })\) is a Pareto optimal solution to problem (2.3) on \(U(\bar{x},\bar{y},\bar{\lambda })\). Since the KKT conditions are sufficient for the convex lower level problem, \(\bar{y}\) is a global optimal solution to the lower level problem of problem (2.3); consequently, \((\bar{x},\bar{y},\bar{\lambda })\) is a feasible solution to problem (2.3). Suppose that \((\bar{x},\bar{y},\bar{\lambda })\) is not a local Pareto optimal solution to problem (2.3) on \(U(\bar{x},\bar{y},\bar{\lambda })\). Then there exists a feasible solution \((x,y,\lambda )\) to problem (2.3) in \(U(\bar{x},\bar{y},\bar{\lambda })\) such that (2.6) holds. By assumption \((A_{2})\), the KKT optimality conditions hold at \((x,y,\lambda )\); that is, there exists \(u\) such that \((x,y,\lambda ,u)\) is a feasible solution to problem (2.4). This contradicts the local Pareto optimality of \((\bar{x},\bar{y},\bar{\lambda },\bar{u})\). \(\square \)

Proposition 2.6 shows that some Pareto optimal solutions of problem (2.1) can be obtained by solving problem (2.4). In what follows, we develop a smoothing approach for problem (2.4).

To facilitate some of the following proofs, we introduce the functions \({H_{1}}:{{\mathbb{R}}^{n+m+q+l}}\rightarrow {{\mathbb{R}}^{m}},\ {H_{2}}:{{\mathbb{R}}^{n+m+q+l}}\rightarrow {{\mathbb{R}}^{l}}, \ {H_{3}}:{{\mathbb{R}}^{n+m+q+l}}\rightarrow {{\mathbb{R}}^{l}},\ {H_{4}}:{{\mathbb{R}}^{n+m+q+l}}\rightarrow {\mathbb{R}},\ {H_{5}}:{{\mathbb{R}}^{n+m+q+l}}\rightarrow {\mathbb{R}}^{q}\), which are defined as

respectively. Then we can rewrite problem (2.4) as

Let \(w^{*}\) be a feasible point of problem (2.4). We define the following index sets:

We make the following assumption.

- \((A_{3})\): For a feasible point \({w^{*}}\) of problem (2.7), the gradient vectors \(\nabla {H_{1i}}\), \(i=1,\cdots ,m\); \(\nabla {H_{2i}}\), \(i\in \beta \cup \gamma \); \(\nabla {H_{3i}}\), \(i\in \alpha \cup \beta \); \(\nabla {H_{4}}\); and \(\nabla {H_{5i}}\), \(i\in \zeta \) are linearly independent.
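The index sets are not displayed above. Reading them off from \((A_{3})\) and from the statement after (3.4) that \(i\in \beta \) means \({H_{2i}}(\bar{w})={H_{3i}}(\bar{w})=0\), the sketch below assumes \(\alpha =\{i\,|\,H_{3i}=0\ne H_{2i}\}\), \(\beta =\{i\,|\,H_{2i}=H_{3i}=0\}\), \(\gamma =\{i\,|\,H_{2i}=0\ne H_{3i}\}\), and \(\zeta =\{i\,|\,H_{5i}=0\}\), all evaluated at \(w^{*}\). These definitions and the function name are our assumptions, not a reproduction of the original display.

```python
from typing import Dict, List, Sequence


def index_sets(h2: Sequence[float], h3: Sequence[float], h5: Sequence[float],
               tol: float = 1e-8) -> Dict[str, List[int]]:
    """Classify indices at a feasible point w* (assumed definitions, see lead-in)."""
    def zero(v: float) -> bool:
        return abs(v) <= tol

    pairs = list(enumerate(zip(h2, h3)))
    return {
        "alpha": [i for i, (a, b) in pairs if not zero(a) and zero(b)],  # only H3i = 0
        "beta": [i for i, (a, b) in pairs if zero(a) and zero(b)],       # H2i = H3i = 0
        "gamma": [i for i, (a, b) in pairs if zero(a) and not zero(b)],  # only H2i = 0
        "zeta": [k for k, v in enumerate(h5) if zero(v)],                # H5k = 0
    }


print(index_sets(h2=[-1.0, 0.0, 0.0], h3=[0.0, 0.0, 2.0], h5=[0.0, 1.0]))
# {'alpha': [0], 'beta': [1], 'gamma': [2], 'zeta': [0]}
```

Under this reading, \((A_{3})\) includes a gradient exactly for the constraints that hold with equality at \(w^{*}\), much like MPEC-LICQ.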

3 Smoothing Approach for Problem (2.7)

We consider a smoothing continuation method for solving problem (2.7). This method uses the perturbed Fischer-Burmeister function \(\phi :{{\mathbb{R}}^{2}}\times {{\mathbb{R}}_{+}} \rightarrow {\mathbb{R}}\) defined by

The function \(\phi \) was first introduced by Kanzow [15] for linear complementarity problems.

When \(\varepsilon =0\), the function \(\phi \) reduces to the Fischer–Burmeister function and satisfies

When \(\varepsilon >0\), this function satisfies

With the perturbed Fischer–Burmeister function \(\phi \), we define the function \({\phi ^{\varepsilon} }:{{\mathbb{R}}^{n+m+q+l}}\rightarrow {{\mathbb{R}}^{l}}\) by

For every \(\varepsilon >0\), we can define a smooth multiobjective optimization problem:

Note that when \(\varepsilon =0\), problem (3.4) is equivalent to problem (2.7). However, for \(\varepsilon =0\), the function \(\phi ^{0}\) is in general not differentiable: if \({H_{2i}}(\bar{w})={H_{3i}}(\bar{w})=0\) for some \(\bar{w}\) and \(i\), i.e., \(i\in \beta \), then \(\phi _{i}^{0}\) is not differentiable at \(\bar{w}\). By the result in [14], the Clarke generalized gradient of \(\phi _{i}^{0}\)

is contained in the set

where \(B=\big\{(\xi _{i},\eta _{i})\in {{\mathbb{R}}^{2}}|(1-\xi _{i})^{2}+(1-\eta _{i})^{2}\leqslant 1\big\}\).
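The sketch below illustrates how the smoothing behaves. Since the display defining \(\phi \) is not reproduced here, it assumes the common smoothed Fischer–Burmeister form \(\phi (a,b,\varepsilon )=a+b-\sqrt{a^{2}+b^{2}+2\varepsilon }\) (some authors write \(2\varepsilon ^{2}\) instead of \(2\varepsilon \)). With this form, \(\phi (a,b,0)=0\) exactly when \(a\geqslant 0\), \(b\geqslant 0\), \(ab=0\), while for \(\varepsilon >0\), \(\phi (a,b,\varepsilon )=0\) exactly when \(a>0\), \(b>0\), \(ab=\varepsilon \). For \(\varepsilon >0\), the partial derivatives are \(\xi =1-a/r\) and \(\eta =1-b/r\) with \(r=\sqrt{a^{2}+b^{2}+2\varepsilon }\), so \((1-\xi )^{2}+(1-\eta )^{2}=(a^{2}+b^{2})/r^{2}<1\); every smoothed gradient (with respect to the two arguments) therefore lies in \(B\), and so do its limits as \(\varepsilon \rightarrow 0\). The function names are ours.

```python
import math
from typing import Tuple


def phi(a: float, b: float, eps: float) -> float:
    """Smoothed Fischer-Burmeister function (assumed form, see lead-in)."""
    return a + b - math.sqrt(a * a + b * b + 2.0 * eps)


def grad_phi(a: float, b: float, eps: float) -> Tuple[float, float]:
    """(d phi/da, d phi/db); well defined whenever eps > 0."""
    r = math.sqrt(a * a + b * b + 2.0 * eps)
    return 1.0 - a / r, 1.0 - b / r


def in_B(xi: float, eta: float, tol: float = 1e-12) -> bool:
    """Membership in B = {(xi, eta) : (1 - xi)^2 + (1 - eta)^2 <= 1}."""
    return (1.0 - xi) ** 2 + (1.0 - eta) ** 2 <= 1.0 + tol


# eps = 0: zeros are exactly the complementary pairs a, b >= 0, ab = 0.
print(phi(2.0, 0.0, 0.0), phi(0.0, 3.0, 0.0), phi(-1.0, 0.0, 0.0))  # 0.0 0.0 -2.0
# eps > 0: zeros satisfy a, b > 0 and ab = eps (here 2.0 * 0.25 = 0.5).
print(phi(2.0, 0.25, 0.5))  # 0.0

# Approaching the degenerate point a = b = 0 along two different paths gives
# different limiting gradients, (0.5, 0.5) and about (0.293, 0.293), both in B.
for eps in (1e-2, 1e-4, 1e-6):
    t = math.sqrt(eps)
    print(grad_phi(t, t, eps), grad_phi(t, t, eps ** 2))
```

The two different limits show concretely why \(\phi _{i}^{0}\) is not differentiable when \(i\in \beta \), and why a set such as \(B\) is needed to capture all possible limiting gradients.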

Lemma 3.1

For each \(\varepsilon >0\), let \({w^{\varepsilon }}\) be a feasible point of problem (3.4). Suppose that \({w^{\varepsilon} }\rightarrow \bar{w}\) as \(\varepsilon \rightarrow 0\). Then \(\bar{w}\) is a feasible point of problem (2.4). Moreover, if the assumption \(({A_{3}})\) holds at \(\bar{w}\), then the gradients

are linearly independent for all \(\varepsilon >0\) small enough.

Proof

By (3.1), (3.2), and the continuity of the function \(\phi \), it is obvious that \(\bar{w}\) is feasible to problem (2.4).

As

and noting that

we have, as \(\varepsilon \rightarrow 0\),

where \(dist[s,S]=\min \{\Vert s-s'\Vert \ |s'\in S\}\) for \(s\in {{\mathbb{R}}^{n}}\) and \(S\subset {{\mathbb{R}}^{n}}\). Note that the set \(\partial _{c}\phi _{i}^{0}(\bar{w})\) contains the Clarke generalized gradient \(\partial \phi _{i}^{0}(\bar{w})\) of \(\phi _{i}^{0}\) at \(\bar{w}\). Moreover, by continuity, it is obvious that

Then it is not difficult to deduce that the desired result holds under the assumption \(({A_{3}})\).□

4 Optimality Conditions

In this section, we consider optimality conditions for problem (2.7) and relate these conditions to those of the perturbed problem (3.4). Our main aim in deriving optimality conditions for problem (2.7) is the construction of reliable stopping criteria for the algorithm to be described in the next section.

Definition 4.1

[23] A feasible point \({w^{*}}\) of problem (2.7) is called a strong C-stationary point if there exist a unit vector \(\lambda '\geqslant 0\) and multipliers \((u',v',\mu ',\nu ')\in {{\mathbb{R}}^{m+l+1+q}}\) such that the following condition holds:

Remark 4.2

Condition (4.1) is the Clarke KKT condition for the nonsmooth problem (2.7). Since problem (2.7) is a rewriting of problem (2.4), Propositions 2.4 and 2.6 imply that, under a suitable constraint qualification such as \((A_{3})\), condition (4.1) is necessarily satisfied at a Pareto optimal solution \(({x^{*}},{y^{*}})\) to problem (2.1).

To facilitate the following proof, we add a new variable \(z\) to problem (2.7) and rewrite problem (2.7) as follows:

Note that in (4.2) the new variable \(z\) is always equal to \(-{H_{2}}(w)\) at feasible points. From problem (4.2), it is easy to see that \(\partial \phi _{i}^{0}(w)=\partial \min ({z_{i}},{H_{3i}}(w))\). Then we can reformulate (4.1) as

We are now in a position to give the main result of this paper.

Theorem 4.3

Let \({w^{\varepsilon} }\) be a Pareto optimal solution to problem (3.4), suppose that \({w^{\varepsilon} }\rightarrow \bar{w}\) as \(\varepsilon \rightarrow 0\), and suppose that assumption \(({A_{3}})\) holds at \(\bar{w}\). Then, for all sufficiently small \(\varepsilon >0\), \({w^{\varepsilon} }\) is a KKT point of problem (3.4) with multipliers \(({u^{\varepsilon} },{v^{\varepsilon} },{\mu ^{\varepsilon} },{\nu ^{\varepsilon} })\). Moreover, if \(({u^{\varepsilon} },{v^{\varepsilon} },{\mu ^{\varepsilon }},{\nu ^{\varepsilon} })\rightarrow (u',v',\mu ', \nu ')\), then \(\bar{w}\) together with \((u',v',\mu ',\nu ')\) satisfies the KKT conditions for problem (2.4), i.e., \((\bar{x},\bar{y})\) is a strong C-stationary point of the bilevel multiobjective programming problem (2.1).

Proof

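Since assumption \(({A_{3}})\) holds at \(\bar{w}\), Lemma 3.1 implies that LICQ holds at \({w^{\varepsilon} }\) for problem (3.4) for all sufficiently small \(\varepsilon >0\). The first part of Theorem 4.3 then follows from the standard KKT necessary conditions for smooth multiobjective problems under LICQ.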

Now, we prove the second part. To finish the proof, we only need to check that

where \(\bar{z}=-{H_{2}}(\bar{w})\).

By the first part of Theorem 4.3, we have

Taking into account the continuity assumptions, it is easy to see that if

then (4.4) follows from (4.6) by simple continuity arguments. We now check (4.6). Let \({e_{j}}\) denote the \(j\)th column of the \((n+m+q+2l)\)-dimensional identity matrix. Noting that \({H_{2i}}(\bar{w})=-\bar{z}_{i}\), we have

On the other hand, we also have

Passing to the limit in (4.8) and comparing with (4.7), we obtain (4.6), and the proof is complete.

5 Algorithm and Numerical Results

Algorithm S

- Step 0: Let \(\{{\varepsilon ^{k}}\}\) be any sequence of positive numbers with \(\lim \nolimits _{k\rightarrow \infty }{\varepsilon ^{k}}=0\). Choose \({w^{0}}:=({x^{0}},{y^{0}},\lambda ^{0},{u^{0}})\in {{\mathbb{R}}^{n+m+q+l}}\), and set \(k:=1\).

- Step 1: Find a Pareto optimal solution \({w^{k}}\) to problem \(({P_{\varepsilon ^{k}}})\), i.e., problem (3.4) with \(\varepsilon =\varepsilon ^{k}\).

- Step 2: Set \(k:=k+1\), and go to Step 1.

Theorem 5.1

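Let \(\{{w^{k}}\}\) be the sequence generated by Algorithm S, and let \({w^{*}}=({x^{*}},{y^{*}},{\lambda ^{*}},{u^{*}})\) be a limit point of \(\{{w^{k}}\}\) at which assumption \((A_{3})\) holds. If the corresponding multipliers converge along the associated subsequence, then \(({x^{*}},{y^{*}})\) is a strong C-stationary point of problem (2.1).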

Proof

Theorem 5.1 follows directly from Theorem 4.3.□

Algorithm S does not yet include a stopping criterion. The following algorithm adds one, under which an approximate stationary point of problem (2.4) can be found.

Algorithm AS

- Step 0: Let \(\{{\varepsilon ^{k}}\}\) be any sequence of positive numbers with \(\lim \nolimits _{k\rightarrow \infty }{\varepsilon ^{k}}=0\). Choose \({w^{0}}:=({x^{0}},{y^{0}},{\lambda ^{0}},{u^{0}})\in {{\mathbb{R}}^{n+m+q+l}}\) and a stopping tolerance \(\varepsilon >0\), and set \(k:=1\).

- Step 1: Find a Pareto optimal solution \({w^{k}}\) to problem \((P_{\varepsilon ^{k}})\), i.e., problem (3.4) with \(\varepsilon =\varepsilon ^{k}\).

- Step 2: If \(\Vert {w^{k}}-{w^{k-1}}\Vert \leqslant \varepsilon \), STOP.

- Step 3: Set \(k:=k+1\), and go to Step 1.
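Algorithm AS can be sketched as a short outer loop. In the sketch below, `solve_smoothed` is a hypothetical callback that stands for Step 1 (computing a Pareto optimal solution of problem (3.4) for the current \(\varepsilon \), for example by the \(\varepsilon \)-constraint method); the tenfold reduction of \(\varepsilon \) mirrors the update used in Example 5.2, and `math.dist` computes the Euclidean norm of Step 2. The toy callback in the usage lines does not solve any optimization problem; it only mimics iterates that settle as \(\varepsilon \rightarrow 0\).

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def algorithm_as(solve_smoothed: Callable[[float, Vector], Vector],
                 w0: Sequence[float], eps0: float, tol: float,
                 shrink: float = 0.1, max_iter: int = 50) -> Tuple[Vector, int]:
    """Outer loop of Algorithm AS; returns the last iterate and the iteration count.

    solve_smoothed(eps, w_prev) is a hypothetical callback standing for Step 1:
    it should return a Pareto optimal solution of problem (3.4) for this eps.
    """
    w_prev = list(w0)
    eps = eps0
    for k in range(1, max_iter + 1):
        w = list(solve_smoothed(eps, w_prev))   # Step 1
        if math.dist(w, w_prev) <= tol:         # Step 2: ||w^k - w^{k-1}||_2 <= tol
            return w, k
        w_prev, eps = w, shrink * eps           # Step 3: shrink eps, k := k + 1
    return w_prev, max_iter                     # budget exhausted without stopping


# Toy stand-in for Step 1 (solves nothing): iterates (1 + eps, eps) settle at (1, 0).
def toy_solver(eps: float, w_prev: Vector) -> Vector:
    return [1.0 + eps, eps]


w, k = algorithm_as(toy_solver, [0.0, 0.0], eps0=1e-3, tol=1e-3)
print(k, w)
```

With these toy iterates the loop stops at \(k=3\): the step from \(k=1\) to \(k=2\) still has length about \(1.3\times 10^{-3}\), and the next one drops below the tolerance. The `max_iter` cap is our addition, since Algorithm AS as stated has no iteration limit.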

In Step 1, we solve problem (3.4) with the \(\varepsilon \)-constraint method [11]. We do not pursue other approaches for computing the Pareto optimal front of problem (3.4), because our primary aim in this paper is to illustrate the usefulness and viability of a smoothing approach for bilevel multiobjective programs; computing the whole Pareto optimal front of problem (3.4) is therefore not essential here.
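For two objectives, the \(\varepsilon \)-constraint method keeps one objective and turns the other into a constraint, minimizing \(f_{1}\) subject to \(f_{2}\leqslant b\); different bounds \(b\) give different Pareto optimal points. The sketch below applies this idea by brute force over a finite candidate grid, purely for illustration; a practical implementation would hand each constrained subproblem of (3.4) to a smooth NLP solver, and the improved variant of [11] is not reproduced. We write the bound as \(b\) to avoid a clash with the smoothing parameter \(\varepsilon \); the toy objectives are ours.

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def epsilon_constraint(candidates: Iterable[T], f1: Callable[[T], float],
                       f2: Callable[[T], float], bound: float) -> Optional[T]:
    """Minimize f1 subject to f2 <= bound over a finite candidate set."""
    feasible = [c for c in candidates if f2(c) <= bound]
    return min(feasible, key=f1) if feasible else None  # None if infeasible


def f1(y: float) -> float:
    return y ** 2


def f2(y: float) -> float:
    return (y - 1.0) ** 2


grid = [i / 100 for i in range(101)]  # candidate y values in [0, 1]
for b in (0.01, 0.25, 1.0):
    y = epsilon_constraint(grid, f1, f2, b)
    print(b, y, (f1(y), f2(y)))
```

Tightening the bound \(b\) on \(f_{2}\) pushes the solution toward \(y=1\), so sweeping \(b\) walks along the Pareto front of the toy problem.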

The stopping criterion in Step 2 is standard and is part of most practical stopping criteria in commercial codes for smooth optimization problems. With this criterion, we can find an approximate stationary point of problem (2.4).

To illustrate Algorithm AS, we consider the following bilevel programming problem,

Example 5.2

[16]

Following problem (3.4), we can get the following smooth multiobjective programming problem,

Loop 1

- Step 0: Choose \({w^{0}}=({x^{0}},{y^{0}},{\lambda ^{0}},{u^{0}})=(0,0,0,0,0,0)\), \({\varepsilon ^{0}}=0.001\), a stopping tolerance \(\varepsilon =0.001\), and set \(k:=1\).

- Step 1: Solve problem (5.1) using the \(\varepsilon \)-constraint method and obtain a Pareto optimal solution \({w^{1}}=({x^{1}},{y^{1}},{\lambda ^{1}},{u^{1}})=(0,0,0.053,0.947,0,0.106)\).

- Step 2: \(\Vert {w^{0}}-{w^{1}}\Vert _{2}=0.9544>\varepsilon \).

- Step 3: Reduce the smoothing parameter by a factor of 10 (from 0.001 to 0.0001), set \(k:=k+1\), and go to Step 1.

Loop 2

- Step 1: Solve problem (5.1) using the \(\varepsilon \)-constraint method and obtain a Pareto optimal solution \({w^{2}}=({x^{2}},{y^{2}},{\lambda ^{2}},{u^{2}})=(0,0,0.053,0.947,0,0.106)\).

- Step 2: \(\Vert {w^{2}}-{w^{1}}\Vert _{2}=0<\varepsilon \); STOP.

Thus a Pareto optimal solution to Example 5.2 is \(({x^{*}},{y^{*}})=(0,0)\), and the corresponding upper level objective value is \(F({x^{*}},{y^{*}})=(0,250.0)\).
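The Step 2 norms reported in Loops 1 and 2 can be recomputed from the six-component iterates listed above; this is only an arithmetic check of the reported values.

```python
import math

w0 = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
w1 = [0.0, 0.0, 0.053, 0.947, 0.0, 0.106]
w2 = [0.0, 0.0, 0.053, 0.947, 0.0, 0.106]

print(round(math.dist(w0, w1), 4))  # 0.9544 > 0.001: continue
print(math.dist(w1, w2))            # 0.0 <= 0.001: stop
```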

Reference [16] reports the Pareto optimal solution \(({x^{*}},{y^{*}})=(4.9953,5.0111)\) for Example 5.2, with upper level objective value \(F({x^{*}},{y^{*}})=(41.5884,62.2228)\). Neither objective vector dominates the other (\(0<41.5884\) but \(250.0>62.2228\)), so the solution obtained here is consistent with being a Pareto optimal solution to Example 5.2, different from the one in [16].
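The comparison with [16] rests on the dominance relation of Definition 2.1. The check below (function name ours) confirms that neither reported objective vector dominates the other; by itself this shows only that the two points are mutually nondominated, not that either one is Pareto optimal.

```python
def dominates(fa, fb):
    """True if fa <= fb componentwise with at least one strict inequality."""
    return (all(a <= b for a, b in zip(fa, fb))
            and any(a < b for a, b in zip(fa, fb)))


ours = (0.0, 250.0)          # F(x*, y*) obtained by Algorithm AS
ref16 = (41.5884, 62.2228)   # F(x*, y*) reported in [16]
print(dominates(ours, ref16), dominates(ref16, ours))  # False False
```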

To further verify the viability of the proposed algorithm, we consider the following three examples.

Example 5.3

[17]

Example 5.4

[5]

Example 5.5

[21]

For the remaining three examples, the Pareto optimal solutions obtained are presented in Table 1, and comparisons with the results in the cited references are presented in Table 2. The comparison shows that the solutions in Table 1 are Pareto optimal solutions to the corresponding examples. From this point of view, the smoothing approach proposed in this paper for bilevel multiobjective programming problems is useful and viable.

6 Conclusion

In this paper, we introduce a new method for solving a class of bilevel multiobjective programs. The method approximates a nonsmooth one-level multiobjective reformulation of the bilevel multiobjective programming problem by a sequence of smooth multiobjective programming problems. We show that every sequence of solutions so generated converges to solutions of the bilevel multiobjective programming problem. The reported numerical results illustrate that the smoothing method introduced in this paper can be numerically efficient.

Besides its theoretical properties, the new algorithm proposed in this paper has one distinct advantage: it only requires practicable algorithms for solving smooth multiobjective optimization problems, and no other complex operations are necessary.

References

Abo-Sinna, M.: A bilevel nonlinear multiobjective decision making under fuzziness. J. Oper. Res. Soc. India 38(5), 484–495 (2001)

Bard, J.: Practical Bilevel Optimization: Algorithms and Applications. Nonconvex Optimization and Its Applications. Kluwer, Dordrecht (1998)

Bonnel, H., Morgan, J.: Semivectorial bilevel optimization problem: penalty approach. J. Optim. Theory Appl. 131(3), 365–382 (2006)

Colson, B., Marcotte, P., Savard, G.: An overview of bilevel optimization. Ann. Oper. Res. 153, 235–256 (2007)

Deb, K., Sinha, A.: Constructing test problems for bilevel evolutionary multi-objective optimization. In: IEEE Congress on Evolutionary Computation, pp. 1153–1160 (2009)

Deb, K., Sinha, A.: Solving bilevel multi-objective optimization problems using evolutionary algorithms. Lect. Notes Comput. Sci.: Evol. Multi-criterion Optim. 5467, 110–124 (2009)

Dempe, S.: Foundations of Bilevel Programming. Nonconvex Optimization and Its Applications. Kluwer, Dordrecht (2002)

Dempe, S.: Annotated bibliography on bilevel programming and mathematical programs with equilibrium constraints. Optimization 52(3), 333–359 (2003)

Dempe, S., Dutta, J.: Is bilevel programming a special case of a mathematical program with complementarity constraints? Math. Program. Ser. A 131, 37–48 (2012)

Dempe, S., Gadhi, N., Zemkoho, A.B.: New optimality conditions for the semivectorial bilevel optimization problem. J. Optim. Theory Appl. 157, 54–74 (2013)

Ehrgott, M., Ruzika, S.: Improved \(\varepsilon \)-constraint method for multiobjective programming. J. Optim. Theory Appl. 138, 375–396 (2008)

Eichfelder, G.: Multiobjective bilevel optimization. Math. Program. 123, 419–449 (2010)

Facchinei, F., Jiang, H., Qi, L.: A smoothing method for mathematical programs with equilibrium constraints. Math. Program. 85, 107–134 (1999)

Fukushima, M., Pang, J.: Convergence of a smoothing continuation method for mathematical programs with complementarity constraints. In: Ill-Posed Variational Problems and Regularization Techniques. Lecture Notes in Economics and Mathematical Systems, vol. 477, pp. 99–110. Springer, Berlin/Heidelberg (1999)

Kanzow, C.: Some noninterior continuation methods for linear complementarity problems. SIAM J. Matrix Anal. Appl. 17, 851–868 (1996)

Li, L., Teng, X.: Global optimization methods of a class of multi-objective decision making problem. J. Harbin Univ. Sci. Technol. 6(6), 25–29 (2001)

Lin, D., Chou, Y., Li, Q.: Multi-objective evolutionary algorithm for multi-objective bilevel programming problems. J. Syst. Eng. 22(2), 181–185 (2007)

Osman, M., Abo-Sinna, M., et al.: A multilevel nonlinear multiobjective decision making under fuzziness. Appl. Math. Comput. 153(1), 239–252 (2004)

Shi, X., Xia, H.: Interactive bilevel multiobjective decision making. J. Oper. Res. Soc. 48(9), 943–949 (1997)

Shi, X., Xia, H.: Model and interactive algorithm of bilevel multiobjective decision making with multiple interconnected decision makers. J. Multi-Criteria Decis. Anal. 10, 27–34 (2001)

Teng, C., Li, L., et al.: A class of genetic algorithms on bilevel multiobjective decision making problem. J. Syst. Sci. Syst. Eng. 9(3), 290–296 (2000)

Vicente, L., Calamai, P.: Bilevel and multilevel programming: a bibliography review. J. Glob. Optim. 5(3), 291–306 (1994)

Ye, J.: Necessary optimality conditions for multiobjective bilevel programs. Math. Oper. Res. 36(1), 165–184 (2011)

Zhang, G., Lu, J., Dillon, T.: Decentralized multi-objective bilevel decision making with fuzzy demands. Knowl.-Based Syst. 20(5), 495–507 (2007)

Zheng, Y., Wan, Z.: A solution method for semivectorial bilevel programming problem via penalty method. J. Appl. Math. Comput. 34, 207–219 (2011)


Additional information

This work was supported by the National Natural Science Foundation of China (Nos. 11201039, 71171150, and 61273179).


About this article

Cite this article

Lü, YB., Wan, ZP. A Smoothing Method for Solving Bilevel Multiobjective Programming Problems. J. Oper. Res. Soc. China 2, 511–525 (2014). https://doi.org/10.1007/s40305-014-0059-6

