Abstract

Background

Accurate measurement of child sedentary behavior is necessary for monitoring trends, examining health effects, and evaluating the effectiveness of interventions.

Objectives

We therefore aimed to summarize studies examining the measurement properties of self-report or proxy-report sedentary behavior questionnaires for children and adolescents under the age of 18 years. Additionally, we provided an overview of the characteristics of the evaluated questionnaires.

Methods

We performed systematic literature searches in the EMBASE, PubMed, and SPORTDiscus electronic databases. Studies had to report on at least one measurement property of a questionnaire assessing sedentary behavior. Questionnaire data were extracted using a standardized checklist, i.e. the Quality Assessment of Physical Activity Questionnaire (QAPAQ) checklist, and the methodological quality of the included studies was rated using a standardized tool, i.e. the COnsensus-based Standards for the selection of health Measurement INstruments (COSMIN) checklist.

Results

Forty-six studies on 46 questionnaires met our inclusion criteria, of which 33 examined test–retest reliability, nine examined measurement error, two examined internal consistency, 22 examined construct validity, eight examined content validity, and two examined structural validity. The majority of the included studies were of fair or poor methodological quality. Of the studies with at least a fair methodological quality, six scored positive on test–retest reliability, and two scored positive on construct validity.

Conclusion

None of the questionnaires included in this review were considered both valid and reliable. High-quality studies on the most promising questionnaires are required, with more attention to the content validity of the questionnaires.

PROSPERO registration number: CRD42016035963.

In children and adolescents, no self-report or proxy-report sedentary behavior questionnaires are available that are both valid and reliable.

To improve the methodological quality of future studies, researchers need to adopt standardized tools such as COSMIN for the evaluation of measurement properties. In addition, reviewers and journal editors should also take into consideration whether such tools have been used when evaluating research articles.

Content validity needs more attention to ensure that questionnaires measure what they intend to measure.

1 Introduction

Sedentary behavior is defined as activities performed in a seated or lying posture with very low energy expenditure (<1.5 metabolic equivalents [METs]) [1]. Sedentary behavior comprises a wide variety of activities, e.g. watching television, quiet play, passive transport, and studying. Excessive engagement in sedentary activities is seen in countries all over the world; for example, 68 % of girls and 66 % of boys from 40 different countries in North America and Europe watch television for 2 or more hours per day [2]. Moreover, screen time seems to cover only a small part of the total time spent sedentary [3].

The relationship between sedentary behavior and health risks in children and adolescents is therefore of great interest. A recent review of reviews found strong evidence for an association between sedentary behavior and obesity in children [4]. Furthermore, moderate evidence was found for an association between sedentary behavior and blood pressure, physical fitness, total cholesterol, academic achievements, social behavioral problems, and self-esteem [4]. However, a major part of the existing evidence is based on cross-sectional studies, and consequently no conclusion about causality can be drawn. Furthermore, sedentary behavior is often assessed using measurement instruments with inadequate or unknown measurement properties, and in some cases only screen time is assessed as an indicator of total sedentary time. Reviews examining the prospective relationship between sedentary behavior and different health outcomes concluded that there is no convincing evidence [5]. In addition, the evidence varied across type of measurement instrument and type of sedentary behavior [6].

Accelerometers and inclinometers are acknowledged as both valid and reliable instruments for measuring sedentary behavior in children and adolescents [7–9]; however, these measures are labor-intensive for researchers and are costly [10], and cannot provide information on the type and setting of sedentary behavior. Additionally, accelerometers cannot properly distinguish standing from sitting [11]. On the other hand, self- or proxy-report questionnaires are relatively inexpensive and easy to administer [10, 12]. Moreover, they can provide information on the type and setting of sedentary behavior. However, the use of questionnaires is not without limitations as social desirability and problems with accuracy of recall are factors of bias [12, 13].

A combination of objective measures, such as inclinometers providing information on duration and interruptions, and self-report providing information on the type and setting of sedentary behavior, would be optimal for measuring sedentary behavior. Different questionnaires for specific target populations have been developed, using different recall periods and formats, measuring different types and settings of sedentary behavior, and with different outcomes for measurement properties. This large variety of questionnaires available makes it difficult to choose the best instrument when conducting research; therefore, an overview of the measurement properties and characteristics of existing sedentary behavior questionnaires is highly warranted.

In 2011, Lubans et al. [7] reviewed studies examining the validity and reliability of questionnaires measuring sedentary behavior, indicating mixed results for both validity and reliability. As the number of studies assessing the measurement properties of sedentary behavior questionnaires in children and adolescents has more than doubled since then, an update is required. Furthermore, an overview of the characteristics (e.g. target population, setting measured, recall period) of the included questionnaires was not incorporated in the review of Lubans et al., and studies in children under the age of 3 years were excluded [7]. Therefore, the aim of this review was to summarize studies that focused on assessing the measurement properties (e.g. validity, reliability, responsiveness) of self- or proxy-report questionnaires assessing (constructs of) sedentary behavior in children and adolescents under the age of 18 years, including a methodological quality assessment. Moreover, a summary of the questionnaire characteristics is provided.

2 Methods

This review was registered at PROSPERO, the international prospective register of systematic reviews (registration number CRD42016035963), and the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) reporting guidelines were followed.

2.1 Literature Search

Systematic literature searches were carried out using the PubMed, SPORTDiscus (complete database up until December 2015), and EMBASE (complete database up until November 2015) databases. In PubMed, search terms were used in ‘AND’ combination and related to the following topics: ‘sedentary behavior’, ‘children’ (e.g. child, childhood, sedentary time, prolonged sitting), and ‘measurement properties’ (e.g. reliability, reproducibility, validity, responsiveness). The search was limited to humans, and a variety of publication types (e.g. case reports, biography) were excluded (by using the ‘NOT’ combination). Free-text, Medical Subject Heading (MeSH), and Title/Abstract (TIAB) search terms were used. In SPORTDiscus, search terms regarding ‘children’ and ‘sedentary behavior’ were used in ‘AND’ combination. Search terms were used as title and abstract words. In EMBASE, both TIAB and EMTREE ‘sedentary behavior’ and ‘measurement properties’ search terms were used in ‘AND’ combination, and the EMBASE limits for children (e.g. infant, child) were applied (‘AND’ combination). In addition, reference lists and author databases were screened for additional studies. The full search strategies can be found in electronic supplementary material Appendix S1.

2.2 Inclusion and Exclusion Criteria

Studies were included if they met the following criteria: (i) the study evaluated one or more of the measurement properties of a self- or proxy-report questionnaire, including sedentary behavior items; (ii) the aim of the questionnaire was to measure one or more of the constructs and dimensions of sedentary behavior; (iii) the average age of the study population was <18 years; and (iv) the study was published in the English language. Exclusion criteria were (i) studies examining questionnaires including physical activity and sedentary behavior items that had no separate score for sedentary behavior items; (ii) studies only reporting correlations between sedentary behavior constructs and non-sedentary constructs (e.g. correlation of self-reported or proxy-reported sedentary behavior with total activity counts measured by accelerometry); and (iii) studies evaluating the measurement properties of the questionnaire in a clinical sample.

2.3 Selection Procedures

Two reviewers (TA and LH) independently selected studies of potential relevance based on titles and abstracts. Thereafter, both reviewers checked whether the full texts met the inclusion criteria. A third reviewer (MC) was consulted when inconsistencies arose.

2.4 Data Extraction

Two independent reviewers (TA and LH) extracted data regarding the characteristics of the questionnaire under study, as well as the methods and results of the assessed measurement properties of the questionnaire, using structured forms. Disagreement between reviewers with respect to data extraction was discussed until consensus was reached.

Data regarding the questionnaire characteristics were extracted using the Quality Assessment of Physical Activity Questionnaire (QAPAQ) checklist, Part 1, which appraises the qualitative attributes of physical activity questionnaires [14]. Although originally developed for physical activity questionnaires, the QAPAQ checklist was also considered appropriate for sedentary behavior as physical activity and sedentary behavior questionnaires have similar structures and formats. Five of the nine checklist items were considered necessary to provide an informative summary of sedentary behavior questionnaires: (i) the constructs measured by the questionnaire, e.g. watching television, passive transport, quiet play, total sedentary behavior; (ii) the setting, e.g. at home, at school, leisure time; (iii) the recall period; (iv) the target population for whom the questionnaire was developed; and (v) the format, including the dimensions (i.e. duration, frequency), the number of questions, and the number and type of response categories. In addition, the following data regarding the methods and results of the assessed measurement properties were extracted: study sample, comparison measure, time interval, statistical methods, and results for each measurement property.

2.5 Methodological Quality Assessment

Methodological quality of the studies was assessed using a slightly modified version of the COnsensus-based Standards for the selection of health Measurement INstruments (COSMIN) checklist with a 4-point scale (i.e. excellent, good, fair, or poor) [15–17]. Two independent reviewers (LH, and either MC, CT, or LM) assessed the methodological quality of each study, and disagreements were discussed until consensus was reached. The final methodological quality score was determined by applying the ‘worst score counts’ method (i.e. if one item was scored ‘poor’, the final score of the methodological quality was scored as ‘poor’) for each study separately.
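The scoring rule described above (a single ‘poor’ item makes the whole study ‘poor’) amounts to taking the minimum over the item ratings. The following is a minimal sketch of that idea only; the class name `Quality`, the function `overall_quality`, and the example item ratings are illustrative assumptions and are not part of the COSMIN checklist itself.

```python
from enum import IntEnum


class Quality(IntEnum):
    """4-point scale, ordered from worst (1) to best (4)."""
    POOR = 1
    FAIR = 2
    GOOD = 3
    EXCELLENT = 4


def overall_quality(item_scores):
    """Return the lowest item rating, i.e. the final study rating."""
    if not item_scores:
        raise ValueError("at least one item score is required")
    return min(item_scores)


# Hypothetical item ratings for one study (not taken from the review).
example_items = [Quality.EXCELLENT, Quality.GOOD, Quality.POOR, Quality.GOOD]
print(overall_quality(example_items).name)
```

Because the final rating is a minimum, one under-reported item (for example, how missing items were handled) is enough to lower the rating of an otherwise well-conducted study.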

Reliability, measurement error, internal consistency, and structural validity were rated using the designated COSMIN boxes, while convergent, criterion, and construct validity were rated as construct validity. None of the studies examined criterion validity, although this term was used in some studies that actually assessed construct validity. Content validity was not rated as too little information was available on the methods used for developing the questionnaire. Instead, a description of the questionnaire was included in the results section. None of the included studies examined the responsiveness of sedentary behavior questionnaires in children or adolescents.

One slight modification was applied to the original COSMIN, i.e. the percentage agreement was added as an excellent statistical method in the measurement error box as it is considered a parameter of measurement error rather than reliability [18]. For completing the reliability box, standards previously described by Chinapaw et al. [19] were used to assess the appropriateness of the time interval in a test–retest reliability study; i.e. (i) questionnaires recalling a usual week should have a time interval between >1 day and <3 months; (ii) questionnaires recalling the previous week should have a time interval between >1 day and <2 weeks; and (iii) questionnaires recalling the previous day should have a time interval between >1 day and <1 week.
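The time-interval standards listed above can be expressed as a simple lookup. This is a sketch under stated assumptions: intervals are given in days, ‘3 months’ is approximated as 90 days, and the recall-period labels and function name are hypothetical.

```python
# Upper bounds in days for the test-retest interval, per recall period.
# Assumption: "3 months" is approximated as 90 days.
MAX_INTERVAL_DAYS = {
    "usual_week": 90,
    "previous_week": 14,
    "previous_day": 7,
}


def interval_is_appropriate(recall_period, interval_days):
    """True if the interval is >1 day and below the bound for the recall period."""
    upper = MAX_INTERVAL_DAYS[recall_period]
    return 1 < interval_days < upper


# A 7-day interval suits a previous-week questionnaire; a 21-day interval does not.
print(interval_is_appropriate("previous_week", 7))
print(interval_is_appropriate("previous_week", 21))
```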

2.6 Questionnaire Quality Assessment

2.6.1 Reliability

Reliability refers to the extent to which scores for persons who have not changed are the same, with repeated measurement under several conditions [20]. The outcomes regarding reliability of the included questionnaires were seen as acceptable in the following situations: (i) an outcome of >0.70 for intraclass correlations and kappa values [21]; or (ii) an outcome of >0.80 for Pearson and Spearman correlations as a result of not taking systematic errors into account [22]. For an adequate measurement error the smallest detectable change (SDC) should be smaller than the minimal important change (MIC) [21]. Internal consistency was considered acceptable when Cronbach’s alphas were calculated on unidimensional scales and were between 0.70 and 0.95 [21].
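The acceptability thresholds above can be summarized in a short sketch. The function names and statistic labels are illustrative assumptions, and the bounds 0.70 and 0.95 for Cronbach’s alpha are treated as inclusive here, which the text does not specify.

```python
def reliability_acceptable(statistic, value):
    """Apply the threshold that matches the type of reliability statistic."""
    if statistic in ("icc", "kappa"):
        return value > 0.70
    if statistic in ("pearson", "spearman"):
        # Stricter threshold: these coefficients ignore systematic error.
        return value > 0.80
    raise ValueError(f"unknown statistic: {statistic}")


def internal_consistency_acceptable(alpha, unidimensional):
    """Cronbach's alpha counts only for unidimensional scales.

    Assumption: the range 0.70 to 0.95 is inclusive at both ends.
    """
    return unidimensional and 0.70 <= alpha <= 0.95


print(reliability_acceptable("icc", 0.75))
print(reliability_acceptable("pearson", 0.75))
print(internal_consistency_acceptable(0.78, unidimensional=True))
```

The same coefficient of 0.75 is acceptable as an intraclass correlation but not as a Pearson correlation, which is the point of using two thresholds.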

The majority of studies provided separate correlations for the different constructs of sedentary behavior, as presented in the questionnaire, e.g. providing separate correlations for watching television, passive transport, and reading. Therefore, to obtain a final reliability rating, an overall evidence rating was applied in the present review, incorporating all available correlations for each questionnaire per study. A questionnaire received a positive evidence rating (+) when there were ≥80 % acceptable correlations, a mixed evidence rating (+/−) when the acceptable correlations were ≥50 and <80 %, and a negative rating (−) when there were <50 % acceptable correlations. No evidence rating for measurement error could be conducted as information on the MIC is currently lacking for all included questionnaires, which is needed for interpretation of the findings. Therefore, only a description of results is given.
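The overall evidence rating described above can be sketched as follows. The function name and the input format (one boolean per reported correlation) are assumptions made for illustration; integer arithmetic is used so that the 80 % and 50 % boundaries are compared exactly.

```python
def evidence_rating(acceptable_flags):
    """Rate a questionnaire from per-correlation acceptability flags.

    Returns "+" for >=80 % acceptable correlations, "+/-" for >=50 % and
    <80 %, and "-" for <50 %.
    """
    total = len(acceptable_flags)
    if total == 0:
        raise ValueError("no correlations to rate")
    acceptable = sum(1 for flag in acceptable_flags if flag)
    if acceptable * 100 >= 80 * total:
        return "+"
    if acceptable * 100 >= 50 * total:
        return "+/-"
    return "-"


# Hypothetical study reporting five correlations, four of them acceptable.
print(evidence_rating([True, True, True, True, False]))
```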

2.6.2 Validity

Validity refers to the degree to which a measurement instrument measures what it is supposed to measure [20]. Validity concerns three measurement properties, i.e. content validity, structural validity, and construct validity. Content validity refers to the degree to which the content of a questionnaire adequately reflects the constructs to be measured [20]; structural validity refers to the degree to which the scores of a questionnaire are an adequate reflection of the dimensionality of the construct to be measured [20]; and construct validity refers to the degree to which the scores of a measurement instrument agree with hypotheses, e.g. agreement with scores of another measurement instrument [20]. In case of structural validity, a factor analysis was considered appropriate if the explained amount of variance by the extracted factors was at least 50 % or when the comparative fit index (CFI) was >0.95 [21, 22]. However, as most of the included construct validity studies lacked a priori formulated hypotheses, it was unclear what was expected, making it difficult to interpret these results. Table 1 presents the criteria for judging the results of construct validity studies. Level 1 indicates strong evidence, level 2 indicates moderate evidence, and level 3 indicates weak evidence, yet worthwhile to investigate further. Similar to the reliability rating, an overall evidence rating for construct validity was applied, incorporating all available correlations provided for each questionnaire per study. As no hypotheses for validity were available in relation to mean differences and limits of agreement, only a description of the results is included in Sect. 3.

3 Results

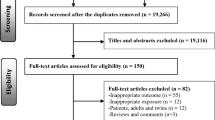

A total of 3049, 4384, and 2016 studies were identified in the PubMed, EMBASE, and SPORTDiscus databases, respectively. After removing duplicates, 7904 studies remained. After screening titles and abstracts, 72 full-text papers were assessed for eligibility, of which 30 met the inclusion criteria. Another 16 studies were found through cross-reference searches. Eventually, 46 studies on 46 questionnaires were included (Fig. 1), of which 33 assessed test–retest reliability, nine assessed measurement error, two assessed internal consistency, 22 assessed construct validity, eight assessed content validity, and two assessed structural validity. Two of the included questionnaires were assessed by two studies, i.e. the Patient-Reported Outcome Measurement Information System [23, 24] and the Girls Health Enrichment Multi-site Studies Activity Questionnaire [25, 26]. In addition, multiple modified versions of questionnaires were examined by the included studies, i.e. two versions of the Canadian Health Measures Survey [27, 28], the Adolescent Sedentary Activity Questionnaire [29, 30], the International Physical Activity Questionnaire–Short Form [31, 32], and the Youth Risk Behavior Survey [34, 35]. Furthermore, three versions of the Self-Administered Physical Activity Checklist [36–38] and the Health Behavior in School-aged Children were included [39–41]. The remaining questionnaires were only examined by one single study.
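The flow of records reported above can be checked with simple arithmetic. The sketch below uses only counts stated in this section; the number of duplicates removed is derived here and is not reported in the text, and the variable names are illustrative.

```python
# Records identified per database, as reported in the text.
identified = {"PubMed": 3049, "EMBASE": 4384, "SPORTDiscus": 2016}
after_duplicates = 7904        # records remaining after de-duplication
from_database_search = 30      # full texts meeting the inclusion criteria
from_cross_references = 16     # studies found through cross-reference searches

total_identified = sum(identified.values())
# Derived value (not stated in the text): duplicates removed.
duplicates_removed = total_identified - after_duplicates
included_studies = from_database_search + from_cross_references

print(total_identified, duplicates_removed, included_studies)
```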

3.1 Description of Questionnaires

Electronic supplementary material Table S1 provides a description of the included questionnaires, stratified by age group, i.e. preschoolers younger than 6 years of age, children aged between 6 and 12 years, and adolescents from the age of 12 years. Of the included questionnaires, 8 were designed for preschoolers, 24 were designed for children, and 14 were designed for adolescents. Nineteen of the questionnaires merely focused on screen time, while 27 focused on a variety of constructs of sedentary behavior. Response categories were mostly categorical (e.g. Likert scale) or continuous (e.g. time spent, in hours and/or minutes). Recall periods varied across questionnaires, including past few months, last week, previous day, and a usual/habitual/typical day/week.

3.2 Test–Retest Reliability

Table 2 summarizes the test–retest reliability studies, of which four were in preschoolers, 18 were in children, and 11 were in adolescents and older children. None of the studies received an excellent methodological quality rating, 9 had a good rating, 17 had a fair rating, 6 had a poor rating, and 1 of the studies received both a fair rating and a poor rating due to the use of multiple time intervals. A small sample size and no description of how missing items were handled were the major reasons for the low methodological quality ratings. In preschoolers, the Energy Balance-Related Behaviors self-administered primary caregiver questionnaire [42] seemed the most reliable, currently available questionnaire for assessing sedentary behavior, although the methodological quality of this study was only rated as fair and the evidence was mixed. For children and adolescents, the most reliable, currently available questionnaires were the Sedentary Behavior and Sleep Scale [43] (i.e. good methodological quality, mixed evidence rating) and the Adolescent Sedentary Activity Questionnaire (Brazilian version) [30] (i.e. fair methodological quality, positive evidence rating), respectively.

3.3 Measurement Error

Table 3 shows an overview of the nine studies that assessed the measurement error of questionnaires. One of the included measurement error studies received a good methodological quality rating, while eight of the studies received a fair rating, predominantly due to the lack of describing how missing items were handled. The questionnaires showing the highest percentage of agreement between two measurements are the ‘Questionnaire for measuring length of sleep, television habits and computer habits’ [44], and the ‘Measures of out-of-school sedentary and travel behaviors of the iHealt(H) study’ [45], for children and adolescents, respectively.

3.4 Internal Consistency

Internal consistency was analyzed in two of the included studies, demonstrating acceptable Cronbach’s alphas (i.e. 0.75 for the unidimensional sedentary lifestyle subscale [35], and 0.78 for the unidimensional sedentary behavior subscale [46]). The methodological quality was rated as good and excellent, respectively.

3.5 Construct Validity

Of the included construct validity studies, 3 included preschoolers as a study population, 13 studies included children, and 6 studies included adolescents and older children. Table 4 summarizes the construct validity studies (n = 21) examining the relationship of the questionnaire with other measurement instruments. None of these studies received an excellent or good methodological quality rating, 5 received a fair rating, and 16 were rated as poor. Major reasons for the low methodological quality scores were both the lack of a priori formulated hypotheses and the use of comparison measures with unknown measurement properties. In preschoolers, the Direct Estimate [47] seemed the most valid, currently available, sedentary behavior questionnaire as it received a positive level 2 evidence rating and a fair methodological quality rating. In children, the Youth Activity Profile [52] seemed the most valid questionnaire as it received a positive level 2 evidence rating and a fair methodological quality. Studies in adolescents only received negative evidence ratings, thus no final conclusion regarding the most valid sedentary behavior questionnaires can be drawn. One of the construct validity studies was not included in Table 4 [46] as it examined construct validity by testing a hypothesis with regard to differences in scores between known groups. On the Energy Retention Behavior Scale, scores for known group validity demonstrated statistically significant higher scores for overweight or obese children than for underweight or normal-weight children, which was in line with the a priori hypothesis.

3.6 Structural Validity

Two of the included studies analyzed the structural validity of the questionnaire, i.e. the Korean Youth Risk Behavior Survey (KYRBS) [35] and the Energy Retention Behavior Scale for Children (ERB–C scale) [46]. Structural validity was assessed by performing confirmatory factor analysis. The KYRBS includes five subscales, including one sedentary lifestyle subscale, while the ERB–C scale includes two subscales, one of which is sedentary behavior. Both studies showed acceptable fit of the expected factor structures, i.e. Normed Fit Index (NFI) 0.960, Tucker–Lewis Index (TLI) 0.956, CFI 0.969, and root mean square error of approximation (RMSEA) 0.034 for the KYRBS [35], and NFI 0.91, non-NFI (NNFI) 0.92, CFI 0.95, and RMSEA 0.08 for the ERB–C scale [46]. The methodological quality was rated as good and excellent, respectively.

3.7 Content Validity

Eight studies evaluated the content validity of the questionnaire, of which four predominantly focused on the comprehensibility of the questionnaire by asking children or parents about, for example, terminology, appropriateness of reading level, ambiguity, and other difficulties [29, 44, 46, 48]. The other four studies focused on the content of the questionnaire by consulting experts, e.g. researchers active in the field of physical activity, about, for example, relevance of items [30, 44, 46, 48]. Because most of the included studies provided minimal information about the procedures, it was impossible to assess the quality of the content validity studies and thus to interpret the results. In addition, seven of the included studies incorporated pilot testing of the questionnaire for comprehensibility. Unfortunately, too little information was provided to assess the methodology of the content validity examination [33, 38, 45, 49–52]. Additionally, translation processes were mentioned in six [24, 30, 42, 45, 53, 54] of the included studies. Due to minimal information about the methods used, the quality of the greater part of these studies was unclear.

4 Discussion

The aim of this review was to summarize existing evidence on the measurement properties of self-report or proxy-report questionnaires assessing sedentary behavior in children and adolescents under the age of 18 years. Additionally, we summarized the characteristics of the included self-report and proxy-report questionnaires. Our summary yielded a wide variety of questionnaires, designed for different target populations and assessing different constructs and dimensions of sedentary behavior. Test–retest reliability correlations of the included questionnaires ranged from 0.06 to 0.97. In addition, correlations found for construct validity ranged from −0.16 to 0.84. Although a number of studies received a positive evidence rating for test–retest reliability or construct validity, the methodological quality of the studies was mostly rated as fair or poor. Unfortunately, no questionnaires assessing total sedentary behavior or other constructs of sedentary behavior with a positive evidence rating for both reliability and validity were available. Hence, we cannot make a conclusive recommendation about the best available sedentary behavior self-report or proxy-report questionnaire in children and adolescents.

4.1 Reliability and Measurement Error

As the methodological quality of the included studies assessing test–retest reliability and/or measurement error was mainly rated as fair or poor, no definite conclusion can be drawn about the reliability of the majority of the examined sedentary behavior questionnaires. Moreover, the lack of multiple studies assessing the same questionnaire in the same target population further limited the ability to draw final conclusions. To achieve higher methodological quality for both reliability and measurement error, we recommend that future studies include detailed descriptions of the methods used, e.g. how missing items were handled, and use an appropriate sample size [15, 17]. Additionally, as correlations varied across different recall periods (e.g. usually, or yesterday), and different time frames and constructs of sedentary behavior (e.g. weekdays and weekend days, overall sedentary behavior, and watching television), no conclusion can be drawn about specific time frames or constructs of sedentary behavior being more reliable than others. Furthermore, when measurement error is assessed, information on the MIC should be available to allow interpretation of the results [21]. To the best of our knowledge, no information on the MIC is available as yet.

4.2 Construct Validity

Due to the low methodological quality of the included studies examining validity, and the lack of multiple studies assessing the same questionnaire, no definite conclusion can be drawn about the validity of the examined questionnaires. We specifically recommend that future validity studies describe a priori hypotheses and choose comparison measures with known and acceptable measurement properties. The low methodological quality of all included validity studies might partly explain the high prevalence of negative evidence ratings, i.e. <50 % acceptable correlations.

Studies demonstrating acceptable correlations often used comparison measures providing weaker levels of evidence, i.e. other questionnaires or cognitive interviews (level 3 evidence). In general, higher correlations were found when comparison measures providing lower levels of evidence were used. A possible explanation is that both the questionnaire under study and such comparison measures, i.e. other questionnaires or cognitive interviews, depend on recall, whereas objective comparison measures providing higher levels of evidence, e.g. inclinometers and accelerometers, do not. Other potential factors that may explain the low correlations are inadequate content validity, the lack of a gold standard, and a mismatch in time frames between questionnaire and comparison measures. As the studies lack information about the development of the questionnaires (e.g. a justification of the constructs included, and the dimensions measured), and lack appropriate testing of the relevance, comprehensiveness, and comprehensibility of the content of the questionnaires, it remains unclear whether the content validity of the included questionnaires is acceptable. Evaluating the content validity of questionnaires is essential to obtain insight into the comprehensibility of the questionnaire for the target population, and to ensure that all relevant aspects of the construct are measured and that no irrelevant aspects are included [20]. Without evaluating these aspects of validity, there is no certainty that the questionnaire measures what it is supposed to measure. The limited attention to content validity is also shown by the wide variety of constructs (e.g. watching television, quiet play, studying), and dimensions (e.g. duration and frequency) being measured by the included questionnaires. A justification of these choices is lacking. Only two studies, by Tucker et al. [23, 24], provided sufficient description and support for the development of their questionnaire, e.g. experts in the field and the target population were consulted and contributed to the content of the questionnaire.

Furthermore, studies using a translated version of an existing questionnaire often did not report sufficient information about the translation processes. Only the studies by de Fátima Guimarães et al. [30] and Tucker et al. [24] included adequate descriptions of the translation process, e.g. translations by language experts, and review by experts in the field. Moreover, cross-cultural validation of the translated questionnaires was often not conducted, making it impossible to examine whether the questionnaire truly measured the same constructs as the original questionnaire [22].

Additionally, the available objective measures of sedentary behavior, e.g. inclinometers or accelerometers, still involve subjective decisions, e.g. the definition of non-wear time, the minimum number of valid hours per day and number of valid days, and the selection of a cut point for sedentary behavior. The accelerometer cut points for sedentary behavior in the included studies varied from <100 to <699 cpm, leading to different estimates of sedentary time. Importantly, constructs measured by questionnaire and accelerometer may not correspond when cut points deviating from <100 cpm are applied [55], as lower cut points may exclude parts of sedentary time and higher cut points may include light physical activity. The problem of mismatched constructs also occurs in some cases due to non-corresponding time frames addressed by the measurement instrument and the comparison measures, e.g. leisure time versus all day.

4.3 Strengths and Limitations

A major strength of our review is that the methodological quality rating was performed separately from the interpretation of the findings. This makes the final evidence rating more transparent, e.g. whether negative evidence ratings are due to low-quality questionnaires in case of good or excellent methodological quality studies, or may be biased, in case of poor methodological quality. Additionally, through structured cross-reference searches, we also included studies that were not primarily aimed at examining measurement properties. Another strength is that at least two independent authors conducted the literature search and data extraction, as well as the quality rating. However, our review also has limitations. As most included studies did not report all details needed for an adequate quality rating, the quality ratings of the studies may have been underestimated. We did not contact authors for additional information as this would favor recent studies over older studies, thereby optimizing quality ratings of recent papers. Furthermore, only English-language papers were included, and as a result we might have missed relevant studies. Moreover, in some studies that were found through cross-reference searches, examining the measurement properties was not the primary aim. There is a possibility that not all such studies were found through cross-reference searches, yet finding these studies through systematic literature searches seems impossible as information on the assessment of measurement properties or sedentary behavior assessment by the questionnaires is lacking in the titles and abstracts.

4.4 Recommendations for Future Studies

Studies focusing on the development of questionnaires need to pay more attention to content validity. Moreover, the content validity of currently available questionnaires needs to be examined by testing the relevance, comprehensiveness, and comprehensibility of the content of the questionnaires, using appropriate qualitative methods [22]. The COSMIN group is currently developing detailed standards for assessing the content validity of health status questionnaires, which may also be useful for assessing the content validity of sedentary behavior questionnaires (see http://www.cosmin.nl for more information). Criteria that, in our opinion, need to be considered are (i) a clear description and adequate reflection of the construct to be measured; (ii) comprehensibility of questions; (iii) appropriate response options; (iv) appropriate recall period; (v) appropriate mode of administration; and (vi) an appropriate scoring algorithm. A justification of choices needs to be provided, for example based on input from experts in the field and the target population.

More high-quality research on the construct validity, reliability, measurement error, and responsiveness of the questionnaires is also needed, as well as studies on internal consistency and structural validity for questionnaires where this is applicable. To achieve high methodological quality, we recommend using a standardized tool, e.g. the COSMIN checklist [16, 56]. This tool can be used for the design of the study and provides an overview of what should be reported. Additionally, we recommend that when reviewers and journal editors evaluate studies, they take into consideration whether the investigators used such a standardized tool, in order to prevent the publication of studies with inadequate information and low methodological quality. This need for a standardized tool for the assessment of measurement properties is consistent with recommendations by Kelly et al. [57].

In addition, for the construct validity of questionnaires assessing total sedentary time, we recommend using more objective comparison measures that provide high-level evidence and have available and acceptable measurement properties, e.g. inclinometers or accelerometers, instead of using measurement instruments with unknown or unacceptable measurement properties. Furthermore, appropriate accelerometer cut points for sedentary behavior need to be applied, e.g. <100 cpm [55, 58]. However, as the accuracy of accelerometers for measuring sedentary behavior remains questionable, and distinguishing sitting from standing quietly remains problematic [11], we recommend using the activPAL as an objective comparison measure for total sedentary time [9]. Importantly, the questionnaire in use and the comparison measure need to measure corresponding constructs and/or time frames. Additionally, a priori hypotheses should always be stated to ensure unbiased interpretation of the results.

Finally, as a wide variety of questionnaires are available, we recommend that researchers critically review whether existing or slightly modified questionnaires are adequate for use in new studies, instead of developing new questionnaires. Moreover, we recommend that authors of papers on measurement properties include the questionnaire under study and provide more details about its characteristics, e.g. questions and response options, so that researchers can assess whether existing questionnaires are adequate for their research.

5 Conclusions

None of the self- or proxy-report sedentary behavior questionnaires for children and adolescents included in this review were considered both valid and reliable. Whether this is due to the low methodological quality of the included studies or to poorly developed questionnaires is unclear. In addition, the lack of multiple studies assessing both the validity and reliability of a questionnaire in the same study population hampered our ability to draw a definite conclusion on the best available instruments. Therefore, we recommend more high-quality studies examining the measurement properties of the most promising sedentary behavior questionnaires. High methodological quality can be achieved by using standardized tools such as the COSMIN checklist [16].

References

Sedentary Behaviour Research Network. Letter to the editor: standardized use of the terms “sedentary” and “sedentary behaviours”. Appl Physiol Nutr Metab. 2012;37:540–2.

Hallal PC, Andersen LB, Bull FC, et al. Global physical activity levels: surveillance progress, pitfalls, and prospects. Lancet. 2012;380:247–57.

Olds TS, Maher CA, Ridley K, et al. Descriptive epidemiology of screen and non-screen sedentary time in adolescents: a cross sectional study. Int J Behav Nutr Phys Act. 2010;7:92.

de Rezende LFM, Rodrigues Lopes M, Rey-López JP, et al. Sedentary behavior and health outcomes: an overview of systematic reviews. PLoS One. 2014;9:e105620.

Chinapaw MJM, Proper KI, Brug J, et al. Relationship between young peoples’ sedentary behaviour and biomedical health indicators: a systematic review of prospective studies. Obes Rev. 2011;12:e621–32.

van Ekris E, Altenburg TM, Singh A, et al. An evidence-update on the prospective relationship between childhood sedentary behaviour and biomedical health indicators: a systematic review and meta-analysis. Obes Rev. 2016 (Epub 3 Jun 2016).

Lubans DR, Hesketh K, Cliff DP, et al. A systematic review of the validity and reliability of sedentary behaviour measures used with children and adolescents. Obes Rev. 2011;12:781–99.

Basterfield L, Adamson AJ, Pearce MS, et al. Stability of habitual physical activity and sedentary behavior monitoring by accelerometry in 6- to 8-year-olds. J Phys Act Health. 2011;8:543–7.

Aminian S, Hinckson EA. Examining the validity of the ActivPAL monitor in measuring posture and ambulatory movement in children. Int J Behav Nutr Phys Act. 2012;9:119.

Welk GJ, Corbin CB, Dale D. Measurement issues in the assessment of physical activity in children. Res Q Exerc Sport. 2000;71:59–73.

de Vries SI, Engels M, Garre FG. Identification of children’s activity type with accelerometer-based neural networks. Med Sci Sports Exerc. 2011;43:1994–9.

Sallis JF. Self-report measures of children’s physical activity. J Sch Health. 1991;61:215–9.

Kohl HW, Fulton JE, Caspersen CJ. Assessment of physical activity among children and adolescents: a review and synthesis. Prev Med. 2000;31:S54–76.

Terwee CB, Mokkink LB, van Poppel MNM, et al. Qualitative attributes and measurement properties of physical activity questionnaires: a checklist. Sports Med. 2010;40:525–37.

Terwee CB, Mokkink LB, Knol DL, et al. Rating the methodological quality in systematic reviews of studies on measurement properties: a scoring system for the COSMIN checklist. Qual Life Res. 2012;21:651–7.

Mokkink LB, Terwee CB, Patrick DL, et al. The COSMIN checklist for assessing the methodological quality of studies on measurement properties of health status measurement instruments: an international Delphi study. Qual Life Res. 2010;19:539–49.

Terwee CB. COSMIN checklist with 4-point scale. 2011. Available at: http://www.cosmin.nl/cosmin_checklist.html. Accessed 1 Aug 2015.

de Vet HCW, Mokkink LB, Terwee CB, et al. Clinicians are right not to like Cohen’s κ. BMJ. 2013;346:f2125.

Chinapaw MJM, Mokkink LB, van Poppel MNM, et al. Physical activity questionnaires for youth: a systematic review of measurement properties. Sports Med. 2010;40:539–63.

Mokkink LB, Terwee CB, Patrick DL, et al. The COSMIN study reached international consensus on taxonomy, terminology, and definitions of measurement properties for health-related patient-reported outcomes. J Clin Epidemiol. 2010;63:737–45.

Terwee CB, Bot SDM, de Boer MR, et al. Quality criteria were proposed for measurement properties of health status questionnaires. J Clin Epidemiol. 2007;60:34–42.

de Vet HCW, Terwee CB, Mokkink LB, et al. Measurement in medicine: a practical guide, 1st ed. Cambridge: Cambridge University Press; 2011.

Tucker CA, Bevans KB, Teneralli RE, et al. Self-reported pediatric measures of physical activity, sedentary behavior, and strength impact for PROMIS: conceptual framework. Pediatr Phys Ther. 2014;26:376–84.

Tucker CA, Bevans KB, Teneralli RE, et al. Self-reported pediatric measures of physical activity, sedentary behavior, and strength impact for PROMIS: item development. Pediatr Phys Ther. 2014;26:385–92.

Treuth MS, Sherwood NE, Butte NF, et al. Validity and reliability of activity measures in African–American girls for GEMS. Med Sci Sports Exerc. 2003;35:532–9.

Treuth MS, Sherwood NE, Baranowski T, et al. Physical activity self-report and accelerometry measures from the girls health enrichment multi-site studies. Prev Med. 2004;38:43–9.

Colley RC, Wong SL, Garriguet D, et al. Physical activity, sedentary behaviour and sleep in Canadian children: parent-report versus direct measures and relative associations with health risk. Health Rep. 2012;23:45–52.

Sarker H, Anderson LN, Borkhoff CM, et al. Validation of parent-reported physical and sedentary activity by accelerometry in young children. BMC Res Notes. 2015;8:735.

Hardy LL, Booth ML, Okely AD. The reliability of the Adolescent Sedentary Activity Questionnaire (ASAQ). Prev Med. 2007;45:71–4.

de Fátima Guimarães R, da Silva MP, Legnani E, et al. Reproducibility of adolescent sedentary activity questionnaire (ASAQ) in Brazilian adolescents. Braz J Kinanthropom Hum Perform. 2013;15:276–85.

Rangul V, Holmen TL, Kurtze N, et al. Reliability and validity of two frequently used self-administered physical activity questionnaires in adolescents. BMC Med Res Methodol. 2008;8:47.

Wang C, Chen P, Zhuang J. Validity and reliability of international physical activity questionnaire-short form in Chinese youth. Res Q Exerc Sport. 2013;84:S80–6.

Ching PLYH, Dietz WH. Reliability and validity of activity measures in preadolescent girls. Pediatr Exerc Sci. 1995;7:389–99.

Brener ND, Kann L, McManus T, et al. Reliability of the 1999 youth risk behavior survey questionnaire. J Adolesc Health. 2002;31:336–42.

Park JM, Han AK, Cho YH. Construct equivalence and latent means analysis of health behaviors between male and female middle school students. Asian Nurs Res. 2011;5:216–21.

Affuso O, Stevens J, Catellier D, et al. Validity of self-reported leisure-time sedentary behavior in adolescents. J Negat Results Biomed. 2011;10:2.

Brown TD, Holland BV. Test-retest reliability of the self-assessed physical activity checklist. Percept Mot Skills. 2004;99:1099–102.

Sallis JF, Strikmiller PK, Harsha DW, et al. Validation of interviewer- and self-administered physical activity checklists for fifth grade students. Med Sci Sports Exerc. 1996;28:840–51.

Vereecken CA, Todd J, Roberts C, et al. Television viewing behaviour and associations with food habits in different countries. Public Health Nutr. 2006;9:244–50.

Liu Y, Wang M, Tynjälä J, et al. Test-retest reliability of selected items of Health Behaviour in School-aged Children (HBSC) survey questionnaire in Beijing, China. BMC Med Res Methodol. 2010;10:73.

Bobakova D, Hamrik Z, Badura P, et al. Test–retest reliability of selected physical activity and sedentary behaviour HBSC items in the Czech Republic, Slovakia and Poland. Int J Public Health. 2014;60:59–67.

González-Gil EM, Mouratidou T, Cardon G, et al. Reliability of primary caregivers reports on lifestyle behaviours of European pre-school children: the ToyBox-study. Obes Rev. 2014;15:61–6.

Mellecker RR, McManus AM, Matsuzaka A. Validity and reliability of the sedentary behavior and sleep scale (SBSS) in young Hong Kong Chinese children. Asian J Exerc Sports Sci. 2012;9:21–36.

Garmy P, Jakobsson U, Nyberg P. Development and psychometric evaluation of a new instrument for measuring sleep length and television and computer habits of Swedish school-age children. J Sch Nurs. 2012;28:138–43.

Cerin E, Sit CHP, Huang Y-J, et al. Repeatability of self-report measures of physical activity, sedentary and travel behaviour in Hong Kong adolescents for the iHealt(H) and IPEN—adolescent studies. BMC Pediatr. 2014;14:142.

Chen S-W, Cheng C-P, Wang R-H, et al. Development and psychometric testing of an energy retention behavior scale for children. J Nurs Res. 2015;23:47–55.

Anderson DR, Field DE, Collins PA, et al. Estimates of young children’s time with television: a methodological comparison of parent reports with time-lapse video home observation. Child Dev. 1985;56:1345–57.

Dwyer GM, Hardy LL, Peat JK, et al. The validity and reliability of a home environment preschool-age physical activity questionnaire (Pre-PAQ). Int J Behav Nutr Phys Act. 2011;8:86.

Bacardi-Gascón M, Reveles-Rojas C, Woodward-Lopez G, et al. Assessing the validity of a physical activity questionnaire developed for parents of preschool children in Mexico. J Health Popul Nutr. 2012;30:439–46.

Wong SL, Leatherdale ST, Manske SR. Reliability and validity of a school-based physical activity questionnaire. Med Sci Sports Exerc. 2006;38:1593–600.

Schmitz KH, Harnack L, Fulton JE, et al. Reliability and validity of a brief questionnaire to assess television viewing and computer use by middle school children. J Sch Health. 2004;74:370–7.

Saint-Maurice PF, Welk GJ. Validity and calibration of the youth activity profile. PLoS One. 2015;10:e0143949.

Singh AS, Vik FN, Chinapaw MJM, et al. Test-retest reliability and construct validity of the ENERGY-child questionnaire on energy balance-related behaviours and their potential determinants: the ENERGY-project. Int J Behav Nutr Phys Act. 2011;8:136.

Huang YJ, Wong SHS, Salmon J. Reliability and validity of the modified Chinese version of the Children’s Leisure Activities Study Survey (CLASS) questionnaire in assessing physical activity among Hong Kong children. Pediatr Exerc Sci. 2009;21:339–53.

Ridgers ND, Salmon J, Ridley K, et al. Agreement between activPAL and ActiGraph for assessing children’s sedentary time. Int J Behav Nutr Phys Act. 2012;9:15.

Terwee CB, Mokkink LB, Hidding LM, et al. Comment on “Should we reframe how we think about physical activity and sedentary behavior measurement? Validity and reliability reconsidered”. Int J Behav Nutr Phys Act. 2016;13:66.

Kelly P, Fitzsimons C, Baker G. Should we reframe how we think about physical activity and sedentary behaviour measurement? Validity and reliability reconsidered. Int J Behav Nutr Phys Act. 2016;13:32.

Yıldırım M, Verloigne M, de Bourdeaudhuij I, et al. Study protocol of physical activity and sedentary behaviour measurement among schoolchildren by accelerometry: cross-sectional survey as part of the ENERGY-project. BMC Public Health. 2011;11:182.

Bonn SE, Surkan PJ, Trolle Lagerros Y, et al. Feasibility of a novel web-based physical activity questionnaire for young children. Pediatr Rep. 2012;4:127–9.

Janz KF, Broffitt B, Levy SM. Validation evidence for the Netherlands physical activity questionnaire for young children: the Iowa Bone Development Study. Res Q Exerc Sport. 2005;76:363–9.

Salmon J, Campbell KJ, Crawford DA. Television viewing habits associated with obesity risk factors: a survey of Melbourne schoolchildren. Med J Aust. 2006;184:64–7.

Vik FN, Lien N, Berntsen S, et al. Evaluation of the UP4FUN intervention: a cluster randomized trial to reduce and break up sitting time in European 10-12-year-old children. PLoS One. 2015;10:e0122612.

Bringolf-Isler B, Mäder U, Ruch N, et al. Measuring and validating physical activity and sedentary behavior comparing a parental questionnaire to accelerometer data and diaries. Pediatr Exerc Sci. 2012;24:229–45.

Barbosa N, Sanchez CE, Vera JA, et al. A physical activity questionnaire: reproducibility and validity. J Sports Sci Med. 2007;6:505–18.

Chinapaw MJM, Slootmaker SM, Schuit AJ, et al. Reliability and validity of the Activity Questionnaire for Adults and Adolescents (AQuAA). BMC Med Res Methodol. 2009;9:58.

Strugnell C, Renzaho A, Ridley K, et al. Reliability of the modified child and adolescent physical activity and nutrition survey, physical activity (CAPANS-PA) questionnaire among Chinese-Australian youth. BMC Med Res Methodol. 2011;11:122.

Philippaerts RM, Matton L, Wijndaele K, et al. Validity of a physical activity computer questionnaire in 12- to 18-year-old boys and girls. Int J Sports Med. 2006;27:131–6.

Rey-López JP, Ruiz JR, Ortega FB, et al. Reliability and validity of a screen time-based sedentary behaviour questionnaire for adolescents: the HELENA study. Eur J Public Health. 2012;22:373–7.

Busschaert C, De Bourdeaudhuij I, Van Holle V, et al. Reliability and validity of three questionnaires measuring context-specific sedentary behaviour and associated correlates in adolescents, adults and older adults. Int J Behav Nutr Phys Act. 2015;12:117.

Wright ND, Groisman-Perelstein AE, Wylie-Rosett J, et al. A lifestyle assessment and intervention tool for pediatric weight management: the HABITS questionnaire. J Hum Nutr Diet. 2011;24:96–100.

Sithole F, Veugelers PJ. Parent and child reports of children’s activity. Health Rep. 2008;19:19–24.

Hardy LL, Bass SL, Booth ML. Changes in sedentary behavior among adolescent girls: a 2.5-year prospective cohort study. J Adolesc Health. 2007;40:158–65.

Ethics declarations

Funding

The contributions of Teatske Altenburg and Mai Chinapaw were funded by the Netherlands Organization for Health Research and Development (ZonMw Project Number 91211057). No other sources of funding were used to assist in the preparation of this article.

Conflict of interest

Lidwine Mokkink and Caroline Terwee are founders of the COSMIN initiative. Lisan Hidding, Teatske Altenburg and Mai Chinapaw declare that they have no conflicts of interest relevant to the content of this review.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Hidding, L.M., Altenburg, T.M., Mokkink, L.B. et al. Systematic Review of Childhood Sedentary Behavior Questionnaires: What do We Know and What is Next?. Sports Med 47, 677–699 (2017). https://doi.org/10.1007/s40279-016-0610-1

DOI: https://doi.org/10.1007/s40279-016-0610-1