Abstract

Cost-effectiveness analyses (CEAs) can be used to assess the value of diagnostics in clinical practice. Due to the introduction of the European in vitro diagnostic and medical devices regulations, more clinical data on new diagnostics may become available, which may increase both the interest in and the feasibility of performing CEAs. We present eight recommendations on the reporting and design of CEAs of diagnostics. The symptoms patients experience, the clinical setting, locations of test sampling and analysis, and diagnostic algorithms should be clearly reported. The time horizon used should reflect the time horizon used to model the treatment after the diagnostic pathway. Quality-adjusted life-years (QALYs) or disability-adjusted life-years (DALYs) should be used as the clinical outcomes but may be combined with other relevant outcomes, such as real options value. If the number of tests using the same equipment can vary, the economy of scale should be considered. An understandable graphical representation of the results for the various diagnostic algorithms, such as an efficiency frontier, should be provided. Finally, the budget impact and affordability should be considered. These recommendations can be used in addition to other, more general, recommendations, such as the Consolidated Health Economic Evaluation Reporting Standards (CHEERS) or the reference case for economic evaluation by the International Decision Support Initiative.

To assess the value of diagnostic interventions, cost-effectiveness analyses can be used; however, we previously identified some gaps in the design and reporting of these studies.

Compared with pharmaceutical interventions, assessing the cost effectiveness of diagnostic strategies can be more challenging, as various diseases or treatment options may be important to consider.

We provide eight recommendations related to the design and reporting of health-economic analyses of diagnostics, which can be used in addition to more general guidelines.

1 Introduction

Over the past decades, policy makers in the healthcare sector have tried to control the rising costs of pharmaceuticals in different ways [1, 2]. As one approach, value-based pricing of new drugs aims to maximise the health-related and economic outcomes given a prespecified willingness to pay; in many countries, this has become a widespread method to assess the pricing and reimbursement of new pharmaceuticals entering the market [3, 4]. In recent years, attention has also expanded towards companion diagnostics for innovative treatments: highly specialised diagnostic tests paired to a specific drug in the context of what is labelled personalised medicine [5, 6]. Personalised medicine entails that drugs are targeted more to specific patient subgroups, with the aim of reducing the uncertainty about whether the drug will be effective before administration and correspondingly improving the cost effectiveness of the drug considered.

Diagnostic tests are used more widely in modern medicine than just as companion diagnostics, and often in less well-defined populations. Examples include C-reactive protein (CRP) tests to check whether a patient with a cough has a viral or bacterial infection, an international normalised ratio (INR) test to diagnose bleeding disorders, or an HbA1c test for diabetes. Many national pharmacoeconomic guidelines nowadays also consider the assessment of non-pharmaceuticals, such as diagnostics, although, in practice, these analyses are not as common [7]. There is limited evidence on the pricing and reimbursement policies of diagnostics [8, 9]. A recent report on pricing and reimbursement policies in various European countries concluded that health technology assessment is rarely used for diagnostics [8]. We believe the role of the cost effectiveness of diagnostic methods will increase in the coming years, but, with that, certain challenges will arise.

Compared with pharmaceuticals, for which the market entry regulations are well established for various jurisdictions [2], the evidence for diagnostics, and medical devices in general, is very limited [8]. In 2017, the new European Union (EU) regulation on in vitro diagnostic (IVD) medical devices was approved, which will come into full effect starting in 2022 [10]. An estimated 85% of IVDs will be under the oversight of a notified body, compared with 20% previously [11]. IVD companies will need to collect more data on the technical and clinical performance of new devices before market entry, and also increase postmarket surveillance [11]. Consistent and high-quality data will provide healthcare professionals and policy makers with more tools to assess the safety and effectiveness of new IVDs in clinical practice. We expect this will also lead to an increase in cost-effectiveness analyses (CEA) of these devices. Diagnostics are not limited to IVDs; software or devices used for the diagnosis of a disease fall under the EU regulation on medical devices (MDR) [12] and similar regulations related to oversight apply [11]. An example of this would be a smartphone application (app) used by clinicians to determine the most likely disease and optimal treatment, based on a patient’s symptoms.

2 Aim and Approach

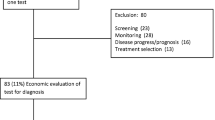

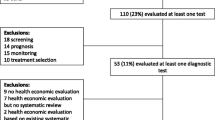

We recently systematically reviewed many CEAs of diagnostic strategies for infectious diseases, focusing on the modelling techniques used [13, 14]. In the process, we identified several gaps in the reporting of diagnostic interventions, but also common structural problems related to the design of health-economic models. These gaps included incomplete descriptions of the assessed diagnostic and setting, short time horizons, and limited use of generalisable outcomes. Additionally, we drew on our experience in consulting on the health-economic aspects of clinical trials of diagnostics. Our aim was to provide specific recommendations to aid in the design and reporting of CEAs of diagnostics.

Excellent recommendations are already available to aid in the design and reporting of economic evaluations. The Consolidated Health Economic Evaluation Reporting Standards (CHEERS) statement is a collection of 24 recommendations aiding in the reporting of the methods and results of economic analyses for interventions in healthcare [15]. CHEERS is not tailored to any specific intervention and can be used for preventive measures, diagnostics and treatment [15]. The International Decision Support Initiative’s reference case for economic evaluation provides 11 principles to guide the conduct and reporting of economic evaluations to improve their methodological quality and transferability [16]. The methodological specifications relate to the health outcomes used, and the estimation of costs and transparency, among others. However, due to their broad scope, these recommendations do not provide specific guidance for diagnostic strategies. We link this diagnostic-specific guidance to the related items of the more general CHEERS statement and the reference case [15, 16], to enable other researchers to use this guidance in addition to the already available recommendations.

3 Definition of Diagnostics

While many different tests are performed in the healthcare sector, not all of them can be considered diagnostic tests. We consider three types of strategies, depending on the aim of the test [17]:

- Screening: Finding diseases in a defined population, in people without, or unaware of, symptoms [18].
- Diagnosing: Identifying the most likely cause of, and, optionally, optimal treatment for, a previously undetected disease in a clinically suspect patient who is seeking care [13, 19]. A diagnostic specifically aimed at determining the optimal treatment option for a patient with a previously diagnosed disease is considered a companion diagnostic [5].
- Monitoring: Periodic or continuous tests to observe a biological condition or function, including the effectiveness of treatment [20].

Although similar or identical tests may be used for each of the strategies, the decision problem related to the various strategies is quite different, hence each strategy presents unique challenges when designing a CEA. In this paper, we specifically focus on diagnostic strategies.

4 Recommendations

An overview of our recommendations is displayed in Table 1, including related CHEERS recommendations [15] and specifications from the reference case for economic evaluations [16]. The recommendations are explained in more detail below.

4.1 Target Population

A common way to specify a certain population in the medical field is to identify patients having a specific disease, for example heart failure patients or patients with neuroendocrine tumours. Especially in clinical trials, these specifications are often extended to patient characteristics such as age and comorbidities or with ranges of disease-specific biomarkers. When diagnosing a patient, a specific disease is often not yet known, but the symptoms are. These specific symptoms will influence the clinician’s decision to request additional diagnostic tests or use point-of-care (POC) diagnostics. Other determinants a clinician may use in deciding to use certain diagnostics include age, comorbidities and, if available, vaccination status.

Therefore, when specifying the target population of a diagnostic intervention, it is highly important to specify the symptoms patients have and other relevant determinants that may influence the clinician’s decision to continue diagnosing a patient. Additionally, it should be clear whether the patient population is screened, diagnosed, or monitored. However, this may be more difficult in the case of genomic tests, with potential spillover effects to relatives, where the population of interest is broader than just the patient tested [21]; the diagnosis of one patient may lead to the screening of family members or may inform reproductive planning.

4.2 Setting and Location

Linked to the target population are the setting and location. Populations presenting in primary care are different from patients who are referred to hospital care, who are different from patients admitted to the intensive care unit. In a healthcare system where the general practitioner (GP) has a gatekeeping role, a decision, based on clinical experience, to refer a patient to a hospital without performing any tests should already be regarded as a diagnostic intervention. The probability of having a disease will be higher in the hospital setting, considering the GP does not refer everyone and does not refer at random. Not all health systems rely on the gatekeeping role of the GP [22], and factors for patients seeking care differ culturally [23]. These factors will have an influence on the prevalence and severity of diseases at different settings within the healthcare sector. Hence, this context is important to include when describing the setting in which diagnostic tests are performed.

Another consideration linked to the setting is the location where the test sample will be collected and where it will be analysed, and how this affects the overall costs. Historically, samples were analysed in the laboratory, but, increasingly, testing will be performed at the POC [24]. Clearly specifying the location of sample collection and analysis is important, especially when a CEA compares different tests at different locations. This may be especially relevant for low- and middle-income countries (LMICs), where logistics can be more challenging. Although POC tests may be relatively expensive compared with tests analysed in large-scale laboratories [24], having a test result available during a consult can more directly influence a clinician’s decision on prescribing treatment and enables the clinician to use the information when communicating with the patient [25].

4.3 Comparators

The strategies being compared in the CEA should be clearly described [15]. While it may be convenient to think about comparing different individual tests in the context of CEAs of diagnostics, it may be more fitting to compare different diagnostic algorithms. A diagnostic cannot be regarded in isolation. If we consider a single diagnostic test, the diagnostic algorithm already contains three steps. First the clinician decides to perform the test, which is influenced by guidelines and the clinician’s experience; then there is the diagnostic itself, which may present a binary result, i.e., positive or negative, but also a quantitative result, an image or a recommendation; the final step is the interpretation of this result by the clinician and/or the patient, which may result in a decision to make lifestyle changes, to start treatment or continue with other diagnostics. Different diagnostics can be added, either simultaneously or sequentially, based on the results of prior tests. There may also be differences in the implementation of the algorithm in clinical practice, e.g., the implementation in clinical decision support software. Eventually, a diagnostic algorithm should lead to determining the most likely cause of a patient’s symptoms and aid in identifying the most suitable treatment. These types of algorithms are already very common in economic analyses, where they translate into decision-tree models [13, 14, 26]. For diagnostic algorithms that include many different outcomes, i.e., a decision tree branching out to hundreds of outcomes, simplifications may be warranted or more flexible modelling approaches can be considered [27].
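
As a minimal sketch of how the three-step, single-test algorithm described above could translate into a decision tree, the following Python snippet computes the expected cost and QALYs per patient for a test-and-treat strategy. The class name, the parameter names and all numerical values are hypothetical placeholders chosen for illustration; they are not taken from any of the cited studies, and a real model would typically need more branches (for example, follow-up testing or clinician overrides).

```python
from dataclasses import dataclass


@dataclass
class TestStrategy:
    """Hypothetical inputs for a simple test-and-treat decision tree."""
    prevalence: float       # pre-test probability of disease in the target population
    sensitivity: float      # P(test positive | disease)
    specificity: float      # P(test negative | no disease)
    cost_test: float        # cost of the diagnostic per patient
    cost_treatment: float   # cost of treatment, given to every test-positive patient
    qaly_tp: float          # payoff: diseased and treated
    qaly_fn: float          # payoff: diseased and missed
    qaly_fp: float          # payoff: healthy but treated unnecessarily
    qaly_tn: float          # payoff: healthy and untreated


def expected_values(s: TestStrategy) -> tuple[float, float]:
    """Roll back the tree: weight each terminal branch by its probability."""
    p_tp = s.prevalence * s.sensitivity
    p_fn = s.prevalence * (1 - s.sensitivity)
    p_fp = (1 - s.prevalence) * (1 - s.specificity)
    p_tn = (1 - s.prevalence) * s.specificity

    # Everyone is tested; only test-positive patients are treated (an assumption).
    cost = s.cost_test + (p_tp + p_fp) * s.cost_treatment
    qalys = (p_tp * s.qaly_tp + p_fn * s.qaly_fn
             + p_fp * s.qaly_fp + p_tn * s.qaly_tn)
    return cost, qalys


# Illustrative values only.
example = TestStrategy(prevalence=0.2, sensitivity=0.9, specificity=0.8,
                       cost_test=10.0, cost_treatment=100.0,
                       qaly_tp=0.9, qaly_fn=0.7, qaly_fp=0.95, qaly_tn=1.0)
cost, qalys = expected_values(example)
print(f"Expected cost: {cost:.2f}, expected QALYs: {qalys:.3f}")
```

Running the same function for each comparator algorithm yields the cost and outcome pairs needed for an incremental analysis.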

We highly recommend specifying these algorithms very clearly in any economic analysis of a diagnostic strategy. Even when comparing a switch from one diagnostic test to another, the algorithm in which the test operates may have a major impact. The decisions made and information gathered before performing the test influence the prior probabilities of obtaining a positive or negative test result. For diagnostic algorithms that are more expensive than the comparator, the eventual cost effectiveness is determined by the extent to which the information gathered can improve patient outcomes, i.e., whether the information leads to more tailored treatment.
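
The influence of prior probabilities can be illustrated with Bayes' theorem. The sketch below, using hypothetical values for test accuracy and for the pre-test probability in two settings, shows how the same test yields different positive and negative predictive values depending on where in the algorithm it is applied. The function name and the numbers are assumptions made for illustration only.

```python
def predictive_values(pretest_prob: float, sensitivity: float,
                      specificity: float) -> tuple[float, float]:
    """Return (PPV, NPV) for a binary test via Bayes' theorem."""
    tp = pretest_prob * sensitivity
    fn = pretest_prob * (1 - sensitivity)
    fp = (1 - pretest_prob) * (1 - specificity)
    tn = (1 - pretest_prob) * specificity
    ppv = tp / (tp + fp)  # P(disease | positive result)
    npv = tn / (tn + fn)  # P(no disease | negative result)
    return ppv, npv


# Hypothetical pre-test probabilities: lower in primary care, higher after referral.
for setting, prior in [("primary care", 0.05), ("hospital", 0.30)]:
    ppv, npv = predictive_values(prior, sensitivity=0.90, specificity=0.90)
    print(f"{setting}: PPV = {ppv:.2f}, NPV = {npv:.2f}")
```

With these illustrative inputs, a positive result is far more informative in the higher-prevalence setting, which is one reason the preceding steps of the algorithm should be reported.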

4.4 Time Horizon

Many economic evaluations of diagnostics primarily use the algorithm or decision tree to model the health-economic outcomes, as specified above. However, this may lead to challenges in assessing the long-term clinical outcomes for patients as these cannot be modelled explicitly. Generally, a lifetime horizon should be used [16]; there may be reasons to use a shorter time horizon, but it should still cover all relevant costs and outcomes. Economic analyses only assessing a time horizon as long as the diagnostic process, as seen rather frequently in the literature [13], will in most cases not cover all relevant costs and outcomes. The time horizon should be similar to the time horizon over which costs and consequences of treatment following the diagnostic process are typically evaluated.

An additional factor to consider for economic evaluations of diagnostics is the time to correct diagnosis. A faster diagnostic algorithm may result in time reductions for patients, clinicians or laboratory technicians, leading to a more efficient decision-making process [25]. In the case of infectious disease, faster diagnosis may reduce the transmission of a disease, a factor generally considered to be an important aspect of value in health care (fear and risk of contagion) [28].

Combining very short-term (time to correct diagnosis) and long-term modelling (a lifetime time horizon) may lead to rather complex models for economic assessments of diagnostics, such as a combination of a discrete-event simulation and a transmission model to model tuberculosis diagnostics in Tanzania [29]. Depending not only on clinical perspectives but also on data availability, it may be feasible to focus on only short- or long-term modelling. This decision process should be reported in a transparent manner.

4.5 Choice of Health Outcomes

Quality-adjusted life-years (QALYs) or disability-adjusted life-years (DALYs) are generally the preferred outcomes for economic analyses [16]. Possibly due to the relatively many studies in the field of diagnostics with a short time horizon, authors commonly focus on rather short-term outcomes other than QALYs and DALYs [13, 30, 31]. Examples are outcomes based on the technical performance of the test (e.g. proportion of correct diagnoses) or the treatment decision (e.g. antibiotics prescribed) [13]. As stated in the introduction, IVD companies will be required to gather more information on the clinically relevant outcomes of novel diagnostics [10], which presents an opportunity to also include utility-based outcomes. This is not to say that other outcome measures are not relevant—we believe they are.

Other elements of value of particular interest to diagnostics are reduction of uncertainty due to a new diagnostic, adherence-improving factors, fear of contagion (already described above), insurance value and real options value [28]. The reduction of uncertainty is relevant both for payers, as it reduces the uncertainty about the effectiveness of reimbursed care, and for patients and providers, as it may lead to more informed treatment decisions. This may also lead to increased adherence to treatment. There are several elements of value for diagnostics that may benefit the individual patient and have broader societal advantages. The fear of contagion has already been described above, but closely related to this is the insurance value, which may relate to the risk of an individual becoming sick [28]. For hereditary diseases, the results of diagnosing one patient may affect family members [21], and, for infectious diseases, the data gathered by diagnosing one group of patients may inform empiric treatment for another group of patients [32]. Finally, real options value is relevant for infectious disease where resistance may occur. Prescribing treatment carries a risk that the treatment will be less effective in the future; simultaneously, it is uncertain whether novel treatment options will be developed in the future. A diagnostic that increases the adequacy of prescriptions can decrease the probability of untreatable, resistant infections in the future [33]. Discussions on how to include these other, still novel, elements of value are ongoing and will depend on factors such as the disease area covered and the health system assessed [21, 28]. Continuing this discussion with all stakeholders, including policy makers, clinicians and patients, is important, as well as experimentation with novel methods in the field of CEAs.

For some diseases with limited data on the effectiveness of treatment, such as genomic tests used for rare genetic disorders, it may be challenging to perform a CEA [21]. In these cases, multicriteria decision analysis may be a feasible alternative [34].

4.6 Estimating Resources and Costs

For CEAs in general, the included costs depend on the perspective used and the decision problem analysed. Depending on the perspective used, diagnostic and subsequent treatment costs may be included differently or even not be considered at all. For diagnostics, the costs are of particular interest as there may be more flexibility compared with most drugs. The whole chain from collecting the patient sample to the reporting of the result will impact the eventual cost of the diagnostic. While large volumes of tests performed in laboratories will be relatively inexpensive, a POC test performed by the GP may yield more diagnostic value, i.e., the test can immediately influence the clinical decision. The following costs will be relevant for a CEA assessing a novel diagnostic:

- diagnostic sample collection costs (including personnel, reagent and material costs);
- transport costs (if the test is not performed at the POC);
- costs of performing the test (including personnel, reagent, materials and depreciation costs);
- costs associated with reporting the test result to the clinician and/or the patient, and, if applicable, changing the clinical decision.

How precisely test-related costs should be estimated depends on the perspective and decision problem; microcosting will not always be useful or feasible [26]. However, using a fixed price per diagnostic test may underestimate the scale benefits associated with performing more tests using the same equipment [35]. Sensitivity analyses to assess the impact of various assumptions about the economies (and diseconomies) of scale related to performing more (or fewer) tests should be considered and should be consistent with the evaluated setting and populations, including any health system factors that may limit scale-up. For tests that can be used to diagnose various diseases (i.e., are part of several diagnostic algorithms, with patients experiencing different symptoms), these scale advantages should also be considered.
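
One simple way to explore scale effects in a sensitivity analysis is to split the cost per test into a fixed component (equipment depreciation and maintenance, spread over the test volume) and a variable component (reagents, materials and personnel time per test). The sketch below assumes this cost structure and a fixed annual capacity per device; the function name, parameter names and values are hypothetical and would need to be replaced by setting-specific estimates.

```python
import math


def cost_per_test(annual_volume: int, fixed_cost_per_device: float,
                  variable_cost_per_test: float, capacity_per_device: int) -> float:
    """Average cost per test when fixed equipment costs are spread over volume."""
    # An extra device is needed each time the volume exceeds capacity,
    # which creates a step increase in fixed costs (a diseconomy of scale).
    devices = math.ceil(annual_volume / capacity_per_device)
    return devices * fixed_cost_per_device / annual_volume + variable_cost_per_test


# Illustrative values only: vary the volume to see the effect on unit cost.
for volume in (500, 2500, 5000, 5001):
    unit_cost = cost_per_test(volume, fixed_cost_per_device=10_000.0,
                              variable_cost_per_test=5.0, capacity_per_device=5_000)
    print(f"{volume} tests/year: {unit_cost:.2f} per test")
```

In this sketch the unit cost falls as volume rises towards capacity and then jumps once a second device is required, which shows why a single fixed price per test may misrepresent costs at other volumes.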

4.7 Incremental Costs and Outcomes

It is common to compare various diagnostic algorithms simultaneously within a CEA [13], as explained above. The different algorithms may not only contain different diagnostic techniques but may also be performed in different sequences or at different locations (e.g., at the POC or in a laboratory). Clearly presenting the differences in incremental costs and outcomes is important. A common graphical method to present the incremental costs and outcomes of various algorithms is an efficiency or cost-effectiveness frontier [29, 36, 37]. This may be more easily interpretable than only providing a table of the results. An added benefit is that the efficiency frontier can be used to draw conclusions about the cost effectiveness in the absence of a willingness-to-pay threshold, as described elsewhere [38].
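
To make the construction of an efficiency frontier concrete, the following sketch identifies the non-dominated diagnostic algorithms from a set of cost and effect pairs and reports the incremental cost-effectiveness ratio (ICER) between neighbouring strategies on the frontier. It is one common way to implement the idea, not the method of any cited study; the strategy names and values are hypothetical.

```python
def efficiency_frontier(strategies: dict[str, tuple[float, float]]):
    """Return [(name, ICER vs previous frontier strategy)] for (cost, effect) pairs."""
    # Sort by cost (ascending); for equal cost, the more effective option comes first.
    ordered = sorted(strategies.items(), key=lambda kv: (kv[1][0], -kv[1][1]))

    # Strong dominance: drop any option that is not more effective
    # than a cheaper (or equally priced) option already kept.
    frontier = []
    for name, (cost, effect) in ordered:
        if not frontier or effect > frontier[-1][2]:
            frontier.append((name, cost, effect))

    # Extended dominance: ICERs must increase along the frontier.
    changed = True
    while changed:
        changed = False
        for i in range(1, len(frontier) - 1):
            prev, cur, nxt = frontier[i - 1], frontier[i], frontier[i + 1]
            icer_cur = (cur[1] - prev[1]) / (cur[2] - prev[2])
            icer_next = (nxt[1] - cur[1]) / (nxt[2] - cur[2])
            if icer_cur > icer_next:
                del frontier[i]
                changed = True
                break

    result = [(frontier[0][0], None)]  # the cheapest option has no ICER
    for prev, cur in zip(frontier, frontier[1:]):
        result.append((cur[0], (cur[1] - prev[1]) / (cur[2] - prev[2])))
    return result


# Hypothetical algorithms: (cost per patient, QALYs per patient).
algorithms = {
    "clinical judgement only": (100.0, 8.0),
    "laboratory test": (200.0, 8.5),
    "POC test": (250.0, 8.4),             # costlier and less effective than the lab test
    "POC then laboratory": (400.0, 8.6),  # ruled out by extended dominance
    "all tests simultaneously": (450.0, 9.0),
}
for name, icer in efficiency_frontier(algorithms):
    print(name, "reference" if icer is None else f"ICER = {icer:.0f} per QALY")
```

Plotting the frontier strategies with effects on one axis and costs on the other, and connecting them with line segments, gives the graphical representation described above.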

4.8 Affordability and Reimbursement

Factors outside of the direct scope of a CEA, but very relevant for its context, are the affordability and reimbursement of diagnostic interventions. The budget impact was seldom included in CEAs of diagnostics [13]; however, we believe this may provide important information regarding the affordability [39]. Especially if the current standard-of-care is based on clinical expertise, a new diagnostic test may greatly increase the total costs and may have a major budget impact. This is particularly relevant for LMICs, where resource constraints are more prevalent than in high-income countries. An additional constraint in LMICs may be the availability of skilled personnel to perform and operate new diagnostic tests [16].
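
As a rough illustration of a budget impact estimate, the sketch below multiplies the number of eligible patients by an assumed uptake in each year and by the difference in pathway costs between the new diagnostic algorithm and current care. The function and parameter names are hypothetical, as are all values; a full budget impact analysis following good-practice guidance [39] would also account for changes in population size, downstream treatment costs and the payer perspective.

```python
def budget_impact(eligible_patients: int, uptake_by_year: list[float],
                  cost_new_pathway: float, cost_current_pathway: float) -> list[float]:
    """Net annual budget impact of replacing current care with a new pathway."""
    incremental_cost = cost_new_pathway - cost_current_pathway
    return [eligible_patients * uptake * incremental_cost
            for uptake in uptake_by_year]


# Illustrative values: 10,000 eligible patients, uptake rising over three years.
impact = budget_impact(10_000, [0.1, 0.3, 0.5],
                       cost_new_pathway=40.0, cost_current_pathway=25.0)
for year, value in enumerate(impact, start=1):
    print(f"Year {year}: net budget impact = {value:,.0f}")
```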

In general, the perspective of the budget impact analysis is important, also in relation to the reimbursement of the various diagnostics considered and the payers involved: can healthcare providers claim the diagnostic costs, should they pay for them themselves, or should a patient pay a fee? Additionally, it is relevant whether the diagnostic test is funded out of the same budget as subsequent treatment. This does not directly influence the cost effectiveness, but it will probably affect the implementation and uptake of a novel diagnostic test, e.g., a very cost-effective test for which the patient has to pay may have a lower uptake than a test that is provided free of charge (i.e., paid for by the health system). These factors can be explored in the discussion of an economic analysis of a novel diagnostic.

5 Summary and Conclusions

In this paper, we formulated eight recommendations for CEAs of diagnostics. The symptoms patients experience, the clinical setting, locations of test sampling and analysis, and diagnostic algorithms should be clearly reported. The time horizon used should reflect the time horizon used to model the treatment after the diagnostic pathway. QALYs or DALYs should be used as the clinical outcomes but may be combined with other relevant outcomes, such as real options value. If the number of tests using the same equipment can vary, the economy of scale should be considered. An understandable graphical representation of the results for the various diagnostic algorithms, such as an efficiency frontier, should be provided. Finally, the budget impact and affordability should be considered.

These recommendations are not meant to supplant the CHEERS recommendations or the reference case for economic evaluations but may provide useful additions when designing and reporting CEAs of diagnostics. Although we based the recommendations in this paper on extensive reviews of the literature [13, 14] and the views of the authors, they were not developed or validated through a formal process, such as a Delphi process. Although we expect the issues raised in the paper to be generalisable to diagnostics in all disease areas, some issues relevant for specific disease areas may not have been included. However, this research could be used as a starting point for a follow-up project to further develop diagnostic-specific guidelines or a reference case for diagnostic CEAs.

Compared with pharmaceutical interventions, assessing the cost effectiveness of diagnostic strategies can be more challenging as various diseases or treatment options may be important to consider. At the same time, the EU IVDR and MDR [10, 12] may drive manufacturers to collect more clinical evidence, which aids in the assessment of the value of diagnostics, providing opportunities for investments in better diagnosis and improved clinical care. The recommendations provided in this paper can be used to improve the design and reporting of CEAs in the field of diagnostics, resulting in well-informed reimbursement decisions by policy makers.

References

1. Wettstein DJ, Boes S. Effectiveness of National Pricing Policies for Patent-Protected Pharmaceuticals in the OECD: a systematic literature review. Appl Health Econ Health Policy. 2019;17:143–62.

2. Vogler S, Haasis MA, Dedet G, Lam J, Pedersen HB. Medicines Reimbursement Policies in Europe. Copenhagen: World Health Organization. 2018. https://www.euro.who.int/en/publications/abstracts/medicines-reimbursement-policies-in-europe. Accessed 4 Jan 2021.

3. Garattini L, Curto A, Freemantle N. Pharmaceutical price schemes in Europe: time for a ‘Continental’ One? Pharmacoeconomics. 2016;34:423–6.

4. Belloni A, Morgan D, Paris V. Pharmaceutical expenditure and policies: past trends and future challenges. OECD. 2016. https://www.oecd-ilibrary.org/social-issues-migration-health/pharmaceutical-expenditure-and-policies_5jm0q1f4cdq7-en. Accessed 30 Nov 2020.

5. Doble B, Tan M, Harris A, Lorgelly P. Modeling companion diagnostics in economic evaluations of targeted oncology therapies: systematic review and methodological checklist. Expert Rev Mol Diagn. 2015;15:235–54.

6. Blair ED, Stratton EK, Kaufmann M. The economic value of companion diagnostics and stratified medicines. Expert Rev Mol Diagn. 2012;12:791–4.

7. Garfield S, Polisena J, Spinner DS, Postulka A, Lu CY, Tiwana SK, et al. Health Technology Assessment for Molecular Diagnostics: Practices, Challenges, and Recommendations from the Medical Devices and Diagnostics Special Interest Group. Value Health. 2016;19:577–87.

8. Fischer S, Vogler S, Windisch F, Zimmermann N. HTA, reimbursement and pricing of diagnostic tests for CA-ARTI: an international overview of policies. Vienna: Gesundheit Österreich. 2021. https://www.value-dx.eu/wp-content/uploads/2021/04/VALUE-DX_Report_Task5.5_Deliverable5.2_Final.pdf.

9. Vogler S, Habimana K, Fischer S, Haasis MA. Novel policy options for reimbursement, pricing and procurement of AMR health technologies. Vienna: Gesundheit Österreich. 2021. https://globalamrhub.org/wp-content/uploads/2021/03/GOe_FP_AMR_Report_final.pdf.

10. European Parliament and Council. Regulation on in vitro diagnostic medical devices. 2017/746. 2017. http://data.europa.eu/eli/reg/2017/746/2017-05-05.

11. European Commission. Factsheet for Authorities in non-EU/EEA States on Medical Devices and in vitro diagnostic medical devices. 2019. https://ec.europa.eu/docsroom/documents/33863. Accessed 30 Nov 2020.

12. European Parliament and Council. Regulation on medical devices. 2020/561. 2020. https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A02017R0745-20200424.

13. van der Pol S, Garcia PR, Postma MJ, Villar FA, van Asselt ADI. Economic analyses of respiratory tract infection diagnostics: a systematic review. Pharmacoeconomics. https://doi.org/10.1007/s40273-021-01054-1 (Epub 1 Jul 2021).

14. Rojas García P, van der Pol S, van Asselt ADI, Postma M, Rodríguez-Ibeas R, Juárez-Castelló CA, et al. Efficiency of diagnostic testing for helicobacter pylori infections—a systematic review. Antibiotics (Basel). 2021;10(1):55.

15. Husereau D, Drummond M, Petrou S, Carswell C, Moher D, Greenberg D, et al. Consolidated health economic evaluation reporting standards (CHEERS) statement. Pharmacoeconomics. 2013;31:361–7.

16. Wilkinson T, Sculpher MJ, Claxton K, Revill P, Briggs A, Cairns JA, et al. The international decision support initiative reference case for economic evaluation: an aid to thought. Value Health. 2016;19:921–8.

17. Gilbert R, Logan S, Moyer VA, Elliott EJ. Assessing diagnostic and screening tests. West J Med. 2001;174:405–9.

18. Wald N, Law M. Medical screening. Oxford Textbook of Medicine. Oxford University Press; 2010. pp. 94–108. http://www.oxfordmedicine.com/view/10.1093/med/9780199204854.001.1/med-9780199204854-chapter-030302. Accessed 7 Dec 2020.

19. Merriam-Webster. Definition of DIAGNOSIS. Merriam-Webster. 2020. https://www.merriam-webster.com/dictionary/diagnosis. Accessed 2 Mar 2020.

20. Definition of MONITORING. 2021. https://www.merriam-webster.com/dictionary/monitoring. Accessed 10 Jun 2021.

21. Payne K, Gavan SP, Wright SJ, Thompson AJ. Cost-effectiveness analyses of genetic and genomic diagnostic tests. Nat Rev Genet. 2018;19:235–46.

22. Kroneman MW, Maarse H, van der Zee J. Direct access in primary care and patient satisfaction: a European study. Health Policy. 2006;76:72–9.

23. Hordijk PM, Broekhuizen BDL, Butler CC, Coenen S, Godycki-Cwirko M, Goossens H, et al. Illness perception and related behaviour in lower respiratory tract infections—a European study. Fam Pract. 2015;32:152–8.

24. Goble JA, Rocafort PT. Point-of-care testing: future of chronic disease state management? J Pharm Pract. 2017;30:229–37.

25. Miller MB, Atrzadeh F, Burnham C-AD, Cavalieri S, Dunn J, Jones S, et al. Clinical utility of advanced microbiology testing tools. J Clin Microbiol. 2019;57:e00495-19.

26. Drummond M. Methods for the economic evaluation of health care programmes, 4th edn. Oxford, New York: Oxford University Press; 2015.

27. Incerti D, Thom H, Baio G, Jansen JP. R You still using excel? The advantages of modern software tools for health technology assessment. Value Health. 2019;22:575–9.

28. Lakdawalla DN, Doshi JA, Garrison LP, Phelps CE, Basu A, Danzon PM. Defining elements of value in health care—a health economics approach: an ISPOR Special Task Force Report [3]. Value Health. 2018;21:131–9.

29. Langley I, Lin H-H, Egwaga S, Doulla B, Ku C-C, Murray M, et al. Assessment of the patient, health system, and population effects of Xpert MTB/RIF and alternative diagnostics for tuberculosis in Tanzania: an integrated modelling approach. Lancet Glob Health. 2014;2:e581–91.

30. Watts RD, Li IW, Geelhoed EA, Sanfilippo FM, John AS. Economic evaluations of pathology tests, 2010–2015: a scoping review. Value Health. 2017;20:1210–5.

31. Giusepi I, John AS, Jülicher P. Who conducts health economic evaluations of laboratory tests? A Scoping review. J Appl Lab Med. 2020;5:954–66.

32. Chandna A, White LJ, Pongvongsa T, Mayxay M, Newton PN, Day NPJ, et al. Accounting for aetiology: can regional surveillance data alongside host biomarker-guided antibiotic therapy improve treatment of febrile illness in remote settings? Wellcome Open Res. 2019;4:1.

33. Attema AE, Lugnér AK, Feenstra TL. Investment in antiviral drugs: a real options approach. Health Econ. 2010;19:1240–54.

34. Schey C, Postma MJ, Krabbe PFM, Topachevskyi O, Volovyk A, Connolly M. Assessing the preferences for criteria in multi-criteria decision analysis in treatments for rare diseases. Front Public Health. 2020;8:162.

35. van der Pol S, Dik J-WH, Glasner C, Postma MJ, Sinha B, Friedrich AW. The tripartite insurance model (TIM): a financial incentive to prevent outbreaks of infections due to multidrug-resistant microorganisms in hospitals. Clin Microbiol Infect. 2021;27:665–7.

36. Jha S, Ismail N, Clark D, Lewis JJ, Omar S, Dreyer A, et al. Cost-effectiveness of automated digital microscopy for diagnosis of active tuberculosis. PLoS One. 2016;11(6):e0157554.

37. Suen S, Bendavid E, Goldhaber-Fiebert JD. Cost-effectiveness of improvements in diagnosis and treatment accessibility for tuberculosis control in India. Int J Tuberc Lung Dis. 2015;19(9):1115–xv.

38. van der Pol S, de Jong LA, Vemer P, Jansen DEMC, Postma MJ. Cost-effectiveness of Sacubitril/Valsartan in Germany: an application of the efficiency frontier. Value Health. 2019;22:1119–27.

39. Sullivan SD, Mauskopf JA, Augustovski F, Jaime Caro J, Lee KM, Minchin M, et al. Budget impact analysis—principles of good practice: report of the ISPOR 2012 Budget Impact Analysis Good Practice II Task Force. Value Health. 2014;17:5–14.

Ethics declarations

Funding

This project has received funding from the Innovative Medicines Initiative 2 Joint Undertaking under grant agreement no. 820755. This Joint Undertaking receives support from the European Union’s Horizon 2020 research and innovation programme and EFPIA and bioMérieux SA, Janssen Pharmaceutica NV, Accelerate Diagnostics S.L., Abbott, Bio-Rad Laboratories, BD Switzerland Sàrl, and The Wellcome Trust Limited. This paper reflects the authors’ views only, not that of the funder or supporting organisations.

Conflict of Interest

Professor Maarten J. Postma received grants and honoraria from various pharmaceutical companies all unrelated to this research, except those underlying the IMI EU VALUE-Dx project underlying this work. Simon van der Pol, Paula Rojas Garcia, Fernando Antoñanzas Villar, and Antoinette D.I. van Asselt have no conflicts of interest to declare.

Author Contributions

All authors contributed to the study conception and design. The first draft of the manuscript was written by SvdP and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, which permits any non-commercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc/4.0/.

About this article

Cite this article

van der Pol, S., Rojas Garcia, P., Antoñanzas Villar, F. et al. Health-Economic Analyses of Diagnostics: Guidance on Design and Reporting. PharmacoEconomics 39, 1355–1363 (2021). https://doi.org/10.1007/s40273-021-01104-8

DOI: https://doi.org/10.1007/s40273-021-01104-8