Abstract

Spatiotemporal analysis of fire activity is vital for determining why wildfires occur where they do, assessing wildfire risks, and developing locally relevant wildfire risk reduction strategies. Using several spatial statistical methods, we identified hot spots of large wildfires (≥ 100 acres) in Washington state, United States, and mapped spatiotemporal variations in large wildfire activity from 1970 to 2020. All hot spots are located east of the crest of the Cascade Range. The geographic area containing most of the state’s acres burned has shrunk considerably since 1970 and has become concentrated over the north-central portion of the state. This concentration of large wildfire activity in north-central Washington was previously unquantified and may provide important information for hazard mitigation efforts in that area. Our results highlight the advantages of spatial statistical methods, which can aid the development of natural hazard mitigation plans and risk reduction strategies by characterizing previous hazard occurrences spatially and spatiotemporally.

1 Introduction

The increase in large, severe wildfires in the state of Washington over the past few decades (Wing and Long 2015) mirrors a general trend in wildfire-prone regions around the world. More frequent large fires (Jolly et al. 2015) are resulting in increases in annual average acres burned (Dennison et al. 2014) and more extensive property damage (Rasker 2015) in such regions. Large wildfires that directly impact the built environment and populated areas are often followed by short-term economic instability and, in particularly extreme and deadly events, long-term recovery costs reaching into the billions of dollars (von Kaenel 2020).

The consequences of wildfires that directly impact communities also go beyond physical damage and economic loss. Wildfire smoke is diminishing air quality in the western United States (McClure and Jaffe 2018) with observable increases in mortality among some Washington residents (Doubleday et al. 2020). Wildfires can also trigger cascading impacts or multi-hazard events that can include flooding (Brogan et al. 2017) and erosion and sedimentation (Sankey et al. 2017).

While the ecological benefits of frequent, low-severity wildfires (Barros et al. 2018) and influence of suppression on wildfire severity (Zald and Dunn 2018) should not be ignored, the scientific literature suggests the issues wildfires present as a natural hazard to human communities are likely to persist or worsen in the future. Summer temperature increases and summer precipitation decreases caused by climate change are primary drivers of longer and more intense fire seasons in the western United States (Abatzoglou et al. 2017). In Washington, forests are likely to become more water-limited, with areas of drought-induced severe water limitations expected to increase along with continued summer temperature increases resulting in more acres burned (Snover et al. 2013). The increasing likelihood of longer wildfire seasons with more frequent and more severe wildfires will present a major challenge to wildland firefighters as well as the emergency managers, planners, and policymakers responsible for allocating finite resources dedicated to wildfire risk mitigation when fires threaten populated areas and property. As Washington’s wildland-urban interface (WUI) continues to develop (WA EMD 2018), the expected increase in wildfire occurrence also has major implications for the built environment and public safety. Each of these considerations is likely to influence hazard mitigation planning as emerging information on risks to populations, structures, natural environments, and economies informs and shapes effective risk reduction measures.

In this article, we presuppose that hazard mitigation practitioners must go beyond merely knowing that climate change is increasing hazard risks and must also be able to incorporate analyses of natural hazard risk specific to the needs of the hazard mitigation field. As the emergency management profession and the hazard mitigation field become more focused on the influence of climate change as a threat-multiplier and an exacerbator of existing natural hazards (FEMA 2011a; Banusiewicz 2014; Stults 2017), the use of quantitative methods to develop data-driven hazard occurrence analyses and hazard mitigation strategies will become more essential for understanding the complexity of climate-influenced natural hazard events. Using wildfire data from the Washington Department of Natural Resources (DNR) (Dozic 2020; DNR 2020), we employed a variety of statistical tools in a geographic information system (GIS) (ArcGIS Pro version 2.7) to (1) determine the location of apparent clusters of wildfire activity in Washington; (2) determine whether identified clusters are statistically significant hot spots; and (3) identify any spatiotemporal variation in wildfire locations and/or acres burned. These and similar methods have been used to identify hot spot trends in wildfire activity in regions around the globe, including in Honduras using the 2009 version of the built-in hot spot analysis tool in the ArcGIS software (Caceres 2011), in Portugal using space-time permutation scan statistics (Pereira et al. 2015), and in Florida using directional distribution analysis (McLemore 2017) (see Mohd Said et al. 2017; Shekede et al. 2019 for further examples). Previous research using spatial statistical methods in the Pacific Northwest covered only a 25-year period (1984−2008) (Wing and Long 2015). Our study applied methods comparable to those used in Honduras, Portugal, Florida, and the Pacific Northwest to Washington state specifically, while taking advantage of the 50-year timespan supplied by the combined DNR datasets, which provide fire location and size data between August 1970 and August 2020. As such, this study is the first to apply multiple spatial statistical analyses to wildfires over a 50-year period in Washington state, offering new insights into where hot spots have been historically and where they may be intensifying.

This is also the first study to apply these methods within the specific context of hazard mitigation planning as a recommended approach for mitigation planners looking to quantify and characterize hazard occurrences in their region of interest. Other hazard characterization studies in the literature include the directional distribution analysis of tropical cyclones in the North Atlantic (Rahman et al. 2019), the directional distribution analysis and mean centers of earthquakes in Kyrgyzstan (Djenaliev et al. 2018), and the spatiotemporal analysis of post-wildfire debris flows (Haas et al. 2016). Standard deviational ellipses also appear in recent research on other natural hazards: Rahman et al. (2019) compared observed and forecasted tropical cyclone trajectories to evaluate forecast accuracy, and Djenaliev et al. (2018) identified the directional distribution of earthquakes based on their magnitudes. These studies show the range of applicability of spatiotemporal analysis for hazard characterization. However, they do not present the mapping products associated with spatiotemporal analysis within the context of local hazard mitigation planning, a gap that we felt needed to be addressed.

As such, our discussion addresses this gap by describing how our techniques and their mapping products could be used in local hazard mitigation plans to help communities meet Federal Emergency Management Agency (FEMA) requirements for identifying hazard type, location, and extent (FEMA 2011b). For local hazard mitigation plans developed under the FEMA guidelines, we believe the mapping products in our study can also be used by local planners to characterize previous hazard occurrences according to type, location, and extent (FEMA 2011b), which is a foundational part of an integrated risk assessment. The importance of adequate characterization and classification of natural hazards in hazard mitigation plans cannot be overstated given the opportunity they present for quantitative hazard analysis, the evidence that natural hazard-related disasters are increasing in frequency and magnitude (CEMHS 2019), and the evidence that exposure to natural hazards increases with development in hazard-prone areas (Weinkle et al. 2018).

It should also be noted that our study focuses on wildfire location and acres burned, which on fire-adapted forests (such as those found east of the Cascade Range) is perhaps of minor importance for determining impacts compared to fire severity, for example. However, we feel location and acres burned are appropriate variables to consider given our interest in the potential of wildfire to impact the built environment and human communities.

2 Methods

We used two open-source wildfire occurrence datasets available from the Washington Department of Natural Resources (DNR 2020; Dozic 2020) that, once combined, provided a 50-year history of wildfire data around the state, from 1970 to 2020. Visual inspection of all wildfires in the complete dataset (n = 47,369) showed some noticeable clusters of wildfire activity throughout the state, including large contiguous clusters in the northeastern and southwestern corners, among others (Fig. 1a). However, the full dataset includes all fire class sizes, including Class A fires (≤ 0.25 acres burned), which are heavily concentrated in western Washington, a region known for its wetter, milder climate, fewer major wildfires, and denser population centers (for example, the Seattle-Tacoma metropolitan area). We chose to perform our analysis on only Class D fires and above (≥ 100 acres burned; n = 639), shown in Fig. 1b. With this restriction, the large contiguous cluster in western Washington shown in Fig. 1a disappears; apparent clusters remain in the central and eastern parts of the state, although they are noticeably more dispersed. Although this dataset provides the most complete record of wildfires in Washington currently available by date, location, and acres burned, it is limited to wildfires reported to DNR. It is therefore possible, although unlikely, that some fires of 100 acres or more are missing from the dataset and, as such, from our study.

The clusters we identified visually were evaluated statistically using density-based clustering. This unsupervised machine learning method automatically detects spatial patterns based on a feature’s location and the distance to a specified number of its neighbors (that is, the core-distance) (Campello et al. 2013; Esri 2020). We set the minimum number of features per cluster to 50, an arbitrary choice, and used the self-adjusting (HDBSCAN) clustering method to separate clusters from noise. The HDBSCAN algorithm is considered a data-driven clustering method requiring the least user input (Esri 2020). It uses reachability distances to build nested levels of clusters, with each level resulting in a different collection of clusters (Campello et al. 2013). The output includes the probability that each clustered feature belongs to its assigned cluster. Because two primary clusters were found (see Sect. 3), we chose to explore the data further via hot spot analyses and directional distribution.
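The core-distance and reachability ideas behind this clustering step can be illustrated with a short sketch. The example below is an illustration only and not the ArcGIS Pro implementation: the function names, the toy coordinates, and the value of `k` are hypothetical, and defining the core distance as the distance to the k-th nearest other point is an assumption made here for simplicity. It computes the mutual reachability distance described by Campello et al. (2013), the quantity from which the nested cluster levels are built, but it does not build the hierarchy itself.

```python
import math

def core_distances(points, k):
    """Distance from each point to its k-th nearest other point (assumed definition)."""
    result = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        result.append(dists[k - 1])
    return result

def mutual_reachability(points, k):
    """Pairwise max(core(a), core(b), d(a, b)); isolated points are pushed away from dense groups."""
    core = core_distances(points, k)
    n = len(points)
    return [
        [
            0.0 if i == j else max(core[i], core[j], math.dist(points[i], points[j]))
            for j in range(n)
        ]
        for i in range(n)
    ]

# Hypothetical toy coordinates (km): a tight group of four fires and one isolated fire.
points = [(0, 0), (1, 0), (0, 1), (1, 1), (10, 10)]
core = core_distances(points, k=2)
mreach = mutual_reachability(points, k=2)
```

In this toy layout the isolated point has a large core distance, so its mutual reachability distance to every other point stays large, which is why a density-based method would tend to label it as noise rather than attach it to the group.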

2.1 Hot Spot Analyses

Optimized hot spot (OHS) analysis was performed first by letting the tool’s defaults run without any overrides. We chose to aggregate points using a hexagon grid mesh rather than a fishnet grid to better represent potential curvature of the spatial patterns in our dataset. Additionally, hexagon grids reduce distortion from the Earth’s curvature more efficiently than a fishnet grid, which is especially important when analyzing patterns over a large geographic extent (Agarwadkar et al. 2013) as we do in this study. Lastly, hexagon grids allow for more neighbors to be included in the calculations than fishnet grids (a minimum of six versus four), thereby reducing chances for error.

We chose to use a bounding polygon for our study so that areas without wildfire incidents but where wildfires are possible would be included in the hot spot calculations. The bounding polygon covers the entire state’s landmass minus water features larger than 1 km². Because hot spots are determined relative to the entire study area, we removed the state’s numerous large waterbodies (for example, Puget Sound) from our analysis to help ensure they did not skew our results. The default run selected an optimal hexagon size of approximately 9 km (5.5 miles) and a distance band of approximately 47 km (29 miles); we visually compared its output to the density-based clusters to confirm that they exhibited the same general pattern. Additional runs with override settings reflecting neighborhood conceptualizations of 32 km (20 miles) and 80 km (50 miles), but maintaining the default hexagon size, were also completed to validate the default run.
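To make the role of the distance band concrete, the following sketch computes a Getis-Ord Gi* z-score for each cell of a small grid, the statistic on which Esri documents its hot spot tools as being based. It is a simplified illustration under stated assumptions and not the OHS tool itself: the function name, the square 5 × 5 lattice (standing in for the hexagon mesh), the counts, and the band value are all hypothetical, weights are binary within a fixed distance band, and no multiple-testing correction is applied.

```python
import math

def getis_ord_gi_star(coords, counts, band):
    """Gi* z-score per cell using binary weights within a fixed distance band (self included)."""
    n = len(counts)
    mean = sum(counts) / n
    s = math.sqrt(sum(c * c for c in counts) / n - mean * mean)
    z_scores = []
    for i in range(n):
        w = [1.0 if math.dist(coords[i], coords[j]) <= band else 0.0 for j in range(n)]
        sum_w = sum(w)
        sum_w2 = sum(v * v for v in w)
        numerator = sum(wj * xj for wj, xj in zip(w, counts)) - mean * sum_w
        denominator = s * math.sqrt((n * sum_w2 - sum_w * sum_w) / (n - 1))
        # The denominator is zero when every cell is a neighbor or counts do not vary.
        z_scores.append(numerator / denominator if denominator > 0 else 0.0)
    return z_scores

# Hypothetical 5 x 5 lattice with incidents concentrated in one corner.
coords = [(x, y) for x in range(5) for y in range(5)]
counts = [9 if x <= 1 and y <= 1 else 0 for x, y in coords]
z_scores = getis_ord_gi_star(coords, counts, band=1.5)
```

Widening `band` brings more cells into each neighborhood, so the set of cells exceeding a given z threshold changes with the chosen scale, which is the sensitivity the additional 32 km and 80 km runs are meant to probe.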

We then created space-time cubes by aggregating the point features in our dataset. We chose the date on which each wildfire was listed as extinguished as the time field, since this date coincides with total acres burned. We chose a time step interval of one year to capture year-over-year change in hot spot status. We aggregated points using hexagon cubes with the same dimensions as those used in the OHS analysis to maintain visually comparable map products. For this trend analysis, we conceptualized spatial relationships by defining each cube’s neighborhood as its six spatial neighbors (that is, only the bordering cubes). We chose this method to improve our model’s ability to account for the drastic climatic differences between western and eastern Washington as much as possible (even though it is a global model) and to minimize the ability of cubes located in one climatic regime to influence cubes in another. That being said, we recognize the challenges of using a global model over diverse landscapes with non-stationary data, even with the improvements we made with our bounding polygon.

The trend analysis relied on the Create Space Time Cube By Aggregating Points tool in ArcGIS Pro 2.7’s Space Time Pattern Mining toolbox, which applies the Mann-Kendall trend test (Hamed 2009) to every location with data to determine statistically significant trends. This is a rank correlation analysis of each cube’s count through the time sequence. Values in each cube’s time series are compared pairwise in time order: a pair scores +1 if the earlier value is smaller than the later one, -1 if the earlier value is larger, and 0 if the two are tied. The scores are then summed, with an expected sum of zero indicating no trend over time. The observed sum is then compared to this expectation to test for statistical significance.
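The scoring described above can be sketched in a few lines. The example below is a minimal illustration of the Mann-Kendall statistic for a single location’s yearly counts, not the ArcGIS Pro implementation: the function name and the example series are hypothetical, and the use of the normal approximation with a tie adjustment and continuity correction is an assumption about details the text does not specify.

```python
import math
from collections import Counter

def mann_kendall(series):
    """Return (S, z): the summed pairwise scores and an approximate z-score."""
    n = len(series)
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            # +1 if the later value is larger, -1 if smaller, 0 if tied.
            s += (series[j] > series[i]) - (series[j] < series[i])
    if s == 0:
        return 0, 0.0
    # Variance of S under the no-trend expectation, reduced for groups of tied values.
    tie_term = sum(t * (t - 1) * (2 * t + 5) for t in Counter(series).values())
    var_s = (n * (n - 1) * (2 * n + 5) - tie_term) / 18
    z = (s - 1) / math.sqrt(var_s) if s > 0 else (s + 1) / math.sqrt(var_s)
    return s, z

# Hypothetical yearly incident counts for one grid cell.
counts_by_year = [0, 0, 1, 0, 2, 1, 3, 2, 4, 3]
s_stat, z_stat = mann_kendall(counts_by_year)
```

A positive `s_stat` indicates that later years tend to have higher counts than earlier ones; whether that tendency is statistically significant depends on how far `z_stat` departs from zero.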

2.2 Directional Distribution (Standard Deviational Ellipse)

We divided the dataset into five decadal segments beginning with 1970 and created a standard deviational ellipse for each decade. This method has been previously applied to wildfires by McLemore (2017), who analyzed fire trends in Florida over a 30-year timespan using standard deviational ellipses for each decade therein.

We constructed each ellipse to capture wildfires by size within one standard deviation of the mean center for each decade, similar to the method used in McLemore (2017). A one standard deviation ellipse typically captures approximately 63% of a given dataset, depending on the variables used in the analysis. We used acres burned as a weight, allowing larger wildfires to influence the shape and location of the ellipse more than smaller fires. Our results therefore indicate where the majority of acres burned occurred each decade but may not capture a majority of total wildfire occurrences.
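The effect of weighting by acres burned can be seen in a small sketch. The example below derives an ellipse from the weighted covariance of point coordinates; this covariance-based formulation is a stand-in chosen for brevity and is not Esri’s exact standard deviational ellipse calculation, which applies its own scaling, so axis lengths will differ from the tool’s output. The function name, coordinates, and weights are hypothetical.

```python
import math

def weighted_ellipse(points, weights):
    """Weighted mean center, semi-axes, and orientation from the weighted covariance."""
    total = sum(weights)
    cx = sum(w * x for (x, _), w in zip(points, weights)) / total
    cy = sum(w * y for (_, y), w in zip(points, weights)) / total
    sxx = sum(w * (x - cx) ** 2 for (x, _), w in zip(points, weights)) / total
    syy = sum(w * (y - cy) ** 2 for (_, y), w in zip(points, weights)) / total
    sxy = sum(w * (x - cx) * (y - cy) for (x, y), w in zip(points, weights)) / total
    # Closed-form eigenvalues of the 2 x 2 covariance matrix.
    half_trace = (sxx + syy) / 2
    spread = math.hypot((sxx - syy) / 2, sxy)
    major = math.sqrt(half_trace + spread)
    minor = math.sqrt(max(half_trace - spread, 0.0))
    # Major-axis angle in degrees, counterclockwise from the x-axis.
    angle = math.degrees(0.5 * math.atan2(2 * sxy, sxx - syy))
    return {
        "center": (cx, cy),
        "major": major,
        "minor": minor,
        "angle_deg": angle,
        "area": math.pi * major * minor,
    }

# Hypothetical fire locations (km) and acres burned used as weights.
fires = [(-2, 0), (2, 0), (0, -1), (0, 1)]
unweighted = weighted_ellipse(fires, [1, 1, 1, 1])
weighted = weighted_ellipse(fires, [100, 5000, 100, 100])
```

With equal weights the center sits at the origin; giving one fire a much larger weight pulls the center toward it and reshapes the ellipse, which is how a single very large fire can dominate a decade’s ellipse.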

3 Results

The results are presented in two sections: Sect. 3.1 presents the results for the hot spot analyses (that is, cluster analysis, OHS, and trend analysis) while Sect. 3.2 presents the directional distribution analysis results. Spatial and spatiotemporal considerations are discussed for both sets of analyses.

3.1 Hot Spot Analyses

We found two primary clusters of large wildfire occurrences in the state using the HDBSCAN algorithm. Although large wildfires have occurred on both sides of the Cascades since 1970, the extent of overlapping wildfire incidents in the central and eastern regions is far greater than anywhere else. The central cluster totalled 164 wildfires and stretched across five counties, all of which include some portion of the eastern slopes and foothills of the Cascade Range. The eastern cluster totalled 119 wildfires and stretched across four counties, coinciding with the forested areas of northeastern Washington and the Selkirk Mountain foothills near the Idaho border.

The optimized hot spot (OHS) analysis was used to determine whether these clusters also corresponded to statistically significant hot spots. Our OHS results based on the default settings are shown in Fig. 2. Using the default settings resulted in 628 valid features (that is, wildfire incidents) and 16 outliers. No features had fewer than 8 neighbors. Each cell in the hexagon mesh measured 9 km across, and a total of 2527 cells were needed to cover the entire state. The maximum number of incidents in any single cell was 9, a value reached in 2 cells located in Yakima and Okanogan Counties. The default neighborhood scale was 47 km.

We validated the results of the default run with additional runs of smaller and larger neighborhood scales. The maximum number of incidents by cell did not change under neighborhood scales of 32 and 80 km, although the distribution of hot and cold spots changed drastically. For the 32 km scale, hot spots followed the same basic shape and general location as the default, although they were fewer in overall number. Statistically significant hot spots (≥ 95% confidence, z ≥ 1.96) under the default neighborhood scale (47 km) totalled 538 cells, or approximately 21% of the state. The number of grid cells that are statistically significant hot spots under the 32 km scale decreased to 403, or 16% of the state. When the scale of analysis was expanded to 80 km, the number of statistically significant hot spots increased to 788, or 31% of the state.

By default, the OHS tool uses one of two methods to determine the most appropriate neighborhood scale (here, 47 km): incremental spatial autocorrelation or K nearest neighbors. Incremental spatial autocorrelation identifies peak distances at which the underlying processes driving clustering are most pronounced. Once a peak is identified, the OHS tool uses this distance to define the neighborhood scale for the entire study area. If no peak is identified, the tool uses the average distance that yields K neighbors, where K is calculated as 0.05 × N (N = number of features in the Input Features layer). K in our study would be 31.95 (0.05 × 639), or 32 neighbors. Because these processes are hidden when the default model settings are used, it is not immediately clear which method was used to create the output in Fig. 2. However, when examining the output attribute table of the default run, we found 9 grid cells (0.4% of the state) with fewer than K neighbors, all of them at the extreme northwestern, southwestern, northeastern, and southeastern corners of the state where fewer neighbors are to be expected. The minimum number of neighbors using the default scale was 24. This suggests that incremental spatial autocorrelation was used and that some peak distance of clustered features was identified.
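The arithmetic behind the K threshold and the share of cells falling below it can be checked with a brief sketch. The function name is hypothetical, and rounding to the nearest whole neighbor is an assumption consistent with the value of 32 reported above rather than a documented rule of the tool.

```python
def k_neighbors(n_features, fraction=0.05):
    """Fallback neighbor count: a fixed fraction of the input features, rounded (assumed)."""
    return round(fraction * n_features)

# Values reported in the text: 639 large fires and 2527 hexagon cells.
k = k_neighbors(639)
share_below_k = round(100 * 9 / 2527, 1)  # percent of cells with fewer than K neighbors
```

Here `k` evaluates to 32 and `share_below_k` to 0.4, matching the figures reported for the default run.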

When we set the scale of analysis to 32 km, 427 grid cells (17% of the state) produced fewer than K neighbors and accounted for the entire outer edge of the state. Such a large proportion of the state having fewer than K neighbors suggests that the hot and cold spot delineations based on a 32 km scale may not be the most trustworthy. Regarding the 80 km scale, no grid cells had fewer than K neighbors. The minimum was 56 at the grid cell located at the northwestern-most tip of the state, considered to be the most geographically remote location in Washington. Although each grid cell had more than K neighbors, the results of the 80 km scale of analysis should be considered within the context that distance from each grid cell is the primary variable.

Figure 3 is a two-dimensional projection of the space-time cubes we created for the trend analysis that shows which areas are trending upward or downward in wildfire occurrence since 1970. We found a general upward trend in wildfire counts in 20 grid cells, all of them east of the Cascade Range with the most significant upward trends in the central part of the state along lower elevation areas of the Cascades’ eastern slopes. We also found some spots indicating a downward trend, the most significant of which were in southwestern Washington.

3.2 Directional Distribution (Standard Deviational Ellipse)

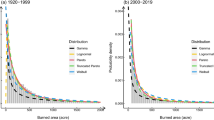

Our results show that large wildfires have generally followed a southwest-northeast distribution, roughly centered over the dry eastern slopes of the Cascade Range (Fig. 4). The decade 2000−2010 is the only decade exhibiting a southeast-northwest distribution, due to the influence of an extremely large wildfire event in Walla Walla County that burned more than 100,000 acres. The influence of southeastern fires on the 1990−2000 ellipse is also evident, though less pronounced.

Fig. 4 Directional distribution analysis results showing a standard deviational ellipse for each decade between 1970 and 2020, with more recent decades in darker shades. Ellipses have shrunk in size since 1970 but contain more acres burned, suggesting that large wildfires are becoming more concentrated in the north-central part of the state.

Each ellipse captures most of the acres burned during that decade. Over time, these distributions have become more confined to the north-central portion of the state, in particular Chelan, Douglas, and Okanogan Counties. The most constrained ellipse is for the most recent period of 2010−2020, even though this decade also saw large fires in the southeast region. This can be interpreted as those fires in the southeast having been out-influenced by more and larger fires in the north-central region during that timeframe, resulting in the constrained ellipse. The ellipse for 2010−2020 is approximately 62% smaller than the ellipse for 1970−1980, suggesting that the area where a majority of the state’s acres burn has shrunk in size since 1970 and that large wildfires have become more concentrated in the north-central part of the state. Table 1 shows the area of each ellipse in km², the total number of wildfires for each decade, and total number in each ellipse, as well as the percentage of occurrences captured by the ellipse.

4 Discussion

Although our focus is on wildfires in Washington state, it is possible to use these methods to characterize other natural hazards in other regions. As noted in Sect. 1, we focus on wildfire location and acres burned rather than fire severity, even though severity may matter more for determining impacts on fire-adapted forests such as those east of the Cascade Range, because our interest is in the potential for wildfire to impact the built environment and human communities.

Our results show the usefulness of OHS in characterizing clusters of previous wildfire occurrences in the Pacific Northwest. The advantage of using OHS in such cases is its ability to identify spatial patterns in areas of both high and low spatial densities (Jaquez 2008). Additionally, wildfires often occur in or near areas of previous fire activity (Jolly et al. 2015), making the OHS a potentially useful starting point for those interested in understanding where future fires may occur, although more rigorous methods, such as spatial regression modeling, should be used for that purpose. Climate change is increasing the extent of burnable area globally (Jolly et al. 2015), thereby expanding the areas susceptible to wildfires. It is possible that the hot spots we identified could expand geographically and/or increase in intensity under continued climate change. Such expansion or intensification could further complicate hazard mitigation decision making, particularly for areas that are not currently considered hot spots but may become so in the future, including areas in western Washington (Dunagan 2020). As such, reliable prediction of future fire occurrences in our study area would need to go beyond the characterization of historical fire locations and include data on the drivers of fire activity, such as climate and human activities.

The relative increase in hot spots in our OHS analysis as scale increases makes intuitive sense, since the larger radius used to define each grid cell’s neighborhood increases the number of grid cells used to determine statistical significance. Expanding or contracting the scale of analysis redefines what is near and what is distant, and, in our study, this meant that both cold and hot spots grew or shrank relative to the scale chosen. This is perhaps a reflection of the intense clustering found east of the Cascades and the dispersal west of them. It is also the likely reason for the poorer performance of the OHS using the 32 km distance band, since the 9 km cell size deemed optimal for a 47 km distance band may be too coarse for 32 km. Future research could investigate a more local scale of analysis using smaller cell sizes and finer-scale aggregation patterns, such as those gained from using satellite-based wildfire detections (for example, MODIS/VIIRS) that can increase the number of observations per cell (Levin and Heimowitz 2012; Olivia and Schroeder 2015). The drastic changes in hot spot location under the various neighborhood distances also reflect the need identified in the scientific literature for multiscale hot spot analyses to support decision making at multiple scales across a wide range of societal issues (Liu et al. 2017; Guo et al. 2021; Lv et al. 2021). In such cases, local clusters could be useful for local planners and decision makers, while coarser-scale clusters could be used for state-scale decision making.

Because we focused only on incident count per grid cell, other variables shown to have a relationship with wildfire occurrence, such as climate regime (Westerling et al. 2003), and variables shown to be associated with wildfire risk to human communities, such as housing patterns (Syphard et al. 2021), were not considered in this study. Additionally, these missing variables can change dramatically over a 47 km distance in some parts of our study area, such as the Cascade Range. The Cascade Range ridgeline is considered the general border between western Washington’s wet, humid climate and eastern Washington’s dry, semiarid climate. Even though grid cells nearest the ridge include potentially dozens of neighbors from different climatic regimes, the delineation between the two climates was maintained in our OHS analysis, which was an unexpected result. This could be due to the intensity of spatial clustering of wildfires in central and eastern Washington and the random, dispersed pattern in western Washington. This delineation is also generally followed in the default run in Fig. 2, although more cold spots are shown in the highest elevations of the Cascade Range, which is logical given that these areas see fewer people and often have year-round snow fields, colder temperatures, and sparser vegetation.

Hot spot analyses over large and diverse landscapes can, at times, fail to capture the nuances of a local scale analysis without additional consideration given to the stationarity of the data (Nelson and Boots 2008), and our study is vulnerable to such weaknesses. Our results show that there may be additional variation in wildfire activity even within significant hot spots. Our trend analysis seems to indicate this as well, with five downward trending grid cells in the northeastern part of the state that also coincide with significant hot spots. One of the downward trending cells is near the Spokane population center and could be related to urbanization. Research into the causes of that local variation would be helpful for those communities and may provide additional needed context for mitigation practitioners.

That being said, the local-scale variation in fire activity does not negate the results of the directional distribution analysis, which reaffirms the increasing number of large wildfires in the north-central part of the state (also where we found significant upward trends in fire activity in the trend analysis). Washington has seen more spatiotemporal variation in fire activity than, for example, Florida (McLemore 2017). McLemore’s ellipses hardly changed in size and orientation over time, whereas ours changed drastically in size (and less drastically in orientation, other than in 2000−2010). Because few other directional distribution studies of wildfire exist in the United States, especially in the Pacific Northwest, we cannot say whether Washington is a regional outlier in this regard. This perhaps indicates the novelty of our approach, and we hope to see additional research using this method in the future.

In any case, our results have wide implications for hazard mitigation planning. Knowing where, statistically, wildfire occurrences and/or acres burned are increasing in a relatively small geographic area (for example, the size of a grid cell in our study) may indicate increasing risk (Meng et al. 2015) or where hazard mitigation efforts may need to be focused, and such areas should be studied further. Conversely, knowing where wildfire occurrences are becoming less likely or where acres burned are decreasing may free up resources that can be shifted to higher-risk areas. Again, more research into the drivers of increasing or decreasing fire activity would need to be conducted before such decisions are made.

These results have other direct ties to hazard mitigation planning and resource allocation at state and local levels. Such information should be used at local jurisdiction scales (for example, county level) to inform their risk and vulnerability assessments, which are required in US-based hazard mitigation plans for jurisdictions to remain eligible for FEMA’s Hazard Mitigation Assistance grants. Although FEMA does not require the use of a statistical approach to local risk and vulnerability assessment, these methods show how statistical approaches can reveal apparent changes in hazard occurrence over time and space, thereby reducing the need for assumptions or guesswork when determining hazard and vulnerability later on. These statistical approaches can also provide decision makers with some of the information needed when choosing where limited mitigation resources should be applied, such as funding for creating defensible spaces around critical assets, targeted fuels reduction, and “fire adapted” communities, although fire intensity, impact, and benefit-cost analysis of mitigation options should also be considered when allocating resources for wildfire risk mitigation.

The 50-year timeframe used in this study should help improve the ability to establish trends that can be helpful for mitigation planners required to evaluate any changes in conditions or developments since the previous plan was approved that may influence vulnerability. For many jurisdictions, this is done with the common five-year planning horizon given FEMA’s requirement that all mitigation plans should be updated every five years to maintain eligibility for federal mitigation dollars (FEMA 2011b). Planners are also required to examine the possible extent of hazards in their community (FEMA 2011b), which the methods used in this study help to achieve by reducing uncertainty around hazard extent, type, and location through characterization of previous events.

5 Conclusion

It is clear from these analyses that the distribution of wildfire occurrences in Washington has changed since 1970. Although large fires can happen across the state, the concentration of large fires over the north-central part of the state over time is worrisome. This information should be used to inform decision making around where wildfire mitigation strategies should be focused and prioritized across the state.
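
One simple way to express this kind of concentration numerically is to compare the acres-weighted mean center and standard distance of fires between two periods. The sketch below is a simplified stand-in for a directional distribution (standard deviational ellipse) analysis, not the tool used in this study. The function name, coordinates, and acreages are hypothetical, and coordinates are assumed to be in a projected (planar) system.

```python
import math


def weighted_center_and_distance(fires):
    """Return (center_x, center_y, standard_distance) for (x, y, acres) tuples.

    The mean center is weighted by acres burned; the standard distance is the
    weighted root-mean-square distance of fires from that center.
    """
    fires = list(fires)
    total = sum(acres for _, _, acres in fires)
    if total <= 0:
        raise ValueError("total weight must be positive")

    cx = sum(x * acres for x, _, acres in fires) / total
    cy = sum(y * acres for _, y, acres in fires) / total
    spread = sum(acres * ((x - cx) ** 2 + (y - cy) ** 2)
                 for x, y, acres in fires) / total
    return cx, cy, math.sqrt(spread)


# Hypothetical fires as (x_km, y_km, acres) in an arbitrary planar grid.
early_period = [(0, 0, 100), (10, 0, 100), (0, 10, 100), (10, 10, 100)]
late_period = [(4, 8, 300), (6, 8, 300), (5, 9, 400)]

early = weighted_center_and_distance(early_period)
late = weighted_center_and_distance(late_period)
print("early center and spread:", early)
print("late center and spread:", late)
```

A smaller standard distance in the later period, together with a shifted mean center, would correspond to burned area becoming concentrated in a smaller part of the study area.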

Future studies could improve on our methods by creating a bounding polygon that also removes unvegetated areas, such as those covered by glacial ice, to get a more accurate representation of where wildfires are possible but where data may be missing. For a more substantive analysis of fire occurrence, future research could make use of the Fire Occurrence Database (Short 2017) or other ignition location data. Also important is the consideration of fire severity in determinations of risk (Zald and Dunn 2018), which was beyond the scope of our study. Other future work could improve the detection of hot spots across large study areas like ours by more closely considering the non-stationarity of the data and by using local models.

For work done in Washington, future research should determine what is driving the concentration of large wildfires over the Chelan-Douglas-Okanogan County area, with specific consideration given to land use, vegetation and soil types, and climatic variables (for example, precipitation and temperature). Hazard mitigation planning in this region should reflect the need to identify whether the risk of large, damaging wildfires is increasing for critical assets in the region. Local officials and planners may have localized knowledge that can suggest what might be driving the intensity of the north-central hot spot, and that knowledge can also be used in developing the vulnerability assessments required by FEMA. This local knowledge should inform mitigation strategies as well. Spatial regression analyses have been shown to help identify which variables may be contributing the most to wildfire occurrences around the world (Koutsias et al. 2010; Rodrigues et al. 2014; Shekede et al. 2019) and can therefore validate (or invalidate) assumptions found in any strategies developed by jurisdictions to reduce their risk.

The statistical tests used in this study present a method for evaluating how a natural hazard has changed over time and space. This is important information for researchers, planners, and decision makers in the hazard mitigation field, especially as climate change is influencing the frequency, severity, and seasonality of many natural hazards. In essence, climate change is making historical patterns of hazard occurrences less reliable as indicators of future occurrences, which will require hazard mitigation planners to use different techniques for determining probabilities of future occurrence and for risk mapping. Hazard mitigation planners should work to incorporate more sophisticated tools in their risk analyses to capture the complexity of climate-influenced natural hazards. Understanding the spatiotemporal variation in hazard occurrence is one factor among many that can help direct where mitigation projects are most needed, provide a quantitative check on long-held assumptions about where hazards are most likely, and establish a foundation for further study to determine why hazards are occurring where they do and what may be driving spatiotemporal variation. Additionally, the tools used in this study are part of a common GIS software package available to many practitioners and researchers, suggesting that improving mitigation plans through sophisticated statistical analysis or similar quantitative methods is more feasible than it may seem.

References

Abatzoglou, J.T., C.A. Kolden, A.P. Williams, J.A. Lutz, and A.M. Smith. 2017. Climatic influences on interannual variability in regional burn severity across western US forests. International Journal of Wildland Fire 26: 269–275.

Agarwadkar, A.M., S. Azmi, and A.B. Inamdar. 2013. Understanding grids and effectiveness of hexagonal grid in spatial domain. In Proceedings of the International Conference on Recent Trends in Information Technology and Computer Science (ICRTITCS – 2012), 17–18 December 2012, Mumbai, India, 25–27.

Banusiewicz, J.D. 2014. Climate change can affect security environment, Hagel says. DOD News, 12 October 2014. https://www.defense.gov/Explore/News/Article/Article/603437/climate-change-can-affect-security-environment-hagel-says/. Accessed 21 Aug 2020.

Barros, A.M., A.A. Ager, M.A. Day, M.A. Krawchuk, and T.A. Spies. 2018. Wildfires managed for restoration enhance ecological resilience. Ecosphere 9(3): Article e02161.

Brogan, D.J., P.A. Nelson, and L.H. MacDonald. 2017. Reconstructing extreme post-wildfire floods: A comparison of convective and mesoscale events. Earth Surface Processes and Landforms 42(15): 2505–2522.

Caceres, C.F. 2011. Using GIS in hotspots analysis and for forest fire risk zones mapping in the Yeguare Region, southeastern Honduras. Winona, MN: Saint Mary’s University of Minnesota, University Services Press.

Campello, R., D. Moulavi, and J. Sander. 2013. Density-based clustering based on hierarchical density estimates. In Proceedings of the 17th Pacific-Asia Conference on Knowledge Discovery and Data Mining, 14–17 April 2013, Gold Coast, Australia, 160–172.

CEMHS (Center for Emergency Management and Homeland Security). 2019. Spatial hazard events and losses database for the United States (version 18.8). Arizona State University, Tempe, AZ, USA.

Dennison, P.E., S.C. Brewer, J.D. Arnold, and M.A. Moritz. 2014. Large wildfire trends in the western United States, 1984–2011. Geophysical Research Letters 41(8): 2928–2933.

Djenaliev, A., M. Kada, A. Chymyrov, O. Hellwich, and A. Muraliev. 2018. Spatial statistical analysis of earthquakes in Kyrgyzstan. International Journal of Geoinformatics 14(1): 11–20.

DNR (Washington Department of Natural Resources). 2020. DNR fire statistics 1970–2007. Washington Department of Natural Resources. http://geo.wa.gov/datasets/wadnr::dnr-fire-statistics-1970-2007-1. Accessed 20 Aug 2020.

Doubleday, A., J. Schulte, L. Sheppard, M. Kadlec, R. Dhammapala, J. Fox, and T. Busch Isaksen. 2020. Mortality associated with wildfire smoke exposure in Washington state, 2006–2017: A case-crossover study. Environmental Health 19: Article 4.

Dozic, A. 2020. DNR fire statistics 2008–present. Washington Department of Natural Resources (DNR). http://geo.wa.gov/datasets/wadnr::dnr-fire-statistics-2008-present-1. Accessed 20 Aug 2020.

Dunagan, C. 2020. Fire danger returning to western Washington. Salish Sea Currents Magazine, 14 May 2020. https://www.eopugetsound.org/magazine/IS/fire-danger. Accessed 26 Aug 2020.

Esri. 2020. How density-based clustering works. https://pro.arcgis.com/en/pro-app/tool-reference/spatial-statistics/how-density-based-clustering-works.htm. Accessed 18 Aug 2020.

FEMA (Federal Emergency Management Agency). 2011a. FEMA climate change adaptation policy statement. https://www.fema.gov/sites/default/files/2020-07/fema_climate-change-policy-statement_2013.pdf. Accessed 28 Aug 2020.

FEMA (Federal Emergency Management Agency). 2011b. Local Mitigation Plan Review Guide. Washington, DC: FEMA.

Guo, L., R. Liu, C. Men, Q. Wang, Y. Mao, M. Shoaib, Y. Wang, L. Jiao, et al. 2021. Multiscale spatiotemporal characteristics of landscape patterns, hotspots, and influencing factors for soil erosion. Science of the Total Environment 779: Article 146474.

Haas, J.R., M. Thompson, A. Tillery, and J.H. Scott. 2016. Capturing spatiotemporal variation in wildfires for improving post wildfire debris-flow hazard assessments. In Natural hazard uncertainty assessment, ed. K. Riley, P. Webley, and M. Thompson, 301–317. Washington, DC: American Geophysical Union.

Hamed, K.H. 2009. Exact distribution of the Mann-Kendall trend test statistic for persistent data. Journal of Hydrology 365(1–2): 86–94.

Jacquez, G.M. 2008. Spatial cluster analysis. In Handbook of geographic information science, ed. J.P. Wilson, and A.S. Fotheringham. Oxford: Blackwell Publishing.

Jolly, W.M., M.A. Cochrane, P.H. Freeborn, Z.A. Holden, T.J. Brown, G.J. Williamson, and M.J. Bowman. 2015. Climate-induced variations in global wildfire danger from 1979 to 2013. Nature Communications 6: Article 7537.

Koutsias, N., J. Martinez-Fernandez, and B. Allgower. 2010. Do factors causing wildfires vary in space? Evidence from geographically weighted regression. GIScience & Remote Sensing 47: 221–240.

Levin, N., and A. Heimowitz. 2012. Mapping spatial and temporal patterns of Mediterranean wildfires from MODIS. Remote Sensing of Environment 126: 12–26.

Liu, Y., J. Bi, Z. Ma, and C. Wang. 2017. Spatial multi-scale relationships of ecosystem services: A case study using geostatistical methodology. Scientific Reports 7(1): Article 9486.

Lv, F., L. Deng, Z. Zhang, Z. Wang, Q. Wu, and J. Qiao. 2021. Multiscale analysis of factors affecting food security in China, 1980–2017. Environmental Science and Pollution Research 29(5): 6511–6525.

McClure, C.D., and D.A. Jaffe. 2018. US particulate matter air quality improves except in wildfire-prone areas. PNAS 115(31): 7901–7906.

McLemore, S. 2017. Spatio-temporal analysis of wildfire incidence in the state of Florida. MSc thesis. University of Southern California, Los Angeles, CA, USA.

Meng, Y., Y. Deng, and P. Shi. 2015. Mapping forest wildfire risk of the world. In World atlas of natural disaster risk, ed. P. Shi, and R. Kasperson, 261–275. Heidelberg: Springer.

Mohd Said, S., E.-S. Zahran, and S. Shams. 2017. Forest fire risk assessment using hotspot analysis in GIS. The Open Civil Engineering Journal 11: 786–801.

Nelson, T.A., and B. Boots. 2008. Detecting spatial hot spots in landscape ecology. Ecography 31: 556–566.

Oliva, P., and W. Schroeder. 2015. Assessment of VIIRS 375 m active fire detection product for direct burned area mapping. Remote Sensing of Environment 160: 144–155.

Pereira, M.G., L. Caramelo, C. Vega Orozco, R. Costa, and M. Tonini. 2015. Space-time clustering analysis performance of an aggregated dataset: The case of wildfires in Portugal. Environmental Modelling & Software 72: 239–249.

Rahman, M.S., T.T. Isaba, and A.M. Riyadh. 2019. Comparison between directional distribution of observed and forecasted trajectories of hurricane in North Atlantic Basin. In Proceedings of the International Conference on Disaster Risk Management, 12–14 January 2019, Dhaka, Bangladesh, 562–567.

Rasker, R. 2015. Resolving the increasing risk from wildfires in the American West. The Solutions Journal 6(2): 55–62.

Rodrigues, M., J. de la Riva, and S. Fotheringham. 2014. Modeling the spatial variation of the explanatory factors of human-caused wildfires in Spain using geographically weighted logistic regression. Applied Geography 48: 52–63.

Sankey, J.B., J. Kreitler, T.J. Hawbaker, J.L. McVay, M.E. Miller, E.R. Mueller, N.M. Vaillant, S.E. Lowe, and T.T. Sankey. 2017. Climate, wildfire, and erosion ensemble foretells more sediment in western USA watersheds. Geophysical Research Letters 44(17): 8884–8892.

Shekede, M.D., I. Gwitira, and C. Mamvura. 2019. Spatial modelling of wildfire hotspots and their key drivers across districts of Zimbabwe, Southern Africa. Geocarto International 36(8): 874–887.

Short, K.C. 2017. Spatial wildfire occurrence data for the United States, 1992–2015, 4th edn. US Forest Service, Missoula Fire Sciences Laboratory. https://www.fs.usda.gov/rds/archive/catalog/RDS-2013-0009.4. Accessed 15 Sept 2021.

Snover, A.K., G.S. Mauger, L.C. Whitely Binder, M. Krosby, and I. Tohver. 2013. Climate change impacts and adaptation in Washington state: Technical summaries for decision makers. State of Knowledge Report for the Washington State Department of Ecology. Climate Impacts Group, University of Washington, Seattle, WA, USA.

Stults, M. 2017. Integrating climate change into hazard mitigation planning: Opportunities and examples in practice. Climate Risk Management 17: 21–34.

Syphard, A., H. Rustigian-Romsos, and J.E. Keeley. 2021. Multiple-scale relationships between vegetation, the wildland-urban interface, and structure loss to wildfire in California. Fire 4(1): Article 12.

von Kaenel, C. 2020. How much of $1 billion in disaster grants will Butte County get? It could take a year to find out. Chico Enterprise-Record. https://www.chicoer.com/2020/02/24/how-much-of-1-billion-in-disaster-grants-will-butte-county-get-it-could-take-a-year-to-find-out/. Accessed 19 Aug 2020.

WA EMD (Washington Emergency Management Division). 2018. Washington State Enhanced Hazard Mitigation Plan. Camp Murray, WA: Washington Military Department, Emergency Management Division. https://mil.wa.gov/enhanced-hazard-mitigation-plan. Accessed 29 Jul 2020.

Weinkle, J., C. Landsea, D. Collins, R. Musulin, R.P. Crompton, P.J. Klotzbach, and R. Pielke. 2018. Normalized hurricane damage in the continental United States 1900–2017. Nature Sustainability 1(12): 808–813.

Westerling, A.L., A. Gershunov, T.J. Brown, D.R. Cayan, and M.D. Dettinger. 2003. Climate and wildfire in the western United States. Bulletin of the American Meteorological Society 84(5): 595–604.

Wing, M.G., and J. Long. 2015. A 25-year history of spatial and temporal trends in wildfire activity in Oregon and Washington, USA. Modern Applied Science 9(3): 117–132.

Zald, H.S., and C.J. Dunn. 2018. Severe fire weather and intensive forest management increase fire severity in a multi-ownership landscape. Ecological Applications 28(4): 1068–1080.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zerbe, K., Polit, C., McClain, S. et al. Optimized Hot Spot and Directional Distribution Analyses Characterize the Spatiotemporal Variation of Large Wildfires in Washington, USA, 1970−2020. Int J Disaster Risk Sci 13, 139–150 (2022). https://doi.org/10.1007/s13753-022-00396-4

DOI: https://doi.org/10.1007/s13753-022-00396-4