Abstract

The WWW contains a huge number of documents. Some of them share the same subject, but are generated by different people or even by different organizations. A semi-structured model allows sharing documents that do not have exactly the same structure. However, it does not facilitate the understanding of such heterogeneous documents. In this paper, we offer a characterization and an algorithm to obtain a representative (in terms of a resemblance function) of a set of heterogeneous semi-structured documents. We approximate the representative so that the resemblance function is maximized. Then, the algorithm is generalized to deal with repetitions and different classes of documents. Although an exact representative could always be found using an unlimited number of optional elements, it would cause an overfitting problem. The size of an exact representative for a set of heterogeneous documents may even make it useless. Our experiments show that users find it easier and faster to deal with smaller representatives, and that this can even compensate for the loss in the approximation.

Notes

Proof has been moved to “Appendix B.1”.

Proof has been moved to “Appendix B.2”.

Proof has been moved to “Appendix B.3”.

Proof has been moved to “Appendix B.4”.

Proof has been moved to “Appendix B.5”.

\(\alpha =\beta =1\) unless explicitly said otherwise.

References

Abiteboul S (1997) Querying semi-structured data. In: Proceedings of 6th international conference on database theory (ICDT’97), LNCS, vol 1186. Springer, pp 1–18

Bertino E, Guerrini G, Mesiti M (2004) A matching algorithm for measuring the structural similarity between an XML document and a DTD and its applications. Inf Syst 29(1):23–46

Abiteboul S, Buneman P, Suciu D (2000) Data on the Web–from relations to semistructured data and XML. Morgan Kaufmann, Burlington

Wang L, Hassanzadeh O, Zhang S, Shi J, Jiao L, Zou J, Wang C (2015) Schema management for document stores. Proc VLDB Endow 8(9):922–933

W3C, Extensible Markup Language (XML) 1.0, 3rd Edition (February 2004)

Albert J, Giammarresi D, Wood D (2001) Normal form algorithms for extended context-free grammars. Theor Comput Sci 267(1–2):35–47

Garofalakis M, Gionis A, Rastogi R, Seshadri S, Shim K (2003) XTRACT: learning document type descriptors from XML document collections. Data Min Knowl Discov 7(1):23–56

Nestorov S, Abiteboul S, Motwani R (1998) Extracting schema from semistructured data. In: Proceedings of the ACM SIGMOD international conference on management of data (SIGMOD 1998). ACM, pp 295–306

Sanz I, Pérez J, Berlanga R, Aramburu M (2003) XML schemata inference and evolution. In: Proceedings of 14th international conference on databases and expert systems applications (DEXA’03), LNCS, vol 2736. Springer, pp 109–118

Nayak R, Iryadi W (2007) XML schema clustering with semantic and hierarchical similarity measures. Knowl Based Syst 20(4):336–349

Widom J (1999) Data management for XML: research directions. IEEE Data Eng Bull 22(3):44–52

Guerrini G, Mesiti M, Sanz I (2007) An overview of similarity measures for clustering XML documents. In: Vakali A, Pallis G (eds) Emerging techniques and technologies: web data management practices. IGI Global, Hershey, pp 56–78

Wang K, Liu H (1997) Schema discovery for semistructured data. In: 3rd International conference on knowledge discovery and data mining (KDD-97), pp 271–274

Hegewald J, Naumann F, Weis M (2006) XStruct: efficient schema extraction from multiple and large XML documents. In: Proceedings of the 22nd international conference on data engineering workshops, ICDE 2006, 3–7 Apr 2006, Atlanta, p 81

Jung J-S, Oh D-I, Kong Y-H, Ahn J-K (2002) Extracting information from XML documents by reverse generating a DTD. In: Proceedings of the EurAsia-ICT 2002, LNCS, vol 2510. Springer, pp 314–321

Bex GJ, Gelade W, Neven F, Vansummeren S (2010) Learning deterministic regular expressions for the inference of schemas from XML data. ACM Trans Web 4(4):14:1–14:32

Moh D-H, Lim E-P, Ng W-K (2000) Re-engineering structures from Web documents. In: 5th ACM conference on digital libraries (DL 2000). ACM, pp 67–76

Moh D-H, Lim E-P, Ng W-K (2000) DTD-miner: a tool for mining DTD from XML documents. In: Second international workshop on advance issues of E-commerce and web-based information systems (WECWIS 2000). IEEE Computer Society, pp 144–151

Leonov AV, Khusnutdinov RR (2005) Study and development of the DTD generation system for XML documents. Program Comput Softw 31(4):197–210

Min J-K, Ahn J-Y, Chung C-W (2003) Efficient extraction of schemas for XML documents. Inform Process Lett 85:7–12

Izquierdo JLC, Cabot J (2013) Discovering implicit schemas in JSON data. In: Web engineering: 13th international conference, ICWE 2013, Aalborg, 8–12 July 2013, Proceedings, pp 68–83

Boobna U, de Rougemont M (2004) Correctors for XML data. In: Proceedings of 2nd international XML database symposium (XSYM’04), LNCS, vol 3186. Springer, pp 97–111

Dalamagas T, Cheng T, Winkel K-J, Sellis T (2006) A methodology for clustering XML documents by structure. Inform Syst 31:187–228

Zhang K, Shasha D (1989) Simple fast algorithms for the editing distance between trees and related problems. SIAM J Comput 18(6):1245–1262

Klettke M, Störl U, Scherzinger S (2015) Schema extraction and structural outlier detection for JSON-based NOSQL data stores. In: Datenbanksysteme für Business, Technologie und Web (BTW), 16. Fachtagung des GI-Fachbereichs "Datenbanken und Informationssysteme" (DBIS), 4.-6.3.2015 in Hamburg, Proceedings, pp 425–444

Batagelj V, Bren M (1995) Comparing resemblance measures. J Classif 12(1):73–90

Lian W, Cheung D, Mamoulis N, Yiu S-M (2004) An efficient and scalable algorithm for clustering XML documents by structure. IEEE Trans Knowl Data Eng 16(1):82–96

Gallinucci E, Golfarelli M, Rizzi S (2018) Schema profiling of document-oriented databases. Inform Syst 75:13–25

Baader F, Calvanese D, McGuinness D, Nardi D, Patel-Schneider P (eds) (2003) The description logic handbook. Cambridge University Press, Cambridge

Teege G (1994) Making the difference: a subtraction operation for description logics. In: Proceedings of the international conference on principles of knowledge representation and reasoning (KR’94). Morgan Kaufmann, pp 540–550

Estivill-Castro V, Yang J (2000) Fast and robust general purpose clustering algorithms. In: Proceedings of 6th Pacific Rim international conference on artificial intelligence (PRICAI 2000), LNCS, vol 1886. Springer, pp 208–218

Acknowledgements

We would also like to thank Age Fotostock for allowing us to use their files for testing purposes. Special thanks also to Toni Gutzens for his help on implementing the prototype and Jon Bosak for allowing us to use his documents for testing purposes.

Appendices

A Experimental Study

We have conducted experiments in two directions. On the one hand, we have analyzed how informative and useful the obtained schema is compared with the perfect-matching one (Sect. A.1). On the other hand, we have tested the performance of the algorithm for finding one MP on randomly generated documents (Sect. A.2). The performance of finding different classes has not been analyzed, because it depends strictly on the cost of the clustering algorithm used (k-means in our case), which is outside the scope of this work.

A.1 Usefulness

This section scrutinizes the usefulness of approximating the schema of a set of documents instead of using the perfect-matching. Four sets of real-life documents have been used in the experiment (Sects. A.1.1, A.1.2, A.1.3 and A.1.4), and an approximated schema has been generated for each of them (with \(\alpha =\beta =1\) unless explicitly said otherwise; see Notes). These approximated schemas have then been compared against the perfect ones in a user study of usability (Sect. A.1.5).

A.1.1 Religious Texts

The documents in this section are authored by Jon Bosak and correspond to four religious texts (i.e. “The Old Testament”, “The New Testament”, “The Quran”, and “The Book of Mormon”). LHS of Fig. 13 shows the MP of the four documents under consideration. Characteristics of the documents and parameters are as follows:

- Number of documents: 4

- Real number of classes: 4

- Used number of classes: 1

With those parameters, we obtain a resemblance of 47.6%. This would mean that the documents are quite different, and that we should define different classes of documents. However, this does not make sense if we only have four documents. Surprisingly, we would get a resemblance of 93.5% (not 100%) for four different classes. This is because not even the repetitions of the same element inside a document share the same structure.

Notice that elements like “suracoll”, “sura” and “witness” are not considered relevant. Nevertheless, some elements that were optional (like “coverpg”, “titlepg”, and “preface”) appear enough times to be in the output.

A.1.2 Shakespeare Texts

The documents in this section are authored by Jon Bosak and contain a set of the plays of William Shakespeare. LHS of Fig. 14 shows the DTD of the thirty-seven documents under consideration. Characteristics of the documents and parameters are as follows:

- Number of documents: 37

- Real number of classes: 27

- Used number of classes: 1

With those parameters, we obtain the schema at RHS of Fig. 14, resulting in a resemblance of 90%. This would mean that the 37 kinds of documents are quite similar. The maximum resemblance would be obtained for 37 classes, which results in 96%. As in Sect. A.1.1, this is because not even the repetitions of the same element inside a document share the same structure. Just by adding optional elements to the schema in Fig. 14, we get a resemblance of 98.5%.

Notice that some optional elements like “EPILOGUE”, “PROLOGUE” and “INDUCT” do not appear in the MP, because only a few documents contain them (for instance, “INDUCT” appears in just 2 out of 37 documents). Thus, it seems clear that “INDUCT” is not relevant to understanding the meaning of a “PLAY”. The same can be said of “ACT”. If you want to explain to your child what an act is, you would just say that it has a title and one or more scenes. Only if s/he is really interested and old enough would you point out that it may contain subtitles, a prologue and an epilogue.

On the other hand, “PERSONA” and “PGROUP” are important enough to appear in the MP. They are neither optional nor a choice, because most documents contain them. All documents contain “PERSONA”, and only four documents do not contain “PGROUP” inside “PERSONAE”.

A.1.3 Response/Request Documents

The documents in this section are authored by Age Fotostock, which is an imagery agency in all areas (both rights protected and royalty free). Age provides a technical hosting platform (THP) for the sharing of images among imagery agencies around the world. In this example, four classes of documents are provided, which correspond to a licensed protocol for B2B image sharing. First, an agency needs to request the existing resolutions for one or more images (classes one and three). Secondly, an agency requests a specific high-resolution file for one or more images (classes two and four). The documents have been extracted from the service log files of the company. Thus, there was no available DTD. Characteristics of the documents and parameters are as follows:

- Number of documents: 189

- Real number of classes: 6

- Used number of classes: 4

If we try to find the MP of these documents, it results in a resemblance of 36.8%. This means that there exist completely different kinds of documents. By looking for four classes, we obtain those in Fig. 15, which results in a resemblance of 99.3%. Looking for fewer than four classes results in unrealistic DTDs where some of those four are merged. This effect can be avoided by increasing \(\beta \) above one (1.2 was enough in our experiments).
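To illustrate how \(\beta \) penalizes schemas that merge unrelated classes, the following minimal sketch evaluates a resemblance of the form \(\frac{w_c}{w_c+\alpha w_p+\beta w_m}\) (the form used in Appendix B.4). It rests on simplifying assumptions that are not taken from the paper: documents and schemas are encoded as sets of root-to-element tag paths, size is the number of paths, and the LCS of a schema and a document is approximated by set intersection. The names `resemblance`, `Path` and `Tree` are hypothetical.

```python
from typing import FrozenSet, Iterable, Tuple

Path = Tuple[str, ...]   # hypothetical encoding: a root-to-element tag path
Tree = FrozenSet[Path]   # a document or a schema as a set of such paths


def resemblance(schema: Tree, docs: Iterable[Tree],
                alpha: float = 1.0, beta: float = 1.0) -> float:
    """Return w_c / (w_c + alpha * w_p + beta * w_m) over all documents."""
    w_c = w_p = w_m = 0
    for doc in docs:
        common = schema & doc              # stand-in for lcs(schema, doc)
        w_c += len(common)                 # elements shared by schema and document
        w_p += len(doc) - len(common)      # document elements missing in the schema
        w_m += len(schema) - len(common)   # schema elements missing in the document
    total = w_c + alpha * w_p + beta * w_m
    return w_c / total if total else 0.0


# Two toy documents that only share the root element "a".
docs = [frozenset({("a",), ("a", "b")}), frozenset({("a",), ("a", "c")})]
merged = frozenset({("a",), ("a", "b"), ("a", "c")})  # union of both structures
small = frozenset({("a",)})                           # only the shared part

for beta in (1.0, 1.2, 3.0):
    print(beta, resemblance(merged, docs, beta=beta), resemblance(small, docs, beta=beta))
```

In this toy setting the merged schema scores lower as \(\beta \) grows, while the score of the small schema does not change, because only the merged schema contains elements that some document lacks.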

A.1.4 Photo Documents

The documents in this section are also authored by Age Fotostock, and are a stratified random extraction of the imagery database. Thus, again, there was no available DTD. Characteristics of the documents and parameters are as follows:

- Number of documents: 2497

- Real number of classes: \(\sim \) 100

- Used number of classes: 1

All elements found in the MP (those at LHS of Fig. 16) appear in more than two thousand documents. Thus, it looks reasonable to consider them as mandatory. On the other hand, optional elements (those new at RHS of Fig. 16) appear in fewer than one thousand. The resemblance obtained to the MP is 91%, which increases up to 97.4% if we also consider the optional elements. This means that there is only one class of documents, which can be well described by the obtained MP.

A.1.5 Usability Test

In this section, we study the comprehensibility of approximated schemas against perfect-matching. We first recall the usability and maintainability measures defined in [22]:

- Size: the number of nodes in the graph representing the schema.

- Complexity: the number of edges, plus one, minus the number of nodes (i.e. the number of edges that should be removed to obtain a tree, which would mean zero complexity).

- Depth: the maximum depth of the graph representing the schema.

- Fan-In: the maximum number of children among the elements of the schema.

- Fan-Out: the maximum number of parents among the elements of the schema.

Table 2 shows those metrics for the schemas considered in previous sections (from A.1.2, we use the approximated schema without optional elements). Notice that these metrics are unaffected in only one case (i.e. A.1.3), where only the optionality of some elements has changed. In the other three pairs of schemas, all usability metrics have improved, which means that the approximated schema is simpler in every respect.
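The five measures listed above can be computed directly from a graph representation of a schema. The sketch below is only illustrative: the adjacency-list encoding, the function name `schema_metrics`, the convention of counting depth in edges, and the toy schema are assumptions, not taken from [22]. Fan-In and Fan-Out follow the definitions given in the list above.

```python
from typing import Dict, List


def schema_metrics(children: Dict[str, List[str]], root: str) -> Dict[str, int]:
    """Compute the five metrics for a schema graph given as parent -> children."""
    nodes = set(children) | {c for kids in children.values() for c in kids}
    nodes.add(root)
    edges = sum(len(kids) for kids in children.values())

    # Number of parents of every node.
    parent_count = {node: 0 for node in nodes}
    for kids in children.values():
        for child in kids:
            parent_count[child] += 1

    def depth(node: str, on_path: frozenset = frozenset()) -> int:
        # Longest downward path counted in edges (an assumption); nodes already
        # on the current path are skipped so recursive schemas terminate.
        kids = [k for k in children.get(node, []) if k not in on_path | {node}]
        if not kids:
            return 0
        return 1 + max(depth(k, on_path | {node}) for k in kids)

    return {
        "size": len(nodes),
        "complexity": edges + 1 - len(nodes),
        "depth": depth(root),
        "fan_in": max((len(kids) for kids in children.values()), default=0),
        "fan_out": max(parent_count.values(), default=0),
    }


# Hypothetical toy schema, loosely inspired by the tag names of Sect. A.1.2.
toy = {"PLAY": ["TITLE", "ACT"], "ACT": ["TITLE", "SCENE"], "SCENE": ["TITLE"]}
print(schema_metrics(toy, "PLAY"))
```

In the toy schema, "TITLE" is shared by three parents, so two edges would have to be removed to obtain a tree, which is what the complexity measure counts.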

In order to demonstrate the efficiency and effectiveness of the approximated schemas, a user study has been conducted. Some schemas (some perfect and others approximated) have been given to each individual, together with a list of five randomly chosen elements from the perfect schema for each one (notice that some of these may not be present in the approximated one). The individual had to give the number of paths leading to each element, the depth of each of these paths, and whether the path must be in every document or not (i.e. the optionality of the path). In the approximated schema, the queried element may not be present or, if present, it may have been considered non-optional (when, actually, it is optional). Any of these cases has also been counted as a user error. Another important point in the study is that mistakes have been weighted by the importance of the element (i.e. a mistake in an optional leaf that only appears from time to time counts proportionally less than a mistake in the root or any other mandatory element).

Table 3 shows the average time expressed in seconds (column “#” shows the number of individuals that answered every questionnaire). In all four cases, the approximated schema results in a lower average answer time. A t-test has been performed to rule out that these results were obtained by chance. For the first, second and fourth cases, the probability of obtaining these results just by chance is 0.2, 3 and 6%, respectively. Only in the third case (which has a really high similarity) can the probability of obtaining these results by chance be considered high (i.e. 27%).

Regarding effectiveness, Table 4 summarizes the experimental results. Each of the five queried elements counts as one point, so the maximum score is 5 (the answers for all five elements are correct) and the minimum is 0 (the five elements are mandatory and all five answers are wrong). In the first case, since the resemblance was really low (i.e. 47.6%), users made many more mistakes working with the approximated schema, and this is not by chance (0.3% in the t-test). The second and fourth cases have similar resemblances, and so are their experimental results: users make more mistakes with the approximated schema, and there is an 11% probability of making such mistakes by chance. However, the most surprising result is the third one. In this case, the effectiveness is improved with the approximated schema (with a probability of only 8% of being by chance). Notice that in this case, the resemblance is really high.

Thus, to check the influence of different resemblances, we clustered the documents in A.1.2 into five clusters and generated a new approximated schema, whose resemblance to the perfect one is now 95% (instead of 90% as in the previous approximation). Giving this new schema to eight people, we obtained the results in Table 5. As in the previous experiment, when the resemblance is high, there is no relevant difference in the average time users spend understanding the schema, whether perfect or approximated. However, as also happened in the other case of high resemblance, the effectiveness has improved (with just a 14% probability of obtaining this improvement by chance), since individuals give more correct answers.

These experiments corroborate our intuition that users spend less time working with simpler schemas. If the schemas are too different (resemblance of 91% or below in our experiments), users make mistakes due to the information lost in the approximation of the schema. However, if the resemblance obtained is high enough (95% or above in our experiments), the effectiveness of users compensates for the loss in the approximation. Thus, spending the same time, users understand the contents of the schema much better and make fewer mistakes.

A.2 Performance

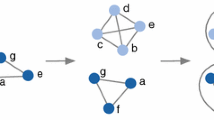

This section contains experimental performance results obtained from self-generated data sets. These sets have been generated randomly from the schema in the LHS of Fig. 17, parametrizing the probability of appearance of repeated and optional elements as follows:

- +: \(0.25*0.75^{n-1}\) (n being the number of repetitions)

- *: \(0.25*0.75^{n}\) (n being the number of repetitions)

- ?: 0.75

- \(\mid \): \(\frac{k}{\frac{n(n+1)}{2}}\) (for the element at position k in a choice of n elements)
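The distributions above can be sampled as in the following minimal sketch. It is not the generator used in the experiments; all function names are hypothetical, and the loop-based sampler is merely one way of drawing from the stated geometric distributions (each extra repetition happens with probability 0.75).

```python
import random
from typing import List

CONTINUE_P = 0.75  # probability of one more repetition, from the 0.75 factor


def plus_probability(n: int) -> float:
    """P(exactly n repetitions) for '+', defined for n >= 1."""
    return 0.25 * 0.75 ** (n - 1) if n >= 1 else 0.0


def star_probability(n: int) -> float:
    """P(exactly n repetitions) for '*', defined for n >= 0."""
    return 0.25 * 0.75 ** n if n >= 0 else 0.0


def choice_weights(n: int) -> List[float]:
    """Probability of picking the element at position k = 1..n of a choice."""
    total = n * (n + 1) / 2
    return [k / total for k in range(1, n + 1)]


def sample_repetitions(rng: random.Random, at_least_one: bool) -> int:
    """Draw a repetition count for '+' (at_least_one=True) or '*' (False)."""
    n = 1 if at_least_one else 0
    while rng.random() < CONTINUE_P:
        n += 1
    return n


def sample_optional(rng: random.Random) -> bool:
    """Decide whether a '?' element appears (probability 0.75)."""
    return rng.random() < 0.75


def sample_choice(rng: random.Random, n: int) -> int:
    """Draw the position (1-based) of the alternative taken in a choice of n."""
    return rng.choices(range(1, n + 1), weights=choice_weights(n))[0]


rng = random.Random(42)  # fixed seed only to make the sketch reproducible
print(sample_repetitions(rng, True), sample_optional(rng), sample_choice(rng, 3))
```

Each of the three families sums to one, so they are proper probability distributions; later alternatives in a choice are more likely than earlier ones.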

In the LHS of Fig. 17, we have the original schema, while its RHS shows the MP obtained from one thousand randomly generated documents. The resulting resemblance is 65%. However, we should not analyze it in this case, because the purpose of this test is just to corroborate the linear behavior of the algorithm, and the documents used are meaningless. Characteristics of the set and parameters used are as follows:

- Number of documents: 1000

- Real number of classes: \(\sim \) 180

- Used number of classes: 1

Figure 18 shows the time (in milliseconds) to obtain the MP of 10, 20, 30, and 40 thousand documents following the previous DTD. We can see that, as expected, it increases linearly with the number of documents.

B Proofs

This section contains the proofs of the different theorems (“s” stands for “size”, to make equations shorter).

B.1 Proof of Theorem 1

Proof

Let C and C’ be two schemas such that \(r(C,E)\ge r(C',E)\). Expanding equations, we get:

By crossing denominators,

Simplifying \((\sum _{d\in E} s(lcs(C,d)))\cdot (\sum _{d\in E} s(lcs(C',d)))\) at both sides results in:

This can also be written as

which shows that all we need to compare are common elements (in numerator), and the sizes of both schemas (in denominator). \(\square \)
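The algebra behind the steps of this proof can be sketched as follows. The sketch assumes definitions that are not restated in this appendix, chosen to be consistent with the expressions used in Appendices B.3 and B.4: \(r(C,E)=\frac{w_c}{w_c+\alpha w_p+\beta w_m}\) with \(w_c=\sum _{d\in E} s(lcs(C,d))\), \(w_p=\sum _{d\in E} (s(d)-s(lcs(C,d)))\) and \(w_m=\sum _{d\in E} (s(C)-s(lcs(C,d)))\). As shorthand introduced only for this sketch, A and \(A'\) stand for \(\sum _{d\in E} s(lcs(C,d))\) and \(\sum _{d\in E} s(lcs(C',d))\), and D stands for \(\sum _{d\in E} s(d)\):

$$\begin{aligned}&\frac{A}{(1-\alpha -\beta )A+\alpha D+\beta \mid E\mid s(C)}\ge \frac{A'}{(1-\alpha -\beta )A'+\alpha D+\beta \mid E\mid s(C')} \\&\quad \Longleftrightarrow \; A\cdot (\alpha D+\beta \mid E\mid s(C'))\ge A'\cdot (\alpha D+\beta \mid E\mid s(C)) \\&\quad \Longleftrightarrow \; \frac{A}{\alpha D+\beta \mid E\mid s(C)}\ge \frac{A'}{\alpha D+\beta \mid E\mid s(C')} \end{aligned}$$

The second line follows from cross-multiplying (all denominators are assumed to be positive) and cancelling the term \((1-\alpha -\beta )\cdot A\cdot A'\), which appears on both sides.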

B.2 Proof of Lemma 1

Proof

Let C and C’ be two schemas and e an element such that \(C=C'\sqcap DL_\bot (e)\), and \(r(C,E)>r(C',E)\) (i.e. C contains one more element and this improves resemblance). Retaking the inequality at the end of the proof of Theorem 1:

Which by adding and subtracting \(s(lcs(C',d))\) to every term in the left numerator, and \(s(C')\) to the left denominator results in:

And reordering sums, we obtain

Which (given that \(\frac{a+b}{c+d}\ge \frac{a}{c}\) iff \(\frac{b}{d}\ge \frac{a}{c}\)) is true if and only if

Since we assume that the size of adding a non-optional element to a schema is always equal to the size of the schema plus the added element, we can transform the left hand side as follows:

Therefore, whether or not to add an element does not depend on the size of the element, but on the number of times it appears in the documents. Thus, if adding an element is worthwhile, so is adding any set of elements appearing the same number of times. \(\square \)
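The chain of steps in this proof can be sketched as follows, under assumptions that are not spelled out in this appendix: the final inequality of Theorem 1 has the form used in the proof of Corollary 1, \(s(C)=s(C')+s(e)\), and e appears in k of the documents in E, each of which gains \(s(e)\) in its common part. As shorthand introduced only for this sketch, \(A'\) stands for \(\sum _{d\in E} s(lcs(C',d))\) and D for \(\sum _{d\in E} s(d)\), with \(\beta >0\):

$$\begin{aligned}&\frac{A'+\sum _{d\in E} (s(lcs(C,d))-s(lcs(C',d)))}{(\alpha D+\beta \mid E\mid s(C'))+\beta \mid E\mid (s(C)-s(C'))}\ge \frac{A'}{\alpha D+\beta \mid E\mid s(C')} \\&\quad \Longleftrightarrow \; \frac{\sum _{d\in E} (s(lcs(C,d))-s(lcs(C',d)))}{\beta \mid E\mid (s(C)-s(C'))}\ge \frac{A'}{\alpha D+\beta \mid E\mid s(C')} \\&\quad \Longleftrightarrow \; \frac{k\cdot s(e)}{\beta \cdot \mid E\mid \cdot s(e)}=\frac{k}{\beta \cdot \mid E\mid }\ge \frac{A'}{\alpha D+\beta \mid E\mid s(C')} \end{aligned}$$

The size \(s(e)\) cancels in the last line, which is why only the number of occurrences k matters.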

B.3 Proof of Corollary 1

Proof

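Since by hypothesis \(k_1>k_2\), then \(\frac{k_1}{\beta \cdot \mid E\mid } > \frac{k_2\cdot s(e_2)}{\beta \cdot \mid E\mid \cdot s(e_2)} \). Therefore, if \(e_2\) improved the resemblance (i.e. by the proof of Lemma 1, we know that \(\frac{k_2}{\beta \cdot \mid E\mid }\ge \frac{\sum _{d\in E} s(lcs(C,d))}{\alpha \cdot \sum _{d\in E} s(d) +\beta \cdot \mid E\mid \cdot s(C)}\)), then \(e_1\) improves it even more:

$$\begin{aligned} \frac{k_1}{\beta \cdot \mid E\mid }>\frac{k_2}{\beta \cdot \mid E\mid }\ge \frac{\sum _{d\in E} s(lcs(C,d))}{\alpha \cdot \sum _{d\in E} s(d) +\beta \cdot \mid E\mid \cdot s(C)} \end{aligned}$$

\(\square \)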

B.4 Proof of Theorem 2

Proof

By hypothesis, let’s suppose that there is an MP \(M=\sqcap _{i=1..q} DL_\bot (e_i)\) that maximizes the resemblance and is not a conjunction of LCSs. Let’s define \(E_C=\{d\in E\mid d\sqsubseteq C\}\), and divide the proof into three steps:

- Step 1: Every element in M subsumes some document in E (i.e. \(\forall i\in 1..q: E_{DL_\top (e_i)}\ne \emptyset \))

Let’s suppose not (proof by contradiction), i.e. \(\exists i=1..q:E_{DL_\top (e_i)}=\emptyset \). We can remove the last k tags from \(e_i\) until there exists some document d with an element matching \(e'_i\) (with \(e'_i=(t_1, t_2,\ldots ,t_{l_i-k})\)). Now, \(d\sqsubseteq DL_\top (e'_i)\). Let \(M'=DL_\bot (e_1)\sqcap ...\sqcap DL_\bot (e_{i-1})\sqcap DL_\bot (e'_i)\sqcap DL_\bot (e_{i+1}) \sqcap ... \sqcap DL_\bot (e_q)\). It is easy to see that \(w_c(M,E)=w_c(M',E)\), \(w_p(M,E)=w_p(M',E)\), and \(w_m(M,E)>w_m(M',E)\). So,

$$\begin{aligned} r(M,E)\le r(M',E) \end{aligned}$$

which means that either both resemblances are equal (if \(\beta =0\)) or the hypothesis of r(M, E) being the maximum resemblance is contradicted. Therefore, we can assume that \(\forall i=1..q: E_{DL_\top (e_i)}\ne \emptyset \).

- Step 2: All elements \(e_i\) in M are leaves of \(lcs(E_{DL_\top (e_i)})\) (i.e. \(lcs(E_{DL_\top (e_i)})\sqsubseteq DL_\bot (e_i)\), which is only possible if every \(e_i\) is a leaf of some document)

Let’s suppose not, i.e. there exists \(e_i\) such that the corresponding chain of existentials in \(lcs(E_{DL_\top (e_i)})\) is longer than \(DL_\bot (e_i)\) (notice that it can never be shorter, by construction of \(E_{DL_\top (e_i)}\) and definition of the LCS). This means that \(e_i\) is not a leaf of any document in E.

Let \(e_L=(t_1,t_2,\ldots ,t_{l_i},\ldots ,t_{l_i+k})\) be the element that results in the corresponding chain of existentials of \(lcs(E_{DL_\top (e_i)})\), and let \(M'=DL_\bot (e_1)\sqcap ...\sqcap DL_\bot (e_{i-1})\sqcap DL_\bot (e_L)\sqcap DL_\bot (e_{i+1}) \sqcap ... \sqcap DL_\bot (e_q)\). Therefore, since \(e_L\) must be present in all documents in \(E_{DL_\top (e_i)}\), we can see the following equalities:

$$\begin{aligned} w_c(M',E)&= w_c(M,E)+\mid E_{DL_\top (e_i)}\mid \cdot (s(DL_\bot (e_L))-s(DL_\bot (e_i))) \\ w_p(M',E)&= w_p(M,E)+\mid E_{DL_\top (e_i)}\mid \cdot (s(DL_\bot (e_i))-s(DL_\bot (e_L))) \\ w_m(M',E)&= w_m(M,E)+\mid E\setminus E_{DL_\top (e_i)}\mid \cdot (s(DL_\bot (e_L))-s(DL_\bot (e_i))) \end{aligned}$$

Notice that \(\forall d\in E\setminus E_{DL_\top (e_i)}: s(lcs(d,DL_\top (e_L)))=s(lcs(d,DL_\top (e_i)))\), because if there exists a document with an element \(e'\) such that \(DL_\top (e_L)\sqsubseteq DL_\top (e')\sqsubset DL_\top (e_i)\), then by definition it belongs to \(E_{DL_\top (e_i)}\).

By hypothesis, \(r(M,E)\ge r(M',E)\) (M maximizes resemblance). Thus, expanding both resemblances (“\(e_i\)” stands for “\(DL_\bot (e_i)\)”, and “\(e_L\)” stands for “\(DL_\bot (e_L)\)”),

$$\begin{aligned}&\frac{w_c(M,E)}{w_c(M,E)+\alpha w_p(M,E)+\beta w_m(M,E)} \\&\quad \ge \frac{w_c(M,E)+\mid E_{e_i}\mid \cdot (s(e_L)-s(e_i))}{(w_c(M,E)+\alpha w_p(M,E)+\beta w_m(M,E))+((1-\alpha )\cdot \mid E_{e_i}\mid +\beta \mid E\setminus E_{e_i}\mid )(s(e_L)-s(e_i))} \end{aligned}$$

Let \(e'_i=(t_1,t_2,\ldots ,t_{l_i-1})\). Since, as stated before, by hypothesis, \(e_i\) is not a leaf in any document, \(\forall d\in E\setminus E_{DL_\top (e_i)}: s(lcs(d,DL_\top (e'_i)))=s(lcs(d,DL_\top (e_i)))\). Thus, as before, we can define \(M''=DL_\bot (e_1)\sqcap ...\sqcap DL_\bot (e_{i-1})\sqcap DL_\bot (e'_i)\sqcap DL_\bot (e_{i+1})\sqcap ... \sqcap DL_\bot (e_q)\):

$$\begin{aligned} w_c(M'',E)&= w_c(M,E)+\mid E_{DL_\top (e_i)}\mid \cdot (s(DL_\bot (e'_i))-s(DL_\bot (e_i))) \\ w_p(M'',E)&= w_p(M,E)+\mid E_{DL_\top (e_i)}\mid \cdot (s(DL_\bot (e_i))-s(DL_\bot (e'_i))) \\ w_m(M'',E)&= w_m(M,E)+\mid E\setminus E_{DL_\top (e_i)}\mid \cdot (s(DL_\bot (e'_i))-s(DL_\bot (e_i))) \end{aligned}$$

By hypothesis, \(r(M,E)\ge r(M'',E)\) (M maximizes resemblance). Thus, expanding both resemblances (“\(e_i\)” stands for “\(DL_\bot (e_i)\)”, and “\(e'_i\)” stands for “\(DL_\bot (e'_i)\)”),

$$\begin{aligned}&\frac{w_c(M,E)}{w_c(M,E)+\alpha w_p(M,E)+\beta w_m(M,E)} \\&\quad \ge \frac{w_c(M,E)-\mid E_{e_i}\mid (s(e_i)-s(e'_i))}{(w_c(M,E)+\alpha w_p(M,E)+\beta w_m(M,E))-((1-\alpha )\cdot \mid E_{e_i}\mid +\beta \mid E\setminus E_{e_i}\mid )(s(e_i)-s(e'_i))} \end{aligned}$$

However, both inequalities (i.e. \(\frac{a}{b}\ge \frac{a+ck}{b+dk}\) and \(\frac{a}{b}\ge \frac{a-ck'}{b-dk'}\)) are not possible at the same time, because \(s(DL_\bot (e_L))-s(DL_\bot (e_i))\) and \(s(DL_\bot (e_i))-s(DL_\bot (e'_i))\) (which correspond to k and \(k'\), respectively) are both strictly positive numbers. Therefore, the hypothesis is not true and \(e_i\) must be a leaf of \(lcs(E_{DL_\top (e_i)})\).

- Step 3: All elements in \(lcs(E_{DL_\top (e_i)})\) are also elements of M (i.e. \(M\sqsubseteq lcs(E_{DL_\top (e_i)})\))

This means that \(e_i\) appears exactly in \(\mid E_{DL_\top (e_i)}\mid \) documents, and by definition of LCS, all other elements in \(lcs(E_{DL_\top (e_i)})\) appear at least in those documents. Therefore, by Lemma 1, all those elements also belong to M.

Therefore, since \(E_{DL_\top (e_i)}\) is never empty (as shown in step 1), and being \(M\sqsubseteq \sqcap lcs(E_{DL_\top (e_i)}) \sqsubseteq \sqcap DL_\bot (e_i)\) (as shown in steps 2 and 3), then by the equivalence of M (\(M\equiv \sqcap DL_\bot (e_i)\)) we get that \(M\equiv \sqcap lcs(E_{DL_\top (e_i)})\). \(\square \)

B.5 Proof of Lemma 2

Proof

Let’s suppose not (i.e. \(S_i\subseteq S_j\)). Then, by definition of LCS, we get that \(lcs(S_i)\sqsubseteq lcs(S_j)\).

1. If \(lcs(S_i)\equiv lcs(S_j)\) then we can remove one of them from the schema.

2. If \(lcs(S_i)\sqsubset lcs(S_j)\) then \(lcs(S_i)\sqcap lcs(S_j)\equiv lcs(S_i)\). Therefore, we can remove \(S_j\) from the schema.

\(\square \)

Cite this article

Abelló, A., de Palol, X. & Hacid, MS. Approximating the Schema of a Set of Documents by Means of Resemblance. J Data Semant 7, 87–105 (2018). https://doi.org/10.1007/s13740-018-0088-0

DOI: https://doi.org/10.1007/s13740-018-0088-0