Abstract

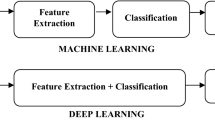

The cascade forward neural network (CFNN) is a well-known static neural network in which signals propagate only in the forward direction. Dynamic neural networks, such as the Elman neural network (ENN), are built so that signals can travel in both directions. Dynamic neural networks have been widely used in applications such as speech recognition and time-series applications, but they are rarely used in static applications because of their poor performance there. This paper proposes hybridizing the CFNN with the ENN to combine the advantages of both networks while allowing signals to travel in both directions. The proposed system, named HECFNN, is evaluated on a number of benchmark datasets: Wine, Ionosphere, Iris, Wisconsin breast cancer, Glass and Pima Indians diabetes. First, the performance of the hybrid system is compared with that of the CFNN and ENN. The simulations demonstrate that the proposed hybrid network structure can effectively model both linear and nonlinear static systems with high accuracy, and that it achieves higher accuracy than the CFNN and ENN. Second, the results are compared with those of the different methods reported by Hoang; the accuracy of the proposed system is found to be as good as, if not better than, that of the other methods. Third, the HECFNN results are compared with the best results reported for different methods in the literature, and are found to be better than those methods in general. Based on these results, the proposed HECFNN system demonstrates better performance, justifying its potential as a useful and effective system for prediction and classification.
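
The abstract does not spell out the HECFNN equations, so the following is only a minimal sketch of one plausible reading of the hybrid, written in plain Python with no external libraries. It assumes a single hidden layer whose units receive the input plus an Elman-style context vector (a copy of the previous hidden activations), and an output layer that receives both the hidden activations and the raw input, which is the direct input-to-output link that characterizes a cascade-forward network. The class name `HybridElmanCascadeNet`, the tanh hidden and linear output activations, the weight initialization and all parameter names are hypothetical choices for illustration, not the authors' implementation; training is omitted.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply a weight matrix (one row per output unit) by a vector."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def rand_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Small uniform random weights (an arbitrary, hypothetical initialization)."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class HybridElmanCascadeNet:
    """Hypothetical HECFNN-style forward pass; a sketch, not the paper's exact model."""

    def __init__(self, n_in: int, n_hidden: int, n_out: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.w_xh = rand_matrix(n_hidden, n_in, rng)      # input   -> hidden
        self.w_ch = rand_matrix(n_hidden, n_hidden, rng)  # context -> hidden (Elman feedback)
        self.w_ho = rand_matrix(n_out, n_hidden, rng)     # hidden  -> output
        self.w_xo = rand_matrix(n_out, n_in, rng)         # input   -> output (cascade shortcut)
        self.b_h = [0.0] * n_hidden
        self.b_o = [0.0] * n_out
        self.context = [0.0] * n_hidden                   # Elman context units

    def reset(self) -> None:
        """Clear the context units, e.g. before presenting a new sequence."""
        self.context = [0.0] * len(self.context)

    def step(self, x: Vector) -> Vector:
        # Hidden layer: tanh of the input drive plus the recurrent drive from the context.
        drive_x = matvec(self.w_xh, x)
        drive_c = matvec(self.w_ch, self.context)
        h = [math.tanh(a + b + c) for a, b, c in zip(drive_x, drive_c, self.b_h)]
        # Output layer (linear): hidden contribution plus the direct input-to-output link.
        y = [a + b + c for a, b, c in zip(matvec(self.w_ho, h), matvec(self.w_xo, x), self.b_o)]
        # Elman feedback: the context stores this step's hidden activations.
        self.context = h
        return y


if __name__ == "__main__":
    net = HybridElmanCascadeNet(n_in=4, n_hidden=6, n_out=3)
    y = net.step([5.1, 3.5, 1.4, 0.2])  # four Iris-style features
    print("outputs:", y, "predicted class:", max(range(len(y)), key=y.__getitem__))
```

In this sketch, zeroing `w_ch` removes the feedback and leaves a plain cascade-forward network, while zeroing `w_xo` removes the shortcut and leaves a plain Elman network; that is the sense in which it combines the two. For classification, the predicted class is taken here as the index of the largest output.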

References

McCulloch, W.S.; Pitts, W.: A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys. 5(4), 115–133 (1943)

Wong, K.-P.: Artificial intelligence and neural network applications in power systems. In: 2nd International Conference on Advances in Power System Control, Operation and Management (APSCOM-93) (1993)

Datasets used for classification: Comparison of results. Department of Informatics, Nicolaus Copernicus University (2008). http://www.fizyka.umk.pl/kmk/projects/datasets.html

Hoang, A.: Supervised classifier performance on the UCI database. Master's thesis, Department of Computer Science, University of Adelaide, Adelaide (1997)

Eklund, P.; Hoang, A.: A comparative study of public domain supervised classifier performance on the UCI database. Research Online (2006)

Eklund, P.; Hoang, A.: A performance survey of public domain machine learning algorithms. Technical Report, School of Information Technology, Griffith University (2002)

Haykin, S.: Neural Networks: A Comprehensive Foundation. Macmillan, Basingstoke (1994)

Kim, D.-S.; Lee, S.-Y.: Intelligent judge neural network for speech recognition. Neural Process. Lett. 1(1), 17–20 (1994)

Liu, Y.; et al.: Speech recognition using dynamic time warping with neural network trained templates. In: International Joint Conference on Neural Networks (IJCNN) (1992)

Elman, J.L.: Finding structure in time. Cognit. Sci. 14(2), 179–211 (1990)

Demuth, H.; Beale, M.H.; Hagan, M.T.: Neural Network Toolbox User’s Guide. The MathWorks, Inc., Natick (2009)

Abdul-Kadir, N.A.; et al.: Applications of cascade-forward neural networks for nasal, lateral and trill Arabic phonemes. In: 2012 8th International Conference on Information Science and Digital Content Technology (ICIDT) (2012)

Lashkarbolooki, M.; Shafipour, Z.S.: Trainable cascade-forward back-propagation network modeling of spearmint oil extraction in a packed bed using SC-CO2. J. Supercrit. Fluids 73, 108–115 (2013)

Khatib, T.; Mohamed, A.; Sopian, K.; Mahmoud, M.: Assessment of artificial neural networks for hourly solar radiation prediction. Int. J. Photoenergy 2012, Article ID 946890, 7 pp. (2012). doi:10.1155/2012/946890

Goyal, S.; Goyal, G.K.: Cascade and feedforward backpropagation artificial neural networks models for prediction of sensory quality of instant coffee flavoured sterilized drink. Can. J. Artif. Intell. Mach. Learn. Pattern Recognit. 2(6), 78–82 (2011)

Al-Allaf, O.N.A.; Tamimi, A.A.; Mohammad, M.A.: Face recognition system based on different artificial neural networks models and training algorithms. Int. J. Adv. Comput. Sci. Appl. (IJACSA) 4(6), 40–47 (2013)

Al-Allaf, O.N.A.: Cascade-forward vs. function fitting neural network for improving image quality and learning time in image compression system. In: Proceedings of the World Congress on Engineering, vol. 2 (2012)

Geiger, H.: Storing and processing information in connectionist systems. In: Eckmiller, R. (ed.) Advanced Neural Computers, pp. 271–277. North-Holland, Amsterdam (1990)

Poggio, T.; Girosi, F.: Networks for approximation and learning. Proc. IEEE 78(9), 1481–1496 (1990)

Mashor, M.Y.: Hybrid multilayered perceptron networks. Int. J. Syst. Sci. 31(6), 771–785 (2000)

Newman, D.J.; Hettich, S.; Blake, C.L.; Merz, C.J.; Aha, D.W.: UCI Repository of Machine Learning Databases. Department of Information and Computer Science, University of California, Irvine (1998). http://archive.ics.uci.edu/ml/datasets.html

Gao, X.Z.; Gao, X.M.; Ovaska, S.J.: A modified Elman neural network model with application to dynamical systems identification. In: IEEE International Conference on Systems, Man, and Cybernetics (1996)

Cybenko, G.: Approximation by superpositions of a sigmoidal function. Math. Control Signals Syst. 2, 303–314 (1989)

Patuwo, E.; Hu, M.Y.; Hung, M.S.: Two-group classification using neural networks. Decis. Sci. 24(4), 825–845 (1993)

Liu, C.; Jiang, D.; Zhao, M.: Application of RBF and Elman neural networks on condition prediction in CBM. In: Wang, H.; et al. (eds.) The 6th International Symposium on Neural Networks (ISNN 2009), pp. 847–855. Springer, Berlin (2009)

Wang, L.; et al.: An improved OIF Elman neural network and its applications to stock market. In: Gabrys, B.; Howlett, R.; Jain, L. (eds.) Knowledge-Based Intelligent Information and Engineering Systems, pp. 21–28. Springer, Berlin (2006)

Baum, E.B.; Haussler, D.: What size net gives valid generalization? Neural Comput. 1(1), 151–160 (1989)

Isa, N.A.M.; et al.: Suitable features selection for the HMLP and MLP networks to identify the shape of aggregate. Constr. Build. Mater. 22(3), 402–410 (2008)

Al-Batah, M.S.; et al.: Modified recursive least squares algorithm to train the hybrid multilayered perceptron (HMLP) network. Appl. Soft Comput. 10(1), 236–244 (2010)

Mat-Isa, N.A.; Mashor, M.Y.; Othman, N.H.: An automated cervical pre-cancerous diagnostic system. Artif. Intell. Med. 42(1), 1–11 (2008)

Al-Batah, M.S.; et al.: A novel aggregate classification technique using moment invariants and cascaded multilayered perceptron network. Int. J. Miner. Process. 92(1–2), 92–102 (2009)

Aeberhard, S.; Coomans, D.; de Vel, O.: Comparison of classifiers in high dimensional settings. Technical Report No. 92-02, Department of Computer Science and Department of Mathematics and Statistics, James Cook University of North Queensland (1992)

Duch, W.; Adamczak, R.; Grabczewski, K.: A new methodology of extraction, optimization and application of crisp and fuzzy logical rules. IEEE Trans. Neural Networks 12, 277–306 (2001)

Domeniconi, C.; Peng, J.; Gunopulos, D.: An adaptive metric machine for pattern classification. In: Leen, T.K.; Dietterich, T.G.; Tresp, V. (eds.) Advances in Neural Information Processing Systems, vol. 13, pp. 458–464. MIT Press (2001)

Michie, D.; Spiegelhalter, D.J.; Taylor, C.C. (eds.): Machine Learning, Neural and Statistical Classification. Ellis Horwood, London (1994)

Mohammed, M.; Lim, C.; Quteishat, A.: A novel trust measurement method based on certified belief in strength for a multi-agent classifier system. Neural Comput. Appl. 24, 421–429 (2014)

Cite this article

Alkhasawneh, M.S., Tay, L.T. A Hybrid Intelligent System Integrating the Cascade Forward Neural Network with Elman Neural Network. Arab J Sci Eng 43, 6737–6749 (2018). https://doi.org/10.1007/s13369-017-2833-3

DOI: https://doi.org/10.1007/s13369-017-2833-3