Abstract

Whole genome prediction models are useful tools for breeders when selecting candidate individuals early in life for rapid genetic gains. However, most prediction models developed so far assume that the response variable is continuous and that its empirical distribution can be approximated by a Gaussian model. A few models have been developed for ordered categorical phenotypes, but there is a lack of genomic prediction models for count data. There are well-established regression models for count data that cannot be used for genomic-enabled prediction because they were developed for a large sample size (n) and a small number of parameters (p); however, the rule in genomic-enabled prediction is that p is much larger than the sample size n. Here we propose a Bayesian mixed negative binomial (BMNB) regression model for counts, and we present the conditional distributions necessary to efficiently implement a Gibbs sampler. The proposed Bayesian inference can be implemented routinely. We evaluated the proposed BMNB model together with a Poisson model, a Normal model with untransformed response, and a Normal model with transformed response using a logarithm, and applied them to two real wheat datasets from the International Maize and Wheat Improvement Center. Based on the criteria used for assessing genomic prediction accuracy, results indicated that the BMNB model is a viable alternative for analyzing count data.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Of all the computationally intensive methods for fitting complex multilevel models, the Gibbs sampler is most popular. Its popularity is due to its simplicity and its ability to effectively generate samples from a high-dimensional probability distribution (Park and van Dyk 2009). Despite these two advantages, we know of no efficient, closed-form Gibbs sampler for count data that is available for performing Poisson and negative binomial regression analyses. The Gibbs sampler proposed by Albert and Chib (1993) is built on a data augmentation approach and is one of the most widely used samplers for Bayesian probit regression. Recently, an analogous Gibbs sampler for Bayesian logistic regression was introduced by Polson et al. (2013). Their method differs from that of Albert and Chib (1993) in that they used Pólya–Gamma random variables instead of truncated normal random variables. Also, Polson et al. (2013) point out that their method can be applied to specific likelihoods related to the logistic function where it is possible to augment the joint density with auxiliary variables following a Pólya–Gamma distribution; this leads to a closed-form Gibbs sampler for binary and over-dispersed counts. However, Polson et al. (2013) focus most of their paper on binary outcomes. For this reason, in this paper, we provide a derivation of the closed-form Gibbs sampler for implementing a Bayesian mixed negative binomial (BMNB) regression model for counts applied in genomic selection.

Predicting yet-to-be observed phenotypes or unobserved genetic values for complex traits and inferring the underlying genetic architecture utilizing genomic data are interesting and fast developing areas in the context of plant and animal breeding, and even in human diseases (Goddard and Hayes 2009; de los Campos et al. 2010, 2013a; Riedelsheimer et al. 2012; Zhang et al. 2014). Rapid genetic progress requires that such predictions are accurate and can be produced early in life. For these reasons, the use of whole genome prediction models continues to increase.

In genomic prediction, all markers are simultaneously included in the model used for prediction. Real data analysis and simulation studies promote the use of this methodology for increasing genetic progress in less time. For continuous phenotypes, models have been developed to regress phenotypes on all available markers using a linear model (Zhang et al. 2014; de los Campos et al. 2013b). However, in plant breeding, the response variable in many traits is a count \((y = 0,1,2,{\ldots })\), for example, panicle number per plant, seed number per panicle, weed count per plot, number of infected spikelets per spike, etc. Statistical models used to analyze continuously distributed traits are not always optimal for analyzing categorical responses such as counts that are discrete, non-negative, integer-valued, and typically have right-skewed distributions. In classic probability theory, Poisson regression and negative binomial (NB) regression are often used to deal with count data. However, NB regression is preferred when the variance of the counts is larger than the mean, because the Poisson regression assumes that, conditional on any fixed values of the explanatory variables, the response mean and variance are equal. These models are different from an ordinary linear regression model. First, they do not assume that counts follow a normal distribution. Second, rather than modeling y as a linear function of the regression coefficients, they model a function of the response mean as a linear function of the coefficients. Regression models for counts are usually nonlinear and have to take into consideration the specific properties of counts, including discreteness and non-negativity, and are often characterized by overdispersion (variance greater than the mean).

Despite the special characteristics of discrete response data, it is still common practice, in the context of genomic selection, to apply linear regression models to such data or transformed data (Montesinos-López et al. 2015). In genomic prediction, Kizilkaya et al. (2014) studied the reduction in model prediction accuracy for ordinal categorical traits relative to continuous traits. For smaller counts, data analysts are often advised to use logarithmic or square root transformations. There is mounting evidence that transformations do more harm than good for the models required by the vast majority of contemporary plant and soil science researchers (Stroup 2015). In this paper, we propose a NB regression model for counts using a data augmentation approach. We build on the fact that the gamma distribution is the conjugate prior of the NB parameter r, and that the NB random variable can be generated under a compound Poisson representation, which produces an efficient Gibbs sampling.

The article is organized as follows. In Sect. 2, we present the two datasets (Sect. 2.1) and the various models used (Sects. 2.2–2.5). The NB distribution is presented in Sect. 2.2, and the models applied to the two datasets are described in Sect. 2.3. Section 2.4 gives the prior distributions. Section 2.5 provides the full conditional distributions of the proposed models; details of model implementation are given in Sect. 2.6. In Sect. 2.7, the different criteria for assessing prediction accuracy are described. In Sect. 3, we illustrate the various methods with two real datasets and compare the proposed NB model with the Poisson and normal models. We discuss the results in Sect. 4 and finalize the study with the conclusions in Sect. 5.

2 Materials and Methods

2.1 Phenotype and Genotype Data

The data used in this study are from the Global Wheat Program of the International Maize and Wheat Improvement Center (CIMMYT) and comprise the 46th (C46) and 47th (C47) International Bread Wheat Screening Nurseries (IBWSN) that were distributed worldwide in 2011 and 2012. The 297 lines from nursery C46 and the 425 lines from nursery C47 were evaluated for Fusarium Head Blight (FHB) resistance at El Batan Experiment Station, located at CIMMYT Headquarters near Texcoco (state of México, México). Ten spikes from each wheat line in the nurseries were tagged at anthesis using red sticky tape in the morning, followed by spray inoculation in the afternoon. The number of infected spikelets per spike was counted on each of the 10 tagged spikes; these numbers constitute the FHB count data.

Genotypes of the C46 and C47 lines were obtained with 45,000 Genotyping-By-Sequencing (GBS) markers following the protocol of Poland et al. (2012) where the absence or presence of marker genotypes of the wheat lines is represented by 0 and 1, respectively. We kept 13,913 and 13,120 GBS for C46 and C47 nurseries, respectively, that had \(<\)50 % missing data; after deleting monomorphic markers with minor allele frequency (MAF) of \(\le \) 0.05, we ended up with a total of 11,218 and 11,510 GBS for the lines in the C46 and C47 nurseries, respectively. The remaining missing markers were imputed using the multivariate normal expectation maximization (EM) algorithm described in Poland et al. (2012).

2.2 Negative Binomial Distribution

Given that the NB distribution can arise in different ways, next we present its Gamma-Poisson representation. Let \(Y|\mu \sim Pois\left( \mu \right) \) and \(\mu |r,\pi \sim G\left( {r,\frac{\pi }{1-\pi }} \right) \), where \(Pois\left( \mu \right) \) is the Poisson distribution with mean and variance \(\mu ,\) and \(G\left( {r,\frac{\pi }{1-\pi }} \right) \) is the distribution of a gamma random variable with shape parameter r and scale \(\pi /\left( {1-\pi } \right) \), with \(\pi \in \left( {0,1} \right) \). It can be shown that the marginal distribution of Y has a probability mass function

where \({\varGamma } ( \cdot )\) denotes the gamma function. The resulting probability mass function in (2.1) corresponds to the NB distribution with parameters r and \(\pi \), which from here on will be denoted as \({ NB}( {r,\pi } )\). Therefore, the NB distribution is also known as the Gamma-Poisson distribution. The mean of this distribution \(E( Y )=\mu =\frac{r\pi }{\left( {1-\pi } \right) }\) is smaller than variance \(Var\left( Y \right) =\frac{r\pi }{\left( {1-\pi } \right) ^{2}}=\mu +\frac{\mu ^{2}}{r}\) with the variance-to-mean ratio denoted as \(\left( {1-\pi } \right) ^{-1}\) and the overdispersion level as \(r^{-1}\). In terms of the mean parameter \(\mu \), we will use an alternative notation NB\((\mu ,r)\). The NB distribution can also be generated using a Poisson representation (Quenouille 1949) as \(Y=\sum \nolimits _{l=1}^L u_l ,\hbox {where}\,u_l \sim Log\left( \pi \right) \) and is independent of \(L\sim Pois(-r\log \left( {1-\pi } \right) ),\) where Log and Pois denote logarithmic and Poisson distributions, respectively. The distribution in (2.1) is also valid for any positive real value r.

2.3 Models for the Data

Except where otherwise noted, we use \(i=1,\,\ldots ,n\) to index n lines, \(j=1,2,\ldots ,m_i \) to index \(m_i\) spikes for the \(i\hbox {th}\) line, and \(k=1,2,\ldots ,p\) to index p markers. We use \(y_{ij} \) to represent the number of infected spikelets for the \(j\hbox {th}\) spike of the \(i\hbox {th}\) line, and \(x_{ik}\) to represent the genotype of the \(i\hbox {th}\) line at the kth single-nucleotide polymorphism (SNP) marker. (For a given marker, the genotype for the \(i\hbox {th}\) line is coded as the number of copies of a designated marker-specific allele carried by the \(i\hbox {th}\) line). Each of the data models we consider involves the linear predictor

where \(\beta _0 \) is the intercept parameter, \({\varvec{x}}_i^\mathrm{T} =\left[ {x_{i1} ,\ldots x_{ip} } \right] \) is the marker genotype information for the \(i\hbox {th}\) line, \({\varvec{\beta }} ^\mathrm{T}=\left[ {\beta _{1,} \ldots ,\beta _{p,} } \right] \) is a vector of fixed allele substitution effects, and \(u_i\) is a random effect for the ith line that represents the genetic value of line i not captured by genotypes at the p markers. We propose the following four models for analyzing the wheat dataset described in Sect. 2.1.

Model NB: \(y_{i1} ,\ldots ,y_{im_i } \left| {\eta _i } \right. \mathop \sim \limits ^{i.i.d}\hbox {NB}(\mu _i ,r)\), with r being the overdispersion parameter, \(\mu _i =\exp \left( {\eta _i } \right) .\)

Model Pois: Similar to Model NB, except that \(y_{i1} ,\ldots ,y_{im_i } \left| {\eta _i } \right. \mathop \sim \limits ^{i.i.d} \hbox {Pois}(\mu _i )\).

Model Normal: Similar to Model NB, except that \(y_{i1} ,\ldots ,y_{im_i } \left| {\eta _i } \right. \mathop \sim \limits ^{i.i.d} N(\eta _i ,\sigma _e^2 )\) with identity link function.

Model Log-normal: Similar to Model NB, except that \(\log \big ( {y_{i1} +1} \big ),\ldots ,\log \big ( {y_{im_i } +1} \big )\left| {\eta _i } \right. \sim N\, (\eta _i ,\sigma _e^2 )\) with identity link function.

Note that in genomic-enabled prediction, Model Normal without random effects is called Bayesian Ridge Regression (BRR) when the sparseness is included in the model by specifying a \({\varvec{\beta }} \sim N_p \left( {\mathbf{0},{\mathbf {I}}\sigma _\beta ^2 } \right) \) prior for the parameter \({\varvec{\beta }}.\) Also, Model Normal without the \({\mathbf {x}}_i^\mathrm{T} {\varvec{\beta }} \) term is called the Genomic Best Linear Unbiased Predictor (GBLUP) model (de los Campos et al., 2013b) and \({\mathbf {u}}\) denotes the additive genetic values of lines. Under the Bayesian GBLUP, the prior density of the genetic values \({\mathbf {u}}=\left( {u_1 ,\ldots ,u_n } \right) ^\mathrm{T}\sim N\left( {\mathbf{0},\,{\mathbf {G}}\sigma _u^2} \right) \) is a conjugate multivariate normal \(N\left( {\mathbf{0},\,{\mathbf {G}}\sigma _u^2 } \right) \), where the matrix \({\mathbf {G}}\) is estimated from marker data \({\mathbf {X}}\) (for \(k=1,2,\ldots ,p\) markers) as \({\mathbf {G}}=\frac{{\mathbf {XX}}^{{\mathbf {T}}}}{p}\) (VanRaden 2007, 2008) and called the genomic relationship matrix (GRM). In the next section, we describe the implementation of Bayesian mixed negative binomial (BMNB) regression.

2.4 Prior Distributions

Considering Model NB, note that conditionally on \(u_i \), the probability that the random variable \(Y_{ij} \) takes the value \(y_{ij} \) can be expressed as

since \(\pi _{ij} =\frac{\mu _i }{r+\mu _i }=\frac{\hbox {exp}\left( {\eta _i^*} \right) }{1+\hbox {exp}\left( {\eta _i^*} \right) }\), where \(\eta _i^*=\beta _0^*+{\mathbf {x}}_i^\mathrm{T} \varvec{\beta } +u_i,\) with \(\beta _0^*=\beta _0 -\hbox {log}\left( r \right) \). Then

which was obtained using the equality given by Scott and Pillow (2013): \(\frac{\left( {e^{\psi }} \right) ^{a}}{\left( {1+e^{\psi }} \right) ^{b}}=2^{-b}e^{\kappa \psi }\mathop \smallint \nolimits _0^\infty e^{-\frac{\omega \psi ^{2}}{2}}P\left( {\omega ;b,0} \right) d\omega \), where \(\kappa =a-b/2\) and \(P\left( {.;b,0} \right) \) is the density of \(PG\left( {b,c=0} \right) \), the Pólya–Gamma distribution with parameters b and \(c=0\) (see Definition 1 in Polson et al. 2013).

From here, conditional on \(\omega _{ij} \sim PG\left( {y_{ij} +r,0} \right) \),

To complete the Bayesian specification, here we provide the prior distributions, \(f\left( {\varvec{\theta }} \right) ,\) for all the unknown model parameters \(\beta _0^*,\, {\varvec{\beta }},\, \sigma _\beta ^2\), \({\mathbf {u}}\), \(\sigma _u^2\), and r. We assume prior independence between the parameters, that is,

The prior specification in terms of \(\beta _0^*\) instead of \(\beta _0 \) is for convenience, as we shall see in what follows. Since we have no prior information, we assign conditionally conjugate but weakly informative prior distributions to the parameters. Also, to guarantee proper posteriors, we adopt proper priors with known hyper-parameters whose values we specify in Sect. 2.6. We assume that \(\beta _0^*\sim N\left( {{\beta } _f ,{\sigma } _0^2 } \right) ,\, {\varvec{\beta }} \sim N_p \left( {{\varvec{\beta }} _v ,\mathbf {\Sigma }_{v}\sigma _\beta ^2 } \right) , \,\sigma _\beta ^2 \sim IG\left( {a_\beta ,b_\beta } \right) ,\) where \(IG\left( {a_\beta ,b_\beta } \right) \) denote the inverse gamma distribution with shape \(a_\beta \) and scale \(b_\beta \) parameters, \({\mathbf {u}}\sim N_n \left( {\mathbf{0},{\mathbf {G}}\sigma _u^2 } \right) \), \(\sigma _u^2 \sim IG\left( {a_u ,b_u } \right) \), and \(r\sim G\left( {a_0 ,1/b_0 } \right) \). Next we combine (2.4) using all data with priors to get the full conditional distribution for the parameters \(\beta _0^*,\, {\varvec{\beta }} ,\, \sigma _\beta ^2,\, {\mathbf {u}},\,\sigma _u^2\), and r.

2.5 Full Conditional Distributions

From (2.4) and the prior specification given in the previous section, the full conditional for \(\beta _0^*\) is

where \(\tilde{\sigma }_0^2 =\left( {\sigma _0^{-2} +\mathbf{1}_m^\mathrm{T} {\mathbf {D}}_{\varvec{\omega }} \mathbf{1}_m } \right) ^{-1},\, \tilde{\beta }_0 =\tilde{\sigma }_0^2 \left( {\sigma _0^{-2} \beta _f -\mathbf{1}_m^\mathrm{T} {\mathbf {D}}_{\varvec{\omega }} {\mathbf {X}}{\varvec{\beta }} -\mathbf{1}_m^\mathrm{T} {\mathbf {D}}_{\varvec{\omega }} {\mathbf {Zu}}+\mathbf{1}_m^\mathrm{T} {\varvec{\kappa }} } \right) \), \({\varvec{\kappa }} =\left[ {{\varvec{\kappa }} _1^\mathrm{T} ,\ldots ,{\varvec{\kappa }}_n^\mathrm{T} } \right] ^\mathrm{T},\,{\varvec{\kappa }}_i =\frac{1}{2}\left[ {y_{i1} -r,\ldots ,y_{im_i } -r} \right] ^\mathrm{T}\), \({\mathbf {X}}=\left[ {{\mathbf {X}}_1^\mathrm{T} ,\ldots ,\,{\mathbf {X}}_n^\mathrm{T} } \right] ^\mathrm{T},{\mathbf {X}}_i =\left[ {\mathbf{1}_{m_i }^\mathrm{T} \otimes {\mathbf {x}}_i } \right] ^\mathrm{T}, \quad {\mathbf {D}}_{\varvec{\omega }} =\hbox {diag}\left( {{\mathbf {D}}_{\omega 1} ,\,\ldots ,\,{\mathbf {D}}_{\omega n} } \right) , \quad {\mathbf {D}}_{\omega i} =\hbox {diag}\left( {\omega _{i1} ,\,\ldots ,\,\omega _{im_i } } \right) ,\mathbf{1}_m =\left[ {\mathbf{1}_{m_1 }^\mathrm{T} ,\ldots ,\,\mathbf{1}_{m_n }^\mathrm{T} } \right] ^\mathrm{T},\,{\mathbf {u}}=\left[ {u_1 ,\ldots ,\,u_n } \right] ^\mathrm{T},\, {\mathbf {Z}}=\left[ {{\begin{array}{lll} {{\begin{array}{ll} {\mathbf{1}_{m_1 } }&{}\mathbf{0} \\ \mathbf{0}&{}{\mathbf{1}_{m_2 } } \\ \end{array} }}&{}{{\begin{array}{l} \cdots \\ \cdots \\ \end{array} }}&{}{{\begin{array}{l} \mathbf{0} \\ \mathbf{0} \\ \end{array} }} \\ {{\begin{array}{ll} \vdots &{} \vdots \\ \end{array} }}&{} \ddots &{} \vdots \\ {{\begin{array}{ll} \mathbf{0}&{} \mathbf{0} \\ \end{array} }}&{}\quad \cdots &{}\quad {\mathbf{1}_{m_n } } \\ \end{array} }} \right] \) and \(\mathbf{1}_{m_i } =\left[ {1,\,\ldots ,\,1} \right] ^\mathrm{T}\) is a vector of dimension \(m_i \) with entries equal to 1. If we use a prior for \(\beta _0^*\propto \hbox {constant}\) (improper uniform prior), then in \(\tilde{\sigma } _0^2 \) and \(\tilde{\beta }_0 \), we set the term \(\sigma _0^{-2} \) to zero (further details in Appendix 1).

The full conditional distribution of \(\omega _{ij} \) is

The full conditional distribution for \({\varvec{\beta }}\) is as follows:

where \(\tilde{{\varvec{\Sigma }}}_v =\left( {{\varvec{\Sigma }} _v^{-1} \sigma _\beta ^{-2} +{\mathbf {X}}^\mathrm{T}{\mathbf {D}}_{\varvec{\omega }} {\mathbf {X}}} \right) ^{-1},\, \tilde{{\varvec{\beta }}}_v =\tilde{{\varvec{\Sigma }}}_v \Big ( {{\varvec{\Sigma }}_v^{-1} \sigma _\beta ^{-2} {\varvec{\beta }} _v -{\mathbf {X}}^\mathrm{T}{\mathbf {D}}_{\varvec{\omega }} \mathbf{1}_m \beta _0^*-{\mathbf {X}}^\mathrm{T}{\mathbf {D}}_{\varvec{\omega }} {\mathbf {Zu}}}{+{\mathbf {X}}^\mathrm{T}{\varvec{\kappa }}} \Big )\). Also, if here we use a prior for \({\varvec{\beta }} \propto \hbox {constant}\) (improper uniform prior), then in \(\tilde{{\varvec{\Sigma }}}_v\) and \(\tilde{{\varvec{\beta }}}_v \), we set the term \({\varvec{\Sigma }}_v^{-1} \sigma _\beta ^{-2} \) to zero.

The full conditional distribution for \(\sigma _\beta ^2 \) is

The full conditional distribution for \({\mathbf {u}}\) is

where \({\mathbf {F}}=\left( {\sigma _u^{-2} {\mathbf {G}}^{-1}+{\mathbf {Z}}^\mathrm{T}{\mathbf {D}}_\omega {\mathbf {Z}}} \right) ^{-1}\) and \(\tilde{{\mathbf {u}}}={\mathbf {F}}\left( {{\mathbf {Z}}^\mathrm{T}{\mathbf {k}}-{\mathbf {Z}}^\mathrm{T}{\mathbf {D}}_\omega \mathbf{1}_m \beta _0^*-{\mathbf {Z}}^\mathrm{T}{\mathbf {D}}_\omega {\mathbf {X}}{\varvec{\beta }} } \right) .\)

The conditional distribution of \(\sigma _u^2 \) is

The full conditional for r is not known, and the development of a Metropolis-Hasting step for this implies evaluating the density of a Pólya–Gamma distribution. This evaluation cannot be done directly because an alternating infinite series is involved. Therefore, after obtaining a sample of \(\beta _0^*,\,{\varvec{\beta }} ,\sigma _\beta ^2 \,\), and \(\sigma _u^2 \) given r, we can adopt the strategy of Zhou et al. (2012) to obtain a sample of the full conditional of r by alternating

where \(CRT\left( {y_{ij} ,r} \right) \) denote the Chinese restaurant table (CRT) count random variable and can be generated as \(L_{ij} =\Sigma _{l=1}^{y_{ij} } d_l ,\) where \(d_l\mathop {\sim }\limits ^{iid} Bernoulli\left( {\frac{r}{l-1+r}} \right) \) (Zhou and Carin 2015). Further details on the full conditional distributions for these parameters are given in Appendix 1.

In summary, samples of the joint posterior distribution of all the parameters involved can be obtained by sampling repeatedly from the following loop:

-

1.

Sample \(\omega _{ij} \) values from the Pólya-Gamma distribution in (2.6).

-

2.

Sample \(\beta _0^*\) from the normal distribution in (2.5).

-

3.

Sample \({\varvec{\beta }}\) from the normal distribution in (2.7).

-

4.

Sample the variance effect \((\sigma _\beta ^2)\) from the inverse gamma distribution in (2.8).

-

5.

Sample \({\mathbf {u}}\) from the normal distribution in (2.9).

-

6.

Sample the variance effect \((\sigma _u^2)\) from the inverse gamma distribution in (2.10).

-

7.

Sample the parameter (r) from the gamma distribution in (2.11) and (2.12).

Return to step 1 or terminate if chain length is adequate to meet convergence diagnostics.

The Gibbs sampler proposed above without random effects \(({\mathbf {u}})\) and assuming a normal distribution \(N_p (\mathbf{0},{\mathbf {I}}_p \sigma _\beta ^2 )\) as prior of the parameter \({\varvec{\beta }}\) produces the following conditional posterior expectation

which is analogous to the BRR with normal phenotype but with pseudo response \({\mathbf {y}}^{*}={\varvec{\kappa }} -{\mathbf {D}}_{\varvec{\omega }} \mathbf{1}_m \beta _0^*\). This can be viewed as Bayesian ridge regression for counts (count BRR). Implementation of the count BRR model is straightforward using the Gibbs sampler proposed above but ignoring steps 5 and 6. On the other hand, ignoring the term \({\mathbf {x}}_i^\mathrm{T} {\varvec{\beta }} \, ({\mathbf {X}}{\varvec{\beta }})\) in the linear predictor of (2.2) and defining \(u_i ={\mathbf {x}}_i^\mathrm{T} {\varvec{\beta }} \) gives the GBLUP count, since the posterior expectation of the additive genetic values is equal to \({\mathbf {E}}\left( {{\mathbf {u}}|{\mathbf {y}},{\varvec{\omega }} } \right) =\left( {\sigma _u^{-2} {\mathbf {G}}^{-1}+{\mathbf {Z}}^\mathrm{T}{\mathbf {D}}_\omega {\mathbf {Z}}} \right) ^{-1}{\mathbf {Z}}^\mathrm{T}\left( {{\mathbf {k}}-{\mathbf {D}}_\omega \mathbf{1}_m \beta _0^*} \right) \). The GBLUP count with the proposed Gibbs sampler can also be implemented by ignoring steps 3 and 4 above. Both models (BRR count and GBLUP count) are expected to produce the same results, since they are different parameterizations of the same model (de los Campos et al. 2010).

Since \(\mathop {\lim }\nolimits _{r\rightarrow \infty } NB\left( {r,\frac{\mu }{\mu +r}} \right) =\mathrm{Pois}\left( \mu \right) ,\) Model Pois was implemented with the above method fixing r to a large value depending on the mean count. We used \(r=1000,\) which is a good choice when the mean count is less than 100. Also, Model Normal and Model log-normal were implemented under a Bayesian framework following Kärkkäinen and Sillanpää (2012).

2.6 Model Implementation

The Gibbs sampler described above for the BMNB model (Model NB) was implemented in the R-software (R Core Team 2015). Implementation was done under a Bayesian approach using Markov Chain Monte Carlo (MCMC) through the Gibbs sampler algorithm, which samples sequentially from the full conditional distributions until it reaches a stationary process, converging to the joint posterior distribution (Gelfand and Smith 1990). To decrease the potential impact of MCMC errors on prediction accuracy, we performed a total of 60,000 iterations with a burn-in of 30,000, so that 30,000 samples were used for inference. We did not apply thinning of the chains following the suggestions of Geyer (1992), MacEachern and Berliner (1994), and Link and Eaton (2012), who provide justification of the ban on subsampling MCMC output for approximating simple features of the target distribution (e.g., means, variances and percentiles) since thinning is neither necessary nor desirable, and unthinned chains are more precise.

We implemented the prior specification given in Sect. 2.4 with \(\beta _0^*\sim N\big ( {\beta _f =0,\sigma _0^2 =}{10000} \big )\), given \(\sigma _\beta ^2 \) we take \({\varvec{\beta }} \sim N_p \left( {{\varvec{\beta }} _v =\mathbf{0}_p^\mathrm{T} ,{\mathbf {I}}_p \sigma _\beta ^2 } \right) ,\,\sigma _\beta ^2 \sim \,IG\left( {a_\beta =0.01,b_\beta =0.01} \right) ,\) given \(\sigma _u^2 \) we take \({\mathbf {u}}\sim N_n \left( {\mathbf{0}_n^\mathrm{T} ,{\mathbf {G}}\sigma _u^2 } \right) ,\, {\mathbf {G}}\) is the GRM, that is, the covariance matrix of the random effects, \(\sigma _u^2 \sim IG\left( {a_u =0.01,b_u =0.01} \right) \) and \(r\sim G\left( {a_0 =0.01,1/(b_0 =0.01)} \right) .\) All these hyper-parameters were chosen to lead weakly informative priors. The convergence of the MCMC chains was monitored using trace plots and autocorrelation functions. Also, when considering a sensitivity analysis on the use of the inverse gamma priors for the variance components, we found that the results are fairly robust under different choices of prior.

2.7 Assessing Prediction Accuracy

We used cross-validation to estimate the prediction accuracy of the proposed models for count phenotypes. The dataset was divided 10 times into training and validation sets, with 90 % of the dataset used for training and 10 % for testing (since each line has 10 replications, we used 9 replicates for training and one replicate for testing). The training set was used to fit the model and the validation set was used to evaluate the prediction accuracy of the proposed models. Among the variety of methods for comparing the predictive posterior distribution to the observed data (generally termed “posterior predictive checks”), we used five criteria: the Deviance Information Criterion (DIC) (Spiegelhalter et al. 2002), the sum of the logged conditional predictive ordinate, also known as log-marginal pseudo-likelihood (LMPL) (Gelfand 1996), the Chi-Square statistic, \(\chi _\mathrm{cal}^2 \), (Gelman et al. 2004), the L criterion (L) (Laud and Ibrahim 1995), and the Pearson correlation (Corr). Models with small DIC, \(\chi _\mathrm{cal}^2 \), and L indicate better fitting, and a higher LMPL, and absolute values of Corr also indicate better fitting. The predicted observations for Models NB and Pois, \(\hat{y}_{ij}\), were calculated with M collected Gibbs samples as \(\hat{y}_{ij} =\frac{1}{M}\sum \nolimits _{s=1}^M \exp \left[ {\beta _0^{*( s )} +\log ( {r^{( s )}} )+{\mathbf {x}}_i^\mathrm{T} {\varvec{\beta }} ^{( s )}+u_i^{( s )} } \right] \), where \(\beta _0^{*( s )},\, r^{( s )},\, {\varvec{\beta }}^{(s)}\) and \(u_i^{( s)} \) are the values in the sample s of the intercept, overdispersion parameter, the beta regression coefficients, and the random effect for the line i, respectively. For the Model Normal, the \( \hat{y}_{ij}\) were calculated as \(\hat{y}_{ij} =\frac{1}{M}\sum \nolimits _{s=1}^M \left[ {\beta _0^{( s )} +{\mathbf {x}}_i^\mathrm{T} {\varvec{\beta }} ^{( s )}+u_i^{( s )} } \right] \), while for Model Log-normal , the \(\hat{y}_{ij}\) were calculated as \(\hat{y}_{ij} =\frac{1}{M}\sum \nolimits _{s=1}^M \hbox {exp}\left[ {\beta _0^{( s )} +{\mathbf {x}}_i^\mathrm{T} {\varvec{\beta }} ^{( s )}+u_i^{( s )} +\sigma _e^{2( s )} /2} \right] -1,\) where \(\sigma _e^{2( s )} \) is the value of the variance component of the error in sample s.

3 Results

Figure 1a depicts the histogram of the number of infected spikelets per spike for the entire C46 dataset. The mean and variance of this dataset were 2.4721 and 5.8438, respectively, while the minimum and maximum numbers of spikelets on a spike were 0 and 13, respectively. Figure 1b shows that in 213 lines, the mean is smaller than the variance. However, this data set, C46, has 702 zero counts (23.63 %), which always causes problems for fitting the Poisson and NB models. In dataset C47, the mean and variance were 3.2451 and 6.9594, respectively (Fig. 1c) and the minimum and maximum numbers of spikelets on a spike were 0 and 19, respectively. Also in dataset C47, 195 out of 425 lines had a mean that is smaller than the variance (Fig. 1d).

Table 1 shows a comparison of the four models used for the five proposed criteria using the full datasets (no random partitions were used). Concerning criterion DIC, for dataset C46, the best and second best models were Model Log-normal and Model NB, respectively, and for dataset C47, the best two models were Model Log-normal and Model Pois. In terms of \(\chi _\mathrm{cal}^2\), the best model was Model NB in both datasets. With the L criteria, the best model was Model Pois in both data sets. For the LMPL criterion, the best model was Model Log-normal in both datasets. Finally, with the Pearson correlation (Corr), the best model for both datasets was Model Log-normal. We also got the average of the ranks of the five proposed criteria for each model and, in this situation, the rank of models for dataset C46 was 1 for Model Log-normal, 2 for Model Pois, and 3 for Models NB and Normal. For data set C47, the models ranked as follows: 1 for Model Log-normal, 2 for Model Pois, 3 for Model Normal, and 4 for Model NB.

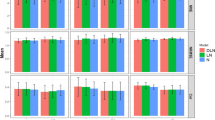

Also for the four models, we show the histogram representation of the posterior distributions for scalar parameters for both data sets. In all plots in Figs. 2 (for dataset C46) and 3 (for dataset C47), it can be observed that the priors for each parameter in Models NB, Pois, Normal, and Log-normal are not informative.

In Table 2, we present the results of 10 cross-validations, where prediction accuracy was also assessed with the five proposed criteria. However, here these metrics were calculated using only the testing set (and not the whole dataset, as in Table 1). In Table 3, we present the ranking of the four models for each criterion. Since we are comparing four models, the values of the ranks range from 1 to 4, and the lower the values, the better the model. For ties, we assigned the average of the ranks that would have been assigned had there been no ties. From the ranking given in Table 3, we can see that there is no clear winner in terms of prediction accuracy. For example, in DIC and LMPL, the best model was Model NB for dataset C46, whereas for dataset C47, the best model was Model Pois in three criteria.

The rank given by the \(\chi _\mathrm{cal}^2 \) criterion is near the opposite of the rank given by criteria DIC and L; for \(\chi _\mathrm{cal}^2\), the best model was Model Log-normal in both data sets. In terms of the L criterion, the best model was Model Pois for both datasets, while in terms of Corr, the best model was Model Normal for dataset C46, and all Models (NB, Pois, Normal, and Log-normal) for dataset C47. We also got the average of the ranks for each model of the five criteria, which is given in the last row in Table 3. In terms of the average ranking for dataset C46, the best model was Model NB, followed by Model Normal; Model Pois came in last. In dataset C47, the best model was Model Pois, the second best was Model Normal, and the last one was Model Log-normal.

Model Pois often gave better results than Model NB, even though Model Pois is a special case of Model NB with r fixed at 1000. It seems surprising that the posterior of r in these cases concentrates at values quite far from 1000. As a check to show that this behavior is possible, we performed a small simulation experiment with the following linear predictor \(\eta _i =\beta _0 +u_i \) with \(i=1,\ldots ,40\) to index lines, \(j=1,2,\ldots ,10\) to index spikes per line. In the first scenario, we simulated data according to Model NB with hyper-parameters equal to \(\beta _0 =0.66,\, r=4.87\), and \(\sigma _u^2 =0.55\). In the second scenario, we simulated data according to Model Pois with hyper-parameters \(\beta _0 =0.66,\, r=1000\), and \(\sigma _u^2 =0.55\). We expect that Model NB will work well and will be able to capture the true parameters when fitting Model NB to data generated according to Model NB. We also expect that Model Pois will work well and will be able to capture the true parameters when fitted to data generated according to Model Pois. Table 4 gives the estimates of fitting both models (Models NB and Pois) to each simulated dataset; we also calculated the DIC, \(\chi _\mathrm{cal}^2 \), L, LMPL, and Corr for each fitted model to compare the models’ performance.

Results shown in Table 4 are based in 50 replications and in each replication we computed 20,000 MCMC samples. Bayes estimates were computed with 10,000 samples since the first 10,000 were discarded as burn-in. Table 4 shows that the estimates obtained by fitting Models NB and Pois are close to the true values when the data were simulated under Model NB, with the exception of the parameter r, which was fixed under Model Pois. Upon comparing the performance of both models, we see that fitting Model NB to data generated according to Model NB gave better performance, since in three of the five criteria, this model is the best. On the other hand, for the data simulated according to Model Pois, we see that the estimates of \(\beta _0 \) and \(\sigma _u^2 \) are close to the true values when fitting both Models NB and Pois. However, the estimate of the parameter r was 61.94 when fitting Model NB, that is, a value far from the fixed value of \(r=1000\) that is assumed for Model Pois.

The reason that the estimated value of r is far from 1000 is because, for small counts, the r required to approximate the Poisson distribution with a negative binomial distribution is small. Comparing the performance of both models with the five criteria, we see that in DIC and \(\chi _\mathrm{cal}^2 \), the best is Model NB, while in L and LMPL, Model Pois was the best. However, Model Pois is preferred because it has one less parameter to be estimated. With this small simulation example, we observed that Model NB performed better when it was applied to data generated from Model NB. Likewise, the more parsimonious Model Pois performed adequately when applied to data generated according to Model Pois. For this reason, we conclude that the results (given above) produced by using real data are congruent.

4 Discussion

4.1 The Poisson and Negative Binomial Models Through Data Augmentation

The proposed Bayesian regression models for count data take into account the nonlinear relationships between responses and consider the specific properties of counts, including discreteness, non-negativity, and overdispersion (variance greater than the mean). Although the Poisson and negative binomial distributions are well documented in the related statistical literature, to the best of our knowledge, this is the first time the Poisson and negative binomial models have been explored in genomic-enabled prediction. The proposed negative binomial models were derived using the Pólya–Gamma data augmentation approach proposed by Polson et al. (2013) for count data; this approach is elegant, efficient, and leads to familiar complete conditionals on the target quantity (Windle et al. 2013). The data augmentation method is novel and consists of augmentation approaches with closed-form solutions and analytical update equations available for Gibbs sampling. One augmentation approach is concerned with the inference of the NB parameter r using compound Poisson representation, and the other approach is concerned with the inference of the regression coefficients \({\varvec{\upbeta }}\) using Pólya–Gamma distribution.

Our Bayesian NB models (Models NB and Pois) for genomic-enabled prediction are different from that proposed by Polson et al. (2013), because we incorporate random effects in addition to fixed effects. For this reason, we call these models the Bayesian mixed negative binomial models. However, incorporating a random effect in the linear predictor requires that in addition to the full conditionals of \(\omega _{ij},\, {\varvec{\beta }},\, L_{ij}\), and r, we derive the full conditionals of \(\sigma _\beta ^2 , {\mathbf {u}}\), and \(\sigma _u^2 \). Fortunately, even with the addition of random effects, we were able to get closed forms for conditional distributions of the parameters involved in the joint posterior distribution; this allows using Markov Chain Monte Carlo through the Gibbs sampler (Gelfand and Smith 1990). Adding the random effects to the predictor allowed us to extend the conventional BRR and GBLUP to count data (here called BRR count and GBLUP count), as was done for ordinal categorical phenotypes by Montesinos-López et al. (2015) using the threshold model for genome-enabled prediction, which they called TGBLUP.

This extension of GBLUP to count data is very important, since it allows modeling count data in a scientific manner, without assuming that the data are normally approximated and without using transformation, which many times produces estimations and predictions outside of non-negativity, which makes no sense for count data. The BRR count is slightly different from that proposed by Polson et al. (2013), since we assumed the value of the variance of marker effects is unknown and gave this variance an inverse gamma prior distribution.

4.2 Assessing the Models’ Genomic Prediction Accuracy

Our proposed models (Models NB and Pois) proved superior to Model Normal and the normal model with transformed data (Model Log-normal) for dataset C46 for criteria DIC and LMPL but not for all five criteria. Model Pois, however, was clearly the winner in three of the criteria for dataset C47. For dataset C46, the mean rank favored Model NB, whereas for data set C47, the best model was Model Pois. However, this finding can be attributed to the large datasets used and to the fact that a considerable proportion of the response variable had zeros in the C46 dataset. Comparing the posterior predictive mean of the number of observations in dataset C46 with the observed data when the data were fitted with Model NB, we did not observe the presence of an excessive number of zeros (see Fig. 4a). However, when this dataset was fitted with Model Pois, we observed that the number of zeros is large relative to the posterior predictive mean of the number of zeros; it seems there is evidence of zero inflation (Fig. 4b). While in dataset C47 we observed fewer zeros than expected under Model NB (Fig. 4c), we also see there is an excess number of twos in this dataset (Fig. 4c, d). In the presence of an excess number of zeros, an alternative modeling approach can be obtained using a zero-inflated negative binomial (or Poisson) regression. Zero-inflated models consist of a mixture where a zero-inflated structure is incorporated such that there are two classes of zeros in the count process, one coming from a point mass and the other from a non-truncated process (Boone et al. 2012). However, the Gibbs sampler proposed in this paper is not straightforward or generalizable to zero-inflated counts and should be considered for future research.

Previous studies found that the accuracy for an ordinal trait is lower than that predicted from a continuous trait of the same population (Kizilkaya et al. 2014). Our results show that the proposed models (Models NB and Pois) for genomic-enabled predictions of count data can also be used for modeling count data with equal mean and variance (assuming a Poisson distribution of data given the random effects) by fixing the overdispersion parameter r to a large value, such as 1000, as was done in this study. However, as mentioned in the introduction, rarely is this assumption (equal mean and variance) met in real count data. Finally, more research is needed to extend these proposed genomic-enabled prediction models to deal with so many zeros in count response variables. Further research is also required to examine the BMNB with other count data sets with a smaller sample size and a lower percentage of zeros.

5 Conclusions

Genomic-enabled prediction models are useful in genomic selection for choosing candidate individuals early in life and achieving rapid genetic gains. A plethora of statistical models has been developed for genome-wise marker prediction. However, these models assume that the response variable is continuous and that its empirical distribution can be approximated by a Gaussian model. Also, the standard regression models for count data cannot deal with cases where the sample size (n) is smaller than the number of marker parameters (p). In this study, we propose a Bayesian mixed negative binomial (BMNB) regression model for count data derived using a Pólya–Gamma data augmentation for count data. We describe the conditional distributions necessary to efficiently implement a Gibbs sampler. The BMNB model (Model NB) together with a Poisson model (Model Pois), a Normal model with untransformed response (Model Normal), and a Normal model with transformed response using a logarithm (Model Log-normal) were applied to two wheat datasets (C46 and C47) using five criteria for determining the best predictive model. Based on the criteria used for assessing prediction accuracy, results indicated that the BMNB model is a viable alternative for analyzing count data; based on one selection criterion, Model NB (BMNB) was the best predictive model for fitting dataset C46, whereas Model Pois (Poisson) was the best model for predicting data C47 based on four criteria. Results based on five criteria for assessing prediction accuracy did not determine one model to be the best based on all five criteria. Nevertheless, BMNB and the Poisson seem to be good alternative models for genomic prediction of unobserved individuals.

References

Albert, J. H., & Chib, S. (1993). Bayesian analysis of binary and polychotomous response data. Journal of the American Statistical Association, 88(422): 669-679.

Boone, E. L., Stewart-Koster, B., & Kennard, M. J. (2012). A hierarchical zero-inflated Poisson regression model for stream fish distribution and abundance. Environmetrics, 23(3), 207-218.

de los Campos, G., Gianola, D., & Allison, D. B. (2010). Predicting genetic predisposition in humans: the promise of whole-genome markers. Nat Rev Genet, 11: 880-886. doi:10.1038/nrg2898.

de los Campos, G., Vazquez, A. I., Fernando, R., Klimentidis, Y. C., & Sorensen, D. (2013a). Prediction of Complex Human Traits Using the Genomic Best Linear Unbiased Predictor. PLoS Genetics 9 (7) e1003608.

de los Campos, G., Hickey, J. M., Pong-Wong, R., Daetwyler, H. D., & Calus, M. P. L. (2013b). Whole Genome Regression and Prediction Methods Applied to Plant and Animal Breeding. Genetics, 193(2), 327-345.

Gelfand, A. E. (1996). Model determination using sampling-based methods. In: Gilks, W. R., Richardson, S., & Spiegelhalter, D. J., editors. Markov Chain Monte Carlo in practice. London: Chapman & Hall. Pp. 145-60.

Gelfand, A. E., & Smith, A. F. (1990). Sampling-based approaches to calculating marginal densities. Journal of the American Statistical Association, 85(410), 398-409.

Gelman, A., Carlin, J. B., Stern, H. S., & Rubin, D. B. (2004). Bayesian data analysis. 2. Boca Raton: Chapman & Hall.

Geyer, C. J. (1992). Practical Markov Chain Monte Carlo. Statistical Science, 473-483.

Goddard, M. E., & Hayes, B. J. (2009). Mapping genes for complex traits in domestic animals and their use in breeding programmes. Nat Rev Genet, 10: 381-391. doi:10.1038/nrg2575.

Kärkkäinen, H. P., & Sillanpää, M. J. (2012). Back to basics for Bayesian model building in genomic selection. Genetics, 191(3), 969-987.

Kizilkaya, K., Fernando, R. L., & Garrick, D. J. (2014). Reduction in accuracy of genomic prediction for ordered categorical data compared to continuous observations. Genetics Selection Evolution, 46:37 doi:10.1186/1297-9686-46-37.

Laud, P. W., & Ibrahim, J. G. (1995). Predictive Model Selection. Journal of the Royal Statistical Society, B 57, pp. 247-262.

Link, W. A., & Eaton, M. J. (2012). On thinning of chains in MCMC. Methods in Ecology and Evolution, 3(1), 112-115.

MacEachern, S. N., & Berliner, L. M. (1994). Subsampling the Gibbs sampler. The American Statistician, 48(3), 188-190.

Montesinos-López, O. A., Montesinos-López, A., Pérez-Rodríguez, P., de los Campos, G., Eskridge, K. M., & Crossa, J. (2015). Threshold models for genome-enabled prediction of ordinal categorical traits in plant breeding. G3: Genes| Genomes| Genetics, 5(1), 1-10.

Park, T., & van Dyk, D. A. (2009). Partially collapsed Gibbs samplers: Illustrations and applications. Journal of Computational and Graphical Statistics, 18(2), 283-305.

Polson, N. G., Scott, J. G., & Windle, J. (2013). Bayesian inference for logistic models using Pólya-Gamma latent variables. Journal of the American Statistical Association, 108(504), 1339-1349.

Poland, J.A., Brown, P.J., Sorrells, M.E., Jannink J.-L. 2012. Development of high-density genetic maps for barley and wheat using a novel two-enzyme genotyping-by-sequencing approach. PloS ONE, 7:e32253.

Quenouille, M. H. (1949). A relation between the logarithmic, Poisson, and negative binomial series. Biometrics, 5(2), 162-164.

R Core Team. (2015). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. ISBN 3-900051-07-0, URL http://www.R-project.org/.

Riedelsheimer, C., Czedik-Eysenberg, A., Grieder, C., Lisec, J., Technow, F., et al. (2012). Genomic and metabolic prediction of complex heterotic traits in hybrid maize. Nat Genet 44: 217-220. doi:10.1038/ng.1033.

Scott, J., & Pillow, J. W. (2013). Fully Bayesian inference for neural models with negative-binomial spiking. In Advances in neural information processing systems, pp. 1898-1906.

Spiegelhalter, D. J., Mejor, N. G., Carlin, B. P., & van der Linde, A. (2002). Bayesian Measures of Model Complexity and Fit. Journal of the Royal Statistical Society, B 64, pp. 583-639.

Stroup, W. W. (2015). Rethinking the Analysis of Non-Normal Data in Plant and Soil Science. Agronomy Journal, 107(2): 811-827.

VanRaden, P. M. (2007). Genomic measures of relationship and inbreeding. Interbull Bull 37: 33-36.

——– (2008). Efficient methods to compute genomic predictions. J. Dairy Sci. 91: 4414-4423.

Windle, J., Carvalho, C. M., Scott, J. G., & Sun, L. (2013). Pólya–Gamma Data Augmentation for Dynamic Models. arXiv preprint arXiv:1308.0774.

Zhang, Z., Ober, U., Erbe, M., Zhang, H., Gao, N., He, J., & Simianer, H. (2014). Improving the accuracy of whole genome prediction for complex traits using the results of genome-wide association studies. PloS One, 9(3), e93017.

Zhou, M., & Carin, L. (2015). Negative binomial process count and mixture modeling. IEEE Transactions on Pattern Analysis and Machine Intelligence, 37(2), 307-320.

Zhou, M., Li, L., Dunson, D., & Carin, L. (2012). Lognormal and gamma mixed negative binomial regression. In Machine Learning: Proceedings of the International Conference on Machine Learning (vol. 2012, p. 1343). NIH Public Access.

Acknowledgments

We very much appreciate the CIMMYT field and lab assistants and technicians who collected the data used in this study. We also thank the anonymous reviewers and the Co-Guest Editor of JABES for the time and effort they invested in correcting and improving the quality of the manuscript.

Author information

Authors and Affiliations

Corresponding author

Appendix 1

Appendix 1

Full conditional for \({\beta _0^*}\)

where \(\tilde{\sigma }_0^2 =\left( {\sigma _0^{-2} +\mathbf{1}_m^\mathrm{T} {\mathbf {D}}_{\varvec{\omega }} \mathbf{1}_m} \right) ^{-1},\, \tilde{\beta }_0 =\tilde{\sigma } _0^2 \left( {\sigma _0^{-2} \beta _f -\mathbf{1}_m^\mathrm{T} {\mathbf {D}}_{\varvec{\omega }} {\mathbf {X}}{\varvec{\beta }} -\mathbf{1}_m^\mathrm{T} {\mathbf {D}}_{\varvec{\omega }} {\mathbf {Zu}}+\mathbf{1}_m^\mathrm{T} {\varvec{\kappa }}} \right) \),

Full conditional for \({\omega _{ij}}\)

where \(PG\left( {b,c} \right) \) denotes a Pólya-Gamma distribution with parameters \(b\,\hbox {and}\,c\) and density

\(P\left( {\omega ;b,c} \right) =\left\{ {\cosh ^{b}\left( {\frac{c}{2}} \right) } \right\} \,\frac{2^{b-1}}{{{\Gamma }}\left( b \right) }\mathop \sum \limits _{k=0}^\infty \left( {-1} \right) ^{n}\frac{{{\Gamma }} \left( {n+b} \right) \left( {2n+b} \right) }{{{\Gamma }} \left( {n+1} \right) \sqrt{2\pi \omega ^{3}}}\hbox {exp}(-\frac{\left( {2n+b} \right) ^{2}}{8\omega }-\frac{c^{2}}{2}\omega )\), where cosh denotes the hyperbolic cosine.

Full conditional for \({\varvec{\beta }}\)

where \(\tilde{{\varvec{\Sigma }}}_v =\left( {{\varvec{\Sigma }}}_v^{-1} \sigma _\beta ^{-2} +{\mathbf {X}}^\mathrm{T}\mathbf{D}_{\varvec{\omega }} {\mathbf {X}} \right) ^{-1},\, \tilde{{\varvec{\beta }}}_v =\tilde{{\varvec{\Sigma }}} _v \Bigg ( {\varvec{\Sigma }}_v^{-1} \sigma _\beta ^{-2} {\varvec{\beta }} _v -{\mathbf {X}}^\mathrm{T}{\mathbf {D}}_{\varvec{\omega }} \mathbf{1}_m \beta _0^* -{\mathbf {X}}^\mathrm{T}{\mathbf {D}}_{\varvec{\omega }} {\mathbf {Zu}}\,+\,{\mathbf {X}}^\mathrm{T}{\varvec{\kappa }} \Bigg )\).

Full conditional for \({\sigma _\beta ^2}\)

Full conditional for \({{\mathbf {u}}}\)

where \({\mathbf {F}}=\left( {\sigma _u^{-2} {\mathbf {G}}^{-1}+{\mathbf {Z}}^\mathrm{T}{\mathbf {D}}_\omega {\mathbf {Z}}} \right) ^{-1}\) and \(\tilde{{\mathbf {u}}}={\mathbf {F}}\left( {{\mathbf {Z}}^\mathrm{T}{\mathbf {k}}-{\mathbf {Z}}^\mathrm{T}{\mathbf {D}}_\omega {\mathbf {1}}_m \beta _0^*-{\mathbf {Z}}^\mathrm{T}D_\omega {\mathbf {X}}{\varvec{\beta }} } \right) .\)

Full conditional for \({\sigma _u^2}\)

If \(\sigma _u^2 \sim IG\left( {a_u ,b_u } \right) \), then

Full conditional for r

To make inference on r, we first place a gamma prior on it as \(r\sim G\left( {a_0 ,1/b_0 } \right) \). Then we infer a latent count L for each \(Y\sim NB\left( {r,\pi } \right) \) conditional on Y and r. Since \(L\sim \mathrm{Pois}(-r\log \left( {1-\pi } \right) ),\) by construction we can use the Gamma-Poisson conjugacy to update r. Then

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Montesinos-López, O.A., Montesinos-López, A., Pérez-Rodríguez, P. et al. Genomic Prediction Models for Count Data. JABES 20, 533–554 (2015). https://doi.org/10.1007/s13253-015-0223-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13253-015-0223-4