Abstract

We describe observation driven time series models for Student-t and EGB2 conditional distributions in which the signal is a linear function of past values of the score of the conditional distribution. These specifications produce models that are easy to implement and deal with outliers by what amounts to a soft form of trimming in the case of t and a soft form of Winsorizing in the case of EGB2. We show how a model with trend and seasonal components can be used as the basis for a seasonal adjustment procedure. The methods are illustrated with US and Spanish data.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Time series are often subject to observations that, when judged by the Gaussian yardstick, are outliers. This is a very real issue for many economic time series. Agustin Maravall’s seasonal adjustment program, TRAMO-SEATS, which he developed jointly with Victor Gomez, tackles the problem by identifying outliers and, where appropriate, replacing them by dummy variables. Here we take a different approach in which we employ a new class of robust models where the dynamics of the level, or location, are driven by the score of the conditional distribution of the observations. These called dynamic conditional score (DCS) models have recently been developed by Creal et al. (2011, 2013) and Harvey (2013). They are relatively easy to implement and their form facilitates the development of a comprehensive and relatively straightforward theory for the asymptotic distribution of the maximum likelihood estimator.

The changing level of a Gaussian time series is usually obtained from an ARIMA process or explicitly modeled as an unobserved component. The statistical treatment of linear Gaussian unobserved components models is straightforward, with the Kalman filter playing a key role. Additive outliers may be captured by dummy variables. A different way forward is to let the noise have a Student t-distribution, thereby accommodating the outliers. However, the treatment of such a model requires computationally intensive procedures, as described in Durbin and Koopman (2012). The DCS-t model proposed by Harvey and Luati (2014) provides an alternative approach which is observation-driven in that the conditional distribution of the observations is specified. A model of this kind may be compared and contrasted with the methods in the robustness literature; see Maronna, Martin and Yohai (2006, ch 8) and McDonald and Newey (1988), where a parametric approach is called ‘partially adaptive’. Robust procedures for guarding against additive outliers typically respond to large observations in one of two ways: either the response function converges to a positive (negative) constant for observations tending to plus (or minus) infinity or it goes to zero. These two procedures are usually classified as Winsorizing or as trimming. The score for a t-distribution converges to zero and so can be regarded as a parametric form of trimming. Similarly a parametric form of Winsorizing is given by the exponential generalized beta distribution of the second kind (EGB2) distribution. The article by Caivano and Harvey (2014) sets out the theory for the DCS location model with an EGB2 distribution and illustrates its practical value.

The article is organized as follows. Section 2 reviews the idea behind the DCS location model and expands on the reason for using the conditional score. The various classifications of distributions in terms of their tails are then set out and this is followed by a discussion of the score as it relates to location and scale and the link with robust estimation. This material leads on to the contrast between the DCS t and EGB2 models in Sect. 3. Section 4 compares the fit of EGB2 and t-distributions for two US macroeconomic time series. A comparison between the way in which Gaussian and DCS models adapt to structural breaks is made in Sect. 5. This issue is important, because it may be thought that the price paid by DCS models for their robustness is a slow response to structural breaks.

DCS models with trend and seasonal components are described in Sect. 6. These models can be regarded as robust counterparts to the unobserved components ‘basic structural model’ (BSM). Seasonal adjustment can be carried out with the BSM by extracting smoothed components using the standard Kalman filter and smoother. Because DCS models only give filtered components, it is necessary to devise a method for smoothing. In Sect. 7 we apply this method to a monthly series of tourists arriving in Spain and compare the extracted trend and seasonal components with those obtained by fitting a BSM with the outliers handled by dummy variables.

2 Filters, heavy tails and robust estimation

The first sub-section below sets out a simple unobserved components model and shows how the innovations form of the Kalman filter may be adapted to form a DCS model. The second sub-section provides a rationale for the use of the conditional score. The way in which tails of distributions may be classified is reviewed in the third sub-section and in the fourth, tail behaviour is related to the considerations of robustness. The treatment of these topics is more general and integrated than in Harvey (2013).

2.1 Unobserved components and filters

A simple Gaussian signal plus noise model is

where the irregular and level disturbances, \(\varepsilon _{t}\) and \(\eta _{t} \) respectively, are mutually independent and the notation \(NID\left( 0,\sigma ^{2}\right) \) denotes normally and independently distributed with mean zero and variance \(\sigma ^{2}\). The autoregressive parameter is \(\phi , \) while the signal-noise ratio, \(q=\sigma _{\eta }^{2}/\sigma _{\varepsilon }^{2},\) plays the key role in determining how observations should be weighted for prediction and signal extraction. The reduced form (RF) of (1) is an ARMA(1,1) process

but with restrictions on \(\theta .\) For example, when \(\phi =1\), \(0\le \theta \le 1.\)

The UC model in (1) is effectively in state space form and, as such, it may be handled by the Kalman filter (KF); see Harvey (1989). The parameters \(\phi \) and q may be estimated by maximum likelihood (ML), with the likelihood function constructed from the one-step ahead prediction errors. The KF can be expressed as a single equation which combines \(\mu _{t\mid t-1},\) the optimal estimator of \(\mu _{t}\) based on information at time \(t-1,\) with \(y_{t}\) in order to produce the best estimator of \(\mu _{t+1}\). Writing this equation together with an equation that defines the one-step ahead prediction error, \(v_{t},\) gives the innovations form of the KF:

The Kalman gain, \(k_{t},\) depends on \(\phi \) and q. In the steady-state, \( k_{t}\) is constant. Setting it equal to \(\kappa \) in (3) and re-arranging gives the ARMA model (2) with \(\xi _{t}=v_{t}\) and \( \phi -\kappa =\theta .\)

When the noise in (1) comes from a heavy-tailed distribution such as Student’s t it can give rise to observations which, when judged against the yardstick of a Gaussian distribution, are additive outliers. As a result fitting a Gaussian model is inefficient and may even yield estimators which are inconsistent. Simulation methods, such as Markov chain Monte Carlo (MCMC) and particle filtering, provide the basis for a direct attack on such non-Gaussian models; see Durbin and Koopman (2012). However, simulation-based estimation can be time-consuming and subject to a degree of uncertainty. In addition the statistical properties of the estimators are not easy to establish.

The DCS approach begins by writing down \(f_{t}(y_{t}),\) the distribution of the t-th observation conditional on past observations. The time-varying parameter is then updated by a suitably defined filter. Such a model is said to be observation driven. In a linear Gaussian UC model, the KF depends on the one step-ahead prediction error. The main ingredient in the DCS filter for non-Gaussian distributions is the replacement of \(v_{t}\) in the KF equation by a variable, \(u_{t},\) that is proportional to the score of the conditional distribution; compare Maronna, Martin and Yohai (2006, p. 272–4) and the references therein. Thus the second equation in (3) becomes

where \(u_{t}=\partial \ln f_{t}(y_{t})/\partial \mu _{t\mid t-1}\) and \( \kappa \) is treated as an unknown parameter. This filter could be regarded as an approximation to the computer intensive solution for the parameter driven unobserved components model. The attraction of regarding it as a model in its own right is that it becomes possible to derive the asymptotic distribution of the maximum likelihood estimator and generalize in various directions.

2.2 Why the Score?

Suppose that at time \(t-1\) we have \(\widehat{\theta }_{t-1}\) the ML estimate of a parameter, \(\theta .\) Then the score is zero, that is

where \(\ell _{j}(\theta ;y_{j})=f(y_{j};\theta ).\) When a new observation becomes available, a single iteration of the method of scoring gives

where \(I_{t}(\widehat{\theta }_{t-1})=t.I(\widehat{\theta }_{t-1})\) is the information matrix for t observations and the last expression follows because of (5). For a Gaussian distribution a single update goes straight to the ML estimate at time t (recursive least squares).

Remark 1

We may sometimes be able to choose a link function so that the information quantity does not depend on \(\theta .\)

As \(t\rightarrow \infty \), \(I_{t}(\widehat{\theta }_{t-1})\rightarrow \infty \) so the recursion becomes closed to new information. If it is thought that \(\theta \) changes over time, the filter needs to be opened up. This may be done by replacing 1 / t by a constant, which may be denoted as \(\kappa .\) Thus

With no information about how \(\theta \) might evolve, the above equation might be converted to the predictive form by letting \(\widehat{\theta } _{t+1\mid t}=\widehat{\theta }_{t}.\) Thus

For a Gaussian distribution in which \(\theta \) is the mean and the variance is known to be \(\sigma ^{2},\)

and (6) is an exponentially weighted moving average (EWMA).

If there is reason to think that the parameter tends to revert to an underlying level, \(\omega ,\) the updating scheme might become

where \(\left| \phi \right| <1.\) This scheme corresponds to a first-order autoregressive process. More generally we might introduce lags so as to smooth out the changes or allow for periodic effects.

2.3 Heavy tails

The Gaussian distribution has kurtosis of three and a distribution is said to exhibit excess kurtosis, or to be leptokurtic, if its kurtosis is greater than three. Although some researchers take excess kurtosis as defining heavy tails, it is not, in itself, an ideal measure, particularly for asymmetric distributions. Most classifications in the insurance and finance literature begin with the behaviour of the upper tail for a non-negative variable, or one that is only defined above a minimum value; see Embrechts et al. (1997). The two which are relevant here are as follows.

A distribution is said to be heavy-tailed if

where \(\overline{F}(y)=\Pr (Y>y)=1-F(y)\) is the survival function. When y has an exponential distribution, \(\overline{F}(y)=\exp (-y/\alpha ),\) so \( \exp (y/\alpha )\overline{F}(y)=1\) for all y. Thus the exponential distribution is not heavy-tailed.

A distribution is said to be fat-tailed if, for a fixed positive value of \(\eta ,\)

where c is a non-negative constant and L(y) is slowly varying,Footnote 1 that is

The parameter \(\eta \) is the tail index. The implied PDF is a power law PDF

where \(\sim \) is defined such that \(a(x)\sim b(x)\) as \(x\rightarrow x_{0}\) if \(\lim _{x\rightarrow x_{0}}(a/b)\rightarrow 1.\) The m-th moment exists if \(m< \eta \). The Pareto distribution is a simple case in which \( \overline{F}(y)=y^{-\eta }\) for \(y>1.\) If a distribution is fat-tailed then it must be heavy-tailed, but the converse is not true; see Embrechts, Kluppelberg and Mikosch (1997, p. 41–2).

The above criteria are related to the behavior of the conditional score and whether or not it discounts large observations. This, in turn, connects to robustness, as shown in the sub-section following. More specifically, consider a power law PDF, (9), with y divided by a scale parameter\(,\varphi \), so that \(\overline{F}(y/\varphi )=cL(y/\varphi )(y/\varphi )^{-\eta }\) and \(f(y)\sim cL(y)\varphi ^{-1}\eta (y/\varphi )^{-\eta -1}.\) Then

and so the score is bounded. With the exponential link function, \(\varphi =\exp (\lambda )\), \(\partial \ln f/\partial \lambda \sim \eta \) as \( y\rightarrow \infty .\) Similarly as \(y\rightarrow 0\), \(\partial \ln f/\partial \lambda \sim \overline{\eta }.\)

The logarithm of a variable with a fat-tailed distribution has exponential tails. Let x denote a variable with a fat-tailed distribution in which the scale is written as \(\varphi =\exp (\mu )\) and let \(y=\ln x.\) Then for large y

whereas as \(y\rightarrow -\infty \), \(f(y)\sim cL(e^{y})\overline{\eta }e^{ \overline{\eta }(y-\mu )}\), \(\overline{\eta }>0.\) Thus y is not heavy-tailed, but it may exhibit excess kurtosis. The score with respect to location, \(\mu ,\) is the same as the original score with respect to the logarithm of scale and so tends to \(\eta \) as \(y\rightarrow \infty .\)

2.4 Robust estimation

The location-dispersion model is

where \(\varepsilon _{t}\) is a standardized variable with PDF denoted \(\exp \rho (\varepsilon _{t})\) and the scale, \(\varphi \), is called the dispersion for \(y_{t};\) see Maronna, Martin and Yohai (2006, p. 37–8). The density for \( y_{t}\) is

where \(\xi \) denotes one or more shape parameters, and the scores for \(\mu \) and \(\varphi \) are given by differentiating \(\ln f(y_{t})= \rho ((y_{t}-\mu )/\varphi )-\ln \varphi .\) The score for location is

where \(z_{t}=(y_{t}-\mu )/\varphi ,\) whereas the score for scale is

Note that \(\psi _{S}(z_{t})=\varphi ^{-1}z_{t}\psi _{L}(z_{t}).\) When the scale is parameterized with an exponential link function, \(\varphi =\exp \lambda ,\) the score is

If \(y_{t}=\ln x_{t},\) where \(x_{t}=\varphi \varepsilon _{t}\) as in (11) and then the logarithm of the scale parameter for \(x_{t},\) that is \(\ln \varphi ,\) becomes the location for \(y_{t}.\) Hence \(\psi _{L}(y_{t})=\psi _{S}(x_{t}).\)

The ML estimators are asymptotically efficient, assuming certain regularity conditions hold. More generally \(\rho (.)\) may be any function deemed to yield estimators with good statistical properties. In particular, the estimators should be robust to observations which would be considered to be outliers for a normal distribution. When normality is assumed, the ML estimators of the mean and variance are just the corresponding sample moments, but these can be subject to considerable distortion when outliers are present. Robust estimators, on the other hand, are resistant to outliers while retaining relatively high efficiency when the data are from a normal distribution.

The M-estimator, which features prominently in the robustness literature, has a Gaussian response until a certain threshold, K, whereupon it is constant; see Maronna, Martin and Yohai (2006, p. 25–31). This is known as Winsorizing as opposed to trimming, where observations greater than K in absolute value are given a weight of zero.Footnote 2

3 DCS location models

The stationary first-order DCS model corresponds to the Gaussian innovations form, (3), and is

where \(\omega =\delta /(1-\phi )\) is the unconditional mean of \(\mu _{t\mid t-1}\), \(\varepsilon _{t}\) is a serially independent variate with unit scaleFootnote 3 and \( u_{t} \) is proportional to the conditional score, that is \(u_{t}=k.\partial \ln f(y_{t}\mid y_{t-1}\), \(y_{t-2},...)/\partial \mu _{t\mid t-1},\) where k is a constant.

More generally, an ARMA-type model of order (p, r) is

In the Gaussian case \(u_{t}= y_{t}-\mu _{t\mid t-1}\) and if q is defined as \(max(p,r+1),\) then \(y_{t}\) is an ARMA(p, q) process with MA coefficients \(\theta _{i}=\phi _{i}-\kappa _{i-1}\), \(i=1,..,q.\) Nonstationary ARIMA-type models may also be constructed as may structural times series models with trend and seasonal components. Explanatory variables can be introduced into DCS models, as described in Harvey and Luati (2014).

Maronna, Martin and Yohai (2006, Sect 8.6 and 8.8) give a robust algorithm for AR and ARMA models with additive outliers. For a first-order model their filter is essentially the same as (13) except that their dynamic equation is driven by a robust \(\psi -function\) and they regard the model as an approximation to a UC model.Footnote 4

3.1 Student t model

The Student \(t_{\nu }\) distribution has fat tails for finite degrees of freedom, \(\nu ,\) with the tail index given by \(\nu \). Moments exist only up to and including \(\nu -1\). The excess kurtosis, that is the amount by which the normal distribution’s kurtosis of three is exceeded, is \(6/(\nu -4),\) provided that \(\nu >4.\)

When the location changes over time, it may be captured by a model in which, conditional on past observations, \(y_{t}\) has a \(t_{\nu }\)-distribution with \(\mu _{t\mid t-1}\) generated by a linear function of

where \(v_{t}=y_{t}-\mu _{t\mid t-1}\) is the prediction error. Differentiating the log-density shows that \(u_{t}\) is proportional to the conditional score, \(\partial \ln f_{t}/\partial \mu _{t\mid t-1}=(\nu +1)\nu ^{-1}\exp (-2\lambda )u_{t}.\) For low degrees of freedom, the score function is such that observations that would be seen as outliers for a Gaussian distribution are far less influential. As \(\left| y\right| \rightarrow \infty ,\) the response tends to zero. Redescending M-estimators, which feature in the robustness literature, have the same property. For example, Tukey’s biweight function is \(\psi (z)=[1-(x/4.685)^{2}]_{+}^{2}z,\) where [ \(]_{+}\) denotes the positive part of the term in [ ]. This function implements ‘soft trimming’, as opposed to metric trimming, where \( \psi (z)=z\) for \(\left| z\right| \le K\) and is zero thereafter. The t score function is even softer.

The variable \(u_{t}\) can be written \(u_{t}=(1-b_{t})(y_{t}-\mu _{t\mid t-1}), \) where

is distributed as \(beta(1/2,\nu /2)\); see Harvey (2013, Chap. 3). Because the \(u_{t}^{\prime }s\) are \(IID(0,\sigma _{u}^{2})\), \(\mu _{t\mid t-1}\) is weakly and strictly stationary so long as \(\left| \phi \right| <1.\) Although determining the statistical properties of \(\mu _{t\mid t-1}\) requires assuming that it started in the infinite past, the filter needs to be initialized in practice and this may be done by setting \(\mu _{1\mid 0}=\omega \).

All moments of \(u_{t}\) exist and the existence of moments of \(y_{t}\) is not affected by the dynamics. The autocorrelations can be found from the infinite MA representation; the patterns are as they would be for a Gaussian model.

Maximum likelihood estimation is straightforward and for a first-order dynamic equation, as in (13), an analytic expression for the information matrix is available.

There are a number of ways in which skewness may be introduced into a t-distribution. One possibility is the method proposed by Fernandez and Steel (1998). There is a minor technical issue in that the score is not differentiable at the mode but as, Zhu and Galbraith (2011) show, the asymptotic theory for the ML estimator still goes through in the usual way. The asymptotic theory for the DCS skew-t location model also goes through; see Harvey (2013, Sect 3.11).

3.2 Exponential generalized beta distribution model

The exponential generalized beta distribution of the second kind (EGB2) is obtained by taking the logarithm of a variable with a generalized beta distribution of the second kind (GB2) distribution. What was the logarithm of scale in GB2 now becomes location in EGB. A reparameterization in terms of the standard deviation, \(\sigma ,\) gives the PDF as

where \(\xi \) and \(\varsigma \) are shape parameters which determine skewness and kurtosis and \(h=\sqrt{\psi ^{\prime }(\xi )+\psi ^{\prime }(\varsigma )}\) . The EGB2 distribution has exponential tails and all moments exist. When \( \xi =\varsigma \), the distribution is symmetric; for \(\xi =\varsigma =1\) it is a logistic distribution and when \(\xi =\varsigma \rightarrow \infty \) it tends to a normal distribution. When \(\xi =\varsigma =0\) the distribution is double exponential or Laplace. The distribution is positively (negatively) skewed when \(\xi >\varsigma \) (\(\xi <\varsigma \)) and its kurtosis decreases as \(\xi \) and \(\varsigma \) increase. Skewness ranges between \(-\)2 and 2 and kurtosis lies between 3 and 9. There is excess kurtosis for finite \(\xi \) and/or \(\varsigma .\) The EGB2 distribution is more peaked than a t with the same kurtosis, but as kurtosis increases, the differences between the peaks become more marked.

Multiplying the score function with respect to location by the variance gives

where

Because \(0\le b_{t}(\xi ,\varsigma )\le 1,\) it follows that as \( y\rightarrow \infty ,\) the score approaches an upper bound of \(\sigma \sqrt{2 }\), whereas \(y\rightarrow -\infty \) gives a lower bound of \(-\sigma \sqrt{2}. \) As \(\varsigma =\xi \rightarrow \infty ,\) the distribution becomes normal with \(u_{t}\simeq y_{t}-\mu _{t\shortmid t-1}.\)

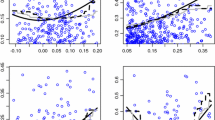

Figure 1 shows the score functions for EGB2 and t distributions with a standard deviation of one and an excess kurtosis of two. The shape parameters for the two distributions are \(\xi =0.5\) and \(\nu =7\). As can be seen, the score for the t-distribution is redescending, reflecting the fact that it has fat tails, whereas the EGB2 score is bounded.

4 Macroeconomic time series

Dynamic location models were fitted to the growth rate of US GDP and industrial production using EGB2, Student’s t and normal distributions. GDP is quarterly, ranging from 1947q1 to 2012q4. Industrial production data are monthly and range from January 1960 to February 2013. All data are seasonally adjusted and taken from the Federal Reserve Economic Data (FRED) database of the Federal Reserve of St. Louis.

When first-order Gaussian models are fitted, there is little indication of residual serial correlation. There is excess kurtosis in all cases, but no evidence of asymmetry. For example, with GDP the Bowman-Shenton statistic is 30.04, which is clearly significant because the distribution under the null hypothesis of Gaussianity is \(\chi _{2}^{2}.\) The non-normality clearly comes from excess kurtosis, which is 1.9, rather than from skewness, which is only 0.18 (with a p value of 0.24). Comparing the residuals with a fitted normal shows them to have a higher peak at the mean, as well as heavier tails; see Fig. 2.

Tables 1 and 2 report the estimation results and Table 3 compares goodness of fit. The Student-t model and the EGB2 outperform the Gaussian model with the shape parameter, \({ \nu }\) or \({ \xi ,}\) confirming the excess kurtosis. The \(\lambda \) parameter is the logarithm of scale, \(\nu ,\) but the estimates of \(\sigma \) are shown because these are comparable across different distributions. For GDP the EGB2 models gives a slightly better fit, whereas the t-distribution is better for industrial production. However, the differences between the two are small compared with the Gaussian model.

5 Structural breaks

It might be thought that the EGB2 and t filters will be less responsive to a permanent change in the level than the linear Gaussian filter. However, for moderate size shifts, the score functions in Fig. 1 suggest that this might not be the case, because only for large observations is the Gaussian response bigger than the response of the robust filters. For example, for the logistic (EGB2 with unit shape parameters), the score is only smaller than the observation (and hence the linear filter) when it is more than (approximately) 1.6 standard deviations from the mean. The behaviour of the t-filter is similar.

In order to investigate the issue of adapting to a permanent change in level, an upward shift was added to the US industrial production data at the beginning of 2010. The size of the shift was calibrated so as to be proportional to the sample standard deviation of the series. The results are shown in the Figs. 3 and 4. For a one standard deviation shift the paths of all three filters after the break are similar. For two standard deviations the Gaussian filter adapts more quickly, but it is still well below the new level initially and after 9 or 10 months it is virtually indistinguishable from the other two filters.

6 Trend and seasonality

The simplest nonstationary unobserved components model is the Gaussian local level model, that is a random walk plus noise. In this case the Kalman filter is an exponentially weighted moving average (EWMA) of past observations. For the DCS-t filter

and the initial value, \(\mu _{1\mid 0},\)is treated as an unknown parameter that needs to estimated along with \(\kappa \) and \(\nu .\) Since \( u_{t}=(1-b_{t})(y_{t}-\mu _{t\mid t-1})\), re-arranging the dynamic equation gives

which can be regarded as an EWMA modified to deal with outliers: when \( y_{t}^{2}\) is large, \(b_{t}\) is close to one.

Generalizing the above model to include a slope and seasonals provides the basis for a robust treatment of seasonal adjustment.

6.1 Basic structural model

Stochastic trend and seasonal components may be introduced into Gaussian unobserved components models for location. These models, called structural time series models, are implemented in the STAMP package of Koopman et al. (2009). The BSM is made up of mutually independent trend, seasonal and irregular components. Thus

where \(\mu _{t}\) is a local linear trend with a stochastic level and slope and \(\gamma _{t}\) is a stochastic seasonal. The trend is

where \(\zeta _{t}\) and \(\eta _{t}\) are independent of each other and of \( \varepsilon _{t}.\) The random walk plus noise or local level model is a special case. As regards the seasonal component, let \(\gamma _{jt}\) denote the effect of season j at time t and define \({\gamma } _{t}=(\gamma _{1t},...,\gamma _{st})^{\prime }\). The full set of seasonals evolves as a multivariate random walk

where \({\omega }_{t}=\left( \omega _{1t},...,\omega _{st}\right) ^{\prime }\) is a zero mean disturbance with \(Var\left( {\omega } _{t}\right) =\sigma _{\omega }^{2}\left( {\mathbf {I}}-s^{-1}{\mathbf {ii}} ^{\prime }\right) ,\) with \(\sigma _{\omega }^{2}>0.\) Although all s seasonal components are continually changing, only one affects the observations at any particular time, that is \(\gamma _{t}=\gamma _{jt}\) when season j is prevailing at time t. The requirement that the seasonal components always sum to zero is enforced by the restriction that the disturbances sum to zero at each t. This restriction is implemented by the correlation structure of \({\omega }_{t}\), where \(Var\left( {\mathbf {i}} ^{\prime }{\omega }_{t}\right) =0,\) coupled with initial conditions constraining the seasonals to sum to zero at \(t=0.\)

The multiplicative seasonal ARIMA model known as the ‘airline model’ is

Box and Jenkins (1976, pp. 305–6) gave a rationale for this model in terms of EWMAs at monthly and yearly intervals. The reduced form of the BSM is \( \Delta \Delta _{s}y_{t}\sim MA\left( s+1\right) \). Maravall (1985), compared the autocorrelation functions of \(\Delta \Delta _{s}y_{t}\) for the BSM and airline model for some typical values of the parameters and finds them to be quite similar, particularly when the seasonal MA parameter, \(\Theta \), is close to minus one. In the limiting case when \(\Theta \) is equal to minus one, the airline model is equivalent to a BSM with \(\sigma _{\zeta }^{2}=\sigma _{\omega }^{2}=0\).

6.2 Stochastic trend and seasonal in the DCS model

The DCS model for trends and seasonals,

has a structure which is similar to that of the innovations form of the Kalman filter for the BSM.

The filter for the trend is

An integrated random walk (IRW) trend in the UC local linear trend model implies the constraint \(\kappa _{2}=\kappa _{1}^{2}/(2-\kappa _{1}),\quad 0<\kappa _{1}<1,\) which may be found from Harvey (1989, p. 177). The restriction can be imposed on the DCS-t model by treating \(\kappa _{1}=\kappa \) as the unknown parameter, but without unity imposed as an upper bound.

The filter for the seasonal is

where the \(s\times 1\) vector \({\mathbf {z}}_{t}\) picks out the current season from the vector \({\gamma }_{t\mid t-1}.\) If \(\kappa _{jt}\), \( j=1,..,s, \) denotes the jth element of \({\kappa }_{t}\), then in season j we set \(\kappa _{jt}=\kappa _{s},\) where \(\kappa _{s}\) is a non-negative unknown parameter, whereas \(\kappa _{it}=-\kappa _{s}/(s-1)\), \( i\ne j,\quad i=1,..,s.\) The amounts by which the seasonal effects change therefore sum to zero. The initial conditions at time \(t=0\) are estimated by treating them as parameters.

The above filter may be regarded as a robust version of the well-known Holt-Winters filter; see Harvey (1989, p. 31). However, it differs from Holt-Winters in the Gaussian case by enforcing the restriction that the seasonals sum to zero. This is an important advantage.

6.3 Seasonal adjustment

In contrast to the Gaussian BSM, the DCS model has no exact solution for smoothing. Some possibilities are suggested in Harvey (2013, Sect 3.7), but these are difficult to generalize beyond the local level model. The best way to employ the DCS model for seasonal adjustment is to use it to mitigate the effects of outliers by modifying them rather than eliminating them by dummy variables. A dummy variable effectively means that the corresponding observation is treated as though it were missing; in other words it corresponds to hard trimming.

We first fit the DCS model and use it to construct pseudo-observations from the signal, that is \(\mu _{t\mid t-1}^{s}=\mu _{t\mid t-1}+\gamma _{t\mid t-1}.\) Thus

For the DCS-t model we can write

where \(b_{t}\) is as in (16) with \(\mu _{t\mid t-1}\) replaced by \( \mu _{t\mid t-1}^{s}.\) In the EGB2, we work directly with (18).

The pseudo-observations are now used to estimate the parameters in a BSM and the signal is estimated by smoothing. This new signal, denoted \(\mu _{t\mid T}^{s},\) is then used to construct new pseudo-observations as

with \(b_{t}\) replaced by

for the t-distribution and by

for the EGB2. The BSM may be re-estimated and the whole process iterated until convergence. If desired, \(\lambda \) and \(\sigma \) can be updated at each step using the sample variance of the \(u_{t}^{\prime }s\). The relationship between variance of the \(u_{t}^{\prime }s\) and \(\lambda \) for the t-distribution is given in Harvey (2013, p. 62). For the EGB2 (with \(\xi =\varsigma )\) it follows from Caivano and Harvey (2014) that \(\sigma _{u}^{2}=\sigma ^{2}h^{2}\xi ^{2}/(2\xi +1).\)

The seasonally adjusted observations are constructed as

where \(v_{t\mid T}=y_{t}-\mu _{t\mid T}^{s}.\) If it is felt that the outliers should be modified to make them less extreme, we could let \( y_{t}^{s}=\mu _{t\mid T}+u_{t\mid T}.\)

The above procedure could be implemented with TRAMO-SEATS rather than the unobserved components BSM.

7 Tourists in Spain

The logarithm of the number of tourists entering Spain from January 2000 to April 2014 (source: Frontur) is plotted in Fig. 5. Comparing a Gaussian unobserved components model with dummy variables with a DCS-t model provides a contrast between hard and soft trimming.

Fitting a BSM gives a Bowman-Shenton statistic of 19.29 for the residuals so normality is clearly rejected. (The 1 % critical value for a \(\chi _{2}^{2}\) is 9.21.) The automatic outlier detection option in STAMP finds five outliers, three of which are in 20012, along with a small structural break which, although not sudden, is attributed to April 2008. The corresponding (edited) output is shown below. Fitting a BSM with dummy variables included for the outliers and break gives the smoothed components shown in Fig. 5.

The ML estimates for the parameters of a DCS-t random walk model (the drift was not significant) with a seasonal component are as follows:

with initial values \(\widetilde{\mu _{0}}=15.1018(0.0174)\) and \(\widetilde{ \gamma _{0}}= [-0.5795(0.0270),-0.4761(0.0269)\), \( -0.1780(0.0264),0.1733(0.0244),0.1475(0.0291),0.2648(0.0261),0.5791(0.0259)\), 0.4566(0.0209), \( 0.3157(0.0327),0.1167(0.0304),-0.3862(0.0289),-0.4339]\), for the seasonal factors. The figures in parentheses are numerical standard errors and the initial value for the last seasonal factor is \(\widetilde{ \gamma }_{0,12}=0.018\) which is constructed by from the others and thus has no standard error.

The filtered DCS-t trend shown in Fig. 6 appears not to be affected by the outliers, which are downweighted, and the trend, shown in the two top panels, adjusts to the fall in 2008 as quickly as the Gaussian filter from the outlier-adjusted BSM which is fitted with a dummy for a break in 2008(4). The figure also shows the seasonal component and contrasts the prediction errors (irregular component) and scores; it is evident that extreme observations are downweighted by the score. The autocorrelation functions of irregular and score are shown in Fig. 7. The autocorrelations of the scores tend to be slightly bigger in absolute value, reflecting the fact that they are not weakened by outliers in the same way as the raw predictions errors.

Figure 8 shows the filtered trend together with the smoothed trends for the first three iterations, obtained as described in Sect. 6.3. The trend at the second iteration is almost indistinguishable from the one obtained at the third.

The above procedure was repeated, but using TRAMO-SEATS to obtain a smoothed estimate of the trend from the DCS pseudo-observations. The result is shown in Fig. 9, together with the smoothed trend obtained from the full (automatic) TRAMO-SEATS procedure As can be seen, the two trends are very close. Figure 10 shows the corresponding smoothed irregular components.

We also fitted an EGB2 model, but the fit was not as good as for the DCS-t model. Generally the results were similar except that the seasonal pattern for EGB2 was found to be deterministic. The ML estimates for the asymmetric model were:

The two shape parameters are very close and there is virtually no loss in imposing symmetry, that is \(\xi =\varsigma .\)

8 Conclusions

This article has shown how DCS models with changing location and/or scale can be successfully extended to cover EGB2 conditional distributions. Most of the theoretical results on the properties of DCS-t models, including the asymptotic distribution of ML estimators, carry over to EGB2 models. However, whereas the t-distribution has fat-tails, and hence subjects extreme observations to a form of soft trimming, the EGB2 distribution has light tails ( but excess kurtosis) and hence gives a gentle form of Winsorizing. The examples show that the EGB2 distribution can give a better fit to some macroeconomic series.

The way in which DCS models respond to breaks was examined and it was shown that, contrary to what might be expected, they adjust almost as rapidly as Gaussian models.

A seasonal adjustment procedure may be carried out with DCS models that include both trend and seasonal components. Having fitted the DCS model, the scores are used to adjust the data before smoothing using a standard Gaussian model. Two or three iterations seem to be sufficient. The method was illustrated with data on tourists in Spain. There is a case for a structural break in April, 2008, but the DCS model quickly adjusts to it and indeed it could be reasonably argued that it is better to let the change take place over several months rather than assigning it to just one. In summary, our new DCS procedure, like TRAMO-SEATS, provides a practical approach to seasonal adjustment in the presence of outliers.

Notes

More generally regularly varying is \(\lim _{y\rightarrow \infty }(L(ky)/L(y))=k^{\beta };\) see Embrechts, Kluppelberg and Mikosch (1997, p. 37, 564). Fat-tailed distributions are regularly varying with \(\eta =-\beta >0.\)

In both cases a (robust) estimate of scale needs to be pre-computed and the process of computing M-estimates is then often iterated to convergence.

The standard deviation is \(\sqrt{\nu /(\nu -2)}\) times the scale.

Muler, Peña and Yohai (2009, p. 817) note two shortcomings of the estimates obtained in this way. They write: ‘First, these estimates are asymptotically biased. Second, there is not an asymptotic theory for these estimators, and therefore inference procedures like tests or confidence regions are not available.’ They then suggest a different approach and show that it allows an asymptotic theory to be developed.

References

Caivano M, Harvey AC (2014) Time series models with an EGB2 conditional distribution. J Time Ser Anal 34:558–571

Creal D, Koopman SJ, Lucas A (2013) Generalized autoregressive score models with applications. J Appl Econ 28:777–795

Creal D, Koopman SJ, Lucas A (2011) A dynamic multivariate heavy-tailed model for time-varying volatilities and correlations. J Bus Econ Stat 29:552–563

Durbin J, Koopman SJ (2012) Time series analysis by state space methods, 2nd edn. Oxford Statistical Science Series, Oxford

Embrechts P, Kluppelberg C, Mikosch T (1997) Modelling extremal events. Springer, Berlin

Fernandez C, Steel MFJ (1998) On Bayesian modeling of fat tails and skewness. J Am Stat Assoc 99:359–371

Harvey AC (1989) Forecasting, structural time series models and the kalman filter. Cambridge University Press, Cambridge

Harvey AC (2013) Dynamic models for volatility and heavy tails., Econometric Society MonographCambridge University Press, Cambridge, New York

Harvey AC, Luati A (2014) Filtering with heavy tails. J Am Stat Assoc 109:1112–1122

Koopman SJ, Harvey AC, Doornik JA, Shephard N (2009) STAMP 8.2 structural time series analysis modeller and predictor. Timberlake Consultants Ltd, London

Maravall A (1985) On structural time series models and the characterization of components. J Bus Econ Stat 3:350–355

Maronna R, Martin D, Yohai V (2006) Robust statistics: theory and methods. Wiley, Chichester

McDonald JB, Newey WK (1988) Partially adaptive estimation of regression models via the generalized t distribution. Econ Theory 4:428–457

Muler N, Pena D, Yohai VJ (2009) Robust estimation for ARMA models. Ann Stat 37:816–840

Zhu D, Galbraith JW (2011) Modelling and forecasting expected shortfall with the generalised asymmetric student-t and asymmetric exponential power distributions. Journal of Empir Financ 18:765–778

Acknowledgments

We are grateful to the Gabriele Fiorentini and a referee for helpful comments on the first draft.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Caivano, M., Harvey, A. & Luati, A. Robust time series models with trend and seasonal components. SERIEs 7, 99–120 (2016). https://doi.org/10.1007/s13209-015-0134-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13209-015-0134-1