Abstract

This paper codifies in a systematic and transparent way a historical chronology of business cycle turning points for Spain reaching back to 1850 at annual frequency, and 1939 at monthly frequency. Such an exercise would be incomplete without assessing the new chronology itself and against others—this we do with modern statistical tools of signal detection theory. We also use these tools to determine which of several existing economic activity indexes provide a better signal on the underlying state of the economy. We conclude by evaluating candidate leading indicators and hence construct recession probability forecasts up to 12 months in the future.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Late in the third quarter of 2007, as the fuse of the Global Financial Recession was being lit across the globe, 20.5 million Spaniards held a job.Footnote 1 4 years later, that number stood at 18.2 million—a loss of over 235,000 jobs at a time when the working age population grew by about 800,000 individuals. Measured by the peak to trough decline in GDP—a 5 % loss—one would have to reach back to the Great Depression (excluding the Spanish Civil War) to find a steeper decline in output. Moreover, employment prospects remain dim in the waning hours of 2011 for many that joined the ranks of the unemployed back in 2007. Given this environment, dating turning points in economic activity may seem the epitome of the academic exercise. Yet the causes, consequences and solutions to the current predicament cannot find their mooring without an accurate chronology of the Spanish business cycle.

Not surprisingly, the preoccupation with business cycles saw its origin in the study of crises. Whereas early economic historians found the roots of economic crises in “war or the fiscal embarrassments of governments,”Footnote 2 by the early twentieth century it became clear that economies experienced contractions in economic activity whose origin could not be easily determined.

As economies became less dependent on agriculture, more industrialized, more globalized and therefore more financialized, the vagaries of the weather were soon replaced by the vagaries of the whim. Asset price bubbles and financial crises littered the mid-nineteenth and early twentieth centuries (see Schularick and Taylor, 2012). The period from 1870 to 1929 saw no less than four global financial panics, each engulfing a large portion of the industrialized world—and by most accounts upwards of 50 % of global GDP at the time (see Jordà et al. 2011).

It was against this backdrop that the National Bureau of Economic Research (NBER) was created in 1920. The NBER now views as its core mission “the aggregate economy, examining in detail the business cycle and long-term economic growth.”Footnote 3 Early exponents of this mission can be found in “Simon Kuznets’ pioneering work on national income accounting, Wesley Mitchell’s influential study of the business cycle, and Milton Friedman’s research on the demand for money and the determinants of consumer spending [\(\ldots \)]”Footnote 4. In fact, it is the work of Wesley C. Mitchell and Arthur F. Burns (1946) which laid the foundations for the study of the business cycle at the NBER. And since 1978 a standing Business Cycle Dating Committee (BCDC) was formed to become the arbiter of the American business cycle, a chronology that now reaches back to 1854. Slowly, other countries have been creating similar committees, such as the Euro Area Business Cycle Dating Committee of the Center for Economic Policy Research, founded in 2002. But to our knowledge, no such independent arrangement has been created in Spain.

A chronology of the Spanish business cycle is not only a necessity for the modern study of the origins of macroeconomic fluctuations and the design of optimal policy responses, it is a necessity that as of September 7, 2011 would appear to be a matter of constitutional law. The constitutional reform of article 135 passed by parliament that day states that: “The limits of the structural deficit and public debt volume may be exceeded only in case of natural disasters, economic recession or extraordinary emergency situations that are either beyond the control of the State or significantly impair the financial situation or the economic or social sustainability of the State, as appreciated by an absolute majority of the members of the Congress of Deputies” (emphasis added). It would appear that the whimsy of the business cycle is at the purview of the legislature rather than the economic brain trust. If nothing else, this observation serves to cement the importance that an independent committee, whose job is to determine turning points in economic activity, can play in the economic and political life of a country.

But what is a recession? The BCDC offers a clear yet less than operational definition:Footnote 5

A recession is a significant decline in economic activity spread across the economy, lasting more than a few months, normally visible in production, employment, real income, and other indicators. Determination of the December 2007 Peak in Economic Activity, December 2008. Business Cycle Dating Committee of the National Bureau of Economic Research.

And most institutions in the business of keeping a chronology of economic cyclical activity use a similarly intuitive yet entirely mathematically imprecise definition of recession. How then would one determine whether or not a business cycle dating committee (or a legislature) is appropriately sorting the historical record into periods of expansion and periods of recession? After all, the true state of the economy (expansion or recession) is inherently unobservable—an infinite sample of data can only improve the precision of the estimated probabilities associated to each state, but it does not reveal the states themselves.

Our quest to formalize a chronology of the Spanish business cycle begins with a brief description of the statistical methods that have been used in the literature to achieve a classification of turning points. We begin with the early methods that Gerhard Bry and Charlotte Boschan introduced in 1971 at the NBER. The Bry and Boschan (1971) algorithm comes closest to translating the NBER’s definition into practice: remove seasonals from the data in levels (there is no need to detrend), smooth the data lightly to remove aberrations, then identify local minima and maxima in the series. The local minima and maxima are constrained to ensure that cycles have a certain duration, that they alternate, and that complete cycles last at least 15 months. A local minimum is a trough and the following local maximum a peak, so that the period between trough and peak is an expansion, and from peak to trough a recession. The Bry and Boschan (1971) algorithm was applied to quarterly data by Harding and Pagan (2002a, b), Kose et al. (2003, 2008a, b), among others. As arbitrary as the Bry and Boschan (1971) algorithm may seem, it is simple to implement, reproducible, and perhaps more critically, it does not require that the data be detrended.

A more structural view of how fluctuations around trend-growth are determined is to suppose that the data are generated by a mixture process. In the econometrics literature, the pioneering work of Hamilton (1989) was the first to characterize the stochastic process of economic fluctuations as a mixture. The idea is to conceive of the data as being generated by two distributions (one for each state, expansion or recession) and to characterize the transition between states as a hidden-Markov process. In the statistics literature, the problem of identifying the underlying state of the economy closely resembles pattern recognition problems in computational learning, or more briefly decoding.

Decoding is most often referred to in information theory as an algorithm for recovering a sequence of code words or messages from a given sequence of noisy output signals (Geman and Kochanek 2001). In fact, almost every cell-phone on earth uses a version of the Viterbi algorithm (Viterbi 1967), itself based on filtering a hidden-Markov process. More recently, an application of these principles with non-parametric computational techniques was introduced by Hsieh et al. (2006) in what they call the hierarchical factor segmentation (HFS) algorithm. An application of HFS to economic data is found in Hsieh et al. (2010a). The basic principle of the HFS algorithm is to use the recurrence time distribution of certain events (say, record each time output grows below a given threshold) to come up with an optimal non-parametric mixture using the maximum entropy principle of Jaynes (1957a, b). Interestingly, the idea of using recurrence times dates back to Poincaré (1890).

Each method can be applied to different series, resulting in several different recession chronologies, one for each variable. Alternatively, one could aggregate the data first, perhaps with a factor model, and then use the aggregate series to date the business cycle. The combine-then-date approach appears to be the most commonly used at present (a good example is Stock and Watson 2010), perhaps reflecting the popularity that factor models currently enjoy in other areas of economics. Moreover, a single indicator of economic activity has the advantage of being a succinct tool of communication. From that perspective, our investigation will take us to consider a variety of such indicators that have been proposed to characterize business conditions in Spain. Among these, we will investigate the OECD’s composite leading indicator (CLI) index,Footnote 6 the index of economic activity constructed by the Spanish think tank FEDEA,Footnote 7 and two recent more sophisticated indexes, the MICA-BBVA indexFootnote 8 of Camacho and Doménech (2011), and Spain-STINGFootnote 9 by Camacho and Quirós (2011).

Yet as we shall see, variables do not always fluctuate synchronously—consider the behavior of employment versus output across recent business cycles—an observation that favors the date-then-combine approach if interest is tilted toward constructing a single series of turning points. Moreover, the variables in our data set are observed over different spans and at different frequencies, features that complicate the factor model approach. Instead, a simple method of date-combination based on the network connectivity properties of each chronology (see, e.g., Watts and Strogatz 1998), turns out to provide insight into the determinants of economic fluctuations and is a straightforward method to generate a single chronology of turning points.

It is not enough to come up with a chronology of turning points, one must also formally assess the quality of any given chronology. A scientific defense of the quality of such a chronology requires formal statistical assessment and to this end we reach back to 1884 and Charles S. Peirce’s “Numerical Measure of the Success of Predictions,” the direct precursor to the Youden index (Youden 1950) for rating medical diagnostic tests, and the receiver operating characteristics (ROC) curve by Peterson and Birdsall (1953) in the field of radar detection theory. Today, the ROC curve is a standard statistical tool in the assessment of medical diagnostic procedures (going back to Lusted 1960), but it is also used routinely in atmospheric sciences (see Mason 1982) and machine learning (Spackman 1989). In economics, early uses appear for the problem of credit scoring, but more recently for the evaluation of zero-cost investments, such as the carry trade (see Jordà and Taylor 2009; Berge et al. 2011). Jordà and Taylor (2011) provide perhaps the most detailed overview of this literature and emphasize the correct classification frontier, a relative of the ROC curve, as the more appropriate tool in economics. Applications of these techniques to the classification of economic data into expansions and recessions in the US is done in Berge and Jordà (2011).

Our pursuits end by gazing into the future: What can we say about the problem of predicting future turning points? In another departure from traditional econometric practice, the problem of choosing good predictors for classification purposes does not require that the predictors be accurate in the usual root mean squared error sense. Moreover, we will argue that, unlike conventional time series modelling, it is best to tailor the set of predictors to the forecast horizon under consideration. In our experience, we have found that variables can be good classifiers in the short-run but poor classifiers in the long-run and vice versa. If, as is common practice, one fits a model based on short-run prediction and then iterates forward to longer horizons, the model will tend to put too much weight on the short-run classifiers and generate worse predictions than if a different model is chosen for each horizon—a practice commonly referred to as direct forecasting. Seen through this lens, the outlook for the Spanish economy remains grim.

2 Dating turning points

The BCDC’s September 10, 2010 press release pronounced the trough of US economic activity to have occurred June 2009.Footnote 10 In that release, the committee made available the data and figures used to make that determination, thus offering a more intimate glimpse at how decisions on turning points are made. The Bry and Boschan (1971) algorithm is perhaps the most direct expression of this process. The algorithm attempts to identify peaks and troughs in the level of a business cycle indicator. We explain the details of how this is done below taking note of the data that the BCDC analyzes in order to replicate a similar analysis with Spanish data. The results of this analysis form the basis of our proposed chronology of the Spanish business cycle.

If instead one focuses on the rates of growth in economic activity, so that the data can be reasonably thought of as being stationary, an alternative way to conceive of cyclical phenomena is to speculate that the data are generated by a mixture process whose alternating pattern is driven by a hidden-Markov process. Thinking of the data generation process (DGP) in this manner calls for a filtering method. The Hamilton (1989) filter is the most commonly used in economics, which we briefly describe below. If one prefers to be less specific about the stochastic processes describing the evolution of the data in each regime, there exist a number of non-parametric filtering algorithms within the statistics literature. One that has been applied to the problem of classifying business cycles is the hierarchical factor segmentation (HFS) algorithm, which is also described below. In our application to Spanish data, these two hidden Markov models are estimated on real GDP growth data to serve as a counterpoint to the cyclical turning points we identify with the Bry and Boschan (1971) approach.

However, the application of these methods to Spanish data leave a jumble of dates and discrepancies across series to contend with. This we do using network connectivity measures. The result is a unique candidate chronology of Spanish recessions that at least forms the basis for a more informed conversation about the Spanish business cycle. In the next section we will examine different tools that can be used to evaluate this chronology against other available chronologies (such as those produced by the OECD and the Economic Cycle Research Institute, or ECRI). Perhaps not surprisingly, we find strong empirical evidence in support of our chronology.

2.1 Bry and Boschan (1971)

The Bry and Boschan (1971) algorithm is easily expressed when the data are annual. In this case, the algorithm is simple: it looks for local maxima and minima in the raw data. As an example, Fig. 1 displays the time series of Spanish real GDP per capita from 1850 to 2008 (assembled by Prados de la Escosura 2003), with Bry–Boschan recessions shaded. Let \(y_{t}\) denote the logarithm of real GDP per capita. \(P_t\) is a binary indicator that takes the value of 1 if date \(t\) is a peak of economic activity, 0 otherwise, and \(T_{t}\) is a binary indicator that equals 1 if \(t\) is a trough of economic activity, 0 otherwise. Peak and trough dates are calculated as follows:

Looking at Fig. 1, recessions arrive more frequently in the early part of the sample, likely reflecting among other things, the preponderance of an agricultural sector that is much more sensitive to fluctuations in weather patterns. The average growth rate of per capita GDP during the period prior to the Civil War is about 1.2 %. The destruction of economic activity during the Civil War is massive, with a loss of per capita output near 35 %; recovering to trend growth required almost the entire duration of Franco’s dictatorship. Since then, the rate of per capita growth has stabilized around a 2 % rate, which is largely comparable to other industrialized economies. Table 1 provides the list of peak and trough dates that we calculate with expression (1), which we refer to with the acronym BBY.

To motivate the filtering methods that we discuss below, it is useful to calculate the empirical mixture distribution of the annual growth rate in real GDP per capita, using the BBY algorithm to estimate the states. This is displayed in Fig. 2. The kernel density estimates for the recession and expansion distributions overlap roughly over the interval \([-5~\%, 5~\%]\). Clearly, the dating of business cycles cannot rely on a simple mechanical exercise of recording when output is below or above some threshold. Rather, cyclical activity refers to recurrent patterns of depressed and burgeoning periods of economic activity, each of which can countenance a degree of variation.

Kernel estimates of the empirical mixture distribution of real GDP per capita, 1850–2008. Kernel density estimates of the empirical distribution of per capita real GDP growth annualized in expansions (solid line) and in recessions (dashed line). Recession dates are based on the dates in Table 1 and displayed in Fig. 1

When the data are quarterly or monthly, the application of the Bry–Boschan algorithm requires a few additional steps.Footnote 11 First, the data are seasonally adjusted. Next, the data are smoothed using a moving average filter to remove sources of idiosyncratic variation that are unrelated to the cyclical behavior of the variable (the smoothing step is omitted when using quarterly data due to the coarse frequency of the data). In addition, two important ad-hoc rules are added to an expression like (1). A restriction on the minimum length of a recession—6 months or two quarters, depending on the frequency of the data—as well as a restriction on the minimum length of a complete recession-expansion cycle—15 months or four quarters—ensure that the business cycles occur at the appropriate frequency.

The application of the Bry and Boschan (1971) algorithm to quarterly data (with the gastronomical acronym BBQ as Harding and Pagan fittingly recognized) is presented in Fig. 3 and Table 2. Figure 3 contains two panels, the top panel displays the raw real GDP data available from the Spanish National Accounts, which comes organized into three overlapping windows depending on the base year used to calculate prices. The samples are 1970Q1–1998Q4, 1980Q1–2004Q4, and 1995Q1–2011Q2. The first two samples share two recessions in common and the timing is rather similar, usually within 2 quarters of each other. The second panel displays employment data (total employed from the household survey), which starts a little later, 1976Q3–2011Q2. At the start of the sample and up until the trough of 1985Q2, employment is steadily declining so it is difficult to date the beginning of that recession with employment data alone. However, the dates of the last two recessions overlap reasonably well with those identified with GDP, although employment appears to decline earlier than GDP and recover later. This is presented more clearly in Table 2. Moreover, the dates presented in Table 2 relate well to the dates we identified using the historical data and presented in Table 1.

Recession dating using quarterly real GDP data and employment based on the Bry and Boschan (1971) algorithm. Panel 1 Real GDP windows based on original source using 1986, 1995, and 2000 as base years. Panel 2 Total employment

Finally, we show the results of the Bry and Boschan (1971) algorithm when applied to monthly data (BBM). In an effort to replicate the same series used by the BCDCFootnote 12 for Spain, we examine linearly interpolated quarterly data on real GDP and employment (used earlier for the BBQ analysis) and we add the number of registered unemployed, the industrial production index and an index of wage income.Footnote 13

In all, we have five series from which to construct a single chronology of peaks and troughs, which we do in the next section. Instead, Table 3 summarizes each individual BBM chronology. There are a number of adjustments that deserve comment. Figure 4 displays the registered unemployed series and serves to highlight these adjustments. The most obvious pattern in the figure is the run-up in the number of registered unemployed at the end of 1975 to about 1985. This is a striking change that likely reflects a number of institutional changes. Franco dies in November, 1975 and the referendum on the Spanish Constitution takes place in 1978—two of the early salvos in the creation of the modern Spanish state. In addition, there are the two oil crises of 1973–1974 and 1979. Separating that subsample from the rest, it is clear that the cyclical behavior of the data after 1985 is different than it was before 1975. Clearly, it would be very difficult to come up with a model that could describe the entire sample; here is where the Bry and Boschan (1971) algorithm can be quite useful. In addition, notice the adjustment in the series in November 1995 that has nothing to do with the business cycle. We adjusted the dates of peaks and troughs produced by the BBM algorithm accordingly to avoid detecting a spurious recession.

Number of registered unemployed and BBM recessions. Recessions are computed separately over three regions determined by breaks in 1975 and 1985. For the middle regime, we detrend the data linearly. Also notice that there is a break in the way registered unemployed are accounted for the observation dated November 1995

Before we discuss how all this information can be reconciled to generate a unique chronology, we discuss two alternative methods that we use as a robustness check of the results reported here.

2.2 Hamilton’s Markov switching model

The Markov switching model due to Hamilton (1989) is one of the most commonly applied methods for identifying business cycles in economic data. While a complete description of the model introduced in Hamilton (1989) is beyond the scope of this paper, the basic ideas can be expressed succinctly. Suppose \(y_{t}\) refers to the annualized growth rate of quarterly real GDP. The mean of \(y_t\) takes on one of two values depending on the state \(s \in \{1,2\},\) so that:

where \(\mu _s \in \{\mu _1, \mu _2\}\). When \(\rho =0,\) Eq. (2) is the expression of a Gaussian mixture with common variance but different means. The literature has extended this basic setup in many directions, for example, by allowing the dynamics and the variance to be state dependent, considering more than two states, etc.

The transition between states is assumed to be described by a first-order, two-state Markov process with transition probabilities

where \(i,j,k=1,2\) so that information prior to time \(t-2\) is not needed. The true state of the process, \(s_t\), is not directly observable but can be inferred from the sample. One way to estimate the model and make inferences about the unobserved state is to cast the model in state-space form (see e.g. Kim and Nelson 1999). The model can then be estimated by maximum likelihood and the transition probabilities can be calculated as a by-product of the estimation. Moreover, the specification of the filter permits a convenient way to obtain accurate estimates of these transition probabilities through a backwards smoothing step. The resulting probability estimates are the quantities that we will report in our examples.Footnote 14

As an illustration, consider the annualized growth rate of quarterly real GDP. Figure 5 compares the smoothed transition probabilities for the recession state against the recession regions identified with the BBM algorithm on the linearly interpolated GDP data. Table 4 collects the BBQ dates for GDP, those from the Hamilton (1989) filter, and those from the HFS algorithm, to be discussed briefly. Figure 5 and Table 4 show that the Hamilton (1989) filter selects fewer recessions, only three in the 1970Q1 to 2011Q2 period, relative to five selected by BBQ and eight selected by HFS. However, the dates of those three recessions largely coincide across methods. If anything, the evidence from the five monthly indicators discussed in the previous section suggest that the Hamilton (1989) dates may be too conservative—see Table 3. Nevertheless, it is reassuring that for the recessions detected, the dates largely line up with those from other methods.

The Hamilton (1989) smoothed transition probabilities against the BBM recessions. Based on yearly growth rate of real GDP: 1970Q1–2011Q2 then interpolated linearly to monthly

2.3 The hierarchical factor segmentation algorithm: HFS

Introduced by Hsieh et al. (2006), the hierarchical factor segmentation (HFS) algorithm is a non-parametric, pattern-recognition procedure that exploits the recurrence distribution of separating events, an idea that traces its origins perhaps as far back as Poincaré (1890). The reader is referred to the original source for a more in-depth description. HFS belongs to the larger class of hidden Markov models and in that sense, it can be considered as the non-parametric cousin to Hamilton (1989) model. Here we provide a succinct summary.

HFS is a procedure whose underlying premise is that the data has been generated by a mixture model—much like the specification of the Markov switching model presented above. However, rather than specifying the complete stochastic process of the data, one proceeds in a series of steps. First, determine a separating event—a feature of the data more likely to belong to one distribution than the other—then use that event to generate a preliminary partition of the data. In our application, this separating event is based on observations in the bottom 30th percentile of the empirical distribution of quarterly real GDP growth. This step may appear ad-hoc, but the success of HFS does not depend on a precise determination of this separating event (see Hsieh et al. 2010a).

Next, the data is further partitioned into clusters, that is, periods where the observed frequency of separating events is high and periods when it is low. Entropy arguments (Jaynes 1957a, b) suggest that the duration between events can be best characterized by a Geometric mixture (see Hsieh et al. 2010b, c) and the final partition into expansions and recessions is the result of maximizing the empirical likelihood of this mixture.

As a way to illustrate the procedure in practice, we used the same real GDP growth data that we used to estimate the Hamilton (1989) model described in the previous section. The dates of peaks and troughs are described in Table 4, which we discussed previously. Relative to BBQ and Hamilton (1989), HFS tends to identify more recessions: eight versus five and three respectively. However some of these additional recessions appear to find a counterpart in the monthly variables that we analyzed in Table 3.

2.4 Summary, network connectivity and a chronology

The previous sections have generated a multiplicity of business cycle chronologies, each derived from a particular method and using different underlying data. Along the way we have learned several lessons worth summarizing. A chronology of peaks and troughs facilitates the cataloguing of basic empirical facts and for this reason, we think the Bry and Boschan (1971) algorithm codifies that which is more likely to be of interest to researchers. Moreover the Bry and Boschan (1971) method is robust: it does not require detrending the data, the dates will not change as a sample expands over time, and it is easy to communicate. On the downside, the algorithm feels ad-hoc and it requires a few observations past the turning point to make a sound determination (undoubtedly, one of the reasons the NBER takes anywhere between 12 to 18 months). On the other hand, methods based on the hidden Markov approach, such as Hamilton (1989) and HFS, have more solid statistical justification and can generate more timely pronouncements (subject to inevitable revisions in the data), but have a less intuitive feel.

We conclude this section by discussing how we reconcile the patchwork of dates that we have uncovered using different economic indicators to generate a single chronology. This is the procedure used by the BCDC committee of the NBER. To formalize the process, here we present procedures based on the theory of networks (see Watts and Strogatz 1998). In particular, we use two popular measures of network connectivity: the incidence rate and the wiring ratio. Suppose that we generate a binary indicator of recession out of each of the five indicators that we considered above. The incidence rate computes the ratio of the number of indicators flashing recession relative to the total number of indicators at every point in the sample:

where the binary recession indicator \(r_{it} \in \{0,1\}\) for \(t=1,\ldots ,T;\)\(i=1,\ldots ,n\) and \(n\) is the total number of indicators.

The incidence rate is simple and intuitive. However, notice that the marginal weight that it attaches to an additional indicator flashing recession is constant. If one instead wants the marginal value of an additional signal to be increasing—that is, low when only a few indicators flash recession and high otherwise—the wiring ratio is an attractive alternative. The wiring ratio is based on the number of pair-wise active connections relative to the total number of possible pair-wise connections. It is calculated as:

The samples available for each of the five indicators we consider vary. Prior to 1970 we can only rely on data for the number of registered unemployed. As time goes by, we are able to incorporate information from the other indicators, and by 1985 we have information on all of them. Thus in practice we replace n with \(n_t\) in (3) and (4), so that the number of indicators available is not time-invariant.

Both of these network connectivity measures are displayed in Fig. 6 along with an interpolated measure of real GDP to provide some context. The figure also displays recessions calculated as periods when the incidence rate is above 50 %. The resulting dates are also listed in Table 5. The first column simply summarizes the yearly chronology of peaks and troughs using the historical data of Prados de la Escosura (2003) described earlier, where as the second column contains monthly dates of peaks and troughs based on increasingly more data and the 50 % incidence rule.

Incidence rate, wiring ratio and recessions. Spain January 1939 to October 2011. Recession shading based on a 0.5 threshold for the incidence rate (displayed). Real GDP per capita interpolated from yearly frequency observations (Prados de la Escosura 2003). Early recessions coincide with the wiring ratio because there is no other data available. More series become available starting in the early 1970’s. See text for details

We present this chronology because its construction is transparent and replicable, but not because we think it is the last word on the Spanish business cycle. There are certainly other variables one may have considered and at all times one must be aware of what economic history tells us to be able to refine the dates that we present. But we think this chronology is a reasonable starting point that we hope will be of service to other researchers.

3 Tools of evaluation

If the true state of the economy (expansion versus recession) is not directly observable, by what metric would one then judge one chronology as being superior to another? This would seem to be an impossible question to answer but statistical methods dating back to the nineteenth century provide ways to get a handle on this question. Before we get there, we find it useful to begin our discussion taking a chronology of business cycles as given, and then asking how good is a given variable in sorting history into expansions and recessions. Such a problem, it turns out, is not all that different from evaluating a medical diagnostic procedure, determining whether an e-mail is spam or not, or judging a tornado warning system, to mention a few applications. In all cases the object we wish to predict is a binary outcome and how we judge the quality of a variable as a classifier depends to a great extent, on the costs and benefits associated with each possible classifier, outcome pair.

Much of this discussion borrows from Berge and Jordà (2011) and Jordà and Taylor (2011) and finds its origin in the work of Peirce (1884) and the theory of signal detection in radars by Peterson and Birdsall (1953). Specifically, let \(y_{t}\) denote the classifier, an object that we require only to be ordinal. The classifier could be a number of things: an indicator variable (perhaps an observable variable of economic activity, an index, or a factor from a group of variables), a real-time probabilistic prediction, and so on. The distinction is unnecessary for the methods we describe. \(y_{t}\) together with a threshold \(c\) define a binary prediction recession with \(s_{t}=1\) whenever \(y_{t} \le c,\) and expansion (\(s_{t}=0\)) whenever \(y_{t}>c.\) Obviously the sign convention is for convenience. If we used the unemployment rate as our classifier \(y_{t},\) we could just as easily reformulate the problem in terms of the negative of the unemployment rate.

Associated with these variables, there are four possible classifier, outcome (\(\{y_{t},s_{t}\}\)) pairs: the true positive rate\(TP(c)=P[y_{t}\le c|s_{t}=1],\) the false positive rate\(FP(c)=P[y_{t}\le c|y_{t}=0],\) the true negative rate\(TN(c)=P[y_{t}>c|s_{t}=0]\) and the false negative rate\(FN(c)=P[y_{t}>c|s_{t}=1].\) It is straightforward to see that \(TP(c)+FN(c)=TN(c)+FP(c)=1\) with \(c\in (-\infty ,\infty )\). Clearly, as \(c \rightarrow \infty \), \(TP(c) \rightarrow 1\) but \(TN(c)\rightarrow 0\). The reverse is true when \(c\rightarrow -\infty \). To an economist, this trade-off is familiar since it has the same ring as the production possibilities frontier. For a given technology and a fixed amount of input, dedicating all the input to the production of one good restricts production of the other good to be zero and vice versa. And the better the technology the more output of either good or a combination can be produced. For this reason Jordà and Taylor (2011) label the curve representing all the pairs \(\{TP(c),TN(c)\}\) for \(c\in (-\infty ,\infty )\) as the correct classification frontier (CCF). The curve representing all the pairs \(\{TP(c),\)\(FP(c)\}\) is called the receiver operating characteristics curve or ROC curve, but this is just the mirror image of the CCF and it shares the same statistical properties.

A good classifier is one that has high values of \(TP(c)\) and \(TN(c)\) regardless of the choice of \(c\) and in the ideal case it turns out that \(TP(c)=TN(c)=1\) for any \(c.\) In that case, it is easy to see that the CCF is just the unit square in \(TP(c)\times TN(c)\) space, as shown in Fig. 7. At the other extreme, an uninformative classifier is one in which \(TP(c)=1-TN(c) \) for any \(c\) and the CCF is the diagonal bisecting the unit-square in \(TP(c) \times TN(c)\) space. Using the colorful language of the pioneering statistician Charles Sanders (Peirce 1884), the classifiers corresponding to these two extreme cases would be referred to as the “infallible witness” and the “utterly ignorant person” (Baker and Kramer 2007). In practice, the CCF is a curve that sits between these two extremes as depicted in Fig. 7.

Depending on the trade-offs associated with \(TP(c)\) and \(TN(c)\) (and implicitly \(FP(c)\) and \(FN(c)),\) Peirce (1884) tells us that the “utility of the method” can be maximized by choosing \(c\) such that:

where \(\pi \) is the unconditional probability \(P(s_{t}=1).\) A good rule of thumb is to assume that \(U_{pP}=U_{nN}=1\) and \(U_{nP}=U_{pN}=-1\) so that we are equally happy correctly identifying periods of expansion and recession, and equally unhappy when we make a mistake. Yet to a policymaker these trade-offs are unlikely to be symmetric, especially if the costs of intervening are low relative to the costs of misdiagnosing a recession as an expansion. Therefore, Fig. 7 plots a generic utility function that makes clear, just as in the production possibilities frontier textbook model, that the optimal choice of \(c\) is achieved at the point where the CCF and the utility function are tangent (assuming no corner solutions such as when we have a perfect classifier). This is sometimes called the optimal operating point.

In the canonical case with equal utility weights for hits and misses and \(\pi =0.5,\) the optimal operating point maximizes the distance between the average correct classification rates and 0.5, the average correct classification rate of an uninformative classifier. This is just another way of expressing the well-known Kolmogorov–Smirnov (KS) statistic (see Kolmogorov 1933; Smirnov 1939):

Intuitively, the KS statistic measures the distance between the empirical distribution of \(y_{t}\) when \(s_{t}=1,\) and the empirical distribution of \(y_{t}\) when \(s_{t}=0.\) An example of this situation is displayed in Fig. 2 presented earlier, which shows the kernel estimates for the empirical distribution of per capita real GDP in expansions and in recessions.

An evaluation strategy now begins to materialize. In the situation where the chronology of business cycles is a given and \(y_{t}\) is, say, a linear combination of leading indicators, the more clear the separation between the empirical distribution of \(y_{t}\) when \(s_{t}=1\) from when \(s_{t}=0\), the easier it will be to correctly sort the data into expansion and recession when making predictions. But this argument can be inverted to judge the chronology itself. If a candidate chronology (the sequence \(\{s_{t}\}_{t=1}^{T}\)) is “good,” then cyclical variables \(y_{t}\) should have empirical distributions that are easily differentiated in each state. Consider again Fig. 2. If the chronology of recessions and expansions carried no useful information, then the two conditional empirical distributions would lie on top of one another, so that any given observation of real GDP would be as likely to have been drawn in expansion as in recession.

However, there are several reasons why the KS statistic is somewhat unappealing. First, we could desire some statistical metric that summarizes the space of all possible trade-offs as a function of the threshold \(c\). In addition, we do not know the proper utility weights, and when looking at expansions and recessions, we know for a fact that \(\pi \) is not 1/2. In fact, in the Spanish business cycle—as we have dared to characterize it—if we reach back to 1939, periods of recession represent about 1/3 of the sample (closer to 1/4 more recently). Finally, the KS statistic has a non-standard asymptotic distribution.

The CCF presented earlier provides a simple solution to these shortcomings. In particular, the Area Under the CCF or AUC provides an alternative summary statistic.Footnote 15 In its simplest form, the AUC can be calculated non-parametrically since Green and Swets (1966) show that \(AUC=P[Z>X],\) where \(Z\) denotes the random variable associated with observations \(z_{t}\) drawn from \(y_{t}\) when \(s_{t}=0;\) and similarly, \(X\) denotes the random variable associated with observations \(x_{t}\) drawn from \(y_{t}\) when \(s_{t}=1.\) Hence, a simple non-parametric estimate is:

where \(i,j\) are a convenient way to break down the index \(t\) into those observations for which \(s_{t}=0,1\) respectively, \(I(A)\) is the indicator function that takes the value of 1 when the event \(A\) is true, 0 otherwise and \(T_{0}+T_{1}=T\) simply denote the total number of observations for which \(s_{t}=0,1\) respectively. There are more sophisticated non-parametric estimates of (6) using kernel weights and there are also parametric models (for a good compilation see Pepe 2003), but expression (6) has intuitive appeal. Under mild regularity conditions and based on empirical process theory (see Kosorok 2008), Hsieh and Turnbull (1996) show that

although in general (especially when \(y_{t}\) is itself the generated from an estimated model), it is recommended that one use the bootstrap. In what follows, we use the AUC as our preferred tool to evaluate our proposed business cycle chronology in a variety of ways.

3.1 Evaluation of alternative chronologies

This section compares our proposed business cycle chronology with a chronology proposed by the OECD,Footnote 16 and two chronologies provided by the Economic Cycle Research Institute (ECRI):Footnote 17 their business cycle chronology and their growth rate chronology. The latter may or may not result in recessions as their website explains, but we include it for completeness. Table 6 summarizes several experiments used to assess each chronology. First, we consider each individual indicator separately and ask how well each chronology classifies the data into the two empirical expansion/recession distributions. Next, we repeat the exercise, but by allowing up to 12 leads and lags of each series to search for that horizon that would maximize the AUC. We do this because some of the chronologies that we consider may be tailored to a single indicator rather than being a combination of dates as we have proposed. This can be particularly problematic since labor related indicators may lead into the recession and exit much later than production indicators. By searching for the optimal horizon, we handicap our own chronology and uncover some interesting timing issues associated with each indicator.

Broadly speaking, we find that ECRI’s business cycle chronology and ours deliver very similar results whereas ECRI’s growth rate and the OECD’s chronologies are clearly far inferior, in many cases, no better than the null of no-classification ability. Our proposed chronology tends to do better with labor related indicators (employment, registered unemployed and the wage income index) whereas ECRI’s does better with production indicators (GDP and IPI). Looking at the horizon at which our chronology maximizes the AUC, we note that leads between 3 to 8 months would generate slightly higher AUCs. At the front end, this implies delaying the start and/or end of the recessions slightly. However, one has to be careful because the samples available for each indicator are slightly different and in fact, as we will show, the synchronicity between each indicator and chronology at which the AUC is maximized is much better in recent times.

We compare for each indicator the AUCs of our chronology for Spain against those of the BCDC for the US to provide a benchmark. The results for the US can be found in Table 3 of Berge and Jordà (2011). The AUC for GDP in the US is 0.93 compared with 0.82 in Spain; for personal income in the US it is 0.85 compared with 0.94 for the wage index in Spain; industrial production has an AUC of 0.89 in the US versus 0.84 in Spain; and personal/household employment in the US has an AUC of 0.82/0.78 versus an AUC of 0.96 in Spain. Broadly speaking, both chronologies appear to have similar properties, an observation that is further supported by the evaluation of economic activity indexes in the next section.

Finally, it is useful to summarize some of the salient features of the business cycles identified for Spain against the NBER business cycles for the US. A summary of the raw peak and trough dates for each is provided in Table 7. Table 8 summarizes the salient features of the recessions using each method and compares these features to US recessions. Setting aside the ECRI-growth chronology for a moment (which ECRI itself warns is not meant to be a chronology of business cycles properly speaking), it is clear that Spain and the US have suffered a similar number of recession periods, although recessions in the US are shorter-lived. In the US, the average recession lasts about one year whereas in Spain recessions last over 2 years on average. The number of months in recession represents less than 20 % of the sample in the US but close to 30 % in Spain. Focusing on more recent samples, these differences appear to be fairly constant.

4 Evaluating economic activity indices

A historical record of turning points in economic activity serves primarily as a reference point for academic studies. As a result, due to data revisions and because it is important not to have to revise the dating, determining the precise date of a turning point requires some time after the event has passed. The NBER, for example, will usually delay by between 12 to 18 months any public announcement of business cycle turning points. Yet for policymakers and other economic agents, it is important to have a means to communicate effectively and in real time the current situation of the economy. In the US, several indices of aggregate economic activity intend to do just this. The Chicago Fed National Activity IndexFootnote 18 or CFNAI, and the Philadelphia Fed Business Conditions IndexFootnote 19 or ADS to use the more common acronym representing the last names of the authors (Aruoba et al. 2009), are two frequently updated indexes commonly cited in the press and in policy circles. In Spain, we consider four similar indexes: an index produced by FEDEA,Footnote 20 a composite index of leading indicators constructed by the OECD,Footnote 21 the MICA-BBVAFootnote 22 index of Camacho and Doménech (2011), and the Spain-STINGFootnote 23 of Camacho and Quirós (2011). In very broad terms, we can characterize these indexes as factors from a model that combines activity indicator variables, sometimes observed at different frequencies. The most commonly cited precursor for this type of index is Stock and Watson (1991).

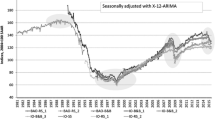

Figure 8 presents a time series plot of each of the four indexes for Spain. Each chart also displays the recession chronology we introduced in Table 5 as shaded regions. For each index, we calculate the optimal threshold that would maximize expression (5). These optimal values are virtually identical to the mean at which the indexes are centered—zero for FEDEA, MICA-BBVA and STING, and 100 for OECD CLI. In terms of how well the indexes correspond to our recession periods, it is easy to see that FEDEA, MICA-BBVA and STING conform rather well so that observations below the zero threshold indicate mostly periods of recession. The OECD index is somewhat more variable and appears to fluctuate by a larger amount between our preferred periods of recession.

Four Economic Activity Indexes. Recessions shaded using Table 5. We report the optimal thresholds for each index that would allow one to determine whether the economy is in expansion or recession using equal weights. See text for more details

The observations in Fig. 8 are confirmed by a more formal analysis presented in Table 9. As before, we consider how well our proposed chronology sorts the empirical distributions of expansion/recession for each of the indexes contemporaneously, as well as up to 12 leads and lags of the index. This will reveal whether the indexes work better as lagging or leading indicators. We also consider the sorting ability of chronologies produced by the OECD and ECRI. In principle, the former ought to match well with the OECD CLI. The exercise thus serves several purposes: it is another form of evaluating the chronology that we propose; it helps determine the lagging, coincident or leading properties of the indexes; and it serves to compare the performance across the indexes themselves.

The OECD and ECRI-growth chronologies perform relatively poorly at sorting the data—something we already suspected from the results in the previous section. Their AUC values are often not meaningfully different from the null of no classification ability; to paraphrase Charles S. Peirce, they are the “utterly ignorant” chronologies. As we knew from the analysis in the previous section, our chronology and ECRI’s are both similar. Our chronology attains the highest AUC values across all indexes both contemporaneously or at the optimal lead/lag, but the differences are minor. Within indexes, the suspicions we raised when discussing Fig. 8 are confirmed. Focusing on our proposed chronology, the STING index achieves the highest contemporaneous score with an AUC \(=\) 0.96, which is very close to the perfect classifier ideal of 1. This is closely followed by MICA-BBVA (AUC \(=\) 0.93), followed by FEDEA (AUC \(=\) 0.89), and far behind OECD (AUC \(=\) 0.69). In terms of the optimal lead/lag, STING comes closest to being a contemporaneous indicator with a one-month lag (the OECD CLI attains its maximum contemporaneously, but the AUC is much lower), followed by FEDEA (which attains its maximum with a one-quarter lag) and finishing with MICA-BBVA (with a 5-month lag at which point its AUC is virtually identical to STING’s). Except for the OECD, at their optimal the three remaining indexes all achieve AUCs above 0.9.

How does this performance compare with the performance of CFNAI and ADS for the US? (Berge and Jordà 2011) Table 5 reports the AUC for CFNAI to be 0.93 and for ADS to be 0.96 using the NBER’s business cycle dates, which are essentially the values we have found for MICA-BBVA and STING using our chronology for Spain. This is another dimension one can use to assess our chronology, and by and large the results are not materially different from what one finds in the US

5 Turning point prediction

A historical chronology of business cycle fluctuations between periods of expansion and recession is an important tool for research. We provide such a chronology in this paper but more helpfully, we present simple methods by which one can generate such a record in a replicable manner, and how one can evaluate whether the proposed chronology is any “good.” Determining turning points demands some patience to sort out data revisions and other delays—in real time, indexes on economic activity such as FEDEA, MICA-BBVA and STING offer a reliable indication about the current state of the economy. What about future turning points? This section investigates a collection of potential indicators of future economic activity and constructs turning point prediction tools. The predictions we obtain indicate that economic activity is likely to remain subdued at least until summer of next year (our forecast horizon ends in August 2012). Here is how we go about making this determination.

We begin by exploring a number of candidate variables listed in Table 10 and described in more detail in the appendix. The choice of variables does not follow an exhaustive search and we expect that others will come up with additional variables with useful predictive properties. But the variables listed in Table 10 will probably resonate with most, and offer a reasonable benchmark. Variables such as cement and steel production; new vehicle registrations; and air passenger and cargo transportation among others, are meant to provide leading indicators on economic output. Financial variables such as Madrid’s stock market index and the spread between the three-month and one-year interbank rates have often been found to be good predictors of future economic activity in the US —the S&P 500 index and the spread between the federal funds rate and the 10 year T-bond rate are two of the variables in the index of leading economic indicators produced by the Conference Board. Finally, more recently available survey data, such as consumer confidence, outlook on household finances and economic outlook expectations find its mirror in the consumer confidence survey maintained, among others, by the University of Michigan, which is also a leading indicator used by the Conference Board in the US.

In previous work (see Berge and Jordà 2011), we found that different variables have predictive power at different horizons. This observation suggests that for the purposes of generating forecasts at a variety of horizons, it is a bad idea to use a one-period ahead model and then iterate forward to the desired horizon. The reason is that the loadings on the different predictors should differ depending on the forecast horizon and iterating the one-period ahead model is likely to put too much weight on good short-term predictors. Moreover, because the important metric here is classification ability rather than model fit, issues of parameter estimation uncertainty play a more secondary role than in traditional forecasting environments, where the root mean square error metric and the usual trade-offs between bias and variance often favor more parsimonious approaches.

Table 10 provides a summary of each variable’s classification ability using the AUC and also reports the lead horizon over which the AUC is maximized. For example, the stock market index data has a maximum AUC \(=\) 0.65 seven months in the future, meaning that this variable should probably receive a relatively high weight when predicting turning points around the half-year mark. The survey data tend to have very high AUCs (all three surveys surpass 0.90), but we should point out that these data go back about 25 years only. By the same token, cement production and Madrid’s stock market index have more middling AUCs but go back over 50 years—a more turbulent period that includes the end of the dictatorship, a new Constitution, and a coup d’état attempt—and for which we have to rely on less information to come up with the chronology of turning points.

With these considerations in mind, we are interested in modeling the posterior probabilities \(P[s_{t+h}=s | x_{t}]\) for \(h=1,\ldots ,12\) and where \(s=0,1\) with 0 for expansion, 1 for recession and where \(x_{t}\) is a \(k\times 1\) vector of indicator variables. We then assume that the log-odds ratio of the expansion/recession probabilities at time \(h\) is an affine function of \(x_{t}\) so that

This is a popular model for classification in biostatistics and with a long tradition in economics; it is the logit model. In principle, one could use other classification models, for example linear discriminant analysis (LDA), a standard classification algorithm that combines a model such as (7) with a marginal model for \(x_{t}.\) However, Hastie et al. (2009) have argued that it is often preferable to stick to a model such as (7) rather than rely on LDA in practice. For these reasons, we feel the model in expression (7) is a reasonable choice.

Figure 9 displays the results of fitting (7) to three samples of data. We estimate a long-range sample that begins in January 1961, but that only includes data on cement production, new car registrations and Madrid’s stock market index. For brevity, we have omitted a graph of these predictions although Fig. 10 displays the AUC of the in-sample classification ability of such a modeling approach. We see that these long-range predictors carry a modest degree of predictive ability, again with the caveat that this sample covers a series of turbulent periods. The medium-range sample begins in January 1976 and adds data on imports and exports, new registered firms, steel production and new truck registrations. The top panel of Fig. 9 displays the one-step ahead probability predictions of recession against our recession dates. Because the data ends in August 2011, after that date we produce out of sample predictions on the odds of recession up to 12 months into the future. The middle panel of Fig. 10 displays the AUC of the in-sample classification ability for each horizon when using this set of predictors. Finally, the short-range sample begins in June 1986 and adds to the set of predictors consumer confidence survey data. The top panel presents the one-period ahead probability of recession forecasts and the out of sample forecasts starting August 2011 and ending August 2012. The right-hand panel of Fig. 10 displays the AUC of the in-sample classification ability of the model 1 to 12 periods into the future.

Predicting the probability of recession. Berge and Jordà (2011) versus OECD recessions. The figures reports in-sample one period ahead probability forecasts and out-of-sample forecasts up to August 2012. Recessions shaded are those reported in Table 5. Top panel uses all but survey and term structure data (for a longer sample); bottom panel uses all indicators (available over a shorter sample)

Classification ability of the predictive models. Berge and Jordà (2011) versus OECD recessions. Areas under the correct classification curve (AUC) per forecast horizon (from 1 to 12 months), depending on the length of the sample available to fit the predictive model. For the first sample (January 1961 to July 2011), the indicators considered are: car registrations, cement production, and Madrid’s stock market. For the second sample (January 1976 to July 2011), we add to the previous indicators: imports and exports, new registered firms, steel production and truck registration. The last sample (June 1986 to July 2011) includes in addition: consumer confidence survey data, economic outlook survey data, and household outlook survey data

Estimates based on all the data (but for the short-range sample) have very good in-sample classification ability (the sample is too short for any serious out-of-sample evaluation) and even at the 12-month ahead mark, the AUC remains above 0.90. This is easy to see in the top panel of Fig. 9 as well, with well delineated probabilities that coincide well with our proposed chronology.

Few will be surprised by the predictions that we report: regardless of the sample chosen, the outlook of the Spanish economy going to August 2012 is dim. The medium-range model uses less data but contains more observations. As we can see in Fig. 10, the forecast is somewhat noisier. At forecast horizons of one month, the model produces an in-sample AUC near 0.90, which then tapers toward 0.75 at the 12-month mark. The top panel of Fig. 9 therefore displays a noisier predicted probability series but the forecasts beginning in August 2011 are all above 0.5 (they are in fact increasing over time). Focusing on the model that uses all indicators, we see in Fig. 10 that this model seems to produce a more accurate signal of the risks of recession at all horizons. Again, in Fig. 9 we see that this model also portends troubled times for the Spanish economy, as the out-of-sample forecasts from this model continue to hover near 100 %.

6 Conclusion

A major area of macroeconomic research investigates the alternating periods of expansion and contraction experienced by economies as they grow. Business cycle theory seeks to understand the causes, consequences and policy alternatives available to tame these economic fluctuations. One of the empirical foundations on which this research rests is a historical record of when the economy drifts from one state to another. This paper shows how to construct and assess such a record and applies the proposed methods to Spanish economic activity.

The most venerable of business cycle chronologies is surely to be found in the US, with the NBER as its custodian. One of the objectives of this paper was to systematize the manner in which the NBER’s BCDC determines turning points to generate a similar historical record for Spain. The overriding principles we sought were to strive for simplicity, transparency, reproducibility and formal assessment. We hope on that score to have provided the beginnings of a formal reconsideration of the Spanish business cycle chronology.

A historical record of expansion and recession periods has significant academic value. However, a policymaker’s actions are guided by the current and future state of the economy. We find that existing indexes of economic activity provide a clear picture in real time about that state, much like similar indexes available for the US speak about the American economic cycle.

Preemptive policymaking requires an accurate reading of the future. As Charles S. Peirce recognized back in 1884, the actions taken as a result of a forecast require that we rethink how probability forecasts are constructed and evaluated. The usual bias-variance trade-offs neatly encapsulated in the traditional mean-square error loss need to make way for methods that reorient some of the focus toward assessing classification ability. Using this point of view, we construct predictive models on the odds of recession that have good classification skill for predictions 1- to 12-months into the future.

To be sure, the last word on the past, present and future of the Spanish business cycle has not yet been written. We hope instead that our modest contribution serves to organize the conversation on how our chronology of turning points could be improved.

Notes

Source: Encuesta de Población Activa, Ocupados. Instituto Nacional de Estadística.

Wesley C. Mitchell (1927, p. 583).

From the NBER’s website on the history of the NBER available at: http://www.nber.org/info.html.

Ibid.

The OECD’s CLI index can be downloaded directly from the OECD’s website: http://www.oecd.org/std/cli.

FEDEA stands for Fundación de Estudios de Economía Aplicada, and their website is http://www.fedea.es.

MICA-BBVA stands for factor Model of economic and financial Indicators which is used to monitor Current development of Economic Activity by Banco Bilbao Vizcaya Argentaria (BBVA). We thank Máximo Camacho for making these data readily available to us.

STING stands for Short-Term Indicator of Growth. We thank Máximo Camacho and Gabriel Pérez Quirós for making the data readily available to us.

The BCDC looks at lots of data but in their website, special emphasis is made on the following variables: linearly interpolated from quarterly real Gross Domestic Product (GDP); linearly interpolated from quarterly real Gross Domestic Income (GDI); Industrial Production Index (IPI); real Personal Income less transfers (PI); payroll employment (PE); household employment (HE); real Manufacturing and Trade Sales (MTS).

The sources and transformations for all the data are provided in more detail in the appendix.

There are many sources of code available to estimate Markov switiching models, including code available from Hamilton’s own website at: http://weber.ucsd.edu/~jhamilto/. We used MATLAB code available from Perlin, M. (2010) MS Regress available at SSRN: http://ssrn.com/abstract=1714016.

For a summary of the voluminous literature on the AUC, see Pepe (2003).

FEDEA stands for fundación de estudios de economía aplicada. The index can be found at: http://www.crisis09.es/indice/.

MICA-BBVA stands for factor Model of economic and financial Indicators which is used to monitor the Current develpment of the economic Activity by Banco Bilbao Vizcaya Argentaria. We thank Máximo Camacho and Rafael Doménech for making the data available to us.

STING stands for short-term INdicator of euro area Growth. We thank Máximo Camacho and Gabriel Pérez Quirós for making the data available to us.

References

Aruoba B, Diebold FX, Scotti C (2009) Real-time measurement of business conditions. J Bus Econ Stat 27(4):417–427

Baker SG, Kramer BS (2007) Pierce, Youden, and Receiver Operating Characteristics Curves. Am Stat 61(4):343–346

Berge TJ, Jordà Ò (2011) Evaluating the classificatin of economic activity into recessions and expansions. Am Econ J Macroecon 3(2):246–277

Berge TJ, Jordà Ò, Taylor AM (2011) Currency carry trades. In: Clarida RH, Frankel JA, Giavazzi F (eds) International seminar on macroeconomics, 2010. The University of Chicago Press for the National Bureau of Economic Research, Chicago

Bry G, Boschan C (1971) Cyclical analysis of time series: selected procedures and computer programs. National Bureau of Economic Research, New York

Burns AF, Mitchell WC (1946) Measuring business cycles. National Bureau of Economic Research, New York

Camacho M, Doménech R (2011) MICA-BBVA: a factor model of economic and financial indicators for short-term GDP forecasting. J Spanish Econ Assoc Ser. doi:10.1007/s13209-011-0078-z

Camacho M, Quirós GP (2011) Spain-STING: spain short term indicator of growth. Manchester School 79:594–616

Geman S, Kochanek K (2001) Dynamic programming and the graphical representation of error-correcting codes. IEEE Trans Inf Theory 47(2):549–568

Green DM, Swets JA (1966) Signal detection theory and psychophysics. Peninsula Publishing, Los Altos

Hamilton JD (1989) A new approach to the economic analysis of nonstationary time series and the business cycle. Econometrica 57(2):357–384

Harding D, Pagan A (2002a) Dissecting the cycle: a methodological investigation. J Monetary Econ 49:365–381

Harding D, Pagan A (2002b) A comparison of two business cycle dating methods. J Econ Dyn Control 27:1681–1690

Hsieh F, Turnbull WB (1996) Nonparametric and semiparametric estimation of the receiver operating characteristics curve. Ann Stat 24:25–40

Hsieh F, Hwang C-R, Leee T-K, Lan Y-C, Horng S-B (2006) Exploring and reassembling patterns in female been weevil’s cognitive processing networks. J Theoret Biol 238(4):805–816

Hastie T, Tibshirani R, Friedman J (2009) The elements of statistical learning: data mining, inference and prediction. Springer, New York

Hsieh F, Chen S-C, Berge TJ, Jordà Ò (2010a) A chronology of international business cycles through non-parametric decoding. U.C. Davis, mimeo

Hsieh F, Chen S-C, Hwang CR (2010b) Non-parametric decoding on discrete time series and its applications in bioinformatics. Stat Biosci (forthcoming)

Hsieh F, Chen S-C, Hwang CR (2010c) Discovering stock dynamics through multidimensional volatility-phases. Quant Finance. doi:10.1080/14697681003743040

Jaynes ET (1957a) Information theory and statistical mechanics I. Phys Rev 106:620

Jaynes ET (1957b) Information theory and statistical mechanics II. Phys Rev 108:171

Jordà Ò, Taylor AM (2009) The carry trade and fundamentals: nothing to fear but FEER itself. NBER Working Papers no. 15518

Jordà Ò, Taylor AM (2011) Performance evaluation of zero net-investment strategies. NBER Working Papers no. 17150

Jordà Ò, Schularick M, Taylor AM (2011) Financial crises, credit booms and external imbalances: 140 years of lessons. IMF Econ Rev 59:340–378

Kim C-J, Nelson CR (1999) State-space models with regime switching: classical and Gibbs-sampling approaches with applications. MIT Press, Cambridge

King RG, Plosser CI (1994) Real business cycles and a test of the adelmans. J Monetary Econ 33(2):405–438

Kolmogorov AN (1933) Sulla Determinazione Empirica di una Legge di Distribuzione. Giornale dell’Istituto Italiano degli Attuari 4:83–91

Kose MA, Prasad ES, Terrones ME (2003) How does globalization affect the synchronization of business cycles? Am Econ Rev Pap Proc 93(2):57–62

Kose MA, Otrok C, Whiteman CH (2008a) Understanding the evolution of world business cycles. J Int Econ 75(1):110–130

Kose MA, Otrok C, Prasad ES (2008b) Global business cycles: convergence or decoupling? NBER Working Papers no. 14292

Kosorok MR (2008) Introduction to empirical processes and semiparametric inference. Springer, New York

Lusted LB (1960) Logical analysis in roentgen diagnosis: memorial fund lecture. Radiology 74(2):178–193

Mason I (1982) A model for assessment of weather forecasts. Aust Metereol Mag 30(4):291–303

Mitchell WC (1927) Business cycles: the problem and its setting. National Bureau of Economic Research, New York

Peirce CS (1884) The numerical measure of the success of predictions. Science 4:453–454

Pepe MS (2003) The statistical evaluation of medical tests for classification and prediction. Oxford University Press, Oxford

Peterson WW, Birdsall TG (1953) The theory of signal detectability: part I. The General Theory. Electronic Defense Group, Technical Report 13, June 1953. Available from EECS Systems Office, University of Michigan

Poincaré JH (1890) Sur les Equations aux Dérivé

Prados de la Escosura L (2003) El Progreso Econòmico de España (1850–2000). Fundaciòn BBVA, Bilbao

Schularick M, Taylor AM (2012) Credit booms gone bust: monetary policy, leverage cycles, and financial crises, 1870–2008. Am Econ Rev 102(2):1029–1061

Smirnov NV (1939) Estimate of deviation between empirical distribution functions in two independent samples. Bull Moscow Univ 2:3–16

Spackman KA (1989) Signal detection theory: valuable tools for evaluating inductive learning. In: Proceedings of the sixth international workshop on machine learning, Ithaca

Stock JH, Watson MW (1991) A probability model of the coincident economic indicators. In: Lahiri K, Moore G (eds) Leading economic indicators, new approaches and forecasting records. Cambridge University Press, Cambridge

Stock JH, Watson MW (2010) Estimating turning points using large data sets. NBER working paper 16532

Viterbi AJ (1967) Error bounds for convolutional codes and an asymptotically optimum decoding algorithm. IEEE Trans Inf Theory 13(2):260–269

Watts DJ, Strogatz SH (1998) Collective dynamics of ‘small-world’ networks. Nature 393(4):440–442

Youden WJ (1950) Index for rating diagnostic tests. Cancer 3:32–35

Author information

Authors and Affiliations

Corresponding author

Additional information

This paper was prepared for the Plenary Lecture of the XXXVI Symposium of the Spanish Economic Association held in Málaga, December 15–17, 2011. We thank Victor Aguirregabiria and Carlos Hervés Beloso for their kind invitation. Máximo Camacho Alonso and Gabriel Pérez Quirós were gracious enough to share their data with us. We are grateful to Jesús Fernández Villaverde and his collaborators at FEDEA for sharing their data with us. The views expressed herein are solely the responsibility of the authors and should not be interpreted as reflecting the views of the Federal Reserve Bank of Kansas City, San Francisco or the Board of Governors of the Federal Reserve System.

Data appendix

Data appendix

1.1 Yearly frequency

-

Real GDP per capita (Producto Interior Bruto per capita, precios constantes de 2000, en euros). Sample: 1850–2008. Source: Leandro (Prados de la Escosura 2003), see: http://e-archivo.uc3m.es/bitstream/10016/4518/1/wh0904.pdf.

1.2 Quarterly frequency

-

Real GDP (Producto Interior Bruto a precios constantes, 1986 pta, 1995 euro, 2000 euro). Samples: 1970Q1–1998Q4; 1980Q1–2004Q4; 1995Q1-2011Q2. Source: Contabilidad Nacional Trimestral de España. Instituto Nacional de Estadística: www.ine.es.

-

Real GDP yearly growth rate (Tasa de variación anual del Producto Interior Bruto, base 2000 en euros). Sample: 1970Q1–2011Q2. Source: Contabilidad Nacional Trimestral de España. Instituto Nacional de Estadística: www.ine.es.

-

Employment (Ocupados, Encuesta de Población Activa). Sample: 1976Q3–2011Q2. Source: Encuesta de Población Activa, Instituto Nacional de Estadística: www.ine.es.

1.3 Monthly frequency

1.3.1 Series used for turning point chronology

-

Real GDP linearly interpolated from quarterly to monthly.

-

Employment linearly interpolated from quarterly to monthly.

-

Industrial Production Index (Índice de Producción Industrial, base 2005). Sample: January 1975 to August 2011. Source: Instituto Nacional de Estadística: www.ine.es.

-

Registered Unemployed (Paro registrado, personas). Sample: September 1939 to September 2011. Source: Instituto de Empleo Servicio Público de Empleo Estatal (INEM), Instituto Nacional de Estadística: www.ine.es.

-

Real Wage Income Index (Indicador de Renta Salarial). Sample: January 1977–September 2011. Source: Ministerio de Economía y Hacienda: www.meh.es.

1.3.2 Indexes of economic activity

-

OECD Composite Leading Indicators. Sample: September 1963-August 2011. Source: www.oecd.org/std/cli. Component series: Production: future tendency manufacturing, % balance; Order books/demand: future tendency in manufacturing, % balance; Finished goods stocks: level manufacturing, % balance, inverted; Source: Ministerio de Industria, Comercio y Turismo. Nights in hotels (number). Source: Instituto Nacional de Estadística: www.ine.es. Yield over 2-year government bonds (% per annum) inverted. Source: Banco de España.

-

FEDEA. Sample: January 1984–October 2011. Source: www.crisis09.es/indice/calendario.html. Component series: beginning 1982, real GDP (PIB, Source: Contabilidad Nacional Trimestral de España. Instituto Nacional de Estadística: www.ine.es ), electricity consumption (Source: Red Eléctrica de España), social security afiliations (Source: Ministerio de Trabajo). Beginning 1987, add survey of consumer sentiment (Source: European Comission). Beginning 1989, add new car registrations (Source: Asociación Española de Fabricantes de Automoviles). Beginning 1993 add industrial production index (Instituto Nacional de Estadística). Beginning 1995 add retail sales (Source: Instituto Nacional de Estadística).

-

MICA-BBVA. Sample: January 1981–October 2011. Source: see (Camacho and Doménech 2011) for details.

-

Spain-STING. Sample: January 1984–October 2011. Source: see Camacho and Quirós (2011) for details.

1.3.3 Leading indicators

Rather than listing individual sources we note that these data can be downloaded from the Boletín Estadístico del Banco de España at: http://www.bde.es/webbde/es/estadis/infoest/bolest.html.

-

Electricity Production in Kw/h (millions). Sample: January 1977 to July 2011.

-

Cement Production in metric tons. Sample: January 1955 to September 2011.

-

Steel Production in metric tons. Sample: January 1968 to July 2011.

-

New Truck and Bus Registrations. Sample: January 1964 to September 2011.

-

New Car Registrations. Sample: January 1960 to September 2011.

-

Number of Hotel Nights. Sample: April 1965 to August 2011.

-

Number of Air Passengers and Metric Tons of Air Cargo. Sample: January 1965 to July 2011.

-

Consumer Confidence, Household Outlook and Economic Outlook Surveys. Sample: June 1986 to October 2011.

-

Exports and Imports. Sample: September 1971 to August 2011.

-

Madrid Stock Exchange. Sample: January 1950 to October 2011.

-

Interbank Rates. Sample: September 1979 to September 2011.

-

New Registered Firms. Sample: January 1967 to August 2011.

Rights and permissions

This article is published under license to BioMed Central Ltd. Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Berge, T.J., Jordà, Ò. A chronology of turning points in economic activity: Spain, 1850–2011. SERIEs 4, 1–34 (2013). https://doi.org/10.1007/s13209-012-0095-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13209-012-0095-6