Abstract

Characterizing kerogen type is essential for evaluating source rocks and the nature of their hydrocarbon yield. However, traditional laboratory geochemical assessments can be time-intensive and costly. This study bridges this gap by using machine learning techniques to estimate three key parameters from petrophysical logs of a well in the Perth Basin, Western Australia: the Oxygen Index (OI), the Hydrogen Index (HI), and kerogen type. The dataset combines petrophysical log data with geochemical data from 138 cutting samples of the Kockatea and Woodada formations. Six machine learning algorithms were applied to predict OI and HI. Their efficacy was assessed with five statistical parameters: the coefficient of determination (R²), average percentage relative error, average absolute percentage relative error, root mean square error, and standard deviation. The Support Vector Machine (SVM) method performed best, with an R² of 0.993 for OI and 0.989 for HI, which makes it the optimal tool among those tested for predicting these indices. Six classifiers were also employed to determine kerogen type, and their accuracy was tested with precision, recall, F1-score, and accuracy. The Gradient Boosting method performed best in kerogen-type classification, with an accuracy of 93.54%. We conclude that machine learning methods applied to petrophysical logs offer a powerful, efficient, and cost-effective alternative for determining OI, HI, and kerogen type. The novelty of this approach lies in its ability to predict these crucial parameters accurately from readily available well-log data, potentially transforming traditional geochemical analysis practices.

Introduction

The exploration and evaluation of petroleum resources in source rocks rely heavily on petroleum geochemistry, including key parameters such as kerogen type, hydrogen index (HI), and oxygen index (OI). These parameters are pivotal proxies for identifying the nature of the generated hydrocarbons and for understanding organic matter maturation. They therefore help determine hydrocarbon generation potential (Durand 1980; Breyer 2012; Dembicki 2016; Chen et al. 2017; Lee 2020). However, the complexity of extracting unconventional petroleum resources presents significant challenges, which underscores the need for more efficient and less error-prone evaluation methods.

Traditional techniques like Rock-Eval pyrolysis have long been established but suffer from several limitations. These include labor-intensive procedures, the risk of sample contamination, inaccuracies in depth determination, high costs, and time-consuming processes (Lafargue et al. 1998; Behar et al. 2001). As the demand for unconventional petroleum resources escalates, these methods face criticism and calls for improved alternatives.

Well-log-based approaches for estimating geochemical parameters have a long history (Schmoker 1979, 1981; Fertle & Rieke 1980; Meyer & Nederlof 1984; Schmoker & Hester 1983; Fertle 1988; Herron 1988; Carpentier et al. 1989; Passey et al. 1990). In recent years, machine learning techniques have emerged as potential problem solvers in petroleum geochemistry and related fields (Rui et al. 2019; Wang et al. 2019; Tariq et al. 2020; Khalil Khan et al. 2022). However, these solutions require more extensive validation and practical application, and this study aims to provide both.

This study focuses on three geochemical parameters: kerogen type, HI, and OI. It hypothesizes that these parameters can be estimated reliably through a novel application of machine learning techniques. The main objective is to establish a cost-effective and efficient methodology for evaluating source rocks using hybrid machine learning techniques, which addresses a pressing need in the field. The analysis concentrates on the Perth Basin and builds on a range of previous studies (Khoshnoodkia et al. 2011; Mahmoud et al. 2017; Alizadeh et al. 2018; Johnson et al. 2018; Rui et al. 2019; Shalaby et al. 2019; Lawal et al. 2019; Wang et al. 2019; Handhal et al. 2020; Tariq et al. 2020; Kang et al. 2021; Safaei-Farouji & Kadkhodaie 2022; Deaf et al. 2022; Nyakilla et al. 2022; Zhang et al. 2022; Khalil Khan et al. 2022; Maroufi & Zahmatkesh 2023).

While the forthcoming sections will explore the application of various machine learning techniques to predict OI and HI, such as Support Vector Machines (SVM), Group Method of Data Handling (GMDH), Multi-Layer Perceptron (MLP), Decision Tree (DT), Adaptive Neuro-Fuzzy Inference System (ANFIS), and Radial Basis Function (RBF), it is essential to acknowledge the limitations of the methodology. Some potential limitations include the availability and quality of well-log data, the representativeness of the selected dataset, and the generalization of the results to other geological settings. Additionally, the performance of machine learning models may be influenced by hyperparameter tuning and the choice of input features. It is vital to address these limitations to ensure the robustness and reliability of the study's findings.

Ultimately, the implications of this research extend beyond the academic sphere, because the study offers practical solutions for petroleum geochemistry. It provides an efficient and accurate methodology for assessing the production potential of Perth Basin source rocks. Its findings can streamline source rock evaluation and improve understanding of the hydrocarbon production potential of unconventional petroleum resources. By acknowledging and addressing its limitations, the study aims to strengthen confidence in the proposed methodology and its applicability in real-world scenarios.

Geological setting

The Perth Basin is a large, north- to northwest-trending sedimentary basin that extends for about 1,300 km along the western margin of the Australian continent. It formed during the separation of Australia and Greater India between the Early Permian and the Early Cretaceous. It comprises a substantial onshore component and extends offshore to the continent-ocean boundary, in water depths of approximately 4500 m (Rollet et al. 2013).

The Darling Fault defines the basin's eastern margin, and the Indian Ocean covers its offshore western part. The tectonic evolution of the basin was largely controlled by the Darling Fault (Owad-Jones & Ellis 2000).

Two main extensional tectonic stages are associated with the basin's origin and history. The first is a rifting phase linked to the basin's initial development in the late Permian. The second occurred between the Late Jurassic and Early Cretaceous and is associated with the breakup of the Australian plate from India. Most of the rocks in the basin are clastic and date from the Permian onward (Marshall et al. 1989).

The basin is divided into a complex graben system with several sub-basins by several normal faults with a north–south trend and younger northwest-southeast trending shift faults (Crostella & Backhouse 2000). The thicknesses of the same sedimentary unit are spatially highly variable, reflecting the relative differences in subsidence rates. The continental and marine sedimentary settings fluctuated throughout the basin's history due to this differential subsidence and related relative sea-level fluctuations (Delle Piane et al. 2013).

In this basin, sediments from the Late Permian through the Cretaceous have been deposited in various settings, from marine to terrestrial. Sandstone, siltstone, and mudstone are typically present, along with low quantities of coal, conglomerate, and carbonate (Playford et al. 1976; Crostella 1995).

The offshore northern Perth Basin consists of three main depocenters: the Abrolhos, Houtman, and Zeewyck sub-basins. The Abrolhos Sub-basin is an elongated, north–south-oriented depocenter that contains up to 6000 m of Cisuralian to Lower Cretaceous sedimentary rocks deposited during multiple rift events (Jones et al. 2011; Grosjean et al. 2017). Figure 1 shows the structural setting of the Perth Basin together with its faults and sub-basins.

Figure 1. Map of the structural elements of the Perth Basin showing major faults, depocenter age, and sub-basins (modified from Bradshaw et al. 2003)

The Early Triassic Kockatea Shale is considered the most important source and cap rock in the basin (Playford et al. 1976; Karimian Torghabeh et al. 2014). The Kockatea Shale was deposited across the northern Perth Basin. During its deposition, the Dandaragan Trough subsided actively and accumulated more than 1000 m of sediment (Iasky & Mory 1993). The formation comprises black shale, siltstone, limestone beds, and minor sandstone. In outcrop, it consists of thin red, purple, or brown ferruginous siltstone or fine-grained sandstone (Crostella 1995). Every well drilled in the offshore northern Perth Basin intersects the Lower to Middle Triassic Woodada Sequence, except for three wells in the Houtman Sub-basin. The Woodada Sequence is 45 to 183 m thick, except in Wittecarra 1, where it reaches 685 m (Jorgensen et al. 2011). The Woodada Formation consists of interbedded fine-grained sandstone and carbonaceous siltstone. On wireline logs, it has a transitional character between the coarser-grained Lesueur Sandstone above and the fine-grained Kockatea Shale below (Mory & Iasky 1996). Figure 2 shows the stratigraphic chart for the offshore northern Perth Basin.

Materials and methods

Data

One hundred thirty-eight cutting samples from the Kockatea and Woodada formations were collected to evaluate their geochemical properties. The samples were analyzed by Rock-Eval 6 pyrolysis following the workflows of Espitalie et al. (1977) and Lafargue et al. (1998). Standard test conditions were used, with a heating rate of 25 °C/min and final temperatures of 800 °C in the pyrolysis oven and 850 °C in the oxidation oven. Before analysis, iron filings from the drill bit and micas from lost-circulation material were removed from each sample. Each sample was then ground, pulverized, and weighed to 60–70 mg. Rock-Eval 6 pyrolysis is an effective method for assessing three crucial elements of a hydrocarbon source rock: the quantity, thermal maturity, and type of its organic matter. The main measured outputs are as follows:
S1, free hydrocarbons [mg HC/g rock];
S2, hydrocarbons generated by thermal cracking of kerogen [mg HC/g rock];
S3, CO2 released from organic matter during pyrolysis [mg CO2/g rock];
TOC, total organic carbon (wt.%);
Tmax, the temperature (°C) at which hydrocarbon generation during pyrolysis reaches its maximum rate (the top of the S2 peak).
Several parameters are calculated from these results, including PI (production index) = S1/(S1 + S2), HI (hydrogen index) = (S2/TOC) × 100 [mg HC/g TOC], and OI (oxygen index) = (S3/TOC) × 100 [mg CO2/g TOC].
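The derived Rock-Eval parameters are simple ratios of the measured outputs. The minimal Python sketch below computes HI, OI, and PI for one sample. The class and function names are hypothetical, and the sample values are made up rather than taken from this study's data.

```python
from dataclasses import dataclass


@dataclass
class RockEvalSample:
    """Rock-Eval 6 outputs for one cutting sample (hypothetical container)."""
    s1: float   # free hydrocarbons, mg HC/g rock
    s2: float   # hydrocarbons from thermal cracking of kerogen, mg HC/g rock
    s3: float   # CO2 from organic matter, mg CO2/g rock
    toc: float  # total organic carbon, wt.%


def hydrogen_index(s: RockEvalSample) -> float:
    """HI = (S2 / TOC) x 100, in mg HC/g TOC."""
    return s.s2 / s.toc * 100.0


def oxygen_index(s: RockEvalSample) -> float:
    """OI = (S3 / TOC) x 100, in mg CO2/g TOC."""
    return s.s3 / s.toc * 100.0


def production_index(s: RockEvalSample) -> float:
    """PI = S1 / (S1 + S2), dimensionless."""
    return s.s1 / (s.s1 + s.s2)


# Hypothetical sample values, not data from this study.
sample = RockEvalSample(s1=0.5, s2=4.5, s3=0.6, toc=1.5)
print(hydrogen_index(sample), oxygen_index(sample), production_index(sample))
```

These HI and OI values are the regression targets that the well-log models try to reproduce.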

Numerous researchers have investigated the relationships between geochemical parameters and well-logging tool responses. They include Schmoker (1979, 1981), Carpentier et al. (1989), Fertle and Rieke (1980), Herron (1988), Fertle (1988), Meyer and Nederlof (1984), Schmoker and Hester (1983), Passey et al. (1990), Bolandi et al. (2017), and Zhao et al. (2019). Therefore, the input parameters for estimating HI and OI for the selected rock samples were taken from well-log data. Four logs were used: sonic (DT), gamma-ray (GR), neutron (NPHI), and bulk density (RHOB). Table 1 and Fig. 3 present the statistical description and the complete dataset used to train the ML models for the Kockatea and Woodada formations.

Figure 4 shows the relationships between conventional well logs and HI and OI. The gamma-ray (GR) log measures the natural radioactivity of a formation. Organic matter (OM) is commonly associated with radioactive elements such as uranium, thorium, and potassium (uranium in particular), so GR readings tend to rise where OM is present. Consistent with this, OI decreases and HI increases as GR increases (Fig. 4a, e). Cross-plotting GR against HI (Fig. 4a) gives the highest coefficient of determination (R²) among the HI cross-plots, although the value itself (0.0481) is low.
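The R² values quoted for the single-log cross-plots measure how much of the variance in HI or OI a straight-line fit on one log explains. An R² of 0.0481 therefore means that GR alone accounts for under 5% of the HI variance, which motivates the multivariate ML models that follow. The minimal sketch below assumes an ordinary least-squares line with an intercept. The GR and HI values are hypothetical.

```python
from statistics import fmean


def crossplot_r2(x, y):
    """R^2 of a least-squares straight line fitted to a cross-plot of y against x."""
    mx, my = fmean(x), fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))  # unexplained
    ss_tot = sum((yi - my) ** 2 for yi in y)                                    # total
    return 1.0 - ss_res / ss_tot


# Hypothetical GR (API) and HI (mg HC/g TOC) values.
gr = [45.0, 60.0, 72.0, 88.0, 101.0, 120.0]
hi = [210.0, 180.0, 260.0, 150.0, 240.0, 230.0]
print(crossplot_r2(gr, hi))
```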

The sonic (DT) log measures the time an elastic wave takes to travel through the formation, from which the wave's velocity can be calculated. Porosity, lithology, pore fluids (water, oil, and gas), and kerogen content all affect the sonic log. Because kerogen slows the wave, an increase in OM content can cause DT values to increase (Kamali & Mirshady 2004).

The density (RHOB) log measures the bulk density of a formation, which depends on both its fluids and its matrix. Because OM has a low density (~1 g/cm³), source rocks typically have low bulk densities. Accordingly, greater density is associated with higher OI and lower HI. The OI-RHOB cross-plot yields an R² of 0.0184 (Fig. 4g).

The neutron log measures the concentration of hydrogen atoms in a formation [63]. In organic-rich intervals with high HI, neutron porosity readings rise. This happens because the formation's hydrogen content, and hence its apparent porosity, is strongly correlated with OM content. For HI, the NPHI cross-plot shows the weakest relationship, with the lowest R² (0.0246) (Fig. 4d). The opposite holds for OI: the OI-NPHI cross-plot (Fig. 4h) shows the strongest relationship (R² = 0.0645).

Machine learning methods

Machine learning (ML) techniques have been widely used in various scientific and engineering disciplines, including petroleum engineering, since the early 1990s. They are simple, flexible tools that allow accurate prediction and require little modeling work (Lary et al. 2016; Mohaghegh 2017; Al-Fatlawi 2018).

Despite the many studies based on soft computing techniques, kerogen-type prediction from conventional well-log data has yet to be investigated. This paper uses ten distinct ML techniques:
- group method of data handling (GMDH);
- decision tree (DT);
- random forest (RF);
- support vector machine (SVM);
- multilayer perceptron (MLP);
- radial basis function neural network (RBF);
- adaptive neuro-fuzzy inference system (ANFIS);
- extreme gradient boosting (XGBoost);
- light gradient boosting machine (LGBM);
- gradient boosting (GB).
To the authors' knowledge, this is the first attempt to predict kerogen type in this way. Figures 5 and 6 show these methods in schematic form.

Group method of data handling (GMDH)

The group method of data handling (GMDH) is a feed-forward neural network that can address complicated nonlinear problems (Ebtehaj et al. 2015). The network consists of self-organizing neurons. Each neuron in the next layer is created by connecting a distinct pair of neurons from the current layer through a quadratic polynomial (Nariman-Zadeh et al. 2005; Kalantary et al. 2009). The GMDH algorithm automatically determines the optimal model structure, the influence of the input variables, and the number of layers and neurons. Its primary goal is to minimize the squared difference between the actual and predicted outputs (Ebtehaj et al. 2015). Details of the GMDH model and its flowchart are presented in Table 6 and Fig. 7, respectively.
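The sketch below fits a single GMDH neuron by least squares, which is the core step of the algorithm. It assumes the usual six-coefficient quadratic form of the polynomial; Table 6 gives the study's actual configuration. It solves the normal equations in plain Python so that it stays dependency-free. In a full network, a neuron like this would be fitted for every pair of inputs, such as two of GR, DT, NPHI, and RHOB. The best neurons would then be kept according to a selection criterion and passed to the next layer. Those steps are not shown, and all function names are hypothetical.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [bi] for row, bi in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def quad_terms(xi, xj):
    """Terms of a0 + a1*xi + a2*xj + a3*xi*xj + a4*xi^2 + a5*xj^2."""
    return [1.0, xi, xj, xi * xj, xi * xi, xj * xj]


def fit_gmdh_neuron(xi_vals, xj_vals, targets):
    """Least-squares coefficients a0..a5 from the normal equations (F^T F) a = F^T y."""
    rows = [quad_terms(a, b) for a, b in zip(xi_vals, xj_vals)]
    k = len(rows[0])
    ftf = [[sum(r[p] * r[q] for r in rows) for q in range(k)] for p in range(k)]
    fty = [sum(r[p] * t for r, t in zip(rows, targets)) for p in range(k)]
    return solve_linear(ftf, fty)


def neuron_output(coef, xi, xj):
    """Output of a fitted neuron for one pair of inputs."""
    return sum(c * t for c, t in zip(coef, quad_terms(xi, xj)))
```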

Support vector machine (SVM)

The support vector machine (SVM) is a reliable ML technique that is useful in many scientific fields, including industrial and medical engineering (Wong & Hsu 2006; Adewumi et al. 2016). Unlike many neural networks, the SVM is based on structural risk minimization, which makes it comparatively less prone to over-fitting (Cortes & Vapnik 1995; Vapnik 1999). SVM performs both linear and nonlinear regression and classification by constructing hyperplanes that separate the data. It uses a kernel function to map the inputs into a higher-dimensional feature space. In that space the data classes can be clearly separated, which allows the SVM to find the hyperplane with the largest margin to the nearest data points (Scholkopf et al. 2002). Figure 8 shows a simple schematic of the SVM. Tables 7 and 8 list the optimized parameters of the SVM used for prediction and the optimal parameter values of the SVM classifier after hyperparameter optimization.
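The kernel function lets the SVM work in a higher-dimensional feature space without computing the mapping explicitly. The minimal sketch below checks this for a degree-2 polynomial kernel on 2-D inputs, where the explicit feature space has three dimensions. It also defines the Gaussian (RBF) kernel, a common SVM default. Which kernel this study used is not assumed here, and the function names and input values are hypothetical.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def poly2_kernel(x, z):
    """Homogeneous degree-2 polynomial kernel K(x, z) = (x . z)^2."""
    return dot(x, z) ** 2


def poly2_features(x):
    """Explicit 3-D feature map for 2-D inputs, with phi(x) . phi(z) = (x . z)^2."""
    x1, x2 = x
    return [x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2]


def rbf_kernel(x, z, gamma=0.5):
    """Gaussian (RBF) kernel; its implicit feature space is infinite-dimensional."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


x, z = [1.0, 2.0], [3.0, -1.0]
implicit = poly2_kernel(x, z)                         # computed in the 2-D input space
explicit = dot(poly2_features(x), poly2_features(z))  # computed in the 3-D feature space
print(implicit, explicit)
```

The two printed numbers agree up to rounding. This is why an SVM can separate data with hyperplanes in the feature space while only ever evaluating kernels on the original log inputs.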

Multilayer perceptron (MLP)

The MLP is one of the most widely used ML models for simulating geochemical characteristics of source rocks. An MLP consists of input, hidden, and output layers. The input layer is the first layer, and its number of neurons equals the number of input variables. The output layer is the last layer of the network and, in regression problems, typically has only one neuron (Mohaghegh 2000; Mohagheghian et al. 2015). The hidden layers lie between the input and output layers. The number of hidden layers and the number of neurons in each should be tuned to establish a robust link between the model's inputs and outputs. To obtain the value of a neuron in a hidden or output layer, each neuron value in the preceding layer is multiplied by a specific weight. The weighted values are summed and a bias term is added. The result is then passed through a nonlinear activation function (Mohaghegh 2000; Mohagheghian et al. 2015). Figure 9 shows the MLP flowchart. Tables 9 and 10 list the properties of the MLP used, which has two hidden layers, and the optimized parameters of the MLP classifier.
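The weighted sum, bias, and activation steps described above amount to the forward pass below. This is a minimal sketch with hypothetical weights for a 4-3-2-1 network: four scaled log inputs, two hidden layers, and one output. It assumes tanh hidden activations and a linear output, which is common for regression but is not taken from the study's tables. Training by back-propagation is not shown.

```python
import math


def dense(inputs, weights, biases, activation):
    """One layer: neuron j outputs activation(sum_k weights[j][k] * inputs[k] + biases[j])."""
    return [activation(sum(w * v for w, v in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def identity(v):
    return v


def mlp_forward(x, layers):
    """Propagate x through a list of (weights, biases, activation) layers."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x


# Hypothetical weights: scaled GR, DT, NPHI, RHOB -> one output (e.g., HI).
layers = [
    ([[0.2, -0.1, 0.4, 0.3], [-0.5, 0.2, 0.1, 0.0], [0.3, 0.3, -0.2, 0.1]],
     [0.0, 0.1, -0.1], math.tanh),
    ([[0.5, -0.4, 0.2], [0.1, 0.3, -0.6]], [0.0, 0.05], math.tanh),
    ([[1.2, -0.7]], [0.3], identity),
]
print(mlp_forward([0.5, -0.2, 0.8, 0.1], layers))
```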

Radial basis function neural network (RBF)

The RBF network is a feed-forward neural network used for classification and regression problems (Broomhead & Lowe 1988). It consists of three layers: input, hidden, and output (Hemmati-Sarapardeh et al. 2018). A single hidden layer connects the input and output layers (Zhao et al. 2015). The hidden layer maps the input into a space of higher dimension than the input space. Each hidden node is defined by a predetermined center and radius. Its activation is computed from the distance between the input vector and that neuron's center, transformed by a radial basis transfer function (the kernel function). The output layer then combines the kernel outputs linearly through specific weights. Table 11 and Fig. 10 present the properties and flowchart of the RBF network used.
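The three-layer computation described above can be sketched as the forward pass below. It assumes Gaussian basis functions. The centers, spreads, weights, and function names are hypothetical, and how they are fitted (for example, clustering for the centers and least squares for the output weights) is not shown.

```python
import math


def rbf_network(x, centers, spreads, weights, bias=0.0):
    """Forward pass of an RBF network with Gaussian hidden units and one linear output."""
    output = bias
    for c, s, w in zip(centers, spreads, weights):
        dist_sq = sum((xi - ci) ** 2 for xi, ci in zip(x, c))  # squared distance to the center
        phi = math.exp(-dist_sq / (2.0 * s ** 2))              # radial basis (kernel) function
        output += w * phi                                       # linear hidden-to-output weight
    return output


# Hypothetical network with two hidden neurons on two scaled log inputs.
centers = [[0.2, 0.4], [0.7, 0.9]]
spreads = [0.3, 0.5]
weights = [150.0, 320.0]
print(rbf_network([0.5, 0.6], centers, spreads, weights, bias=20.0))
```

An input that sits on a center activates that neuron fully, with a kernel value of 1. Inputs far from every center fall back to the bias.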

Adaptive neuro-fuzzy inference system (ANFIS)

The adaptive neuro-fuzzy inference system (ANFIS) is another rigorous data-driven approach that has been applied to numerous modeling problems. ANFIS was introduced by Jang et al. (1997) as a five-layer system that combines a fuzzy inference system with a neural network. ANFIS models are commonly trained with a hybrid learning algorithm that combines back-propagation with least-squares estimation (Afshar et al. 2014). Details of the ANFIS model and its flowchart are presented in Table 12 and Fig. 11, respectively.
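The five ANFIS layers can be traced in a small forward pass. The minimal sketch below assumes a two-input, first-order Sugeno system with Gaussian membership functions and one rule per pair of fuzzy sets, which is a common ANFIS configuration. The study's actual rule base is given in Table 12 and is not reproduced here. All parameter values are hypothetical, and hybrid training is not shown.

```python
import math


def gauss_mf(v, center, sigma):
    """Layer 1: Gaussian membership grade of v in a fuzzy set."""
    return math.exp(-((v - center) ** 2) / (2.0 * sigma ** 2))


def anfis_forward(x, y, premise, consequent):
    """Forward pass of a two-input, first-order Sugeno ANFIS.

    premise[i] = ((cA, sA), (cB, sB)) defines rule i: "if x is A_i and y is B_i".
    consequent[i] = (p, q, r) defines that rule's output f_i = p*x + q*y + r.
    """
    # Layers 1-2: membership grades, multiplied into each rule's firing strength.
    w = [gauss_mf(x, *a) * gauss_mf(y, *b) for a, b in premise]
    # Layer 3: normalized firing strengths.
    total = sum(w)
    w_bar = [wi / total for wi in w]
    # Layer 4: each rule's linear consequent, weighted by its normalized strength.
    weighted = [wb * (p * x + q * y + r) for wb, (p, q, r) in zip(w_bar, consequent)]
    # Layer 5: the overall output is the sum of the weighted consequents.
    return sum(weighted)


# Hypothetical two-rule system on two scaled log inputs.
premise = [((0.0, 0.5), (0.0, 0.5)), ((1.0, 0.5), (1.0, 0.5))]
consequent = [(0.2, 0.1, 0.0), (0.8, 0.5, 0.3)]
print(anfis_forward(0.4, 0.6, premise, consequent))
```

In hybrid learning, the premise parameters (centers and widths) are usually updated by back-propagation. The consequent parameters (p, q, r) enter the output linearly and can therefore be estimated by least squares.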

Decision tree (DT)

The decision tree is one of the most widely used supervised machine learning algorithms and can solve both classification and regression problems. Historically, the first DT algorithm was Automatic Interaction Detection (AID), introduced by Morgan and Sonquist (1963). A DT has a hierarchical structure built from fundamental components: the root, internal nodes, branches, and leaves. The structure of a DT depends strongly on the data used to build it, so even a small change in the data can substantially alter the optimal tree. Figure 12 shows the decision tree flowchart.
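A tree is grown by repeatedly choosing, at each node, the split that best separates the data. The minimal sketch below finds one regression split on a single feature by minimizing the summed squared error of the two child nodes. This is a common CART criterion, assumed here because the study's splitting criterion is not stated. Recursion, multiple features, and stopping rules are omitted, and the values are hypothetical. The threshold depends on every sample near the boundary, which illustrates why small changes in the data can change the tree.

```python
def sse(values):
    """Sum of squared deviations from the mean: the impurity of a regression node."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_split(x, y):
    """Find the threshold t on feature x whose split (x <= t vs x > t) minimizes total SSE.

    Returns (threshold, impurity); the threshold is None if no split beats the parent node.
    """
    pairs = sorted(zip(x, y))
    best_t, best_impurity = None, sse(y)
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate equal feature values
        left = [v for _, v in pairs[:i]]
        right = [v for _, v in pairs[i:]]
        impurity = sse(left) + sse(right)
        if impurity < best_impurity:
            best_t = (pairs[i - 1][0] + pairs[i][0]) / 2.0  # midpoint between neighbours
            best_impurity = impurity
    return best_t, best_impurity


# Hypothetical GR readings and HI values.
gr = [50.0, 55.0, 62.0, 95.0, 104.0, 118.0]
hi = [140.0, 150.0, 145.0, 310.0, 290.0, 330.0]
print(best_split(gr, hi))
```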

Random forest (RF)

The RF is a nonparametric prediction method that can be used for classification and regression (Breiman 2001). It has grown in prominence in many geospatial applications because it accepts several types of inputs (categorical or continuous) and can describe complicated relationships in the data. Random forest addresses the overfitting problem of classification and regression trees (CART). It creates subsets of the training data by sampling with replacement, an approach known as bootstrap aggregating or bagging (Breiman 1996), and grows a separate CART on each subset. For a given input x, each tree casts a vote (classification) or makes a prediction (regression). The forest then returns the majority vote for classification or the average prediction for regression. This voting scheme allows the forest to capture complicated relationships in the data that a single tree might miss. RF also performs well despite the noise and overfitting issues that affect a single decision tree. Additionally, RF is naturally suited to multi-class problems and can efficiently handle large datasets (Robnik-Šikonja 2004). The optimal parameter values for the RF classifier, as determined by hyperparameter optimization, are listed in Table 13.

Gradient boosting

Gradient boosting is a technique for building prediction models and is frequently used for regression and classification (Hastie et al. 2009; Piryonesi & El-Diraby 2020). It builds a prediction model from an ensemble of weak models, typically decision trees, which are added successively during learning. The first model estimates the target value, and the loss, i.e., the discrepancy between the predicted and actual values, is computed. A second model is then fitted to predict this loss. The procedure continues until a satisfactory result is obtained (Guelman 2012). The optimal parameter values for the gradient boosting classifier, as determined by hyperparameter optimization, are listed in Table 14.
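The fit-the-residual loop described above can be sketched for regression with squared loss. With squared loss, the negative gradient is simply the residual. The study applies gradient boosting to classification, which uses a log-loss instead, so this is an illustration of the principle rather than the study's classifier. One-split trees (stumps) on a single feature serve as the weak learners. The learning rate, number of rounds, and function names are hypothetical.

```python
def fit_stump(x, r):
    """Fit a one-split regression tree (stump) to targets r on one feature x."""
    pairs = sorted(zip(x, r))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold separates equal feature values
        left = [v for _, v in pairs[:i]]
        right = [v for _, v in pairs[i:]]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        err = sum((v - lm) ** 2 for v in left) + sum((v - rm) ** 2 for v in right)
        if best is None or err < best[0]:
            best = (err, (pairs[i - 1][0] + pairs[i][0]) / 2.0, lm, rm)
    if best is None:  # all x equal: fall back to a constant
        mean = sum(r) / len(r)
        return lambda v: mean
    _, t, lm, rm = best
    return lambda v: lm if v <= t else rm


def gradient_boost(x, y, n_rounds=50, learning_rate=0.1):
    """Squared-loss gradient boosting on one feature, using stumps as weak learners."""
    base = sum(y) / len(y)  # first model: a constant (the mean)
    stumps = []

    def predict(v):
        return base + learning_rate * sum(s(v) for s in stumps)

    for _ in range(n_rounds):
        # For squared loss, the negative gradient is the residual y - prediction.
        residuals = [yi - predict(xi) for xi, yi in zip(x, y)]
        stumps.append(fit_stump(x, residuals))
    return predict
```

Each round corrects only a fraction (the learning rate) of the remaining error. This shrinkage trades more rounds for smoother, less overfit models.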

Extreme gradient boosting (XGBoost)

Recent years have seen the XGBoost algorithm rise to prominence as a data classification and prediction technique. It has demonstrated strong performance in many ML tasks and innovative scientific endeavors (Chen & Guestrin 2016). With shrinkage and regularization approaches, XGBoost, based on the gradient tree-boosting algorithm (Hastie et al. 2009), can handle sparsity and prevent overfitting (Chen & Guestrin 2016).

The main goal of a boosting algorithm is to combine the outputs of weak learners sequentially to improve performance (Hastie et al. 2009). XGBoost uses gradient boosting to combine multiple classification and regression trees (CART). Its three essential components are as follows (Chen & Guestrin 2016):
- a regularized objective function for better generalization;
- gradient tree boosting for additive training;
- shrinkage and column subsampling to prevent overfitting.
Some of the key XGBoost classifier parameters are listed in Table 15.
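The regularized objective of Chen and Guestrin (2016) leads to closed-form expressions for a leaf's optimal weight, w* = -G/(H + λ), and for the gain of a candidate split. Here G and H are the sums of the first and second derivatives of the loss over the samples in a leaf, λ is the L2 penalty on leaf weights, and γ is the penalty per added leaf. The minimal sketch below evaluates these formulas for squared loss. It is not the XGBoost library API, and the function names and values are hypothetical.

```python
def leaf_weight(grad, hess, lam=1.0):
    """Optimal leaf weight w* = -G / (H + lambda)."""
    return -sum(grad) / (sum(hess) + lam)


def structure_score(grad, hess, lam=1.0):
    """Leaf quality term G^2 / (H + lambda); larger means a better leaf."""
    return sum(grad) ** 2 / (sum(hess) + lam)


def split_gain(g_left, h_left, g_right, h_right, lam=1.0, gamma=0.0):
    """Reduction in the regularized objective from splitting one leaf into two."""
    parent = structure_score(g_left + g_right, h_left + h_right, lam)
    children = structure_score(g_left, h_left, lam) + structure_score(g_right, h_right, lam)
    return 0.5 * (children - parent) - gamma


# Squared loss l = (y - y_hat)^2 / 2 gives g_i = y_hat_i - y_i and h_i = 1.
y = [1.0, 1.0, 5.0, 5.0]
y_hat = [0.0] * len(y)  # hypothetical current predictions
g = [p - t for p, t in zip(y_hat, y)]
h = [1.0] * len(y)
print(split_gain(g[:2], h[:2], g[2:], h[2:]), leaf_weight(g[:2], h[:2]), leaf_weight(g[2:], h[2:]))
```

With λ = 0, the leaf weight reduces to the mean residual of the leaf. A positive λ shrinks it toward zero, which is the regularization effect described above. A split is worth making only when its gain exceeds zero after subtracting γ.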

Light gradient boosting machine (LGBM)

The light gradient-boosting machine (LGBM) is a gradient-boosting decision tree technique used for data mining tasks such as classification, regression, and ranking. It combines the predictions of multiple decision trees into a final prediction that generalizes well. The fundamental principle of LGBM is to integrate several "weak" learners into one "strong" learner. Two factors motivate this principle. First, weak learners are easy to train. Second, combining many learners typically generalizes better than a single learner (Ke et al. 2017). The optimal parameter values for the LGBM classifier, as determined by hyperparameter optimization, are listed in Table 16.

Results

OI and HI estimation

This research implemented a comprehensive methodology to predict the geochemical properties of source rocks, explicitly focusing on the Oxygen Index (OI) and Hydrogen Index (HI). The employed models for this process were the Group Method of Data Handling (GMDH), Support Vector Machine (SVM), Decision Trees (DT), Radial Basis Function (RBF), Multilayer Perceptron (MLP), and Adaptive Neuro-Fuzzy Inference System (ANFIS).

The available data were divided into two subsets: 70% for training the models and the remaining 30% as a test set for model evaluation. This split was considered appropriate for the size of the dataset and is widely used in prior research.
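A random 70/30 split can be sketched as follows. The study does not state how samples were assigned to each subset, so the shuffling, the fixed seed, and the absence of stratification are assumptions. The function name is hypothetical.

```python
import random


def split_train_test(samples, train_fraction=0.7, seed=0):
    """Randomly split samples into training and test subsets (no stratification)."""
    idx = list(range(len(samples)))
    random.Random(seed).shuffle(idx)  # reproducible shuffle
    n_train = round(train_fraction * len(samples))
    train = [samples[i] for i in idx[:n_train]]
    test = [samples[i] for i in idx[n_train:]]
    return train, test


# 138 placeholder sample IDs standing in for the cutting samples.
train, test = split_train_test(list(range(138)))
print(len(train), len(test))
```

With 138 samples, this sketch assigns 97 samples to training and 41 to testing.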

A rigorous assessment of the six models was conducted using a range of statistical parameters to gauge their efficiency and accuracy. Five metrics were employed: the coefficient of determination (R²), average percent relative error (APRE), root mean square error (RMSE), average absolute percent relative error (AAPRE), and standard deviation (SD). The complete results of these measures are detailed in Tables 2 and 3.
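The five metrics listed above can be computed together as shown below. This is a minimal sketch that assumes the forms commonly used in comparable petroleum ML studies:
- APRE and AAPRE are the mean signed and absolute relative errors, in percent;
- SD is the sample standard deviation of the relative errors;
- RMSE is taken on the raw values;
- R² is defined as 1 - SS_res/SS_tot.
The study's own equations may differ in detail, particularly for SD. The function name and example values are hypothetical.

```python
import math


def regression_metrics(exp, pred):
    """APRE and AAPRE (%), RMSE, SD and R^2 between experimental and predicted values."""
    n = len(exp)
    rel = [(e - p) / e for e, p in zip(exp, pred)]  # relative errors; exp must be nonzero
    mean_exp = sum(exp) / n
    ss_res = sum((e - p) ** 2 for e, p in zip(exp, pred))
    ss_tot = sum((e - mean_exp) ** 2 for e in exp)
    return {
        "APRE": 100.0 * sum(rel) / n,
        "AAPRE": 100.0 * sum(abs(r) for r in rel) / n,
        "RMSE": math.sqrt(ss_res / n),
        "SD": math.sqrt(sum(r * r for r in rel) / (n - 1)),
        "R2": 1.0 - ss_res / ss_tot,
    }


# Hypothetical measured and predicted HI values (mg HC/g TOC).
print(regression_metrics([250.0, 310.0, 180.0, 420.0], [240.0, 325.0, 185.0, 410.0]))
```

APRE can be near zero even for a poor model, because over- and under-predictions cancel. For this reason it is read alongside AAPRE and RMSE.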

Figures 13 and 14 compare the models visually using these statistical parameters. For each model, the figures show APRE (subfigure a), AAPRE (subfigure b), RMSE (subfigure c), SD (subfigure d), and R² (subfigure e) for the prediction of HI and OI values, which gives a clear picture of each model's performance.

Moreover, the analysis was expanded by investigating the percentage error of each model's predictions across three unique datasets, each consisting of 46 samples. This percentage error offers a robust measure of the models' performance and supports the data in Figs. 13 and 14.

Figures 15 and 16 provide further insights through cross-plots, visually comparing HI and OI's predicted and actual values. These figures encompass all six models, covering the training, testing, and total datasets. In tandem, Fig. 17a and b graphically illustrate the percentage error in estimating HI and OI by each model across the three datasets.

These analyses collectively demonstrate the superior performance of the SVM model. It consistently outperformed the other models, showing the closest fit to the experimental values, the smallest dispersion, and the smallest deviation. For OI and HI, respectively, SVM achieved R² values of 0.993 and 0.989 on the testing set and 0.983 and 0.986 on the training set, with an R² of 0.987 for the total dataset. These values make it the best model for estimating OI and HI (Tables 2 and 3). SVM also achieved the lowest AAPRE, RMSE, and SD (Tables 2 and 3). These statistical metrics are computed with Eqs. (1) to (5).

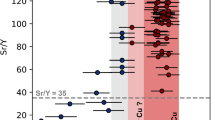

Finally, Fig. 18 provides a schematic depiction of the actual and predicted kerogen types across the total (a), training (b), and testing (c) datasets using the pseudo van Krevelen diagram. This figure further enriches the analysis by comparing the actual and estimated values, adding another layer of comprehension to the models' predictive capabilities and their application in real-world geochemical analysis.

In these formulas, xi,exp, xi,pred, and N, respectively, represent the experimental OI and HI data and model-predicted values of OI and HI, and the quantity of pieces of data.

Kerogen type estimation

In the next stage of the research, kerogen types were predicted using a range of machine learning classifiers. Based on the pseudo van Krevelen diagram, the samples were divided into four kerogen categories: type II, type III, mixed type II & III, and type IV. This categorization is shown graphically in Fig. 19 and numerically in Table 4.

Six machine learning classifiers were employed to classify these kerogen types:
- Light Gradient Boosting (LGBM) classifier;
- Extreme Gradient Boosting (XGBoost) classifier;
- Random Forest (RF) classifier;
- Multilayer Perceptron (MLP) classifier;
- Support Vector Classifier (SVM);
- Gradient Boosting classifier.
Their parameters were tuned through hyperparameter optimization, as detailed in Tables 8, 10, and 13 through 16.

After classification, the classifiers' performance was evaluated on the test set. Five evaluation metrics allowed a detailed appraisal of each classifier's efficacy: precision, recall, accuracy, area under the curve (AUC), and F1 score.

A comparative assessment of performance metrics for all models is demonstrated in Table 5 and Fig. 20, providing an objective evaluation of the efficiency of each machine learning classifier. The average precision scores for all classification models are graphically presented in Fig. 21.

To address the occasional misleading outcomes of the ROC curve, especially in instances of class imbalance, the precision-recall curve was employed alongside the ROC curve. This practice offered a more comprehensive perspective on the performance of each classifier, consistent with the findings of Davis (2006). Figure 22a, b, c, d, e, f illustrate the ROC curves for each kerogen type across all models, emphasizing the superior performance of the Gradient Boosting classifier.
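The AUC summarizes a ROC curve in a single number. Following Fawcett (2006), it equals the probability that the classifier scores a randomly chosen positive sample above a randomly chosen negative one. The minimal sketch below computes a one-vs-rest AUC per kerogen type from predicted class probabilities. The study does not state how per-class AUCs were averaged into one score, so no averaging is shown. The function names and values are hypothetical.

```python
def roc_auc(scores, labels):
    """ROC AUC: probability that a random positive outscores a random negative (ties = 1/2)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def one_vs_rest_auc(prob_rows, y_true, classes):
    """AUC per class, treating each class in turn as positive and all others as negative."""
    return {
        c: roc_auc([row[k] for row in prob_rows], [1 if y == c else 0 for y in y_true])
        for k, c in enumerate(classes)
    }


# Hypothetical predicted probabilities; columns follow `classes`.
classes = ["II", "III"]
probs = [[0.8, 0.2], [0.3, 0.7], [0.6, 0.4], [0.65, 0.35]]
y_true = ["II", "III", "II", "III"]
print(one_vs_rest_auc(probs, y_true, classes))
```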

The classifiers' performance was then consolidated and evaluated using a confusion matrix. The core metrics (precision, accuracy, recall, and F1-score) were calculated from this matrix using Eqs. (6) through (9), where TP = True Positive, TN = True Negative, FP = False Positive, and FN = False Negative. Each classifier's ability to distinguish between classes was further gauged with the Area Under Curve (AUC) metric, following Fawcett (2006).

Notably, the Gradient Boosting classifier achieved the highest scores across all metrics. Its accuracy was 93.54%, and its precision, recall, F1-score, and AUC were 0.94, 0.93, 0.93, and 0.95, respectively. It also produced the fewest misclassifications, as shown by the confusion matrix in Fig. 23. This comprehensive evaluation confirms the Gradient Boosting classifier as a highly effective method for identifying kerogen types.
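The precision, recall, F1-score, and accuracy referred to above follow from the TP, TN, FP, and FN counts. The minimal sketch below assumes the standard definitions:
- precision = TP/(TP + FP);
- recall = TP/(TP + FN);
- F1 is their harmonic mean;
- accuracy is the share of correct predictions.
Counts are taken one-vs-rest for each kerogen type. The study does not state how per-class scores were averaged, so none is assumed. The labels and function names are hypothetical.

```python
def confusion_counts(y_true, y_pred, positive):
    """TP, TN, FP, FN when `positive` is treated as the positive class (one-vs-rest)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    return tp, tn, fp, fn


def class_scores(y_true, y_pred, positive):
    """Precision, recall and F1-score for one class."""
    tp, _, fp, fn = confusion_counts(y_true, y_pred, positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def accuracy(y_true, y_pred):
    """Share of correct predictions; equals (TP + TN) / (TP + TN + FP + FN) for two classes."""
    return sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)


# Hypothetical labels, not the study's test set.
y_true = ["II", "II", "III", "II/III", "IV", "III"]
y_pred = ["II", "III", "III", "II/III", "IV", "III"]
print(accuracy(y_true, y_pred))
for kerogen_type in ["II", "III", "II/III", "IV"]:
    print(kerogen_type, class_scores(y_true, y_pred, kerogen_type))
```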

Discussion

OI and HI estimation

The study's results underscore the effectiveness of the SVM model for predicting the Oxygen Index (OI) and Hydrogen Index (HI) in the source rock. Based on the calculated statistical parameters, SVM consistently outperformed the other five models—GMDH, DT, RBF, MLP, and ANFIS—regarding accuracy and reliability.

The models were compared using a suite of statistical metrics: R², APRE, RMSE, SD, and AAPRE. Together, these metrics provided a holistic evaluation covering goodness of fit, relative deviation, dispersion, and precision. The SVM model had the highest R², indicating the strongest agreement between model predictions and experimental data. Its lower AAPRE, RMSE, and SD values confirm smaller dispersion and deviation, which underscores its superior precision.

The models' performance was further evaluated through a visual examination using cross plots. These graphical analyses reinforced the statistical findings, revealing the SVM model's high accuracy in estimating OI and HI. The SVM model demonstrated a higher concentration of data points near the Y = X line, implying a closer match between experimental and estimated values.

These findings not only point towards the strength of SVM in estimating OI and HI but also draw attention to the versatility and applicability of different models in geosciences. While SVM showed a strong performance, the other models also delivered reasonably accurate results, indicating their potential utility in other tasks or data contexts.

Kerogen type estimation

For kerogen-type estimation, six machine learning algorithms were evaluated, and the Gradient Boosting model performed best. Although SVM excelled at predicting OI and HI, it was less effective at kerogen-type classification, which shows that model selection should be guided by the specific task.

The classifiers were assessed with accuracy, precision, recall, F1-score, and AUC. The Gradient Boosting model achieved the highest value on every one of these metrics, establishing it as the best of the tested classifiers for kerogen typing. Beyond the numerical scores, visual assessment of the precision-recall and ROC curves also favored Gradient Boosting.
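The AUC reported for each multi-class classifier is a single number, so some averaging over kerogen classes is implied. The Python sketch below assumes macro-averaged one-vs-rest AUC. Each binary AUC is computed as the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one (ties count one half), which equals the area under the ROC curve. The function names are illustrative, and the pairwise loop is O(n²), which is negligible at the scale of the 138 samples used here. For cross-checking, scikit-learn's `roc_auc_score` with `multi_class="ovr"` and `average="macro"` computes the same quantity.

```python
from typing import Sequence


def binary_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC via the rank (Mann-Whitney) interpretation; labels are 0/1."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0      # positive ranked above negative
            elif p == q:
                wins += 0.5      # ties count as half a win
    return wins / (len(pos) * len(neg))


def macro_ovr_auc(y_true: Sequence[int],
                  proba: Sequence[Sequence[float]]) -> float:
    """Unweighted mean of one-vs-rest AUCs for classes 0..K-1.

    proba[i][k] is the predicted probability that sample i belongs to class k.
    """
    n_classes = len(proba[0])
    aucs = []
    for k in range(n_classes):
        labels = [1 if y == k else 0 for y in y_true]
        scores = [row[k] for row in proba]
        aucs.append(binary_auc(labels, scores))
    return sum(aucs) / n_classes
```

Because AUC depends only on how samples are ranked, it complements accuracy, which depends on where the decision threshold falls.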

Although Gradient Boosting performed best, Random Forest, LightGBM, and XGBoost also performed strongly, which suggests that, with appropriate hyperparameter tuning, these models are viable alternatives for kerogen-type classification.

These results show that the best-performing model depends on the specific task and on the characteristics of the data, so models should be selected and tuned carefully to suit both the data and the objectives of the analysis. Future studies could test these models on larger or different datasets, or investigate other promising machine learning models and techniques.

Conclusions

This research shows that machine learning techniques can be used in the geosciences to predict geochemical indicators of source rock quality, specifically the hydrogen index (HI) and oxygen index (OI), together with kerogen type. By building and validating machine learning models across several algorithms, this study has shown that kerogen type, HI, and OI can be predicted accurately from well-log data, which is valuable because geochemical data are often scarce. The main conclusions are as follows:

1. The Support Vector Machine (SVM) model predicted the Oxygen Index and Hydrogen Index of the source rocks most accurately of the regression models tested, with R² values of 0.993 for OI and 0.989 for HI. This result highlights how machine learning can improve prediction accuracy in the geosciences.

2. The models proved robust against overfitting, a common concern in machine learning applications. This reflects the care taken in designing and optimizing them and supports their value for real-world applications.

3. Among the classification models, Gradient Boosting (accuracy of 93.54%) and Random Forest were particularly effective in kerogen-type classification, further demonstrating the value of machine learning for classification tasks in the geosciences.

4. The proposed approach offers a path toward greater economic efficiency in the geosciences. By making the best use of readily available well-log data, it can considerably reduce the need for costly geochemical laboratory analyses.

5. The proposed models remain effective where geochemical data are sparse or entirely absent, provided that well logs are available, which makes them a practical answer to common data-related challenges in the field.

6. The study applies a pseudo-van Krevelen diagram (HI versus OI) approach to kerogen-type classification. This approach supports a more precise categorization of kerogen types and thereby improves the effectiveness of these analyses.
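The pseudo-van Krevelen diagram plots HI against OI, both derived from Rock-Eval pyrolysis as HI = 100 × S2/TOC (mg HC/g TOC) and OI = 100 × S3/TOC (mg CO2/g TOC). The Python sketch below computes both indices and then assigns a provisional kerogen type from HI alone, using commonly quoted screening ranges (Type I above 600, II 300–600, II/III 200–300, III 50–200, IV below 50 mg HC/g TOC). These thresholds and the function names are assumptions for illustration: the boundaries this study drew on the HI–OI plane are not reproduced here, and a full pseudo-van Krevelen assignment would also take OI into account.

```python
import math
from typing import Tuple


def rock_eval_indices(s2: float, s3: float, toc: float) -> Tuple[float, float]:
    """Return (HI, OI).

    s2: mg HC/g rock, s3: mg CO2/g rock, toc: wt%.
    HI is in mg HC/g TOC and OI in mg CO2/g TOC.
    """
    if toc <= 0:
        raise ValueError("TOC must be positive")
    return 100.0 * s2 / toc, 100.0 * s3 / toc


# Assumed HI screening ranges: (lower bound in mg HC/g TOC, kerogen type).
# Illustrative only; not the boundaries used in the study.
HI_CLASSES = [(600.0, "I"), (300.0, "II"), (200.0, "II/III"),
              (50.0, "III"), (float("-inf"), "IV")]


def kerogen_type_from_hi(hi: float) -> str:
    """Provisional kerogen type from HI alone (ignores OI)."""
    if math.isnan(hi):
        raise ValueError("HI is NaN")
    for lower, label in HI_CLASSES:
        if hi >= lower:
            return label
    return "IV"  # not reached: the last lower bound is -inf


if __name__ == "__main__":
    hi, oi = rock_eval_indices(s2=6.0, s3=0.5, toc=2.0)  # made-up sample
    print(hi, oi, kerogen_type_from_hi(hi))
```

In a log-driven workflow of the kind described here, HI and OI predicted from well logs would replace the laboratory values passed to the classification step.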

In summary, this research adds a new perspective to the existing literature by introducing a machine-learning-centric approach for predicting HI, OI, and kerogen type from well-log data. As a step towards improved efficiency and cost-effectiveness in the geosciences, it opens promising opportunities for further research and application.

Abbreviations

- RO: Vitrinite reflectance
- R²: Coefficient of determination
- Vp: P-wave velocity
- I: Kerogen type I
- II: Kerogen type II
- III: Kerogen type III
- IV: Kerogen type IV
- K: Potassium
- S1: Amount of free hydrocarbons (gas and oil) in the sample (mg HC/g rock)
- S2: Amount of hydrocarbons generated by thermal cracking of nonvolatile organic matter (mg HC/g rock)
- S3: Amount of CO2 (mg CO2/g rock) produced during pyrolysis of kerogen
- TH: Thorium
- U: Uranium
- AAPRE: Average absolute percentage relative error
- AID: Automatic interaction detection
- ANFIS: Adaptive neuro-fuzzy inference system
- APRE: Average percentage relative error
- AUC: Area under the (ROC) curve
- BP-ANN: Back-propagation artificial neural network
- BRNN: Bidirectional recurrent neural network
- CART: Classification and regression trees
- CNL: Compensated neutron log
- DEN: Density
- DT: Sonic log
- DT: Decision tree
- F1-Score: Harmonic mean of precision and recall
- FIS: Fuzzy inference system
- FN: False negative
- FP: False positive
- GB: Gradient boosting
- GMDH: Group method of data handling
- GR: Gamma ray
- HC: Hydrocarbon
- HI: Hydrogen index
- KNN: K-nearest neighbour
- LGBM: Light gradient boosting machine
- LLD: Laterolog deep
- LLS: Laterolog shallow
- ML: Machine learning
- MLP: Multilayer perceptron
- NMR: Nuclear magnetic resonance
- NPHI: Neutron porosity
- OI: Oxygen index
- OM: Organic matter
- PI: Production index
- RBF: Radial basis function
- RF: Random forest
- RHOB: Bulk density
- RLLD: Resistivity of laterolog deep
- RMSE: Root mean square error
- RMSFL: Resistivity of microspherically focused log
- ROC: Receiver operating characteristic
- RT: True resistivity
- SD: Standard deviation
- SMO: Sequential minimal optimization
- SP: Spontaneous potential
- SVM: Support vector machine
- SVR: Support vector regression
- Tmax: Temperature at which the rate of hydrocarbon generation is at its maximum during pyrolysis (heating rate 25 °C/min)
- TN: True negative
- TOC: Total organic carbon
- TP: True positive
- XGBoost: Extreme gradient boosting

References

Adegoke AK, Abdullah WH, Yandoka BMS, Abubakar MB (2015) Kerogen characterisation and petroleum potential of the Late Cretaceous sediments, Chad Basin, northeastern Nigeria

Adewumi AA, Owolabi TO, Alade IO, Olatunji SO (2016) Estimation of physical, mechanical and hydrological properties of permeable concrete using computational intelligence approach. Appl Soft Comput 42:342–350. https://doi.org/10.1016/j.asoc.2016.02.009

Afshar M, Gholami A, Asoodeh M (2014) Genetic optimization of neural network and fuzzy logic for oil bubble point pressure modeling. Korean J Chem Eng 31(3):496–502. https://doi.org/10.1007/s11814-013-0248-8

Ahangari D, Daneshfar R, Zakeri M, Ashoori S, Soulgani BS (2022) On the prediction of geochemical parameters (TOC, S1 and S2) by considering well log parameters using ANFIS and LSSVM strategies. Petroleum 8(2):174–184. https://doi.org/10.1016/j.petlm.2021.04.007

Akinlua A, Ajayi TR, Jarvie DM, Adeleke BB (2005) A re-appraisal of the application of Rock-Eval pyrolysis to source rock studies in the Niger Delta. J Pet Geol 28(1):39–48. https://doi.org/10.1111/j.1747-5457.2005.tb00069.x

Al-Fatlawi OF (2018) Numerical simulation for the reserve estimation and production optimization from tight gas reservoirs. Curtin University, Australia

Alizadeh B, Maroufi K, Heidarifard MH (2018) Estimating source rock parameters using wireline data: an example from Dezful Embayment, South West of Iran. J Pet Sci Eng 167:857–868. https://doi.org/10.1016/j.petrol.2017.12.021

Baskin DK (1997) Atomic H/C ratio of kerogen as an estimate of thermal maturity and organic matter conversion. AAPG Bull 81(9):1437–1450. https://doi.org/10.1306/3B05BB14-172A-11D7-8645000102C1865D

Behar F, Beaumont V, Penteado HDB (2001) Rock-Eval 6 technology: performances and developments. Oil Gas Sci Technol 56(2):111–134. https://doi.org/10.2516/ogst:2001013

Bolandi V, Kadkhodaie A, Farzi R (2017) Analyzing organic richness of source rocks from well log data by using SVM and ANN classifiers: a case study from the Kazhdumi formation, the Persian Gulf basin, offshore Iran. J Pet Sci Eng 151:224–234. https://doi.org/10.1016/j.petrol.2017.01.003

Bradshaw BE (2003) A revised structural framework for frontier basins on the southern and southwestern Australian continental margin. ASN Record 2003/03, 43 pp. http://pid.geoscience.gov.au/dataset/ga/42056

Breiman L (1996) Bagging predictors. Mach Learn 24(2):123–140. https://doi.org/10.1007/BF00058655

Breiman L (2001) Random forests. Mach Learn 45(1):5–32. https://doi.org/10.1023/A:1010933404324

Breyer J (2012) Shale reservoirs: giant resources for the 21st century. AAPG Memoir 97. AAPG

Broomhead DS, Lowe D (1988) Radial basis functions, multi-variable functional interpolation and adaptive networks. RSRE, UK

Carpentier B, Huc AY, Bessereau G (1991) Wireline logging and source rocks-estimation of organic carbon content by the CARBOLOG method. Log Anal 32(3):279–297

Carrie J, Sanei H, Stern G (2012) Standardisation of Rock-Eval pyrolysis for the analysis of recent sediments and soils. Org Geochem 46:38–53. https://doi.org/10.1016/j.orggeochem.2012.01.011

Chen Z, Liu X, Jiang C (2017) Quick evaluation of source rock kerogen kinetics using hydrocarbon pyrograms from regular Rock-Eval analysis. Energy Fuels 31(2):1832–1841. https://doi.org/10.1021/acs.energyfuels.6b01569

Chen T, Guestrin C (2016) XGBoost: a scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp 785–794. https://doi.org/10.1145/2939672.2939785

Cortes C, Vapnik V (1995) Support-vector networks. Mach Learn 20(3):273–297. https://doi.org/10.1007/BF00994018

Crostella A (1995) An evaluation of the hydrocarbon potential of the onshore northern Perth Basin, Western Australia

Crostella A, Backhouse J (2000) Geology and petroleum exploration of the central and southern Perth Basin, Western Australia. Report 57, Geological Survey of Western Australia, Perth

Davis J, Goadrich M (2006) The relationship between precision-recall and ROC curves. In: Proceedings of the 23rd International Conference on Machine Learning, pp 233–240. https://doi.org/10.1145/1143844.1143874

Deaf AS, Omran AA, El-Arab ESZ, Maky ABF (2022) Integrated organic geochemical/petrographic and well logging analyses to evaluate the hydrocarbon source rock potential of the Middle Jurassic upper Khatatba Formation in Matruh Basin, northwestern Egypt. Mar Pet Geol 140:105622. https://doi.org/10.1016/j.marpetgeo.2022.105622

Delle Piane C, Esteban L, Timms NE, Ramesh Israni S (2013) Physical properties of Mesozoic sedimentary rocks from the Perth Basin Western Australia. Aust J Earth Sci 60(6–7):735–745. https://doi.org/10.1080/08120099.2013.831948

Dembicki H (2016) Practical petroleum geochemistry for exploration and production. Elsevier

Durand B (1980) Kerogen: Insoluble organic matter from sedimentary rocks, 1st edn. Editions Technip, Paris

Ebtehaj I, Bonakdari H, Zaji AH, Azimi H, Khoshbin F (2015) GMDH-type neural network approach for modeling the discharge coefficient of rectangular sharp-crested side weirs. Eng Sci Technol Int J 18(4):746–757. https://doi.org/10.1016/j.jestch.2015.04.012

Engel MH, Macko SA (2013) Organic geochemistry: principles and applications. Springer, New York, NY, pp 861

Espitalié J, Laporte JL, Madec M, Marquis F, Leplat P, Paulet J, Boutefeu A (1977) Rapid method for source rock characterization, and for determination of their petroleum potential and degree of evolution. Oil Gas Sci Technol Revue 32:23–42

Fawcett T (2006) An introduction to ROC analysis. Pattern Recogn Lett 27(8):861–874. https://doi.org/10.1016/j.patrec.2005.10.010

Fertl WH, Chilingar GV (1988) Total organic carbon content determined from well logs. SPE Form Eval 3(02):407–419. https://doi.org/10.2118/15612-PA

Fertl WH, Rieke HH III (1980) Gamma ray spectral evaluation techniques identify fractured shale reservoirs and source-rock characteristics. J Pet Technol 32(11):2053–2062. https://doi.org/10.2118/8454-PA

Gradstein FM, Ogg JG, Schmitz M, Ogg G (2012) The geologic time scale. Elsevier

Grosjean E, Hall L, Boreham CJ, Buckler T (2017) Source rock geochemistry of the offshore northern Perth Basin: Record 2017/18. ASN, Canberra. https://doi.org/10.11636/Record.2017.018

Guelman L (2012) Gradient boosting trees for auto insurance loss cost modeling and prediction. Expert Syst Appl 39(3):3659–3667. https://doi.org/10.1016/j.eswa.2011.09.058

Handhal AM, Al-Abadi AM, Chafeet HE, Ismail MJ (2020) Prediction of total organic carbon at Rumaila oil field, Southern Iraq using conventional well logs and machine learning algorithms. Mar Pet Geol 116:104347. https://doi.org/10.1016/j.marpetgeo.2020.104347

Harwood RJ (1977) Oil and gas generation by laboratory pyrolysis of kerogen. AAPG Bull 61(12):2082–2102. https://doi.org/10.1306/C1EA47CA-16C9-11D7-8645000102C1865D

Hastie T, Tibshirani R, Friedman JH (2009) The elements of statistical learning: data mining, inference, and prediction. Springer, New York, USA

Hemmati-Sarapardeh A, Varamesh A, Husein MM, Karan K (2018) On the evaluation of the viscosity of nanofluid systems: Modeling and data assessment. Renew Sustain Energy Rev 81:313–329. https://doi.org/10.1016/j.rser.2017.07.049

Herron S, Letendre L, Dufour M (1988) Source rock evaluation using geochemical information from wireline logs and cores. AAPG Bull, United States

Huang Z, Williamson MA (1996) Artificial neural network modelling as an aid to source rock characterization. Mar Pet Geol 13(2):277–290. https://doi.org/10.1016/0264-8172(95)00062-3

Iasky RP, Mory AJ (1993) Structural and tectonic framework of the onshore Northern Perth Basin. Explor Geophys 24(4):585–592. https://doi.org/10.1071/EG993585

Jang JSR, Sun CT, Mizutani E (1997) Neuro-fuzzy and soft computing-a computational approach to learning and machine intelligence [Book Review]. IEEE Trans Autom Control 42(10):1482–1484

Johnson LM, Rezaee R, Kadkhodaie A, Smith G, Yu H (2018) Geochemical property modelling of a potential shale reservoir in the Canning Basin (Western Australia), using artificial neural networks and geostatistical tools. Comput Geosci 120:73–81. https://doi.org/10.1016/j.cageo.2018.08.004

Jones A (2011) New exploration opportunities in the offshore northern Perth Basin. The APPEA Journal 51(1):45–78. https://doi.org/10.1071/AJ10003

Jones AT, Kennard JM, Nicholson CJ, Bernardel G, Mantle D, Grosjean E, Boreham CJ, Jorgensen DC, Robertson D (2011) New exploration opportunities in the offshore northern Perth Basin. The APPEA Journal 51:45–78. https://doi.org/10.1071/aj10003

Jones AT, Kelman AP, Kennard JM, Le Poidevin S, Mantle DJ, Mory AJ (2012) Offshore Perth Basin biozonation and stratigraphy 2011. Chart 38

Jorgensen DC, Jones AT, Kennard JM, Mantle D, Robertson D, Nelson G, Lech M, Grosjean E, Boreham CJ (2011) Offshore northern Perth Basin well folio. ASN Rec 9:72

Kadkhodaie-Ilkhchi A, Rahimpour-Bonab H, Rezaee M (2009) A committee machine with intelligent systems for estimation of total organic carbon content from petrophysical data: an example from Kangan and Dalan reservoirs in South Pars Gas Field, Iran. Comput Geosci 35(3):459–474. https://doi.org/10.1016/j.cageo.2007.12.007

Kalantary F, Ardalan H, Nariman-Zadeh N (2009) An investigation on the Su–NSPT correlation using GMDH type neural networks and genetic algorithms. Eng Geol 104(1–2):144–155. https://doi.org/10.1016/j.enggeo.2008.09.006

Kamali MR, Mirshady AA (2004) Total organic carbon content determined from well logs using ΔLogR and Neuro Fuzzy techniques. J Pet Sci Eng 45(3–4):141–148. https://doi.org/10.1016/j.petrol.2004.08.005

Kang D, Wang X, Zheng X, Zhao YP (2021) Predicting the components and types of kerogen in shale by combining machine learning with NMR spectra. Fuel 290:120006. https://doi.org/10.1016/j.fuel.2020.120006

Karimian Torghabeh A, Rezaee R, Moussavi-Harami R, Pimentel N (2014) Unconventional resource evaluation: Kockatea shale, Perth Basin, Western Australia. Int J Oil Gas Coal Technol 8(1):16–30. https://doi.org/10.1504/IJOGCT.2014.064420

Ke G, Meng Q, Finley T, Wang T, Chen W, Ma W, Ye Q, Liu TY (2017) LightGBM: a highly efficient gradient boosting decision tree. Adv Neural Inf Process Syst 30

Khalil Khan H, Ehsan M, Ali A, Amer MA, Aziz H, Khan A, Abioui M (2022) Source rock geochemical assessment and estimation of TOC using well logs and geochemical data of Talhar Shale, Southern Indus Basin, Pakistan. Front Earth Sci. https://doi.org/10.3389/feart.2022.969936

Khoshnoodkia M, Mohseni H, Rahmani O, Mohammadi A (2011) TOC determination of Gadvan Formation in South Pars Gas field, using artificial intelligent systems and geochemical data. J Pet Sci Eng 78(1):119–130. https://doi.org/10.1016/j.petrol.2011.05.010

Lafargue E, Marquis F, Pillot D (1998) Rock-Eval 6 applications in hydrocarbon exploration, production, and soil contamination studies. Rev Inst Fr Pét 53(4):421–437. https://doi.org/10.2516/ogst:1998036

Langford FF, Blanc-Valleron MM (1990) Interpreting Rock-Eval pyrolysis data using graphs of pyrolizable hydrocarbons vs. total organic carbon. AAPG Bull 74(6):799–804. https://doi.org/10.1306/0C9B238F-1710-11D7-8645000102C1865D

Lary DJ, Alavi AH, Gandomi AH, Walker AL (2016) Machine learning in geosciences and remote sensing. Geosci Front 7(1):3–10. https://doi.org/10.1016/j.gsf.2015.07.003

Lawal LO, Mahmoud M, Alade OS, Abdulraheem A (2019) Total organic carbon characterization using neural-network analysis of XRF data. Petrophysics 60(4):480–493. https://doi.org/10.30632/PJV60N4-2019a2

Lee KJ (2020) Characterization of kerogen content and activation energy of decomposition using machine learning technologies in combination with numerical simulations of formation heating. J Pet Sci Eng 188:106860. https://doi.org/10.1016/j.petrol.2019.106860

Macko SA, Engel MH, Parker PL (1993) Early diagenesis of organic matter in sediments: assessment of mechanisms and preservation by the use of isotopic molecular approaches. Org Geochem Princ Appl. https://doi.org/10.1007/978-1-4615-2890-6_9

Mahmoud AAA, Elkatatny S, Mahmoud M, Abouelresh M, Abdulraheem A, Ali A (2017) Determination of the total organic carbon (TOC) based on conventional well logs using artificial neural network. Int J Coal Geol 179:72–80. https://doi.org/10.1016/j.coal.2017.05.012

Maroufi K, Zahmatkesh I (2023) Effect of lithological variations on the performance of artificial intelligence techniques for estimating total organic carbon through well logs. J Pet Sci Eng 220:111213. https://doi.org/10.1016/j.petrol.2022.111213

Marshall JF, Lee CS, Ramsay DC, Moore AM (1989) Tectonic controls on sedimentation and maturation in the offshore north Perth Basin. The APPEA Journal 29(1):450–465

McCarthy K, Rojas K, Niemann M, Palmowski D, Peters K, Stankiewicz A (2011) Basic petroleum geochemistry for source rock evaluation. Oilfield Rev 23(2):32–43

Meyer BL, Nederlof MH (1984) Identification of source rocks on wireline logs by density/resistivity and sonic transit time/resistivity crossplots. AAPG Bull 68(2):121–129. https://doi.org/10.1306/AD4609E0-16F7-11D7-8645000102C1865D

Mohaghegh S (2000) Virtual-intelligence applications in petroleum engineering: Part 1—Artificial neural networks. J Pet Technol 52(09):64–73. https://doi.org/10.2118/58046-JPT

Mohaghegh SD (2017) Data-driven reservoir modeling. SPE

Mohagheghian E, Zafarian-Rigaki H, Motamedi-Ghahfarrokhi Y, Hemmati-Sarapardeh A (2015) Using an artificial neural network to predict carbon dioxide compressibility factor at high pressure and temperature. Korean J Chem Eng 32(10):2087–2096. https://doi.org/10.1007/s11814-015-0025-y

Morgan JN, Sonquist JA (1963) Problems in the analysis of survey data, and a proposal. J Am Stat Assoc 58(302):415–434

Mory AJ, Iasky RP (1996) Stratigraphy and structure of the onshore northern Perth Basin, Western Australia. Geological Survey of Western Australia, Australia

Nariman-Zadeh N, Atashkari K, Jamali A, Pilechi A, Yao X (2005) Inverse modelling of multi-objective thermodynamically optimized turbojet engines using GMDH-type neural networks and evolutionary algorithms. Eng Optim 37(5):437–462. https://doi.org/10.1080/03052150500035591

Nezhad YA, Moradzadeh A, Kamali MR (2018) A new approach to evaluate Organic Geochemistry Parameters by geostatistical methods: A case study from western Australia. J Pet Sci Eng 169:813–824. https://doi.org/10.1016/j.petrol.2018.05.027

Nyakilla EE, Silingi SN, Shen C, Jun G, Mulashani AK, Chibura PE (2022) Evaluation of source rock potentiality and prediction of total organic carbon using well log data and integrated methods of multivariate analysis, machine learning, and geochemical analysis. Nat Resour Res 31(1):619–641. https://doi.org/10.1007/s11053-021-09988-1

Orr WL (1986) Kerogen/asphaltene/sulfur relationships in sulfur-rich Monterey oils. Org Geochem 10(1–3):499–516. https://doi.org/10.1016/0146-6380(86)90049-5

Owad-Jones D, Ellis G (2000) Western Australia Atlas of Petroleum Fields: Onshore Perth Basin. Department of Minerals and Energy WA Petroleum Division, Australia

Palu TJ, Hall LS, Edwards D, Grosjean E, Rollet N, Boreham C, Buckler T, Higgins K, Nguyen D, Khider K (2017) Source rocks and hydrocarbon fluids of the Browse Basin. In: AAPG/SEG 2017 International Conference and Exhibition, London, England, pp 15–18

Passey QR, Creaney S, Kulla JB, Moretti FJ, Stroud JD (1990) A practical model for organic richness from porosity and resistivity logs. AAPG Bull 74(12):1777–1794. https://doi.org/10.1306/0C9B25C9-1710-11D7-8645000102C1865D

Peters KE (1986) Guidelines for evaluating petroleum source rock using programmed pyrolysis. AAPG Bull 70(3):318–329. https://doi.org/10.1306/94885688-1704-11D7-8645000102C1865D

Piryonesi SM, El-Diraby TE (2020) Data analytics in asset management: Cost-effective prediction of the pavement condition index. J Infrastruct Syst 26(1):04019036

Playford PE, Cockbain AE, Low GH (1976) Geology of the Perth Basin, Western Australia

Radtke RJ, Lorente M, Adolph B, Berheide M, Fricke S, Grau J, Herron S, Horkowitz J, Jorion B, Madio D, May D (2012) A new capture and inelastic spectroscopy tool takes geochemical logging to the next level. In: SPWLA 53rd Annual Logging Symposium. OnePetro

Revill AT, Volkman JK, O'Leary T, Summons RE, Boreham CJ, Banks M, Denwer K (1994) Hydrocarbon biomarkers, thermal maturity, and depositional setting of tasmanite oil shales from Tasmania, Australia. Geochim Cosmochim Acta 58(18):3803–3822. https://doi.org/10.1016/0016-7037(94)90365-4

Robnik-Šikonja M (2004) Improving random forests. In: European Conference on Machine Learning. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-540-30115-8_34

Rollet N, Nicholson C, Jones A, Grosjean E, Bernardel G, Kennard J (2013) New exploration opportunities in the offshore Houtman and Abrolhos sub-basins, northern Perth Basin WA. APPEA J 53(1):97–114. https://doi.org/10.1071/AJ12008

Rui J, Zhang H, Zhang D, Han F, Guo Q (2019) Total organic carbon content prediction based on support-vector-regression machine with particle swarm optimization. J Pet Sci Eng 180:699–706. https://doi.org/10.1016/j.petrol.2019.06.014

Safaei-Farouji M, Kadkhodaie A (2022) Application of ensemble machine learning methods for kerogen type estimation from petrophysical well logs. J Pet Sci Eng 208:109455. https://doi.org/10.1016/j.petrol.2021.109455

Schmoker JW (1979) Determination of organic content of appalachian devonian shales from formation-density logs: Geologic notes. AAPG Bull 63(9):1504–1509. https://doi.org/10.1306/2F9185D1-16CE-11D7-8645000102C1865D

Schmoker JW (1981) Determination of organic-matter content of Appalachian Devonian shales from gamma-ray logs. AAPG Bull 65(7):1285–1298. https://doi.org/10.1306/03B5949A-16D1-11D7-8645000102C1865D

Schmoker JW, Hester TC (1983) Organic carbon in Bakken formation, United States portion of Williston basin. AAPG Bull 67(12):2165–2174. https://doi.org/10.1306/AD460931-16F7-11D7-8645000102C1865D

Schölkopf B, Smola AJ, Bach F (2002) Learning with kernels: support vector machines, regularization, optimization, and beyond. MIT Press

Serra O (1984) The Acquisition of Logging Data: Part A. Elsevier

Sfidari E, Kadkhodaie-Ilkhchi A, Najjari S (2012) Comparison of intelligent and statistical clustering approaches to predicting total organic carbon using intelligent systems. J Pet Sci Eng 86:190–205. https://doi.org/10.1016/j.petrol.2012.03.024

Shalaby MR, Jumat N, Lai D, Malik O (2019) Integrated TOC prediction and source rock characterization using machine learning, well logs and geochemical analysis: case study from the Jurassic source rocks in Shams Field, NW Desert, Egypt. J Pet Sci Eng 176:369–380. https://doi.org/10.1016/j.petrol.2019.01.055

Tariq Z, Mahmoud M, Abouelresh M, Abdulraheem A (2020) Data-driven approaches to predict thermal maturity indices of organic matter using artificial neural networks. ACS Omega 5(40):26169–26181. https://doi.org/10.1021/acsomega.0c03751

Tissot B, Durand B, Espitalie J, Combaz A (1974) Influence of nature and diagenesis of organic matter in formation of petroleum. AAPG Bull 58(3):499–506. https://doi.org/10.1306/83D91425-16C7-11D7-8645000102C1865D

Van Krevelen DW (1993) Coal: typology-physics-chemistry-constitution

Vandenbroucke M (2003) Kerogen: from types to models of chemical structure. Oil Gas Sci Technol 58(2):243–269. https://doi.org/10.2516/ogst:2003016

Vapnik V (1999) The nature of statistical learning theory. Springer Science & Business Media

Wang H, Wu W, Chen T, Dong X, Wang G (2019) An improved neural network for TOC, S1 and S2 estimation based on conventional well logs. J Pet Sci Eng 176:664–678. https://doi.org/10.1016/j.petrol.2019.01.096

Welte DH, Tissot BP (1984) Petroleum formation and occurrence. Springer-Verlag

Wong WT, Hsu SH (2006) Application of SVM and ANN for image retrieval. Eur J Oper Res 173(3):938–950. https://doi.org/10.1016/j.ejor.2005.08.002

Zhang W, Shan X, Fu B, Zou X, Fu LY (2022) A deep encoder-decoder neural network model for total organic carbon content prediction from well logs. J Asian Earth Sci 240:105437. https://doi.org/10.1016/j.jseaes.2022.105437

Zhao N, Wen X, Yang J, Li S, Wang Z (2015) Modeling and prediction of viscosity of water-based nanofluids by radial basis function neural networks. Powder Technol 281:173–183. https://doi.org/10.1016/j.powtec.2015.04.058

Zhao P, Ostadhassan M, Shen B, Liu W, Abarghani A, Liu K, Luo M, Cai J (2019) Estimating thermal maturity of organic-rich shale from well logs: case studies of two shale plays. Fuel 235:1195–1206. https://doi.org/10.1016/j.fuel.2018.08.037

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Contributions

AA: Methodology, software, data curation, visualization, writing (original draft), writing (review and editing). HS: Data curation, visualization, writing (review and editing). AK: Supervision, conceptualization, methodology, validation, writing (review and editing). FY: Visualization and data curation. ARR: Supervision, conceptualization, validation, writing (review and editing).

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: Optimized parameters for machine learning methods

Appendix A consists of eleven tables (Tables 6–16), each listing the optimized parameters of one regression or classification method used in this research.

Table 6 lists the GMDH parameters used for prediction. The optimized parameters of the SVM method for prediction are given in Table 7, and those of the SVM classifier in Table 8. The architecture of the MLP network for prediction is presented in Table 9, and Table 10 gives the optimized parameters of the MLP classifier. The RBF network properties for prediction are outlined in Table 11.

The ANFIS parameters for prediction are given in Table 12. The optimized parameters of the RF, gradient boosting, XGBoost, and LGBM classifiers are listed in Tables 13, 14, 15, and 16, respectively.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Azadivash, A., Soleymani, H., Kadkhodaie, A. et al. Petrophysical log-driven kerogen typing: unveiling the potential of hybrid machine learning. J Petrol Explor Prod Technol 13, 2387–2415 (2023). https://doi.org/10.1007/s13202-023-01688-1

DOI: https://doi.org/10.1007/s13202-023-01688-1