Abstract

Feature evaluation and selection is an important preprocessing step in classification and regression learning. Because large quantities of irrelevant information are often gathered, selecting the most informative features can help users understand the task and improve model performance. In recent years, margin has been widely accepted and used as a measure of feature quality. A collection of feature selection algorithms has been developed using margin-based loss functions and various search strategies. However, no comparative study has examined the effectiveness of these algorithms. In this work, we compare 14 margin-based feature selection algorithms in terms of reduction capability, classification performance on the reduced data, and robustness, considering four margin-based loss functions and three search strategies. We also compare these techniques with two well-known margin-based feature selection algorithms, ReliefF and Simba. The conclusions drawn provide guidelines for selecting features in practical applications.
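To make the notion of margin-based feature evaluation concrete, the following is a minimal sketch of the hypothesis margin that underlies Relief-style methods and Simba: half the difference between a sample's distance to its nearest miss (closest sample of another class) and its nearest hit (closest sample of the same class), measured under a vector of feature weights. It is an illustration only, not the loss functions or search strategies compared in this work; the function names, the toy data, and the weight vectors are assumptions introduced here.

```python
import math


def weighted_distance(a, b, weights):
    """Euclidean distance with each feature difference scaled by its weight."""
    return math.sqrt(sum((w * (x - y)) ** 2 for x, y, w in zip(a, b, weights)))


def hypothesis_margin(index, samples, labels, weights):
    """Half the gap between the nearest-miss and nearest-hit distances of one sample."""
    x, y = samples[index], labels[index]
    # Distances to samples of the same class, excluding the sample itself.
    hits = [weighted_distance(x, s, weights)
            for i, (s, lab) in enumerate(zip(samples, labels))
            if i != index and lab == y]
    # Distances to samples of any other class.
    misses = [weighted_distance(x, s, weights)
              for s, lab in zip(samples, labels) if lab != y]
    return 0.5 * (min(misses) - min(hits))


def mean_margin(samples, labels, weights):
    """Average hypothesis margin over all samples for a given feature weighting."""
    total = sum(hypothesis_margin(i, samples, labels, weights)
                for i in range(len(samples)))
    return total / len(samples)


# Hypothetical toy data: feature 0 separates the classes, feature 1 is noise.
samples = [(0.0, 0.9), (0.1, 0.1), (1.0, 0.8), (0.9, 0.0)]
labels = [0, 0, 1, 1]

margin_informative = mean_margin(samples, labels, weights=(1.0, 0.0))
margin_noise = mean_margin(samples, labels, weights=(0.0, 1.0))
```

On this toy data, weighting only the informative feature gives a positive mean margin, while weighting only the noisy feature gives a negative one. A margin-based selector would favor the first weighting for that reason.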

References

Dash M, Liu H (1997) Feature selection for classification. Intell Data Anal 1:131–156

Dash M, Choi K, Scheuermann P, Liu H (2002) Feature selection for clustering—a filter solution. In: Proceeding of second international conference on data mining, pp 115–122

Tong DL, Mintram R (2010) Genetic Algorithm-Neural Network (GANN): a study of neural network activation functions and depth of genetic algorithm search applied to feature selection. Int J Mach Learn Cybern 1(1–4):75-87

Boehm O, Hardoon DR, Manevitz LM (2011) Classifying cognitive states of brain activity via one-class neural networks with feature selection by genetic algorithms. Int J Mach Learn Cybern 2(3):125–134

Sharma A, Imoto S, Miyano S, Sharma V (2012) Null space based feature selection method for gene expression data. Int J Mach Learn Cybern 3(4):269–276

Pal M (2009) Margin-based feature selection for hyperspectral data. Int J Appl Earth Observ Geoinf 11:212–220

Liu CL, Jaeger S, Masaki N (2004) Offline recognition of Chinese characters: the state of art. IEEE Trans Pattern Anal Mach Intell 26:198–213

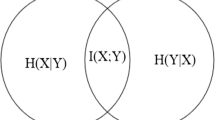

Verron S, Tiplica T, Kobi A (2008) Fault detection and identification with a new feature selection based on mutual information. J Process Control 5:479–490

Oh IS, Lee JS, Moon BR (2004) Hybrid genetic algorithms for feature selection. IEEE Trans Pattern Anal Mach Intell 11:1424–1437

Hall MA (1999) Correlation-based feature subset selection for machine learning, Hamilton, pp 7–45

Dash M, Liu H (2003) Consistency-based search in feature selection. Artif Intell 11:155–176

Wang X, Dong C (2009) Improving generalization of fuzzy if-then rules by maximizing fuzzy entropy. IEEE Trans Fuzzy Syst 17(3):556–567

Peng H, Long F, Ding C (2005) Feature selection based on mutual information: criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans Pattern Anal Mach Intell 27:1226–1238

Huang D, Chow TWS (2005) Effective feature selection scheme using mutual information. Neurocomputing 63:325–343

Yu H, Yang J (2001) A direct LDA algorithm for high-dimensional data-with application to face recognition. Pattern Recognit 34:2067–2070

Witold M (1981) On an extended Fisher criterion for feature selection. IEEE Trans Pattern Anal Mach Intell 5:611–614

Hu Q, Yu D, Pedrycz W, Chen D (2011) Kernelized fuzzy rough sets and their applications. IEEE Trans Knowl Data Eng 11:1649–1667

He Q, Xie Z, Hu Q, Wu C (2011) Boundary instance selection based on neighborhood model. Neurocomputing 10:1585–1594

Wang X, Dong L, Yan J (2012) Maximum ambiguity based sample selection in fuzzy decision tree induction. IEEE Trans Knowl Data Eng 24(8):1491–1505

Wang X, Zhai J, Lu S (2008) Induction of multiple fuzzy decision trees based on rough set technique. Inf Sci 178(16):3188–3202

Rakotomamonjy A (2003) Variable selection using SVM-based criteria. J Mach Learn Res 3:1357–1370

Gilad-Bachrach R, Navot A, Tishby N (2004) Margin based feature selection–theory and algorithms. In: Proceedings of the 21st international conference on machine learning, pp 40–46

Sun Y. (2007) Iterative RELIEF for feature weighting: algorithms, theories, and applications. IEEE Trans Pattern Anal Mach Intell 6:1–17

Vapnik VN (1998) Statistical learning theory, New York

Bartlett PL, Shawe-Taylor J (1999) Generalization performance of support vector machines and other pattern classifiers. In: Advances in Kernel methods: support vector learning. MIT Press, Cambridge, pp 43–54

Li Y, Lu B (2009) Feature selection based on loss-margin of nearest neighbor classification. Pattern Recognit 42:1914–1921

Chen B, Liu H, Chai J, Bao Z (2009) Large margin feature weighting method via linear programming. IEEE Trans Knowl Data Eng 10:1475–1486

Hu Q, Zhu P, Yang Y, Yu D (2011) Large-margin nearest neighbor classifiers via sample weight learning. Neurocomputing 7: 656–660

Garg A, Roth D (2003) Margin distribution and learning algorithms. In: Proceedings of the twentieth international conference on machine learning, pp 210–217

Nguyen X, Wainwright MJ, Jordan MI (2009) On surrogate loss functions and f-divergences. Ann Stat 2:876–904

Rudin C, Schapire RE, Daubechies I (2007) Analysis of boosting algorithms using the smooth margin function. Ann Stat 6:2723–2768

Freund Y (2001) An adaptive version of the boost by majority algorithm. Int Conf Mach Learn 43:293–318

Friedman JH, Hastie T, Tibshirani R (2000) Additive logistic regression: a statistical view of boosting. Ann Stat 28:337–407

Burges CJC (1998) A tutorial on support vector machines for pattern recognition. Knowl Discov Data Min 2:121–167

Freund Y, Schapire RE (1996) Experiments with a new boosting algorithm. Thirteenth international conference in machine learning, pp 1–15

Warmuth MK, Glocer K, Ratsch G (2007) Boosting algorithms for maximizing the soft margin. Advances in Neural Information Processing Systems. MIT Press, pp 340–346

Vezhnevets A, Barinova O (2007) Avoiding boosting overfitting by removing confusing samples. In: Proceedings of the 18th European conference on machine learning, pp 430–441

Friedman JH, Hastie T, Tibshirani R (2000) Additive logistic regression: a statistical view of boosting (with discussion). Ann Stat 28:337–407

Romero E, Marquez L, Carreras X (2004) Margin maximization with feed-forward neural networks: a comparative study with SVM and AdaBoost. Neurocomputing 57: 313–344

Kanamori T, Takenouchi P, Eguchi P, Murata N (2007) Robust loss functions for boosting. NeuralComputation 8:2183–2244

Allen GI (2012) Automatic feature extraction via weighted Kernels and regularization. J Comput Graph Stat (to appear)

Parka C, Koob JY, Kimc PT, Leeb JW (2008) Stepwise feature selection using generalized logistic loss. Comput Stat Data Anal 52(7):3709–3718

Weinberger KQ, Blitzer J, Saul LK (2009) Distance metric learning for large margin nearest neighbor classification. J Mach Learn Res 10:207–244

Ng Andrew Y (2004) Feature selection L1 vs. L2 regularization, and rotational invariance. In: Proceedings of the 21 st international conference on machine learning

Zhang T (2009) On the consistency of feature selection using Greedy least squares regression. J Mach Learn Res 10:555–568

Farahat AK, Ghodsi A, Kamel MS (2011) An efficient Greedy method for unsupervised feature selection. IEEE 11th international conference on data mining

Weston J, Mukherjee S, Chapelle O, Pontil M, Poggio T, Vapnik V (2000) Feature selection for SVMs, ICML

Liu X, Yu T, Gen. Electr, Global Res. Niskayuna (2007) Gradient feature selection for online boosting. IEEE 11th international conference on computer vision, pp 1–8

Domingos P, Pazzani M (1997) On the optimality of the simple Bayesian classifier under zero-one loss. Mach Learn 29:103–130

Zhang T, Oles FJ (2001) Text categorization based on regularized linear classification methods. Inf Retriev 4:5–31

Burges CJC (1998) A tutorial on support vector machines for pattern recognition. Data Min Knowl Discov 2:121–167

Lee Y-J, Mangasarian OL (2001) SSVM: a smooth support vector machine for classification. Comput Optim Appl 1:320–344

Crammer K, Gilad-Bachrach R, Navot A, Tishby N (2002) Margin analysis of the LVQ algorithm. In: Proceedings of the 17’th conference on neural information processing systems, pp 462–469

Wright J, Yang AY, Ganesh A, Shankar Sastry S, Ma Y (2009) Robust face recognition via sparse representation. IEEE Trans Pattern Anal Mach Intell 2:210–227

Zhang L, Zhou WD (2011) Sparse ensembles using weighted combination methods based on linear programming. Pattern Recognit 1:97–106

Tan M, Wang L, Tsang IW (2010) Learning sparse SVM for feature selection on very high dimensional datasets. In: Proceedings of the 27th international conference on machine learning, pp 301–308

Perkins S, Lacker K, Theiler J (2003) Grafting: fast incremental feature se- lection by gradient descent in function space. J Mach Learn Res 3:1333–1356

Carpenter B (2008) Lazy sparse stochastic gradient descent for regularized multinomial logistic regression, Technical report 1–12

Tsuruoka Y, Tsujii J, Ananiadou S (2009) Stochastic gradient descent training for L1-regularized log-linear models with cumulative penalty. In: Proceedings of the 47th annual meeting of the ACL and the 4th IJCNLP of the AFNLP, pp 477–485

Merz CJ, Merphy P (1996) UCI repository of machine learning databases [OB/OL]. http://www.ics.uci.edu/mlearn/MLRRepository.html

Perou CM, Solie T, Eisen MB et al (2000) Molecular portraits of human breast tumours. Nature 406:747–752

Alizadeh A et al (2000) Distinct types of diffuse large B-cell lymphoma identified by gene expression profiling. Nature 405:503–511

Beer DG, Kardia SLR, Huang CC et al (2008) Gene-expression profiles predict survival of patients with lung adenocarcinoma. Nat Med 8:816–824

Khan J, Weil JS, Ringner M, Saall LH, Ladanyi M et al (2001) Classification and diagnostic prediction of cancers using gene expression profiling and artificial neural networks. Nat Med 7:673–679

Kononenko I (1994) Estimating attributes: analysis and extensions of RELIEF. Eur Conf Mach Learn, 171–182

Acknowledgments

This work is supported by the National Natural Science Foundation of China under Grants 61222210 and 61175027.

Cite this article

Wei, P., Ma, P., Hu, Q. et al. Comparative analysis on margin based feature selection algorithms. Int. J. Mach. Learn. & Cyber. 5, 339–367 (2014). https://doi.org/10.1007/s13042-013-0164-6

DOI: https://doi.org/10.1007/s13042-013-0164-6