Abstract

Purpose

Echocardiography is a difficult tool to master. Competency requires the supervised interpretation of hundreds of exams. Perceptual learning modules (PLMs) are novel learning tools that aim to speed up this learning process by enabling learners to go online and interpret numerous clinical images, followed systematically by expert feedback. We developed and tested a PLM aimed at improving novices’ ability to quickly visually estimate left ventricular ejection fraction (LVEF) on transesophageal echocardiography images, a critical skill in acute care. We hypothesized that using the PLM would improve the accuracy and the speed of learners’ estimations.

Methods

Learners without echocardiography experience were randomly assigned to a group that used the 96-case PLM (n = 26) or a control group (n = 26) that did not. Both groups took a pre-test and an immediate post-test that measured the accuracy of their visual estimations during a first session. At six months, participants also completed a delayed post-test.

Results

In the immediate post-test, the PLM group showed significantly better accuracy than the control group (median absolute estimation error 6.1 vs 9.0; difference 95% CI, 1.0 to 4.6; P < 0.001). Nevertheless, at six months, estimation errors were similar in both groups (median absolute estimation error 10.0 vs 10.0; difference 95% CI, -1.3 to 2.1; P = 0.27).

Conclusions

Participation in a short online PLM significantly improved novices’ short-term ability to accurately estimate LVEF visually, compared with controls. The effect was not sustained at six months.

Trial registration

www.clinicaltrials.gov (NCT03245567); registered 7 August 2017.

Echocardiography is playing a growing role in perioperative and acute care settings. Nevertheless, it is difficult to master. Several hundred exams must be performed and interpreted under expert supervision during training.1 This can be challenging and can consume significant time and resources.2

Visually estimating left ventricular ejection fraction (LVEF) is one aspect of echocardiography expertise that is traditionally acquired slowly and with difficulty.1 Nevertheless, because it is much faster than recommended methods like the Simpson biplane,1,3 and because these quantitative methods are not always available on equipment used at the bedside, visual estimation often plays a crucial role in dynamic acute care situations. Moreover, visual assessment of LVEF is frequently used by experts to corroborate quantitative measures.

Simulators may improve the accessibility and efficiency of training. Nevertheless, with echocardiography, simulators have mainly been used for probe manipulation and image acquisition.4 Their ability to bolster image interpretation is more limited, possibly because this requires substantial exposure to the many variants of both normal and pathological exams that current simulators do not provide (typically, simulators will only offer a limited number of exemplars of each category). Large banks of interpreted exams can also be useful. However, their access is often restricted to selected learners, and they can be inefficient for focused training on a specific, perhaps more challenging aspect of image interpretation, like visual estimation of LVEF.

Perceptual learning modules (PLMs) represent an accessible and efficient way to improve the capacity of learners to interpret echocardiographic images. First tested in aviation,5 PLMs expose trainees to a large number of images or clips over a short period of time, generally via a digital platform. Each of these images or clips is accompanied by a question. The learner’s answer is immediately followed by brief expert feedback. This repeating cycle promotes efficient learning through complex mechanisms, both conscious and unconscious.6 As expertise develops, the extraction and organization of the perceptual information becomes both more accurate and more efficient.7 Compared with traditional learning at the bedside or with image banks of interpreted exams, the PLM strategy is faster-paced and based on seeing a high volume of exams to hone a narrower set of skills.

Perceptual learning modules represent a complementary training strategy and are already used in other areas of medicine such as surgery,8 radiology,9 histology,7 and dermatology.10,11 A pilot study by Romito et al.12 found that echocardiography training with a PLM improved the ability of junior anesthesiology residents to quickly establish a correct diagnosis from transesophageal echocardiography (TEE) clips.

We developed an online PLM to improve visual estimation of LVEF in learners with no echocardiography experience. We hypothesized that using the PLM would improve the accuracy and the speed of learners’ estimations when tested after learning (Kirkpatrick level 2).13

Methods

The study was approved by the Ethics Review Board of the Centre Hospitalier de l’Université de Montréal (CHUM, #17.119) and registered prior to participant enrollment at clinicaltrials.gov (NCT03245567). Reporting follows the CONSORT guidelines14 and their extension for healthcare simulation research.15 A team of professional programmers (Edgebee Studios, Montreal, QC, Canada) created the online platform for this study, which ran from July 2018 to May 2019.

Image bank development: exams selection and validation

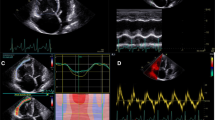

Transesophageal echocardiography exams (diagnostic studies performed by cardiologists or intraoperative exams performed by anesthesiologists) were retrieved from hospital archives. Exams needed to contain one good quality video loop from each of the three following midesophageal views: four-chamber, two-chamber, and long-axis.

The three views from the selected exams were completely anonymized and uploaded to the platform. Each exam was independently reviewed online by two expert cardiologists having completed level 3 training in echocardiography (highest level)16 and practicing in a high-volume echocardiography laboratory. Each expert gave a visual estimation of the LVEF for each case as an integer between 1% and 100%. The experts rejected exams they deemed of insufficient quality to reliably estimate LVEF. Exams for which expert estimations differed by less than 10% were retained for the study. The average of the two experts’ assessments, rounded to the closest integer, was used as the consensus LVEF value.

The two experts reviewed 277 exams: 44 (16%) were rejected by at least one expert on quality grounds and another five because the experts’ visual estimations of LVEF differed by more than ten percent. There was a strong correlation between the two experts’ visual estimation (r = 0.97, see Electronic Supplementary Material [ESM] eFig. 1). From this bank of 228 exams, 96 were used in the PLM and 40 were retained to create two versions of a test (flowchart of the process of exam validation and attribution provided in ESM eFig. 2). No exam was used twice. Many exams needed to be validated to ensure an adequate representation of the entire range of LVEFs seen in practice (92 mainly normal exams remained unused).
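The retention rule and the bank accounting described above can be sketched as follows. This is a minimal illustration, not the platform's code: the function name `consensus_lvef`, the treatment of a gap of exactly 10 points as a rejection, and the round-half-up convention are assumptions, since the text only states that estimates differing by less than 10% were retained and that the average was rounded to the closest integer.

```python
import math

def consensus_lvef(expert_a, expert_b, max_gap=10):
    """Return the consensus LVEF (integer %) or None if the exam is rejected.

    Assumption: a gap of exactly `max_gap` points counts as a rejection,
    because exams were retained when the estimates differed by less than 10.
    """
    if abs(expert_a - expert_b) >= max_gap:
        return None
    # Assumption: round half up ("rounded to the closest integer" in the text).
    return math.floor((expert_a + expert_b) / 2 + 0.5)

# Bank accounting, using the counts reported in the text.
reviewed = 277
rejected_quality = 44
rejected_disagreement = 5
validated = reviewed - rejected_quality - rejected_disagreement  # 228
used_in_plm = 96
used_in_tests = 40
unused = validated - used_in_plm - used_in_tests  # 92
```

With these counts, the validated bank holds 228 exams and 92 remain unused, which matches the figures reported above.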

Perceptual learning module development

Each exam in the PLM included three looping video clips showing the four-chamber, two-chamber, and long-axis views, simultaneously, side by side, for up to 20 sec (see eVideo as ESM). For each exam, participants had to enter their estimation of the LVEF as an integer between 0% and 100% as soon as they could. The three clips disappeared from the screen if no response was provided within 20 sec, and the learner had to give an answer before receiving feedback. This brief feedback consisted of the expert consensus rating, displayed on screen together with the looping clips for an additional ten seconds. The maximum time to complete the 96 PLM exams was therefore 48 min.

The 96 PLM exams were grouped into eight blocks of 12 exams to track learner progress. Each block included three exams each of LVEF < 30%, LVEF 30–39%, LVEF 40–49%, and LVEF > 49%. The clips were randomly ordered within each block. Participants viewed blocks in the same order.
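The block structure and the 48-minute ceiling can be checked with a short sketch. The names `lvef_category` and `build_blocks`, the fixed random seed, and the way exams are drawn from each category are hypothetical; the text specifies only the category counts per block, the random ordering within blocks, and the 20-second and 10-second display times.

```python
import random

CATEGORIES = ["<30", "30-39", "40-49", ">49"]

def lvef_category(lvef):
    """Map an integer consensus LVEF (%) to one of the four study categories."""
    if lvef < 30:
        return "<30"
    if lvef < 40:
        return "30-39"
    if lvef < 50:
        return "40-49"
    return ">49"

def build_blocks(exams, n_blocks=8, per_category=3, seed=0):
    """Group (exam_id, consensus_lvef) pairs into stratified, shuffled blocks."""
    rng = random.Random(seed)
    pools = {c: [] for c in CATEGORIES}
    for exam_id, lvef in exams:
        pools[lvef_category(lvef)].append(exam_id)
    for pool in pools.values():
        rng.shuffle(pool)
    blocks = []
    for b in range(n_blocks):
        block = []
        for c in CATEGORIES:
            chunk = pools[c][b * per_category:(b + 1) * per_category]
            if len(chunk) < per_category:
                raise ValueError(f"not enough exams in category {c}")
            block.extend(chunk)
        rng.shuffle(block)  # random order within the block
        blocks.append(block)
    return blocks

# Upper bound on training time: 96 exams, each shown for at most 20 s,
# followed by 10 s of feedback.
MAX_VIEW_S = 20
FEEDBACK_S = 10
max_minutes = 96 * (MAX_VIEW_S + FEEDBACK_S) / 60  # 48.0
```

Each block then contains 12 exams (three per category), and eight blocks account for all 96 PLM exams.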

Performance test development

As in Romito et al.,12 we created two blocks of 20 exams (A and B) for testing purposes. Each block consisted of five exams each of LVEF < 30%, LVEF 30–39%, LVEF 40–49%, and LVEF > 49%. Visual presentation, random ordering, and prompting during testing were identical to those used in PLM training. Nevertheless, no feedback was provided during testing.

Study evaluation of PLM’s effect on LVEF assessment by novices

Fourth-year medical students and first-year residents from the University of Montreal were approached to participate in the study. Medical students were recruited during their clinical rotation in anesthesiology, and first-year residents following a short presentation given to their entire cohort. Candidates needed to have access to a high-speed internet connection and have no experience in echocardiography. All participants gave written informed consent via the platform.

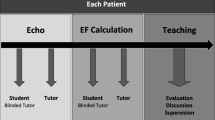

Participants were randomly assigned to four balanced groups (Fig. 1): first, to PLM or control and second, to receive test block A at pre-test and block B at post-test, or vice-versa.

All participants completed the assigned pre-test to establish a baseline rating of their ability to visually estimate LVEF. Participants in the PLM group then immediately underwent a single training session with the PLM. All participants subsequently completed their assigned post-test to measure their ability to visually estimate LVEF at the end of the first session. Controls thus visualized a total of 40 TEE exams (pre- and post-test), compared with 136 for the PLM group (20 pre-test, 96 PLM training, and 20 post-test). Participants were contacted via email six months after the first session and asked to complete a delayed post-test of the 20 TEE exams used in their baseline pre-test.

Outcomes

The primary outcome of the study was the ability to visually estimate LVEF, assessed by the absolute value of the difference between the participant and expert consensus ratings. The median of these absolute errors was used as a summary of performance on a particular block of exams. The secondary outcome was the time taken to complete the evaluations.
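As a minimal sketch of the primary outcome, the median absolute estimation error for one participant on one block could be computed as below. The function name and the example ratings are hypothetical and are not study data.

```python
from statistics import median

def median_absolute_error(participant, consensus):
    """Median of |participant rating - expert consensus| over a block of exams."""
    if len(participant) != len(consensus):
        raise ValueError("one participant rating is needed per exam")
    return median(abs(p - c) for p, c in zip(participant, consensus))

# Hypothetical ratings for a four-exam block (LVEF in %).
example_participant = [50, 35, 60, 20]
example_consensus = [55, 30, 45, 25]
example_error = median_absolute_error(example_participant, example_consensus)
```

In this toy block the absolute errors are 5, 5, 15, and 5 points, so the summary score is 5. Using the median rather than the mean keeps a single badly misjudged exam from dominating the score.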

Statistical analysis

Group performances at each time point are summarized as median [interquartile range (IQR)] (25th–75th percentiles) by bootstrapping. Wilcoxon tests were used to compare groups within each time point and participant progress across time points (empirical P values calculated by permutation testing). Logistic regression was used to test for informative drop-out at long-term post-test (e.g., if participants with poor performance were more likely to skip the long-term post-test). Mixed models were used to jointly analyze error on the 20 individual case results with time, treatment, time by treatment interaction, sex, student status, and actual LVEF (from expert ratings) as predictors, and compound-symmetric covariance matrix for the residuals. We found no evidence of subject-specific variance in intercept or slope parameters (results not shown). Psychometric analyses included Cronbach’s alpha to estimate each test battery’s internal consistency and continuous response models to assess individual item difficulty. Analyses were conducted with SAS 9.4 (SAS Institute Inc., Cary, North Carolina, USA).
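The resampling ideas mentioned above can be illustrated with a simplified sketch. It is not the SAS analysis used in the study: it computes a percentile bootstrap interval and a permutation P value for the difference in group medians, whereas the study compared groups with Wilcoxon tests. The function names, the number of resamples, and the seeds are assumptions.

```python
import random
from statistics import median

def bootstrap_median_diff_ci(a, b, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for median(b) - median(a)."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_boot):
        resample_a = [rng.choice(a) for _ in a]  # resample with replacement
        resample_b = [rng.choice(b) for _ in b]
        diffs.append(median(resample_b) - median(resample_a))
    diffs.sort()
    lower = diffs[int((alpha / 2) * n_boot)]
    upper = diffs[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper

def permutation_p_value(a, b, n_perm=2000, seed=0):
    """Two-sided permutation P value for the absolute difference in medians."""
    rng = random.Random(seed)
    observed = abs(median(b) - median(a))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # reassign group labels at random
        perm_a, perm_b = pooled[:len(a)], pooled[len(a):]
        if abs(median(perm_b) - median(perm_a)) >= observed:
            hits += 1
    # Add-one correction so the estimate is never exactly zero.
    return (hits + 1) / (n_perm + 1)
```

Here `a` and `b` would each hold one summary score per participant (for example, median absolute errors in the PLM and control groups).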

Power analysis

A pilot study used for grant application (n = 10 participants per group, 20 exams per test) suggested a PLM effect of 2.5 points on the primary outcome (standard deviation = 2.7; 95% confidence interval [CI], 0.82 to 4.5) for a standardized effect size D near 1.0. Power (α = 0.05) for a paired t test for n = 25 per group was estimated at over 90% for D = 1, over 80% for D > 0.4, and 55% for D = 0.30 (the smallest expected effect size).

Results

Fifty-two participants completed the first session (26 each in the PLM and control groups). PLM and control participants were similar in terms of median [IQR] age (24 [23–28] vs 25 [24–28]), sex (46% vs 42% female), and status (65% vs 73% medical students).

Immediate post-test

At baseline, the ability to visually estimate LVEF was similar in both groups (Fig. 2 and Table; median error 7.5 in the PLM group and 9.9 in controls, difference 2.4 points; 95% CI, -0.3 to 3.8; P = 0.23). At immediate post-test, LVEF errors were significantly lower in the PLM group than in controls (median error 6.1 in the PLM group and 9.0 in controls; difference 2.9 points; 95% CI, 1.0 to 4.6; P < 0.001).

Performance in the PLM group significantly improved from pre- to immediate post-test, from 7.5 to 6.1 (P = 0.02; difference 1.4 points; 95% CI, 0.33 to 4.24). There was no observable improvement in the control group (9.9 vs 9.0; P = 0.23; 95% CI, -0.57 to 2.34).

These observations are confirmed by a significant group by time interaction effect (P < 0.001) in the mixed model analysis, with the individual test-case results as multivariate outcomes, adjusted for sex and expert rating. Females scored slightly better (errors lower by 1.9 points; P < 0.02) and errors increased with expert rating (P < 0.001, discussed below). An analysis of covariance, controlling for baseline scores, led to the same conclusion (PLM vs control: P = 0.003).

In sum, three different approaches led to the same conclusion that the PLM has an effect at the immediate post-test: simple pairwise comparison, a fixed-effect model with post-test value as outcome and pre-test value as covariate, and a multivariate mixed model with time, time*group, and other covariates.

Long-term post-test

Seventeen participants from the PLM group and 13 from the control group completed the six-month post-test (65% and 50%, respectively). There was no association between the propensity to complete the delayed post-test with treatment group assignment, nor with performance at baseline and post-test (P = 0.70, 0.81, and 0.13, respectively, from a logistic regression). At six months, performance was identical in both groups (median, 10.0).

Estimation times

Median [IQR] estimation times decreased significantly in the control group, from 12.0 [8.4–6.1] sec at baseline to 10.2 [7.2–13.4] sec at post-test (P < 0.001), but remained unchanged in the PLM group (9.6 [8.3–11.7] sec at baseline vs 9.8 [8.1–12.6] sec at post-test).

Evolution of performance in the PLM group over time

Regarding the evolution of performance in the PLM group over time, it was not possible to determine a threshold after which learners’ performance plateaued. There seemed to be a decrease in accuracy improvement after the fourth or fifth block of cases in most learners (ESM eFig. 3), but this did not reach statistical significance.

Estimation error vs LVEF

The correlation between expert and participant ratings increased significantly in the PLM group from baseline to post-test (Spearman r, 0.57 vs 0.74) but remained unchanged in the control group (r, 0.54 vs 0.57). Nevertheless, participants tended to underestimate high LVEF and overestimate low LVEF (Fig. 3). If participants’ ratings were unbiased, a regression line of participant ratings on expert ratings would have an intercept of 0 and a slope of 1. This was examined more formally using a mixed model analysis of the individual outcomes, with expert rating as predictor, and allowing intercept and slope to vary by group and over time (pre-test vs post-test). At baseline, the common intercept and slope were 12.5 (95% CI, 10.92 to 14.07) and 0.66 (95% CI, 0.63 to 0.68), respectively. We found that participation in the PLM led to a significantly lower intercept (8.84; 95% CI, 6.34 to 11.34; decrease of 3.8 points; P = 0.01) and a slope closer to 1 (b = 0.75; 95% CI, 0.70 to 0.81; increase of 0.10; P = 0.007).
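To make the intercept and slope concrete, the sketch below treats the reported coefficients as a simple straight line (participant rating = intercept + slope × expert rating) and computes the implied bias at a given expert rating. This ignores the mixed-model structure of the actual analysis, and the helper names `fit_line`, `bias_at`, and `crossover` are hypothetical.

```python
def fit_line(expert, participant):
    """Ordinary least-squares intercept and slope of participant on expert ratings."""
    n = len(expert)
    mean_x = sum(expert) / n
    mean_y = sum(participant) / n
    sxx = sum((x - mean_x) ** 2 for x in expert)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(expert, participant))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

def bias_at(intercept, slope, expert_lvef):
    """Predicted participant rating minus expert rating (positive = overestimate)."""
    return intercept + slope * expert_lvef - expert_lvef

def crossover(intercept, slope):
    """Expert LVEF at which the predicted rating equals the expert rating."""
    return intercept / (1 - slope)

# Coefficients reported in the text.
baseline = (12.5, 0.66)
after_plm = (8.84, 0.75)

baseline_bias_low = bias_at(*baseline, 20)   # about +5.7 points
baseline_bias_high = bias_at(*baseline, 60)  # about -7.9 points
plm_bias_low = bias_at(*after_plm, 20)       # about +3.8 points
plm_bias_high = bias_at(*after_plm, 60)      # about -6.2 points
```

Under this simplification, both lines cross the identity line at an LVEF in the mid-30s (about 36.8 at baseline and 35.4 after the PLM), with overestimation below that point and underestimation above it. The implied bias at both 20% and 60% is smaller after the PLM.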

Individual case difficulty vs LVEF

Psychometric analyses were performed on both versions of the test, and on the individual exams they contained. Cronbach’s alpha was 0.72 and 0.75 for the two versions of the test, suggesting moderate internal consistency. There was evidence that some cases were more difficult than others. A continuous response model was used to determine individual exam difficulty and discriminatory power. No clear relationship was identified between LVEF estimation by the experts on an exam and its difficulty or its discriminatory power (see ESM eFig. 4).
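For readers unfamiliar with Cronbach's alpha, a minimal sketch of the standard formula is given below, with one row per participant and one column per exam. The function name and the example scores are hypothetical, and the study's own computation in SAS may differ in detail (for example, in how missing responses are handled).

```python
from statistics import variance

def cronbach_alpha(scores):
    """Cronbach's alpha for a participants x items table of scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(scores[0])
    items = list(zip(*scores))  # one tuple of scores per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)

# Hypothetical absolute errors for four participants on three exams.
example_scores = [
    [2, 3, 4],
    [4, 4, 5],
    [6, 5, 7],
    [8, 9, 8],
]
example_alpha = cronbach_alpha(example_scores)
```

Alpha approaches 1 when participants who do well on one exam tend to do well on the others, and falls toward 0 when item scores are unrelated.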

Discussion

In this study, participation in a short online PLM significantly improved novices’ short-term ability to accurately estimate LVEF visually, compared with controls. This is consistent with the positive effects of PLMs that have been shown for other clinical interpretation skills.9,10,11,12,17 Nevertheless, this effect is smaller than what has been reported elsewhere and was not sustained at six months.

Romito et al.,12 working on a module to improve students’ diagnostic skills in TEE, found a doubling of accuracy in the immediate post-test and a sustained effect at six months. These differences from our results may be explained by several factors. First, visually estimating LVEF accurately might be much harder to improve than recognizing completely different pathologies, the similarities between categories being much greater in the former than in the latter. It is likely that much more practice than the 48 minutes maximum offered in this study is necessary to approach the accuracy and reliability shown by experts.18,19 Second, the PLM in our study did not use adaptive technology to modify the case selection based on the participants’ previous responses, which may have reduced learning efficiency.7 Finally, this study used an experimental design20 that better controls for potential biases and confounding variables.21 Such biases may have inflated the apparent effect of the PLM in the study by Romito et al. and in other studies of PLMs in medical imaging,10,12,17 which used quasi-experimental designs without randomized allocation of subjects.

A strength of the blocked construction of our PLM is its potential ability to show a learning curve. Our results suggest a possible plateau in accuracy improvement after the fourth or fifth block, perhaps due to learner fatigue or decreasing concentration or motivation. Nevertheless, these differences in participant performance across blocks of cases during the PLM did not reach statistical significance, possibly because of insufficient power. Unequal block difficulty might also have played a role: we created blocks by matching cases for LVEF, expecting difficulty to be greater with intermediate LVEFs than in cases with very low or normal LVEFs. Nevertheless, in novices, LVEF turned out to be a poor predictor of case difficulty.

Contrary to other studies of PLMs, we did not find any sustained effect at six months. This might again be due to the difficulty of the skill being learned, and to the lack of practice during the six-month interval. The significant number of participants who did not take the delayed post-test in this study limits our ability to draw strong conclusions, although it is reassuring to find that the propensity to take the delayed post-test was unrelated to initial participant performance. A potential strategy to improve long-term skill retention would be to increase practice time (more cases). Another would be to space out the learning over multiple sessions. Distributed practice has indeed been shown to improve retention,22 and could also potentially help improve learner engagement: in the PLM group, two learners showed a significant deterioration of performance, possibly due to a loss of concentration or interest. Finally, to be most useful, PLMs will have to be integrated with other training strategies.

This study has limitations. Our design involved a control group who received no educational intervention. Since this study was the first attempt to test medical PLMs under fully experimental conditions, it was important to document any change in performance of the control group from pre- to immediate post-test, especially with regard to any effect of the testing itself, as was done with initial PLM testing in other domains.5 Indeed, participants could have become, with time, more adept at taking the test, independent of their proficiency at estimating LVEF.

Another limitation of this study is that transgastric views of the LV, commonly used by anesthesiologists performing TEE to estimate LVEF, were not included in the PLM. Indeed, in the absence of any previous literature to guide PLM design, only three midesophageal views were chosen to estimate LVEF. This decision was motivated by the fact that these three views combined images of all 17 left ventricular segments, and because adding extra views would have meant decreasing the size of each view on the screen, or using multiple screens for each case, increasing PLM complexity and time spent on each case. The excellent correlation between our experts’ estimations, made by visualizing the cases on the same interface and using the same views as the study participants, suggests the midesophageal views are sufficient to make useful estimates of LVEF.

Another limitation is that the choice of medical students and junior residents as the study population limits the generalization of our results to a population of more experienced learners.

This study focused specifically on improving visual estimation of LVEF without teaching quantitative measures like the Simpson’s method of disks.3 The objective was to isolate and train the important pattern recognition mechanism involved in visual estimations. Nevertheless, considering the poor accuracy of many visual estimations provided by the inexperienced participants of this and other studies,23 quantitative measures of LVEF must also be taught, and should be used whenever they are available and time permits.

In conclusion, this study shows that participation in a short online PLM significantly improved novices’ short-term ability to accurately estimate LVEF visually, compared with controls, but that the effect was not sustained at six months.

The PLM is available online at map.anesthesiologie.umontreal.ca

References

1. Hahn RT, Abraham T, Adams MS, et al. Guidelines for performing a comprehensive transesophageal echocardiographic examination: recommendations from the American Society of Echocardiography and the Society of Cardiovascular Anesthesiologists. J Am Soc Echocardiogr 2013; 26: 921-64.

2. Kirkpatrick JN, Grimm R, Johri AM, et al. Recommendations for echocardiography laboratories participating in cardiac point of care cardiac ultrasound (POCUS) and critical care echocardiography training: report from the American Society of Echocardiography. J Am Soc Echocardiogr 2020; 33: 409-22.e4.

3. Lang RM, Badano LP, Mor-Avi V, et al. Recommendations for cardiac chamber quantification by echocardiography in adults: an update from the American Society of Echocardiography and the European Association of Cardiovascular Imaging. J Am Soc Echocardiogr 2015; 28: 1-39.e14.

4. Nazarnia S, Subramaniam K. Role of simulation in perioperative echocardiography training. Semin Cardiothorac Vasc Anesth 2017; 21: 81-94.

5. Kellman PJ, Kaiser MK. Perceptual learning modules in flight training. Proceedings of the Human Factors and Ergonomics Society Annual Meeting 1994; DOI: https://doi.org/10.1177/154193129403801808.

6. Fahle M. Perceptual learning: gain without pain? Nat Neurosci 2002; 5: 923-4.

7. Kellman PJ, Krasne S. Accelerating expertise: perceptual and adaptive learning technology in medical learning. Med Teach 2018; 40: 797-802.

8. Guerlain S, Brook Green K, LaFollette M, et al. Improving surgical pattern recognition through repetitive viewing of video clips. 2004; 34: 699-707.

9. Sowden PT, Davies IR, Roling P. Perceptual learning of the detection of features in X-ray images: a functional role for improvements in adults’ visual sensitivity? J Exp Psychol Hum Percept Perform 2000; 26: 379-90.

10. Rimoin L, Altieri L, Craft N, Krasne S, Kellman PJ. Training pattern recognition of skin lesion morphology, configuration, and distribution. J Am Acad Dermatol 2015; 72: 489-95.

11. Speelman C, Martin K, Flower S, Simpson T. Skill acquisition in skin cancer detection. Percept Mot Skills 2010; 110: 277-97.

12. Romito BT, Krasne S, Kellman PJ, Dhillon A. The impact of a perceptual and adaptive learning module on transoesophageal echocardiography interpretation by anaesthesiology residents. Br J Anaesth 2016; 117: 477-81.

13. Kirkpatrick JD, Kirkpatrick WK. Kirkpatrick’s Four Levels of Training Evaluation. Alexandria, VA: Association for Talent Development; 2016. p. 256.

14. Schulz KF, Altman DG, Moher D; CONSORT Group. CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. Trials 2010; DOI: https://doi.org/10.1186/1745-6215-11-32.

15. Cheng A, Kessler D, Mackinnon R, et al. Reporting guidelines for health care simulation research: extensions to the CONSORT and STROBE statements. Adv Simul (Lond) 2016; DOI: https://doi.org/10.1186/s41077-016-0025-y.

16. Burwash IG, Basmadjian A, Bewick D, et al. 2010 Canadian Cardiovascular Society/Canadian Society of Echocardiography Guidelines for Training and Maintenance of Competency in Adult Echocardiography. Can J Cardiol 2011; 27: 862-4.

17. Krasne S, Hillman JD, Kellman PJ, Drake TA. Applying perceptual and adaptive learning techniques for teaching introductory histopathology. J Pathol Inform 2013; DOI: https://doi.org/10.4103/2153-3539.123991.

18. Mueller X, Stauffer JC, Jaussi A, Goy JJ, Kappenberger L. Subjective visual echocardiographic estimate of left ventricular ejection fraction as an alternative to conventional echocardiographic methods: comparison with contrast angiography. Clin Cardiol 1991; 14: 898-902.

19. Gudmundsson P, Rydberg E, Winter R, Willenheimer R. Visually estimated left ventricular ejection fraction by echocardiography is closely correlated with formal quantitative methods. Int J Cardiol 2005; 101: 209-12.

20. Seel NM. Experimental and quasi-experimental designs for research on learning. In: Seel NM, editor. Encyclopedia of the Sciences of Learning. Boston, MA: Springer; 2012. https://doi.org/10.1007/978-1-4419-1428-6_716.

21. Campbell DT, Stanley JC. Experimental and quasi-experimental designs for research on teaching. In: Gage NL, editor. Handbook of Research on Teaching. Chicago: Rand McNally; 1963. p. 171-246.

22. Dunlosky J, Rawson KA, Marsh EJ, Nathan MJ, Willingham DT. Improving students’ learning with effective learning techniques: promising directions from cognitive and educational psychology. Psychol Sci Public Interest 2013; 14: 4-58.

23. Cole GD, Dhutia NM, Shun-Shin MJ, et al. Defining the real-world reproducibility of visual grading of left ventricular function and visual estimation of left ventricular ejection fraction: impact of image quality, experience and accreditation. Int J Cardiovasc Imaging 2015; 31: 1303-14.

Author contributions

Philippe Champagne helped with study design, data collection, and writing a first draft of the paper. François Girard helped with study design, data interpretation, and reviewing the paper. Véronique Cyr and Giovanni Romanelli helped with study design, provided expert exam validation, and reviewed the paper. Monique Ruel helped with study design, data collection, and reviewed the paper. Alexandre Todorov helped with study design, data interpretation, and reviewed the paper. Arnaud Robitaille was responsible for study conception, data collection, data interpretation, and writing the final draft of the paper.

Disclosures

None.

Funding statement

This work was supported by research funds provided by Jean-François Hardy MD, by the Fédération des médecins résidents du Québec, and by the Department of anesthesiology and pain medicine, Université de Montréal.

Editorial responsibility

This submission was handled by Dr. Philip M. Jones, Deputy Editor-in-Chief, Canadian Journal of Anesthesia/Journal canadien d’anesthésie.

Supplementary Information

Below is the link to the electronic supplementary material.

Supplementary material 3 (MP4 50064 kb) eVIDEO Demonstration of perceptual learning modules in both the testing and learning modes.

Cite this article

Champagne, P., Girard, F., Cyr, V. et al. The impact of a perceptual learning module on novices’ ability to visually estimate left ventricular ejection fraction by transesophageal echocardiography: a randomized controlled study. Can J Anesth/J Can Anesth 68, 1527–1535 (2021). https://doi.org/10.1007/s12630-021-02066-3
