Abstract

This study compares the rating behavior of Korean and native English-speaking (NES) raters. Five Korean English teachers and five NES teachers graded 420 essays written by Korean college freshmen and completed survey questionnaires. The grading data were analyzed with the FACETS program. The results revealed that the Korean raters were inferior to the NES raters in measuring linguistic components. Furthermore, the Korean raters were more severe in scoring grammar, sentence structure, and organization, whereas the NES raters were stricter on content and overall scores. In addition, analysis of the survey responses showed that the Korean (nonnative-speaking, NNS) raters were split between content and grammar as the most difficult feature to grade, whereas all NES raters considered content the most difficult. Based on these findings, implications and suggestions for future research are discussed.
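For readers unfamiliar with FACETS, the program estimates many-facet Rasch models. The abstract does not state the exact model specification used in the study, so the following is only a sketch of the standard rating-scale form of the model, with the facets (writer, feature, rater) assumed from the study design described above.

```latex
% Standard many-facet Rasch model (rating-scale form).
% The facet assignment below is an assumption based on the abstract,
% not the specification reported by the author.
\[
  \log\!\left(\frac{P_{nijk}}{P_{nij(k-1)}}\right) = B_n - D_i - C_j - F_k
\]
% P_{nijk}     : probability that writer n receives category k on feature i from rater j
% P_{nij(k-1)} : probability of category k-1 under the same conditions
% B_n          : ability of writer n
% D_i          : difficulty of feature i (e.g. content, grammar)
% C_j          : severity of rater j
% F_k          : difficulty of the step from category k-1 to category k
```

Under this form, a larger $C_j$ lowers the log-odds of the higher category, which is the sense in which one rater is "more severe" than another on the same essays.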



Author information

Authors and Affiliations

Corresponding author

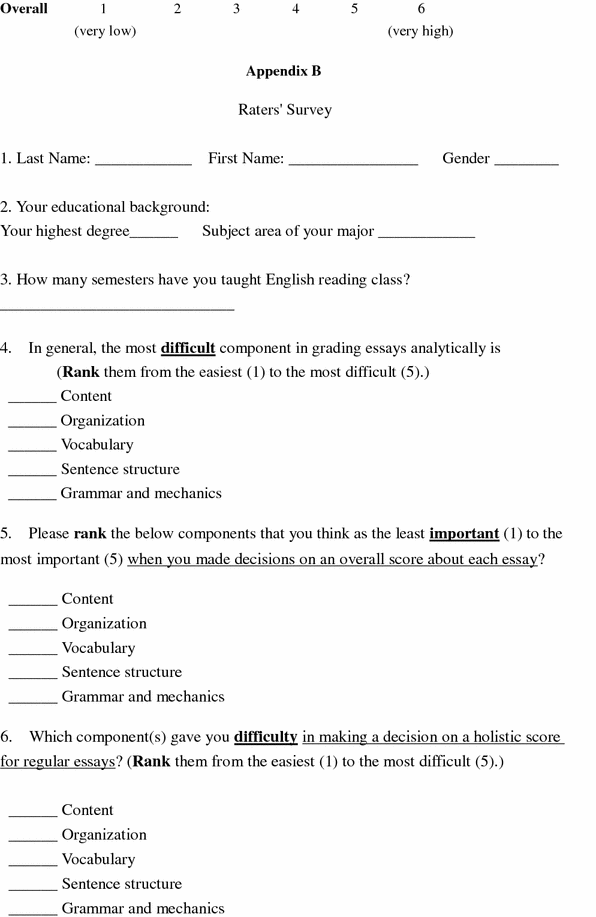

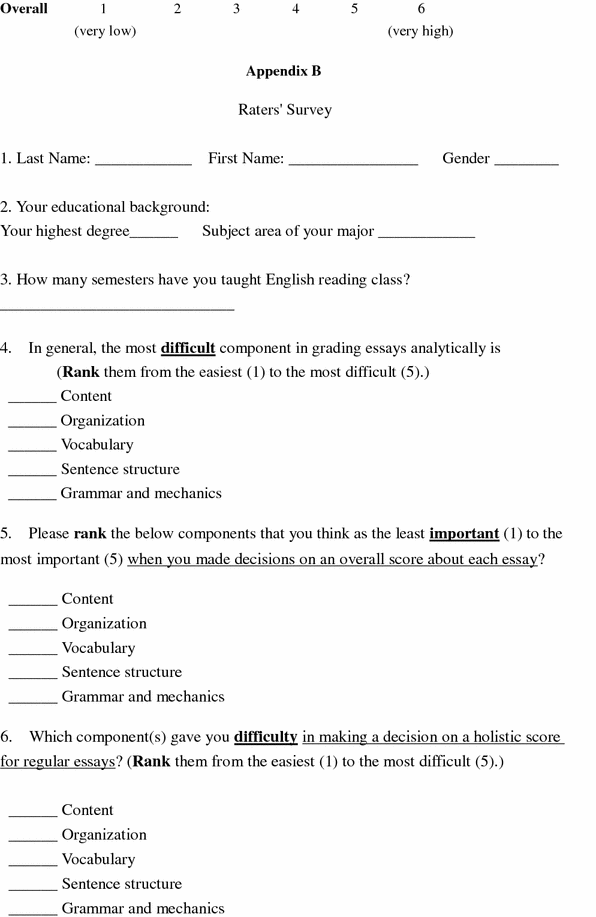

Appendices

Appendix A: Feature analysis form

Essay ID: ______________ Reader’s name: ________________

Directions: There are six statements for each feature. Please CIRCLE the statement that you think best represents the feature of the essay.

Content

- 6: effectively and insightfully develops a point of view on the issue and demonstrates outstanding critical thinking, using clearly appropriate examples, reasons, and other evidence to support its position
- 5: effectively develops a point of view on the issue and demonstrates strong critical thinking, generally using appropriate examples, reasons, and other evidence to support its position
- 4: develops a point of view on the issue and demonstrates competent critical thinking, using adequate examples, reasons, and other evidence to support its position
- 3: develops a point of view on the issue, demonstrating some critical thinking, but may do so inconsistently or use inadequate examples, reasons, or other evidence to support its position
- 2: develops a point of view on the issue that is vague or seriously limited, and demonstrates weak critical thinking, providing inappropriate or insufficient examples, reasons, or other evidence to support its position
- 1: develops no viable point of view on the issue, or provides little or no evidence to support its position

Organization

- 6: is well organized and clearly focused, demonstrating clear coherence and smooth progression of ideas
- 5: is well organized and focused, demonstrating coherence and progression of ideas
- 4: is generally organized and focused, demonstrating some coherence and progression of ideas
- 3: is limited in its organization or focus, or may demonstrate some lapses in coherence or progression of ideas
- 2: is poorly organized and/or focused, or demonstrates serious problems with coherence or progression of ideas
- 1: is disorganized or unfocused, resulting in a disjointed or incoherent essay

Vocabulary

- 6: exhibits a skillful use of language, using a varied, accurate, and apt vocabulary
- 5: exhibits facility in the use of language, using appropriate vocabulary
- 4: exhibits adequate but inconsistent facility in the use of language, using generally appropriate vocabulary
- 3: displays developing facility in the use of language, but sometimes uses weak vocabulary or inappropriate word choice
- 2: displays very little facility in the use of language, using very limited vocabulary or incorrect word choice
- 1: displays fundamental errors in vocabulary

Sentence structure

- 6: demonstrates meaningful variety in sentence structure
- 5: demonstrates variety in sentence structure
- 4: demonstrates some variety in sentence structure
- 3: lacks variety or demonstrates problems in sentence structure
- 2: demonstrates frequent problems in sentence structure
- 1: demonstrates severe flaws in sentence structure

Grammar and mechanics

- 6: is free of most errors in grammar, usage, and mechanics
- 5: is generally free of most errors in grammar, usage, and mechanics
- 4: has some errors in grammar, usage, and mechanics
- 3: contains an accumulation of errors in grammar, usage, and mechanics
- 2: contains errors in grammar, usage, and mechanics so serious that meaning is somewhat obscured
- 1: contains pervasive errors in grammar, usage, or mechanics that persistently interfere with meaning
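A completed form amounts to one score from 1 to 6 on each of the five features for a given essay and reader. The following is a minimal sketch of how such a record might be stored and checked before analysis. The class name, field names, and feature keys are hypothetical and are not taken from the study.

```python
from dataclasses import dataclass

# Feature keys are illustrative names for the five features on the form.
FEATURES = (
    "content",
    "organization",
    "vocabulary",
    "sentence_structure",
    "grammar_mechanics",
)


@dataclass(frozen=True)
class FeatureAnalysis:
    """One reader's completed form for one essay (hypothetical structure)."""

    essay_id: str
    reader: str
    scores: dict  # maps each feature key to the circled level, 1..6

    def __post_init__(self):
        # Every feature must be scored exactly once, with no extra keys.
        if set(self.scores) != set(FEATURES):
            raise ValueError("scores must cover exactly the five features")
        # Each circled statement corresponds to an integer level from 1 to 6.
        for feature, level in self.scores.items():
            if not isinstance(level, int) or not 1 <= level <= 6:
                raise ValueError(f"{feature}: level must be an integer 1-6")


# Usage with made-up values, for illustration only.
form = FeatureAnalysis(
    essay_id="E001",
    reader="R01",
    scores={
        "content": 4,
        "organization": 3,
        "vocabulary": 4,
        "sentence_structure": 3,
        "grammar_mechanics": 2,
    },
)
```

Rejecting incomplete or out-of-range forms at entry keeps invalid ratings out of any later severity analysis.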


Cite this article

Lee, HK. Native and nonnative rater behavior in grading Korean students’ English essays. Asia Pacific Educ. Rev. 10, 387–397 (2009). https://doi.org/10.1007/s12564-009-9030-3


DOI: https://doi.org/10.1007/s12564-009-9030-3