Abstract

The subjectivity and inaccuracy of in-clinic Cognitive Health Assessments (CHA) have led many researchers to explore ways to automate the process to make it more objective and to meet the needs of the healthcare industry. Artificial Intelligence (AI) and machine learning (ML) have emerged as the most promising approaches to automate the CHA process. In this paper, we explore the background of CHA and delve into the extensive research recently undertaken in this domain to provide a comprehensive survey of the state-of-the-art. In particular, a careful selection of significant works published in the literature is reviewed to elaborate a range of enabling technologies and AI/ML techniques used for CHA, including conventional supervised and unsupervised machine learning, deep learning, reinforcement learning, natural language processing, and image processing techniques. Furthermore, we provide an overview of various means of data acquisition and the benchmark datasets. Finally, we discuss open issues and challenges in using AI and ML for CHA along with some possible solutions. In summary, this paper presents CHA tools, lists various data acquisition methods for CHA, describes technological advancements, reviews the usage of AI for CHA, and outlines open issues and challenges in the CHA domain. We hope this first-of-its-kind survey paper will significantly contribute to identifying research gaps in the complex and rapidly evolving interdisciplinary mental health field.


Introduction

Cognitive health assessments (CHA) measure an individual’s cognitive abilities and functioning. These assessments are typically used in diagnosing and monitoring conditions that affect cognitive abilities, such as dementia, Alzheimer’s disease, and other forms of cognitive decline. They can also assess cognitive abilities in individuals with brain injuries and neurological conditions. CHA typically involves a series of tests and tasks designed to evaluate specific aspects of cognitive function, such as memory, attention, language, reasoning, and perception. Healthcare professionals, such as neurologists, psychologists, or occupational therapists, usually administer these assessments. The dysfunction or inability of the brain to perform cognitive functions such as learning, thinking, remembering, comprehension, decision-making, and attention has been termed Cognitive Impairment (CI) [1]. A natural cognitive decline occurs as one ages; however, if this decline is more than what usually comes with age, it is termed the onset of Mild Cognitive Impairment (MCI) [2]. MCI can be triggered by stress, depression, stroke, brain injury, and other underlying health conditions. The condition gradually progresses from Early Mild Cognitive Impairment (EMCI) to MCI to Late Mild Cognitive Impairment (LMCI), and finally, to Alzheimer’s Disease (AD). Although AD is the most frequent type of dementia, MCI could progress to dementia with Lewy bodies as well. The impairment in all these stages affects a patient’s daily activities and can show varying degrees of cognitive decline symptoms [3, 4]. Figure 1 presents the early signs of MCI.

According to the Alzheimer’s Association, a leading voluntary health organisation in Alzheimer’s care, support, and research, the number of AD patients is expected to rise to 13.8 million by 2050. The overall cost of care and management of AD is very high; in 2019 alone, it was valued at $290 billion. It has been observed that diagnosing and managing MCI provides medical benefits and reduces long-term care costs. Forecasts suggest that early diagnosis at the MCI stage could bring healthcare costs down by $231 billion in the year 2050 [5].

MCI screening and early intervention are currently the most widely accepted strategy for managing AD. Diagnosis of MCI is established through various assessments. These assessments not only help diagnose MCI but also help characterise cognition at that stage; hence, they may help in understanding the cognitive pathophysiology [6]. Unfortunately, the lack of proper guidelines and standardised screening has resulted in undiagnosed MCI. Consequently, progression of the disease and decline in cognitive health are becoming more common. With the advent of the Internet of Medical Things (IoMT) and Artificial Intelligence (AI), several researchers have proposed automated assessment techniques to improve the accuracy of diagnosis. This has been driven by AI and Machine Learning (ML) due to their recent contributions to methodological developments in diverse problem domains, including computational biology [7, 8], cyber security [9,10,11,12], disease detection [13,14,15,16,17,18,19] and management [20,21,22,23,24,25], elderly care [26, 27], epidemiological study [28], fighting pandemic [29,30,31,32,33,34,35], healthcare [36,37,38,39,40], healthcare service delivery [41,42,43], natural language processing [44,45,46,47,48], social inclusion [49,50,51] and many more. This paper is a detailed study of different automated CHA techniques and technologies available in the literature, their challenges, and the future prospects of objectively assessing cognitive health using state-of-the-art technology. The notations used in the paper are listed in Table 1.

Existing Studies

Misdiagnosed or undetected CI can lead to a rapid decline in the mental health of a patient and can rapidly progress to AD. Many researchers have been working to develop systems that use different technologies to automate CI assessment, leading to accurate and timely prediction of MCI so that its progression can be slowed down and effective management of the disease can be put in place to improve the quality of life of the patients. Several reviews on similar topics have assessed the validity of different AI tools and techniques used for cognitive health management.

Authors in [52] reviewed different AI techniques used in detecting AD from MRI images. They discussed in detail the different MRI image-based datasets commonly used by researchers and the most prominent AI tools, like ML and CNN, used for feature extraction and classification of AD. The authors have explored only the image-based datasets and their efficacy in CHA.

Authors in [53] provided another review that discusses the AI approaches to predict cognitive decline in the elderly. The authors highlighted six different areas of features and datasets that researchers have used in employing AI techniques. These include socio-demographic data, clinical and psychometric assessments, neuroimaging and neurophysiological data, Electronic Health Record (EHR) data and claims data, various assessment data (e.g., sensor, handwriting, and speech analyses), and genomic data. Furthermore, the authors discussed the future prospects and the ethical use of AI in CHA. One limitation of the paper was that it lacked an in-depth analysis of different AI tools and techniques.

Authors in [54] reviewed the different AI techniques like ML-supervised learning, unsupervised learning, Deep Learning (DL), and Natural Language Processing (NLP) to predict AD or develop diagnostic markers for AD. The authors discuss how researchers have used AI techniques to obtain and analyse data to predict AD. Augmented Reality (AR), Kinematic Analysis (KA), and Wearable Sensors (WS) have also been discussed as tools to establish digital biomarkers for AD. In this review, the discussion on AI techniques is brief and does not include the state-of-the-art AI tools for disease prediction and management.

Authors in [55] reviewed computer-aided diagnosis tools in four areas: 1) the use of AI tools for early detection of AD, 2) identifying MCI subjects who are at risk of AD, 3) predicting the course and progression of the disease, and 4) the use of AI in precision medicine for AD. The review discussed the promising work of different researchers using ML, DL, NLP, Virtual Reality (VR), WS, etc., to analyse patient data. The authors discussed the possibility of precision medicine for AD using AI by highlighting novel research based on layered clusters.

Authors in [56] presented a detailed review of existing automated approaches used in neurodegenerative disorders, like AD, Parkinson’s Disease (PD), Huntington’s Disease (HD), Amyotrophic Lateral Sclerosis (ALS), and Multiple System Atrophy (MSA). It also includes a discussion on open challenges and future perspectives. The authors analysed symptom-wise computational methods that different researchers have used along with the classification methodologies and datasets used for each disease and their symptoms.

Authors in [57] presented a systematic literature review of 51 articles on using AI, speech, and language processing to predict CI and AD. The authors categorised the features into text-based and speech-based and further elaborated on the speech and text-based datasets that different researchers have used in identifying digital biomarkers that can be assessed using ML. A detailed analysis of the current research regarding objectives, population samples, datasets and data types used, feature extraction methods, pre-processing techniques, etc. has been performed. Unfortunately, the authors explored only one modality, analysing the role of natural language and speech processing in prediction and diagnosis.

Authors in [58] reviewed 11 research studies that discuss different computerised assessment techniques like tablet-based cognitive assessment, remotely administered tablet and smartphone-based cognitive assessment, and other recent data collection systems and analysis procedures like speech, eye movement, and spatial navigation assessment. A study of their validation with AD biomarkers has been presented along with their potential in evaluating AD. This work just discussed the data acquisition techniques. It lacked a discussion on the potential barriers to implementation, future challenges, and data security issues.

Authors in [59] provided a systematic literature review on the significance of technologies producing digital biomarkers used for home-based monitoring of MCI and AD. The authors gathered research and categorised them according to the mode of automatic data collection via different sensors and other modalities, e.g., data from embedded or passive sensors in homes and cars, data from dedicated wearable sensors, data from smartphone-based automated interviews, Nintendo Wii, and VR. Also data obtained from secondary sources of everyday computer use and Activities of Daily Living assessment were included. Very little discussion regarding the different AI tools used in the domain and the future challenges was presented in this paper.

Authors in [60] also reviewed 16 papers discussing 11 assessment tools conducted over a computer, laptop, tablet, or touchscreen. The authors then evaluated all the outcomes and compared the accuracy, sensitivity, specificity, and Area Under the Curve (AUC) measure. Authors in [61] compared different ML algorithms to detect and diagnose dementia. The authors suggested a model whereby ML and MRI scan images can yield the best results. Authors in [62] reviewed different applications used for medical assessment of dementia and AD and presented a summary of features evaluated by different AD screening apps. The review further listed the cognitive assessment tools used in various apps and their validity measures, and reported their sensitivity and specificity to evaluate the performance of these screening tools. The limitation of this paper is that the authors have only explored the use of smartphones for cognitive assessment.
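The measures compared in these reviews (accuracy, sensitivity, specificity, and AUC) can be illustrated with a minimal Python sketch. It is not taken from any of the cited works: the function names and the toy labels are hypothetical, and the label convention (1 = impaired, 0 = not impaired) is an assumption made for illustration.

```python
from typing import Dict, Sequence


def screening_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, sensitivity and specificity for binary labels (1 = impaired)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative case")
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn),  # share of impaired cases that were detected
        "specificity": tn / (tn + fp),  # share of unimpaired cases correctly cleared
    }


def auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a random positive case receives a higher
    score than a random negative case (ties count as one half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy example with hypothetical labels, predictions and scores.
labels = [1, 1, 1, 0, 0, 0, 0, 1]
predictions = [1, 1, 0, 0, 0, 1, 0, 1]
risk_scores = [0.9, 0.8, 0.4, 0.3, 0.2, 0.6, 0.1, 0.7]
print(screening_metrics(labels, predictions))
print(auc(labels, risk_scores))
```

Sensitivity and specificity depend on the decision threshold applied to a tool's score, whereas AUC summarises ranking quality over all thresholds, which is one reason reviews tend to report them together.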

Table 2 concisely compares the important survey papers and highlights the existing surveys’ gaps, focusing on the different AI techniques used to diagnose and predict CI using different data modalities. Considering the limitations and gaps of the existing surveys, there is a strong need for research focusing on the different state-of-the-art AI techniques for a precise and timely diagnosis of the disease to slow down cognitive decline and its progression.

Research Methodology

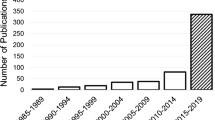

In formulating this research paper, we employed the systematic literature review process defined by the benchmark PRISMA approach. First, the existing surveys in the domain of AI-based CHA were analysed to identify the research gaps. Keeping the research gaps in mind, an electronic search was performed using the keywords “Cognitive” and/or “Assessment” and/or “Impairment” and/or “Mild Cognitive Impairment” and/or “Dementia” and/or “Alzheimer’s” and/or “Artificial Intelligence”, over different digital repositories like Google Scholar, IEEE, Elsevier, Springer and ACM to find papers relevant to our study. Only high-quality peer-reviewed articles from reputed journals, conferences, workshops, and books were selected. Papers were included based on relevancy, accuracy, and timeliness. All research studies published before the year 2018 were excluded. After performing the initial search, screening and shortlisting were done systematically. Initially, articles were screened based on their titles and relevance to the research objectives, and then they were further shortlisted by carefully reviewing the abstracts and contributions of the articles. Low-quality and irrelevant papers were excluded at each stage. The selected articles were then scrutinised. Finally, the articles referenced in this survey were selected after a meticulous study of each.
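The inclusion criteria described above can be expressed as a simple filter. The sketch below is illustrative only and does not reproduce the tooling actually used for this survey: the record fields (`title`, `abstract`, `year`, `peer_reviewed`), the rule that a single keyword match suffices, and the use of the stem "alzheimer" to sidestep apostrophe variants are all assumptions.

```python
from typing import Dict, List

# Search keywords from the text, lower-cased for case-insensitive matching.
KEYWORDS = [
    "cognitive",
    "assessment",
    "impairment",
    "mild cognitive impairment",
    "dementia",
    "alzheimer",
    "artificial intelligence",
]
MIN_YEAR = 2018  # studies published before 2018 are excluded


def passes_screening(record: Dict) -> bool:
    """Return True if a record meets the (assumed) inclusion criteria."""
    text = f"{record['title']} {record['abstract']}".lower()
    has_keyword = any(keyword in text for keyword in KEYWORDS)
    return bool(record["peer_reviewed"] and record["year"] >= MIN_YEAR and has_keyword)


def screen(records: List[Dict]) -> List[Dict]:
    """Keep only the records that pass the screening rule."""
    return [record for record in records if passes_screening(record)]
```

A rule like this covers only the automatic part of the process. Judging relevance from abstracts and contributions, and assessing quality, remain manual steps.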

Motivation

With the prevalence of cognitive diseases, timely diagnosis and management are essential. At the current pace, it is estimated that by 2050 the cost of disease management and care services will increase 9.5 times worldwide, amounting to approximately 9.1 trillion dollars [63]. Delayed or missed diagnosis has been the leading cause of the prevalence of this disease. It has been observed that MCI can result in an early onset of AD and dementia. Early, timely and accurate diagnosis is the key to managing and delaying the onset of the disease and reducing the related health risks. We performed extensive research to compile different studies in the domain of early detection of CI. Existing surveys lack an in-depth search of all the AI techniques used for MCI, AD, and dementia detection using different data acquisition means and modalities. Existing systematic literature reviews and surveys mostly focus on a single data modality, such as neuroimaging, speech, or language datasets, and explore the different AI techniques used in detecting and diagnosing MCI, AD, and dementia. Some research papers only briefly overview the different AI techniques employed in the domain. Only two works explored the different datasets that researchers have used.

To the best of our knowledge, no comprehensive survey has been compiled that discusses all the different AI techniques applied to different data modalities used for automated CHA, their challenges, the state-of-the-art technology, open issues, and future directions. Our work will assist future authors in developing solutions covering the identified research gaps and enhancing the CI assessment process so that the progression to AD can be reduced by timely management and intervention. Furthermore, this detailed survey will help identify the evaluation techniques that are most effective and accurate in the early detection of CI and predicting cognitive disease progression.

Contributions

This paper is a detailed survey and analysis of all major AI-based CHA techniques and approaches. It also highlights the challenges and future directions in the domain. The automation of cognitive assessment is rapidly progressing, transforming the process into an accurate, dependable, and objective one. Documenting and summarising all the findings into one comprehensive survey is the need of the hour so that future researchers can explore the research gaps and further improve the assessment process. Among the main contributions of the current paper are the following:

1. We analyse the existing clinical cognitive assessment tools, their drawbacks, and the evolution of cognitive assessment tools and techniques through the incorporation of AI techniques.

2. We highlight the different popular data acquisition methods currently used in obtaining data regarding CI individuals.

3. We identify and evaluate the latest advancements in technology which can uplift the health industry and reduce the financial burden caused by misdiagnosis of CI and AD.

4. We explore the different AI techniques researchers use, like conventional ML, DL, and NLP.

5. Lastly, we discuss the challenges and future directions in the domain of CHA, hence presenting an overview of the research gaps in the area.

Organisation

“Introduction” section introduces the paper which includes a discussion on the present background of CHA, a look at the existing literature reviews and surveys on similar topics, our motivation to compile this survey, and its contributions to the research domain. “Background and Enabling Technologies for Automated Cognitive Health Assessment” section explains all the enabling technologies used for CHA, like IoT and cloud computing. This is followed by “Artificial Intelligence Techniques for Assessing Cognitive Health” section, which provides a detailed discussion of the different AI techniques researchers use for CHA. “Data Acquisition Channels” section explores different data acquisition methods used in acquiring data to analyse using AI techniques. “Cognitive Health Assessment Datasets” section presents a comprehensive table highlighting the different datasets researchers have used for CHA. Finally, “Challenges and Open Issues and Future Directions” section discusses the challenges, open issues, and future directions in the field of AI for CHA. Figure 2 shows the structure of the paper.

Background and Enabling Technologies for Automated Cognitive Health Assessment

This section presents the existing background and enabling technologies for automated cognitive health assessment.

Present Background of Cognitive Healthcare Assessment

The current diagnosis of cognitively impaired individuals is a step-by-step process. A patient undergoes an initial assessment at a local clinic. Further testing is done at the neurological centre. Various screening tools like self-administered questionnaires, interview-administered assessments, telephone-based assessments, and iPad assessment versions have been introduced to screen patients with cognitive symptoms [64]. According to PubMed, roughly eight cognitive domains are evaluated in a cognitive assessment: sensation, perception, motor skills and construction, attention and concentration, memory, executive functioning, processing speed, and language and verbal skills [65]. To assess these domains, several screening tools have been used in the past ten years [64, 66]. Table 3 lists some of these. Furthermore, Fig. 3 presents the cognitive assessment domains.

The available objective methods for assessing Cognitive Impairment are frequently based on interview-based and self-administered questionnaires or are conducted in the clinical setting where an artificial environment can influence response, thus increasing the possibility for biases and misinformation. Automating the assessment and prediction process is the key to timely diagnosis and management.

Enabling technologies (i.e. Internet of Things (IoT), Cloud Computing, Edge Computing, Internet of Medical Things (IoMT), Big Data Analytics, 5G and Beyond, Ubiquitous Computing, and Virtual Reality) play a vital role in improving the lifestyle of cognitively impaired individuals. This section describes the enabling technologies for automated cognitive health assessment.

Internet of Things

IoT is one key enabling technology for automated cognitive health assessment. IoT is a vast linked network of components that enables smart devices to detect, distribute, and evaluate data, and these devices are deployed in domains such as food chain management, healthcare monitoring, smart homes, smart cities, and environmental monitoring [69, 70]. There has been a considerable increase in IoT devices like smartwatches, sensors, smoke detectors, heartbeat monitors, smart homes, and smartphones. There were 8.4 billion IoT devices in 2020, a number expected to reach 20.4 billion in 2022 [71]. IoT is growing significantly in the healthcare sector, although adoption is slowed by issues such as scalability, accepted standards, heterogeneity, and integration into IT infrastructure. Wearable devices are gaining popularity in IoT as a way to monitor patients’ health. For the duration of medical examination and treatment, wearable devices are attached to an individual’s body to collect patient health data.

Smart Home

IoT significantly improves living standards by introducing smart homes. A smart home enables cognitively impaired individuals to perform daily routines, from simple to complex tasks. Various sensors embedded in smart homes, like temperature, motion, heat, and light sensors, are intelligent enough to make decisions for the smart home environment. In the healthcare system, smart homes are used for activity recognition to promote sustainability and improve the lifestyle of cognitively impaired individuals [72]. The activity recognition process recognises the individual’s daily activities, such as preparing food, eating, drinking, toileting, reading newspapers or books, walking, sleeping, watching TV, and home cleaning. Several techniques are available for activity recognition, like on-body intrusive sensors, on-body non-intrusive sensors, and wearable devices [73]. Still, smart homes and smartphones are frequently used due to their non-obtrusive nature.
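As a rough illustration of activity recognition from ambient sensors, the following Python sketch groups time-stamped sensor events into activity episodes using a fixed lookup table. It is a deliberately simple rule-based stand-in, not a method from the cited works: the sensor identifiers, the sensor-to-activity mapping, and the event format are hypothetical, and practical systems typically learn such mappings from data.

```python
from typing import List, Tuple

# Hypothetical mapping from sensor identifiers to daily activities.
SENSOR_TO_ACTIVITY = {
    "kitchen_motion": "preparing food",
    "bed_pressure": "sleeping",
    "tv_power": "watching TV",
    "bathroom_motion": "toileting",
}

Event = Tuple[int, str]          # (timestamp in seconds, sensor identifier)
Episode = Tuple[str, int, int]   # (activity, start time, end time)


def segment_activities(events: List[Event]) -> List[Episode]:
    """Merge consecutive events that map to the same activity into one episode."""
    episodes: List[Episode] = []
    for timestamp, sensor in sorted(events):
        activity = SENSOR_TO_ACTIVITY.get(sensor)
        if activity is None:
            continue  # ignore sensors with no known activity mapping
        if episodes and episodes[-1][0] == activity:
            # Same activity as the previous episode: extend its end time.
            episodes[-1] = (activity, episodes[-1][1], timestamp)
        else:
            episodes.append((activity, timestamp, timestamp))
    return episodes


events = [
    (0, "bed_pressure"),
    (3600, "bed_pressure"),
    (3700, "kitchen_motion"),
    (3900, "kitchen_motion"),
    (3950, "hall_motion"),
    (4000, "tv_power"),
]
print(segment_activities(events))
```

Episode lists of this kind can then be summarised (for example, time spent per activity per day) and passed to a downstream model or reviewed by a clinician.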

The motivation for using IoT for cognitive health assessment is to provide immediate care and treatment to cognitively impaired individuals. IoT devices help keep track of cognitively impaired individuals’ daily activities so that they can perform daily life tasks efficiently. IoT devices also allow healthcare professionals to monitor cognitively impaired individuals remotely.

The healthcare sector faces several significant challenges in the security and privacy of individual data. IoT devices help collect data for efficient treatment, but private data may be lost. The availability of IoT devices in the healthcare system makes personal data a more valuable target for cyber attackers. Data analysis is also a challenge: the growing number of sensors, smart devices, and connected things generates a vast volume of data on a daily basis, which makes it difficult to analyse the data and extract exactly what is needed for treatment. Connectivity is a further issue. A disruption in internet connectivity interrupts the interaction between healthcare professionals and cognitively impaired individuals, which can be disastrous for the monitoring process. In summary, IoT is a vast linked network that has gained immense importance in the healthcare system. Its primary purpose in cognitive health assessment is to assess people’s mental health and provide immediate care and treatment, while its main challenges relate to privacy and security, data analysis, and interruptions in internet connectivity.

Cloud Computing

Cloud computing has become a basic need of the healthcare industry due to complete access, automated backups, and disaster recovery options. Cloud computing provides an adaptable architecture where data is accessible from different locations without being lost [74, 75]. In the healthcare system, cloud computing offers a new level of safety and low-cost treatment. It helps healthcare professionals conduct voice and video appointments and access patients’ required private data for better treatment.

Cloud computing is very helpful in the healthcare sector. Telemedicine is the major motivation for using cloud computing for cognitive health assessment, as healthcare professionals can access a patient’s clinical history and mobile data through mobile telemedicine applications [76]. Thus, cloud computing provides an opportunity to deliver faster services, share information easily, improve operational efficiency, and streamline costs.

Cloud computing is an emerging technology and faces many challenges in information handling in the healthcare industry. Security is the most common concern of cloud computing in healthcare. HIPAA compliance, data protection, and infrastructure changes are a few of the problems in cloud computing.

Cloud computing is a new model for computing resources and self-service internet infrastructure. It improves patients’ immediate care and treatment at a low cost, and healthcare professionals can easily access patients’ clinical histories. Cloud computing also faces security and privacy problems in the healthcare sector. However, it has been noted that cyber-attacks have potentially been reduced with cloud computing compared to other storage media [77].

Edge Computing

Edge computing and IoT are potent ways to analyse data quickly in real time. Edge computing is a distributed computing framework in which data is collected, stored, processed, and analysed near the data source instead of in a centralised data-processing warehouse. Edge computing allows IoT devices to collect and process data at the edge where it is collected. Cloud computing becomes an inefficient structure for processing and analysing the vast data collected from IoT devices [78]. Thus, edge computing becomes a basic need for moving data and services from centralised nodes to the network’s edge.
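The idea of processing data where it is collected can be sketched in a few lines of Python. In this hypothetical example, an edge device reduces a window of raw sensor readings to a compact summary and raises an alert flag locally, so that only the summary needs to be sent to a central server. The function name, the summary fields, and the alert threshold are illustrative assumptions rather than part of any cited system.

```python
from statistics import mean
from typing import Dict, List, Union


def summarise_at_edge(readings: List[float], alert_above: float) -> Dict[str, Union[int, float, bool]]:
    """Reduce a window of raw readings to a small summary computed on the device."""
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "count": len(readings),
        "mean": round(mean(readings), 1),
        "min": min(readings),
        "max": max(readings),
        # The alert is decided locally, without a round trip to a remote server.
        "alert": max(readings) > alert_above,
    }


# Six hypothetical heart-rate samples collected on a wearable.
window = [72, 75, 71, 74, 130, 73]
print(summarise_at_edge(window, alert_above=120))
```

Sending one summary per window instead of every raw sample reduces the data volume that reaches central servers, and making the alert decision on the device avoids depending on network latency.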

In the healthcare sector, edge computing plays a vital role. The primary motivation for edge computing is data efficiency, increased data security and ethical integrity, and reduced dependency on remote centralised servers [77].

Edge computing also faces some major problems in the healthcare industry. The primary challenges are coping with the large data sets produced by medical sensors, legal issues surrounding patients’ personal medical data, and the integration of artificial intelligence in a 5G environment. Some challenges in intelligent manufacturing include operation and maintenance, scalability, and reliability of the data centres [79].

The exploration of edge computing is moving rapidly. Edge computing has become a basic need for moving data and services to the network’s edge due to limitations in the cloud computing platform. Edge computing enables real-time medical equipment management, helps monitor patients remotely through devices like glucose monitors and blood pressure cuffs, and alerts medical professionals regarding patient health [80, 81]. Security and the lack of consistent regulation are notable challenges for edge computing.

Internet of Medical Things (IoMT)

The Internet of Medical Things (IoMT) is a huge network of physical devices integrated with sensors, network connections, and electronics, enabling the devices to collect patient data for medical purposes. The IoMT has been called “Smart Healthcare”, as it is the technology for creating a digitised healthcare system that connects available medical resources and healthcare services. IoMT technology enables virtually any medical device to connect, analyse, and send data across digital devices and non-digital devices such as hospital beds and pills [82]. IoMT improves healthcare quality while reducing costs. IoMT is an infrastructure of network software, health system devices, and services connected to collect user data.

The major motivations for using the IoMT are real-time data care systems and healthcare analytics. The IoMT allows doctors to manage operational data systematically. The data is then examined by healthcare experts and delivered to offer patients a better health solution in real time [83]. The real-time data care system can give the best health solution.

IoMT is also facing challenges in the healthcare field, like other enabling technologies. Security and privacy of IoT data remain the top concerns. IoMT has introduced other significant challenges like lack of standards and communication protocols, errors in patient data handling, data integration, managing device diversity, and interoperability [84]. IoT devices have provided many ways to improve data collection and quality. IoMT is considered the best option for collecting real-time patient data and monitoring. IoMT has connected doctors, patients, researchers, manufacturers, insurers, and industries [85]. In the future, it is estimated that bringing the IoT into medicine will help deliver more substantial, healthier, and easier patient care.

Big Data Analytics

Big data is vital in improving healthcare delivery, helping to measure and evaluate comprehensive healthcare data [86]. A large amount of medical data must be integrated and accessed intelligently to support better and faster healthcare. Big data has introduced a new approach in which data processes are measured and monitored digitally, so that data can be compared more efficiently. Such insights facilitate streamlined workflows, greater efficiencies, and improved patient care. Big data only benefits healthcare when it is structured, relevant, intelligent, and accessible.

The primary motivation to use big data analytics in healthcare is to provide insight from large data sets and improve outcomes at a low cost. Big data analytics reduces medical errors, detects disease early, and provides more accurate treatment with real-time data collection. Big data analytics is associated with many challenges like data collection, cleaning, security of collected data, storage, and real-time updates [87]. Accessing big data from external sources is also a big challenge. The healthcare sector is still concerned with privacy and security.

It has been noticed that big data analysis plays a major role in healthcare. In the healthcare industry, a massive amount of data is generated daily, stored in different locations, and expected to be efficiently accessed by healthcare professionals; thus, big data can potentially improve the quality of healthcare delivery at low costs [88]. Meanwhile, big data analysis faces security and privacy issues, but at the same time, it is considered the best technology to handle vast data effectively.

5G and Beyond

The promise of digital health cannot be fully materialised without switching to fifth-generation (5G) cellular technology. 5G networks directly contribute to better diagnosis and faster triage with higher bandwidth and low latency, saving lives [89]. 5G-connected wearables (e.g. smartwatches, bands with GPS/GPRS body control, VR headsets, and smart glasses) will facilitate real-time data streaming [90]. 5G will enrich everything from prevention to treatment to rehabilitation, teaching, and mentoring.

The primary motivation for using 5G networks is speed. 5G networks will allow devices to download more than 1 GB of data in one second, which could be 100 times faster than today’s 4G. 5G promises high bandwidth and low latency, which can make many areas of telehealth, remote surgery, and the continual exchange of treatment information more efficient.
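To make these figures concrete, the short Python sketch below estimates transfer times from the two numbers quoted above: roughly 1 GB per second on 5G and a speed-up of up to 100 times over 4G. The 2 GB file size is a hypothetical example, and the calculation ignores protocol overhead, signal quality, and network load, so it should be read as back-of-the-envelope arithmetic only.

```python
FIVE_G_GB_PER_S = 1.0    # from the text: about 1 GB of data per second
SPEEDUP_OVER_4G = 100.0  # from the text: up to 100 times faster than 4G


def transfer_seconds_5g(size_gb: float) -> float:
    """Idealised time to move `size_gb` gigabytes over 5G."""
    if size_gb < 0:
        raise ValueError("size_gb must be non-negative")
    return size_gb / FIVE_G_GB_PER_S


def transfer_seconds_4g(size_gb: float) -> float:
    """Idealised 4G time implied by the quoted 100x speed-up."""
    return transfer_seconds_5g(size_gb) * SPEEDUP_OVER_4G


study_size_gb = 2.0  # hypothetical size of an imaging study
print(transfer_seconds_5g(study_size_gb))  # about 2 seconds
print(transfer_seconds_4g(study_size_gb))  # about 200 seconds
```

Under these assumptions, a file that takes a couple of seconds over 5G would take several minutes over 4G, which is the kind of difference that matters for real-time streaming and remote consultation.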

Health care is about communicating and connecting better. 5G will cause a paradigm shift in healthcare. However, with the implementation of the 5G network, many companies and individuals are concerned about the loss of sensitive information and data. Data mismanagement, medical identity theft, health privacy, and data security are the big challenges for 5G [91].

5G networks can make communication easier, faster, and closer to real time. In the healthcare sector, 5G can boost collaboration between healthcare professionals, allowing them to work together during surgery and scans to provide faster care and treatment to patients [92]. 5G can make smart hospitals efficient and reliable through low latency. 5G also supports telemedicine in terms of security and privacy.

Mobile Computing

People can access data and information from everywhere through mobile computing. Mobile computing devices such as cell phones, tablets, wireless laptops, and push-to-talk devices make life easier. Mobile computing provides more security than other enabling technologies. Mobile computing strengthens the IoT health-based system with various services, applications, third-party APIs, and mobile sensors [93].

Mobile computing is used to support healthier lifestyles. It allows patients to stay out of hospital beds and gives them home-based healthcare services at a low cost. Mobile computing monitors patient health in surgery, spectroscopy, and Magnetic Resonance Imaging (MRI) [94]. It also helps to maintain and secure data storage and diagnostic databases.

Mobile computing is also facing many challenges, like other enabling techniques. The major challenges are the usability of mobile applications, system integration, data security and privacy, network access, and, most importantly, reliability.

Mobile computing links the human brain and computers to improve the healthcare system. The computer system can tackle complex decision-making processes by learning patterns and behaviours and becoming more intelligent [95]. Mobile applications are vital in giving the cognitively impaired a healthier lifestyle.

Virtual Reality

Virtual reality (VR) is a computer-generated simulation that allows users to simulate a situation and experience of interest using a VR headset [96, 97]. VR can be similar to or completely different from the real world. VR is revolutionising the healthcare industry through a range of VR applications. Moreover, VR improves the knowledge and skills of medical professionals and students. Currently, many medical organisations are paying attention to VR-based medical training. VR plays a vital role in different areas of healthcare, including support for medical trainers, treatment of phantom limb pain, VR therapy, treatment of patients, relief tools for doctors, help for autistic children and adults, pain management, VR surgery simulation, and real-time conferences.

VR has many drawbacks in the healthcare sector, such as the high cost of VR software and equipment, VR addiction, user disorientation, lack of proper trials, and insufficient training.

Healthcare is the most important field, whether in the past, present, or future. VR is considered the best option for cognitive health assessment, establishing symptom correlates, identifying differential predictors, and identifying environmental predictors [98].

Artificial Intelligence Techniques for Assessing Cognitive Health

Artificial Intelligence (AI) is a scientific field that falls under the computer sciences discipline. AI’s primary focus is on building systems or machines that help carry out tasks typically involving decision-making and human intelligence. AI algorithms learn from datasets and continuously update their learning as new data becomes available [99]. The output of AI models depends on various factors, including the size of the dataset, feature selection, and parameter selection. AI techniques are designed to handle large and complex datasets. They have produced strong results in various complex settings and can be more accurate and efficient than human beings [100]. The development of various AI techniques has contributed effectively to early detection, disease diagnosis, and referral management, because the performance of human experts is limited by the diversity of their knowledge and can also be affected by daily exertion. AI-based systems are addressing these limitations. Machine learning (ML) is a sub-category of AI that learns from data without being explicitly programmed. ML algorithms are being used to solve problems with a high level of similarity within the data [101]. The advancement in machine learning techniques has also changed the field of computer-assisted medical image analysis and computer-assisted diagnosis (CAD). ML techniques have shown promising results in the timely prediction of disease. In this section, various ML techniques for assessing the cognitive health of a person are discussed, including deep learning [102], supervised and unsupervised approaches [103], Reinforcement Learning [104], Natural Language Processing [105], and Computer Vision [106].

Supervised Learning Approaches

Supervised Learning (SL) approaches need labelled data (e.g. diagnosis of cognitive disorder vs healthy people). This labelled data is given as input to a model, which learns from this labelled data. Based on the learning, the trained model predicts the output [103]. The correctness of the SL models depends on the “ground truth” behind the labelled outcomes. Figure 4 illustrates how supervised learning occurs. Supervised learning techniques are divided into supervised deep learning techniques and ML techniques. Below we have given a comprehensive tour of state-of-the-art supervised learning approaches.
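The fit-then-predict workflow described above can be illustrated with a minimal sketch. The nearest-centroid rule, the two features, and all numeric values below are illustrative assumptions and are not taken from any of the surveyed studies.

```python
from statistics import fmean

def fit_centroids(samples, labels):
    """Learn one centroid (mean feature vector) per class from labelled data."""
    grouped = {}
    for features, label in zip(samples, labels):
        grouped.setdefault(label, []).append(features)
    return {
        label: [fmean(column) for column in zip(*rows)]
        for label, rows in grouped.items()
    }

def predict(centroids, features):
    """Assign the class whose centroid is closest in squared Euclidean distance."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: sq_dist(centroids[label], features))

# Hypothetical samples: [memory score, task-completion time]; values are made up.
train_x = [[28, 10], [27, 12], [29, 11], [20, 25], [18, 30], [21, 27]]
train_y = ["healthy", "healthy", "healthy", "impaired", "impaired", "impaired"]

model = fit_centroids(train_x, train_y)   # training on labelled data
print(predict(model, [26, 13]))           # prediction for an unseen sample
print(predict(model, [19, 28]))
```

The quality of such a model is bounded by the quality of `train_y`: if the labels are wrong, the learned centroids are wrong as well, which is the "ground truth" dependency noted above.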

Machine Learning

Supervised machine learning algorithms are designed for regression and classification problems. Various supervised learning techniques have been used for the assessment [72, 107,108,109,110] and detection of cognitive health diseases [111,112,113,114,115,116,117,118,119,120,121,122,123,124]. Popular examples of supervised ML techniques include Bayesian classifiers, decision trees, Support Vector Machine (SVM) and its variants, logistic regression, and many more. In [113], a supervised machine learning method is discussed to detect patients with cognitive disorders. It utilised a multifold Bayesian kernelisation approach and classified the patients into three classes, namely Alzheimer’s, MCI, and normal controls. This method produced good results in identifying Alzheimer’s and normal controls but not in identifying patients with MCI. Catá Villá [125] used a Bayesian classifier for Alzheimer’s disease detection. This technique achieved an accuracy of up to 92.0% when applied to the MRI dataset [126]. Another technique that used a Bayesian classifier to identify Alzheimer’s disease is presented in [112]. However, it used PCA and Linear Discriminant Analysis (LDA) to extract features before applying the Bayesian classifier. Extracting features with PCA and LDA boosted the performance of the proposed model. The input images used in this method are PET and Single Photon Emission Computed Tomography (SPECT) images.

Authors in [115] utilised supervised learning and used Random Forest to classify multi-class Alzheimer’s Disease with an accuracy of 92.4%. Laske et al. [114] proposed a Support Vector Machine to classify subjects with Alzheimer’s disease. This technique scored an accuracy of 81.7%. In [116], PET and SPECT images are used with SVM to detect the cognitive health of a patient. It has been shown that classification using SPECT images achieved higher accuracy than PET images. SVM is used for both regression and classification problems. SVM and its variants show promising results in identifying people with cognitive health issues. SVM-based Alzheimer’s detection [117] showed that, instead of using a single input image, combining two types of images (MRI and FDG-PET) as input to the SVM could significantly increase the detection accuracy for Alzheimer’s patients. The input images used in [117] are taken from the ADNI and Leipzig Cohorts datasets. An SVM-based Alzheimer’s detection model [127] focused on hippocampi input and distinguished patients with Alzheimer’s disease from healthy controls with an accuracy of 94.6%. Zhang et al. [128] classified elderly subjects into three classes: Alzheimer’s, MCI, and normal control. They utilised 5-fold cross-validation for KSVM-DT. Authors in [129] combined non-negative matrix factorisation with an SVM classifier to distinguish between patients with Alzheimer’s and healthy controls. Authors in [130] used combined MRI and SPECT images with SVM, and good results were obtained for disease identification. A histogram and a Support Vector Machine are combined to distinguish patients with Alzheimer’s Disease from normal subjects. Using MRI images as input, accuracy, sensitivity, and specificity of 94.6%, 91.5%, and 96.6%, respectively, demonstrated the technique’s effectiveness. Another hybrid technique is proposed in [118], which stacked Wavelet Transform and SVM together to classify diseased and normal patients. It produced accuracy, sensitivity, and specificity of 80.44%, 87.80%, and 73.08%, respectively.
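Since many of the studies above report accuracy, sensitivity, and specificity, the following sketch shows how the three figures are derived from the counts of a binary confusion matrix. The label vectors are invented for illustration, and the function name `binary_metrics` is hypothetical.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, sensitivity and specificity for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = (tp + tn) / len(pairs)
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0   # true positive rate
    specificity = tn / (tn + fp) if (tn + fp) else 0.0   # true negative rate
    return accuracy, sensitivity, specificity

# Made-up example: 1 = patient with the disease, 0 = healthy control.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
acc, sens, spec = binary_metrics(y_true, y_pred)
print(f"accuracy={acc:.3f} sensitivity={sens:.3f} specificity={spec:.3f}")
```

Sensitivity reflects how many diseased subjects are found, whereas specificity reflects how many healthy controls are correctly left alone, so a high accuracy alone can hide a weak result on one of the two groups.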

Logistic Regression performs classification for Alzheimer’s disease by looking into the probabilistic value returned through the logistic sigmoid function. Authors in [119] have shown that the onset and progression of Alzheimer’s disease can be detected in a timely manner with the help of a logistic regression classifier. A two-staged methodology is presented in [120], which used logistic regression to detect dementia. Authors in [131] introduced trace ratio linear discriminant analysis (TR-LDA) to distinguish patients with dementia from healthy subjects. Fisher Linear Discriminant Analysis (LDA) develops a linear discriminant function which results in a minimum error. This hybrid technique worked well and achieved an accuracy of 90.01%.
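How a logistic regression classifier turns a weighted sum of features into a class decision can be sketched as follows. The weights, bias, and feature values are hypothetical and were not fitted to any dataset; in practice they would be learned from labelled training data.

```python
import math

def sigmoid(z):
    """Numerically stable logistic sigmoid, mapping any real z into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_proba(weights, bias, features):
    """Probability of the positive class for one feature vector."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)

def classify(weights, bias, features, threshold=0.5):
    """Return 1 (positive class) when the probability reaches the threshold."""
    return 1 if predict_proba(weights, bias, features) >= threshold else 0

# Hypothetical model: [cognitive test score, task-completion time in seconds].
weights, bias = [-0.3, 0.08], 2.0
print(classify(weights, bias, [28, 10]))   # low predicted probability
print(classify(weights, bias, [18, 60]))   # high predicted probability
```

Lowering `threshold` trades specificity for sensitivity, which may be preferable in screening settings where missed cases are costly.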

Meng et al. [132] found new areas of the brain which are helpful in the early diagnosis of Alzheimer’s. The feature extraction they describe fuses voxel-based features extracted from MRI with eigenvalues of Single Nucleotide Polymorphisms to identify the important brain voxels that can distinguish AD, early mild cognitive impairment, and healthy controls, using a single-kernel SVM and a standard multi-kernel SVM. Another algorithm for early detection of Alzheimer’s is introduced in [121]. This technique is built upon the Multicriteria Decision Aid (MCDA) classification method, a subdiscipline of operational research. To adjust the weights and thresholds of the MCDA classifier, a Genetic Algorithm engine and the ELECTRE IV algorithm are deployed. Authors in [108] utilised three segments of the MRI dataset: Corpus Callosum, Hippocampus, and Cortex. SVM is used for classification and attained an accuracy of 91.67% in detecting early Alzheimer’s disease.

Authors in [111] used resting-state functional magnetic resonance imaging (rsfMRI) to compute the time series of some anatomical regions and then applied the Latent Low-Rank Representation method to extract suitable features. For the classification task, SVM was used to assign each input patient to one of two classes, i.e. MCI or healthy controls. The classification accuracy obtained by this method is more than 97.5%. Experiments were performed using images of 43 healthy subjects, 36 mild cognitive impairment patients, and 32 Alzheimer’s patients.

In [109], a Clinical Assessment using Activity Behaviour (CAAB) approach is described in which a person’s health is assessed by detecting changes in their behaviour. CAAB used an activity-labelled sensor dataset to find activity performance features. From these features, it then extracted statistical activity features (variance, autocorrelation, skewness, kurtosis, etc.), which are given as input to an SVM to calculate the cognitive and mobility scores. Authors in [72] used supervised learning to examine the simple and complex daily living activities performed by smart home residents. This technique was assessed based on temporal features and classified the individuals into healthy, Mild Cognitive Impairment, and dementia. PCA was used to select the most valuable features. This feature selection step helps to avoid overfitting and, at the same time, improves accuracy and training time. The selected features are then used to classify the individuals into various classes. The classification models discussed in [72] are Decision Tree, Naïve Bayes, Sequential Minimal Optimization, Multilayer Perceptron, and Ensemble AdaBoost. Among these classification models, Ensemble AdaBoost best predicted individuals with cognitive impairment. Authors in [110] showed that a person’s cognitive health could be assessed based on the performance of different tasks. For this purpose, the Day Out Task (DOT) was designed. DOT includes various activities and the subtasks performed to complete them. Participants are asked to perform these tasks, and their responses are captured using sensors. Features collected from these sensors are then fed into a machine learning algorithm to determine the quality of an individual’s performance on the DOT. Supervised and unsupervised machine learning techniques are used to classify the collected features. The supervised approach uses SVM with sequential minimal optimisation and bootstrap aggregation. For the unsupervised approach, PCA is used to reduce the dimensions. Min-max normalisation is then applied to transform the variables to a uniform range. SVM is finally used to classify the participants into various classes depending on the sensor-based activity features. The classes defined in this work are dementia, mild cognitive impairment, and cognitively healthy.
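The statistical activity features and the min-max normalisation mentioned above can be sketched as follows. The exact definitions used in [109] and [110] are not given here, so the population moments, the non-excess kurtosis, and the lag-1 autocorrelation below are assumptions chosen for illustration, as are the function names and sample values.

```python
from statistics import fmean

def activity_statistics(series):
    """Variance, skewness, kurtosis and lag-1 autocorrelation of one feature series."""
    n = len(series)
    mu = fmean(series)
    centred = [x - mu for x in series]
    m2 = sum(d ** 2 for d in centred) / n          # population variance
    if m2 == 0:                                    # constant series: no shape to measure
        return {"variance": 0.0, "skewness": 0.0, "kurtosis": 0.0, "autocorr": 0.0}
    m3 = sum(d ** 3 for d in centred) / n
    m4 = sum(d ** 4 for d in centred) / n
    lag1 = sum(centred[i] * centred[i + 1] for i in range(n - 1)) / n
    return {
        "variance": m2,
        "skewness": m3 / m2 ** 1.5,
        "kurtosis": m4 / m2 ** 2,                  # non-excess: a normal distribution gives 3
        "autocorr": lag1 / m2,
    }

def min_max(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical daily durations (minutes) of one activity over five days.
stats = activity_statistics([1, 2, 3, 4, 5])
print(stats)
print(min_max([2, 4, 6]))
```

The resulting feature vector per participant would then be passed to a classifier such as an SVM; normalising first prevents features with large numeric ranges from dominating distance-based decisions.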

The technique presented in [107] detected behaviour change through smartwatch data. These smartwatches are designed to collect data continuously. The main goal is to detect and quantify the difference in overall behaviour activity patterns prompted by an intervention. A Permutation-Based Change Detection (PCD) algorithm detects the change in activity pattern. A random forest algorithm is applied to detect behaviour normalisation. The algorithm calculated the change in behaviour between time points with an accuracy of 0.87.
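The general idea behind permutation-based change detection can be sketched with a plain two-sample permutation test. This is not the PCD algorithm of [107]; the test statistic (absolute difference of means), the function name, and the step-count values are assumptions made for illustration.

```python
import random
from statistics import fmean

def permutation_change_test(window_a, window_b, n_permutations=2000, seed=0):
    """Estimate how unusual the observed difference between two windows is."""
    rng = random.Random(seed)
    observed = abs(fmean(window_a) - fmean(window_b))
    pooled = list(window_a) + list(window_b)
    n_a = len(window_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)                        # break any link to time order
        diff = abs(fmean(pooled[:n_a]) - fmean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly above zero.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value

# Hypothetical daily step counts before and after an intervention.
before = [4000, 4200, 3900, 4100, 4050, 3950, 4150]
after = [5200, 5100, 5300, 5000, 5250, 5150, 5050]
change, p = permutation_change_test(before, after)
print(f"mean change={change:.1f}, p={p:.4f}")
```

A small p-value suggests that the difference between the two windows is unlikely to arise from random day-to-day variation, which is the kind of evidence a change-detection step needs before the change is quantified further.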

Authors in [133] proposed an onset detection model for dementia and MCI. Motion, power usage, and other related sensors detect activity in a smart environment. The dataset used is self-created and collected from an IoT-enabled smart environment. Features are classified using several supervised machine learning algorithms and an Ensemble RUSBoosted model. These models achieved 90.74% accuracy in detecting the onset of dementia. Limitations of this work include limited feature values and the fact that security and privacy concerns were not considered while collecting the IoT-based data. Authors in [134] used a supervised dictionary learning technique that applies Correlation-based Label Consistent K-SVD (CLC-KSVD) to spectral features of patches extracted from EEG.

Anomaly detection techniques help to find samples that do not fit the rest of the data, also called anomalies. Such techniques are applied to the features to detect a sudden change in a person’s behaviour, which may indicate a cognitive health issue. In [135], an anomaly detection method is proposed that performs better than traditional anomaly detection methods. This algorithm is designed to provide indirect supervision to unsupervised methods. An Indirectly Supervised Detector of Relevant Anomalies (Isudra) detects anomalies from time series data. Isudra employed Bayesian optimisation to select time scales, features, base detector algorithms, and algorithm hyperparameters.
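As a simple illustration of flagging a sudden behavioural change in time series data, the sketch below scores each observation against the window that precedes it. It is a basic rolling z-score detector and not Isudra [135]; the window length, threshold, and sleep-duration values are assumptions.

```python
from statistics import fmean, pstdev

def rolling_zscore_anomalies(series, window=7, threshold=3.0):
    """Return indices whose value deviates strongly from the preceding window."""
    anomalies = []
    for i in range(window, len(series)):
        baseline = series[i - window:i]
        mu = fmean(baseline)
        sigma = pstdev(baseline)
        if sigma == 0:
            continue  # a constant baseline gives no scale to compare against
        if abs(series[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies

# Hypothetical nightly sleep durations in hours; index 8 is an unusual night.
sleep_hours = [7.0, 7.2, 6.9, 7.1, 7.0, 6.8, 7.1, 7.0, 3.0, 7.1, 6.9]
print(rolling_zscore_anomalies(sleep_hours))
```

The window length and threshold play the role of the time scale and detector hyperparameters that, according to the description above, Isudra selects automatically rather than by hand.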

Workplace stress is one of the causes of cognitive health issues. Therefore, Alberdi et al. [136] collected data from an office setting and applied various machine learning techniques (Naïve Bayes, Linear SVM, AdaBoost, and C4.5) to this data. It was found that mental stress and workload levels define an employee’s behaviour change. Also, computer-use patterns combined with body posture helped predict a worker’s cognitive health.

For dementia patients, repeating the same task, e.g. taking medicine or eating a meal, could be dangerous. Therefore, these repeated tasks must be recognised correctly. To recognise such repeated behaviour in a person with dementia, authors in [137] integrated hand movements with indoor position information to identify the activity performed. Data is collected with the help of wearable sensors. In the DPMM-Layer, the hand movements are clustered based on feature values, so each hand movement is associated with one specific cluster. The indoor position is detected from the Bluetooth sensor data using the supervised Random Forest algorithm and a Finite State Machine. The precision and recall for “eat” are 92.1% and 97.5%, and for “having Medicine” are 99.1% and 99.5%, respectively.

Authors in [138] discussed the relationship between behaviour and cognitive health. A set of digital behaviour markers is developed to predict clinical scores from activity-labelled time series data. Data is collected from ambient and wearable sensors of 21 participants in a smart home environment. Mobile-AR and Home-AR algorithms are used to label activities in real time. A gradient boosting regressor is used to predict the clinical score.

Deep Learning

Deep Learning is a subset of ML that shows promising results when the input data is unstructured and complex. Deep learning algorithms require large volumes of input data. Deep Learning models consist of multiple layers, each with a unique way of interpreting the data. Spasov et al. [139] discussed a deep learning algorithm that took multiple sorts of data as input. The input data comprised structural magnetic resonance imaging, neuropsychological, demographic, and APOe4 genetic data. This approach used a deep convolutional neural network framework involving grouped and single convolutions. The most noticeable characteristic of this deep learning model is that it can simultaneously classify individuals into Mild Cognitive Impairment vs Alzheimer’s disease and Alzheimer’s disease vs healthy subjects, and it attained a classification accuracy of 86%.

Choi and Jin [140] developed a deep learning system to evaluate a patient’s cognitive health. They considered 3D-PET images as input. This system used a CNN to identify a person’s cognitive decline. The overall accuracy of this algorithm is 84.2%. Habuza et al. [141] proposed a model to detect the level of cognitive decline using MRI images. This model is based on a Convolutional Neural Network (CNN) classification model to estimate the level of cognitive decline. The architecture of this CNN model is similar to AlexNet. The T1-weighted MRI image dataset is pre-processed before being input to the CNN. To draw a line between healthy people and people with Alzheimer’s disease, the Predicted Cognitive Gap is used as the biomarker. The proposed model is tested on a dataset that includes 422 healthy control and 377 Alzheimer’s disease cases. The performance of the proposed solution is 0.987 (ADAS-cog), 0.978 (MMSE) for averaged brain and 0.985 (ADAS-cog), 0.987 (MMSE) for the middle sliced image.

Authors in [124] analysed the effectiveness of ADNI data in determining how much the classification models can differentiate people with various cognitive diseases by classifying them into three classes. Multi-Layer Perceptron (MLP) models and a Convolutional Bidirectional Long Short-Term Memory model are explored in [124]. MLP model outperforms the ConvBLSTM model with an accuracy of 86%. Authors in [142] utilised the attention mechanism in deep network architecture to detect early-stage Alzheimer’s. The model is tested on a self-created dataset. Authors in [143] proposed the LeNet-5 deep model to distinguish Alzheimer’s patients using functional MRI (fMRI). The model produced a classification accuracy of 96.85%.

Authors in [144] predicted the existence of Alzheimer’s disease using multiple types of input data. The unique feature of this paper is that it presented deep learning models that use image and non-image data to detect Alzheimer’s disease. The input data consisted of socio-demographic data, clinical notes, and MRI data. The deep convolutional neural network ResNet-50 predicts the presence and severity of the clinical dementia rating (CDR) from the input MRI, whereas a gradient boosted machine predicted Alzheimer’s using non-image data. A key limitation of this study is that the dataset includes MRI images of only 416 individuals. Each image is composed of 51 selected slices of each patient’s MRI. The selection of slices is made carefully because eliminating a few slices may eliminate slices that help diagnose Alzheimer’s disease. In [145], another deep CNN is proposed, which runs on MRI scans of the ADNI dataset. This deep model classifies each MRI image into one of four classes, namely Alzheimer’s, mild cognitive impairment, late mild cognitive impairment, and healthy person. Various deep CNNs are tested, such as GoogLeNet, ResNet-18, and ResNet-152; among these CNNs, GoogLeNet produced the highest accuracy of 99.88%. In [122], a 3-dimensional VGG convolutional neural network is tested on two publicly available datasets, namely ADNI and OASIS. This 3D model has the advantage of preserving information, as data loss may occur when 3-dimensional MRI is converted into two-dimensional images and processed by two-dimensional convolutional filters. The classification accuracy achieved by this 3D model is 73.4% on the ADNI dataset and 69.9% on the OASIS dataset. These results show that the 3D model outperforms the 2D network models. Table 4 presents the summary of supervised studies for cognitive health assessment.

Critical Analysis: Supervised learning techniques used for cognitive health assessment can not only detect cognitive disease but are also very helpful in detecting the progression of such cognitive disorders. As we have seen in the studies mentioned above, both traditional ML and DL techniques produced exceptional results in cognitive disease detection; nonetheless, the main challenge of these techniques is the availability of labelled data, which requires human intervention. In a hospital setting, such labelled data is often unavailable and cannot be easily labelled at run time. Labelling data requires much effort by experts. DL techniques take the lead in performance as compared to ML techniques. Also, if a deep model is trained on one dataset, transfer learning techniques are available to apply this trained model to another dataset without retraining it from scratch. However, it is notable that the quality of the output of a deep learning model mainly depends on the size of the dataset. Data augmentation has addressed this issue to some extent, but as the dataset size increases, so does the training time. Therefore, DL techniques involve a trade-off between performance and training complexity. Overfitting is another challenge with supervised learning techniques. Therefore, if it is possible to collect a large dataset, then deep learning approaches will be a good choice; otherwise, ML approaches should be considered.

Unsupervised Learning Approaches

Unsupervised Learning (UL) algorithms work with unlabelled data. A dataset for the classification task consists of data from all the classes, but an unsupervised algorithm is not privy to this knowledge [146]. Instead, the algorithm searches unstructured data for features and groups similar items together in clusters. Figure 5 shows how data can be grouped using the unsupervised learning approach. In this way, the data is segmented into different groups, and all the items in a group share some common characteristics. Some items are classified as irrelevant, which means they do not possess the properties of any specific group. If the data is medical, then involving a medical expert is a must to derive the meaning of the identified clusters. In the following section, state-of-the-art Unsupervised Learning Approaches are discussed for the assessment [147,148,149,150] and detection [151,152,153,154,155,156,157,158,159,160,161,162] of the cognitive health of a person.

Machine Learning

Designing an activity recogniser for in-home settings and continuous data is very challenging. The difficulty increases further when the home has more than one resident. Estimating the number of residents and identifying each resident’s activity in a smart home is an open challenge that needs to be addressed. To address this challenge, [149] introduced an unsupervised learning approach based on a multi-target Gaussian mixture probability hypothesis density filter. If the approach in [149] is applied in the smart home environment, it can improve the accuracy of cognitive health assessment algorithms.

Authors in [152] discussed an unsupervised Alzheimer’s detection approach using a finite mixture model. Input data comprises cerebrospinal fluid, magnetic resonance imaging, and cognitive measures. Researchers in [153] discussed an anomaly detection model which recognised activity by first applying a probabilistic neural network to the pre-segmented activity data obtained from sensors deployed at different locations in a smart home. An H2O autoencoder is used to separate anomalous from regular instances of activities. Anomalies are further categorised based on criteria such as missing or extra subevents and unusual duration of action. The Aruba and Milan datasets are used for training and testing. The detection accuracy achieved is more than 95% for all activities. For Alzheimer’s detection, [151] suggested a technique that used KNN to segment the areas in the MRI image. Authors of [163] presented an unsupervised technique on MRI data to detect various phases of Alzheimer’s disease. Authors in [164] provided techniques for making various clustering procedures more efficient. The authors aimed to describe cerebrum muscle physiognomies for assessing Alzheimer’s disease in various phases. Rodrigues et al. [148] discriminated AD cases from normal subjects by utilising K-means clustering. They found K-means to be the best method for unsupervised diagnosis based on EEG temporal arrangements. In [154], K-means is applied to different data features to divide the subjects into pathologic groups. “Bio profile” [155] is analysed to reveal the pattern for Alzheimer’s disease. Here the researchers used K-means clustering to detect AD on the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database. Detection of biomarkers is important, and therefore [147] suggested an unsupervised mixture modelling method to detect biomarker patterns of Alzheimer’s patients from the ADNI database. Authors in [156] identified groups of patients with signs of Alzheimer’s and divided the patients into three clusters, which showed low, medium, and high extrapyramidal burden, respectively. The importance of brain imaging is highlighted in [165], where the researchers diagnosed AD or vascular dementia using an unsupervised model on brain imaging.
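Because K-means recurs in several of the studies above, a minimal sketch of its two alternating steps (assignment and centroid update) is given below. The two-feature points and the choice of initial centroids are illustrative assumptions; the surveyed studies use their own features and initialisation schemes.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, initial_centroids, max_iter=100):
    """Cluster points by alternating assignment and centroid-update steps."""
    centroids = [list(c) for c in initial_centroids]
    k = len(centroids)
    assignments = None
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        new_assignments = [
            min(range(k), key=lambda c: sq_dist(p, centroids[c])) for p in points
        ]
        if new_assignments == assignments:
            break                                   # converged: nothing moved
        assignments = new_assignments
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, assignments

# Hypothetical two-feature measurements forming two visibly separate groups.
points = [[1.0, 1.0], [1.2, 0.8], [0.9, 1.1], [8.0, 8.0], [8.2, 7.9], [7.9, 8.1]]
centroids, labels = kmeans(points, initial_centroids=[points[0], points[3]])
print(labels)
print(centroids)
```

The cluster indices carry no clinical meaning by themselves; as noted earlier in this section, a medical expert is needed to interpret what each discovered group represents.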

Authors in [159] used Long Short-Term Memory (LSTM), a deep learning technique, to process electronic health records and differentiate between a healthy person and a person with Mild Cognitive Impairment. The patients are clustered using a denoising autoencoder to represent the patient data better. The output of this autoencoder is visualised and clustered using t-distributed stochastic neighbour embedding (t-SNE). The accuracy of the LSTM is 73%, and the F1-score is 0.43. The study [159] highlights that incorporating the temporal characteristics of the patient’s data into the deep learning model gives better results in predicting Mild Cognitive Impairment. Another unsupervised technique [157] used K-means clustering to divide patients with Alzheimer’s into clusters. A cortical thickness-based clustering method is proposed in [166]. Here the clustering is performed on data collected from 77 patients’ MRI, PET, and cerebrospinal fluid (CSF).

Gamberger et al. [167] applied a multi-clustering method to an AD dataset of male and female patients comprising 243 biological and clinical features. Authors of [168] observed three clusters in 3D-MRI, dominated by medial-temporal atrophy, parietal atrophy, and diffuse atrophy. Authors in [158] proposed a clustering-based technique to detect Alzheimer’s using pathological data. Patients with cognitive impairment often face sleep problems. To detect Alzheimer’s through sleep disorders, [169] took samples of 205 patients with AD from the Alzheimer’s Disease Patient Registry to investigate patterns of sleep problems. The authors applied hierarchical cluster analysis.

Deep Learning

An unsupervised Convolutional Neural Network (CNN) is discussed in [170], which is used to diagnose cognitive diseases. PCANet learned the features from one slice and three orthogonal panels of MRI images, and K-means clustering, an unsupervised technique, was then used for classification. Experiments are done on the ADNI dataset. The results generated through this model are promising, with an accuracy of 95.52% for AD vs MCI classification and 90.63% for MCI vs NC when only one slice of MRI is taken as input. When three orthogonal panels of MRI images are considered, the accuracy improved further, to 97.01% for AD vs MCI and 92.6% for MCI vs NC. Here NC denotes normal control.

Authors in [160] used a stacked autoencoder to extract latent features from a huge set of features obtained from MRI and PET images. This is an unsupervised model for the assessment of the cognitive health of a person. Authors in [171] used a stacked autoencoder accompanied by supervised fine-tuning to classify Alzheimer’s, Mild Cognitive Impairment, and healthy controls. Experiments were conducted on the ADNI dataset. A deep 3D convolutional neural network prediction model of Alzheimer’s disease is presented in [172], which can learn generic features capturing Alzheimer’s disease biomarkers. The advantage of this technique is that it can be used on datasets from different domains. An autoencoder is an artificial neural network used to learn efficient codings of unlabelled data; its variant, the stacked autoencoder, has been combined with a softmax output layer to detect Alzheimer’s disease from MRI and PET images [173]. The technique presented in [174] is a novel method for high-level latent feature representation from neuroimaging data via an ensemble classifier. Authors in [175] used fMRI data to distinguish between MCI and healthy controls. The use of a deep autoencoder with HMM produced good results in prediction.

Han et al. [176] proposed a two-step unsupervised anomaly detection approach based on multiple-slice reconstruction. This technique showed promising results by detecting AD at a late stage with AUC 0.894. Alzheimer’s detection across different disease stages using MRI is presented in [177]. This algorithm can detect Alzheimer’s disease at various locations. Unsupervised Alzheimer’s detection using a deep convolutional generative adversarial network is discussed in [178]. It performed anomaly detection on brain MRIs to diagnose AD and achieved an accuracy of 74.44%. Authors in [162] explored anomaly analysis of Alzheimer’s disease by applying GANs to PET images. A feature vector is calculated using CNN and DCN. The ADNI dataset is used to perform experiments. Authors in [179] proposed an algorithm to generate images of the brain for different stages of Alzheimer’s disease. They used PET images, and the algorithm used was deep GANs. Authors in [180] applied a Restricted Boltzmann Machine to fMRI data to distinguish cognitively normal subjects from those with early mild cognitive impairment. Authors in [150] employed a Conditional Restricted Boltzmann Machine to simulate Alzheimer’s disease progression using data from 1909 patients. Table 5 presents the summary of unsupervised studies for cognitive health assessment.

Critical Analysis: In the supervised learning section, we have seen various models’ superb performance in cognitive health assessment. However, large numbers of labelled samples are not always available. Most of the time, the machine needs to learn from unlabelled training data, and this is where unsupervised learning plays its role. Unsupervised methods save the time and energy spent on labelling the data. They are a powerful tool for discovering patterns and structures in unlabelled datasets, which, as we have seen, are available in medical and smart home environments. Due to the high number of ADLs performed by the residents of smart homes, it is not feasible to enumerate every activity. Even in hospital settings, EHR and other medical records are available in a form that opens the avenue to exploit clustering algorithms to learn from patient data automatically. However, such clustering techniques do not give good accuracy compared to supervised learning methods. Another important aspect to consider is that if the information is collected over a more extended period, it will have diversified features, making clustering difficult if the number of clusters is defined a priori.

Reinforcement Learning

Reinforcement learning is a sub-category of artificial intelligence that trains a model by rewarding desired behaviour and punishing undesired behaviour. The unique characteristic of Reinforcement Learning (RL) is that it solves the problem by letting an agent interact with the environment. The agent takes the action it has learned to be most appropriate. If the action is correct, the agent receives a reward; otherwise, it is penalised. In this way, the agent keeps learning without human intervention. The process of reinforcement learning is illustrated in Fig. 6.
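The agent-environment loop described above can be made concrete with tabular Q-learning, one of the simplest RL algorithms. The following is a minimal illustrative sketch and is not taken from any of the surveyed works: the five-state chain environment, the reward values, the function names, and all hyperparameters are assumptions chosen only to keep the example small.

```python
import random


def train_q_learning(n_states=5, episodes=500, alpha=0.1, gamma=0.9,
                     epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical 1-D chain environment.

    States are 0..n_states-1; action 0 moves left, action 1 moves right.
    Reaching the last state gives reward +1 and ends the episode;
    every other step gives a small penalty of -0.01.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]
    goal = n_states - 1
    for _episode in range(episodes):
        state = 0
        for _step in range(100):  # cap on episode length
            # Explore with probability epsilon (or when both actions are
            # tied); otherwise exploit the action with the higher value.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            done = next_state == goal
            reward = 1.0 if done else -0.01
            # Q-learning update: bootstrap from the best next action.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Return the highest-valued action (0 = left, 1 = right) per state."""
    return [0 if left > right else 1 for left, right in q]


q_table = train_q_learning()
policy = greedy_policy(q_table)
```

After training, the greedy policy should prefer moving right in every non-terminal state, since that is the only way to collect the positive reward. The reward signal here plays the role of the "reward and punishment" in the description above.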

RL has been used to solve various real-life problems [181]. It shows promising results when applied to the assessment [104, 188] and detection [182,183,184,185,186,187] of cognitive health. It can also sustain change over a more extended time. Some important RL methods are discussed below:

Authors in [104] described a Deep Recurrent Q Learning-based Reinforcement Model (DRQLRM) designed to investigate the presence of Alzheimer’s in individuals. The model adds a retraining stage in which a recurrent neural network is used to reduce overfitting, while in the learning stage the reinforcement approach identifies unknown patterns. Sensor data were collected from 400 persons in the CASA smart home. The dataset comprised Activities of Daily Living (ADL), such as cooking, bathing, and toileting. DRQLRM showed promising results, with 98% accuracy and 12% MSE. Authors in [182] presented an LSTM-based reinforcement learning technique to detect early dementia and to guide patients with dementia in performing ADL. An LSTM model infers the person’s current goal and state by observing previous normal ADL patterns. The authors then used a situ-learning agent that helped individuals take the right action, thus preventing adverse events while guiding them through the task sequence leading to the goal state. In addition, a naive agent is used to resolve the uncertainty that older adults with early-stage dementia might experience. The accuracy achieved by this algorithm is 90.1%.

Authors in [188] performed individual and population-level behaviour analysis from time series sensor data. They developed a novel algorithm, Resident Relative Entropy-Inverse Reinforcement Learning (RRE-IRL), to analyse a single smart home resident or a group of residents. The method learns an individual’s behavioural routine preferences; analysing daily routines with it showed that these preferences change over time. Furthermore, the behaviour preferences are used by a random forest classifier to predict a resident’s cognitive health diagnosis, with an accuracy of 0.84. One limitation of this technique is the participant sample size: although the analyses are based on a large amount of data collected from smart homes over many days, data for only eight participants are considered.

Authors in [183] proposed an interpretable multimodal deep reinforcement learning model that helps in the diagnosis of AD. In the first step, the compressed-sensing MRI image is reconstructed using an interpretable deep reinforcement learning model. Then, the resultant MRI is fed into a CNN to generate a pixel-level disease probability risk map (DPM) of the whole brain for AD. Finally, an attention-based deep CNN is used to classify Alzheimer’s patients. The algorithm was tested on the ADNI, AIBL, and NACC datasets and obtained an accuracy of 99.6% on ADNI.

A reinforcement learning framework is designed in [184] in which an agent trained on clinical transcripts sketches a disease-specific lexical probability distribution and can therefore detect MCI through dialogue with the patient in a minimum number of conversations. Multi-agent reinforcement learning is discussed in [185], which detected anatomical landmarks from MRI images using a novel communicative multi-agent reinforcement learning (C-MARL) approach. In [189], Alzheimer’s disease progression is modelled by combining differential equations and reinforcement learning with a domain knowledge model. A deep Q-Network is used in [187] for landmark detection in 3D medical scans in both single-agent and multi-agent settings. Automatic detection of anatomical landmarks is also discussed in [186]; this reinforcement learning-based technique showed promising results, robustly localised target landmarks in medical scans, and is helpful in further investigation of diseases like Alzheimer’s. Table 6 presents the summary of reinforcement learning studies for cognitive health assessment.

Critical Analysis: Despite its complex nature, reinforcement learning has achieved tremendous success in the past years, notably in games. Unfortunately, the medical field, and cognitive health assessment in particular, has not yet benefited appropriately from this technique. In most AI-based health systems, the sequential nature of decisions is not considered; instead, decisions rely exclusively on the current state of the patient. RL offers an attractive alternative to such systems because it considers both the immediate effect of treatment and the long-term benefit to the patient. Despite the potential of RL to revolutionise the medical field, a few obstacles have to be removed before RL algorithms can be applied in health assessment. An RL algorithm typically learns from its own actions by trial and error, but guesswork has no room in the medical field. RL algorithms should therefore be designed to learn from existing data.

Natural Language Processing Techniques

Natural Language Processing (NLP) is the ability of computers to understand the verbal and written communication of human beings [190, 191]. NLP includes speech recognition, sentiment analysis, and optical character recognition [192]. It works by transforming data into a format that its algorithms can process. NLP has been used in the assessment [193] and detection [105, 194,195,196,197,198,199,200] of cognitive health. In these applications, NLP techniques are applied to natural language speech or text, followed by ML techniques, i.e., supervised learning, unsupervised learning, or deep learning, for classification purposes. Figure 7 illustrates the main features of NLP.

In [198], speech recordings are transcribed, and linguistic and acoustic variables are extracted through NLP and automated speech analysis. These variables help identify a cognitively impaired person who has difficulty finding the correct word while speaking. NLP-extracted variables include lexical, semantic, and syntactic aspects of the recording. Acoustic variables include sound wave properties, speech rate, and the number of pauses. Spearman’s correlation is used to measure the correlation between the NLP-extracted variables and clinically captured speech characteristics.

Speech disfluency is defined as any pause or interruption that occurs during otherwise fluent speech. Cognitively impaired people have a limited vocabulary, which leads to speech disfluencies; speech disfluency can therefore be used to detect cognitive impairment. Authors in [194] asked patients to give spontaneous answers to questions posed by an expert after recalling two short black and white films. Acoustic parameters in the patients’ speech signals are extracted both manually and automatically. An ML technique then uses these features to classify participants into MCI and healthy control classes, with an F1-score of 78.8%. The acoustic parameters used are hesitation ratio, speech tempo, length and number of silent and filled pauses, and length of utterance.
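To illustrate how such acoustic parameters can be derived once an utterance has been segmented, the following minimal Python sketch computes them from a pause-annotated segment list. It is not the implementation used in [194]. The segment labels, the function name `pause_features`, the `n_phones` argument, and in particular the definitions of hesitation ratio (total pause time divided by utterance length) and speech tempo (phones per second) are assumptions made for illustration.

```python
def pause_features(segments, n_phones):
    """Compute simple pause-based features for one utterance.

    segments: list of (label, duration_in_seconds) tuples, where label is
              'speech', 'silent' (silent pause) or 'filled' (filled pause).
    n_phones: number of phones in the utterance (hypothetical input,
              e.g. from a forced aligner).
    """
    total = sum(duration for _, duration in segments)
    if total <= 0:
        raise ValueError("utterance must have positive duration")
    silent = [d for label, d in segments if label == "silent"]
    filled = [d for label, d in segments if label == "filled"]
    pause_time = sum(silent) + sum(filled)
    return {
        "utterance_length_s": total,
        "n_silent_pauses": len(silent),
        "n_filled_pauses": len(filled),
        "silent_pause_time_s": sum(silent),
        "filled_pause_time_s": sum(filled),
        # Assumed definition: share of the utterance spent pausing.
        "hesitation_ratio": pause_time / total,
        # Assumed definition: phones per second over the whole utterance.
        "speech_tempo": n_phones / total,
    }


# Hypothetical utterance: speech interrupted by one silent and one filled pause.
example = [("speech", 2.0), ("silent", 0.5), ("speech", 1.5),
           ("filled", 0.5), ("speech", 0.5)]
features = pause_features(example, n_phones=40)
```

A feature dictionary of this kind, computed per participant, is the sort of input a downstream classifier would consume.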

An NLP technique for detecting mild cognitive impairment is presented in [195]. The Mann–Whitney U-test is used to select the most relevant features from speech. For classification into MCI and healthy control classes, the ML algorithms used are K-nearest neighbours (K-NN), Support Vector Machines, a Multilayer Perceptron (MLP), and a CNN. Classification Error Rate (CER) is used to evaluate the results. Early-stage dementia and mild cognitive impairment are important to identify, and authors in [197] used linguistic features with a Transformer-based deep learning model to detect whether an individual has dementia. This model can detect linguistic deficits with high accuracy.
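Feature selection with the Mann–Whitney U-test can be sketched as follows: each feature is tested for a difference between the two groups, and only features with a small p-value are kept. This is an illustrative sketch, not the procedure of [195]. It uses the normal approximation with tie correction and no continuity correction (in practice a library routine such as SciPy's `scipy.stats.mannwhitneyu` would normally be preferred); the feature names, the sample values, and the 0.05 threshold are assumptions.

```python
import math


def average_ranks(values):
    """Return 1-based ranks, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test via the normal approximation."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    n = n1 + n2
    # Tie correction for the variance of U.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    var = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var == 0:
        return u, 1.0  # all values identical: no evidence of a difference
    z = (u - n1 * n2 / 2) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return u, p


def select_features(groups, alpha=0.05):
    """Keep the names of features whose p-value is below alpha."""
    return [name for name, (a, b) in groups.items()
            if mann_whitney_u(a, b)[1] < alpha]


# Hypothetical per-participant values: (MCI group, control group).
data = {
    "speech_tempo": ([1, 2, 3, 4, 5], [6, 7, 8, 9, 10]),
    "utterance_length": ([1, 3, 5, 7, 9], [2, 4, 6, 8, 10]),
}
selected = select_features(data)
```

With samples this small the normal approximation is rough; an exact test would be more appropriate, and correction for multiple comparisons should be considered when many features are screened.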

Yi et al. [196] indicated that NLP models have the potential to extract lifestyle information from clinical notes. This lifestyle information can include extreme diet, physical activity, sleep deprivation, and substance abuse. Various ML models are trained and evaluated on the NLP-extracted data. Among these models, bagging, random forest, and KNN showed promising results.

A method to detect preclinical stages of dementia is discussed in [201], where 96 people are asked to describe a complex picture, describe a typical working day, and recall the last dream they remember. Linguistic features of the spontaneous speech, transcribed and analysed by NLP techniques, showed significant differences between controls and pathological states. These include rhythmic, acoustic, lexical, morpho-syntactic, and syntactic features, and participants are categorised as healthy or cognitively impaired. The statistical analysis is performed with the non-parametric Kruskal-Wallis test and Mann–Whitney U-test.

Psychological disorders can affect cognitive health. Detecting psychological disorders is therefore equally important in the cognitive health assessment scenario. Psychological disorders such as bipolar disorder, obsessive-compulsive disorder, and schizophrenia can be detected from speech input using the model discussed in [193]. This paper used an ASGD Weight-Dropped long short-term memory (AWD-LSTM) language model for psychological disease diagnosis.

In [202], progress notes and discharge summaries are parsed using NLP techniques applied to Electronic Health Record data. The machine learning techniques logistic regression, multilayer perceptrons, and random forest are used to classify impaired and healthy persons using the clinical terms extracted by NLP. The random forest method showed the best performance. The limitation of this technique is that it gives good results only if the input dataset is large. Authors in [203] discussed an NLP approach based on clinical notes. This approach extracts lifestyle exposures and intervention strategies to predict Alzheimer’s disease. The MetaMap tool maps biomedical texts to standard medical concepts using the Unified Medical Language System (UMLS). The results are validated by comparing the output of the proposed algorithm with data captured independently by clinicians. Authors in [204] extracted features from electronic medical records with the help of an NLP algorithm, after which a classification algorithm identifies whether a person has dementia. The classification algorithms discussed in this paper are gradient boosted models, neural networks, lasso, and ridge regression. Authors in [205] developed a system for identifying MCI from clinical text without screening or other structured diagnostic information. NLP and a Least Absolute Shrinkage and Selection Operator (LASSO) logistic regression approach were used to detect MCI. Chatting and the immediate formation of new sentences can reveal cognitive impairment, as discussed in [199], which proposed automatic detection of cognitive impairment through chatbot dialogue. NLP algorithms were used to generate the sentences for the chatbot. In [206], a fully automated MCI detection technique is proposed based on multilingual word embeddings to create multilingual information units.
Researchers trained an ANN using the spectrogram of the audio signal to detect Alzheimer’s in [105]. To distinguish between MCI and early-stage Alzheimer’s, patients are asked to perform tasks such as countdown, picture description, scene description, and semantic fluency (animals) [207]. The patients’ responses are recorded and analysed using NLP algorithms. Spontaneous speech again proved to be an excellent tool for Alzheimer’s detection in [208], where the authors proposed, in 2020, an NLP-based model for detecting early Alzheimer’s from spontaneous speech datasets. A recent technique [200] proposed an ensemble classifier based on four classifiers: audio, language, dysfluency, and interactivity. This system works with two modules, a proactive listener and an ensemble AD detector. Authors in [209] explored lexical performance in spontaneous speech to detect Alzheimer’s.

The relevance of acoustic features of spontaneous speech for cognitive impairment detection is shown in [210]. Here the authors applied machine learning methods for classification, which showed promising results. Authors of [105] claimed that they achieved fully automated audio file processing without manual feature extraction, thereby introducing a new direction for cognitive health assessment through NLP. In [211], an algorithm is proposed for predicting probable Alzheimer’s disease using linguistic deficits and biomarkers with the help of NLP and machine learning. In [212], a method for automated analysis of Semantic Verbal Fluency (SVF) tests is discussed for the detection of MCI. Table 7 presents the summary of NLP studies for cognitive health assessment.