Abstract

Backtracking search algorithm (BSA) is a nature-based optimization technique extensively used to solve various real-world global optimization problems for the past few years. The present work aims to introduce an improved BSA (ImBSA) based on a multi-population approach and modified control parameter settings to apprehend an ensemble of various mutation strategies. In the proposed ImBSA, a new mutation strategy is suggested to enhance the algorithm’s performance. Also, for all mutation strategies, the control parameters are updated adaptively during the algorithm’s execution. Extensive experiments have been performed on CEC2014 and CEC2017 single-objective benchmark functions, and the results are compared with several state-of-the-art algorithms, improved BSA variants, efficient differential evolution (DE) variants, particle swarm optimization (PSO) variants, and some other hybrid variants. The nonparametric Friedman rank test has been conducted to examine the efficiency of the proposed algorithm statistically. Moreover, six real-world engineering design problems have been solved to examine the problem-solving ability of ImBSA. The experimental results, statistical analysis, convergence graphs, complexity analysis, and the results of real-world applications confirm the superior performance of the suggested ImBSA.

Similar content being viewed by others

Introduction

To solve the nonlinear complex optimization problems, the backtracking search algorithm (BSA) [1] is one of the most efficient and powerful tools because of the simplicity and user-friendly approach of the algorithm. Due to these advantages, numerous attempts have been made towards further improving the BSA, and at the same time, these improved versions have been applied to solve various optimization problems in the past few years [2,3,4,5,6,7,8,9]. For example, based on self-adaptive strategy design [10,11,12,13] and hybridization mechanism [2, 3, 11, 14], many variants of BSA have been studied by several authors in the recent past. These improved and hybridized variants of BSA have been applied to obtain the optimum solution of various problems from diverse fields, such as parameters estimation of photovoltaic models [11], identifying soil parameters [15], job-shop scheduling [9], and fuzzy multiproduct multistage scheduling problem [16].

On the other hand, other than BSA, many biologically inspired algorithms have been used in numerous real-life applications. Due to the advantages of these algorithms to solve various types of optimization problems which are otherwise very hard to solve by the conventional methods, many researchers have introduced a variety of bio-inspired algorithms. Together with their variants, those algorithms have been used to solve numerous problems from both academia and industry. For example, Saha et al. [17] applied the HSOS algorithm on the pseudo-dynamic-bearing capacity of shallow strip footing; the critical failure mechanisms were determined via optimization with a genetic algorithm (GA). Different bio-inspired algorithms have been used to optimize the factor of safety and other related parameters of slope stability analysis in [18]. The weight and cost of the cantilever retaining wall have been minimized using a hybrid algorithm, namely, h-BOASOS in [19]. Sharma et al. proposed MPBOA [20], appending mutualism and commensalism phase in BOA, and used it to segment images using multilevel thresholding strategy. Chakraborty et al. [21] updated the whale optimization algorithm (WOA), modifying the parameters, increasing the exploration ability with random initialization of solution during the global search phase, and reducing the population. Later, the updated method mWOAPR was used to segment COVID-19 X-ray images. Mohammadzadeh et al. [22] improved the grey wolf optimization (GWO) algorithm with the hill-climbing method and chaos theory to solve workflow scheduling in green cloud computing. Trinh et al. [23] used moth-flame optimization algorithm (MFO) to tune the fuzzy logic controllers to improve life span, reduce retransmission, and normalize packet loss percentage in wireless sensor networks. Some other modifications of bio-inspired algorithms can be found in [24,25,26].

One of the exciting findings in the field of nature-inspired algorithms was “No Free Lunch (NFL) theorems” [27]. Logically, it has been proven that no optimization algorithm is ideal for solving all optimization problems. Alternatively, a specific algorithm can produce promising effects on a set of optimization problems, but it can perform poorly in another set of problems. This theorem unlocks the field for further studies on these algorithms, strengthening existing methods and suggesting new algorithms.

BSA is executed through four significant steps: selection-I, mutation, crossover, and selection-II operators. It has two algorithm-specific control parameters: scaling factor (F) and mixrate parameter (M). The scaling factor controls the direction of the population in the mutation strategy, and the mixrate parameter controls the crossover process. The proper execution of BSA can be done by setting the appropriate value of these parameters [13]. The value of these parameters can be set in many ways [8]. Some parameter settings can enhance the convergence speed [12], whereas some other settings are effective for solving separable functions [28]. Besides, solving one specific optimization problem may require a parameter setting where parameters vary automatically during the execution process [4].

The works carried out on BSA indicate that the algorithm produces adequate results in solving unimodal and multimodal problems but does not always provide optimum solutions. In some cases, it tends to converge in local optima. Also, in some test functions, when dimensions are increased, the performance of the BSA decreases. Therefore, in this work, the core search technique of the traditional BSA has been updated to answer all these questions and increase the efficiency to make a better trade-off between exploration and exploitation.

Also, some of the researchers studied the “multi-swarm” and “multi-population island models” [29] concept on some of the state-of-the-art algorithms for improving the performance of those algorithms. Basically, in those works, the initial population is partitioned into several subpopulations [17]. The core objective behind these works is to maintain the entire population’s assortment in the search space and maintain better local and global search abilities.

Motivated by the arguments mentioned above, in this work, a novel-enhanced BSA variant (ImBSA, in short) is proposed by utilizing the multi-population-based approach with multiple mutation operators. During the evolutionary process, the whole population is split into multiple subpopulations. The study’s main objective is to apprehend the automated parameter tuning approach among each mutation strategy over the populations to produce a new variant of BSA. ImBSA has applied on single-objective CEC 2014 [30] and CEC 2017 [31] test functions with dimension 50. The extensive comparative performance of ImBSA is exhibited with several state-of-the-art standard algorithms, improved BSA, efficient DE variants, PSO variants, and some other hybrid variants. Moreover, the proposed algorithm is applied to solve six real-world engineering design problems, namely frequency modulation sounds parameter identification problem, Lennard–Jones potential problem, Tersoff potential function minimization problem, speed reducer design problem, I-beam design problem, cantilever beam design problem, and speed reducer design problem. Through the experimental research, we seek to better understand the actions of the algorithm, in particular how exploratory and exploitative processes are balanced within this study.

The remaining part of the paper is arranged in the following way: a brief discussion of the basic BSA is presented in “Overview of the Backtracking Search Algorithm” section. The “The Proposed Algorithm” section offers a detailed description of ImBSA. The “Performance Results Analysis of CEC 2014 and CEC 2017 Test Functions” section reports the performance analysis of the proposed ImBSA on CEC 2014 and CEC 2017 test functions. The application of ImBSA to real-world optimization problems has been presented in the “Application of ImBSA to Real-World Problem” section. Finally, the “Conclusion” section reviews the influence of the present study.

Overview of the Backtracking Search Algorithm

The BSA is a population-based iterative, evolutionary algorithm that executes the process through five significant components: initialization, selection-I, mutation, crossover, and selection II.

To start with an initial population (Pop), a uniform distribution \(U(lb,ub)\) is used in BSA, represented by Eq. (1).

where \(i=1, \mathrm{2,3},\dots\ \dots\ \dots\ .., NP; j=1, \mathrm{2,3},\dots\ \dots\ .., D\); \(lb\) and \(ub\) are the lower and upper bound of the optimization problem; and rand is a uniformly distributed random number. NP is the number of population; D is the dimension of the optimization problem.

Initial, historical population (OldPop) is obtained by utilizing the Eq. (2). Then selection I operator is used to redefine OldPop at the beginning of each evolutionary process through Eq. (3). Once OldPop is determined, a random shuffling function is applied to transform each individual’s position using Eq. (4).

Here, permuting change the position of the OldPop.

To generate the search-direction matrix, OldPop is used, which means that BSA takes partial advantage of previous experiences for generating a new trial population. BSA uses the mutation operator to generate a trial population (Mutant) during the evolutionary process using Eq. (5).

Here, \(\left(OldPop - Pop\right)\) is called the search-direction matrix, and F controls it. The value of F is defined by Eq. (6). F is the scaling factor.

Here, \(N\left(\mathrm{0,1}\right)\) is the normally distributed random number.

After completing the mutation operator, the BSA crossover operator is applied to determine the trial population’s appropriate system (T). Two steps execute it. An NP*D matrix (map) of binary integer value in the crossover operator is initially calculated. The map is defined by Eqs. (7) and (8). Moreover, it is controlled by the mixrate parameter (M). In the second step, the trial population (T) is determined according to the Eq. (9).

Here M is the mixrate parameter, and its value is 1; 'rand' is a uniformly distributed random number; randi is an integer between 1 and D.

Next, the determined trial population T is updated into the population set Pop using the selection II operator. In the selection II operator, the better objective function value of the individuals of T is updated to the corresponding individual of Pop for producing the current population and to use in the next step through Eq. (10).

The Proposed Algorithm

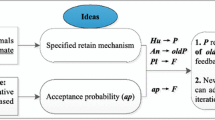

This section discusses the newly suggested improved backtracking search algorithm (ImBSA) incorporating the multi-population strategy, adaptive parameter setting, and a new mutation operator in the original BSA.

Modified Mutation Operator

For an optimization algorithm’s success, the significant development of the algorithm’s local and global search capabilities is essential [36, 37]. Global exploration capability indicates that the population visited the entire search region. In contrast, local exploitation capability specifies that the population visited nearer of those potential regions of the search space where the population visited previously. BSA mutation operation uses a historical population (\(OldPop\)) to produce new mutants to avoid the local optimums through their exploration capability. BSA remembers the historical population until the new population is created. To find the direction of the population,\(OldPop\) is used; thus, BSA takes partial advantage of the previous best-performing individual (\(BestPop\)) for producing a new trial population. Therefore, BSA can remember the best previous population (\(BestPop\)). For the above reasons, we have modified the mutation operator of BSA and formulated the new mutation operator as follows:

Multi-Population-Based Ensemble Mutation Strategy Approach

The ensemble of the BSA mutation strategy can provide an efficient algorithm for solving various complex global optimization problems. In this study, two mutation strategies, “Mutation strategy 1” and “Mutation strategy 2,” are considered for producing a mutant, each of which has respective advantage. The “Mutation strategy 1” is used to explore the whole search space of the optimization problem, and “Mutation strategy 2” is used to exploit the population nearer the potential solutions.

Mutation strategy 1:

Mutation strategy 2:

To appreciate the effectiveness of the ensemble of two mutation strategies, the whole population is divided into two sub-populations in each evaluation, which may be equal or unequal in size. In this study, we have considered equal sub-populations. If Pop denotes the entire population and \({Pop}_{1}\) and \({Pop}_{2}\) represent the two sub-populations, then, \(Pop={Pop}_{1}\cup {Pop}_{2}\). After that, a shuffle function is applied to the whole population to randomly change each population’s position. Thus, during the implementation of ImBSA, each population can exchange information by utilizing different mutation strategies in other evaluations.

Parameter Adaptation of BSA

BSA has three algorithm-specific parameters (NP, M, and F). F controls the direction of the matrix \(\left(OldPop - Pop\right)\). The crossover operator is controlled by the mixrate parameter M. The mixrate parameter M is adopted in the BSA crossover operator to control the population that will mutate in a new trial population [3]. However, for finding BSA’s significant performance on different optimization problems, the appropriate value of control parameters, viz. scaling factor “F” and mixrate “M,” has until now not been stated by some authors [4, 7, 8, 10]. Hence finding the appropriate values of F and M are a difficult task in the study of BSA. Under this consideration, self-adaption schemes for F and M have been suggested in this work. The self-adaption scheme can automatically maintain the appropriate value of these two parameters during the execution. The objective is to develop a highly tuneable algorithm based on BSA to solve a wide range of real-world optimization problems. The initial value of scaling factor F is determined within 0.45 and 2.0 for the population Pop. Popi defines the ith solution in the population, and the value of “F” is determined by Eq. (14) [4].

If \(F=0\), then the new trial population is generated by utilizing the crossover operator only but no mutation operator. The lower and upper values of F are considered as \({F}_{l}=0.45\) and \({F}_{u}=2.0\). Here, \(f({T}_{i})\) and \(f({Pop}_{i})\) represent the value of the ith trial population and the population’s objective function. From the observation of the Eq. (14), it can be said that the proper value of F is evaluated between 0.45 and 2.0. The value of F is obtained before the mutation operator of BSA is performed.

The value of the mixrate parameter “M” for the ith population is determined by Eq. (15).

Here, \({r}_{i}\in [0, 1]\). From Eq. (15), the value of “M” is always less than 1. Thus, in the proposed ImBSA, the user does not need to guess the better values of F and M.

Boundary Control Operator

If an individual moves outside the search space, it should be returned into the search space (feasible region). To keep the individuals in the feasible region, the following rule has been introduced:

where \(i=\mathrm{1,2},3,\dots\ \dots\ \dots\ ..,NP; j=\mathrm{1,2},3,\dots\ \dots\ ..,D.\)

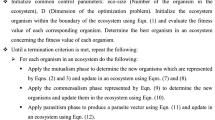

Incorporating the above modifications in the basic BSA, an improved BSA algorithm called ImBSA has been proposed to search the maximum possible region and exploit the population information. The framework of ImBSA is given in Algorithm 1.

Performance Results Analysis of CEC 2014 and CEC 2017 Test Functions

The proposed ImBSA is applied on CEC 2014 and CEC 2017 functions to justify the better performance of the algorithm and compared with various state-of-the-art algorithms. In the following subsections, we will discuss the results obtained by ImBSA and other algorithms on different test functions cited above.

Result and Discussion on CEC 2014 Test Function

The proposed ImBSA is executed on a suit of thirty test functions of the CEC 2014 special session and competition. A detailed description of these thirty functions can be seen in [30]. For a comprehensive comparison, each comparative algorithm runs 51 times over the test functions with dimension 50, population size 100, and maximum function evaluations D*1000. All the algorithms are run in the MATLAB R2010a platform. The lower and upper bound of each test function is considered as −100 and 100, respectively.

Comparison with State-of-the-Art Algorithms

Computational results of thirty CEC 2014 test functions with dimension 50, obtained by BSA [1], PSO [32], ABC[33], DE [34], ABSA [13], and ImBSA, are presented in Table 1. Results obtained by ImBSA are given in boldface if they are the best among the compared algorithms. From this table, several observations and conclusions can be made. Table 2 presents the performance results of ImBSA where it is inferior to, similar to, and superior to other compared algorithms. From Table 2, it is seen that the performance of ImBSA is superior to DE, PSO, ABC, BSA, and ABSA on 27, 27, 26, 29, and 29 test functions, respectively; inferior to DE, PSO, ABC, and BSA on 3, 3, 4, and 1 test functions respectively; and equal to ABSA on one occasion.

The Friedman rank test is conducted by utilizing each benchmark function’s mean results obtained by all algorithms. For each algorithm, the average ranks and variance of positions are obtained and presented in Table 3. At 95% confidence level, the critical value, i.e., the F-statistic value, is 2.576. Based on the average rank of all CEC 2014 test functions, the calculated Friedman statistics, i.e., F-Score values, are reported in Table 3. In Table 3, it is noticed that, at a 95% confidence level, the F-statistics value (2.576) is less than the calculated F-Score value (3.625). Hence, the null hypothesis is rejected, and it indicated that the average ranks obtained by ImBSA utilizing the average performance of all the benchmark test functions are statistically significant.

Next, we apply a pairwise post hoc Bonferroni-Dunn test [35] to identify the significant performance of the proposed ImBSA compared to other existing algorithms. Bonferroni-Dunn test states that “if the average ranks difference is superior to the critical difference (CD) value, then the algorithm is statistically significant.” From the observation of Table 3, it is seen that, at a 95% level of confidence, the obtained average rank difference is superior to the calculated CD (1.239978804). Therefore, from the above discussion and as shown in Fig. 1, it can be concluded that the performance of ImBSA on thirty CEC 2014 special session and competition test functions is statistically significant. Some graphical representations of the convergence performance of ImBSA are reported in Fig. 2.

Results from Fig. 2 show that ImBSA converges substantially more quickly than BSA in all functions. The convergences of both methods are somewhat comparable for several functions in the beginning stages, while the ImBSA convergence is superior for later stages.

Comparison with Some Self-Adaptive DE Variants

Computational results of thirty test functions with dimension 50, obtained by DE/best/1/bin [36], DE/rand/2/bin [37], DE/target-to-best/2/bin [37], DE/target-to-best/1/bin [38], jDE [39], CoDE [40], and ImBSA, are reported in Table 4. Results obtained by ImBSA are given in boldface if they are the best among the compared algorithms. From this table, several observations and conclusions can be made. The numbers of occasions where ImBSA is inferior to, equal to, and superior to other comparative algorithms are presented in Table 5. From the observation of Table 5, it is seen that ImBSA is superior to DE/rand/2/bin, DE/best/1/bin, DE/target-to-best/1/bin, DE/target-to-best/2/bin/, jDE, CoDE on 28, 23, 24, 24, 28, and 26 test functions respectively; inferior to DE/rand/2/bin, DE/best/1/bin, DE/target-to-best/1/bin, DE/target-to-best/2/bin/, jDE, and CoDE on 1, 6, 5, 5, 1, and 3 test function respectively; and equal to one test function with each algorithms.

The Friedman rank test is also applied to thirty CEC 2014 special session and competition test functions (P) and seven algorithms (A) in this study. For each algorithm, the average ranks, the variance of ranks, and the average rank difference obtained by utilizing the average results of all test functions are presented in Table 6. At 95% confidence level, the critical value, i.e., F-statistic value, is 2.638. The calculated F-Score, i.e., Friedman statistics based on the average rank of all CEC2014 test functions, is shown in Table 6. It is observed that, at a 95% confidence level, the calculated F-Score value (3.543640898) is greater than the F-statistics value (2.638). Hence, the null hypothesis is rejected, and it is indicated that the mean ranks obtained by utilizing the average results of all the test functions are statistically different. Table 6 shows that, at a 95% confidence level, the computed critical difference value (1.4714061) is less than the mean rank difference. Hence, from the analysis of the result in Fig. 3, it is observed that ImBSA’s performance on thirty CEC 2014 special session and competition test functions is statistically significant at a 95% confidence level.

Comparison with some PSO variants

Table 7 shows the comparison of computational results obtained by PSO-cf [32], FI-PS, CLPSO [41], DNLPSO, PSOGSA [41], DE-PSO, and ImBSA of thirty CEC2014 test functions with dimension 50. Results obtained by ImBSA are bolded if they are the best among the compared algorithms. From this table, the performance results of ImBSA, where it is inferior to, equal to, and superior to the above algorithms, are presented in Table 8. From Table 8, it is seen that the performance of ImBSA is superior to PSO-cf, FI-PS, CLPSO, DNLPSO, PSOGSA, DE-PSO on 24, 27, 29, 24, 23, and 22 test functions; inferior on 6, 2, 0, 6, 7, 8, and 6 occasions; and similar to FI-PS, CLPSO, and DNLPSO on 1 case, respectively.

Next, the Friedman rank test has been conducted on thirty CEC2014 benchmark functions on ImBSA and seven above algorithms. For each algorithm, the average ranks and variance of positions obtained by utilizing all test functions’ average results are presented in Table 9. Here, at 95% confidence level, the critical value, i.e., F-statistic value, and the calculated F-Score, i.e., Friedman statistics, are reported in Table 9. From Table 9, it is seen that, at a 95% confidence level, the calculated F-Score value (4.243870263) is greater than the F-statistics value (2.638). Hence, the null hypothesis is rejected, and it indicates that the average ranks obtained by utilizing the average results of all the test functions are statistically different. Also, it is observed from Table 9 and Fig. 4 that the mean rank difference is superior to the calculated CD value (1.4714061). Moreover, the analysis summarized that ImBSA presents acceptable performance on thirty CEC 2014 special session and competition test functions.

Results and Discussion on CEC 2017 Test Function

In CEC 2017 test functions, there are unimodal functions, simple multimodal functions, hybrid functions, and composition functions. One can see the details of these functions in [40]. The obtained results of ImBSA are compared with ABSA [13], IBSA [4], OBSA [42], HBSA [2], SCA [43], and HSCA [44] respectively. For fare comparison, the parameter setting for all the algorithms is kept the same. The performance results of these algorithms on the test functions are presented in Table 10. Table 11 presents the counting number that ImBSA is better than, worse than, and similar to the compared algorithms. This table shows that ImBSA is superior to ABSA, IBSA, OBSA, HBSA, SCA, and HSCA on 27, 28, 24, 26, 29, and 27 test functions.

For statistical analysis, the Friedman rank test has been conducted based on the mean performance on CEC 2017 for each algorithm. By utilizing the average results of all CEC2017 test functions, the obtained average ranks and variance of ranks are presented in Table 14. At 95% confidence level, the obtained critical value (F-statistic value) and Friedman statistics (F-Score) are reported in Table 12. Table 12 shows that, at a 95% confidence level, the calculated F-Score value (37.86853147) is greater than the F-statistics value (2.638), which indicates that the average ranks obtained by utilizing the average results of all the test functions are statistically different. It is also observed from Table 12 and Fig. 5 that the mean rank difference is superior to the calculated CD value (1.496560163). Hence, it is summarized that ImBSA presents outstanding performance on the CEC2017 special session and competition test functions from the analysis.

Complexity Analysis

This section details the complexity of our ImBSA algorithms with dimensions 50 and 10 correspondingly for IEEE CEC 2014 and 2017 test functions. Analysis of an algorithm’s complexity is useful for comparing the suggested algorithm with other algorithms regarding the time consumed to solve a problem. The algorithm with lower complexity is considered efficient. The present work calculates the complexity according to the definition presented in IEEE CEC 2014 and 2017. Algorithms 2 and 3 represent the simple codes used to evaluate the value of \(T0\) in IEEE CEC 2014 and 2017 functions, respectively. These codes were recommended in the problem definition and evaluation criteria of IEEE CEC 2014 and 2017, respectively. The calculated algorithm complexity of the test function IEEE CEC 2014 [34] and 2017 [35] is shown in Tables 13 and 14.

Algorithm 2 | |

|---|---|

for i = 1:1,000,000 x = 0.55 + (double)i; x = x + x; x = x/2; x = x*x; x = sqrt(x); x = log(x); x = exp(x); x = x/(x + 2); end |

Algorithm 3 | |

|---|---|

x = 0.55; for i = 1:1,000,000 x = x + x; x = x/2; x = x*x; x = sqrt(x); x = log(x); x = exp(x); x = x/(x + 2); end |

-

\(T1\) is the time to execute 200,000 evaluations of benchmark function F18 by itself with D dimensions.

-

\(T2\) is the time to execute ImBSA with 200,000 evaluations of F18 in D dimensions.

-

\(\widehat{T2}\) is the average \(T2\) value of 5 runs.

According to Tables 13 and 14, both \(T1\) and \(\widehat{T2}\) scaled linearly higher of BSA than ImBSA for the respective dimensions, as shown by the paired comparison of the value of \(( \widehat{T2}-T1)/T 0\).

Figures 6 and 7 have been drawn to depict the intricacy better. From these two figures, it can be observed that the height of the cone of ImBSA is lower than the height of the cone of BSA. This indicates that BSA is more complex than ImBSA. Thus, in terms of runtime complexity, ImBSA has a superior algorithm than BSA.

State-of-the-Art Recent BSA Variants

In this section, the proposed ImBSA is compared with some recently proposed variants of BSA, namely GWO-BSA [45], e-SOSBSA [46], BS-DE [47], LFBSA [11], IBS [10], and hDEBSA [48]. For this comparison, the CEC 2017 test functions with ten dimensions have been considered.

The details of these functions can be seen in [31]. For fare comparison, the parameter setting of all the algorithms is kept the same. The performance results of these algorithms on the test functions are presented in Table 15. Table 16 presents the number of functions where the proposed ImBSA is better than, worse than, and similar to the compared algorithms. This table shows that ImBSA is superior to GWO-BSA, BS-DE, LFBSA, IBS, and hDEBSA on all the twenty-nine test functions, whereas ImBSA is superior to e-SOSBSA on 20 test functions.

For statistical analysis, the Friedman rank test has been conducted based on the mean performance on CEC 2017 for each algorithm. By utilizing the average results of all CEC 2017 test functions, the obtained average ranks and variance of ranks are presented in Table 17. At 95% confidence level, the obtained critical value (F-statistic value) and Friedman statistics.

(F-Score) are reported in Table 17. Table 17 shows that, at a 95% confidence level, the calculated F-Score value (13.149847095) is greater than the F-statistics value (2.638), which indicates that the average ranks obtained from the mean results of all the test functions are statistically different. It is also observed from Table 17 and Fig. 8 that the mean rank difference is superior to the calculated CD value (1.496560163). Hence, it is summarized that ImBSA presents outstanding performance on CEC 2017 special session and competition test functions than its competitors considered in this study.

Application of ImBSA to Real-World Problem

In this part, six recognized engineering test problems [49] are tested with the proposed updated version of BSA, namely, (i) frequency modulation sounds parameter identification problem, (ii) Lennard–Jones potential problem, (iii) Tersoff potential function minimization problem, (iv) speed reducer design problem, (v) I-beam design problem, (vi) cantilever beam design problem, and (vii) speed reducer design engineering problem. The following can be summarized in the numerical engineering test problem:

Frequency Modulation Sounds Parameter Identification Problem [49]

The sound wave is a widespread optimization issue and plays a significant part in numerous current music systems. Frequency-modulation (FM) fusion is skillful in producing unique sounds but is not always properly regulated. A small number of factors can play a crucial role in delivering an extensive range of sound tones. Noises that are comparable to the goal sounds can be produced; therefore, using ImBSA for FM synthesis, the FM matching FM approach is described to identify optimum parameter values. Initially, a set of parameters is established by ImBSA, and the FM synthesizer generates the sound.

The distances between the target and synthesized sounds are treated as the fitness value in this task (or objective function value). This engineering benchmark problem is a tough multimodal challenge. Six of the sound waves are the dimension of this problem. The objective function has the following definition:

where Eqs. (18) and (19) give the primary sound wave and target sound.

Six variables are \(y=\left\{{a}_{1},{\omega }_{1},{a}_{2},{\omega }_{2},{a}_{3},{\omega }_{3}\right\},\) and the domain of these variables is [− 6.4, 6.35]. Here \(\theta =2\pi /100.\)

Lennard–Jones Potential Problem [49]

The potential challenge of Lennard–Jones (LJ) is to minimize the molecular power potential of the pure LJ cluster. An icosahedral core and a combination of surface grid points are present in the grid structure of the LJ cluster. The algorithm ImBSA is implemented to make the particle less energy-efficient to adapt its molecular structure to construct the atoms. This hypothetical challenge of multimodal optimization energy reduction involves an endless number of local minimum levels. The Cartesian coordinates provide the cluster setup of “n” atoms in LJ problems.

The following are given for the mathematical creation of Lennard–Jones’ atom “N” pair potential:

And the gradient is

Ten atomic issues were taken into account, and a total of 30 variables were added. Any allowing and translating the {pj} order lead to the identical functional value, as \({V}_{N}\left(p\right)\) only depends on the pair distances. The search space of first three variables are (0, 4), (0, 4), and (0, π), and for other variables are:\(\left[-4-\frac{1}{4}\bigg\lceil\frac{i-4}{3}\bigg\rceil,4+\frac{1}{4}\bigg\lceil\frac{i-4}{3}\bigg\rceil\right]\) where \(\lceil r \rceil\) is the nearest integer of r.

Tersoff Potential Function Minimization Problem for Model Si(B) [49]

The total Tersoff potential energy function of the system is the sum of individual potentials of atoms and is defined as

The Tersoff potential of individual atoms can be formally defined as follows:

Here \({r}_{i,j}\) is the distance between atoms \(i\) and \(j\), \({V}_{R}\) is a repulsive term, \({V}_{A}\) is an attractive term, \({f}_{c}({r}_{i,j})\) is a switching function, and \({B}_{i,j}\) is a many-body term that depends on the positions of atoms \(i\) and \(j\) and the neighbors of atom \(i\). The term \({B}_{i,j}\) is given by

In Eq. (26), \({n}_{1}\) and γ are known fitted parameters, and the term \({\xi }_{i,j}\) for atoms, \(i\) and \(j\) (i.e., for bond), are given by:

In Eq. (27), the term \({\theta }_{ijk}\) is the bond angle between bonds \(ij\) and \(ik\), and the function \(g\) is given by:

The quantities \({\lambda }_{3}\) and \(c, d, h\) which appear in Eqs. (27) and (28) are also known as fitted parameters. The terms \({V}_{R}\left({r}_{i,j}\right)\), \({V}_{A}\left({r}_{i,j}\right)\) and the switching function \({f}_{c}\left({r}_{i,j}\right)\), appear in Eq. (25) are given by:

where A, B, \({\lambda }_{1}\) R, D, and \({\lambda }_{2}\) are given fitted parameters.

Thus, the cost function now can be redefined as:

In general, for N atoms, the no. of unknown variables is n = 3 × N − 6. Now the cluster X of N atoms can be redefined as

The search region for Si(B) model of Tersoff potential is

The number of atoms is therefore regarded to be 10, with the total no variables being 30. Reference [49] provides a complete overview of this problem.

Spread Spectrum Radar Polyphase Code Design Problem[49]

The spectrum radar polyphase code design challenge is one of the most frequent design optimization issues for global optimization techniques. The choice of proper waveform must be given close attention when a radar system is designed using pulse compression. There are several modulation methods of radar pulse that allow pulse compression. Polyphase codes are appealing because they give reduced side globes in the compressed signals and make digital processing techniques easier to apply. This synthesis is based upon the features of the aperiodic autocorrelation function, assuming that the receiver is being consistently radar pulse processed. The problem has constant variables and a min–max nonlinear non-convex optimization problem with several local solutions. This problem is mathematically formed as follows:

where \(X=\left\{\left({x}_{1},{x}_{2},{x}_{3},\dots ,{x}_{4}\right)\right.\in {R}^{D}\left|0\le {x}_{j}\le 2\pi ,j=\mathrm{1,2},3,\dots ,D\right\}, and\ m=2D-1, with\)

In this context, the objective is to optimize (minimize) the most extensive module among the autocorrelation function linked to the compressed radar pulse at optimum recipient output. At the same time, the variables indicate symmetry phase differences. This is a problem of NP-hard optimization. Due to its objective function, the problem may be characterized as a class of continuous minimal global optimization problems. The problem includes 20 variables, and the variable domain is [0, 2π].

Cantilever Beam Design Engineering Problem [49]

The topic is the optimization of the weight of a square cross-sectional cantilever beam depicted in Fig. 9. At node 1, the beam is firmly held, while at node 5, a vertical force exists. The five design variables include the heights (or widths) of the beam and the thickness of the fixed elements of the beams. The analysis of the problem is as follows:

Subject to,

I-beam Design Engineering Problem [46]

The ImBSA algorithm tested a new design problem, i.e., the problem of I-beam design, incorporating four variables, in addressing actual engineering difficulties. The goal of I-beam design optimization is to decrease vertical I-beam deflection, as seen in Fig. 10. With the I-beam design optimization issue, cross-sectional areas and stress limits are concurrently fulfilled with specified loads. The following are the formulation of the I-beam optimization problem:

Subject to,

Speed Reducer Design Engineering Problem

The proposed ImBSA is employed to determine the speed reducer design problem [46]. Various components of this problem are the number of teeth on pinion \((z)\), the module of teeth \((m)\), face width \((b)\), the diameter of shaft 1 (\({d}_{1}\)), and diameter of shaft 2 (\({d}_{2}\)), length of shaft 1 between bearings (\({l}_{1}\)), and length of shaft 2 between bearings (\({l}_{2}\)) as shown seen in Fig. 11. The objective of this engineering benchmark design optimization problem is to minimize the total weight of the speed reducer. The constraints included in this problem are bending stress, surface stress, and transverse deflections. The analytical expressions of this problem are as follows:

Subject to

where \(2.6\le {u}_{1}\le 3.6\), \(0.7\le {u}_{2}\le 0.8\), \(17\le {u}_{3}\le 28\), \(7.3\le {u}_{4}\le 8.3\), \(7.3\le {u}_{5}\le 8.3\), \(2.9\le {u}_{6}\le 3.9\), and \(5.0\le {u}_{7}\le 5.5\),\(\overrightarrow{u}=\left({u}_{1},{u}_{2},{u}_{3},{u}_{4},{u}_{5},{u}_{6},{u}_{7}\right)=(z, m, b, {l}_{1},{l}_{2}, {d}_{1}, {d}_{2})\).

Results and Discussions on Real-World Problems

The ImBSA has calculated 1000 function evaluations of 50 population sizes and 25 runs for the first four tasks. Tables 18, 19, 20, and 21 describe the result of four issues, i.e., the optimal solution. The findings of the problems mentioned above are derived from the reference [49].

The result achieved is compared to ISOS, SOS, ASOS_BF1, ASOS_BF2, ASOS_BF1&2, BSA, ABSA, DE, and ImBSA for the frequency-modulated (FM) parameter estimation problem. Table 18 contains the best, median, worst, median, and SD obtained by ImBSA and some other algorithms on this problem. Table 18 shows that ImBSA receives the best solution. Therefore, the findings of Table 18 suggest that the performance of the ImBSA for this problem is best compared to other methods.

The outcomes achieved by the proposed algorithm are compared with ISOS, SOS, ASOS BF1, ASOS BF2, ASOS BF1&2, BSA, ABSA, DE, and ImBSA for Lennard–Jones potential problem. Table 19 presents the best, median, worst, average, and SD showing the performance outcomes of this problem by ImBSA and aforesaid algorithms. Table 19 displays that ImBSA obtains the best, median, and mean results. Thus, one can readily sum up from the findings that the performance of the ImBSA is the best compared to other techniques.

The findings obtained are compared with other algorithms like ISOS, SOS and ASOS BF1, ASOS BF2, ASOS BF1&2, BSA and ABSA, and ImBSA for Tersoff’s Si(B) potential. Table 20 contains the best, median, worst, mean, and SD performance outcomes of this optimization task. Table 20 shows that the BSA obtains the best answer, although ImBSA obtains the best median and mean outcomes. The results suggest that the ImBSA version demonstrates a superior way than some algorithms compared here.

The comparison table of the results obtained by ISOS, SOS, ASOS BF1, ASOS BF2, ASOS BF1&2, BSA, ABSA, DE, and ImBSA for the radar polyphase code design problem is presented in Table 21. Table 21 shows that ImBSA obtains the best solution, while ASOS BF2 gets the worst outcome. So, by observing Table 21, it can be said that the ImBSA works better than any other approach. This suggests ImBSA’s overall superiority to solve this problem. The explanation above indicates that the performance of the ImBSA is acceptable to solve this problem.

The proposed ImBSA has been employed to solve the cantilever beam design with the same configuration as the reference [46]. The optimal output attained is shown in Table 22, and results other than ImBSA are derived from [46]. In comparison with m-SCA, SCA, CS, GCA(I), GCA(II), MMA, CONLIN, and e-SOSBS, the optimal performance of the suggested ImBSA is better. Compared to the other optimizer offered in this study, the comparative research shown in Table 22 shows ImBSA’s efficient performance capability.

Cauchy-GWO, ARSM, improved ARSM, CS, GWO, and e-SOSBSA were solved I-beam design engineering optimization problems previously. The suggested ImBSA research is also employed to solve this significant optimization issue with the same parameter configuration used in reference [46]. The best outcome achieved by ImBSA is presented in Table 23, and the results other than ImBSA are taken from [46]. The optimized values of the four design variables are 80, 50, 1.7646138, and 5.0000000, and an ideal I-beam vertical deflection is 0.0071004879203201. Table 23 demonstrates that ImBSA handles the problem with improved performance in the design of beams compared to other algorithms given in this table.

The speed reducer design problem has previously been optimized with a different algorithm which can be seen in [46]. Table 24 provides a summary of the best solution for the recorded methods. The results were achieved with the same parameter setting as in reference [46] to compare the performance of ImBSA. The optimum output is compared with e-SOSBSA, SC-GWO, CS, SCA, PSO, wPSO, GWO, Ray and Saini, mGWO, wGWO, m-SCA, OBSCA, SSA, MFO, WOA, ISCA, Chaotic SSA, Akhtar et al., Ku et al., Montes, and Coello. The results of these methods are taken from [46]. The optimum values of all decision variables are 3.5008989, 0.7000000, 17.0000000, 7.3007000, 7.7159679, 3.3538547, and 5.2846039, and the optimum weight of the speed reducer is 2994.4710501. The results’ comparison with other algorithms is also shown in the same table, demonstrating the proposed ImBSA over different algorithms.

It is evident from the preceding discussion, findings, and reviews that the quality, efficiency, and resilience of ImBSA have improved to address real-life challenges. In addition, ImBSA may also be used for practical applications as an alternative optimizer.

Conclusion

In this study, a new mutation strategy of the BSA has been proposed, which exploits the searchability of the algorithm in a better way. A new modified parameter scheme has been considered in ImBSA. An ensemble of mutation strategies with a multi-population concept has also been incorporated into this study. Incorporating the concepts above, a novel BSA variant, called multi-population-based self-adaptive BSA (ImBSA, in short), has been proposed in this study. The extensive performance of ImBSA on CEC 2014 and CEC 2017 test functions has been tested by comparing with several algorithms found in the literature. The comparison results show that ImBSA outperforms some other efficient algorithms. For statistical analysis, a nonparametric Friedman test in which a pairwise post hoc Bonferroni-Dunn test with a 95% confidence level is utilized to highlight the significant difference of performance of ImBSA among other compared algorithms. The statistical analysis also confirms that ImBSA is statistically more effective than the compared algorithms.

The study addresses the efficiency of the proposed algorithm employing six problems of engineering design optimization. Results on technical challenges also approve that ImBSA has been improved significantly compared to different optimizers in the literature.

Based on the findings of experimental results, statistical testing, and real-world applications, some concluding remarks are given below:

-

Compared with conventional BSA, the improved stability of BSA’s exploitation and exploration has been established and improved. With auto-adaptive mutation rate and mixrate parameters, in the suggested ImBSA, the stability and convergence speed have been improved.

-

The numerical findings of the CEC 2014 and CEC 2017 benchmark suites demonstrate that the proposed ImBSA has increased search capacity compared to some of the competitors.

-

The convergence curve drawn between the fitness values at each generation determines the stability within the suggested methodology for exploitation and identifies the optimal value throughout the process of searching.

-

A convergence and statistical validation study of the data confirms the improved performance by ImBSA when addressing issues with optimization compared to traditional BSA and other comparative optimization methods.

-

To determine the numerical findings, the challenge of technical test problems, including diverse levels of complexity and space of research, also promotes the efficiency of the suggested ImBSA.

Limitation and Future Direction

There is hardly any study without limitations. Although we have tried to enhance the efficiency of the BSA, this study has several limitations. Some of these limitations and future directions are given below:

-

The adaptive parameter setting based control parameters value has only been suggested here. But this can be done in many other ways such as the fuzzy adaptive, neighborhood-based, concept of the topology of the population, and fitness distance-based. These are some future directions of the current work.

-

The algorithm’s stochastic analysis, such as convergence analysis and stability analysis, may also be analyzed so that the proposed algorithm may have a strong mathematical base.

-

The best-guided search-direction matrix is used in the proposed method, but this can be further improved in different ways.

-

This method is applied only on single-objective constrained and unconstrained optimization problems which may be extended to the multi-objective scenario.

-

This approach may be used to determine numerous optimization problems from academia and industry.

References

Civicioglu P. Backtracking search optimization algorithm for numerical optimization problems. Appl Math Comput. 2013;219:8121–44. https://doi.org/10.1016/j.amc.2013.02.017.

Nama S, Saha AK. A novel hybrid backtracking search optimization algorithm for continuous function optimization. Decis Sci Lett. 2019;8:163–74. https://doi.org/10.5267/j.dsl.2018.7.002.

Nama S, Saha AK, Ghosh S. A new ensemble algorithm of differential evolution and backtracking s algorithm with adaptive control parameter for function optimization. Int J Ind Eng Comput. 2016;7:323–38. https://doi.org/10.5267/j.ijiec.2015.9.003.

Nama S, Saha AK, Ghosh S. Improved backtracking search algorithm for pseudo dynamic active earth pressure on retaining wall supporting c-Ф backfill. Appl Soft Comput J. 2017;52:885–97. https://doi.org/10.1016/j.asoc.2016.09.037.

Chen D, Zou F, Lu R, Li S. Backtracking search optimization algorithm based on knowledge learning. Inf Sci (Ny). 2019;473:202–26. https://doi.org/10.1016/j.ins.2018.09.039.

Tian Z. Backtracking search optimization algorithm-based least square support vector machine and its applications. Eng Appl Artif Intell. 2020;94: 103801. https://doi.org/10.1016/j.engappai.2020.103801.

Zhang Y, Ma M, Jin Z. Backtracking search algorithm with competitive learning for identification of unknown parameters of photovoltaic systems. Expert Syst Appl. 2020;160: 113750. https://doi.org/10.1016/j.eswa.2020.113750.

Zhang Y, Huang C, Jin Z. Backtracking search algorithm with reusing differential vectors for parameter identification of photovoltaic models. Energy Convers Manag. 2020;223: 113266. https://doi.org/10.1016/j.enconman.2020.113266.

Caldeira RH, Gnanavelbabu A, Vaidyanathan T. An effective backtracking search algorithm for multi-objective flexible job shop scheduling considering new job arrivals and energy consumption. Comput Ind Eng. 2020;149: 106863. https://doi.org/10.1016/j.cie.2020.106863.

Emami H, Sharifi AA. An improved backtracking search optimization algorithm for cubic metric reduction of OFDM signals. ICT Express. 2020;6:258–61. https://doi.org/10.1016/j.icte.2020.03.001.

Zhang Y, Jin Z, Zhao X, Yang Q. Backtracking search algorithm with Lévy flight for estimating parameters of photovoltaic models. Energy Convers Manag. 2020;208: 112615. https://doi.org/10.1016/j.enconman.2020.112615.

Zhang Y. Backtracking search algorithm with specular reflection learning for global optimization. Knowl Based Syst. 2021;212: 106546. https://doi.org/10.1016/j.knosys.2020.106546.

Duan H, Luo Q. Adaptive backtracking search algorithm for induction magnetometer optimization. IEEE Trans Magn. 2014;50:1–6. https://doi.org/10.1109/TMAG.2014.2342192.

Guha D, Roy P, Banerjee S. Quasi-oppositional backtracking search algorithm to solve load frequency control problem of interconnected power system. Iran J Sci Technol Trans Electr Eng. 2020;44:781–804. https://doi.org/10.1007/s40998-019-00260-0.

Jin YF, Yin ZY. Enhancement of backtracking search algorithm for identifying soil parameters. Int J Numer Anal Methods Geomech. 2020;44:1239–61. https://doi.org/10.1002/nag.3059.

Yan X, Han Y, Gu X. An improved discrete backtracking searching algorithm for fuzzy multiproduct multistage scheduling problem. Neurocomputing. 2020;398:153–65. https://doi.org/10.1016/j.neucom.2020.02.066.

Saha A, Nama S, Ghosh S. Application of HSOS algorithm on pseudo-dynamic bearing capacity of shallow strip footing along with numerical analysis. Int J Geotech Eng. 2019. https://doi.org/10.1080/19386362.2019.1598015.

Lohar G, Sharma S, Saha AK, Ghosh S. Optimization of geotechnical parameters used in slope stability analysis by metaheuristic algorithms. In: Mandal J, Mukhopadhyay S, Roy A, editors. Lecture Notes in Networks and Systems. Singapore: Springer Science and Business Media; 2021. p. 223–31.

Sharma S, Saha AK, Lohar G. Optimization of weight and cost of cantilever retaining wall by a hybrid metaheuristic algorithm. Eng Comput. 2021;1–27. https://doi.org/10.1007/s00366-021-01294-x.

Sharma S, Kumar A. MPBOA - a novel hybrid butterfly optimization algorithm with symbiosis organisms search for global optimization and image segmentation. Multimed Tools Appl. 2020. https://doi.org/10.1007/s11042-020-10053-x.

Chakraborty S, Saha AK, Nama S, Debnath S. COVID-19 X-ray image segmentation by modified whale optimization algorithm with population reduction. Comput Biol Med. 2021;139: 104984. https://doi.org/10.1016/j.compbiomed.2021.104984.

Mohammadzadeh A, Masdari M, Gharehchopogh FS, Jafarian A. Improved chaotic binary grey wolf optimization algorithm for workflow scheduling in green cloud computing. Evol Intell. 2021;14:1997–2025. https://doi.org/10.1007/s12065-020-00479-5.

Trinh C, Huynh B, Bidaki M, et al. Optimized fuzzy clustering using moth-flame optimization algorithm in wireless sensor networks. Netherlands: Springer; 2021.

Sharma S, Kumar A. m-MBOA : a novel butterfly optimization algorithm enhanced with mutualism scheme. Soft Comput. 2019. https://doi.org/10.1007/s00500-019-04234-6.

Chakraborty S, Kumar Saha A, Sharma S, et al. A novel enhanced whale optimization algorithm for global optimization. Comput Ind Eng. 2020;153: 107086. https://doi.org/10.1016/j.cie.2020.107086.

Chakraborty S, Saha AK, Sharma S, et al. A hybrid whale optimization algorithm for global optimization. Heidelberg: Springer, Berlin; 2021.

Wolpert DH, Macready WG. No free lunch theorems for optimization. IEEE Trans Evol Comput. 1997;1:67–82. https://doi.org/10.1109/4235.585893.

Zhu L, Ma Y, Bai Y. A self-adaptive multi-population differential evolution algorithm. Nat Comput. 2020;19:211–35. https://doi.org/10.1007/s11047-019-09757-3.

Li X, Wang L, Jiang Q, Li N. Differential evolution algorithm with multi-population cooperation and multi-strategy integration. Neurocomputing. 2021;421:285–302. https://doi.org/10.1016/j.neucom.2020.09.007.

Liang JJ, Qu BY, Suganthan PN. Problem definitions and evaluation criteria for the CEC 2014 special session on single objective real-parameter numerical optimization. Singapore: Nanyang Technological University; 2013. p. 1–21. Tech Rep 201311.

Awad NH, Ali MZ, Liang JJ, Qu BY, Suganthan PN. Problem definitions and evaluation criteria for the CEC 2017 special session and competition on single objective bound constrained real-parameter numerical optimization. Singapore: Nanyang Technology University; 2016. Tech Report.

Clerc M, Kennedy J. The particle swarm-explosion, stability, and convergence in a multidimensional complex space. IEEE Trans Evol Comput. 2002;6:58–73. https://doi.org/10.1109/4235.985692.

Karaboga D, Basturk B. A powerful and efficient algorithm for numerical function optimization: artificial bee colony (ABC) algorithm. J Glob Optim. 2007;39:459–71. https://doi.org/10.1007/s10898-007-9149-x.

Storn R, Price K. Differential evolution - a simple and efficient heuristic for global optimization over continuous spaces. J Glob Optim. 1997;11:341–59. https://doi.org/10.1023/A:1008202821328.

Demšar J. Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res. 1993;7:1–30. https://doi.org/10.5555/1248547.1248548.

Pant M, Thangaraj R, Abraham A. DE-PSO: a new hybrid meta-heuristic for solving global optimazation problems. New Math Nat Comput. 2011;07:363–81. https://doi.org/10.1142/s1793005711001986.

Liang JJ, Suganthan PN. Dynamic multi-swarm particle swarm optimizer. In: Proceedings - 2005 IEEE Swarm Intelligence Symposium. SIS; 2005. p. 127–32.

Storn R. On the usage of differential evolution for function optimization. In: Biennial Conference of the North American Fuzzy Information Processing Society - NAFIPS. IEEE; 1996. p. 519–23.

Brest J, Greiner S, Bošković B, et al. Self-adapting control parameters in differential evolution: a comparative study on numerical benchmark problems. IEEE Trans Evol Comput. 2006;10:646–57. https://doi.org/10.1109/TEVC.2006.872133.

Wang Y, Cai Z, Zhang Q. Differential evolution with composite trial vector generation strategies and control parameters. IEEE Trans Evol Comput. 2011;15:55–66. https://doi.org/10.1109/TEVC.2010.2087271.

Liang JJ, Qin AK, Suganthan PN, Baskar S. Comprehensive learning particle swarm optimizer for global optimization of multimodal functions. IEEE Trans Evol Comput. 2006;10:281–95. https://doi.org/10.1109/TEVC.2005.857610.

Lin J. Oppositional backtracking search optimization algorithm for parameter identification of hyperchaotic systems. Nonlinear Dyn. 2015;80:209–19. https://doi.org/10.1007/s11071-014-1861-8.

Mirjalili S. SCA: a sine cosine algorithm for solving optimization problems. Knowledge-Based Syst. 2016;96:120–33. https://doi.org/10.1016/j.knosys.2015.12.022.

Gupta S, Deep K. A novel hybrid sine cosine algorithm for global optimization and its application to train multilayer perceptrons. Appl Intell. 2020;50:993–1026. https://doi.org/10.1007/s10489-019-01570-w.

Lakshmi Priya J, Jaya Christa ST. An effective hybridized GWO-BSA for resolving optimal power flow problem with the inclusion of unified power flow controller. IETE J Res. 2021. https://doi.org/10.1080/03772063.2021.1942245.

Nama S, Saha AK, Sharma S. Performance up-gradation of symbiotic organisms search by backtracking search algorithm. J Ambient Intell Humaniz Comput. 2021. https://doi.org/10.1007/s12652-021-03183-z.

Kuyu YÇ, Onieva E, Lopez-Garcia P. A hybrid optimizer based on backtracking search and differential evolution for continuous optimization. J Exp Theor Artif Intell. 2021. https://doi.org/10.1080/0952813X.2021.1872109.

Nama S, Saha AK, Saha A. The hDEBSA global optimization method: a comparative study on CEC2014 test function and application to geotechnical problem. In: Bhoi A, Mallick P, Liu CM, Balas V, editors. Studies in Computational Intelligence. Singapore: Springer; 2021. p. 225–58.

Das S, Suganthan PN. Problem definitions and evaluation criteria for CEC 2011 competition on testing evolutionary algorithms on real world optimization problems. Singapore: Nanyang Technological University; 2011. p. 1–42.

Acknowledgements

The authors would like to thank the editor and anonymous reviewers for valuable feedback towards the improvement of the manuscript.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of Interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Nama, S., Saha, A.K. A Bio-Inspired Multi-Population-Based Adaptive Backtracking Search Algorithm. Cogn Comput 14, 900–925 (2022). https://doi.org/10.1007/s12559-021-09984-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12559-021-09984-w