Abstract

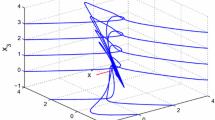

In this paper, we study a gradient-based neural network method for solving strictly convex quadratic programming (SCQP) problems. By converting the SCQP problem into a system of ordinary differential equations (ODEs), we show that the solution trajectory of this system tends to the set of stationary points of the original optimization problem. The proposed neural network is shown to be stable in the sense of Lyapunov and to converge to an exact optimal solution of the original problem. We also find that a larger scaling factor leads to a faster convergence rate of the trajectory. Simulation results show that the proposed neural network is feasible and efficient.
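The abstract does not state the network's equations, so the following is only an illustrative sketch of the general idea, not the model proposed in the paper. It assumes the simplest possible setting, an unconstrained SCQP, minimize (1/2) xᵀQx + cᵀx with Q symmetric positive definite, and integrates the gradient flow dx/dt = -ρ(Qx + c) with forward Euler. The names `gradient_flow`, `Q`, `c`, and `rho`, the test data, and the step size are all assumptions chosen for illustration.

```python
import numpy as np


def gradient_flow(Q, c, x0, rho, dt=1e-3, t_end=2.0):
    """Integrate dx/dt = -rho * (Q @ x + c) with forward Euler.

    Hypothetical helper: an unconstrained gradient flow, not the
    network from the paper. rho plays the role of a scaling factor.
    """
    x = np.asarray(x0, dtype=float).copy()
    steps = int(round(t_end / dt))
    for _ in range(steps):
        grad = Q @ x + c          # gradient of 0.5 * x^T Q x + c^T x
        x = x - dt * rho * grad   # one explicit Euler step
    return x


# Assumed example data: Q is symmetric positive definite.
Q = np.array([[4.0, 1.0],
              [1.0, 3.0]])
c = np.array([-1.0, -2.0])

# Exact minimizer of the unconstrained problem, used as a reference.
x_star = np.linalg.solve(Q, -c)

x0 = np.zeros(2)
err_slow = np.linalg.norm(gradient_flow(Q, c, x0, rho=1.0) - x_star)
err_fast = np.linalg.norm(gradient_flow(Q, c, x0, rho=10.0) - x_star)

print("error at t_end with rho = 1: ", err_slow)
print("error at t_end with rho = 10:", err_fast)
```

In this sketch the objective itself serves as a Lyapunov function, since it decreases along the trajectory, and the larger `rho` reaches the minimizer sooner over the same time horizon. With explicit Euler the product `dt * rho` must stay small relative to the largest eigenvalue of `Q`, which is why the step size is kept conservative here.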

Cite this article

Nazemi, A., Nazemi, M. A Gradient-Based Neural Network Method for Solving Strictly Convex Quadratic Programming Problems. Cogn Comput 6, 484–495 (2014). https://doi.org/10.1007/s12559-014-9249-0

DOI: https://doi.org/10.1007/s12559-014-9249-0