Abstract

The Steiner tree problem is a challenging NP-hard problem. Many hard instances of this problem are publicly available, that are still unsolved by state-of-the-art branch-and-cut codes. A typical strategy to attack these instances is to enrich the polyhedral description of the problem, and/or to implement more and more sophisticated separation procedures and branching strategies. In this paper we investigate the opposite viewpoint, and try to make the solution method as simple as possible while working on the modeling side. Our working hypothesis is that the extreme hardness of some classes of instances mainly comes from over-modeling, and that some instances can become quite easy to solve when a simpler model is considered. In other words, we aim at “thinning out” the usual models for the sake of getting a more agile framework. In particular, we focus on a model that only involves node variables, which is rather appealing for the “uniform” cases where all edges have the same cost. In our computational study, we first show that this new model allows one to quickly produce very good (sometimes proven optimal) solutions for notoriously hard instances from the literature. In some cases, our approach takes just few seconds to prove optimality for instances never solved (even after days of computation) by the standard methods. Moreover, we report improved solutions for several SteinLib instances, including the (in)famous hypercube ones. We also demonstrate how to build a unified solver on top of the new node-based model and the previous state-of-the-art model (defined in the space of arc and node variables). The solver relies on local branching, initialization heuristics, preprocessing and local search procedures. A filtering mechanism is applied to automatically select the best algorithmic ingredients for each instance individually. The presented solver is the winner of the DIMACS Challenge on Steiner trees in most of the considered categories.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The Steiner tree problem (STP), in any of its various versions, is a challenging NP-hard problem that involves two related decisions: choosing the nodes to cover, and then covering them at minimum cost. Once the first decision has been taken, the second one is just trivial as it amounts to solving a minimum-cost tree spanning the selected nodes.

In this paper we introduce a new mixed-integer linear programming (MIP) approach for solving hard instances of the Steiner tree problem (close) to optimality. Instead of modeling graph connectivity using edge or arc variables (where many of them can exist), we propose to model it by using node variables only. Our model is particularly suited for “uniform” cases where all edges have the same cost. Besides the fact that these node-based models contain significantly less variables, they also avoid equivalences induced by uniform edge weights. For very dense graphs, or those containing a lot of symmetries, this strategy significantly outperforms the standard models where connectivity is modeled by using edge variables. We then demonstrate how to build a unified solver on top of the new node-based model and the previous state-of-the-art model from [22] (defined in the space of arc and node variables). The solver relies on local branching, initialization heuristics, preprocessing and local search procedures. A filtering mechanism is applied to automatically select the best algorithmic ingredients for each instance individually.

Our approach works for different variants of the Steiner tree problem, including the (rooted) prize-collecting STP (PCSTP), the node-weighted STP (NWSTP), the maximum-weight connected subgraph problem (MWCS), and also the degree-constrained STP (DCSTP). To have a unified framework, in the following we will therefore focus on a slightly more general variant of the PCSTP, with a (potentially empty) set of terminal nodes. This general problem definition covers both the classical Steiner tree problem in graphs and its prize-collecting counterpart, as special cases. Necessary adaptations for the remaining problems will be explained below. It is worth mentioning that our code (with four variants submitted under the names mozartballs, staynerd, hedgekiller and mozartduet) was the winner of the DIMACS Challenge on Steiner trees [1] in most of the categories (see [12] for more details). This article contains a summary of the main ingredients of this implementation.

Definition 1

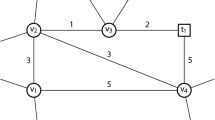

(The prize-collecting Steiner tree problem (PCSTP)) Given an undirected graph \(G=(V,E)\) with a (possibly empty) set of real terminals \(T_r \subset V\), edge costs \(c:E \mapsto \mathbb {R}^+\) and node revenues \(p:V \mapsto \mathbb {R}^+\), the goal is to find a subtree \(\mathscr {T}=(V[\mathscr {T}],E[\mathscr {T}])\) that spans all real terminals and such that the cost

is minimized.

In the classical PCSTP version studied in the previous literature, \(T_r = \emptyset \), and the problem can be equivalently stated as searching for a subtree that maximizes the difference between the collected node revenues (\(\sum _{i \in V[\mathscr {T}]} p_i\)) and the costs for establishing the links of that tree (\(\sum _{e \in E[\mathscr {T}]} c_e\)). One objective value can be transformed into another by subtracting \(c(\mathscr {T})\) from the sum of all node revenues (\(P = \sum _{i \in V} p_i\)).

In general, each node in \(V{\setminus }T_r\) is considered as a Steiner node, i.e., it can be used as an intermediate node to connect real terminals, or those with positive revenues.

Observe that there always exists an optimal PCSTP solution in which non-terminal nodes with zero revenue are not leaves. The same holds for each node \(i \in V\) such that \(p_i > 0\) and \(\min _{\{i,j\} \in E} c_{ij} > p_i\). Note that we impose strict inequality in the latter condition. Hence, besides real terminals only a specific subset of nodes in the PCSTP can be leaves of an optimal solution. We will refer to those nodes as potential terminals.

Definition 2

(Potential terminals) Among the nodes \(i\in V {\setminus } T_r\), only those with revenues \(p_i > 0\) such that at least one adjacent edge is strictly cheaper than \(p_i\) are considered as potential leaves. These nodes are referred to as potential terminals, and the associated set is denoted by \(T_p\):

In the following, we will call the set \(T = T_r \cup T_p\), the set of terminal nodes. Our general problem definition covers the Steiner tree problem in graphs, since in this case all node revenues are equal to zero (\(p_v = 0\), for all \(v \in V\)) and the set of real terminals is nonempty, i.e., \(\emptyset \ne T = T_r \subset V\).

Previous work A MIP model for the PCSTP using both node and arc variables has been proposed in [22]. The formulation is based on a transformation of the PCSTP to a directed instance. A similar cut-based model has also been applied to the STP in [19]. In our work the model of [22] has been extended to the general problem definition of the PCSTP presented in this article which covers both problems. Given an instance \(I=(G(V,E),c,p,T_r)\) of the PCSTP, an instance \(I'=(G'(V',A'),c',p,T_r,r)\) is constructed as follows: An artificial root node r is added, i.e., \(V'=V\cup \{r\}\). For each edge \(\{i,j\}\in E\), \(A'\) contains two anti-parallel arcs (i, j) and (j, i) with \(c'_{ij}=c_{ij}-p_j\). Furthermore, r is connected by arcs (r, j) with \(c'_{rj}=-p_j\) to each potential terminal \(j \in T_p\). The set of terminals and revenues are left unchanged. For \(S \subset V\), let \(\delta ^-(S)\) be defined as the set \(\{(i,j) \in A' : i \notin S, j \in S\}\). Subsequently, a MIP model for the PCSTP is formulated as follows:

Binary arc variables \(x_{ij}, \forall (i,j) \in A '\), are set to one if arc (i, j) is part of the solution. Similiarly, binary node variables \(y_v, \forall v \in V'{\setminus } \{r\}\), are set to one if node v is part of the solution. Constraints (2) ensure that each node selected has exactly one incoming arc, and link node and arc variables. Cut constraints (3) guarantee that the solution is connected. Constraint (4) ensures that exactly one artificial root arc is chosen.

Constraints (5) have been added to allow the incorporation of real terminals \(T_r\) into the model, which have not been considered in [22]. Note that if \(|T_r|\ge 1\), instead of adding an artificial root and its associated arcs, it is sufficient to choose an arbitrary real terminal \(v \in T_r\) as root. In this scenario constraint (4) must be excluded from the model.

The formulation may be augmented with further valid or strengthening inequalities, e.g., flow-balance inequalities, root asymmetry constraints, and generalized subtour elimination constraints (GSEC) of size two (cf. [22] for details). The advantage of this formulation is that it makes little assumptions on instance structure, however the number of arc variables may be computationally prohibitive on dense graphs. Throughout this article, we will refer to this formulation as (x, y)-model.

2 A node-based MIP model

Node-based models for solving the maximum-weight connected subgraph problem have been compared, both theoretically and computationally, in a recent publication [2]. Since there are no edge-costs involved in the objective of the MWCS, a natural MIP modeling approach is to derive a formulation in the space of node variables only. The first node-based model for the MWCS has been proposed in [5], and has been shown to computationally outperform extended formulations (involving both edge and node variables) on a benchmark set of instances from bioinformatics applications. However, as demonstrated in [2], the cycle-elimination model of [5] provides arbitrarily bad lower bounds and can be computationally improved by considering the notion of node separators whose definition is provided below. For brevity, in the rest of the article a node separator will be referred to simply as separator.

For STP/PCSTP instances, uniform edge costs can be embedded into node revenues (as shown below), so that using node-based MIPs appears natural in this case.

Definition 3

(Node separators) For two distinct nodes k and \(\ell \) from V, a subset of nodes \(N \subseteq V {\setminus } \{k,\ell \}\) is called \((k,\ell )\)-separator if and only if after eliminating N from V there is no \((k,\ell )\) path in G. A separator N is minimal if \(N{\setminus } \{i\}\) is not a \((k,\ell )\)-separator, for any \(i \in N\). Let \(\mathscr {N}(k,\ell )\) denote the family of all \((k,\ell )\)-separators.

Note that in order to make sure that a subset of chosen nodes is connected, it is sufficient to impose connectivity between the pairs of terminals (due to the minimization of the objective function). Therefore, we are mainly interested in separators between pairs of terminals.

Let \(\mathscr {N}_{\ell } = \cup _{k \in T, k \ne \ell } \mathscr {N}(k,\ell )\) be the family of all such separators with respect to a node \(\ell \in T\). We will refer to elements from \(\mathscr {N}_{\ell }\) as \(\ell \)-separators. Finally, let \(\mathscr {N}= \cup _{\ell \in T} \mathscr {N}_\ell \) be the set of all node subsets that separate two arbitrary terminals.

Let us assume that we are dealing with an undirected graph G with node revenues \(p_v\) and uniform edge costs \(c_e = c\) for all \(e \in E\).

In order to derive a node-based model, we will first shift edge costs into node costs as follows:

Let \(P = \sum _{v \in V} p_v\) be the sum of all node revenues in G. Binary node-variables \(y_v\), \(\forall v\in V\), will be set to one if node v is part of the solution, and the node-based MIP model can be derived as follows:

where \(y(N) = \sum _{v \in N} y_v\).

Connectivity constraints (8) are used to ensure connectivity of the underlying solution. Basically, whenever two distinct terminals \(i,j \in T\) are part of a solution, at least one node from any separator \(N \in \mathscr {N}(i,j)\) has to be chosen as well, in order to ensure that there exists a path between i and j.

There is a difference between our model and the one considered in [2], where a more general MWCS variant on digraphs has been studied. In this latter variant, a root node needs to be established, i.e., a node r with in-degree zero and such that there is a directed path from r to any node j being part of the solution. Consequently, an additional set of node variables was needed to locate the root, separators were defined on digraphs, and connectivity constraints have been lifted with respect to root variables. Since our input graphs are undirected, we rely on the undirected model to keep the number of variables as small as possible. Only very recently, connectivity constraints (8) were given more attention in the literature concerning polyhedral studies. In particular, [26] studies the connected subgraph polytope, involving node variables only, and show that (8) define facets if and only if N is a minimal separator separating i and j.

Node-degree inequalities The following node-degree inequalities are also valid for our model:

where \(A_i = \{ v \in V \mid \exists \{v,i\} \in E\}\) is the set of all neighboring nodes of i. For terminals, these constraints ensure that at least one of their neighbors is part of the solution (assuming \(\sum _{v\in V} y_v \ge 2\), which can be safely assumed after preprocessing single-node solutions). Clearly, they are just a special case of the inequalities (8), but can be used to initialize the model for a branch-and-cut approach. For the remaining nodes, constraints (11) make sure that each such node that belongs to a solution will be used as an intermediate node, i.e., at least two of its neighbors have to be included in the solution as well. These constraints are not implied by (8), in fact they can improve the quality of lower bounds of the original model.

It is sufficient to consider only minimal separators in inequalities (8) (since they dominate the remaining ones). In order to derive minimal separators associated to node-degree constraints, we observe that nodes from \(V{\setminus } T\) that are only adjacent to i and other nodes from \(A_i\) do not play a role in connecting i to the remaining terminals. Let J be the set of all such neighbors of a given \(i\in T\), i.e., \(J = \{j \in A_i{\setminus } T \mid A_j \subseteq A_i \cup \{i\}\}\). If \(V{\setminus } A_i\) contains other terminals, then \(A_i {\setminus } J\) is a minimal i-separator, and we can correspondingly strengthen the node-degree inequalities (11).

Finally, observe that for potential terminals \(i \in T_p\), the node-degree inequality can be lifted as follows:

2-Cycle inequalities Observe the following: if node \(i \in V\) is adjacent to a node \(j \in T_p\), so that \(c_{ij} < p_j\), then if i is part of the optimal solution, j has to be included as well, i.e.,

2.1 Separation of connectivity constraints

Whenever we want to cut off a fractional solution \(\tilde{y}\), we can separate the connectivity cuts (8) by applying a maximum flow algorithm. For each pair of distinct terminals (i, j) such that \(\tilde{y}_i + \tilde{y}_j - 1 > 0\), one would need to find a minimum (i, j)-cut in a support digraph D which is constructed as follows. First, each edge \(e \in E\) is replaced by two directed arcs. Then, each node \(v \in V{\setminus }\{i,j\}\) is replaced by an arc \((v',v'')\) whose capacity is defined as \(\tilde{y}_v\), all arcs entering v are now directed into \(v'\), and all arcs leaving v are now directed out of \(v''\). Capacities of these arcs are set to \(\infty \). Since all arcs except the node-arcs are of infinite capacity, the maximum (i, j)-flow returns the desired violated connectivity constraint.

According to our computational experience, however, the above procedure is rather time consuming (all terminal pairs need to be considered, and for each pair, the maximum flow is calculated). As there is always a certain trade-off between the quality of lower bounds obtained by separating fractional points and the associated computational effort, we refrain from the separation of fractional points in our default implementation.

Consequently, to ensure the validity of our branch-and-cut approach, we need to cut off infeasible integer points enumerated during the branching procedure (or detected by the heuristics of the MIP solver, given that the solver was not provided a complete information about the structure of the problem). Infeasible points are cut off by means of a LazyCutCallback in our setting based on the commercial MIP solver IBM ILOG CPLEX. For a given pair of distinct terminal nodes \(i,j \in T\) such that \(\tilde{y}_i = \tilde{y}_j = 1\), our separation procedure runs in linear time (with respect to |E|) and works as outlined below.

To derive our algorithm, we use the following well-known property of node separators (see, e.g., [18]):

Lemma 1

Let \(N \in \mathscr {N}(i,j)\) be an (i, j)-separator for \(i,j \in T\), \(i \ne j\), and let \(C_i\) and \(C_j\) be connected components of \(G - N\) such that \(i \in C_i\), \(j \in C_j\). Then N is a minimal (i, j) node separator iff every node in N is adjacent to at least one node in \(C_i\) and to at least one node in \(C_j\).

Let \(\tilde{y}\) be an integer solution, and let \(G_{\tilde{y}} = (V, E_{\tilde{y}})\) denote the support graph induced by \(\tilde{y}\), where

If \(\tilde{y}\) is infeasible, then \(G_{\tilde{y}}\) contains at least two connected components, say \(C_i\) and \(C_j\), with \(i\in C_i\), \(j\in C_j\), and \(\tilde{y}_i=\tilde{y}_j=1\). Let \(A(C_i)\) be the set of neighboring nodes of \(C_i\) in G, i.e., \(A(C_i) = \{ v \in V{\setminus } C_i \mid \exists \{u,v\} \in E, u \in C_i\}\). Clearly, \(\{i,j\} \not \in E\) and hence \(A(C_i) \in \mathscr {N}_i\). However, \(A(C_i)\) is not necessarily a minimal (i, j)-separator, and Algorithm 1 below describes how to derive a minimal separator starting from \(A(C_i)\).

In practice, set \(R_j\) can be found by just running a standard Breadth-First Search (BFS) on the original graph G, starting from node j, with the additional rule that nodes in \(A(C_i)\) are never put in the BFS queue.

Proposition 1

Algorithm 1 returns a minimal separator \(N \in \mathscr {N}(i,j)\) in time O(|E|).

Proof

By definition of N, i and j are not connected in \(G - N\). To see that N is a minimal (i, j)-separator, consider \(G - N\) and let \(C'_i\) and \(C'_j\) be two connected components, containing i and j, respectively. Clearly, \(C_i \subset C'_i\) and \(C'_j=R_j {\setminus } N\). Hence, by Lemma 1, it follows that N is minimal. \(\square \)

In case \(p>2\) connected components exist in \(G_{\tilde{y}}\) (each of them containing at least one terminal), one can repeat the procedure described in Algorithm 1 for each pair of distinct components \(C_i\) and \(C_j\).

We conclude this section by observing that, for the pure STP case with real terminals only, connectivity constraints translate into

where \(\mathscr {N}\) is the family of all separators between arbitrary real terminal pairs. Our model can therefore be interpreted as set covering problem with an exponential number of elements to be covered. As demonstrated by our computational experiments, this property can be exploited to derive specialized set covering heuristics for pure STP instances with uniform costs, with a significant performance boost.

3 Algorithmic framework

The proposed node-based model can be solved by means of a branch-and-cut (B&C) algorithm, to be initialized with a high-quality feasible solution and with a suitable set of relevant connectivity constraints.

Our initial MIP model, called the basic model in what follows, is made by (7), (9), (10), plus the node-degree inequalities (11) and the 2-cycle inequalities (12).

The overall algorithmic framework is shown in Algorithm 2. In an initialization phase, an initial solution pool \(\mathscr {S}_\mathrm {init}\) is generated by means of some problem-dependent heuristics. In a subsequent local branching phase, multiple calls of the B&C algorithm are used to explore the neighborhood of starting solutions chosen from the solution pool \(\mathscr {S}_\mathrm {init}\). All connectivity constraints separated during this phase are globally valid, hence they are stored in a global cut pool \( CutPool \), and added to the initial MIP model before each B&C re-execution. The incumbent solution (denoted by \( Sol \)) is updated correspondingly. A detailed description of the LocalBranching procedure and of its parameters is given in Sect. 3.1.

The local branching phase implements a multiple restart policy (with different seed values and a maximum number of iterations \( LBMaxRestarts \)), intended to gather relevant information about the problem at hand, namely good primal solutions and a relevant set of connectivity constraints; see e.g. [16] for a recent application of multiple restarts to MIPs with uncertainty. The availability of such information at the root node of each B&C re-execution turns out to be very important, as it triggers a more powerful preprocessing as well as the generation of a bunch of useful general-purpose cuts (in particular, \(\{0,1/2\}\)-cuts [3, 6]) based on the problem formulation explicitly available on input.

The algorithm terminates after proving the optimality, or after reaching the given time limit.

3.1 Local branching

Local branching (LB) has been proposed in [15] as a solution approach that uses the power of a general-purpose MIP solver as a black box to strategically explore promising solution subspaces. LB is in the spirit of large-neighborhood search metaheuristics, with the main difference that the underlying local search black-box algorithm is a MIP with a specific local branching constraint that restricts the search for an optimal solution within a certain neighborhood of a given reference solution.

The LB framework is built on top of our B&C solver. As already mentioned, our solver deals with two sets of inequalities: those in the basic model, that are always part of the model, and connectivity constraints (8) that are dynamically separated and stored in a global \( CutPool \) to be used in every subsequent B&C call.

Given a reference solution \( Sol \), let \(W_1 = \{ v\in V \mid v \in Sol \}\) and \(W_0 = V {\setminus } W_1\). The symmetric local branching constraint makes sure that the new solution is within a given radius r from the solution \( Sol \) with respect to the Hamming distance between the two solutions, i.e.

Alternatively, one may consider an asymmetric local branching constraint, requiring that the new solution contains at least \(| Sol | - r\) nodes from \( Sol \):

Notice that, for a fixed radius, the neighborhood of the asymmetric version is larger and leads to potentially better solutions—though it is more time consuming to explore. For example, for \(r= 0\) the asymmetric version is equivalent to fixing to 1 all the variables from \( Sol \), so that many feasible solutions are still available even in the 0-neighborhood around \( Sol \). After some preliminary tests, we decided to use the asymmetric LB constraint (13) in our implementation, with a small radius r ranging from 10 to 30. Working with a small radius is indeed crucial for the success of proximity methods such as LB, as recently pointed out in [17].

The LB framework is shown in Algorithm 3. Since the goal of the B&C in this context is to quickly find high-quality solutions, we do not necessarily search for an optimal solution within the given neighborhood, but we rather impose limits on the number of incumbent solutions found (\( LBSolLim \)), and a time limit per iteration (\( LBTimeLim \)).

The neighborhood is systematically explored by starting with an initial radius \(r_{min}\), and increasing it by \(r_{delta}\) each time the subproblem could not provide an improved solution. Each time an improved solution is found, the neighborhood radius is reset to \(r_{min}\). The whole framework is executed until a given number of iterations (\( LBMaxIter \)) is reached, or the radius exceeds \(r_{max}\). Note that the radius limit \(r_{max}\), in combination with a consistent increase of the current radius, implicitly imposes a limit on the overall number of iterations without any improvement.

3.2 Benders-like (set covering) heuristic

Local branching has a primal nature, in the sense that it produces a sequence of feasible solutions of improved cost. In addition, it needs a starting feasible solution, that in some cases can be time consuming to construct. As a matter of fact, for some very large/hard classes of instances we found that a dual approach is preferable, that produces a sequence of infeasible (typically, disconnected) solutions and tries to repair them to enforce feasibility. Algorithm 4 illustrates a general dual scheme that can be viewed as a heuristic version of the well-known Benders’ exact solution approach to general MIPs.

In our experiments, we found that the above approach works very well for uniform STP instances of very large size, for which the standard MIP approach seems not very appropriate as even the LP relaxation of the model takes an exceedingly large computing time to be solved. Our set-covering based heuristic is an implementation of Algorithm 4 for these hard instances, and is based on their set covering interpretation. Indeed, as already observed, the basic model turns out to be a compact set covering problem where columns correspond to Steiner nodes, rows to real terminals not adjacent to any other real terminal, and column j covers row i iff \(\{i,j\}\in E\). The approach can be outlined as follows.

At each iteration of the while loop, the relaxation to be heuristically solved is constructed through a procedure that automatically extracts a set covering relaxation from the current model. This is done by simply (1) projecting all fixed variables (including y variables for hard terminals) out of the model, and (2) skipping all constraints, if any, that are not of type \(y(S) \ge 1\) for some node set S.

We then proceed by heuristically solving the set covering relaxation through an implementation of the Caprara–Fischetti–Toth (CFT) heuristic [7, 8]. This is a very efficient set covering heuristic based on Lagrangian relaxation, that is specifically designed for hard/large instances.

Given a hopefully good set covering solution \( Sol ^R\), we repair it in a very aggressive way by introducing a local branching constraint in asymmetric form with radius \(r=0\), and then by applying our B&C solver (with its ad-hoc connectivity cut separation) with a short time/node limit. As already observed, this local branching constraint in fact corresponds to fixing \(y_j=1\) for all j such that \( Sol ^R_j=1\). As a result, the size/difficulty of the MIP model after fixing is greatly reduced, hence the node throughput of the B&C solver becomes acceptable even for large instances—while setting a larger radius would not result in a comparable speedup.

All violated connectivity cuts generated by the B&C procedure are added to \( CutPool \) and hence to the current model. This makes solution \( Sol ^R\) (if disconnected) infeasible even for the next set covering relaxation and thus the procedure can be iterated until an overall time/iteration limit is reached.

To improve diversification, our implementation uses the following two mechanisms:

-

the procedure that extracts the set covering model makes a random shuffle of the rows/columns, so as to affect in a random way the performance of the CFT heuristic;

-

before the repairing phase, we randomly skip (with a uniform probability of 20 %) some variable fixings, meaning that approximately 80 % of the variables \(y_j\)’s with \( Sol ^R_j=1\) are actually fixed.

As such, the performance of our final heuristic (though deterministic) is affected by the initial random seed, a property that can be very useful to produce different solutions in a multi-start scheme.

Finally, we observe that our current CFT implementation is sequential and cannot exploit multiple processors. We therefore decided to also run the refining B&C in single-thread mode, thus obtaining an overall sequential code that can be run in parallel and with different random seeds on each single core (in the multi-thread mode). The best found solution is finally returned.

This Benders-like heuristic is embedded in the overall algorithmic framework shown in Algorithm 2 as InitializationHeuristics for uniform STP instances on bipartite graphs with a large percentage of terminals. Indeed, these kinds of graphs are very regular and the basic model likely gives a reasonable approximation of the STP problem—in the sense that most connectivity constraints are automatically satisfied. In addition, the while-loop in Algorithm 2 that would apply standard local branching after the set-covering based heuristic is unlikely to be effective for these graphs, so we set \( LBMaxIter =0\) and skip it in this case.

We conclude this section by observing that the “relaxation” to be heuristically solved in the Benders-like scheme is not intended to produce valid dual bounds, as its purpose is to feed the refining procedure with good (possibly disconnected) solutions. As a matter of fact, one can think of the relaxation as a “blurred” version of the original problem, which retains some of its main features but is not bothered by too many details (namely, connectivity conditions) that would overload the model. Following this general idea, we implemented the following variant of our set-covering based heuristic, which is intended for non-uniform instances on bipartite graphs with a large percentage of terminals.

Given a non-uniform instance of the STP, it is transformed to a uniform instance by adapting its revenue and edge costs as follows: For each non-terminal \(v \in V {\setminus } T_r\), its node cost \(\tilde{c}_v\) is set to the average cost of its incident edges, i.e., \(\tilde{c}_v=\frac{1}{|\delta (v)|}\sum _{e\in \delta (v)} c_e\). Next, all edge costs and node revenues are set to zero. Intuitively, edge cost information is moved into node costs, so as to get a “blurred” uniform instance on the same underlying graph. Note that these modified costs/revenues are only used within the CFT heuristic, while the original ones are used in the refining phase.

3.3 Implementation details of the (x, y)-model

The model described in Sect. 1 has been implemented together with several algorithmic enhancements, which are detailed in the following paragraphs.

Initialization heuristic A pool of initial feasible solutions is constructed as follows. Several terminals are chosen as root nodes, for each of which a solution is calculated by applying the shortest path Steiner tree heuristic (see, e.g., [4]). In the PCSTP case, for a small number of iterations the set \(T_p \cup T_r\) is perturbed and subsets of terminals of different size are considered as fixed terminals \(T'\). Then for each chosen set \(T'\), the same construction heuristic as for the STP is applied. Each solution is also improved through a local search (see below).

For sparse, (almost) planar non-uniform instances of the STP, our framework computes an additional, enhanced initial solution by applying a parallel variant of the partitioning heuristic described in [21]. Based on a randomly chosen solution from the pool, the input graph is partitioned into a set of smaller subgraphs (containing terminals and their closest Steiner nodes). The STP is then solved to optimality (or with a small time limit) on each of these subgraphs independently. The obtained disconnected (and thus, infeasible) solution is then repaired by a shortest-path like heuristic, followed by a local search. When run in a multi-thread mode, solving the STP on each of the subgraphs is assigned to a single thread.

Primal heuristic We apply a few rounds of rounding on the set of y variables, with different threshold values. Rounded up variables again define a set of fixed terminals \(T'\) on which we run the shortest path Steiner tree heuristic (with a modified cost function that reflects the LP-values at the current branch-and-bound node) and prune Steiner leaves.

Local search heuristics Solutions obtained by the initialization or the primal heuristic are further improved by applying several local search procedures: key-path-exchange, (key-)node-removal and node-insertion. For further implementation details about these heuristics, see [25]. We only accept strictly improving moves, except for uniform and almost uniform instances, for which (due to existence of many symmetric solutions) all moves with non-increasing objective values are performed. An instance is considered as almost uniform if the absolute difference between the minimum and maximum edge weight is less than ten.

Additional valid inequalities In [22] the authors observe that the (x, y)-model for PCSTP contains a lot of symmetries, and propose to get rid of them by fixing the terminal node with the smallest index (among those taken in the solution) to be the root node. This is imposed by adding additional asymmetry constraints. The latter constraints added by [22] were given in a disaggregated form, whereas in our implementation we add their stronger, aggregated variant: \(\sum _{i > j} x_{ri} \le 1 - y_j\), for all \(j \in T\). We also add 2-cycle inequalities (12) to our starting model.

Separation algorithms For fractional solutions, connectivity constraints are separated by the calculation of the maximum flow (implemented through UserCutCallback of CPLEX). Algorithm 5 shows the separation procedure for the (x, y)-model. First, a small value \(\varepsilon \) is added to the fractional LP solution \(x^*\) of arc variables, which are used as capacities for the maximum flow algorithm. This approach ensures that the separated cuts are of minimum cardinality, and is known to reduce the number of required cutting plane iterations [19, 22]. Subsequently, the maximum flow is computed between the root and each potential terminal \(i \in T_p\). Among the two cut sets (\(S_r\) and \(S_i\), \(r \in S_r\), \(i \in S_i\)) returned by the maximum flow algorithm of [9], we choose the one closer to the terminal, i.e., \(S_i\) (cut sets closer to the root typically involve similar edges, and hence may imply many redundant cuts). A technique referred to as nested/orthogonal cuts [19, 23] is applied to generate more diverse cuts in each iteration. Whenever a violated cut is identified, the capacities of the associated arcs are set to one, which ensures that the intersection between violated cuts separated within the same cutting plane iteration is empty.

To further speed up separation, we skip the maximum flow calculation for terminals that are already reachable from the root in the support graph (node set W in the algorithm). We do not separate cuts recursively [22, 23], but instead separate at most one connectivity constraint for each terminal per iteration. Avoiding the separation of fractional solutions while branching and adding only one cut per terminal seemed to perform best on average in preliminary experiments.

For the PCSTP we only separate cuts between the root and those terminals i such that \(y_i \ge 0.5\) in the given fractional solution to separate. Furthermore, instead of adding a connectivity constraint (3) as in [22], we may replace the right-hand side with one if the revenue on the sink side of the cut is larger than the objective of the incumbent solution. This implies that in an optimal solution, at least one more node on the sink side has to be connected to the root.

Infeasible integer solutions are separated by searching for connected components in the support graph. For each subset S inducing a connected component of an infeasible solution, a connectivity constraint \(x(\delta ^-(S)) \ge y_i\) is added to the model, for \(i \in S\) with the highest revenue.

3.4 A unified solver and automatic parameter adjustments

To achieve the best performance over all different types of problem instances, we have implemented a unified solver that switches between the node-based model (in the following referred to as y-model) and the (x, y)-model presented in the introduction, depending on the instance properties. The overall algorithmic framework given in Algorithm 2 remains the same, with the main difference being the MIP model for the underlying B&C procedure. To this end, our solver contains a filter that analyzes the structure and costs of the input graph. According to these properties, the algorithm decides the actual MIP model, as well as the kind of initialization heuristics and preprocessing to apply. We note that in the proposed algorithm these rules are only used for the problem types STP, PCSTP and MWCS (which are transformed to their PCSTP representation). DC-STP and RPCSTP instances are solved solely by B&C on the (x, y)-model without any sophisticated settings. Table 1 shows all types of instance properties identified by the filter.

Table 2 lists general filter rules for model and parameter selection. By default, the y-model is applied to instances with uniform edge costs, while the (x, y)-model is applied to all others. For sparse, uniform graphs with a relatively low number of terminals, we switch to the (x, y)-model, as preliminary experiments have shown that the y-model is less efficient for this specific class. For the y-model, the MIP at the root node is restarted two times in order to generate more general purpose cutting planes, since the cutting plane generation procedures of CPLEX for such cuts are triggered again after a restart and produce additional cuts.

For the (x, y)-model a tailing-off bound is specified, which defines the allowed ratio between the lower bounds of two consecutive B&C iterations in the root node. In addition, a tailing-off tolerance value is defined, which specifies the number of times this bound is allowed to be violated in a row. If the number of violations exceeds the specified tolerance-parameter, the algorithm switches from separation of fractional solutions to branching. The two parameters are chosen based on graph density. Instances are roughly divided into two classes, sparse (\(|E|/|V| \le 3\)) and dense (\(|E|/|V| > 3\)) graphs. The tailing-off bound is only activated for dense graphs, while for sparse graphs we avoid branching as long as possible. In addition, we identify very dense (\(|E|/|V| > 5\)) instances, in which we set a lower tailing-off tolerance than in the regular case.

Table 3 lists filter rules that select problem-specific settings and algorithms for STP instances. Given that the (x, y)-model has been selected by the filter, the model may be initialized by a set of connectivity cuts generated by a dual ascent algorithm [27], and with a starting solution generated by a parallel implementation of a partitioning heuristic (see [21]). Based on the terminal ratio, the dual ascent connectivity cuts are either added at the beginning of the B&C or separated. Similarly, flow-balance constraints and generalized subtour elimination constraints (GSEC) of size two may be chosen to be separated if the graph is large, sparse and has a low terminal ratio. Dense STP instances are instead preprocessed by using the special distance test [13].

Table 4 lists additional rules for the selection of initialization heuristics. In the case of bipartite graphs, the set-covering heuristic is applied for both uniform and non-uniform instances to generate high-quality starting solutions. For non-uniform instances, each node v is assigned the weight \(\frac{1}{|\delta (v)|}\sum _{e \in \delta (v)} c_e\) (“blurred” set-covering heuristic). We note that also in the case of PCSTP instances we choose to apply the blurred set-covering heuristic for the large non-uniform instances. A significant slowdown of the (x, y)-model has been observed for larger instances, at which point the blurred set-covering heuristic performs better. By default, the following settings are also applied when executing local branching: The primal heuristic is executed in every branch-and-bound node for the (x, y)-model and in each 1000 nodes for the y-model. The GSECs of size two in the (x, y)-model are separated and stored in the cut pool. The local branching time limit per iteration is set to 120 s. The neighborhood radius is initialized with 10 and increased by 10 per iteration up to a maximum of 30.

4 Computational results

Our algorithms are applied to the following problems from the DIMACS challenge: STP, (rooted) PCSTP, MWCS, and also degree-constrained STP (DCSTP). We begin by summarizing the results obtained on a set of hard (unsolved) cases of the SteinLib [20] instance library, and on a set of non-trivial MWCS instances posted on the DIMACS challenge website. The performance of the y and (x, y)-model is compared given a 2-h time limit (based on a single run per instance with fixed seed value). The filter from Sect. 3.4 is deactivated during these runs, to enable a bare-bone comparison between the models. Since on some of the tested datasets erratic performance could be observed during preliminary experiments, for these cases ten independent runs with different seeds have been performed, and the best and average running times are reported.

Detailed computational results, covering all nontrivial instances from the challenge are provided in the Appendix, ESM. Among these nontrivial instances, we distinguish between easy and difficult ones. For this purpose, we first run all considered instances using a pure B&C approach, for which the set-covering heuristic and local branching are deactivated. The filter rules listed in Tables 2 and 3 are applied and a time limit of 1 h (with a fixed seed value) is imposed. All instances that remained unsolved by this approach are considered as difficult.

Our heuristic framework (consisting of the initialization and local branching phase, see Algorithm 2) is then applied to all difficult instances with a time limit of 1 h (ten independent runs with different seeds).

The experiments were performed on a cluster of computers, each consisting of 20 cores (Intel E5-2670v2 2.5 GHz) and with 64GB RAM available for the 20 cores. Reported computing times are in wall-clock seconds. To limit the overall time needed to complete our experiments, we decided to allow up to five simultaneous 4-core runs on the same computer, which however implies a significant slowdown due to shared memory.

All algorithms have been implemented in C++ and compiled with GCC 4.9.2. For data structures we used OGDF [10, 24] and the dtree library [14]. CPLEX 12.6 was used as MIP-solver with an imposed memory limit of 12GB RAM.

4.1 Results for uniform STP instances GAPS and SP

For the subgroup “skutella” (s3–s5) of the artificially generated uniform instance set GAPS for the STP, LP-gaps of the standard connectivity-based (x, y)-model are large. Standard MIP approaches for these instances have difficulties in closing the integrality gap. Table 5 reports our results obtained on instances s1 to s5 from GAPS, and clearly demonstrates the power of our y-model.

Additionally, for two previously unsolved instances from the set SP (with uniform edge costs as well), namely w13c29 and w23c23, we provide optimal values. For these two latter instances, both models were able to prove the optimality, with significant speed-ups achieved by the y-model. Table 6 reports results using ten different seeds. The y-model outperforms the (x, y)-model with respect to the best and the average running times for both instances, the average speed-up ranging between one and two orders of magnitude. For both models, the running times vary greatly depending on the chosen seed value. This is due to the fact that solving the instance to optimality is highly dependent on finding an optimal solution.

4.2 Results for MWCS instances

The MWCS can be transformed into the PCSTP with uniform edge costs (see [2]). We have tested both y- and (x, y)-model on the MWCS dataset, and the obtained computational results (for the most challenging instances) are reported in Table 7 for ten different seed values. Results on the JMPALMK dataset are available in the Appendix, ESM—these instances have all been solved within 1 s.

The results show that the y-model outperforms the (x, y)-model on all instances with relatively dense graphs by roughly an order of magnitude. In contrast, on the sparser metabol_expr_mice instances, the performance is extremely erratic, ranging from one second to over an hour, while the very same instances are always solved to optimality within a few seconds by the (x, y)-model. On instance metabol_expr_mice_1, the y-model fails to prove optimality within the given time limit in two out of ten runs. A closer inspection of the test run data shows that for this instance the y-model enumerates on average more than two million branch-and-bound nodes, while the optimal solution is usually found within the first hour of computation. Additional test runs have been performed with an optimal solution provided from the start, however the runtime did not decrease significantly. A likely explanation is thus that at least for this instance the bounds provided by the y-model are simply too weak to be competitive to the (x, y)-model.

4.3 Results for STP and PCSTP instances

The pure B&C manages to solve most STP instances from the SteinLib and PCSTP instances from the DIMACS challenge website to optimality within an hour. A complete list of all results is available in the Appendix, ESM. As an example and to illustrate the limits of the pure B&C, we report results on instances solved to optimality from the PUC dataset in Table 8. PUC remains one of the hardest dataset for the STP, with many unsolved instances that are immune to methods effective on other types of instances, e.g., reduction tests. The results show that the pure B&C only manages to solve twelve out of 50 PUC instances. However, note that to the best of our knowledge instance cc6-3u has not been solved to optimality prior to the challenge.

For the remaining, more difficult instances, Tables 9, 10, 11, 12, 13, 14 list the most important results after applying the set-covering heuristic or local branching. These datasets have been selected from the set of instances unsolved after 1 h by B&C (detailed results are available in the Appendix, ESM). Each run has been computed by starting the algorithm with ten different seeds, each with 1 h time limit. The choice between the local branching and set-covering heuristic is performed by the filter as described in Sect. 3.4. The final B&C step of the framework is always skipped.

Each table is structured as follows: the first four columns list the instance name, the number of nodes, edges and terminals. The next pair of columns (BEST) shows objective value and time of computation for the best found solution. The following pairs (AVG and STD) list the average and standard deviation of these two values over all ten runs. For tests on previously known STP datasets (PUC, I640) the column ‘Impr.’ lists the improvement w.r.t. the best known published solution (by August 1st, 2014) according to the DIMACS challenge website [11]. In all other cases, the improvement is given w.r.t. the best primal solutions produced during the exact runs after 1 h. As is to be expected, given the same time limit, the heuristic procedures manage to outperform the pure B&C with respect to the computed upper bounds. The comparison on notoriously difficult instances for the STP (PUC and I640, Tables 9, 10) particularly emphasizes the heuristics’ effectiveness, as several of the previously known upper bounds could be improved. The most noticeable and consistent improvement can be observed for the hypercube instances, to which the set-covering heuristic has been applied.

Note that the tables report the results obtained within the time limit of 1 h only. By extending the time limit, and/or by using more than four threads in parallel, the obtained values can further be improved. For example, for the most difficult ones we obtain:Footnote 1

Problem | Instance | Best UB | Previous UB | Time (s) | #Threads |

|---|---|---|---|---|---|

STP | hc11u | 1144 | 1154 | 474 | 8 |

STP | hc12u | 2256 | 2275 | 4817 | 8 |

STP | hc12p | 236,158 | 236,949 | 4411 | 4 |

PCSTP | hc12u2 | 1492 | – | 632 | 16 |

Problem | Instance | OPT | Time (s) | #Threads | #Memory (GB) |

|---|---|---|---|---|---|

STP | i640-313 | 35,535 | 16,012 | 20 | 32 |

PCSTP | i640-313 | 32,401 | 15,358 | 20 | 32 |

Furthermore, we were able to prove optimality for the following difficult instances, where according to the SteinLib website [20] the instances i640-313 has previously been unsolved (for the PCSTP version of i640-313, we report similar performance):

5 Conclusions

We have presented a simple model for the Steiner tree problem, involving only node variables. Besides drastically reducing the number of the required variables, the removal of edge variables avoids a number of issues related to equivalent (possibly symmetrical) trees spanning a same node set. In this view, we are “thinning out” the usual edge-based model with the aim of getting a more agile framework. Our model is mainly intended for instances with uniform edge costs, but one could use it to derive a heuristic for tackling the general case (left for future studies). Computational results show that our approach can dramatically improve the performance of an exact solver, and in some cases converts very hard problems into trivial-to-solve ones. At the recent DIMACS challenge on Steiner trees (see [12]), the proposed algorithmic framework, which switches intelligently between the model involving only node-variables and a second model that uses both node and edge variables, won most of the categories of both, exact and heuristic challenge, for the STP, PCSTP, MWCS and DCSTP.

Notes

For instance hc12u2, no upper bounds have been reported before the challenge.

References

11th DIMACS Implementation Challenge in Collaboration with ICERM: Steiner tree problems (2014). http://dimacs11.zib.de/home.html. Accessed 20 Sept 2016

Álvarez-Miranda, E., Ljubić, I., Mutzel, P.: The maximum weight connected subgraph problem. In: Facets of Combinatorial Optimization: Festschrift for Martin Grötschel, pp. 245–270. Springer, Berlin (2013)

Andreello, G., Caprara, A., Fischetti, M.: Embedding \(\{\)0, 1/2\(\}\)-cuts in a branch-and-cut framework: a computational study. INFORMS J. Comput. 19(2), 229–238 (2007)

de Aragão, M.P., Werneck, R.F.F.: On the implementation of MST-based heuristics for the Steiner problem in graphs. In: Mount, D.M., Stein, C. (eds.) ALENEX. Lecture Notes in Computer Science, vol. 2409, pp. 1–15. Springer, London (2002)

Backes, C., Rurainski, A., Klau, G.W., Müller, O., Stöckel, D., Gerasch, A., Küntzer, J., Maisel, D., Ludwig, N., Hein, M., Keller, A., Burtscher, H., Kaufmann, M., Meese, E., Lenhof, H.P.: An integer linear programming approach for finding deregulated subgraphs in regulatory networks. Nucleic Acids Res. 40, 1–13 (2012)

Caprara, A., Fischetti, M.: \(\{\)0, 1/2\(\}\)-Chvátal-Gomory cuts. Math. Program. 74(3), 221–235 (1996)

Caprara, A., Fischetti, M., Toth, P.: A heuristic algorithm for the set covering problem. In: Integer Programming and Combinatorial Optimization, pp. 72–84. Springer, Berlin Heidelberg (1996)

Caprara, A., Fischetti, M., Toth, P.: A heuristic method for the set covering problem. Oper. Res. 47(5), 730–743 (1999)

Cherkassky, B.V., Goldberg, A.V.: On implementing push-relabel method for the maximum flow problem. Algorithmica. 19, 390–410 (1997)

Chimani, M., Gutwenger, C., Jünger, M., Klau, G.W., Klein, K., Mutzel, P.: The open graph drawing framework (OGDF). In: Tamassia, R. (ed.) Handbook of Graph Drawing and Visualization, Chapter 17. CRC Press, Boca Raton (2014)

Best bounds as of August 1, 2014 for SteinLib instances (2014). http://dimacs11.zib.de/instances/bounds20140801.txt. Accessed 20 Sept 2016

Results of the 11th DIMACS Competition on Steiner Tree Problems (2015). http://dimacs11.zib.de/contest/results/results.html. Accessed 20 Sept 2016

Duin, C.: Preprocessing the Steiner problem in graphs. In: Du, D.Z., Smith, J., Rubinstein, J. (eds.) Advances in Steiner Trees, Combinatorial Optimization, vol. 6, pp. 175–233. Springer, US (2000)

Eisenstat, D.: dtree: dynamic trees à la carte (2014). http://www.davideisenstat.com/dtree/. Accessed 20 Sept 2016

Fischetti, M., Lodi, A.: Local branching. Math. Progr. 98(1–3), 23–47 (2003)

Fischetti, M., Monaci, M.: Cutting plane versus compact formulations for uncertain (integer) linear programs. Math. Progr. Comput. 4(3), 239–273 (2012)

Fischetti, M., Monaci, M.: Proximity search for 0–1 mixed-integer convex programming. J. Heuristics 20(6), 709–731 (2014)

Golumbic, M.C.: Algorithmic Graph Theory and Perfect Graphs (Annals of Discrete Mathematics), vol. 57. North-Holland Publishing Co., Amsterdam (2004)

Koch, T., Martin, A.: Solving Steiner tree problems in graphs to optimality. Networks 32(3), 207–232 (1998)

Koch, T., Martin, A., Voß, S.: SteinLib: an updated library on Steiner tree problems in graphs. Tech. Rep. ZIB-Report 00-37, Konrad-Zuse-Zentrum für Informationstechnik Berlin, Takustr. 7, Berlin (2000). http://elib.zib.de/steinlib

Leitner, M., Ljubić, I., Luipersbeck, M., Resch, M.: A partition-based heuristic for the Steiner tree problem in large graphs. In: Blesa, M.J., Blum, C., Voß, S. (eds.) Hybrid Metaheuristics—Proceedings. Lecture Notes in Computer Science, vol. 8457, pp. 56–70. Springer, UK (2014)

Ljubić, I., Weiskircher, R., Pferschy, U., Klau, G.W., Mutzel, P., Fischetti, M.: An algorithmic framework for the exact solution of the prize-collecting Steiner tree problem. Math. Program. 105(2–3), 427–449 (2006)

Lucena, A., Resende, M.G.: Strong lower bounds for the prize collecting Steiner problem in graphs. Discrete Appl. Math. 141(1), 277–294 (2004)

OGDF: The Open Graph Drawing Framework (2015). http://www.ogdf.net/. Accessed 20 Sept 2016

Uchoa, E., Werneck, R.F.: Fast local search for Steiner trees in graphs. In: Blelloch, G.E., Halperin, D. (eds.) ALENEX’10 Proceedings of the Meeting on Algorithm Engineering & Expermiments, pp. 1–10. SIAM (2010)

Wang, Y., Buchanan, A., Butenko, S.: On imposing connectivity constraints in integer programs (2015). Submitted. Available at http://www.optimization-online.org/DB_HTML/2015/02/4768.html

Wong, R.T.: A dual ascent approach for Steiner tree problems on a directed graph. Math. Program. 28(3), 271–287 (1984)

Acknowledgments

Open access funding provided by University of Vienna.

Author information

Authors and Affiliations

Corresponding author

Additional information

The research of the Padova’s group was supported by the University of Padova (Progetto di Ateneo “Exploiting randomness in Mixed Integer Linear Programming”), and by MiUR, Italy (PRIN project “Mixed-Integer Nonlinear Optimization: Approaches and Applications”). The research of the Vienna’s group was supported by the Austrian Research Fund (FWF, Projects P 26755-N19 and I 892-N23). The work of M. Sinnl was supported by an STSM Grant from COST Action TD1207; the authors would like to acknowledge networking support by the same COST action. M. Luipersbeck acknowledges the support of the University of Vienna through the uni:docs fellowship programme.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Fischetti, M., Leitner, M., Ljubić, I. et al. Thinning out Steiner trees: a node-based model for uniform edge costs. Math. Prog. Comp. 9, 203–229 (2017). https://doi.org/10.1007/s12532-016-0111-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12532-016-0111-0