Abstract

Differential evolution (DE) is one of the highly acknowledged population-based optimization algorithms due to its simplicity, user-friendliness, resilience, and capacity to solve problems. DE has grown steadily since its beginnings due to its ability to solve various issues in academics and industry. Different mutation techniques and parameter choices influence DE's exploration and exploitation capabilities, motivating academics to continue working on DE. This survey aims to depict DE's recent developments concerning parameter adaptations, parameter settings and mutation strategies, hybridizations, and multi-objective variants in the last twelve years. It also summarizes the problems solved in image processing by DE and its variants.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Optimization is a procedure of finding the best possible decision variables' values under the given set of constraints and a selected optimization objective function. Applying the optimization procedure minimizes the total cost or maximizes the possible reliability or any other specific objective. Optimization problems are commonly found in science & engineering, industry, and business decision-making, which are solved by implementing the proper optimization approach. Almost every real-world optimization problem is fundamentally and practically challenging. Therefore, continuing research in this field is required as the best possible solution can be guaranteed only by using an appropriate optimization approach. Nature inspires researchers as it helps to model and solve complex computational problems. That is why researchers have been looking after it for several years. According to Darwin's theory, every species had to reconstruct its physical structure to survive in changing natural conditions. The relationship between optimization and biological evolution paved the way for developing evolutionary computing techniques for performing complex searches and optimization. It was around the 1950s when the idea of using Darwin's theory for solving automated problems originated. Lawrence J. Fogel introduced Evolutionary programming (EP) [1] in the USA; in Germany, Rechenberg and Schwefel introduced Evolution strategies (ES) [2] before the 1960s. At the University of Michigan, John Henry Holland proposed an independent method replicating Darwin's evolution theory to solve practical optimization problems called Genetic Algorithm (GA) [3], which was almost a decade later. In the early 1990s, the idea of Genetic Programming (GP) [4] emerged. Figure 1 depicts the classification of meta-heuristic algorithms.

In evolutionary computation, updating the population occurs through iterative progress. Selecting the population with a guided random search and using parallel processing desired result can be achieved. DE has a specified beginning with the genetic annealing algorithm developed by Kenneth Price and published in October 1994 in Dr. Dobb's Journal (DDJ), a renowned programmer's magazine. Genetic Annealing is a population-based, combinatorial optimization algorithm that uses thresholds to implement annealing criteria. Later, the same author discovered a differential mutation operator, which is the base of DE. At present, in the area of nature-inspired metaheuristics, evolutionary algorithms (e.g., EP, ES, GA, GP, DE, etc.) and swarm intelligence algorithms (e.g., monkey search [5], bee colony optimization [6], cuckoo search [7], firefly algorithm [8], wolf search [9], whale optimization algorithm [10], etc.) are used. In the broader field, it includes the artificial immune system [11], memetic and cultural algorithms [12], harmony search [13], etc.

Differential evolution (DE), [14] first suggested by Storn and Price in 1995, is a straightforward and effective evolutionary technique utilized in a continuous domain to address global optimization issues. Due to DE's adaptability and effectiveness, numerous modified variants have been developed. The most effective and adaptable evolutionary computing strategies have been DE and its several versions up until that point. The number of publications referencing the best original piece indicates a rise in curiosity in DE. It's effectively addressed various real-world issues from different scientific and technological fields. The DE varieties have consistently placed first in EA competitions run by the IEEE Congress on Evolutionary Computation (CEC) conference series. The CEC competitions on a single objective, large-scale, multi-objective, constrained, dynamic, and multimodal optimization problems have never had a particular search algorithm secure a place in all of them, excluding DE.

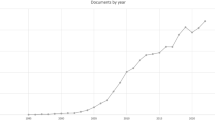

To present a broader perspective regarding the advances, relevance, and impact of the basic DE algorithm, Fig. 2 shows scatter plots of citations of the basic DE articles from 1995 to now. Therefore, it is evident from the graph that researchers' interest in DE has been growing from its inception. As illustrated in Fig. 2, the first publication that used the term DE appeared in the literature in 1995. Considering the citation structure of the various research area of the DE-related journal publications, there’s been an exponential growth in the citation count over the years. The highest citations of 17,370 were received in the year 1997. We provide in Fig. 3 the networked-based visualization of the publication and citation analysis structure of the article among authors covering the main discussion of the DE algorithm and its variants over the last 46 years to give a better understanding of the exponential growth in the research interest and applicability of the DE algorithm. There are eight clusters in the visualization. The red color cluster has Storn and Price [14] as the prominent node, implying that other authors in this cluster, such as Noman and Iba [15], Mohanty et al. [16], Das et al. [17], Rahnamayan and Tizhoosh [18], and Tanabe and Fukunaga [19] have cited more papers on DE from Storn and Price [14]. Another prominent cluster is the blue color containing Das et al., implying that other authors like Ter Braak [20] have cited more papers on DE than the aforementioned author had published.

Choosing a uniformly random set of the population sampled from the feasible search volume, every iteration (known as generation according to the terminology of evolutionary computing) of DE executes the same computational steps as followed by a standard Evolutionary Algorithm (EA). However, like genetic algorithm, it never uses binary encoding and does not use the probability density function to self-adapt its parameters like Evolution Strategy. DE executes mutation in the algorithm upon the distribution of the solutions in the current population. Therefore, the direction of search and possible step sizes depends on the randomly selected individuals' location to calculate the mutation values. DE has four phases: the initialization of vectors, difference vector-based mutation, crossover, and selection. Once the vector population is initialized, iteratively repeat the other three steps as long as a specific condition is met. Figure 4 shows the main steps of the DE algorithm.

According to practical experience, the most appropriate mutation strategies and DE parameters are generally different when applied to various optimization problems. This happens because DE's exploitation and exploration capabilities vary with varying mutation and parameter tuning techniques. It is time-consuming and computationally expensive to configure methods and parameters by trial and error approach. Researchers devised several techniques for intelligent mutation strategy ensemble and automated parameter adaptation to overcome this challenge. For example, a self-adaptive DE algorithm (SaDE) was proposed by Qin et al. [21], whereas; Mallipeddiet al. [22] proposed another DE variant called EPSDE by using a self-adaptive parameter tuning approach. Though they both performed excellently, the SaDE could not find an optimal solution for the high modality composite function due to poor local searchability, which made the SaDE converge prematurely. Similarly, EPSDE provided slight improvement and was only compared to JADE.

Similarly, several efficient adaptive DE variants have been presented during the last few decades. These DE variants have their respective advantages in dealing with different optimization problems. The idea of mixing EAs with other EAs or with some other techniques (hybridization) is to use the benefits of two or more algorithms for better results. Multi-objective variants of DE were also developed to solve optimization problems with multiple objectives. Various survey articles [23,24,25,26] were published on DE during the time. Eltaein et al. [27] presented a survey of DE incorporating the variants developed with adaptive, self-adaptive, and hybrid techniques. Pant et al. [28] surveyed DE regarding its update since initiation using numerous strategies like change in population initialization, modified mutation and crossover scheme, altering parameters, hybridization, and its discrete variants. The authors have also reviewed the DE variants developed to solve various problems in science and engineering. Georgioudakis et al. [29] studied the performance of DE variants like CODE, jDE, JADE, and SaDE in handling constrained structural optimization issues linked to truss structures.

An exception from all the studies mentioned above, this article only includes the recent efficient variants of DE, including multi-criteria type variants from 2009 to 2020. To our knowledge, none of the above literature multi-objective variants of DE were studied. In the application survey part, we have only studied the DE variants developed for solving image processing problems which might be useful for the researchers working on this application domain. To achieve the study objective, the systematic literature review procedure was used as a guide, and carefully selected keywords were used to search and retrieve relevant pieces of literature. The following keywords or noun phrases were used to search the Web of Science (WoS), Scopus repository, and websites listed in Table 1 for articles published in reputable peer-review journals, edited books, and conference proceedings: differential evolution, DE, variants of DE algorithm, a survey of DE. The choice of academic database is influenced by high-quality articles published in SCI-indexed journals and ranked international conferences.

The year of publication is between 2009 and 2020. During each search, articles retrieved were perused to collect more related articles from their citations. The inclusion and exclusion criteria were then applied to the collected articles to ensure that only the ones that fit the study objective were selected. The inclusion criteria are only recent efficient variants of DE, including multi-criteria type variants from 2009 to 2020, multi-objective variants of DE, and DE variants developed for image processing problems. Everything else was excluded.

The paper is structured as follows: The basic DE and its popular mutation strategies are described in Sect. 2. DE variants proposed with modifications in parameter settings and the mutation strategies and hybridization techniques are presented in Sect. 3. Section 4 contains a collection of multi-objective DE variants with brief descriptions. Section 5 summarizes the DE variants developed to solve image processing problems. Section 6 presents potential future direction for DE, and finally, Sect. 7 concludes the study.

2 Basics of Differential Evolution Algorithm

Storn and Price [14] proposed the DE method, a subset of evolutionary computation, to address optimization issues in continuous domains. Every variable's expression in DE is a real number. DE employs mutagenesis for exploring, while selection is used to focus the approach on the likely locations within the possible area. Initialization, mutation, recombination or crossover, and selection are the four essential processes in the conventional DE algorithm. Until the termination requirement (such as the expiration of the maximum functional evaluations) is met, the final three phases of DE run in a loop.

2.1 Decision Vector Initialization

DE looks for the best global point in a D-dimensional real decision variable region. This then starts with a population of NP vectors with legitimate variables that are randomly begun. The multi-dimensional optimization problem has several candidate solutions, each of which is a vector known as a genome or chromosome. In DE, generations are indicated by \({G}_{n}=\mathrm{0,1},2,\dots .,{G}_{max}.\)

We may employ the following notation to denote the kth vector of the group in the latest generation because the parameter(s) used to identify the vectors are likely to differ throughout various generations \({(G}_{n})\):

A defined range may be provided for each decision variable of a specific problem, and the decision variable's value should be limited. Decision variables are typically assessed using natural boundaries or connected to physical elements (for example, if one decision variable is a length or mass, we would want it not to be negative). By consistently randomizing solutions inside this problem space bounded by the recommended lower and higher bounds, the initial population (at \({G}_{n}\)= 0) should encompass this region as broadly feasible: \({X}_{lo\omega }=\left\{{x}_{1,low}, {x}_{2,low},\dots ..{x}_{D,low }\right\}\) and \({X}_{hi}=\left\{{x}_{1,hi}, {x}_{2,hi},\dots ..{x}_{D,hi}\right\}\). Hence, we may initialize the \(j\)th component of the \(k\)th decision vector as:

where \(rn{d}_{j}^{k}\left[0, 1\right]\) is a uniformly distributed random number lying between 0 and 1 (actually 0 ≤ \(rn{d}_{j}^{k}\left[0, 1\right]\)≤ 1) and is instantiated independently for each component of the \(k\)th vector.

2.2 Mutation with Difference Vectors

A "mutation" in biology is a sudden alteration to a chromosome's gene composition. The mutation is viewed as a modification or alteration with a randomized component in the evolutionary computing concept. A parent vector from the most recent generation is referred to as the target vector in the DE concept. Donor vectors are mutant vectors created during the mutation operation. The term "trial vector" refers to a descendant created by recombining the donor and the target vector. In a traditional DE-mutation, three more different parameter vectors, for instance, \({p}_{r{nd}_{1}^{\left(l\right)}}, {p}_{r{nd}_{2}^{\left(m\right)}}, {p}_{r{nd}_{3}^{\left(n\right)}}\) are used to construct the donor vector for each kth target vector from the existing population. The indices \(r{nd}_{1}^{\left(l\right)}, r{nd}_{2}^{\left(m\right)}, r{nd}_{3}^{\left(n\right)}\) are mutually exclusive integers randomly chosen from the range \([1, NP]\); all these three solutions are different from the target vector \(k\). For every mutant vector generation, these indices are produced at random once. The difference between second and third solution vectors is scaled by a scalar number \(F\) (its value generally lies within the interval \([0.4, 1]\)). The parameter \(F\) is described as the scaling factor or scale factor, and the scaled difference is added to the first solution vector to obtain the donor vector \({p{^{\prime}}}_{Gn}^{\left(k\right)}\). A conceptual representation of the DE mutation phase is shown in Figs. 5 . The procedure can be expressed mathematically as:

2.3 Crossover

Crossover is how the donor vector components and the target vector are mixed to bring diversity to the population. The donor vector tries to replace its components with the target vector \({P}_{Gn}^{\left(k\right)}\) and forms the trial vector. For trial vector generation purposes, DE uses two crossover methods: (i) Binomial crossover and (ii) Exponential crossover. In an exponential crossover, an integer n is chosen randomly among the numbers \([1, D]\). The value of this integer \(n\) acts as a starting point in the target vector; from that point, the crossover or exchange of components with the donor vector starts. Another integer \({L}_{n}\) is chosen within the interval \([1, D]\). \({L}_{n}\) signifies the donor vector will contribute the number of components to the target vector. Now, having the value of \(n\) and \({L}_{n}\) trial vector can be obtained as

where the angular brackets denote a modulo function with modulus \(D\). The integer \({L}_{n}\) is drawn from \([1, D]\) according to the following pseudo-code:

"\(Cros\)" is called the crossover rate, and it is also a control parameter of DE. A new set of \(n\) and \({L}_{n}\) must be chosen randomly for each donor vector. A conceptual representation of the DE crossover phase is shown in Fig. 6.

On the other hand, in a binomial crossover, whenever a randomly generated number between 0 and 1 is less than or equal to the \(Cros\) value, the donor vector value is selected as a trial vector. Otherwise, it remains the same. This operation is performed in each of the variables of \(D\) dimensions. In this method, the donor vector's contribution to the trial vector has (nearly) a binomial distribution. The process may be outlined as follows:

where \(r{nd}_{k,j}\left[0, 1\right]\) is a uniformly distributed random number, \({j}_{rnd}\) belonging to \([1, D]\) is a randomly chosen index; it ensures that \({v}_{j,Gn}^{\left(k\right)}\) gets at least one component from \({p}_{j,Gn}^{\left(k\right)}.\)

2.4 Selection

After the generation of trial vectors by the crossover process, it is determined by the selection process whether a trial vector or a target vector will act as a target vector for the next generation \(Gn+1\). The selection process can be written as follows:

where \(f(x)\) is the objective function that needs to be minimized. Therefore, if the new trial vector produces an equal or lower value of the objective function, the corresponding target vector is replaced in the next iteration; otherwise, the target vector is retained in the next generation population. Hence, the population vector gets a better value in every iteration (considering the minimization problem); i.e., the value never depreciates (Fig. 7).

2.5 DE Algorithm

Step1: Fix the values of crossover rate \((cros)\), scale factor \((F)\), and population size \((NP)\) as input from the user.

Step2: Initialize the value of generation \({(G}_{n})\) to 0 and also initialize a random population of\(NP\). Individuals \({P}_{Gn}=\{{p}_{1,Gn} , {p}_{2,Gn},{p}_{3,Gn},\dots ,{p}_{np,Gn}\}\), for every\({P}_{Gn}^{\left(k\right)}=\{{p}_{1,Gn}^{\left(k\right)} , {p}_{2,Gn}^{\left(k\right)} , \dots ,{p}_{D,Gn}^{\left(k\right)}\}\), every individual is uniformly dispersed within the range {\({P}_{lo\omega }\),\({P}_{hi}\)}, where \({P}_{lo\omega }=\{\)p1,low, p2,low, …, pD,low} and \({P}_{hi }=\{{p}_{1,hi} , {p}_{2,hi},\dots ,{p}_{D,hi}\}\), the value of \(k\) lies between \([1, NP]\).

Step3: While the given condition is true.

2.6 Mutation Strategies of DE

DE is a simple but efficient evolutionary algorithm used in a continuous domain to solve global optimization problems. According to practical experience, the most appropriate mutation strategies and DE parameters are generally different when applied to different optimization problems. This happens because DE's exploitation and exploration capabilities vary with different mutation strategies and parameter tuning. Based on this experience and after continuous work, several mutation strategies have been proposed by researchers. At generation G, the kth vector can be written as:

The most popular mutation strategies of DE are:

In the above equations,\(r{nd}_{1}^{l}\),\(r{nd}_{2}^{m}\),\(r{nd}_{3}^{n}\), \(r{nd}_{4}^{o}\), \(r{nd}_{5}^{q}\) are mutually exclusive real numbers selected randomly between 1 to\(NP\). They have a different value from\({p}^{\left(k\right)}\). "\(rnd\)" signifies random, and "\(bml\)" is used to mention that the calculation method used for the trial vector generation is binary. \(F\) and \({p}_{best,Gn}\) are the scaling factor and best value in the present population, respectively. \({p}_{pbest,Gn}\) is the random best individual among the best \(p\) solutions.

2.7 Advantages and Disadvantages of DE

A trustworthy and beneficial global optimizer, DE is a population-based evolutionary algorithm. DE produces offspring by disturbing the solutions with a scaled difference between two randomly chosen individuals from the population, which is different from other evolutionary algorithms. The parent solution is replaced by the sibling only if the sibling is better than its parent. Basic DE is a reasonably straightforward algorithm that can be implemented with just a few lines of code in any common programming language, unlike many other evolutionary computation techniques. Additionally, the scaling factor, crossover rate, and population size are the only control factors needed for the canonical DE, which makes it simple for practitioners to utilize [30]. No other search methodology has been able to obtain a competitive position in essentially all the CEC contests on a single objective, limited, dynamic, large-scale, multi-objective, and multimodal optimization problems. The inferred self-adaptation confined in the algorithm's structure is the cause of DE's remarkable success [23]. An optimization algorithm must be explorative in its early stages since solutions are dispersed throughout the search space. Exploitation around the discovered potential solution is also necessary for the optimization process. DE is heavily explorative early in the process before progressively transitioning to exploitation. Due to this, DE may typically exit the local minima with a reasonable rate of convergence. Regarding the search features of an evolutionary algorithm, DE is a well-balanced algorithm.

DE has certain drawbacks despite these benefits. Liu et al. [31] opined that if the siblings generated in a few iterations are worse in fitness than their parent solutions, it has become impossible for DE to get out of that situation, leading the algorithm to stagnation. If prospective solutions are not found after a few exploratory steps, it is possible to say that the search process is seriously negotiated. The population size in the algorithm is correlated with the algorithm's potential movements over time. A small population may only move a tiny amount, whereas a large population may engage in numerous activities. Unproductive behaviors can increase the amount of computational work wasted as the population grows. Small populations may result in premature convergence [32]. The crossover rate value and scaling factor are essential to the algorithm's efficiency. However, choosing these values is a laborious process. According to several research studies [33, 34] setting a fair value for the parameters is problem-dependent. The probability of stalling in DE grows with greater dimensionality; according to Zamuda et al. [35], parameter setup can be challenging while handling real-life optimization problems with larger dimensions. DE is ineffective in noisy optimization tasks in addition to the dimensionality problem. According to Krink et al. [36], standard DE can struggle to handle the noisy fitness function.

2.8 DE Variants as the Champion of CEC Competitions

DE has proven to be one of the best methods, and many modifications have been made to it during the last two decades. Modified variants of DE could secure a place in almost all CEC competitions held during the time. A list of DE methods with the year of competition and the respective method's position is given in Table 2. The percentage of google scholar citation of DE variants secured position in CEC competition and the performance count of DE variants in CEC competition are shown in Figs 8 and 9.

3 Variants of DE Algorithm

The exploration and exploitation capability of DE also varies with the complexity of the problem. Exploring is concerned with finding new reasonable solutions, and exploitation is searching for a solution near the new good solutions. These two terms are interrelated in the evolutionary search. Equilibrium between these two search processes can give a better result. DE has three control parameters, namely scaling factor \(F\), population size \(NP\), and crossover operator \(CR\). The role of these control parameters is to keep the exploration/exploitation in an equilibrium state. Efficiency, effectiveness, and DE's robustness in applying to a practical problem are positively related to the appropriate choice of these parameter values. Choosing an appropriate value for control parameters is tough when symmetry between exploration and exploitation is required. The selection of control parameter values for a problem is time-consuming and affects the algorithm's efficiency.

The performance of DE mainly depends on its parameter selection and trial vector generation strategy. During the last two decades, several works have been done on DE, and several policies of parameter adaptation, parameter adaptation with mutation strategy selection, and hybridization have been proposed. The below-given framework shown in Fig. 10 displays the work done on DE so far for its modification.

3.1 Modified by Parameter Selection

Three essential parameters, namely, mutation factor, crossover rate, and population size, are used in DE. The crossover rate defines the length of the string passed down from one generation to the next, while the mutation factor determines the population's diversity. The population size affects how robust the process is. Deterministic, adaptive, or self-adaptive parameter selection is all possible. Deterministic parameter selection involves changing values according to a deterministic rule after a predetermined number of generations. The "adaptive parameter selection" method involves altering parameters in response to feedback from the search process. The superior value of these parameters during self-adaptation parameters imprinted into the chromosomes generates better children, propagating to the following generation. Table 3 provides concise descriptions of various algorithms with illustrations.

3.1.1 jDE

The parameters of DE are problem-dependent like other optimization algorithms. Tuning these parameters is a hectic job. Considering this fact, in this study, the authors tried to use \(F\) and \(Cr\) values adaptively during the optimization process. Four new parameters \({F}_{l}, {F}_{u}, {\tau }_{1} and {\tau }_{2}\) were introduced. \({\tau }_{1} and {\tau }_{2}\) represents the probability with a value of 0.1. Value of \({F}_{l} and {F}_{u}\) were fixed as 0.1 and 0.9, respectively. A new value of the scaling factor was evaluated first multiplying a random value with \({F}_{u}\) and adding the result with \({F}_{l}\). The newly evaluated value of \(F\) for the next generation was accepted only if the probability value \({\tau }_{1}\) greater than a random number. Else, the value of \(F\) in the last generation was selected. \(Cr\) value for the next generation will be a random number if the value of \({\tau }_{2}\) greater than a random number. Otherwise, the present generation value was selected. Twenty classical benchmark functions were evaluated and compared with the basic and self-adaptive DE algorithms to establish the performance enhancement.

3.1.2 DEGL

It described a family of improved variants of \(DE-target-to-best/1/bin\) by utilizing the neighborhood scheme for each member of the population. The drawback of the \(DE-target-to-best/1/bin\) technique was identified, the best vector used to generate the donor vector promoting the chance of exploitation as all the vectors try to move to the same best position. To overcome this two-neighborhood strategy, the local and global neighborhoods were used. Each vector was mutated in a local neighborhood using the best value found in the local (small) neighborhood. In contrast, every vector in the global neighborhood was transformed using the entire generation's best value. Later, the local and global model was combined using a weight factor. The result showed that DEGL is the best performer among \(DE/rand/1/bin, DE-target-best/1/bin,\) SADE, etc.

3.1.3 εDEag

The study proposed εconstrained DE with an archive and gradient-based mutation strategy for improving stability, useability, and efficacy of the previously proposed εDEg. The average approach of individuals towards the optimal solution was attained, re-evaluating a child whenever it was not better than the parent. The parameter value in the algorithm was set automatically depending on the state of the initial archive. The introduction of this scheme enabled to specify the ε level's control parameters and enhanced the useability of the algorithm. Gradient-based mutation with skip-move and the long move was introduced, and the ε level was increased to expand the search space. Eighteen problems of “single objective real parameter constrained optimization” were solved using the proposed algorithm.

3.1.4 FiADE

Adaptation of \(F\) and \(CR\) more simply and effectively without the user's intervention was introduced. The adaptation of variables depends on the objective function value of population members. If a particular generation's fitness value of a vector is close to the objective function, the \(F\) value was reduced by allowing a more extensive local search. On the contrary, the \(F\) value was increased to a level if the function value deviates from the objective function value, obstructing premature convergence. Similarly, if the donor vector's fitness value deviates from the objective function value in a negative direction, then the \(CR\) value was increased and vice versa. A study on the application of unimodal and multimodal functions showed that the proposed scheme was easy and competitive.

3.1.5 SFcDE-PSR

Compact DE runs on an optimization problem in systems with less memory power and computational ability. Though the reduction in population size may lead to premature convergence, these algorithms are designed to keep the algorithm's reliability intact. SFcDE-PSR used a super fit mechanism. In this process, an external algorithm was executed to improve a solution with less performance and included in the DE population. Consequently, this solution guided the search process with improvements. This way, the exploration ability of the search process was increased. The second strategy was the reduction of the population progressively. The implication of these strategies together made SFcDE-PSR more efficient than other previous cDE versions.

3.1.6 OXDE

DE uses a binomial/exponential crossover, which generates a hyper-rectangle defined by the mutant and target vector. Therefore, the search area of DE may be limited. In this scheme, the QOX operator improved the searchability of DE. Application of QOX to every pair mutation and the target vector will increase the computational overhead. Thus, QOX was applied to each generation once only. The scheme switches between binomial/exponential and QOD flexible to generate target vectors. The application of this scheme enhanced the efficiency of \(DE/rand/1/bin\).

3.1.7 JADE-APTS

According to the proposed ATPS approach, the size of the total population on the algorithm can be dynamically adjusted by distributing the population and the algorithm's searching status. New possible solutions were discovered by placing new individuals in the proper area with an elite-based population incremental strategy. Under privileged particles are eliminated according to a ranking process, and the place was reserved for better reproduction using an inferior-based population cut strategy. A status monitor regulated these dynamic population control strategies. JADE-APTS gave a competitive performance in 30-dimensional problems and best in 100-dimensional problems among jDE, JADE, SaDE, EPSDE, and CoDE.

3.1.8 EDE

A new modified mutation scheme was proposed considering the best and worst individuals of a particular generation. This scheme was incorporated with the basic mutation scheme of DE through a non-linear decreasing probability rule. This scheme enabled better local search ability and a faster convergence rate, leading to premature convergence. The issue was tackled with a random mutation scheme and modified BGA mutation. Scaling factor \(F\) was set as a uniform random variable in [0.2,0.8] to ensure exploration as a lower value and exploitation as a higher value. \(CR\) was introduced as a uniform random variable in [0.5,0.9] to increase diversity and convergence rate.

3.1.9 DE with RBMO

DE with the ranking-based mutation operators works on the concept that if during the selection of parents with random selection in DE, parents with better characteristics were selected, then the chance of survival of their children and finding a good result is also increased. Vectors were first sorted according to their fitness, and then the selection probability of each vector was calculated. The base vector and the terminal point of the difference vector were selected according to their selection probabilities, while the other vectors were chosen according to the mutation strategy used. This process has given a better result than JDE, which was a superior algorithm among others at that time.

3.1.10 SHADE

The basis of this algorithm is JADE, and it used the \(current-to-pbest/1\) mutation strategy, an external archive, and adaptive control of the \(F\) and \(CR\) parameter values. \({\mu }_{CR}\) and \({\mu }_{F}\) values in JADE are continuously updated to approach the previous generation's successful mean values of \(CR\) and \(F\). \({S}_{CR}\) and \({S}_{F}\) stores the mean values of \(CR\) and \(F,\) which were effective in the last generations. Due to the probabilistic nature of DE, the poor value of \(CR\) and \(F\) may also be included in the \({S}_{CR}\) and \({S}_{F}.\) It could degrade the performance. SHADE used a historical memory \({M}_{CR}\), \({M}_{F}\), to store mean values of \({S}_{CR}\) and \({S}_{F}\) for each generation. SHADE used a diverse set of parameters compared to the JADE’s single pair of parameters, which guides the control parameter adaptation during the search process. This modified algorithm has shown a competitive result on several benchmark problems.

3.1.11 PA-jDE

In lower-dimensional problems, if the population's diversity is less, it leads to stagnation. Stagnation halts the improvement of the population. Population adaptation has emerged to overcome the issue. This algorithm assumes the occurrence of stagnation and diversifies the population by measuring Euclidian distance among the individuals. This scheme, when applied with jDE performance, amplified significantly.

3.1.12 LSHADE

In LSHADE, the basic SHADE algorithm's search performance was enhanced using a linear population reduction mechanism. The population reduction increases the convergence speed and decreases the algorithm's complexity. The performance of the LSHADE was verified by evaluating IEEE CEC 2017 function set and comparing it with state-of-the-art DE and CMA-ES variants. Performance tests confirmed the supremacy or competitiveness of the algorithm.

3.1.13 DE-DPS

The aim was to find the most appropriate value of parameters during the process of evolution. The set of values was defined for the parameters \(F\), \(CR\), and \(NP\). Random individuals were generated within the variable bounds. Each individual in the population was assigned a random \(F\) and random \(CR\) value. Each new offspring was generated using the mutation operator and the crossover operator. If the offspring was better than the individual, it was selected for the next generation, and the success value of the parameter combination was increased by 1.

3.1.14 Repairing the Crossover Rate in Adaptive DE

Crossover rate is not directly related to the trial vector, but the trial vector is directly related to its binary string. Based on this theory, the crossover repair technique was generated in which the average number of components taken from the mutant was calculated. Then the trial vector was generated according to the formula specified. Afterward, the successful combination of the repaired crossover rate and the scaling factor was updated. This process gave superior or competitive results when combined with JADE in terms of robustness and convergence.

3.1.15 MS-DE

In the DE algorithm, the base and the difference vectors were selected randomly without fully utilizing the fitness information, and the diversity information was also unnoticed. The algorithm may trap in local optima while solving multimodal optimization problems. Given the above problem, a multi-objective sorting-based mutation operator was induced to utilize the fitness and diversity information while selecting parents. Non-dominated sorting was used to sort individuals of the latest population according to their fitness and diversity. Parents were selected according to their ranking. The adoption of this new operator enhanced performance satisfactorily.

3.1.16 ESADE

Enhanced self-adaptive DE used for global numerical optimization over continuous space. In this process, population initialization was done in two groups. Then initially, the first set of control parameters was initialized. The second set of control parameters was generated by mutating the first set of parameters. Trial vectors were generated on each set of populations after mutation and crossover. Lastly, target vectors were generated from the trial vector set by selection operation using simulated annealing. Control parameters that performed better were selected for the next generation. ESADE performed better on several state-of-art DE algorithms and PSO.

3.1.17 MDEVM

Micro algorithms work with a minimal number of populations to amplify convergence speed, with increased risk of stagnation. It is necessary to raise the diversity of the population to overcome the threat of stagnation—a vectorized mutation process with micro DE was used here. According to the process, after the generation of the initial population randomized vector of mutation factor was calculated instead of a constant mutation factor. The mutation and crossover strategies were applied the same as DE. The process stopped when the difference between the best fitness value and fitness value to reach was less than an error value or function evolution the maximum limit. MDEVM enhanced the convergence speed of its predecessors.

3.1.18 Enhancing DE Utilizing Eigenvector-Based Crossover Operator

In the proposed approach, eigen vector information of the population's covariance matrix was used for rotating the coordinate system. The trial vectors developed stochastically from the target vectors with a standard coordinate system or a rotating coordinate system. The selection probability of a particular coordinate system among the two was managed using a suitable control parameter. This strategy increased the diversity of the population while reducing the risk of premature convergence. Including the proposed scheme with DE and its other variants gave a satisfactory result.

3.1.19 mJADE

DE and most of its other variants accommodate many populations, which require a large amount of computational cost memory size. The requirement of a large memory size restricts the feasibility of using these variants in embedded systems. mJADE was proposed with a new mutation operator considering the algorithm's reliability despite reducing population size for tackling the issue. The same mutation strategy used by JADE during the search process was also used here. The proposed algorithm was highly competitive and better than the variants of its type.

3.1.20 DEGPA

The proposed approach was designed to lessen the effort while selecting effective DE parameters during the search process. Control parameters of DE were selected adaptively during the search process. The parameter search space was discretized by forming a grid. A local search was conducted on the grid based on DE's estimated performance under matching parameters. The most significant parameter setting found was accepted for several iterations. The exact process was repeated iteratively to select the useful parameters during the process. DEGPA was extended (eDEGPA) with the selection of crossover types in the same study. Rigorous testing of the approach is done with the high dimensional problem of up to 500 dimensions and several composite functions. The results advocated the successful adaptation of parameters without compromising the diversity of the population.

3.1.21 iL-SHADE

In this algorithm, the mutation strategy, external archive, and reducing population mechanism remain unchanged as in the L-SHADE algorithm. However, the historical memory values of \(CR\) are initialized at 0.8 instead of 0.5 in LSHADE. One historical value entry of \(F\) and \(CR\) contains a pair of values 0.9 so that the higher values for both the variables could be used together. The restriction was given using a very high \(CR\) and low \(F\) value while the search process was in its initial stage. The next generation's historical memory values were enumerated, giving the same weight to the present generation's historical memory values with the weighted Lehmer means. After every generation value of \(p\), which controls the algorithm's greediness, was evaluated using a novel formula. An empirical test with benchmark functions with different dimensions such as 10, 30, 50, and 100 confirmed the algorithm's competitiveness.

3.1.22 LSHADE-EpSin

The new algorithm automatically adapted the value of the scaling factor using an ensemble sinusoidal strategy. The ensemble strategy consists of two methods. One was a non-adaptive sinusoidal decreasing adjustment, and the other was an adaptive history-based sinusoidal increasing adjustment. These two methods were used in a way that balanced the exploration and exploitation of the algorithm. A Gaussian walk-based local search method was used at advanced generations to amplify the algorithm's exploitation capability. LSHADE-EpSin was used to evaluate CEC 2014 benchmark functions. The comparison with LSHADE and other state-of-the-art algorithms confirmed the enhanced ability and robustness of the proposed algorithm.

3.1.23 ADE-ALC

The aging leader and challenger mechanism were used in this algorithm. The best individual in the population was selected as the leader, and it was activated for a defined period. This process improved exploitation in the search process. After completing a leader's life based on two local search operators, a challenger was generated, challenging the leader's leading ability. This way, diversity in the population was maintained while increasing the exploration ability of the process. Parameters in this scheme were selected adaptively. The proposed scheme was efficient and comparable to DE's variants while using unimodal, multimodal, and hybrid functions.

3.1.24 FDE

This approach altered the parameters of DE using fuzzy systems dynamically. This system modified the parameter \(F\) in a decreasing manner. The fuzzy system worked on the number of generations. If the generation was low, then \(F\)'s value kept high. When the generation value was medium, the \(F\) value was chosen in the medium range, and if the generation value was high, then the F value was kept low. In this way, the proposed approach balanced the exploration and exploitation process.

3.1.25 LSHADE-cnEpsin

Ensemble of two sinusoidal waves was used to adapt the value of scaling factor \(F\) efficiently. Adaptive sinusoidal waves were used to increase, and non-adaptive sinusoidal waves were used to decrease the adjustment. One of the schemes was chosen based on past performance to evaluate the value of the scaling factor. The high correlation between the variables was undertaken by introducing a co-variance matrix learning with the crossover operator's Euclidean neighborhood. The algorithm's performance enhancement was tested by computing IEEE CEC 2017 functions and comparing them with state-of-the-art algorithms.

3.1.26 SADE-FP

Values of DE's parameters are problem-dependent; therefore, the dynamic selection of parameters during the search process is tedious. SADE-FP addressed this issue by adjusting the parameter self-adaptively and using a perturbation strategy based on an individual's fitness performance. The scaling factor value was evaluated using a cosine distribution. When an individual's fitness was less, the large scaling factor value provided the individual more exploration power. On the contrary, if the fitness value were good enough, the scaling factor value would be less, giving more exploitation ability to the process. This strategy has enhanced the performance of DE and its other variants.

3.1.27 Db SHADE

This algorithm was designed to address the premature convergence of SHADE family algorithms. A distance-based parameter adaptation technique was induced to convert the exploration phase longer in higher dimensional space while considering the computational complexity factor. The distance-based approach works between the original vector and the trial vector. In this approach, the Euclidian distance between them is calculated. The individuals moved farthest will have the highest mutation and crossover value. After empirical tests, it was found that the performance of DbSHADE was better than the SHADE family algorithms.

3.1.28 DEA-6

In this algorithm, mutation and crossover operators were designed and applied in a new way. According to this scheme, the mutation occurred in two phases; in the first phase, exploration was the motive. In the second phase of the mutation process, the individual's best value was considered, thus promoting exploitation. The binomial crossover was also replaced, introducing a new vector whose components were random functions generated by a uniform probability density function between 0 and 1.

3.1.29 ATBDE

A failure member-driven self-adaptive top–bottom strategy was used in DE. The top–bottom approach used previous information from both successful and failed individuals. Two archives for failure and successful members were constructed. The individuals from different archives were selected according to the input from the heuristics. The parameter value for mutation and crossover for every member of the population was updated based on status. When a failure's threshold limit is exceeded, the parameter adaptation comes into force.

3.1.30 Hard-DE

Hierarchical archive-based mutation strategy was proposed here. A new crossover rate with the grouping strategy and parabolic population size reduction scheme was also presented. Hard-DE tested with CEC 2017 and CEC2013 best suits real parameter single objective optimization and two real-world optimization problems from CEC 2011. It was evident from the result that Hard-DE was better than several state-of-art of DE algorithms.

3.1.31 SALSHADE-cnEpSin

Self-adaptive LSHADE-cnEpSin used the mutation strategy, \(DE/current-to-pBest/1\). The scaling factor of the new algorithm was generated utilizing the Weibull distribution. Using some other parameters, this parameter generation method estimates the lifetime of a problem. It helped the algorithm fix the scaling parameter's value from a global search to a local one. \(CR\) values in this method were used in an exponentially decreasing order. The archive was used to store the infeasible solutions.\(F\) and \(CR\) values were also stored in the archive for the next generation. The linear population size reduction mechanism was used as the parent algorithm.

3.1.32 Predicting Effective Control Parameters for DE Using Cluster Analysis of Objective Function

According to this strategy, the cluster analysis technique was used to identify the effective control parameters for the objective function. During the cluster analysis, three features of the objective function were used for parameter identification. These three features are the number of dimensions of the function, interquartile range of the normalized data, and skewness of the normalized data. Collectively these features make \(\beta\) characteristics of the objective function. Training data was used to explore any relationship between the control parameter, the objective function, and its performance. \(\beta\) data points were identified by applying \(k\) means++ to the training data set. Mean \(P\) is calculated from the set, and the top 10% of data points were recognized and used to optimize new functions.

3.1.33 DE-NPC

The algorithm used \(DE/target-to-pbest/1/bin\) mutation strategy considering its effectiveness. The total population was divided into m subgroups. Each of the subgroups uses all the three DE control parameters separately. The scale factor for every individual in a subgroup was calculated using Cauchy distribution with a readjustment policy. The crossover rate of each individual in the subgroup was enumerated using Gaussian distribution and resettled after generating. A linear-parabolic population reduction policy was employed to reduce the population and increase the convergence speed of the algorithm. DE-NPC was tested with several DE variants using CEC 2013, CEC 2014, and CEC 2017 functions; the comparison results confirmed the efficacy of the proposed algorithm.

3.1.34 j2020

The algorithm was designed using the functionality of both the algorithms jDE and jDE100. It uses parameters like jDE. Alike jDE100, it divided the total population into two sub-populations. Among the sub-populations, one is big, and another is small. For mutation, \(jDE/rand/1\) mutation strategy was used. After every generation, the best solution is evaluated in a bigger sub-population used in both populations to generate solutions. Both the sub-populations were reinitialized based on predefined criteria. Euclidean distance-based crowding mechanism was used to identify an individual nearest to the trial vector. The algorithm's efficiency was measured with DE and jSO using the CEC 2020 function set.

3.1.35 ERG-DE

The algorithm used an elite regeneration technique. The new individuals were generated around the best individuals using Gaussian sampling or Cauchy probability distribution methods. The number of the best population was selected using a linearly reducing deterministic technique. The process requires tunning of only two parameters. New solutions were generated in the surroundings of the parent solution, thereby increasing the exploitation capacity of the algorithm and bypassing the local solution. Evaluated results of the CEC 2014 functions set and comparison with various DE variants confirmed the algorithm's effectiveness.

Among the several other studies, a few more exciting improvements in DE based on a modification of parameters are given below. In [76] Wang et al. proposed a modified binary DE algorithm (MBDE) with novel probability estimation operators to solve binary-coded optimization problems. Zhang, in [77], described a dynamic multi-group self-adaptive DE (DMSDE) strategy. The population was divided into multiple groups, exchanging information dynamically; here, parameters were also made self-adaptive. Wang et al. [78] modified the binary DE algorithm with a new probability estimation operator to balance exploration and exploitation in cooperation with the selection operator. Intersect mutation DE (IMDE) proposed by Zhou et al. [79] intersecting the total individuals into two parts, the best and worst parts, new mutation, and crossover operator used to generate population for the next generation. An adaptive ranking mutation operator base DE to solve constraint optimization problems is given in [80]. Zamuda and Brest [81] described a self-adaptive DE with a randomness level parameter to regulate the randomness of the generated control parameters.

In summary, the volume of articles in this category and the successes recorded speaks to the importance modifying these parameters plays in DE's performance. Three essential parameters, namely, mutation factor, crossover rate, and population size, are considered in this category. The deterministic, adaptive, or self-adaptive parameter selection has all been used, as explained in this section. The performance is also dependent on the nature of the optimization problems. It can be concluded that modifying these parameters significantly enhances the performance of DE.

3.2 Modified by Parameter Tuning and Different Mutation Strategy

The parameters of DE have a very important role in the searching process. Likewise, the mutation strategy selection for generating donor vectors also has equal importance. The selection of good parameters with its mutation strategy is problem-dependent. Diversity in the population depends on the strategy used with the amplification factor. The researchers pay much more effort to design algorithms by modifying existing strategies, combining several techniques in one algorithm, combining strategies and parameter settings, and using these policies differently. A list of these modifications on DE is given in Table 4.

3.2.1 JADE

JADE was proposed with a new mutation strategy \(DE/current-to-pbest,\) a modification of the mutation strategy \(DE/current-to-best\). According to this new mutation policy, instead of the global best solution, a randomly chosen solution among \(100p\%\) best solutions will be used. An external archive was maintained to store the recently rejected inferior solutions. Whenever the archive exceeded the limit, a few solutions were deleted randomly to keep the limit. The second random solution was selected from the union of the present solution and the solution in the archive. Scaling factor and crossover parameter values are generated using truncated Cauchy distribution and Normal distribution, respectively. The process showed enhanced results compared to the adaptive DE variants, jDE, SaDE, basic DE, and PSO methods.

3.2.2 SaJADE

Strategy adaptation mechanism used four mutation strategies, non-archived and archived version of \(DE/rand to pbest\) and \(DE/current to pbest\). Strategies without archives are suitable for low-dimensional problems and converge faster. On the contrary, archived strategies are fit for high-dimensional problems and bring diversity to the population. The appropriate process was selected among these strategies based on a strategy parameter. The strategy parameter was updated after every generation. This proposed scheme can be combined with other DE variants and the parameter setting of that particular variant. When combined with JADE named, SaJADE gave a better performance than JADE.

3.2.3 EPSDE

The three mutation strategies \(DE/Best/2/bin\),\(DE/rand/1/bin, and DE/current-to-rand/1/bin\) made a pool of mutation strategies with different characteristics. Each population vector was assigned a randomly chosen mutation strategy for trial vector generation with linked parameters. If the trial vector is generated better than the target vector in the present generation, this trial vector is selected as the next generation's target vector. Simultaneously, the combination of the mutation strategy and parameter values generated the better offspring also stored. On either side, if the trial vector generated was not better than the target vector, then a new pair of mutation strategies and parameter values were allocated from their respective pools, or a pair of successful mutation strategies and parameter values stored which have equal probability was assigned. This way, it worked with a better probability of generating offspring using mutation strategy and control parameters.

3.2.4 CoDE

The proposed method used three trial vector generation strategies to make the strategy candidate pool, and three control parameter settings were used to make the parameter candidate pool. The selected trial vector generation strategies were \("DE/rand/1/bin", "DE/rand/2/bin", and "DE/current-to-rand/1".\) Here the word \("rand"\) denotes uniformly distributed random numbers between 0 and 1. Three selected control parameter settings were \([F =1.0, CR =0.1],[F =1.0, CR =0.9]\) and \([F =0.8, CR =0.2]\). Firstly, the initial population was generated from the feasible solution space. Then, every generation trial vector was generated using every strategy from the strategy pool with a randomly selected parameter setting from the parameter pool. Thus, three trial vectors were generated for every target vector at each generation. Only the best one replaced the target vector and entered the next generation among these three trial vectors. Empirical results showed that this strategy was better than the four DE variants jDE, JADE, SaDE, EPSDE, and some other non-DE variants.

3.2.5 MDE_pBX

Modified DE with p best crossover used a new mutation strategy. For every target vector,\(q\%\) of the total population was randomly selected as a group. The best member of this group was used in the mutation process according to the \(DE/current-to-best/1\) strategy. A crossover policy named \(pbest\) crossover was also incorporated in this scheme, allowing mutant vectors to exchange their components with an individual that falls in the \(p\)- top-ranked individual of the present generation using the binomial crossover. Values of \(F\) and \(CR\) were updated based on their previous successful values in generating a trial vector. DE, jDE, and JADE's performance was amplified when combined with one or more proposed modifications.

3.2.6 MRL-DE

Modified random location-based DE algorithm worked to increase the search process's explorative ability. The whole population was divided into three regions after sorting according to their fitness. The user decided what percentage of the total population would reside in every region. Three random variables were selected for mutation purposes, one from every region. Other phases of the algorithm acted like basic DE. The new algorithm enhanced DE's performance and outperformed in comparison to some of its variants.

3.2.7 GDE

Individuals of the whole population were divided into a superior group and an inferior group based on their fitness value. The superior group was used to increase the algorithm's searchability. Hence \(DE/rand\) mutation strategy was used on that group as the inferior group did not contain much more information.\(DE/Best\) mutation strategy was used to amplify the algorithm's exploitation ability. A self-adaptive strategy automatically selected the group size. The other phases were like the basic DE algorithm. This new algorithm gave better performance compared to some different DE mutation strategies.

3.2.8 SapsDE

As in DE and most of its variants, the size of the population was fixed; this approach worked on a variable size population. The behavior of the search process dynamically adjusts population size. When the fitness value is better, then it increases the population size. If the fitness value is less, then population size decreases, and if stagnation occurs, population size increases for exploring. The resizing of the population was done by population resizing triggers. Two different mutation strategies were used to balance the exploration and exploitation in the search process. Among them, one has the capability of investigation, and the other was used for exploitation. This proposed strategy gave better results on some benchmark functions than DE, SaDE, jDE, JADE, etc.

3.2.9 SAS-DE

Self-adaptive combined strategies using DEcomposed of four mutation strategies, two crossover operators, and two constraint handling techniques. A total of 16 combinations can be obtained from these mechanisms. Each individual in the population was assigned a value between 1 and 16. The donor vector was generated at the first generation using the allocated mutation strategy, a crossover operator, and a constraint handling technique. A tournament selection process was run for the first m individuals of the population to select the best individual and combine strategies. The chosen strategies were applied to the individual. The remaining individuals of the population are assigned a random combination of approaches. The searching process was more effective than DE and its four other variants.

3.2.10 DE with Two-Level Parameter Adaptation

A new mutation strategy,\(DE/lbest/1\), was used here, different from the \(DE/best/1\). The earlier approach used the global best value for the optimization process; the new mutation scheme used multiple best values generated from the different local sub-populations. Thus, it maintains both convergence speed and diversity of the individual in the process. The adaptive parameter control scheme was implemented in this scheme in two steps. According to the search process, the first step controls \(F\) and \(CR\)'s values for each generation's whole population, whether it is exploration or exploitation. The second phase generates each individual's parameters from population-level parameters based on the individual's fitness value and distance from the global best individual. The proposed scheme was superior to some self-adaptive DE variants like CoDE, jDE, JADE, SaDE, etc.

3.2.11 RSDE

The proposed scheme used two population replacement strategies to alleviate the problem of stagnation. The first approach introduced an individual replacement strategy that verifies the individual's improvement within a predefined number of iterations. If the individual has not improved, a new individual is generated, combining the best individual's value with a random parameter offset. The second replacement strategy compares the fitness value of the current best individual with the fitness value of the best individual found before a pre-specified iteration. If the value is improved, the last best individual value is replaced by the current best individual, and the evolution continues. On the other side, if the current best fitness value is not improved, the population will be generated again with the current generation's best individual. While the population was replaced a predefined number of times, the population was randomly generated using a uniform distribution within the whole parameter space. The Exploration and exploration ability of DEwas improved by combining these strategies into it.

3.2.12 Cluster-Based DE with Self-adaptive Parameters

Multi-population was adopted to increase multimodal optimization searchability. The clustering method was used to divide the whole population into sub-populations. Control parameters were selected based on the search process stages in an adaptive manner that speeds the convergence and gives the solution accuracy. This strategy was combined with crowding DE (CDE) and species-based DE (SDE) to form new algorithms, Self-CCDE and Self-CSDE, which provided a far better result than the parent algorithms.

3.2.13 DMPSADE

Self-adaptive discrete parameter control strategy was proposed to control the exploration and exploitation during the search process. Every vector of the population has its mutation strategy and control parameters. Mutation strategies and control parameters were updated on areal-time basis. The cumulative selection probability of the total five mutations was calculated based on the cumulative selection probability; a randomly selected strategy was selected for each individual using a roulette wheel. Comparison with several self-adaptive DE variants revealed that the average performance of the proposed algorithm was better.

3.2.14 mDE-bES

The population was divided into independent subgroups, increasing population diversity on each step of optimization. Each subgroup is allocated a promising mutation and update strategy within a period of function evaluation. At the end of the function evaluation period, individuals in different subgroups are exchanged. This exchange of information guided the algorithm to explore more effective regions in the search space. This algorithm introduced a new mutation strategy using a convex linear combination of randomly selected individuals.

3.2.15 SWDE_Success

The proposed scheme mentioned switching of scaling factor and crossover value within the lowest and highest value of the range using a uniform random distribution for various individuals of the population. During the mutation process, each individual of the population goes through one mutation strategy among the two strategies \(DE/rand/1\) and \(DE/best/1\). Among the two strategies, the strategy which generated successful offspring in the last generation was selected to act for the next generation. SWDE_Success showed comparative results or better results than similar types of algorithms.

3.2.16 jESDE

This approach modified the SaEPSDE model after identifying unnecessary or redundant parts. jESDE used only mutation strategies \(DE/rand/1\) and \(DE/current-to-best\) and two crossover strategies. The SaEPSDE learning process identifies the successful mutation and crossover strategy and applies it to all individuals until the optimization process ends. jESDE used controlled randomization of \(F\) and \(CR\) values like the jDE algorithm. It was given high performance in low dimensional problems, and high dimensional problems performance was good.

3.2.17 IDEI

A combination of mutation strategies \(DE/rand-to guiding/1\) and \(DE/current-to-guiding/1\) with an arranged probability was used to balance exploration and exploitation. The parameter set was guided by the fitness values of both original and guiding individuals. In the selection process, a diversity-based selection strategy was employed to accumulate a greedy selection strategy. It calculated a new value of weighted fitness based on vectors' fitness value and trial and target vectors' position. Experimentally, it was found that the proposed algorithm was very competitive.

3.2.18 SAKPDE

In this proposed strategy, a learning-forgetting mechanism was introduced to implement self-adaptive mutation and crossover strategies. Adjustment of parameters made by prior and non-traditional knowledge. A set of parameter values, a crossover strategy, and a mutation strategy were allocated for each individual in the population during evolution. Initialization of the population was done using \(DE/rand/1\) to diversify the population. Consequently, mutation strategies were selected based on cumulative selection probability using a roulette wheel. One was selected self-adaptively among the binomial, exponential, and Eigenvector-based crossover. Opposition learning was used to generate control parameters. Eight well-known DE variants were compared with this algorithm and found competitive or better performance.

3.2.19 NRDE

The proposed approach used to optimize a single objective noisy function. It employs switching between the values of parameters \(F\) and \(CR\). These parameter values bounce randomly between the limits during each offspring generation process. One is chosen randomly in the mutation phase among the two mutation strategies \(DE/best/1\) strategy or the \(DE/rand/1\) strategy. A modified crossover operator and an adaptive threshold scheme are used to select individuals for the next generation. A competitive performance with robustness was obtained on state-of-the-art DE algorithms while solving noisy optimization.

3.2.20 AGDE

Adaptive guided DE algorithm introduced a new mutation strategy. Instead of choosing three random variables like \(DE/rand/1\), random variables are selected from \(100Z\%\) of the top and bottom individuals, and the other \([PS-2*(100Z\%)]\) individuals. According to the proposed mutation strategy difference, the best and worst vector is multiplied by the scaling factor and added with the individual chosen from the middle individuals. This process curtails the risk of premature convergence and enhances the exploration ability. A new adaptation scheme for updating \(CR\) values at each generation is employed by making a pool of known \(CR\) values. Crossover probability is calculated at each generation following uniform distribution in the range specified.

3.2.21 jSO

The jSO algorithm is the modified version of iLSHADE. \(DE/Current-to-pBest/1\) is the mutation strategy used by its two parent algorithms LSHADE and iLSHADE, whereas, in jSO, a weighted mutation strategy \(DE/Current-to-pBest-w/1\) was introduced. The weighted scaling factor (\({F}_{\omega }\)) was used to multiply a smaller value in the early stages, and in the later phase, a greater value was applied. In other points, it is the same as the iLSHADE algorithm.

3.2.22 RNDE

Mutation strategies of DE, \(DE/rand/1\), and \(DE/best/1\) have good exploration ability and exploitation ability, respectively. A novel strategy,\(DE/neighbor/1\), is used in this algorithm to use both the approach's advantages. According to the \(DE/neighbor/1\) process, the individual's neighbor is chosen randomly, and the best neighbor is selected as the base vector for calculation. The selection of the number of neighbors is vital in this algorithm as it controls the exploration and exploitation of the search process. Comparison with algorithms of \(DE/rand/1\) and \(DE/best/1\) individually and with six other state-of-the-art DE algorithms (DEGL/SAW, EPSDE, MGBDE, SaDE, ODE, and OXDE) depicted the superiority of the proposed approach.

3.2.23 AMECoDEs

Adaptive multiple elites guided composite DE proposed the generation of trial vectors for each individual in two elite vectors' guidance. The procedure of selection for the elite was different. An elite vector with diverse strategy selection produces two trial vectors, among which the better one participates in the selection process. Thus, the probability of trapping the search process in a discouraging area was reduced. Parameters for each trial vector generation strategy were autonomously adapted to the successful experience of previous generations. A shift mechanism is used to move the search process from stagnation. Comparing the proposed algorithm with other DE variants of its time has given a better or more competitive result.

3.2.24 PALM-DE

Parameters with an adaptive learning mechanism addresses parameter tuning and appropriate mutation strategy with the associated parameter values. \(F\) and \(CR\) values are separated into different groups to control the unwanted interaction between the control parameters. The value of \(CR\) was adapted based on its success probability, and the value of \(F\) was adjusted to success values and fitness values associated with it. A timestamp mechanism was introduced to trace and eliminate too old inferior solutions from the archive. A dynamic population size reduction approach ensures a dynamic change in population size. Enhanced or competitive performance is achieved over DE and some of its variants on single objective functions.

3.2.25 HHDE

The algorithm used a historical experience-based mechanism (HEM) and heuristic information (HIM) based parameter setting to accomplish the search process. A mutation strategy is allocated to the individual considering the individual's preference in the search process, determined by the individual's heuristic information. Mutation strategies were also indexed according to the selection rate in the whole population. Allocation of parameters was done by first ranking the individuals according to their fitness value and ranking parameters on their success.

3.2.26 LSHADE-RSP

It is an enhanced variant of the LSHADE algorithm. A new mutation strategy \(current-to-pbest/r\) is introduced in this variant. The new strategy is the modification of the existing \(current-to-pbest/1\) strategy. According to the new strategy, individuals with the highest fitness get the most considerable rank, and the minor position is given to the individual with the least fitness. Scaling factor \(F\) computed using Cauchy distribution with location parameter \(\mu {F}_{r}\) and a scale parameter of 0.1. During the computation of \(Cr\), a normal distribution is used with mean \(\mu {Cr}_{r}\) and variance 0.1. Finally, the algorithm evaluated the IEEE CEC 2018 function set and circular antenna array design problem.

3.2.27 GPDE

The proposed approach used a Gaussian mutation operator and a modified common mutation operator in which the \(DE/rand/1\) strategy changed to \(DE/rand-worst/1\). Both the strategies work together based on their combined score for producing potential places for each individual. Periodic adjustment of the scaling factor is confirmed using a cosine function, and the diversity of the population is dynamically adjusted to the evolutionary process by employing a Gaussian function. Performance analysis of GPDE showed that it outperforms most of the well-known algorithms of its time.

3.2.28 PaDE

Parameter adaptive used a grouping strategy in which individuals of the whole population were clustered into \(k\) groups. Every group was associated with two control parameters \({\mu }_{CR}\) and a selection probability. Control parameter \(F\) was calculated using a Cauchy distribution. A parabolic population reduction mechanism was proposed to control the fast reduction of the population at the beginning when a linear reduction strategy was used in some variants. A timestamp strategy increased the effectiveness of mutation by eliminating too old inferior solutions from the archive. This algorithm has shown better results on state-art-of-the DE variants on single-objective optimization, especially in higher dimension problems.

3.2.29 CIpBDE

Modified the basic DE with a few new strategies. The mutation was performed using two mutation strategies modified \(DE/target-to-ci\_mbest/1\) and \(DE/target-to-pbest/1\) strategies. Selection between the strategy achieved using a constant probability value of 0.5. Parameter values of scale factor and crossover were evaluated adaptively using a modified scheme with successful values from the last generations. Scale factor value for every individual at every iteration generated using Cauchy distribution with a location parameter value. Crossover value evaluated using Gaussian distribution. This process guaranteed the evolution of individuals with better fitness. Finally, CIpBDE was compared with several DE variants using CEC 2013 function and feature selection problem.

3.2.30 Di-DE

In the Di-DE technique, external archive arrangements were kept up during the search. These arrangements are utilized in the mutation methodology, where they can improve the variety of the trial vectors. Besides, a novel gathering system was used in the Di-DE method, and the entire population was partitioned into a few gatherings with data exchange in each gathering. The Di-DE technique acquired a generally better execution with DE variations with fixed population size under several benchmark functions by these improvements.

3.2.31 DMCDE

DMCDE acquainted a new mechanism guided by the best individual to propose two unique variations of the old-style \(DE/rand/2\) and \(DE/best/2\) procedures; the proposed mutation strategies were named as \(DE/e-rand/2\) and \(DE/e-best/2\). They utilized the solution arbitrarily browsed prevalent best solution as the base vector and the primary vector of contrast vectors, giving a more precise direction to singular mutation without losing diversity. The new strategies were used to balance the global and local search trade-offs. Finally, the new method evaluated basic benchmark functions on different dimensions and flight scheduling problem. Comparison of results with DE variants proved the efficacy of the new algorithm.

3.2.32 WMSDE

The population was divided into several groups of subpopulations. The scale factor value was calculated using the wavelet base function. Total five mutation strategies \(DE/rand/1, DE/rand/2, DE/best/1, DE/best/2\) and \(DE/rand-to-best/1\) were used to generate trial vectors. The strategy that developed the best solution was used in the later iterations for mutation purpose. The crossover value was evaluated using the normal distribution. Finally, the normal benchmark functions and airport gate assignment problem were solved using the method.

3.2.33 COLSHADE

COLSHADE emerged from the L-SHADE algorithm by presenting noteworthy highlights, for example, versatile Lévy flights and dynamic tolerance. Lévy flight was used to perform the global search in COLSHADE. The Lévy flight's objective is to manage the choice pressing factor applied over the population to track down the attainable area and keep variety in the solution. Hence, another versatile Lévy flight mutation technique was presented here, called the levy/1/bin. The local search stage was performed by \(current-to-pbest\) strategy. Notwithstanding, the universal system adaptively controlled the execution of these two mutation approaches at various rates and times.