Abstract

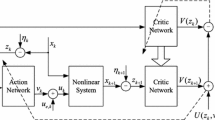

In this paper, we aim to solve the finite horizon optimal control problem for a class of discrete-time nonlinear systems with unfixed initial state using the adaptive dynamic programming (ADP) approach. A new ε-optimal control algorithm based on the iterative ADP approach is proposed, under which the iterative performance index function converges, within finite time, to the greatest lower bound of all performance indices to within an error bound ε. For the situation where the initial state of the system is unfixed, the proposed ε-optimal control algorithm also yields the optimal number of control steps. To facilitate the implementation of the ε-optimal control algorithm, neural networks are used to approximate the performance index function and to compute the optimal control policy. Finally, a simulation example is given to demonstrate the proposed method.
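The abstract describes the ε-optimal iteration only at a high level. The following is a minimal sketch of the underlying value-iteration idea, not the authors' implementation. All of it rests on our own assumptions: a hypothetical scalar system x_{k+1} = f(x_k) + u_k with f(x) = 0.9x + 0.2 sin x, a hypothetical utility U(x, u) = x² + u², and a finite state grid standing in for the neural-network approximators. The iterates start from the one-step cost of reaching the origin, V_0(x) = U(x, -f(x)), and are updated by V_{i+1}(x) = min_u {U(x, u) + V_i(f(x) + u)}. Iteration stops once successive iterates differ by at most ε at every grid state; we take the test uniformly over states to reflect the unfixed initial state, and this is an illustrative choice rather than the paper's exact criterion. The function names `eps_optimal_iteration` and `rollout` are hypothetical.

```python
import numpy as np


def f(x):
    # Hypothetical nonlinear drift, x_{k+1} = f(x_k) + u_k (not the paper's example)
    return 0.9 * x + 0.2 * np.sin(x)


def utility(x, u):
    # Hypothetical quadratic utility U(x, u) = x^2 + u^2
    return x**2 + u**2


def eps_optimal_iteration(grid, eps=1e-4, max_iter=500):
    """Tabular stand-in for the iterative performance index functions V_i.

    values[i][s] is the least cost of driving grid[s] to the origin in at
    most i + 1 steps (moving between grid states only), so the sequence is
    nonincreasing in i.
    """
    origin = int(np.argmin(np.abs(grid)))
    # u[s, j]: the control that moves grid[s] exactly onto grid[j]
    u = grid[None, :] - f(grid)[:, None]
    cost = utility(grid[:, None], u)          # cost[s, j] = U(x_s, u_sj)
    values = [cost[:, origin].copy()]         # V_0: reach the origin in one step
    for _ in range(max_iter):
        v_next = np.min(cost + values[-1][None, :], axis=1)
        gap = np.max(np.abs(values[-1] - v_next))
        values.append(v_next)
        # Successive-difference test, taken over all grid states because
        # the initial state is not fixed in advance (our assumption).
        if gap <= eps:
            break
    return values, cost, origin


def rollout(values, cost, origin, s):
    """Run the len(values)-step policy from grid index s; return (path, cost)."""
    path, total = [s], 0.0
    for remaining in range(len(values), 0, -1):
        if remaining == 1:
            j = origin                        # final step lands on the origin
        else:
            j = int(np.argmin(cost[s] + values[remaining - 2]))
        total += cost[s, j]
        s = j
        path.append(s)
    return path, total


grid = np.arange(-20, 21) * 0.05              # 41 states in [-1, 1], exact 0.0 at index 20
values, cost, origin = eps_optimal_iteration(grid, eps=1e-4)
n_steps = len(values)                         # number of control steps used
start = int(np.argmin(np.abs(grid - 0.8)))
path, total = rollout(values, cost, origin, start)
print(n_steps, grid[path], total, values[-1][start])
```

Because every iterate may reuse the zero-cost move from the origin to itself, the sequence V_i can only decrease, which is the tabular analogue of convergence to a greatest lower bound. On a finite grid the iteration reaches a fixed point within a number of iterations bounded by the number of grid states, so the ε test is always met.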

Additional information

This work was partly supported by the National Natural Science Foundation of China (Nos. 60904037, 60921061, 61034002) and the Beijing Natural Science Foundation (No. 4102061).

Qinglai WEI received his B.S. degree in Automation, M.S. degree in Control Theory and Control Engineering, and Ph.D. degree in Control Theory and Control Engineering from Northeastern University, Shenyang, China, in 2002, 2005, and 2008, respectively. He is currently a postdoctoral fellow with the Key Laboratory of Complex Systems and Intelligence Science, Institute of Automation, Chinese Academy of Sciences, Beijing, China. His research interests include neural-networks-based control, nonlinear control, adaptive dynamic programming, and their industrial applications.

Derong LIU received his Ph.D. degree in Electrical Engineering from the University of Notre Dame, Notre Dame, IN, in 1994. Dr. Liu was a staff fellow with General Motors Research and Development Center, Warren, MI, from 1993 to 1995. He was an assistant professor in the Department of Electrical and Computer Engineering, Stevens Institute of Technology, Hoboken, NJ, from 1995 to 1999. He joined the University of Illinois at Chicago in 1999, where he became a full professor of Electrical and Computer Engineering and of Computer Science in 2006. He was selected for the ‘100 Talents Program’ by the Chinese Academy of Sciences in 2008.

Currently, Dr. Liu is the editor-in-chief of the IEEE Transactions on Neural Networks and an associate editor of several other journals. He received the Michael J. Birck Fellowship from the University of Notre Dame (1990), the Harvey N. Davis Distinguished Teaching Award from Stevens Institute of Technology (1997), the Faculty Early Career Development (CAREER) Award from the National Science Foundation (1999), the University Scholar Award from the University of Illinois (2006), and the Overseas Outstanding Young Scholar Award from the National Natural Science Foundation of China (2008).

About this article

Cite this article

Wei, Q., Liu, D. Finite horizon optimal control of discrete-time nonlinear systems with unfixed initial state using adaptive dynamic programming. J. Control Theory Appl. 9, 381–390 (2011). https://doi.org/10.1007/s11768-011-0181-5
