Abstract

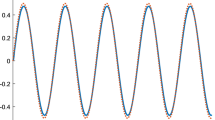

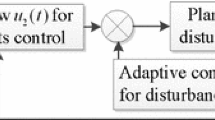

In this paper, the optimal control of a class of general affine nonlinear discrete-time (DT) systems is undertaken by solving the Hamilton-Jacobi-Bellman (HJB) equation online and forward in time. The proposed approach, normally referred to as adaptive or approximate dynamic programming (ADP), uses online approximators (OLAs) to solve the infinite-horizon optimal regulation and tracking control problems for affine nonlinear DT systems in the presence of unknown internal dynamics. Both the regulation and tracking controllers are designed using OLAs to obtain the optimal feedback control signal and its associated cost function. Additionally, the tracking controller design entails a feedforward portion that is derived for steady-state conditions and approximated using an additional OLA. Novel update laws for tuning the unknown parameters of the OLAs online are derived. Lyapunov techniques are used to show that all signals are uniformly ultimately bounded and that the approximated control signals approach the optimal control inputs with a small bounded error. In the absence of OLA reconstruction errors, the resulting control is shown to be optimal. Simulation results verify that all OLA parameter estimates remain bounded and that the proposed OLA-based optimal control scheme tunes itself to reduce the cost and the HJB equation error.
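The central object in the abstract is the discrete-time HJB equation, V*(x_k) = min over u_k of [r(x_k, u_k) + V*(x_{k+1})], for an affine system x_{k+1} = f(x_k) + g(x_k)u_k. The Python below is a minimal sketch of what "solving the HJB with an approximator" means, under assumptions that are not taken from the paper: a scalar linear plant (f(x) = a*x, g(x) = b), a quadratic stage cost with hypothetical weights q and rho, a fully known model, and offline value iteration (an HDP-style scheme) in place of the authors' online OLA update laws for unknown internal dynamics. The critic V(x) = w*x**2, the function names (`greedy_control`, `value_iteration`, `riccati_p`, `simulate`) and all numbers are illustrative choices; the fitted weight is checked against the closed-form Riccati solution to which the HJB reduces in this special case.

```python
import math

# Hypothetical example plant (not from the paper): x_{k+1} = a*x_k + b*u_k,
# an affine system with f(x) = a*x and g(x) = b, and stage cost
# r(x, u) = q*x**2 + rho*u**2.  Critic: V(x) = w * phi(x) with phi(x) = x**2.


def greedy_control(w, x, a, b, rho):
    """Minimize rho*u**2 + w*(a*x + b*u)**2 over u (zero derivative)."""
    return -w * a * b * x / (rho + w * b * b)


def value_iteration(a, b, q, rho, iters=200,
                    samples=(-2.0, -1.0, -0.5, 0.5, 1.0, 2.0)):
    """Fit the critic weight to repeated one-step HJB backups (HDP-style)."""
    w = 0.0  # V_0(x) = 0
    for _ in range(iters):
        num = den = 0.0
        for x in samples:
            u = greedy_control(w, x, a, b, rho)
            x_next = a * x + b * u
            # HJB backup target: r(x, u) + V_i(x_next)
            target = q * x * x + rho * u * u + w * x_next * x_next
            phi = x * x
            num += phi * target  # scalar least squares: w_{i+1} * phi ~= target
            den += phi * phi
        w = num / den
    return w


def riccati_p(a, b, q, rho):
    """Positive root of b^2 p^2 + (rho - q b^2 - a^2 rho) p - q rho = 0."""
    c1 = rho - q * b * b - a * a * rho
    return (-c1 + math.sqrt(c1 * c1 + 4.0 * b * b * q * rho)) / (2.0 * b * b)


def simulate(w, x0, a, b, q, rho, steps=100):
    """Run the greedy policy from x0; return final state and accumulated cost."""
    x, cost = x0, 0.0
    for _ in range(steps):
        u = greedy_control(w, x, a, b, rho)
        cost += q * x * x + rho * u * u
        x = a * x + b * u
    return x, cost


if __name__ == "__main__":
    a, b, q, rho = 1.2, 1.0, 1.0, 1.0  # open-loop unstable since |a| > 1
    w = value_iteration(a, b, q, rho)
    p = riccati_p(a, b, q, rho)
    x_final, cost = simulate(w, 1.0, a, b, q, rho)
    print(f"critic weight w = {w:.6f}, Riccati p = {p:.6f}")
    print(f"x_100 = {x_final:.2e}, cost = {cost:.6f} (expected p*x0^2 = {p:.6f})")
```

Because the single basis function x**2 represents V* exactly in this linear case, the critic has no reconstruction error and the fitted weight matches the Riccati value to machine precision. For a genuinely nonlinear f(x), a richer basis would be needed and the fit would only be approximate, which is the situation the bounded-error results in the abstract address.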




Additional information

This work was partly supported by the National Science Foundation (Nos. ECCS-0621924 and ECCS-0901562) and the Intelligent Systems Center.

Travis DIERKS received his B.S. and M.S. degrees in Electrical Engineering and his Ph.D. degree from the Missouri University of Science and Technology (formerly the University of Missouri-Rolla), Rolla, in 2005, 2007, and 2009, respectively. While completing his doctoral degree, he was a GAANN fellow and a Chancellor’s fellow. His research interests include nonlinear control, optimal control, neural network control, and the control and coordination of autonomous ground and aerial vehicles. He is currently with DRS Sustainment Systems, Inc., St. Louis, MO.

Sarangapani JAGANNATHAN received his Ph.D. degree in Electrical Engineering from the University of Texas at Arlington in 1994. From 1994 to 1998, he worked as a consultant in the Systems and Controls Research Division of Caterpillar Inc., Peoria; from 1998 to 2001, he was an assistant professor at the University of Texas at San Antonio. Since September 2001, he has been at the Missouri University of Science and Technology (formerly the University of Missouri-Rolla), where he is currently a Rutledge-Emerson Distinguished Professor and Site Director for the NSF Industry/University Cooperative Research Center on Intelligent Maintenance Systems. He has coauthored over 87 peer-reviewed journal articles, 175 refereed IEEE conference articles, several book chapters, and three books: ‘Neural Network Control of Robot Manipulators and Nonlinear Systems’ (Taylor & Francis, London, 1999), ‘Neural Network Control of Nonlinear Discrete-time Systems’ (CRC Press, April 2006), and ‘Wireless Ad Hoc and Sensor Networks: Performance, Protocols and Control’ (CRC Press, April 2007). He also holds 18 patents. His research interests include adaptive and neural network control, computer/communication/sensor networks, prognostics, and autonomous systems/robotics.


About this article

Cite this article

Dierks, T., Jagannathan, S. Online optimal control of nonlinear discrete-time systems using approximate dynamic programming. J. Control Theory Appl. 9, 361–369 (2011). https://doi.org/10.1007/s11768-011-0178-0

