Abstract

Multivariate location and scatter matrix estimation is a cornerstone of multivariate data analysis. We consider this problem when the data may contain independent cellwise and casewise outliers. Flat data sets with a large number of variables and a relatively small number of cases are commonplace in modern statistical applications. In these cases, global down-weighting of an entire case, as performed by traditional robust procedures, may lead to poor results. We highlight the need for a new generation of robust estimators that can efficiently deal with cellwise outliers and at the same time show good performance under casewise outliers.

References

Alqallaf F, Van Aelst S, Yohai VJ, Zamar RH (2009) Propagation of outliers in multivariate data. Ann Stat 37(1):311–331

Alqallaf FA, Konis KP, Martin RD, Zamar RH (2002) Scalable robust covariance and correlation estimates for data mining. In: Proceedings of the eighth ACM SIGKDD international conference on knowledge discovery and data mining, KDD ’02, pp 14–23. doi:10.1145/775047.775050

Danilov M (2010) Robust estimation of multivariate scatter under non-affine equivariant scenarios. Dissertation, University of British Columbia

Danilov M, Yohai VJ, Zamar RH (2012) Robust estimation of multivariate location and scatter in the presence of missing data. J Am Stat Assoc 107:1178–1186

Davies P (1987) Asymptotic behaviour of S-estimators of multivariate location parameters and dispersion matrices. Ann Stat 15:1269–1292

Donoho DL (1982) Breakdown properties of multivariate location estimators. Dissertation, Harvard University

Farcomeni A (2014) Robust constrained clustering in presence of entry-wise outliers. Technometrics 56:102–111

Gervini D, Yohai VJ (2002) A class of robust and fully efficient regression estimators. Ann Stat 30(2):583–616

Huber PJ, Ronchetti EM (1981) Robust statistics, 2nd edn. Wiley, New Jersey

Hubert M, Rousseeuw PJ, Vakili K (2014) Shape bias of robust covariance estimators: an empirical study. Stat Pap 55:15–28

Maronna RA, Martin RD, Yohai VJ (2006) Robust statistics: theory and methods. Wiley, Chichester

Rousseeuw PJ (1985) Multivariate estimation with high breakdown point. In: Grossmann W, Pflug G, Vincze I, Wertz W (eds) Mathematical statistics and applications, vol B. Reidel Publishing Company, Dordrecht, pp 256–272

Rousseeuw PJ, Van Driessen K (1999) A fast algorithm for the minimum covariance determinant estimator. Technometrics 41:212–223

Salibian-Barrera M, Yohai VJ (2006) A fast algorithm for S-regression estimates. J Comput Gr Stat 15(2):414–427

Smith RE, Campbell NA, Litchfield A (1984) Multivariate statistical techniques applied to pisolitic laterite geochemistry at Golden Grove, Western Australia. J Geochem Explor 22:193–216

Stahel WA (1981) Breakdown of covariance estimators. Tech. Rep. 31, Fachgruppe für Statistik, ETH Zürich, Switzerland

Stahel WA, Maechler M (2009) Comment on “invariant co-ordinate selection”. J R Stat Soc Ser B Stat Methodol 71:584–586

Tatsuoka KS, Tyler DE (2000) On the uniqueness of S-functionals and M-functionals under nonelliptical distributions. Ann Stat 28:1219–1243

Van Aelst S, Vandervieren E, Willems G (2012) A Stahel–Donoho estimator based on huberized outlyingness. Comput Stat Data Anal 56:531–542

Yohai VJ (1985) High breakdown point and high efficiency robust estimates for regression. Tech. Rep. 66, Department of Statistics, University of Washington. Available: http://www.stat.washington.edu/research/reports/1985/tr066.pdf

Acknowledgments

The authors thank the anonymous reviewers for their thoughtful comments and suggestions, which led to an improved version of the paper. Victor Yohai's research was partially supported by Grants W276 from Universidad de Buenos Aires, PIP 112-2008-01-00216 and 112-2011-01-00339 from CONICET, and PICT2011-0397 from ANPCYT, Argentina. The research of Ruben Zamar and Andy Leung was partially funded by the Natural Sciences and Engineering Research Council of Canada.

Additional information

This invited paper is discussed in comments available at: doi:10.1007/s11749-015-0451-5; doi:10.1007/s11749-015-0452-4; doi:10.1007/s11749-015-0453-3; doi:10.1007/s11749-015-0454-2; doi:10.1007/s11749-015-0455-1; doi:10.1007/s11749-015-0456-0.

Appendix: Proofs

1.1 Proof of Proposition 2.1

Let \(\widehat{F}_n^+\) be the empirical distribution of \(|Z|\), and let \(\widehat{Z}\) be defined by replacing \(\mu \) and \(\sigma \) with \(T_{0n}\) and \(S_{0n}\), respectively, in the definition of Z.

Note that

where \(\widehat{A} \rightarrow 0\) a.s. and \(\widehat{B} \rightarrow 0\) a.s. By the uniform continuity of \(F^+\), given \(\varepsilon >0\), there exists \(\delta > 0\) such that \(|F^+(z(1-\delta ) - \delta )-F^+(z)|\le \varepsilon /2\). With probability one there exists \(n_1\) such that \(n\ge n_1\) implies \(|\widehat{A}|<\delta \) and \(|\widehat{B}|<\delta \). By the Glivenko–Cantelli Theorem, with probability one there exists \(n_2\) such that \(n\ge n_2\) implies that \(\sup _{z}|\widehat{F}_n^+(z) -F^+(z)|\le \varepsilon /2\). Let \(n_3=\max (n_1,n_2)\); then \(n\ge n_3\) implies

and then

This implies that \(n_{0}/n\rightarrow 0\) a.s.
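As an informal numerical illustration of Proposition 2.1 (not part of the proof), the following Python sketch simulates the flagged proportion \(n_{0}/n\) for clean Gaussian data. The definitions are not restated in this appendix, so the sketch rests on several assumptions: \(F^+\) is taken to be the half-normal distribution, \(T_{0n}\) and \(S_{0n}\) are taken to be the median and the normalized MAD, and the filter is taken to flag \(n_0=\lfloor n d_n\rfloor \) cells with \(d_n=\sup _{z\ge \eta }\{F^+(z)-\widehat{F}_n^+(z^-)\}^+\) and \(\eta \) the 0.95 quantile of \(F^+\). The function name, the seed and the sample sizes are hypothetical.

```python
import math
import random
from statistics import NormalDist, median

STD_NORMAL = NormalDist()

def flagged_fraction(x, tail_prob=0.95):
    """Return n0/n for a univariate sample under the ASSUMED filter rule:
    n0 = floor(n * d_n), d_n = sup_{z >= eta} (F+(z) - Fn+(z-))^+,
    with F+ the half-normal distribution function."""
    n = len(x)
    # eta is the tail_prob quantile of |N(0, 1)|
    eta = STD_NORMAL.inv_cdf((1.0 + tail_prob) / 2.0)
    # Assumed initial estimators: median and normalized MAD
    t0 = median(x)
    s0 = 1.4826 * median([abs(v - t0) for v in x])
    z = sorted(abs(v - t0) / s0 for v in x)
    d_n = 0.0
    for i, zi in enumerate(z):
        if zi >= eta:
            f_plus = 2.0 * STD_NORMAL.cdf(zi) - 1.0
            # i / n is the empirical distribution just before the i-th order statistic
            d_n = max(d_n, f_plus - i / n)
    return math.floor(n * d_n) / n

rng = random.Random(1)  # arbitrary seed, for reproducibility only
for n in (200, 2000, 20000):
    sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
    print(n, flagged_fraction(sample))
```

Under these assumptions the printed proportions should shrink as n grows, in line with \(n_{0}/n\rightarrow 0\) a.s.; a single simulated path is of course only suggestive.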

1.2 Proof of Theorem 3.1

We need the following Lemma proved in Yohai (1985).

Lemma 7.1

Let \(\{\mathbf {Z}_{i}\}\) be i.i.d. random vectors taking values in \(\mathbb {R}^{k}\), with common distribution Q. Let \(f:\mathbb {R}^{k}\times \mathbb {R}^{h}\rightarrow \mathbb {R}\) be a continuous function and assume that for some \(\delta >0\) we have that

Then, if \(\widehat{\lambda }_{n}\rightarrow \lambda _{0}\) a.s., we have

Proof of Theorem 3.1

Define

We drop \({\mathbb {X}}\) and \({\mathbb {U}}\) from the arguments to simplify the notation. Since \(s_{GS}(\varvec{\mu },\lambda \varvec{\Sigma },\varvec{\widehat{\Omega }})=s_{GS}(\varvec{\mu },\varvec{\Sigma },\varvec{\widehat{\Omega }})\), to prove Theorem 3.1 it is enough to show

(a) $$\begin{aligned} (\varvec{\widehat{\mu }}_{GS},\ \widetilde{\varvec{\Sigma }}_{GS} )\rightarrow (\varvec{\mu }_{0},\varvec{\Sigma }_{00})\text { a.s.,} \quad \quad \text { and} \end{aligned}$$ (17)

(b) $$\begin{aligned} s_{GS}(\varvec{\widehat{\mu }}_{GS},\widetilde{\varvec{\Sigma } }_{GS},\widetilde{\varvec{\Sigma }}_{GS})\rightarrow \sigma _{0}\text { a.s.} \end{aligned}$$ (18)

Note that since we have

then part (i) of Lemma 6 in the Supplemental Material of Danilov et al. (2012) implies that given \(\varepsilon >0,\) there exists \(\delta > 0\) such that

where \(C_{\varepsilon }\) is a neighborhood of \((\varvec{\mu }_{0},\varvec{\Sigma }_{00})\) of radius \(\varepsilon \) and if A is a set, then \(A^{C}\) denotes its complement. In addition, by part (iii) of the same Lemma we have for any \(\delta > 0\),

Let

and

Now, if \(|\varvec{\Sigma }|=1\) and \(S=\sigma _{0}(1+\delta )/|\varvec{\widehat{\Omega }}|^{1/p}\), we have

We also have

and, therefore, by Assumption 3.4 we have

Similarly, we can prove that

and

Then, from (19) and (21)–(25) we get

Using similar arguments, from (20) we can prove

Equations (26)–(27) imply that

and

Therefore, with probability one there exists \(n_{0}\) such that for \(n>n_{0}\) we have \((\varvec{\widehat{\mu }}_{GS},\widetilde{\varvec{\Sigma }} _{GS})\in C_{\varepsilon }\). Since \(\varepsilon \) is arbitrary, \((\varvec{\widehat{\mu }} _{GS},\widetilde{\varvec{\Sigma }}_{GS})\rightarrow (\varvec{\mu } _{0},\varvec{\Sigma }_{00})\) a.s., proving (a).

Let

and

Since \(|\widetilde{\varvec{\Sigma }}_{GS}|=1\), we have that \(s_{GS}(\varvec{\widehat{\mu }}_{GS},\widetilde{\varvec{\Sigma }} _{GS},\widetilde{\varvec{\Sigma }}_{GS})\) is the solution in s of the following equation

Then, to prove (18) it is enough to show that for all \(\varepsilon >0\)

Using Assumption 3.4, to prove (29) it is enough to show

It is immediate that

and

Then, Eq. (30) follows from Lemma 7.1 and part (a). This proves (b).

1.3 Investigation of the performance under barrow wheel outliers

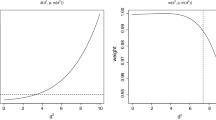

An anonymous referee suggested considering the performance of 2SGS under the barrow wheel contamination setting (Stahel and Maechler 2009; Hubert et al. 2014). We conduct a Monte Carlo study to compare the performance of 2SGS with three second-generation estimators when 5 and 10 % of the cases are outliers from the barrow wheel distribution. The data are generated using the R package robustX with default parameters. The three second-generation estimators are the fast minimum covariance determinant (MCD), the fast S-estimator (FS), and the S-estimator (S), described in Sect. 4. The sample size is \(n = 10\times p\), for \(p=10\) and 20. The results in terms of the LRT measure are displayed graphically in Fig. 3. The performance of 2SGS is comparable to that of the other estimators, which are designed to deal with casewise outliers such as those of the barrow wheel type.
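For readers who wish to reproduce a comparison of this kind, the following Python sketch computes a likelihood ratio test distance between an estimated scatter matrix and the true one. It assumes that the LRT measure is \(D(\widehat{\varvec{\Sigma }},\varvec{\Sigma }_{0})=\mathrm {trace}(\varvec{\Sigma }_{0}^{-1}\widehat{\varvec{\Sigma }})-\log |\varvec{\Sigma }_{0}^{-1}\widehat{\varvec{\Sigma }}|-p\), a definition that is not restated in this appendix. The helper names are hypothetical, and only the Python standard library is used.

```python
import math

def cholesky(a):
    """Lower-triangular Cholesky factor of a symmetric positive definite matrix."""
    p = len(a)
    l = [[0.0] * p for _ in range(p)]
    for i in range(p):
        for j in range(i + 1):
            s = a[i][j] - sum(l[i][k] * l[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    raise ValueError("matrix is not positive definite")
                l[i][j] = math.sqrt(s)
            else:
                l[i][j] = s / l[j][j]
    return l

def forward_solve(l, b):
    """Solve l y = b for a lower-triangular matrix l."""
    y = []
    for i in range(len(b)):
        y.append((b[i] - sum(l[i][k] * y[k] for k in range(i))) / l[i][i])
    return y

def lrt_distance(sigma_hat, sigma0):
    """ASSUMED LRT measure: trace(S0^{-1} S) - log det(S0^{-1} S) - p."""
    p = len(sigma0)
    l0 = cholesky(sigma0)
    lh = cholesky(sigma_hat)
    # trace(S0^{-1} S) equals the squared Frobenius norm of L0^{-1} Lh
    trace = 0.0
    for j in range(p):
        column = [lh[i][j] for i in range(p)]
        trace += sum(v * v for v in forward_solve(l0, column))
    # log det(S0^{-1} S) from the diagonals of the two Cholesky factors
    log_det = 2.0 * sum(math.log(lh[i][i]) - math.log(l0[i][i]) for i in range(p))
    return trace - log_det - p

sigma0 = [[2.0, 1.0], [1.0, 2.0]]
print(lrt_distance(sigma0, sigma0))  # zero up to rounding when the estimate is exact
```

The distance is zero when the estimate coincides with the true scatter matrix and positive otherwise, so smaller values indicate better performance.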

1.4 Timing experiment

Table 4 shows the mean time needed to compute 2SGS for data with cellwise or casewise outliers as described in Sect. 5. We also include the mean time needed to compute MVE-S for comparison. MVE-S is implemented in the R package rrcov, function CovSest, option method=“bisquare”. We consider 10 % contamination and several sample sizes and dimensions. We use the random correlation structures described in Sect. 4. For each pair of dimension and sample size, we average the computing times over 250 replications for each of the following setups: (a) cellwise contamination with k generated from U(0, 6) and (b) casewise contamination with k generated from U(0, 20). The computing times of 2SGS are comparatively longer in higher dimensions because GSE becomes more computationally intensive as the dimension and the fraction of cases affected by filtered cells increase.
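The growth of the fraction of affected cases with the dimension can be illustrated by a short calculation. Assume, for illustration only, that cells are contaminated independently with probability \(\varepsilon \) and that the filter flags exactly the contaminated cells. A case then contains at least one flagged cell with probability \(1-(1-\varepsilon )^{p}\). The function name below is hypothetical, and the dimensions \(p=10\) and 20 are chosen for illustration; they need not coincide with those of Table 4.

```python
def affected_case_fraction(eps, p):
    """Probability that a case has at least one contaminated cell,
    ASSUMING cells are contaminated independently with probability eps."""
    return 1.0 - (1.0 - eps) ** p

for p in (10, 20):
    print(p, round(affected_case_fraction(0.10, p), 3))
# prints "10 0.651" and then "20 0.878"
```

With 10 % cellwise contamination, roughly two thirds of the cases are affected when \(p=10\) and almost nine in ten when \(p=20\), so under these assumptions the share of incomplete cases passed to GSE rises quickly with p.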

Cite this article

Agostinelli, C., Leung, A., Yohai, V.J. et al. Robust estimation of multivariate location and scatter in the presence of cellwise and casewise contamination. TEST 24, 441–461 (2015). https://doi.org/10.1007/s11749-015-0450-6

DOI: https://doi.org/10.1007/s11749-015-0450-6