Abstract

An increasing number of scientists have recently raised concerns about the threat posed by human intervention on the evolution of parasites and disease agents. New parasites (including pathogens) keep emerging and parasites which previously were considered to be ‘under control’ are re-emerging, sometimes in highly virulent forms. This re-emergence may result from parasite evolution driven by human activity, including ecological changes related to modern agricultural practices. Intensive farming creates conditions for parasite growth and transmission drastically different from what parasites experience in wild host populations and may therefore alter selection on various traits, such as life-history traits and virulence. Although recent epidemic outbreaks highlight the risks associated with intensive farming practices, most work has focused on reducing the short-term economic losses imposed by parasites, for example through the application of chemotherapy. Most of the research on parasite evolution has been conducted using laboratory model systems, often unrelated to economically important systems. Here, we review the possible evolutionary consequences of intensive farming by relating current knowledge of the evolution of parasite life-history and virulence with specific conditions experienced by parasites on farms. We show that intensive farming practices are likely to select for fast-growing, early-transmitted, and hence probably more virulent parasites. As an illustration, we consider the case of the fish farming industry, a branch of intensive farming which has dramatically expanded recently, and present evidence that supports the idea that intensive farming conditions increase parasite virulence. We suggest that more studies should focus on the impact of intensive farming on parasite evolution in order to build currently lacking, but necessary bridges between academia and decision-makers.

Introduction

From the early expansion of human populations, and particularly with the Agricultural Revolution (about 10,000 years ago), human activity has resulted in increased diversity and severity of disease. This phenomenon is generally known as the ‘first epidemiological transition’ (Armelagos et al. 1991). The number of parasites that humans share with domestic animals appears to be proportional to the time spent since domestication (Southwood 1987). The ‘second epidemiological transition’ followed the Industrial Revolution and was characterised by a dramatic decrease in disease-induced mortality in human populations. We may now be facing the ‘third epidemiological transition’, where an increasing number of parasites (in the context of this review including pathogens) emerge or re-emerge. Even those parasites that previously were considered to be ‘under control’ can re-emerge, and sometimes in highly virulent forms (Barrett et al. 1998). This third epidemiological transition may be due to parasite evolution driven by human activity, including ecological changes related to modern agricultural practices (Stearns and Ebert 2001; Lebarbenchon et al. 2008).

Intensive farming of plants and animals creates conditions for parasite transmission and growth that are drastically different from conditions experienced by parasites in wild host populations. Therefore, intensive farming may alter selection on various traits, such as life-history traits and virulence. We focus our review on intensive animal farming (defined as practices involving large numbers of animals raised on limited land and which require large amounts of food, water and medical inputs), because several issues complicate plant parasite evolution, including spatial structure, crop rotation and aspects related to soil ecology (Kiers et al. 2002, 2007). Yet many of the concepts discussed herein apply to the evolution of plant parasites.

Farmed host populations are usually of very high local density (several orders of magnitude greater than in the wild), have reduced genetic variation (down to single host lines or genotypes) and are bred for high yield. Rapid evolution can occur in parasites infesting such populations, as illustrated by the repeated emergence and spread of drug resistance in a wide range of parasite species (Coles 2006; Hastings et al. 2006; Hastings and Watkins 2006; Read and Huijben 2009). But although recent epidemic outbreaks (e.g. avian influenza H5N1, foot-and-mouth disease, swine influenza H1N1) have highlighted the risks associated with intensive farming practices, most work on parasites has focused on reducing the short-term economic losses imposed by parasites, for example through the application of chemotherapy. Most research on parasite evolution has been conducted using laboratory model systems, often unrelated to economically important systems. Such research is of importance to evolutionary biologists, but is perhaps less relevant for determining farm management policies.

The aim of this review is to examine the possible evolutionary consequences of intensive farming by relating current knowledge of the evolution of parasite life-history and virulence with specific conditions experienced by parasites on farms. We outline two major changes in parasite ecology resulting from modern farming practices. First, there has been a recent worldwide spread of intensive farming providing very dense, rapidly expanding and well-connected (by human-assisted transport) host populations, which may favour the evolution of fast-reproducing parasites. Second, under intensive farming conditions, parasite populations are facing cycles of rapid growth followed by drastic declines (caused by either slaughtering of hosts or drug treatment). Such boost-and-bust cycles may select for fast-growing parasites in a way that is similar to serial passage experiments conducted in the laboratory. In this kind of experiment, parasites are transferred from one host to another under conditions that are expected to make the traits of interest evolve. At the end of the experiment, these traits are compared to those in the ancestral lines of parasites (Ebert 1998). Serial passage experiments often yield clear-cut conclusions on the evolution of parasite life-history. For example, shorter host lifespan or higher host availability is known to select for faster life-histories of parasites (Crossan et al. 2007; Paterson and Barber 2007; Nidelet et al. 2009). We then discuss how the predicted changes in parasite life-history may result in increased parasite virulence (parasite-induced host mortality). As an illustration, we examine the effects of fish farming which, in our view, provides one of the best frameworks for studying the impact of modern food production on the evolution of parasites.

Increased Host Density Selects for Faster Parasite Development

On intensive farms, animals are kept at very high densities all year round. In epidemiological theory, host population density has a central role since an increase in the number of hosts affects the probability for parasite transmission stages to contact new hosts (Anderson and May 1978; May and Anderson 1978). Mean parasite abundance should therefore increase with increasing host density, as has been observed for parasites of mammals (Arneberg et al. 1998).

Everything else being equal, the basic reproductive ratio (R0) of parasites increases with host density, so that dense host populations are easier to colonise (R0 > 1) for a higher number of parasite species and hence are likely to harbour higher parasite species richness (Dobson et al. 1992; Poulin and Morand 2004). This pattern has been observed both amongst fish (Morand et al. 2000) and mammals (Arneberg 2002). The proportion of hosts being infected with more than one parasite strain (multiple infections) should therefore be higher in dense populations.
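
The density argument can be illustrated with a minimal sketch. It assumes a textbook density-dependent transmission form, R0 = beta * N / (virulence + host mortality + recovery), which is an assumption made here for illustration and not a formula taken from the studies cited above. The three parasites and all parameter values are hypothetical.

```python
# Sketch: R0 under an assumed density-dependent transmission form.
# All parasite names and parameter values below are hypothetical.

def basic_reproductive_ratio(beta, host_density, virulence, host_mortality, recovery):
    """R0 = beta * N / (virulence + host_mortality + recovery)."""
    return beta * host_density / (virulence + host_mortality + recovery)

# Hypothetical parasites: (transmission coefficient, virulence, recovery rate)
PARASITES = {
    "parasite_A": (0.0010, 0.05, 0.10),
    "parasite_B": (0.0002, 0.02, 0.05),
    "parasite_C": (0.00005, 0.01, 0.20),
}
HOST_MORTALITY = 0.01  # background host death rate, same for all parasites


def species_able_to_invade(host_density):
    """Names of the parasites whose R0 exceeds 1 at the given host density."""
    return [
        name
        for name, (beta, virulence, recovery) in PARASITES.items()
        if basic_reproductive_ratio(
            beta, host_density, virulence, HOST_MORTALITY, recovery
        ) > 1
    ]


for density in (100, 1000, 10000):
    print(density, species_able_to_invade(density))
```

Under these made-up values, no parasite can invade the sparsest host population, whereas all three can invade the densest one, which is the qualitative pattern described above.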

Such ecological effects of increased host density could also have evolutionary consequences. Central to the theory of life-history evolution is the idea that organisms trade off fecundity against mortality in a way that maximises their lifetime reproductive success (Stearns 1992; Roff 2002). An expanding host population will represent an increased resource base for the parasite population, and this will make current reproduction more valuable in relation to future survival. This should select for a faster parasite development and higher investment in early reproduction. This phenomenon was experimentally observed with the entomopathogenic nematode Steinernema feltiae. Over generations, larvae of this parasite became infective earlier when host availability was experimentally increased. In addition, early infectivity was traded-off against larval longevity. As confirmed by a simulation model, the optimal infection strategy in this system depends on the balance between infectivity and survival until successful contact with new hosts (Crossan et al. 2007). A higher frequency of multiple infections has also often been suggested to favour faster growth of parasites, and hence a change in life history towards earlier reproduction, due to increased within-host competition (May and Nowak 1995; Ebert and Mangin 1997; Gandon et al. 2001a).

The structuring of host populations has also changed, sometimes drastically, with intensive farming. Some host populations have become more clustered: whilst animals within the same farm are in close contact with each other, amongst-farm contacts are relatively rare, most of them being indirect through the supply of new, young individuals coming from common stocks (e.g. farmed salmonids, Munro and Gregory 2009). A high degree of clustering may, however, not be the case for all types of farms. For example, the 2001 outbreak of foot-and-mouth disease in the UK highlighted the importance of amongst-farm contacts in the spread of the disease. The restrictions then imposed on animal transport (thus increasing the degree of clustering) were still insufficient in preventing the epidemic, which justified the preventive culling of neighbouring uninfected farms around infected spots (Ferguson et al. 2001; Keeling et al. 2001).

Host population structuring was rarely considered in former epidemiological models (Grenfell and Dobson 1995), but is now increasingly recognised as an important factor affecting disease transmission (Read and Keeling 2003; Keeling and Eames 2005; Webb et al. 2007; Dangerfield et al. 2009). In the early stages of an epidemic, a high degree of clustering is predicted to increase the spread of infection (basic reproductive ratio R0) because it makes susceptible hosts more easily available to infective stages. In the second and subsequent generations, because of increased immunity in previously infected hosts, clustering may reduce the number of new contacts and may therefore have the opposite effect on R0 (Keeling 2005). However, not all host taxa have induced immunity.

On some types of intensive farms where a high degree of clustering is combined with the regular arrival of new susceptible individuals (e.g. Munro and Gregory 2009), the first positive effect of clustering on transmission rate may be predominant. In this case, as discussed earlier, increased transmission should select for faster parasite life-histories. But here again, selection for faster life-histories related to host population clustering should not be taken as a general consequence of all intensive farming practices. In other types of farms, clustering is reduced by increased animal transportation. This discussion makes clear that the effect of host population structure on parasite life-histories is important, but that it depends on the parameters of each host-parasite system and on management practices.

Shorter Host and Parasite Lifespan

For parasites, any increase in adult mortality, caused by either intrinsic factors typical of host-parasite interactions or extrinsic factors such as host death or anti-parasite drugs, reduces the prospects for future transmission. Hence, increased mortality should select for faster within-host growth, earlier onset of reproduction and increased investment in early reproduction.

Comparative studies have investigated the links between parasite life history and host longevity. In parasitic nematodes, time to release of transmission stages (prepatency) is correlated with female body size (Skorping et al. 1991). Amongst nematode parasites of primates (e.g. oxyurids), there is a positive association between female body length and longevity of their primate hosts, regardless of phylogeny or host body size. In other words, parasite species associated with shorter-lived host species mature on average earlier than those associated with longer-lived host species (Harvey and Keymer 1991; Sorci et al. 1997).

Additionally, formal models have explored life-history trade-offs in parasites from a theoretical perspective. For example, a simple optimality model where delayed reproduction had opposite consequences for fecundity and pre-reproductive survival could explain about 50% of the observed variation in nematode prepatency (Gemmill et al. 1999). Considering that this model made quite crude assumptions and did not explicitly consider host mortality, this result suggests that the fecundity/mortality trade-off is a major determinant of parasite life-history. In another model where mortality could be caused either by intrinsic mortality or by host death, optimal time to patency was found to be inversely related to both types of mortality (Morand and Poulin 2000). Finally, in the particular case of obligately killing parasites (i.e. parasites where transmission requires the death of the host), life-history was modelled assuming density-dependence of within-host growth. Here again, the higher the host background mortality, the earlier the optimal time for killing the host (Ebert and Weisser 1997).
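
The logic shared by these optimality models can be sketched as follows. This is a deliberately simplified toy model and not a reimplementation of any of the cited models: it assumes that fecundity at maturity grows as a power of the age at maturity, that pre-reproductive survival declines exponentially, and that intrinsic mortality and host death simply add up. The exponent and the mortality rates are hypothetical. Under these assumptions the optimum is analytically equal to the exponent divided by the total mortality rate, so doubling mortality halves the optimal prepatency.

```python
import math


def expected_output(age_at_maturity, total_mortality, size_exponent=2.0):
    """Survival to maturity times fecundity at maturity (assumed functional forms)."""
    survival = math.exp(-total_mortality * age_at_maturity)
    fecundity = age_at_maturity ** size_exponent
    return survival * fecundity


def optimal_prepatency(intrinsic_mortality, host_mortality, size_exponent=2.0):
    """Grid search for the age at maturity (days) that maximises expected output."""
    total = intrinsic_mortality + host_mortality
    ages = [d / 10 for d in range(1, 5001)]  # 0.1 to 500 days in 0.1-day steps
    return max(ages, key=lambda t: expected_output(t, total, size_exponent))


# Hypothetical daily mortality rates: host death is rarer in the wild than on farms.
wild = optimal_prepatency(intrinsic_mortality=0.02, host_mortality=0.005)
farmed = optimal_prepatency(intrinsic_mortality=0.02, host_mortality=0.03)
print(f"optimal prepatency, wild hosts:   {wild:.1f} days")
print(f"optimal prepatency, farmed hosts: {farmed:.1f} days")
```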

These theoretical predictions are supported by experimental studies specifically designed to test the effect of host lifespan on parasite life history. The most clear-cut example comes from a recent study of Holospora undulata, a bacterial parasite of the ciliate Paramecium caudatum. In an experimental evolution study where hosts were killed either early or late, parasites from early-killing treatment lines had a shorter latency (time until the release of infectious forms) than parasites from late-killing treatment lines (Nidelet et al. 2009). Interestingly, a similar experiment using the horizontally transmitted intestinal parasite Glugoides intestinalis of the crustacean Daphnia magna yielded opposite results: within-host growth was found to be lower in the lines where the parasites had low life expectancy (Ebert and Mangin 1997). However, in the latter experiment, high mortality was achieved by weekly replacing 70–80% of the hosts, whereas low mortality consisted in no replacement at all. As a consequence, the frequency of multiple infections—and hence within-host competition—was probably higher in the low-mortality lines. As later formally confirmed, within-host competition selects for earlier parasite life-history (Gandon et al. 2001a).
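
The expected outcome of such an early-killing versus late-killing design can be sketched with a small deterministic selection model. It is only an illustration under stated assumptions and does not reproduce the protocol or data of the experiments cited above: strains differ only in latency, the release rate of infectious forms is assumed to scale with latency, release stops when the host is killed, and strain frequencies are updated in proportion to output at each passage. All numbers are hypothetical.

```python
def transmission_output(latency, kill_time):
    """Infectious forms released before the host is killed.

    Assumption: release rate scales with latency (more within-host growth),
    and release lasts from the end of latency until the host is killed.
    """
    return latency * max(kill_time - latency, 0.0)


def evolve_mean_latency(kill_time, passages=30):
    """Mean latency (days) after repeated passages under a fixed killing time."""
    latencies = [float(d) for d in range(1, 15)]     # candidate strains, 1 to 14 days
    freqs = [1.0 / len(latencies)] * len(latencies)  # equal starting frequencies
    for _ in range(passages):
        weighted = [
            f * transmission_output(lat, kill_time)
            for f, lat in zip(freqs, latencies)
        ]
        total = sum(weighted)
        freqs = [w / total for w in weighted]        # frequencies in the next passage
    return sum(f * lat for f, lat in zip(freqs, latencies))


early = evolve_mean_latency(kill_time=7)    # hosts killed early
late = evolve_mean_latency(kill_time=14)    # hosts killed late
print(f"mean latency, early killing: {early:.2f} days")
print(f"mean latency, late killing:  {late:.2f} days")
```

In this toy setting the early-killing lines converge on a shorter mean latency than the late-killing lines. The sketch ignores multiple infections, which is precisely the factor invoked above to explain the opposite result in the Daphnia system.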

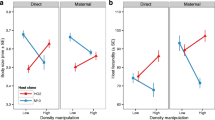

Adult survival of parasites is reduced on intensive farms and there are two main reasons for this. First, host lifespan is usually shorter than in wild host populations because of frequent culling of animals. Second, parasites experience high direct mortality caused by anti-parasite treatments (e.g. antibiotic treatments). According to theory, such reductions in adult survival should favour higher investment in current reproductive effort (Fig. 1), and hence, earlier parasite life-histories. Therefore, frequent use of drugs by the farming industry not only selects for drug resistance (Coles 2006; Hastings et al. 2006; Hastings and Watkins 2006; Read and Huijben 2009), but is also likely to exacerbate selection for faster growth and transmission of parasites (Webster et al. 2008).

Fig. 1 Graphical model for the optimal reproductive effort of parasites in wild and farmed host populations in the presence of a trade-off between current and future reproductive effort. With lower adult survival of parasites in farmed host populations, prospects for future reproduction are reduced (the trade-off curve is lower). The optimal level of current reproductive effort is found at the point where the trade-off curve is tangent to a line (the adaptive function) going through all combinations of both variables that result in the same fitness (e.g. Cody 1966), here a 45-degree line. Farming conditions are therefore predicted to select for higher levels of current reproductive effort.
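
The graphical argument of Fig. 1 can be sketched numerically. The concave shape of the trade-off curve used below (future reproduction equal to adult survival times one minus the square of current effort) and the two survival values are assumptions chosen for illustration; they are not taken from the figure. Fitness is taken as the sum of current and future reproduction, so that lines of equal fitness have a slope of -1, as for the 45-degree adaptive function.

```python
def future_reproduction(current_effort, adult_survival):
    """Assumed concave trade-off curve, scaled down when adult survival is lower."""
    return adult_survival * (1.0 - current_effort ** 2)


def optimal_current_effort(adult_survival, steps=1000):
    """Current effort (0 to 1) maximising current plus future reproduction.

    At the optimum the trade-off curve has slope -1, i.e. it is tangent
    to a 45-degree line of equal fitness.
    """
    efforts = [i / steps for i in range(steps + 1)]
    return max(efforts, key=lambda e: e + future_reproduction(e, adult_survival))


# Hypothetical adult survival of parasites in wild and farmed host populations.
wild = optimal_current_effort(adult_survival=0.9)
farmed = optimal_current_effort(adult_survival=0.6)
print(f"optimal current effort, wild:   {wild:.3f}")
print(f"optimal current effort, farmed: {farmed:.3f}")
```

With this particular curve the optimum is 1 / (2 * adult survival), so lowering adult survival raises the optimal current effort, in line with the prediction for farmed populations.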

Parasite Life History and Virulence

A currently widely accepted view is that virulence (parasite-induced host mortality) is both a consequence of parasite transmission and an obstacle to it and that there should be an optimal, intermediate level of virulence (Jensen et al. 2006). On the one hand, a minimum level of host exploitation is necessary for the production of propagules but, on the other hand, increasing host mortality reduces parasite fitness, so that there is an adaptive cost to virulence (reviewed in Alizon et al. 2009, but see Ebert and Bull 2003). However, the higher the host availability, the smaller the predicted cost of virulence. Therefore, very high host densities on intensive farms are likely to favour higher levels of virulence, because the constraints due to the cost of virulence are relaxed. In addition, the use of vaccines reducing pathogen growth and/or toxicity may also reduce the cost of virulence by reducing the risk of host death, and hence select for higher virulence; this may, however, not apply to other types of vaccines such as infection-blocking vaccines (Gandon et al. 2001b).

As discussed earlier, intensive farming is likely to select for faster within-host growth and earlier transmission of parasites. Although the generality of a relation between parasite life-history and virulence is still debated (Ebert and Bull 2003; Alizon et al. 2009), empirical results from a variety of host-parasite systems suggest that selection for early transmission can result in increased virulence. For example, experimental selection for early transmission resulted in higher virulence of the nuclear polyhedrosis virus of the gypsy moth Lymantria dispar (Cooper et al. 2002). Similar results were obtained in the protozoan Paramecium caudatum and its bacterial parasite Holospora undulata (Nidelet et al. 2009). In humans the reduction in host lifespan due to HIV coinfection has been suggested to select for higher virulence of tuberculosis (Basu and Galvani 2009). However, a selective change in parasite life history towards earlier reproduction does not necessarily imply higher virulence. Increased virulence might be expected if the extraction rate of host resources is enhanced or if early parasite reproduction is otherwise linked to increased virulence (e.g. in parasites which need to kill for transmission). But parasites might also trade off quality versus quantity of offspring, e.g. by producing more and cheaper transmission stages earlier (without extracting more host resources). Low quality transmission stages may not be able to survive long in the external environment, but this may not be necessary in high density host populations (Bonhoeffer et al. 1996).

Multiple infections have often been suggested to favour faster growth of parasites and higher levels of virulence (May and Nowak 1995; Ebert and Mangin 1997; Gandon et al. 2001a) because in hosts infected by more than one strain (or species) of parasite, there is little reproductive benefit for the parasite in sparing its host if it allows other unrelated parasites to compete for the same resource (within-host selection). However, excessively high levels of virulence are costly in singly infected hosts, so that the benefits of increased virulence also depend on the frequency of multiply infected hosts in the population (between-host selection) (de Roode et al. 2005; Coombs et al. 2007; Alizon et al. 2009). Another factor tempering selection for high virulence in multiply infected hosts is the level of relatedness between co-infecting strains. Low levels of virulence can be expected when strains within the same host are closely related (Brown et al. 2002; Wild et al. 2009).

Classical epidemiological models show that increasing extrinsic host mortality can lead to higher levels of parasite virulence in the absence of superinfection (a particular case of multiple infections where one strain replaces the other inside the host) (Gandon et al. 2001a; Day 2003; Best et al. 2009). There are two cases, however, where virulence might decrease with increasing host mortality. First, when mortality causes reduction in the density of infected hosts, the rate of superinfection decreases and, as a consequence, lower within-host competition may in turn select for lower virulence (Gandon et al. 2001a). This effect is probably of little importance in most farmed host populations, where the density of infected hosts is likely to be higher than in wild host populations. Virulence can also decrease with increasing extrinsic host mortality when it makes a host more susceptible to other sources of mortality (e.g. predation), because then parasites with low virulence have greater chances of survival (Williams and Day 2001). This scenario may apply to some extent to farming conditions, for example when diseased fish are removed from culture cages. But the opposite scenario may also occur if diseased animals are preferably excluded from slaughtering (and left for recovery). Here, more detailed data on specific farming management practices are needed to predict how human-induced selection is acting on parasites.
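
The classical prediction stated at the start of the preceding paragraph can be sketched with a minimal trade-off model. It assumes no superinfection, a transmission rate that rises as the square root of virulence, and an R0 of the form transmission * density / (host mortality + virulence + recovery). These functional forms and all parameter values are hypothetical choices made for illustration, not those of the cited models. With this particular shape the optimum is analytically equal to host mortality plus recovery rate.

```python
import math


def r0(virulence, host_mortality, recovery=0.05, density=1000.0, scale=0.001):
    """R0 when transmission rises as sqrt(virulence) (assumed trade-off shape)."""
    transmission = scale * math.sqrt(virulence)
    return transmission * density / (host_mortality + virulence + recovery)


def optimal_virulence(host_mortality, recovery=0.05):
    """Grid search for the virulence that maximises R0 (no superinfection)."""
    grid = [i / 1000 for i in range(1, 2001)]  # 0.001 to 2.0
    return max(grid, key=lambda a: r0(a, host_mortality, recovery))


# Hypothetical extrinsic host mortality rates: low (wild) versus high (culling).
low = optimal_virulence(host_mortality=0.01)
high = optimal_virulence(host_mortality=0.10)
print(f"optimal virulence, low host mortality:  {low:.3f}")
print(f"optimal virulence, high host mortality: {high:.3f}")
```

The optimum is intermediate in both cases and shifts upwards when extrinsic host mortality increases. The two exceptions discussed above (reduced superinfection, and mortality that falls preferentially on diseased hosts) are outside the scope of this sketch.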

A further difference from natural host populations that may be significant to parasite virulence is the often low level of genetic variation in farmed host populations, which may favour the spread of diseases (Altermatt and Ebert 2008; Ganz and Ebert 2010). Additionally, farmed animal lines are selected for rapid growth and high yield, but usually not for resistance to parasites. More importantly perhaps, host genotypes are sometimes replaced after slaughtering by individuals from the same common stock (e.g. common salmon smolt production sites; Munro and Gregory 2009), independently of the infection status of individuals in the preceding generation, so that selection can no longer act on host traits involved in immune defence. In these conditions, parasites would keep adapting to counter host immune defences, whereas hosts would be left more and more susceptible to parasitic infection. However, the opposite may also happen. Replacing hosts after each slaughtering with individuals coming from a different stock than the previous one may hamper local adaptation of parasites and therefore slow down their evolution (Ebert and Hamilton 1996; Ebert 1998). Finally, animal movements due to human transportation, sometimes at an intercontinental scale, will greatly increase the effective population sizes and mix host and parasite genotypes, thereby altering rates of local adaptation and maladaptation between hosts and parasites.

More detailed knowledge of host and parasite demography is necessary to accurately predict the direction and extent to which virulence evolves in farmed populations. Quantitative data about parasite prevalence, mortality due to parasitic infection, replacement rates and management of genetic variation in farmed host populations may exist but, unfortunately, such information is not easily accessible from farming industries. However, even in the absence of detailed information, the prevailing conditions on farms (high density, use of chemotherapy, high replacement rate and low levels of innate immunity in highly selected lines) are likely to increase the degree to which parasites exploit their hosts and thus lead to increased virulence.

Fish Farming and Parasite Evolution

Amongst the different types of intensive farming, fish farming has increased the most rapidly in the last decades. The farmed fish population has increased 100-fold globally in 60 years: from 320,000 tons in 1950, aquaculture fish production reached 32,000,000 tons in 2007 (FAO 2008; Fig. 2). For migrating marine fish species, this enormous increase in population size is associated with another change: year-round presence of fish in coastal seawater, which provides a highly predictable resource for parasites. Altogether, these recent changes in fish ecology can be considered a large-scale natural experiment providing good conditions to study parasite evolution (Skorping and Read 1998), especially because many farmed fish are still genetically close to their wild conspecifics, which makes comparisons easier than in other, older farmed animal species.
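
The production figures quoted above can be checked with a few lines of arithmetic. The average annual growth rate computed here assumes constant exponential growth between the two dates, which is a simplification and not a figure reported in the source.

```python
# Aquaculture fish production figures as quoted in the text (FAO 2008).
production_1950 = 320_000       # tons
production_2007 = 32_000_000    # tons
years = 2007 - 1950

fold_increase = production_2007 / production_1950

# Assumption: constant exponential growth over the whole period.
annual_growth = fold_increase ** (1 / years) - 1

print(f"{fold_increase:.0f}-fold increase over {years} years")
print(f"implied average growth: {annual_growth:.1%} per year")
```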

The most compelling evidence for increased pathogen virulence on intensive farms comes from a recent study of Flavobacterium columnare, a pathogen of salmon smolts (Salmo salar), trout smolts (Salmo trutta) and rainbow trout (Oncorhynchus mykiss). In the last 23 years, the incidence, severity of symptoms and mortality of this pathogen have steadily increased in Finnish freshwater farms. Antibiotic treatments that were increasingly used from 1992 onwards failed to control mortality. Rearing conditions on farms, coupled with the ability of the pathogen to be transmitted from dead to live fish have likely favoured the emergence and spread of increasingly virulent strains (Pulkkinen et al. 2010).

Faster life history and increased investment in early reproduction are likely to be evolving in other farmed fish-parasite systems. In particular, the ecology of the Atlantic salmon Salmo salar, one of the most abundant farmed fish worldwide (FAO 2008), has radically changed since the beginning of intensive farming (Gross 1998). On salmon farms, parasitic infestations have been increasingly reported and disease epidemics have become a major problem. Epidemiological factors such as higher host density and lower host diversity both facilitate the spread of diseases (Grenfell and Dobson 1995; Altermatt and Ebert 2008) and can therefore be expected to select for faster life history of parasites. Unfortunately, little evidence is available so far to support this claim since most work on parasites of farmed salmon did not focus on life history but rather on drug resistance and vaccine development. However, we will discuss here the possibility that life-history and virulence may be evolving in two major parasites and pathogens of farmed salmon which are causing major economic losses in addition to the threat they impose on wild salmonid stocks.

The salmon louse Lepeophtheirus salmonis is a natural marine ectoparasite of salmonids that was first reported in the nineteenth century, but salmon louse epidemics did not occur until after cage culture began, in the 1960s in Norway, in the 1970s in Scotland and in the 1980s in North America. Soon after the first outbreaks, organophosphates were used to control sea lice populations, and national sea lice monitoring programs were initiated to help reduce the costs to the farming industry (reviewed in Pike and Wadsworth 2000). However, salmon lice have rapidly evolved resistance to widely used chemotherapeutants such as hydrogen peroxide, dichlorvos and emamectin benzoate (Treasurer et al. 2000; Fallang et al. 2004; Lees et al. 2008). L. salmonis is now a major problem wherever salmon is farmed in the Northern hemisphere. The life cycle of salmon lice consists of eight successive developmental stages. After infection, copepodid larvae develop into four successive chalimus stages attached to the host’s skin by a frontal filament before moulting into two pre-adult stages that are mobile on the fish. Adult salmon lice feed on the mucus, skin and blood of the fish. Heavily infested salmon often have severe skin wounds causing osmoregulatory stress, reduced weight gain and an increased risk of secondary infections by other pathogens (Pike and Wadsworth 2000). Soon after mating, adult female lice start extruding fertilized eggs enclosed in a matrix that binds the eggs together in egg strings. Egg strings remain attached to the female until hatching but are functionally independent (Pike and Wadsworth 2000). Female lice keep producing eggs (up to 11 successive pairs of egg strings) at regular, temperature-dependent intervals for the rest of their lifespan (Heuch et al. 2000). Age at maturity and both the number and volume of eggs produced in each pair of egg strings, especially in the first pair, are quite variable (A. Mennerat, pers. obs.). There is therefore potential for evolution of these life history traits, and indeed preliminary data (A. Mennerat, unpublished data) indicate that salmon lice coming from an intensively farmed area of the Norwegian coast reproduce significantly earlier on average than lice coming from an area without salmon farming, which tends to support the theoretical predictions discussed earlier.

Another pathogen causing major economic loss for salmon farms is the infectious salmon anaemia (ISA) virus. ISA virus is an orthomyxovirus comprising two distinct clades, one European and one North American, that diverged before 1900 (Krossoy et al. 2001). This divergence indicates that an ancestral form of the virus was present in wild salmonids before the start of cage culture. Accumulating evidence points to vertical transmission (parent-to-offspring transmission) as the major transmission route of the virus. Horizontal transmission (transmission between members of the same species that are not in a parent-offspring relationship) may also occur to a smaller extent (Nylund et al. 2007). The haemagglutinin-esterase (HE) surface glycoprotein, assumed to be of importance in virulence, contains a highly polymorphic region (HPR), the region of greatest sequence variation (Mjaaland et al. 2002). A full-length, avirulent precursor gene (HPR0) is commonly found in healthy marine and freshwater fish, where it has never been associated with any of the classical clinical signs of ISA disease. In particular, HPR0 variants were found in 22 of 24 sampled Norwegian smolt production sites, which indicates that most smolt imported from freshwater sites to marine cages carry ISA virus in its avirulent form (Nylund et al. 2007). The first outbreaks of ISA were recorded in 1984 on salmonid farms in Norway (Thorud and Djupvik 1988) and subsequently in Canada, Maine, Scotland, Faroe Islands and Chile (Ritchie et al. 2009). More than 20 pathogenic HPR variants have been described so far, probably resulting from differential deletions of the HPR0 avirulent precursor gene (Mjaaland et al. 2002). The emergence of these ISA virulent variants has been suggested to be a consequence of increased transmission from natural reservoirs to dense, farmed Atlantic salmon populations (Mjaaland et al. 2002; Nylund et al. 2003).

Overall, most variance in disease spread is likely to be explained by epidemiological changes. However, this should not distract us from the problems we create by inducing evolutionary changes in the parasites. The evolutionary response of the parasite often reduces the efficiency of our control measures, which may lead to a cultural-genetic arms race between farmers (cultural evolution: improve methods to control parasites) and parasites (adapt to new conditions). Such cultural-genetic arms races are well known from our attempts to control bacteria with the help of antibiotics, where nowadays the problem is considered to be an evolutionary issue. The salmon farming industry does not yet have the same problems as we see in drug resistance of human diseases, but the above examples suggest that life history and virulence of parasites and pathogens may be evolving, potentially rendering our attempts to manage these diseases useless. More detailed knowledge on the characteristics of each system and the traits responding to selection would help predict the direction and rate of such evolution.

Conclusion

Evolutionary biologists have recently raised concerns about the impact of human intervention on the evolution of parasites and disease agents (e.g. Palumbi 2001; Altizer et al. 2003; Lebarbenchon et al. 2008; Smith and Bernatchez 2008; Waples and Hendry 2008; Restif 2009). Long-term evolutionary effects of parasite management strategies have received little attention so far from the farming industry (Pike and Wadsworth 2000; Ebert and Bull 2003). Instead, the majority of research has focused on how to reduce economic losses caused by parasitic infestations of farmed animals and the proposed solutions are mostly driven by short-term motivations targeting the parasite directly or reducing transmission. The farming industry’s neglect of the evolutionary impact of intensive farming may simply reflect diverging interests (reducing the risk of virulence is of little financial interest), but it may also be due to the lack of empirical evidence.

However, we caution against drawing conclusions based on simplified models (Ebert and Bull 2003). Host-parasite systems are often characterised by idiosyncrasies, which may play an important role in the evolution of the system and which may lead to apparently counterintuitive outcomes. Therefore, before management plans are put into practice, we suggest investigating whether the host or the parasite population is already showing an evolutionary response to the altered environmental conditions typical of farming. With this knowledge at hand, refined models can be developed and the direction and rate of parasite evolution may be predicted for the particular epidemiological and demographic settings of the host-parasite association under consideration. In the long term, understanding parasite evolution and taking appropriate actions to prevent it may be the most successful and cost-effective way to control disease. Furthermore, using evolutionary principles to prevent or influence parasite evolution (as opposed to chemical parasite control) is likely to be in the interest of sustainable farming practice.

References

Alizon, S., Hurford, A., Mideo, N., & Van Baalen, M. (2009). Virulence evolution and the trade-off hypothesis: History, current state of affairs and the future. Journal of Evolutionary Biology, 22(2), 245–259.

Altermatt, F., & Ebert, D. (2008). Genetic diversity of Daphnia magna populations enhances resistance to parasites. Ecology Letters, 11(9), 918–928.

Altizer, S., Harvell, D., & Friedle, E. (2003). Rapid evolutionary dynamics and disease threats to biodiversity. Trends in Ecology & Evolution, 18(11), 589–596.

Anderson, R. M., & May, R. M. (1978). Regulation and stability of host-parasite population interactions. 1. Regulatory processes. Journal of Animal Ecology, 47(1), 219–247.

Armelagos, G. J., Goodman, A. H., & Jacobs, K. H. (1991). The origins of agriculture - Population growth during a period of declining health. Population and Environment, 13(1), 9–22.

Arneberg, P. (2002). Host population density and body mass as determinants of species richness in parasite communities: Comparative analyses of directly transmitted nematodes of mammals. Ecography, 25(1), 88–94.

Arneberg, P., Skorping, A., Grenfell, B., & Read, A. F. (1998). Host densities as determinants of abundance in parasite communities. Proceedings of the Royal Society of London Series B-Biological Sciences, 265(1403), 1283–1289.

Barrett, R., Kuzawa, C. W., McDade, T., & Armelagos, G. J. (1998). Emerging and re-emerging infectious diseases: The third epidemiologic transition. Annual Review of Anthropology, 27, 247–271.

Basu, S., & Galvani, A. P. (2009). The Evolution of Tuberculosis Virulence. Bulletin of Mathematical Biology, 71(5), 1073–1088.

Best, A., White, A., & Boots, M. (2009). The implications of coevolutionary dynamics to host-parasite interactions. American Naturalist, 173(6), 779–791.

Bonhoeffer, S., Lenski, R. E., & Ebert, D. (1996). The Curse of the Pharaoh: The Evolution of Virulence in Pathogens with Long Living Propagules. Proceedings: Biological Sciences, 263(1371), 715–721.

Brown, S. P., Hochberg, M. E., & Grenfell, B. T. (2002). Does multiple infection select for raised virulence? Trends in Microbiology, 10(9), 401–405.

Cody, M. L. (1966). A General Theory of Clutch Size. Evolution, 20(2), 174–184.

Coles, G. C. (2006). Drug resistance and drug tolerance in parasites. Trends in Parasitology, 22(8), 348.

Coombs, D., Gilchrist, M. A., & Ball, C. L. (2007). Evaluating the importance of within- and between-host selection pressures on the evolution of chronic pathogens. Theoretical Population Biology, 72(4), 576–591.

Cooper, V. S., Reiskind, M. H., Miller, J. A., Shelton, K. A., Walther, B. A., Elkinton, J. S., et al. (2002). Timing of transmission and the evolution of virulence of an insect virus. Proceedings of the Royal Society of London Series B-Biological Sciences, 269(1496), 1161–1165.

Crossan, J., Paterson, S., & Fenton, A. (2007). Host availability and the evolution of parasite life-history strategies. Evolution, 61(3), 675–684.

Dangerfield, C. E., Ross, J. V., & Keeling, M. J. (2009). Integrating stochasticity and network structure into an epidemic model. Journal of the Royal Society Interface, 6(38), 761–774.

Day, T. (2003). Virulence evolution and the timing of disease life-history events. Trends in Ecology & Evolution, 18(3), 113–118.

de Roode, J. C., Pansini, R., Cheesman, S. J., Helinski, M. E. H., Huijben, S., Wargo, A. R., et al. (2005). Virulence and Competitive Ability in Genetically Diverse Malaria Infections. Proceedings of the National Academy of Sciences of the United States of America, 102(21), 7624–7628.

Dobson, A. P., Pacala, S. V., Roughgarden, J. D., Carper, E. R., & Harris, E. A. (1992). The Parasites of Anolis Lizards in the Northern Lesser Antilles. I. Patterns of Distribution and Abundance. Oecologia, 91(1), 110–117.

Ebert, D. (1998). Experimental evolution of parasites. Science, 282(5393), 1432–1435.

Ebert, D., & Bull, J. J. (2003). Challenging the trade-off model for the evolution of virulence: Is virulence management feasible? Trends in Microbiology, 11(1), 15–20.

Ebert, D., & Hamilton, W. D. (1996). Sex against virulence: The coevolution of parasitic diseases. Trends in Ecology & Evolution, 11(2), 79–82.

Ebert, D., & Mangin, K. L. (1997). The influence of host demography on the evolution of virulence of a microsporidian gut parasite. Evolution, 51(6), 1828–1837.

Ebert, D., & Weisser, W. W. (1997). Optimal killing for obligate killers: The evolution of life histories and virulence of semelparous parasites. Proceedings of the Royal Society of London Series B-Biological Sciences, 264(1384), 985–991.

Fallang, A., Ramsay, J. M., Sevatdal, S., Burka, J. F., Jewess, P., Hammell, K. L., et al. (2004). Evidence for occurrence of an organophosphate-resistant type of acetylcholinesterase in strains of sea lice (Lepeophtheirus salmonis Kroyer). Pest Management Science, 60(12), 1163–1170.

FAO. (2008). FAO yearbook of fishery statistics.

Ferguson, N. M., Donnelly, C. A., & Anderson, R. M. (2001). Transmission intensity and impact of control policies on the foot and mouth epidemic in Great Britain. Nature, 413(6855), 542–548.

Gandon, S., Jansen, V. A. A., & van Baalen, M. (2001a). Host life history and the evolution of parasite virulence. Evolution, 55(5), 1056–1062.

Gandon, S., Mackinnon, M. J., Nee, S., & Read, A. F. (2001b). Imperfect vaccines and the evolution of pathogen virulence. Nature, 414(6865), 751–756.

Ganz, H. H., & Ebert, D. (2010). Benefits of host genetic diversity for resistance to infection depend on parasite diversity. Ecology, 91(5), 1263–1268.

Gemmill, A. W., Skorping, A., & Read, A. F. (1999). Optimal timing of first reproduction in parasitic nematodes. Journal of Evolutionary Biology, 12(6), 1148–1156.

Grenfell, B. T., & Dobson, A. P. (1995). In B. T. Grenfell & A. P. Dobson (Eds.), Ecology of infectious diseases in natural populations. Cambridge: Cambridge University Press.

Gross, M. R. (1998). One species with two biologies: Atlantic salmon (Salmo salar) in the wild and in aquaculture. Canadian Journal of Fisheries and Aquatic Sciences, 55(S1), 131–144.

Harvey, P. H., & Keymer, A. E. (1991). Comparing Life Histories Using Phylogenies. Philosophical Transactions: Biological Sciences, 332(1262), 31–39.

Hastings, I. M., Lalloo, D. G., & Khoo, S. H. (2006). No room for complacency on drug resistance in Africa. Nature, 444(7115), 31.

Hastings, I. M., & Watkins, W. M. (2006). Tolerance is the key to understanding antimalarial drug resistance. Trends in Parasitology, 22(2), 71–77.

Heuch, P. A., Nordhagen, J. R., & Schram, T. A. (2000). Egg production in the salmon louse [Lepeophtheirus salmonis (Kroyer)] in relation to origin and water temperature. Aquaculture Research, 31(11), 805–814.

Jensen, K. H., Little, T. L., Skorping, A., & Ebert, D. (2006). Empirical support for optimal virulence in a castrating parasite. PLoS Biology, 4(7), 1265–1269.

Keeling, M. (2005). The implications of network structure for epidemic dynamics. Theoretical Population Biology, 67(1), 1–8.

Keeling, M. J., & Eames, K. T. D. (2005). Networks and epidemic models. Journal of the Royal Society Interface, 2(4), 295–307.

Keeling, M. J., Woolhouse, M. E. J., Shaw, D. J., Matthews, L., Chase-Topping, M., Haydon, D. T., et al. (2001). Dynamics of the 2001 UK foot and mouth epidemic: stochastic dispersal in a heterogeneous landscape. Science, 294(5543), 813–817.

Kiers, E. T., Hutton, M. G., & Denison, R. F. (2007). Human selection and the relaxation of legume defences against ineffective rhizobia. Proceedings of the Royal Society B-Biological Sciences, 274(1629), 3119–3126.

Kiers, E. T., West, S. A., & Denison, R. F. (2002). Mediating mutualisms: farm management practices and evolutionary changes in symbiont co-operation. Journal of Applied Ecology, 39(5), 745–754.

Krossoy, B., Nilsen, F., Falk, K., Endresen, C., & Nylund, A. (2001). Phylogenetic analysis of infectious salmon anaemia virus isolates from Norway, Canada and Scotland. Diseases of Aquatic Organisms, 44(1), 1–6.

Lebarbenchon, C., Brown, S. P., Poulin, R., Gauthier-Clerc, M., & Thomas, F. (2008). Evolution of pathogens in a man-made world. Molecular Ecology, 17, 475–484.

Lees, F., Baillie, M., Gettinby, G., & Revie, C. W. (2008). The efficacy of emamectin benzoate against infestations of Lepeophtheirus salmonis on farmed Atlantic salmon (Salmo salar L) in Scotland, 2002–2006. PLoS ONE, 3(2), 1–11.

May, R. M., & Anderson, R. M. (1978). Regulation and stability of host-parasite population interactions. 2. Destabilizing processes. Journal of Animal Ecology, 47(1), 249–267.

May, R. M., & Nowak, M. A. (1995). Coinfection and the evolution of parasite virulence. Proceedings: Biological Sciences, 261(1361), 209–215.

Mjaaland, S., Hungnes, O., Teig, A., Dannevig, B. H., Thorud, K., & Rimstad, E. (2002). Polymorphism in the infectious salmon anemia virus hemagglutinin gene: Importance and possible implications for evolution and ecology of infectious salmon anemia disease. Virology, 304(2), 379–391.

Morand, S., Cribb, T. H., Kulbicki, M., Rigby, M. C., Chauvet, C., Dufour, V., et al. (2000). Endoparasite species richness of New Caledonian butterfly fishes: host density and diet matter. Parasitology, 121(01), 65–73.

Morand, S., & Poulin, R. (2000). Optimal time to patency in parasitic nematodes: host mortality matters. Ecology Letters, 3(3), 186–190.

Munro, L. A., & Gregory, A. (2009). Application of network analysis to farmed salmonid movement data from Scotland. Journal of Fish Diseases, 32(7), 641–644.

Nidelet, T., Koella, J. C., & Kaltz, O. (2009). Effects of shortened host life span on the evolution of parasite life history and virulence in a microbial host-parasite system. BMC Evolutionary Biology, 9, 10.

Nylund, A., Devold, M., Plarre, H., Isdal, E., & Aarseth, M. (2003). Emergence and maintenance of infectious salmon anaemia virus (ISAV) in Europe: a new hypothesis. Diseases of Aquatic Organisms, 56(1), 11–24.

Nylund, A., Plarre, H., Karlsen, M., Fridell, F., Ottem, K. F., Bratland, A., et al. (2007). Transmission of infectious salmon anaemia virus (ISAV) in farmed populations of Atlantic salmon (Salmo salar). Archives of Virology, 152(1), 151–179.

Palumbi, S. R. (2001). Evolution—Humans as the world’s greatest evolutionary force. Science, 293(5536), 1786–1790.

Paterson, S., & Barber, R. (2007). Experimental evolution of parasite life-history traits in Strongyloides ratti (Nematoda). Proceedings of the Royal Society B-Biological Sciences, 274(1617), 1467–1474.

Pike, A. W., & Wadsworth, S. L. (2000). Sealice on salmonids: their biology and control. Advances in Parasitology, 44, 233–337.

Poulin, R., & Morand, S. (2004). Parasite biodiversity. Washington: Smithsonian Books.

Pulkkinen, K., Suomalainen, L. R., Read, A. F., Ebert, D., Rintamäki, P., & Valtonen, E. T. (2010). Intensive fish farming and the evolution of pathogen virulence: the case of columnaris disease in Finland. Proceedings of the Royal Society B: Biological Sciences, 277, 593–600.

Read, A. F., & Huijben, S. (2009). Evolutionary biology and the avoidance of antimicrobial resistance. Evolutionary Applications, 2(1), 40–51.

Read, J. M., & Keeling, M. J. (2003). Disease evolution on networks: the role of contact structure. Proceedings of the Royal Society of London Series B-Biological Sciences, 270(1516), 699–708.

Restif, O. (2009). Evolutionary epidemiology 20 years on: Challenges and prospects. Infection Genetics and Evolution, 9(1), 108–123.

Ritchie, R. J., McDonald, J. T., Glebe, B., Young-Lai, W., Johnsen, E., & Gagne, N. (2009). Comparative virulence of Infectious salmon anaemia virus isolates in Atlantic salmon, Salmo salar L. Journal of Fish Diseases, 32(2), 157–171.

Roff, D. A. (2002). In D. A. Roff (Ed.), Life history evolution. Sunderland, Massachusetts, U.S.A: Sinauer Associates, Inc.

Skorping, A., & Read, A. F. (1998). Drugs and parasites: global experiments in life history evolution? Ecology Letters, 1(1), 10–12.

Skorping, A., Read, A. F., & Keymer, A. E. (1991). Life-history covariation in intestinal nematodes of mammals. Oikos, 60(3), 365–372.

Smith, T. B., & Bernatchez, L. (2008). Evolutionary change in human-altered environments. Molecular Ecology, 17(1), 1–8.

Sorci, G., Morand, S., & Hugot, J. P. (1997). Host-parasite coevolution: Comparative evidence for covariation of life history traits in primates and oxyurid parasites. Proceedings of the Royal Society of London Series B-Biological Sciences, 264(1379), 285–289.

Southwood, T. R. E. (1987). Species-time relationships in human parasites. Evolutionary Ecology, 1, 245–246.

Stearns, S. C. (1992). In S. C. Stearns (Ed.), The evolution of life histories. Oxford: Oxford University Press.

Stearns, S. C., & Ebert, D. (2001). Evolution in health and disease: Work in Progress. The Quarterly Review of Biology, 76(4), 417–432.

Thorud, K. E., & Djupvik, H. O. (1988). Infectious salmon anaemia in Atlantic salmon (Salmo salar L.). Bulletin of the European Association of Fish Pathologists, 8, 109–111.

Treasurer, J. W., Wadsworth, S., & Grant, A. (2000). Resistance of sea lice, Lepeophtheirus salmonis (Kroyer), to hydrogen peroxide on farmed Atlantic salmon, Salmo salar L. Aquaculture Research, 31(11), 855–860.

Waples, R. S., & Hendry, A. P. (2008). Special issue: Evolutionary perspectives on salmonid conservation and management. Evolutionary Applications, 1(2), 183–188.

Webb, S. D., Keeling, M. J., & Boots, M. (2007). Host-parasite interactions between the local and the mean-field: How and when does spatial population structure matter? Journal of Theoretical Biology, 249(1), 140–152.

Webster, J. P., Gower, C. M., & Norton, A. J. (2008). Evolutionary concepts in predicting and evaluating the impact of mass chemotherapy schistosomiasis control programmes on parasites and their hosts. Evolutionary Applications, 1(1), 66–83.

Wild, G., Gardner, A., & West, S. A. (2009). Adaptation and the evolution of parasite virulence in a connected world. Nature, 459(7249), 983–986.

Williams, P. D., & Day, T. (2001). Interactions between sources of mortality and the evolution of parasite virulence. Proceedings of the Royal Society of London Series B-Biological Sciences, 268(1483), 2331–2337.

Acknowledgments

We are grateful to S. Gandon, K.H. Jensen and J. Gohli for comments on earlier versions of the manuscript, and to two anonymous referees whose detailed and constructive comments greatly helped to improve the quality of this review. This research was supported by a grant from the Norwegian Research Council to A. Skorping (grant no 186140). D. Ebert was supported by the Swiss National Science Foundation.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License (https://creativecommons.org/licenses/by-nc/2.0), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Mennerat, A., Nilsen, F., Ebert, D. et al. Intensive Farming: Evolutionary Implications for Parasites and Pathogens. Evol Biol 37, 59–67 (2010). https://doi.org/10.1007/s11692-010-9089-0
