Abstract

Sustainable forest management is essential to confront the detrimental impacts of diseases on forest ecosystems. This review highlights the potential of vegetation spectroscopy in improving the feasibility of assessing forest disturbances induced by diseases in a timely and cost-effective manner. The basic concepts of vegetation spectroscopy and its application in phytopathology are first outlined, and then the literature on the topic is discussed. Using several optical sensors from leaf to landscape level, a number of forest diseases characterized by variable pathogenic processes have been detected, identified and quantified at many sites worldwide. Overall, the reviewed studies have pointed to the green and red regions of the visible spectrum, the red-edge and the early near-infrared as the spectral regions most sensitive to disease development, as they are mostly related to chlorophyll changes and symptom development. Late disease conditions particularly affect the shortwave-infrared region, which is mostly related to water content. This review also highlights some major issues to be addressed, such as the need to explore other major forest diseases and geographic areas, to further develop hyperspectral sensors for early detection and discrimination of forest disturbances, to improve devices for remote sensing, to implement long-term monitoring, and to advance algorithms for the exploitation of spectral data. Achieving these goals will enhance the capability of vegetation spectroscopy in the early detection of forest stress and in managing forest diseases.

Introduction

Forests are essential ecosystems, providing numerous ecological, economic, social, and cultural services (Chen and Meentmeyer 2016) and a major portion of the global terrestrial carbon dioxide sink (Pan et al. 2011). However, climate change (i.e., changes in global temperatures and rainfall) and global trade have increased forest vulnerability to a number of natural disturbances, including diseases caused by pathogens such as fungi, bacteria, viruses and nematodes (Boyd et al. 2013; Wingfield et al. 2015). While some pathogens are native to local ecosystems, many recent major disturbances arise from nonindigenous species that may pose dangerous and unpredictable threats to forest health (Boyd et al. 2013). Over the past few decades, the frequency and intensity of forest disturbances due to diseases have dramatically increased, leading to widespread tree mortality in major forest biomes worldwide (Chen and Meentmeyer 2016).

Sustainable forest management is fundamental to confront the detrimental consequences of diseases in forest ecosystems. This is especially true when such disturbances have the potential to reduce the dominant native species, causing a permanent change in forest structure. One prerequisite for effective management is to understand the severity and spatial distribution of forest damage. Consequently, mitigation efforts can limit the population and the spread of pathogens on infected or susceptible host trees. Although conventional field mensuration remains the most accurate way to quantify stages of infection, it can be logistically challenging, time-consuming and expensive, especially when pathogen populations reach epidemic levels.

Remote sensing makes possible a rapid and accurate evaluation of ecosystem functioning and status, and has the potential to be extended to larger spatial scales. Notably, the use of spectroscopy in remote sensing is an outstanding approach for monitoring vegetation performance under environmental constraints (Cotrozzi et al. 2018). Recent developments in space- and airborne sensors have further advanced the ability to collect observation data across multiple spatial, temporal, and spectral scales, making it feasible to monitor forest disturbances by remote sensing, e.g., variations in biophysical and biochemical parameters in response to disease outbreaks of varying levels of invasion. Such rapid and accurate delineation of large-area forest damage allows decision makers to take prompt and informed action (Chen and Meentmeyer 2016).

In order to encourage further research in this area, this paper summarizes the present knowledge on the use of vegetation spectroscopy for the detection of forest damage caused by diseases. First, it briefly reports basic concepts of vegetation spectroscopy, focusing on reflectance spectroscopy. It then reviews the literature concerning the spectroscopic detection of forest diseases. Finally, it highlights knowledge gaps and proposes new perspectives on the topic. The published peer-reviewed literature was searched in the Web of Science (Thomson-ISI, Philadelphia, PA, USA, http://apps.webofknowledge.com/) and Scopus (Elsevier, Amsterdam, Netherlands, http://www.scopus.com/) databases, using multiple combinations of the keywords “spectroscopy”, “reflectance”, “multispectral”, “hyperspectral”, “forest”, “tree”, “pathology” and “disease”. Database searches were performed in December 2020 and covered publications back to 1970. Papers that did not match the aim of the present review, e.g., those focused on crop species and/or abiotic factors, were excluded, as were those not in English. In order to identify other suitable references, the reference lists of the articles retrieved by this literature search were also examined.

Vegetation spectroscopy

Basic concepts

Spectroscopy has been used in plant science for several decades (Gates et al. 1965). Radiation striking vegetation, or any other target, may be reflected (diffuse, specular), absorbed, or transmitted (with refraction, Fig. 1a). Among the different spectroscopy approaches (Conrad and Bonello 2016), reflectance spectroscopy is the one most used for measuring vegetation in the field. Reflectance spectroscopy relies on the interaction of light with plant structure, chemical configuration and water content, with reflectance depending on variations in absorption that result from the vibrational excitation of molecular bonds, e.g., C–H, N–H, and O–H bonds, at specific wavelengths. Reflectance of vegetation can be calculated as the measured vegetation radiance divided by the radiance of a white reference panel, and is expressed as a percentage (Kumar et al. 2001).
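As an illustration of the reflectance calculation described above, the following minimal Python sketch divides a target radiance spectrum by a white reference panel spectrum, band by band. The function name and the radiance values are hypothetical, and the sketch assumes an ideal panel with 100% reflectance; real workflows usually also correct for the panel's calibrated reflectance.

```python
def reflectance_percent(target_radiance, reference_radiance):
    """Return per-band reflectance (%) as target radiance / panel radiance * 100."""
    if len(target_radiance) != len(reference_radiance):
        raise ValueError("spectra must have the same number of bands")
    result = []
    for target, reference in zip(target_radiance, reference_radiance):
        if reference <= 0:
            raise ValueError("reference radiance must be positive")
        result.append(100.0 * target / reference)
    return result


# Hypothetical radiance readings (arbitrary units) at three bands
leaf = [2.0, 1.0, 45.0]
panel = [40.0, 50.0, 90.0]
leaf_reflectance = reflectance_percent(leaf, panel)
```

With these made-up values the third band shows the highest reflectance, as would be expected for a near-infrared band of green vegetation.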

(a) Incoming light (L) reaching the surface of vegetation may be reflected (R; diffuse, specular), transmitted (T; with refraction) or absorbed (A); (b) during pathogenesis, leaf pathogens, e.g., biotrophic (left) and necrotrophic (right), affect leaf structural and chemical properties, and by this leaf reflectance as well as other leaf optical properties is distinctively altered in the visible spectra (VIS: blue, b; green, g; red, r), near-infrared (NIR) and shortwave-infrared (SWIR); leaf sections re-drawn from Mahlein (2015)

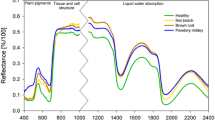

Within the full range of the solar radiation reaching vegetation (i.e., 350−2500 nm), the typical reflectance profile of a green leaf is characterized by three regions (Fig. 2): (1) the visible (VIS, 400−700 nm), showing low reflectance due to strong absorption by leaf pigments; (2) the near-infrared (NIR, 700−1100 nm), characterized by high reflectance and mainly related to leaf cell structure; and (3) the shortwave-infrared (SWIR, 1100−2500 nm), characterized by another reduction of reflectance due to lower incident radiation as well as to absorption by water and by biochemical metabolites, e.g., lignin, starch, cellulose, proteins, phenols (Curran 1989; Asner and Martin 2008; Serbin et al. 2015). Another feature of the vegetation reflectance spectrum is the red-edge, i.e., the region in the VIS red-NIR transition zone where reflectance increases drastically, which has been shown to depend on chlorophyll content and stress conditions. In light of the above, variations in spectral data can be used for various purposes such as mapping plant functional traits, species biodiversity and distribution, and monitoring plant responses to resource availability and environmental constraints (Kumar et al. 2001; Cotrozzi et al. 2018).

Typical full range, i.e., 350−2500 nm, reflectance profile of healthy vegetation; major absorption and reflectance features are indicated. VIS: visible, 400−700 nm; NIR: near-infrared, 700−1100 nm; SWIR: shortwave-infrared, 1100−2500 nm; gray stripes show spectral bands commonly measured by multispectral sensors

Optical sensors are classified according to their spectral resolution and range. Multispectral sensors (MS) measure reflected energy in a few to many discrete sections, i.e., bands, of the electromagnetic spectrum, e.g., 3−12 bands covering a few tens of nanometers each, commonly only in the VIS–NIR (Fig. 2). Hyperspectral sensors (HS) measure energy in narrower and more numerous bands than MS (e.g., hundreds or thousands of bands covering 5−20 nm each). The numerous narrow bands of HS provide a continuous spectral measurement which is more sensitive to subtle variations in reflected light, providing more data complexity and information content. Since the 1990s, several hand-held HS spectroradiometers covering the full VIS–NIR-SWIR range, i.e., 350−2500 nm, have become available and widely used for field vegetation research (Cotrozzi et al. 2018). MS (or HS covering narrower wavelength ranges) are smaller, lighter, require fewer internal components, and are less expensive than HS covering wider wavelength ranges, and for these reasons are more commonly used for remote sensing from unmanned aerial vehicles (UAV).

Reflectance measurements of vegetation are rapid, nondestructive, and relatively economical, since the costs to operate such equipment once acquired are minimal compared with the costs to run other standard analytical instrumentation, e.g., spectrophotometry and gas/liquid chromatography systems. This technique also allows the investigation of markedly more individual plant traits in situ and in vivo over numerous time periods than standard measurements alone, e.g., those carried out with classic ecophysiological approaches or wet chemistry. In addition, this approach can monitor plant functions over large geographic areas if scaled to remote sensing measurements from space, i.e., satellites, or airborne platforms, e.g., UAV and aircraft (Cotrozzi et al. 2018).

In a remote sensing context, e.g., forest monitoring, imaging systems are usually preferable to non-imaging systems, this being a further categorization of spectral sensors. Non-imaging spectral sensors average the spectral information over a defined area within their field of view, without spatial resolution, and are preferable when the user wants to learn more about the radiation/vegetation interaction and mapped spatial information is not needed, e.g., leaf- and ground canopy-level characterization of plant traits. Imaging spectral sensors instead supply spectral information with spatial resolution of the imaged object; data are generated in the form of a 3D spatial map of spectral variation, where the first two dimensions (x- and y-axes) show the spatial information and the third dimension (z-axis) provides the spectral information (Fig. 3). For each pixel in an image, multispectral imaging (MS-I) devices capture a small number of spectral bands, typically three to fifteen, whereas hyperspectral imaging (HS-I) instrumentation captures continuous spectral ranges. Imaging spectral data need more pre-processing (e.g., atmospheric correction, geometric correction and spectral calibration) than non-imaging data. Nevertheless, being an integration of imaging and conventional point spectroscopy, imaging spectroscopy receives complementary information from both domains (Shaw and Burke 2003). Point spectroscopy provides information to explain the physiology, biochemistry and morphology of plants, while the information from imaging technology is used to depict the spatial dynamics of these vegetation characteristics (Asner and Martin 2008; Serbin et al. 2015; Singh et al. 2015; Mishra et al. 2017; Mahlein et al. 2018).
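The 3D structure of imaging spectral data can be made concrete with a small Python sketch. It assumes a toy 2 × 2 pixel image with three bands stored as nested lists; the layout `cube[row][col][band]`, the function names and all reflectance values are illustrative assumptions, not a sensor data format.

```python
# Hypothetical 2 x 2 pixel image with 3 spectral bands: cube[row][col][band]
cube = [
    [[0.05, 0.04, 0.50], [0.06, 0.05, 0.48]],
    [[0.10, 0.12, 0.30], [0.05, 0.04, 0.52]],
]


def pixel_spectrum(cube, row, col):
    """Spectral (z-axis) profile of a single pixel."""
    return list(cube[row][col])


def band_image(cube, band):
    """Spatial (x, y) image of a single spectral band."""
    return [[pixel[band] for pixel in row] for row in cube]


def mean_spectrum(cube):
    """Average spectrum over all pixels, i.e., roughly what a non-imaging
    sensor viewing the same area would record (optics ignored)."""
    pixels = [pixel for row in cube for pixel in row]
    n_bands = len(pixels[0])
    return [sum(p[b] for p in pixels) / len(pixels) for b in range(n_bands)]
```

In this toy example the pixel at row 1, column 0 has a lower third-band value than its neighbors, a local difference that is retained in `band_image` but lost in `mean_spectrum`.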

Principle of imaging spectroscopy. Space- and airborne imaging spectral sensors provide spectral information with spatial resolution of the imaged object. Figure has been re-drawn from Shaw and Burke (2003)

In addition, some physically based models have been successfully used to simulate leaf reflectance and transmittance and to estimate leaf biochemical properties, e.g., radiative transfer models where variation in canopy reflectance can be modeled by relevant plant functional traits that are known to specifically affect light transmission in canopies. The optical PROperties SPECTra (PROSPECT, Féret et al. 2017) and the Leaf Incorporating Biochemistry Exhibiting Reflectance and Transmittance Yields (LIBERTY, specifically designed for pine needles; Dawson et al. 1998) are among the most widely validated models.

Advancements in the portability and sensitivity of spectrometers, together with improvements in computational and chemometric efficiency, have allowed reflectance data to be exploited through different approaches. The first uses simple vegetation spectral indices (VSI), based on ratios of reflectance at distinct wavelengths, that have been developed to estimate several foliar traits, e.g., normalized difference vegetation index, NDVI (Rouse et al. 1974); photochemical reflectance index, PRI (Gamon et al. 1997); normalized difference water index, NDWI (Gao 1996); chlorophyll index, CI (Merzlyak et al. 1999). The second uses multivariate approaches, e.g., partial least squares regression, PLSR (Wold et al. 2001), which has become the current standard of chemometric modeling, stepwise multiple linear regression, SMLR (Grossman et al. 1996), principal component regression, PCR (Martens 2001), and least absolute shrinkage and selection operator, Lasso (Tibshirani 2011), to directly estimate widely investigated physiological, biochemical and morphological plant traits as a function of the leaf and vegetation hyperspectral profiles (e.g., Serbin et al. 2014, 2015; Couture et al. 2016; Marchica et al. 2019; Cotrozzi et al. 2020). The third tests spectra themselves as a phenotypic expression of the combined signals of the physiological, biochemical and morphological features of leaves under particular environmental conditions (i.e., spectral signatures), analyzable through multivariate approaches such as partial least squares discriminant analysis (PLSDA) or other classification techniques such as support vector machines (Cotrozzi and Couture 2020; Calzone et al. 2021). These approaches can be applied to untransformed or pre-treated spectra, e.g., derivative spectra are often used to eliminate background signals and to resolve overlapping spectral features, with remote sensing collections particularly needing data pre-processing, e.g., signal compression and noise reduction (Rasti et al. 2018).
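The index-based approach can be sketched in a few lines of Python. The formulas follow the commonly used normalized-difference forms of NDVI, PRI (531 and 570 nm) and NDWI (860 and 1240 nm); the NDVI band positions (800 and 670 nm), the dictionary representation of a spectrum and the reflectance values are assumptions made only for illustration, since band choices differ among sensors and studies.

```python
def normalized_difference(spectrum, band_a, band_b):
    """(R_a - R_b) / (R_a + R_b) for a spectrum given as {wavelength_nm: reflectance}."""
    a, b = spectrum[band_a], spectrum[band_b]
    return (a - b) / (a + b)


def ndvi(spectrum, nir=800, red=670):
    # Band positions are an assumption; they vary among sensors and studies.
    return normalized_difference(spectrum, nir, red)


def pri(spectrum):
    return normalized_difference(spectrum, 531, 570)


def ndwi(spectrum):
    return normalized_difference(spectrum, 860, 1240)


# Hypothetical leaf reflectance values (0-1 scale)
healthy = {531: 0.08, 570: 0.10, 670: 0.05, 800: 0.50, 860: 0.50, 1240: 0.40}
stressed = {531: 0.09, 570: 0.12, 670: 0.12, 800: 0.36, 860: 0.36, 1240: 0.33}
```

With these made-up values, `ndvi(stressed)` is lower than `ndvi(healthy)`, reflecting higher red reflectance and lower NIR reflectance in the stressed leaf.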

Applications in phytopathology

Vegetation spectroscopy has shown considerable potential to supply new insights into phytopathology. During host-pathogen interactions, a series of processes occur that induce variations in the plant’s physiology, biochemistry and morphology. As a consequence, these processes during pathogenesis and symptom development affect the optical properties of vegetation (Fig. 1b; Mahlein 2015). Therefore, variations in reflectance due to plant pathogens and diseases can be interpreted as highly specific changes in the structure and chemical configuration of the leaf tissue in the course of pathogenesis, e.g., the sequence of chlorotic and necrotic tissue or the appearance of characteristic fungal structures. Each host-pathogen interaction has a distinctive spatial and temporal progression and influences specific wavelength ranges during distinct pathogenesis phases (Khaled et al. 2018; Mahlein et al. 2018). For instance, the occurrence of visible symptoms could be detected early through variations in the VIS spectral region, or the loss of leaf water caused by a necrotrophic pathogen could be monitored thanks to the water sensitivity of the SWIR. As a consequence, reflectance spectroscopy has become one of the most powerful non-invasive approaches used for the detection (deviation from healthy, even at an early stage), identification (diagnosis of specific symptoms among others and discrimination of various diseases) and quantification (assessment of disease severity) of plant diseases at multiple scales (Lowe et al. 2017; Thomas et al. 2018).

Although plant disease research including such spectral approaches has risen sharply in the last 20 years, most of these studies have been carried out for agricultural applications, specifically within the areas of precision farming, which aims to optimize agricultural procedures and the application of agrochemicals by considering in-field and temporal variability, and plant phenotyping, which aims to assess multiple plant traits to evaluate a specific genotype/environment interaction. Indeed, numerous reviews on the use of vegetation spectroscopy for detecting and monitoring major crop diseases are available (e.g., Bock et al. 2010; Mahlein 2015; Lowe et al. 2017; Khaled et al. 2018; Mahlein et al. 2018; Thomas et al. 2018; Zhang et al. 2020a), and are highly recommended to the reader interested in learning the technical and analytical details of the approach. Conversely, the use of vegetation spectroscopy for detecting and monitoring forest diseases has been less investigated. Lower investments and/or greater experimental difficulties might be among the causes of this tendency for forestry, and a review devoted to summarizing the state of the art of this topic was lacking. Chen and Meentmeyer (2016) and Stone and Mohammed (2017) reviewed the application of remote sensing approaches for monitoring forest damage by both insects (mainly) and diseases.

Spectroscopic detection of forest diseases

While the idea of applying remote sensing to detect disease-induced forest damage was considered many decades ago (e.g., Heller and Bega 1973), only since the late 1990s has the approach begun to receive considerable attention for managing disease outbreaks, as shown by the reviewed papers reported in Tables 1 and 2. Two reasons possibly explain this recent growth in attention: (1) an increasing number of studies have shown that the frequency and intensity of forest diseases have significantly increased over the past two decades as a result of globalization, responsible for a worldwide distribution of pests and pathogens, and climate change (Boyd et al. 2013), highlighting the need to understand disease progression in order to implement effective mitigation strategies; and (2) the volume of collected spectral data has increased dramatically during the same time period, mainly due to the reduction of data acquisition costs. Geographically, research hotspots have been primarily located in North America and Australia, with several other studies conducted in Europe, East Asia and South Africa.

In this review, the studies are presented according to the following three criteria: (1) works on conifers (Table 1) are discussed before those on broadleaf species (Table 2); (2) within each tree type, the most studied genera (i.e., those with the highest number of reviewed papers) are presented first; and (3) within each genus, the most studied diseases are listed first. For each disease, a brief description, e.g., causal agent, distribution, symptoms, is given, followed by the related studies, noting the adopted sensors and data analysis approaches, the most disease-sensitive spectral regions, and the major outcomes.

Pinus spp.

Sphaeropsis blight

Sphaeropsis sapinea (or Diplodia pinea) is among the most frequent fungal pathogens affecting conifers worldwide, causing shoot blight, crown wilt, cankers and sapwood stain that have produced heavy losses in pine stands. Usually top and outer branch shoots are first affected, quickly becoming pale green, then yellow, orange and red, and finally being shed, while the lower crown may stay green and of normal density (Stanosz et al. 2002).

Sims et al. (2007) used decision tree analysis to evaluate the crown condition of Pinus radiata D. Don (Monterey pine) stands in southern New South Wales, Australia showing discolored leaves due to S. sapinea. Imagery was collected by the digital multi-spectral camera (DMSC) II system (SpecTerra Services) with four wavelength filters: 680, 720, 740, and 850 nm in September 2002; 680, 700, 720, and 750 nm in September 2003. Imagery was acquired at a pixel resolution of 0.5 × 0.5 m. Spectral VSI and fraction images (see Goodwin et al. 2005, reported below) were used to classify crowns into binary condition classes, i.e., proportional discoloration, 0: < 40%, 1: > 40%. The developed decision tree model identified crown discoloration due to S. sapinea with an accuracy between 83% (Kappa) and 92% (overall accuracy). This study confirmed the outputs reported by Coops et al. (2006), who analyzed the same dataset by evaluating the relation between the crown-condition variables and the spectral VSI through a standard linear regression, and through a classification error matrix, i.e., confusion matrix. The slope of the upper red-edge was the feature most closely related to crown condition (R² = 0.76 and 0.88 for 2002 and 2003, respectively), with an independent classification accuracy of over 90%. In fact, several studies have shown that the shape of the red-edge is dependent on chlorophyll content (Smith et al. 2004; Zarco-Tejada et al. 2004) and stress conditions (Mutanga and Skidmore 2007). On the same dataset collected in 2002, Goodwin et al. (2005) applied a linear spectral unmixing approach to measure the fractional abundances of the key image endmembers: sunlit canopy, shadow and soil. Applying multiple linear regression to the endmember fraction images, highly significant relationships were reported for the color of the crown leader (a six-class variable; R² = 0.79) and the overall crown color (proportional discoloration, 0: < 40%, 1: > 40%; R² = 0.80). In general, the sunlit canopy image fraction proved to be the most meaningful variable for the estimation of forest condition.
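Overall accuracy and Kappa, the two accuracy measures quoted above, are both derived from a confusion matrix. The Python sketch below shows the standard definitions on a made-up set of ten crowns with binary condition classes; the data and function names are hypothetical and are not taken from the cited studies.

```python
def confusion_matrix(observed, predicted, classes):
    """Rows are observed (ground) classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for obs, pred in zip(observed, predicted):
        matrix[index[obs]][index[pred]] += 1
    return matrix


def overall_accuracy(matrix):
    total = sum(sum(row) for row in matrix)
    return sum(matrix[i][i] for i in range(len(matrix))) / total


def cohen_kappa(matrix):
    """Agreement corrected for chance: (p_o - p_e) / (1 - p_e).
    Undefined (division by zero) when chance agreement p_e equals 1."""
    total = sum(sum(row) for row in matrix)
    p_observed = overall_accuracy(matrix)
    p_chance = sum(
        sum(matrix[i]) * sum(row[i] for row in matrix)
        for i in range(len(matrix))
    ) / total ** 2
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical crowns: 0 = discoloration < 40%, 1 = discoloration > 40%
observed = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
predicted = [0, 0, 0, 0, 0, 1, 1, 1, 1, 0]
matrix = confusion_matrix(observed, predicted, classes=[0, 1])
```

Because Kappa discounts the agreement expected by chance, it is lower than the overall accuracy for the same matrix, which is consistent with the gap between the two figures reported above.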

These outputs are in accordance with Coops et al. (2004), who adopted a similar linear spectral unmixing approach but investigated the potential of the Compact Airborne Spectrographic Imager 2 (CASI-2) to detect the same S. sapinea infection. Results indicated that four endmember fraction images, i.e., sunlit canopy, soil, shadow and non-photosynthetic vegetation (NPV), could be accurately obtained from the 12 spectral bands of CASI-2 imagery (ranging from 450 to 850 nm, spatial resolution: 0.8 m). Single crowns were detectable on the sunlit crown fraction. Interestingly, NPV showed scattered patches of high fractional abundance where there were aggregates of brown needles. The stepwise regressions for modeling the color of the crown leader and the overall crown color showed high R² (0.75 and 0.72, respectively), with NPV followed by the shadow fractions showing the highest significance.
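The idea behind linear spectral unmixing can be sketched for the simplest possible case. The Python example below assumes only two endmembers (the cited studies used three or four) and that each pixel is a linear mixture whose fractions sum to one; under these assumptions the least-squares fraction has a closed form. Endmember spectra, pixel values and the function name are hypothetical.

```python
def unmix_two_endmembers(pixel, endmember_a, endmember_b):
    """Least-squares fraction f of endmember A in pixel = f*A + (1 - f)*B,
    clipped to the physically meaningful range [0, 1]."""
    diff = [a - b for a, b in zip(endmember_a, endmember_b)]
    denom = sum(d * d for d in diff)
    if denom == 0:
        raise ValueError("endmembers must differ in at least one band")
    frac_a = sum((p - b) * d for p, b, d in zip(pixel, endmember_b, diff)) / denom
    frac_a = min(1.0, max(0.0, frac_a))
    return frac_a, 1.0 - frac_a


# Hypothetical two-band (red, NIR) endmember spectra
sunlit_canopy = [0.04, 0.50]
shadow = [0.01, 0.10]

# A pixel built as 70% sunlit canopy and 30% shadow
mixed_pixel = [0.031, 0.38]
sunlit_fraction, shadow_fraction = unmix_two_endmembers(
    mixed_pixel, sunlit_canopy, shadow
)
```

Applying such a function to every pixel yields one fraction image per endmember; with more than two endmembers a constrained least-squares solver would be needed instead of this closed form.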

Concurrently, at the same experimental site, Coops and Stone (2005) collected needle spectra with a field UNISPEC spectrometer (PP Systems; 350−1100 nm), and took needle samples to determine chlorophyll a and b, and water contents. Under increasing S. sapinea-induced disease, needles with reduced chlorophyll content showed an increase in reflectance in the VIS region. The shape of the red-edge changed significantly among the S. sapinea severity classes (i.e., low, moderate, high). Needle chlorophyll concentration was best correlated with various VSI, especially those including reflectance at 705 nm (chlorophyll sensitive) and at either 750 or 445 nm (chlorophyll insensitive). A good correlation was also reported between moisture content and the water band index (Peñuelas et al. 1995), suggesting that the inclusion of water-sensitive wavelengths (SWIR) may also help to better monitor S. sapinea infection by spectral data. Furthermore, the LIBERTY radiative transfer model was applied to estimate theoretical needle reflectance (400−2500 nm) by changing the chlorophyll and water content input parameters. The LIBERTY-estimated spectra mostly matched both the trends and the rates of the actual spectra, suggesting that radiative transfer models, properly parameterized, can produce information useful for discriminating needle damage severity.

Pine wilt disease

Pine wilt disease (PWD) is an important quarantine disease responsible for the overwhelming death of Pinus species caused by the pine wood nematode (PWN) Bursaphelenchus xylophilus. This is the only pathogen that is not a microorganism among those discussed in this review. B. xylophilus is transmitted by vector pine sawyer beetles in the genus Monochamus. At present, PWD is primarily distributed in eight countries across North America (United States, Mexico, and Canada), East Asia (China, Japan, South Korea, North Korea) and Europe (Portugal, Spain). However, 52 countries worldwide have listed PWN as a quarantine pathogen as PWN can spread by transport of infested wood. At an early stage, PWD cannot be detected with the naked eye. PWN starts to multiply in xylem cells, obstructing their internal ducts. Trees affected by PWD cease resin secretion and needles gradually become yellowish. Finally, the needles turn brown and the plant dies (Wu et al. 2020).

Spectroscopic assessment of PWD has been performed on various Pinus species. Ju et al. (2014) and Zhang et al. (2020b) investigated the capability of hyperspectral data over the 350−1100 nm spectral range, acquired by an ASD FieldSpec spectrometer (Analytical Spectral Devices), to distinguish Pinus massoniana Lamb. (Chinese red pine) trees infected by PWN from healthy ones. Spectral measurements were collected ca. 0.5−1 m above the canopy. Ju et al. (2014) used first derivative spectra and VSI to reduce data dimensionality and to identify the most important wavelengths for detection. The most effective first derivative metric was at 759 nm, and was thus selected to classify the health status of P. massoniana. The NDVI, calculated from reflectance at 810 and 450 nm, in the fully infected stage was strongly related to changes in chlorophyll concentration (r = 0.95). The researchers concluded that the combined use of specific spectral features and chlorophyll concentration is an efficient method to detect PWN infection around 30 days after inoculation. Zhang et al. (2020b) also observed that samples in different PWD infection states, a five-class variable, generated distinctive reflectance and first-order derivative profiles, but they used a different approach to exploit the spectra. To capture such spectral changes, 16 spectral characteristics were extracted from the green (510–580 nm), red (620−680 nm), red-edge (680−760 nm) and NIR (780−1100 nm) regions, and were coded as genes constituting the chromosome of a genetic algorithm (GA). Using the optimal spectral features with suitable fitness from the GA, an accurate (R² = 0.82) PLSR model was developed to predict PWD severity.
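First derivative spectra such as those used in these studies can be approximated by finite differences. The Python sketch below uses central differences on a coarse, made-up red-edge spectrum and locates the wavelength of maximum slope; the 20 nm sampling, the reflectance values and the function name are assumptions for illustration, and real hyperspectral data are usually smoothed before differentiation.

```python
def first_derivative(wavelengths, reflectance):
    """Central finite-difference derivative at the interior sampling points.
    Returns (interior wavelengths, derivative values in reflectance per nm)."""
    derivative = []
    for i in range(1, len(wavelengths) - 1):
        slope = (reflectance[i + 1] - reflectance[i - 1]) / (
            wavelengths[i + 1] - wavelengths[i - 1]
        )
        derivative.append(slope)
    return wavelengths[1:-1], derivative


# Hypothetical red-edge reflectance sampled every 20 nm
wavelengths = [680, 700, 720, 740, 760, 780]
reflectance = [0.05, 0.10, 0.25, 0.40, 0.46, 0.48]

inner_wavelengths, slopes = first_derivative(wavelengths, reflectance)

# Wavelength of maximum slope, often used as the red-edge position
red_edge_position = inner_wavelengths[slopes.index(max(slopes))]
```

A derivative value at a single wavelength, or the position and height of the slope maximum, can then be used as a feature for separating healthy from infected samples.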

Kim et al. (2018) focused instead on hyperspectral analysis of Pinus thunbergii Parl. (black pine) inoculated with PWN on Geoje Island, South Korea. Full range leaf reflectance (350–2500 nm) collected with a GER 3700 spectrometer (Geophysical and Environmental Research Corporation) showed PWD-induced changes in the red (ca. 600−700 nm) and SWIR (ca. 1400−1500 nm) regions within two months of PWN infection. The existing vegetation atmospherically resistant index (VARI), vegetation index green (VIgreen) and normalized wilt index (NWI), as well as the green–red spectral area index (GRSAI) that the authors specifically introduced, consistently showed significant differences between tree infection conditions earlier than the other tested indices. Notably, the GRSAI, which includes the red and green regions, showed less fluctuation over time than the other VSI.

Recently, Iordache et al. (2020) proposed machine learning algorithms for the detection of PWD in Pinus pinaster Aiton (maritime pine) from multispectral (MicaSense Red-Edge M multispectral camera, MicaSense Inc.; five spectral bands centered at 475, 560, 668, 717, and 840 nm) and hyperspectral data (MicroHyperSpec A-series hyperspectral scanner, Headwall Photonics; 380−1100 nm) acquired over two regions of central Portugal affected by PWD. Classification schemes for both multi- and hyperspectral imagery (spatial resolution: 5 and 10 cm, respectively) used sets of 13 selected VSI, and were developed by random forest approaches. These classification schemes achieved high overall accuracies in PWD identification (> 91%). Moreover, it was shown that PWD was identifiable by spectral imagery even before symptoms could be detected by the naked eye. The development of PWD was also detectable in the derived maps.

All these results clearly show the practicability of PWD detection by both imaging and non-imaging spectral sensors, as further confirmed by the extensive review by Wu et al. (2020).

Red band needle blight

Red band needle blight, caused by Dothistroma septosporum, is a fungal disease with a significant impact on pine forests worldwide, affecting over 80 pine species. The development of disease symptoms includes initial chlorosis, rapid loss of cellular integrity, cellular necrosis (bands) and eventual phylloptosis, or leaf fall. Infection commonly begins in the lower portions of the crown on older foliage and spreads to higher parts (Bradshaw 2004).

Smigaj et al. (2019) examined the ability of hyperspectral data to detect red band needle blight. Airborne VIS–NIR hyperspectral data (450−980 nm, 2 m spatial resolution) were collected in central Scotland using the Aisa FENIX imager (Channel Systems) flown over diseased Pinus contorta Douglas (lodgepole pine) plantations with variable defoliation and infection status. Spectra of foliage affected by red band needle blight were also collected in a young Pinus sylvestris L. (Scots pine) stand with an ASD FieldSpec Pro spectroradiometer (350−2500 nm). The green, red and early NIR spectral regions were the most sensitive to the development of the disease. First-order derivative values of the green to red-edge region performed best at separating healthy and infected leaves, with an accuracy around 90%. The best index was the normalized green red difference index (NGRDI), which combines the green and red regions, followed by the enhanced vegetation index (EVI). When the leaf-level results were transferred to the airborne collections over the lodgepole pine sites, numerous spectral indices, including PRI, NGRDI and EVI, distinguished well between the more diseased/defoliated plot and the marginally affected pine stand. Smigaj et al. (2019) also investigated LiDAR data and suggested that the combination of data from different sensors could improve the ability to detect red band needle blight.

Earlier, in the early 2000s, leaf and canopy spectral assessments of red band needle blight were investigated by Stone et al. (2003) and Coops et al. (2003), respectively, over P. radiata plantations in New South Wales, Australia, which had been evaluated on the ground and ranked on an individual tree basis according to the extent of Dothistroma needle blight (a six-class variable). Stone et al. (2003) used an ASD FieldSpec FR spectroradiometer (350–2500 nm) and found that the largest reflectance difference between healthy and diseased leaves occurred on the shoulder of the NIR region at 763 nm. Wavelengths in the 675−691 nm range showed the greatest sensitivity to D. septosporum infection, followed by those close to 760 and 550 nm (shown by first derivative spectra), while the lowest sensitivity was found at 434, 493, 506, 709, and 1373 nm. The 709/691 nm reflectance ratio correlated best with needle damage. Among the other tested VSI, an index calculated from the 710−740 nm wavelengths, i.e., the upper red-edge, was also strongly related to disease severity (r = −0.73). Conversely, a weak relation was found between the needle damage category and the slope maximum of the red-edge.

Coops et al. (2003) acquired airborne remote sensing imagery with the CASI-2 (10 bands ranging from 450 to 850 nm, 0.8 m spatial resolution) and reported that Dothistroma needle blight was more severe in the lower crown. Significant relationships were found between ground evaluation and crown reflectance features, i.e., lower and upper slope of the red-edge and the red-edge vegetation stress index (RVSI), using a ‘halo’ imaging approach which ignored the brightest central pixels of each tree crown. This method showed an accuracy of over 70%. Independent accuracy evaluation showed that the technique could successfully detect three levels of Dothistroma needle blight (the original six-class classification system was aggregated into three classes).

Blister rust

Blister rust, caused by the invasive fungus Cronartium ribicola, is, together with the mountain pine beetle (Dendroctonus ponderosae Hopkins), a dominant source of stress and mortality for high-altitude Pinus albicaulis Engelm. (whitebark pine) within the Greater Yellowstone Ecosystem, USA. Prediction and control of blister rust are challenging since the pathogen does not spread from tree to tree but by way of wind-borne spores from the population of its alternate host, Ribes spp. The change from green to red needles on the outermost branches is an early symptom of both blister rust infection and mountain pine beetle infestation (Geils et al. 2010).

Hatala et al. (2010) analyzed images acquired by the HyMap airborne hyperspectral sensor (HyVista Corporation Pty. Ltd.; 128 bands across the 450−2500 nm spectral range, 2–4 m spatial resolution) to identify the sites of blister rust-induced stress and mortality in whitebark pine crowns of the Greater Yellowstone Ecosystem using sub-pixel mixture-tuned matched-filter analysis. Differences in the spatial patterns of blister rust and mountain pine beetle infestation made it possible to distinguish areas dominated by mountain pine beetle from those dominated by blister rust, by analyzing variations in the spatial scale of significant stress and mortality clusters estimated with Ripley’s K.
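For readers unfamiliar with Ripley's K, the idea of measuring clustering at a given spatial scale can be sketched as below. This is a naive estimator without edge correction, offered only as an illustration; the function name and tree coordinates are hypothetical, and the implementation used by Hatala et al. (2010) is not described here.

```python
import math


def ripley_k(points, radius, area):
    """Naive Ripley's K estimate (no edge correction).

    K(r) = A / (n * (n - 1)) * number of ordered pairs (i, j), i != j,
    separated by a distance of at most r.
    """
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")
    pairs = 0
    for i, (xi, yi) in enumerate(points):
        for j, (xj, yj) in enumerate(points):
            if i != j and math.hypot(xi - xj, yi - yj) <= radius:
                pairs += 1
    return area * pairs / (n * (n - 1))


# Hypothetical stressed-crown locations (m) in a 100 m x 100 m plot:
# one tight cluster of four trees plus one isolated tree.
trees = [(10, 10), (11, 10), (10, 11), (11, 11), (90, 90)]
k_obs = ripley_k(trees, radius=2.0, area=100 * 100)
k_csr = math.pi * 2.0 ** 2  # expected K under complete spatial randomness
print(k_obs, k_csr)
```

An observed K well above the random expectation at a given radius indicates clustering at that scale; repeating the calculation over a range of radii shows the scale at which clusters are most pronounced.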

Pitch canker

Pitch canker is an episodic disease caused by the fungus Fusarium circinatum. It affects only Pinus forests, with Pinus radiata being among the most susceptible. Pitch canker was first reported in North Carolina, USA in the 1940s, but the pathogen is now present over the whole southeastern United States and in numerous countries worldwide (Haiti, Chile, South Africa, Japan, Korea, Mexico, Italy, Portugal and Spain). The disease, characterized by strong resin exudation at the infection site on branches, negatively impacts growth and is also able to kill seedlings and mature trees (Wingfield et al. 2008).

Poona and Ismail (2013) explored the capability of transformed high spatial resolution QuickBird satellite imagery (DigitalGlobe; 447−512, 499−594, 620−688, and 755−874 nm, 2.44 m spatial resolution) and artificial neural networks to detect and map P. radiata plantations affected by pitch canker disease in South Africa. Tree crowns were outlined by an automated segmentation and classification procedure within an object-based image analysis environment. Various VSI, together with the tasseled cap transformation, were then calculated and included in a neural network model. The feed-forward neural network discriminated the disease with an overall accuracy of 82% and a KHAT of 0.65. These outcomes highlight the potential of the approach for future crown-level mapping of the disease at an ecosystem level.
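Overall accuracy and the KHAT (kappa) statistic are both derived from a confusion matrix. The sketch below shows the standard calculation; the 2 × 2 matrix is hypothetical and is not the matrix reported by Poona and Ismail (2013).

```python
def accuracy_and_kappa(matrix):
    """Overall accuracy and Cohen's kappa from a square confusion matrix
    (rows: reference classes, columns: predicted classes)."""
    size = len(matrix)
    total = sum(sum(row) for row in matrix)
    observed = sum(matrix[i][i] for i in range(size)) / total
    # Chance agreement: product of row and column marginals for each class.
    expected = sum(
        sum(matrix[i]) * sum(row[i] for row in matrix) for i in range(size)
    ) / total ** 2
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Hypothetical counts: healthy vs. infected crowns.
confusion = [[45, 5],
             [10, 40]]
overall_accuracy, kappa = accuracy_and_kappa(confusion)
print(overall_accuracy, kappa)
```

Kappa is lower than overall accuracy because it discounts the agreement expected by chance, which is why both values are usually reported together.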

Pseudotsuga menziesii (Mirbel) Franco (Douglas-fir)

Laminated root rot

Phellinus weirii, the fungus causing laminated root rot (the only root disease discussed in this review), is among the most significant single natural agents inducing long-term changes in the coastal forests of western North America. Pseudotsuga menziesii (Douglas-fir) is highly susceptible to P. weirii and is the most economically important host of the fungus. Infection occurs when healthy roots encounter infected stumps or diseased roots of a previous stand. P. weirii gradually induces root decay, leading to weakened uptake of water and nutrients and reduced structural support. Crown symptoms (reduced growth, needle chlorosis and loss, massive production of small cones) usually occur only after the fungus has compromised a significant portion of the root system (Thies 1998).

Leckie et al. (2004) acquired two sets of CASI imagery (spatial resolution: 0.6 m) over a Douglas-fir site in British Columbia, Canada in 1995 (five spectral bands from 456 to 779 nm) and 1996 (eight spectral bands from 438 to 861 nm). Trees showing different levels of root rot symptoms were evaluated in the field, manually outlined on the imagery, and associated with their spectral properties. The NIR and red bands, and a band ratio including these two regions, were the most closely associated with root rot injury, but a blue region was also effective. Using these four spectral bands, an average classification accuracy of 55−60% was reported for healthy, slightly infected, light to moderately severe, 100% needle loss, snag and shadowed snag conditions, while the accuracy for broader classes was much better, e.g., around 80% if a tolerance of ± 1 class was allowed. Specifically, areas of root disease were well related to moderately and severely damaged trees, while lightly damaged trees were not reliably identified.
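The effect of allowing a ± 1 class tolerance on an ordinal damage scale can be illustrated with a short sketch. The class labels below are hypothetical ordinal codes (0 = healthy to 5 = shadowed snag) and the values are invented for illustration, not taken from Leckie et al. (2004).

```python
def class_accuracy(reference, predicted, tolerance=0):
    """Share of trees whose predicted ordinal class lies within
    `tolerance` classes of the field-assessed class."""
    if len(reference) != len(predicted):
        raise ValueError("reference and predicted must have equal length")
    hits = sum(abs(r - p) <= tolerance for r, p in zip(reference, predicted))
    return hits / len(reference)


# Hypothetical field classes and image-based classes for eight trees.
reference = [0, 1, 2, 3, 4, 5, 2, 1]
predicted = [0, 2, 2, 4, 4, 3, 1, 1]

exact = class_accuracy(reference, predicted)
within_one = class_accuracy(reference, predicted, tolerance=1)
print(exact, within_one)
```

Because most misclassifications on an ordinal severity scale fall into a neighbouring class, the tolerant score is typically much higher than the exact one.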

Quercus spp.

Sudden oak death

Sudden oak death (SOD), caused by the pathogen Phytophthora ramorum, was first reported in the mid-1990s on tanoak (Notholithocarpus densiflorus) and oaks (Quercus spp.) in the San Francisco Bay area, and then spread epidemically through several coastal forests in California and Oregon, USA. This disease has also had a devastating impact on larch stands in the United Kingdom. Phytophthora ramorum has been either recorded as a regulated species or listed in forest legislation in 68 countries worldwide. Symptoms include bark cankers, leaf spots and twig dieback (Grünwald et al. 2019).

Sudden oak death has received the most attention in terms of remote sensing. He et al. (2019) combined multi-sensor remote sensing with species distribution modeling to map SOD in a California forested region of 80,000 ha from 2005 to 2016. To permit both fine-scale monitoring of disease development and adequate description at broader scales, they used a spectral unmixing approach to extract sub-pixel disease infection, constrained by the output of a species distribution model. They calibrated and validated the approach with high-spatial resolution imagery from the National Agriculture Imagery Program (NAIP) of the USDA Farm Service Agency, hyperspectral Airborne Visible/Infrared Imaging Spectrometer (AVIRIS; 360−2500 nm, 3 m spatial resolution) data, Google Earth® imagery, and field investigation. The outcomes revealed an annual SOD infection of 7%, with 76−83% overall mapping accuracy. The combination of multi-sensor remote sensing and species distribution modeling markedly decreased the overestimation of disease impacts compared with the use of remote sensing alone.
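The principle behind sub-pixel spectral unmixing can be shown with the simplest possible case: a pixel modelled as a linear mixture of two endmembers. This is only a sketch under that assumption; the endmember spectra and names are hypothetical, and He et al. (2019) used a more elaborate multi-sensor workflow.

```python
def unmix_two_endmembers(pixel, endmember_a, endmember_b):
    """Least-squares fraction f of endmember_a in a pixel modelled as
    f * a + (1 - f) * b, clipped to the physically meaningful range [0, 1]."""
    diff = [a - b for a, b in zip(endmember_a, endmember_b)]
    denom = sum(d * d for d in diff)
    if denom == 0:
        raise ValueError("endmembers must differ")
    numer = sum((p - b) * d for p, b, d in zip(pixel, endmember_b, diff))
    return min(1.0, max(0.0, numer / denom))


# Hypothetical four-band reflectance spectra (0-1 scale).
dead_canopy = [0.12, 0.18, 0.30, 0.35]
green_canopy = [0.05, 0.04, 0.45, 0.20]

# Synthetic mixed pixel: 25% dead canopy, 75% green canopy.
pixel = [0.25 * d + 0.75 * g for d, g in zip(dead_canopy, green_canopy)]
fraction_dead = unmix_two_endmembers(pixel, dead_canopy, green_canopy)
print(round(fraction_dead, 3))
```

With more than two endmembers the same least-squares idea applies, but the fractions are usually solved with a constrained solver rather than a closed-form expression.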

A similar remote sensing study was performed by Pu et al. (2008) using a CASI-2 dataset (2 m spatial resolution) for detecting SOD-induced tree stress and mortality in another California forest, including coast live oak (Quercus agrifolia), black oak (Q. kelloggii), and valley oak (Q. lobata, not a SOD host) living in mixed stands with mature madrone (Arbutus menziesii) and California bay trees (Umbellularia californica). They first developed a multilevel classification (0 to 3) design to enhance classification efficiency. The CASI raw data were then converted to reflectance and rectified for topography, and principal component (PC) transformations of all 48 bands, and of the visible and NIR bands separately, were carried out to select features from the data. Lastly, the processed CASI imagery was classified by a maximum likelihood approach and tested for classification accuracy. The outcomes showed that classification accuracy of oak tree stress mostly increased as the detailed classification level increased, ranging from 3 to 76%. Furthermore, PCs from the VIS and NIR regions led to more accurate classification than the PCs from all 48 CASI bands.

The work by Pu et al. (2008) was a follow-up of two studies at leaf level (Pu et al. 2003, 2004), where reflectance, collected with an ASD FieldSpec ProFR spectroradiometer (350−2500 nm), and relative water content (RWC, %) were determined from hundreds of coast live oak (Q. agrifolia) leaf samples with three levels of SOD symptoms: healthy, infected and newly dead. Pu et al. (2003) conducted correlation analysis between RWC and absorption features, i.e., wavelength position (WP), absorption feature depth, width, and area at the 975, 1200, and 1750 nm positions, and three-band ratio VSI centered at 975 and 1200 nm (RATIO975 and RATIO1200, respectively). Among these features, area showed the highest and most stable relation with RWC. Pu et al. (2004) extracted the maximum or minimum first derivative and its related WP from 10 spectral slopes along each reflectance profile. Using all 306 spectra collected on healthy, infected and newly dead leaves, maximum or minimum derivative values at both sides of the reflectance depressions near 1200, 1400, and 1900 nm, the WPs on the right of the 1200 nm depression, and the WPs on both sides of the 1400 and 1940 nm depressions were highly correlated with RWC. With a selection of 260 spectra collected only on green and green-yellowish leaves, discarding newly dead leaves, the WPs at the right side of the 1400 nm depression, at the left side of the 1940 nm depression and in the red region showed a constant relation with leaf RWC. As a result, the RATIO975 and RATIO1200 indices, as well as the spectral feature on the right of the 1400 nm spectral depression, may be crucial spectral indicators for evaluating water status and for early detection of SOD before the occurrence of symptoms. Overall, these studies confirm the importance of including the water-sensitive SWIR region for monitoring necrotrophic pathogens.
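Absorption feature depth, position and area are typically measured after continuum removal. The sketch below assumes a straight-line continuum between the two ends of the feature and trapezoidal integration; the function name and the five-point spectrum around 1200 nm are hypothetical, and the exact procedure of Pu et al. (2003) may differ.

```python
def absorption_feature(wavelengths, reflectance):
    """Depth, position and area of an absorption feature after removing a
    straight-line continuum drawn between the first and last samples."""
    w0, w1 = wavelengths[0], wavelengths[-1]
    r0, r1 = reflectance[0], reflectance[-1]
    band_depth = []
    for w, r in zip(wavelengths, reflectance):
        continuum = r0 + (r1 - r0) * (w - w0) / (w1 - w0)
        band_depth.append(1 - r / continuum)
    depth = max(band_depth)
    position = wavelengths[band_depth.index(depth)]
    # Trapezoidal integration of band depth over wavelength.
    area = sum(
        (band_depth[i] + band_depth[i + 1]) / 2
        * (wavelengths[i + 1] - wavelengths[i])
        for i in range(len(wavelengths) - 1)
    )
    return depth, position, area


# Hypothetical leaf reflectance around the 1200 nm water absorption feature.
wl = [1120, 1160, 1200, 1240, 1280]
refl = [0.50, 0.45, 0.40, 0.45, 0.50]
depth, position, area = absorption_feature(wl, refl)
print(depth, position, area)
```

A drier leaf would show a shallower and smaller feature, which is why depth and area can track relative water content.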

Changing perspective, Hultquist et al. (2014) and Chen et al. (2015) examined the combined effects of fire and SOD on forests, focusing on the evaluation of post-fire burn severity where trees had been at one of three stages of disease development before the fire: early stage (trees retaining dried foliage and fine twigs), middle stage (trees losing fine crown fuels), and late stage (trees falling). Both studies applied Geographic Object-Based Image Analysis (GEOBIA) to MASTER (MODIS [Moderate Resolution Imaging Spectroradiometer]/ASTER [Advanced Spaceborne Thermal Emission and Reflection Radiometer]) airborne images with high spatial (4 m) and high spectral resolution (50 bands covering the VIS, NIR and SWIR spectral regions, as well as longer infrared wavelengths, i.e., 400–14,000 nm) that were collected immediately after the fire for rapid assessment. Hultquist et al. (2014) investigated the capability of three machine learning techniques: Gaussian process regression (GPR), random forests (RF) and support vector regression (SVR). RF showed the best performance in burn severity assessment, as well as lower sensitivity to the use of multiple combinations of remote sensing variables. Chen et al. (2015) also investigated two commonly used dimensionality reduction methods, principal component analysis (PCA) and minimum noise fraction (MNF), for the delineation of image objects and the subsequent accuracy of burn severity models using either PCA- or MNF-derived variables. Both PCA and MNF were promising for balancing computational efficiency and the accuracy of burn severity models in forests subjected to the middle and late stages of SOD progression, but not for those in the early stage of the disease.

A number of studies also focused on mapping SOD using the airborne data acquisition and registration (ADAR) 5500 imager (four spectral bands: blue, 450−550 nm; green, 520−610 nm; red, 610−700 nm; and NIR, 780−920 nm) in California (Kelly 2002; Kelly and Meentemeyer 2002; Kelly and Liu 2004; Kelly et al. 2004; Liu et al. 2006, 2007; Guo et al. 2007). These studies are not presented in detail since their focus was on the imaging approach rather than on spectroscopy.

Japanese oak wilt

Japanese oak wilt disease, caused by the fungus Raffaelea quercivora and transmitted by the bark beetle Platypus quercivorus, is responsible for considerable mortality of Fagaceae species in Japan. Following infection, R. quercivora impedes the rising of sap through the vessels, finally leading to tree death (Kubono and Ito 2002).

Since detecting affected trees is the only strategy to avoid tree mortality, Uto et al. (2008, 2009) proposed an automatic detection approach using remotely sensed hyper- or multi-spectral data. After collecting leaf spectra with an Imspector V10 imaging spectrograph (Specim, Spectral imaging; 400−1000 nm), they first introduced a normalized wilt index (NWI), which was then validated on hyperspectral remote sensing data and on multispectral satellite imagery acquired by the Advanced Spaceborne Thermal Emission and Reflection Radiometer (ASTER, NASA Jet Propulsion Laboratory). Since the performance of this index on leaves showing various autumnal tints was not initially verified, this gap was covered by Uto et al. (2011), who proposed another index, the normalized difference wilt index (NDIwilt), for wilt identification in leaves collected in October.

Oak wilt (and bur oak blight)

Oak wilt is a severe disease of oaks (Quercus spp.) over large regions of the eastern and central United States, and is caused by the invasive fungal pathogen Bretziella fagacearum, previously known as Ceratocystis fagacearum Bretz, which spreads in the outer sapwood. Oak wilt is transmitted by bark beetles and sap-feeding beetles, but can also be transmitted over short distances via root grafts between adjacent trees. After infection, trees respond by producing tyloses and gum which consequently reduce the flow of water and nutrients in infected vascular tissues, leading to tree wilt and finally death (Koch et al. 2010).

The ability of reflectance measurements to detect oak wilt disease was first shown by Everitt et al. (1999). They performed a study in south-central Texas, USA where airborne reflectance collections in the VIS green (520−600 nm), VIS red (630−690 nm) and NIR (760−900 nm) spectral bands were made with a Barnes modular multispectral radiometer (Barnes Engineering Company) on branches of Quercus fusiformis (escarpment live oak) showing different stages of disease. Reflectance data collected in the field showed that dead, diseased and healthy trees had divergent VIS and NIR levels; oak wilt resulted in increasing green and red values and in decreasing NIR.

More recently, Fallon et al. (2020) evaluated the capability of spectral data to discriminate oak wilt from other processes of decline. They exposed greenhouse-grown seedlings (Quercus ellipsoidalis and Q. macrocarpa) to drought, to the oak wilt fungus, or to the bur oak blight fungus (Tubakia iowensis, a common leafspot fungus). They collected leaf and canopy reflectance with a Spectra Vista HR 1024i spectroradiometer (Spectra Vista Corporation; 400−2400 nm) together with instantaneous photosynthesis and stomatal conductance, then ran PLSDA to estimate the exposure type from hyperspectral data. They detected oak wilt before the onset of symptoms, accurately classified the disease in symptomatic samples, and showed that classification accuracy from spectral data increased with oak wilt-induced reductions in photosynthetic performance. The wavelengths most important for oak wilt diagnosis occurred only in the NIR and SWIR spectral regions, and were related to water status, non-structural carbohydrates and photosynthetic processes. They showed that hyperspectral models could discriminate oak wilt from other factors of tree damage, and that detection was related to biological processes linked to oak wilt infection and progression. They also found that, within the canopy, symptom variety could negatively affect detection, but that symptomatic leaves and canopies were accurately diagnosed by spectral information.

Eucalyptus globulus Labill.

Mycosphaerella leaf disease

Numerous Mycosphaerella spp. have been related to leaf diseases of many Eucalyptus spp., generally specified as Mycosphaerella Leaf Disease (MLD). Juvenile leaves and shoots of Eucalyptus trees are mostly affected by MLD, with symptoms including leaf spots, phylloptosis or premature leaf drop, twig cankers and reduced tree growth. However, many Mycosphaerella spp. are also able to infect adult leaves owing to their ability to develop a proto-appressorium that allows direct cuticle penetration. As a consequence, sometimes trees are infected by an array of Mycosphaerella spp. (Hunter et al. 2011).

Early investigations carried out spectral sensitivity analysis at the leaf level to select wavelengths capable of detecting increasing MLD severity on Eucalyptus globulus Labill. Reflectance measurements were performed by Pietrzykowski et al. (2006) on healthy and infected juvenile E. globulus leaves collected in a plantation in Tasmania, Australia using an ASD FieldSpec FR spectroradiometer (400−1000 nm). Reflectance at 760 nm gave the largest difference between the minimum and maximum of infection severity (a six-class variable). The wavelength most sensitive to disease severity was at 680 nm, followed by those between 480 and 500 nm. Conversely, reflectance near 708 nm and 550 nm was relatively insensitive to disease severity. The VSI most related to leaf infection was the ratio between reflectance at 678 and 550 nm (r = 0.84), which was also used in a linear regression model for accurately estimating leaf infection severity from an independent data set (r = 0.95). This index was then tested on the same Tasmanian plantation site with airborne multispectral imagery (digital multi-spectral camera; four spectral bands at 550, 680, 740, and 780 nm, 0.5 m × 0.5 m pixel size) to detect necrosis at a site characterized by strong MLD infection (Pietrzykowski et al. 2007). Models to detect crown-level necrosis using this index reported a classification accuracy of 67% (Kappa = 0.54) according to field evaluations, and a similar accuracy for a model developed to detect crown-level defoliation which included reflectance at 780 nm. Although maps drawn by these linear models showed precisely the pattern and gradient of MLD severity recorded in the field, this accuracy could likely be improved by utilizing spectral data at a higher resolution, allowing for the calculation of more complex VSI and the exploitation of whole spectral regions by multivariate approaches.
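The workflow described here, computing a two-band ratio index, correlating it with severity, and fitting a linear model for prediction, can be sketched as follows. All reflectance and severity values are invented for illustration and are not data from Pietrzykowski et al. (2006); the helper names are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def fit_line(x, y):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return slope, my - slope * mx


# Hypothetical leaf reflectance at 678 and 550 nm and visual severity (%).
r678 = [0.04, 0.06, 0.08, 0.10]
r550 = [0.10, 0.10, 0.10, 0.10]
severity = [5, 15, 45, 55]

index = [a / b for a, b in zip(r678, r550)]  # R678 / R550 ratio index
r = pearson_r(index, severity)
slope, intercept = fit_line(index, severity)
predicted = slope * 0.7 + intercept  # severity predicted for an index of 0.7
print(round(r, 3), round(slope, 1), round(intercept, 1), round(predicted, 1))
```

In practice the fitted model would be checked against an independent data set, as was done in the study.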

Therefore, Barry et al. (2011) carried out two studies focused on necrosis detection in pot-grown E. globulus seedlings, one in which necrosis was due to artificial infection with Teratosphaeria spp. and one in which necrosis was due to injuries superimposed on plants already showing reddening or chlorosis. Leaf reflectance profiles were acquired with the same ASD FieldSpec FR spectroradiometer (400−1000 nm) reported in Pietrzykowski et al. (2006). By spectral sensitivity analysis, they reported that the 679−695 nm wavelengths were most sensitive to necrosis occurrence, while the 706−726 nm wavelengths were least sensitive. An ad hoc VSI (i.e., the ratio of reflectance at 688 and 721 nm) was capable of discriminating necrotic and non-necrotic treatments, while other important VSI could not. However, multivariate approaches, e.g., penalized discriminant analysis and Lasso, applied to the whole spectrum enabled much greater discrimination of all necrotic treatments compared to the VSI. Wavelengths between 679 and 695 nm were only sporadically selected as key wavelengths by these approaches, so their results did not agree with the spectral sensitivity analysis.

Metrosideros polymorpha Gaudich.

Rapid ʻŌhiʻa Death

Rapid ʻŌhiʻa Death (ROD) is a disease killing large numbers of Metrosideros polymorpha trees (ʻōhiʻa, Myrtaceae), a native keystone species in Hawaii. In 2010, many ʻōhiʻa trees began showing browning foliage, rapidly followed by tree mortality. In the following years, the extent of ROD increased substantially. Two previously unknown fungal pathogens in the genus Ceratocystis were isolated as the cause of infection. First symptoms include leaf and/or branch yellowing, wilting, and then browning within several days to weeks. This browning stage is quite apparent and to some extent specific to ROD infection. Leaves finally drop from the infected tree over several weeks to months until only bare branches remain (Keith et al. 2015).

Two concomitant studies (Asner et al. 2018; Vaughn et al. 2018) were carried out to spectroscopically assess ROD. Vaughn et al. (2018) used the Carnegie Airborne Observatory (CAO) aircraft laboratory to acquire laser-guided imaging spectroscopy data (350−2485 nm) and high-resolution imagery (24 cm) over more than 500,000 ha of Hawaii in summer 2017. They established a method to map individual tree crowns matching the symptoms of both active (brown, desiccated ʻōhiʻa crowns) and past (leafless tree crowns) ROD infection by combining two machine learning methods, SVM and a gradient boosting machine. Using a conservative classification design to curtail false positives, model sensitivity levels were 87% and 83%, and precision levels 97% and 95%, for browning and leafless crowns, respectively. Across Hawaii Island, they recorded 43,134 individual crowns displaying the browning phase of ROD, while leafless crowns totalled 547,666 and were more scattered over the island.
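Sensitivity and precision answer different questions: the first is the share of truly affected crowns that the model finds, the second is the share of flagged crowns that are truly affected. A minimal sketch of both, using hypothetical counts rather than the validation data of Vaughn et al. (2018):

```python
def sensitivity_and_precision(true_pos, false_pos, false_neg):
    """Sensitivity (recall) = TP / (TP + FN); precision = TP / (TP + FP)."""
    sensitivity = true_pos / (true_pos + false_neg)
    precision = true_pos / (true_pos + false_pos)
    return sensitivity, precision


# Hypothetical validation counts for browning crowns.
sensitivity, precision = sensitivity_and_precision(
    true_pos=87, false_pos=3, false_neg=13
)
print(round(sensitivity, 2), round(precision, 2))
```

A conservative classifier, as described above, accepts some missed crowns (lower sensitivity) in exchange for few false alarms (higher precision).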

The associated study by Asner et al. (2018) combined field collections of leaf spectral data with laboratory chemical investigations and airborne remote sensing to develop a spectral signature for ROD. Leaf spectra were collected with an ASD FieldSpec 3 spectroradiometer (350−2500 nm), while remote data were collected with the same CAO reported above. PLSR models were developed to predict several leaf traits. Reflectance spectra predicted leaf mass per area, chlorophyll, phenol, carbon and water concentrations with high accuracies (R²: 0.85−0.99), while lower prediction accuracies were found for nitrogen, non-structural carbohydrates, cellulose and tannin concentrations. They reported that around 80% of ROD-infected plants showed distinct decreases in chlorophyll, moisture and non-structural carbohydrates, which overall led to significant and consistent variations in leaf reflectance over the VIS and SWIR regions. Leaf-level outcomes were scaled to the canopy level using airborne laser-guided imaging spectroscopy, with quantitative spectral separability of normal green-leaf canopies from suspected ROD-infected brown-leaf canopies in the VIS and SWIR spectrum. These achievements provided the spectral-chemical basis for detection, mapping and monitoring of the development of ROD in Hawaii.

Ulmus americana L.

Dutch elm disease

Dutch elm disease (DED), caused by some ascomycete fungi of the genus Ophiostoma (Ophiostoma ulmi s.l.) affecting elm trees (Ulmus spp.), is one of the most destructive diseases in forest pathology. DED spread and infection of suitable hosts depend on a synchrony between the life cycles of the host tree, the pathogen and its vectors, elm bark beetles. The first DED symptom is commonly an upper branch with leaves starting to wither and yellow in summer, months before autumn leaf shedding. This gradually extends to the rest of the tree, with further dieback of branches (Santini and Faccoli 2015).

Reflectance measurements of DED-infected trees were collected by Wilson et al. (1998) under laboratory conditions in the 350−2500 nm wavelength range using an ASD FieldSpec FR spectroradiometer. Different reflectance profiles were reported for leaf samples from trees showing variable DED infection (with early and late symptoms). The early disease stage resulted in a rapid increase in reflectance in the green and red regions and a small decrease in the NIR (pigment variations), while the late disease stage induced a decrease in green and NIR and an increase in SWIR reflectance (water loss). These outcomes were useful for selecting suitable remote sensors for early detection and monitoring of DED in forests.

Conclusions and future perspectives

This review highlights the capability of vegetation spectroscopy to improve the assessment of forest disturbances induced by diseases in a timely and cost-effective manner. Using several optical sensors from leaf to landscape level, a number of diseases characterized by variable pathogenic processes have been detected, identified and quantified at numerous sites worldwide. Overall, these studies have pointed to the green and red regions of the VIS, the red-edge and the early NIR, i.e., the regions related to chlorophyll changes and symptom development, as the spectral regions most sensitive to disease development. Early disease stages usually produce a rapid increase in green and red reflectance and a small decrease in early NIR, whereas late disease stages result in a decrease in the green and NIR regions and an increase in SWIR, mostly related to water content.

This review also highlights some major issues to be addressed in the future. First, many major forest diseases and geographic areas remain unexplored by spectroscopic studies. Second, further development of high-resolution, cost-effective and portable spectral sensors is required for improving the assessment of forest diseases, since hyperspectral data have shown their capability to assist with early detection of tree stress, e.g., slight declines in chlorophyll and leaf water content, giving forest managers an opportunity to perform efficient disease control. Third, since it has been demonstrated that leaf-level spectroscopy can be effectively used as ground reference or training input for airborne platforms and scaled to field and landscape levels, more studies should focus on linking spectral data collected at different scales. Fourth, since sensors mounted on airborne platforms make data acquisition an expensive process, and only a few satellite sensors are operational to date (their application has been restrained by limited spatial coverage and high spectral noise), a potential solution is to mount hyperspectral sensors on UAV which, although largely used in agriculture, have not been operated for forest disease assessment. One limitation, however, is the obligation to meet UAV regulations and policies that may vary considerably from region to region. Fifth, data archives, especially those of hyperspectral sensors, have to increase both temporal and spatial coverage since long-term monitoring is required to assess historical impacts of diseases on forests. Sixth, since the disturbances affecting the same forested regions may come from a range of sources, and one disturbance may influence forest responses to another, further studies should focus on differentiating between disease-caused forest damage and other types of damage. Since one major challenge is that single sensors are typically not suitable for this task, a likely solution, together with improvements in the sensitivity and resolution of instrumentation, may be the development of a multi-sensor approach. Seventh, in line with the issues above, algorithms for modelling spectra-disturbance relationships will need to be continually refined or redeveloped to take advantage of new data and novel landscape changes caused by pathogens.

Change history

24 August 2021

The original version is updated due to the change in the style and font in tables and figs provided by the author.

Abbreviations

- ADAR: Airborne data acquisition and registration
- AVIRIS: Airborne visible/infrared imaging spectrometer
- CAO: Carnegie airborne observatory
- CASI: Compact airborne spectrographic imager
- CI: Chlorophyll index
- DED: Dutch elm disease
- DMSC: Digital multi-spectral camera
- EVI: Enhanced vegetation index
- GA: Genetic algorithm
- GEOBIA: Geographic object-based image analysis
- GPR: Gaussian process regression
- GRSAI: Green–red spectral area index
- HS: Hyperspectral sensor
- HS-I: Hyperspectral imaging sensor
- Lasso: Least absolute shrinkage and selection operator
- LIBERTY: Leaf incorporating biochemistry exhibiting reflectance and transmittance yields model
- MASTER: MODIS (moderate resolution imaging spectroradiometer)/ASTER (advanced spaceborne thermal emission and reflection radiometer)
- MLD: Mycosphaerella leaf disease
- MNF: Minimum noise fraction
- MS: Multispectral sensor
- MS-I: Multispectral imaging sensor
- NAIP: National agriculture imagery program
- NDIwilt: Normalized difference wilt index
- NDVI: Normalized difference vegetation index
- NDWI: Normalized difference water index
- NGRDI: Normalized green red difference index
- NIR: Near-infrared
- NPV: Non-photosynthetic vegetation
- NWI: Normalized wilt index
- PC: Principal component
- PCA: Principal component analysis
- PCR: Principal component regression
- PLSDA: Partial least squares discriminant analysis
- PLSR: Partial least squares regression
- PRI: Photochemical reflectance index
- PROSPECT: Properties spectra model
- PWD: Pine wilt disease
- PWN: Pine wilt nematode
- RATIO975: Three-band ratio VSI centered at 975 nm
- RATIO1200: Three-band ratio VSI centered at 1200 nm
- RF: Random forests
- ROD: Rapid ʻŌhiʻa Death
- RVSI: Red-edge vegetation stress index
- RWC: Relative water content
- SMLR: Stepwise multiple linear regression
- SOD: Sudden oak death
- SVR: Support vector regression
- SWIR: Shortwave-infrared
- UAV: Unmanned airborne vehicles
- VARI: Vegetation atmospherically resistant index
- VIgreen: Vegetation index green
- VIS: Visible
- VSI: Vegetation spectral indices
- WP: Wavelength position

References

Asner GP, Martin RE (2008) Spectral and chemical analysis of tropical forests: scaling from leaf to canopy levels. Remote Sens Environ 112:3958–3970

Asner GP, Martin RE, Keith LM, Heller WP, Hughes MA, Vaughn NR, Hughes RF, Balzotti C (2018) A spectral mapping signature for the Rapid Ohia Death (ROD) pathogen in Hawaiian forests. Remote Sens 10:404

Barry KM, Corkrey R, Pham Thi H, Ridge S, Mohammed CL (2011) Spectral characterization of necrosis from reflectance of Eucalyptus globulus leaves with Mycosphaerella leaf disease or subjected to artificial lesions. Int J Remote Sens 32:9243–9259

Bock CH, Poole GH, Parker PE, Gottwald TR (2010) Plant disease severity estimated visually, by digital photography and image analysis, and by hyperspectral imaging. Crit Rev Plant Sci 29:59–107

Boyd IL, Freer-Smith PH, Gilligan CA, Godfray HCJ (2013) The consequence of tree pests and diseases for ecosystem services. Science 342:1235773

Bradshaw RE (2004) Dothistroma (red-band) needle blight of pines and the dothistromin toxin: a review. For Pathol 34:163–185

Calzone A, Cotrozzi L, Remorini D, Lorenzini G, Nali C, Pellegrini E (2021) Oxidative stress assessment by a spectroscopic approach in pomegranate plants under a gradient of ozone concentrations. Environ Exp Bot 182:104309

Chen G, Meentemeyer RK (2016) Remote sensing of forest damage by diseases and insects. In: Weng Q (ed) Remote sensing for sustainability. CRC Press, Boca Raton, pp 145–162

Chen G, Metz MR, Rizzo DM, Dillon WW, Meentemeyer RK (2015) Object-based assessment of burn severity in diseased forests using high-spatial and high-spectral resolution MASTER airborne imagery. ISPRS J Photogramm Remote Sens 102:38–47

Conrad AO, Bonello P (2016) Application of infrared and Raman spectroscopy for the identification of disease resistant trees. Front Plant Sci 6:1152

Coops NC, Stone C (2005) A comparison of field-based and modelled reflectance spectra from damaged Pinus radiata foliage. Aust J Bot 53:417–429

Coops N, Stanford M, Old K, Dudzinski M, Culvenor D, Stone C (2003) Assessment of dothistroma needle blight of Pinus radiata using airborne hyperspectral imagery. Phytopathology 93:1524–1532

Coops NC, Goodwin N, Stone C, Sims N (2006) Application of narrow-band digital camera imagery to plantation canopy condition assessment. Can J Remote Sens 32:19–32

Coops NC, Goodwin N, Stone C (2004) Predicting Sphaeropsis sapinea damage on Pinus radiata stands from CASI-2 using spectral mixture analysis. In: IEEE International Geoscience and Remote Sensing Symposium, Anchorage, AK, USA, pp 1007–1012

Cotrozzi L, Couture JJ (2020) Hyperspectral assessment of plant responses to multi-stress environments: prospects for managing protected agrosystems. Plants People Planet 2:244–258

Cotrozzi L, Townsend PA, Pellegrini E, Nali C, Couture JJ (2018) Reflectance spectroscopy: a novel approach to better understand and monitor the impact of air pollution on Mediterranean plants. Environ Sci Pollut Res 25:8249–8267

Cotrozzi L, Peron R, Tuinstra MR, Mickelbart MV, Couture JJ (2020) Spectral phenotyping of physiological and anatomical leaf traits related with maize water status. Plant Physiol 184:1363–1377

Couture JJ, Singh A, Rubert-Nason KF, Serbin SP, Lindroth RL, Townsend PA (2016) Spectroscopic determination of ecologically relevant plant secondary metabolites. Methods Ecol Evol 7:1402–1412

Curran PJ (1989) Remote sensing of foliar chemistry. Remote Sens Environ 30:271–278

Dawson TP, Curran PJ, Plummer SE (1998) LIBERTY-Modeling the effects of leaf biochemical concentration on reflectance spectra. Remote Sens Environ 65:50–60

Everitt JH, Escobar DE, Appel DN, Riggs WG, Davis MR (1999) Using airborne digital imagery for detecting oak wilt disease. Plant Dis 83:502–505

Fallon B, Yang A, Lapadat C, Armour I, Juzwik J, Montgomery RA, Cavender-Bares J (2020) Spectral differentiation of oak wilt from foliar fungal disease and drought is correlated with physiological changes. Tree Physiol 40:377–390

Féret J-B, Gitelson AA, Noble SD, Jacquemoud S (2017) PROSPECT-D: towards modeling leaf optical properties through a complete lifecycle. Remote Sens Environ 193:204–215

Gamon J, Serrano L, Surfus JS (1997) The photochemical reflectance index: an optical indicator of photosynthetic radiation use efficiency across species, functional types, and nutrient levels. Oecologia 112:492–501

Gao BC (1996) NDWI: a normalized difference water index for remote sensing of vegetation liquid water from space. Remote Sens Environ 58:257–266

Gates DM, Keegan HJ, Schleter JC, Weidner VR (1965) Spectral properties of plants. Appl Opt 4:11–20

Geils BW, Hummer KE, Hunt RS (2010) White pines, Ribes, and blister rust: a review and synthesis. For Pathol 40:147–185

Goodwin N, Coops NC, Stone C (2005) Assessing plantation canopy from airborne imagery using spectral mixture analysis and fractional abundances. Int J Appl Earth Obs 7:11–28

Grossman YL, Ustin SL, Jacquemoud S, Sanderson EW, Schmuck G, Verdebout J (1996) Critique of stepwise multiple linear regression for the extraction of leaf biochemistry information from leaf reflectance data. Remote Sens Environ 56:182–193

Grünwald NJ, LeBoldus JM, Hamelin RC (2019) Ecology and evolution of the Sudden Oak Death pathogen Phytophthora ramorum. Annu Rev Phytopathol 57:14.1-14.21

Guo Q, Kelly M, Gong P, Liu D (2007) An object-based classification approach in mapping tree mortality using high spatial resolution imagery. GISci Remote Sens 44:24–47

Hatala JA, Crabtree RL, Halligan KQ, Moorcroft PR (2010) Landscape-scale patterns of forest pest and pathogen damage in the Greater Yellowstone Ecosystem. Remote Sens Environ 114:375–384

He Y, Chen G, Potter C, Meentemeyer RK (2019) Integrating multi-sensor remote sensing and species distribution modeling to map the spread of emerging forest disease and tree mortality. Remote Sens Environ 231:111238

Heller RC, Bega RV (1973) Detection of forest diseases by remote sensing. J For 71:18–21

Hultquist C, Chen G, Zhao K (2014) A comparison of Gaussian process regression, random forests and support vector regression for burn severity assessment in diseased forests. Remote Sens Lett 5:723–732

Hunter GC, Crous PW, Carnegie AJ, Burgess TI, Wingfield MJ (2011) Mycosphaerella and Teratosphaeria diseases of Eucalyptus: easily confused and with serious consequences. Fungal Divers 50:145–166

Iordache M-D, Mantas V, Baltazar E, Pauly K, Lewyckyi N (2020) A machine learning approach to detecting pine wilt disease using airborne spectral imagery. Remote Sens 12:2280

Ju Y, Pan J, Wang X, Zhang H (2014) Detection of Bursaphelenchus xylophilus infection in Pinus massoniana from hyperspectral data. Nematology 16:1197–1207

Keith LM, Hughes RF, Sugiyama LS, Heller WP, Bushe BC, Friday JB (2015) First report of Ceratocystis Wilt on ‘Ōhi’a (Metrosideros polymorpha). Plant Dis 99:1276

Kelly M, Liu D (2004) Mapping diseased oak trees using ADAR imagery. Geocarto Int 19:57–64

Kelly M, Meentemeyer RK (2002) Landscape dynamics of the spread of Sudden Oak Death. Photogramm Eng Remote Sens 68:1001–1009

Kelly M, Shaari D, Guo Q, Liu D (2004) A comparison of standard and hybrid classifier methods for mapping hardwood mortality in areas affected by “Sudden Oak Death.” Photogramm Eng Remote Sens 70:1229–1239

Kelly NM (2002) Monitoring sudden oak death in California using high-resolution imagery. In: Standiford RB et al. (eds) Proceedings of the Fifth Symposium on Oak Woodlands: Oaks in California's Challenging Landscape. Gen. Tech. Rep. PSW-GTR-184. Albany, CA, USA: Pacific Southwest Research Station, Forest Service, USDA, pp 799–810

Khaled AY, Aziz SA, Bejo SK, Nawi NM, Seman IA, Onwude DI (2018) Early detection of diseases in plant tissue using spectroscopy–applications and limitations. Appl Spectrosc Rev 53:36–64

Kim S-R, Lee W-K, Lim C-H, Kim M, Kafatos MC, Lee S-H, Lee S-S (2018) Hyperspectral analysis of pine wilt disease to determine an optimal detection index. Forests 9:115

Koch KA, Quiram GL, Venette RC (2010) A review of oak wilt management: a summary of treatment options and their efficacy. Urban For Urban Green 9:1–8

Kubono T, Ito S (2002) Raffaelea quercivora sp. nov. associated with mass mortality of Japanese oak, and the ambrosia beetle (Platypus quercivorus). Mycoscience 43:255–260

Kumar L, Schmidt K, Dury S, Skidmore A (2001) Imaging spectrometry and vegetation science. In: van der Meer F, De Jong SM (eds) Imaging spectrometry. Kluwer Academic Publisher, Dordrecht, pp 111–155

Leckie DG, Jay C, Gougeon FA, Sturrock RN, Paradine D (2004) Detection and assessment of trees with Phellinus weirii (laminated root rot) using high resolution multi-spectral imagery. Int J Remote Sens 25:793–818

Liu D, Kelly M, Gong P (2006) A spatial-temporal approach to monitoring forest disease spread using multi-temporal high spatial resolution imagery. Remote Sens Environ 101:167–180

Liu D, Kelly M, Gong P, Guo Q (2007) Characterizing spatial-temporal tree mortality patterns associated with a new forest disease. For Ecol Manag 253:220–231

Lowe A, Harrison N, French AP (2017) Hyperspectral image analysis techniques for the detection and classification of the early onset of plant disease and stress. Plant Methods 13:80

Mahlein A-K (2015) Plant disease detection by imaging sensors-parallels and specific demands for precision agriculture and plant phenotyping. Plant Dis 100:241–251

Mahlein A-K, Kuska MT, Behmann J, Polder G, Walter A (2018) Hyperspectral sensors and imaging technologies in phytopathology: state of the art. Annu Rev Phytopathol 56:535–558

Marchica A, Loré S, Cotrozzi L, Lorenzini G, Nali C, Pellegrini E, Remorini D (2019) Early detection of sage (Salvia officinalis L.) responses to ozone using reflectance spectroscopy. Plants 8:346

Martens H (2001) Reliable and relevant modelling of real world data: a personal account of the development of PLS regression. Chemom Intell Lab Syst 58:85–95

Merzlyak MN, Gitelson AA, Chikunova OB, Rakitin VY (1999) Non-destructive optical detection of pigment changes during leaf senescence and fruit ripening. Physiol Plant 106:135–141

Mishra P, Asaari MSM, Herrero-Langreo A, Lohumi S, Diezma B, Scheunders P (2017) Close range hyperspectral imaging of plants: A review. Biosyst Eng 164:49–67

Mutanga O, Skidmore AK (2007) Red edge shift and biochemical content in grass canopies. ISPRS J Photogramm Remote Sens 62:34–42

Pan Y, Birdsey RA, Fang J (2011) A large and persistent carbon sink in the world’s forests. Science 333:988–993

Peñuelas J, Filella I, Lloret P, Muñoz F, Vilajeliu M (1995) Reflectance assessment of mite effects on apple trees. Int J Remote Sens 16:2727–2733

Pietrzykowski E, Stone C, Pinkard E, Mohammed C (2006) Effects of Mycosphaerella leaf disease on the spectral reflectance properties of juvenile Eucalyptus globulus foliage. For Pathol 36:334–348

Pietrzykowski E, Sims N, Stone C, Pinkard L, Mohammed C (2007) Predicting Mycosphaerella leaf disease severity in a Eucalyptus globulus plantation using multi-spectral imagery. South For J 69:175–182

Poona NK, Ismail R (2013) Discriminating the occurrence of pitch canker fungus in Pinus radiata trees using QuickBird imagery and artificial neural networks. South For 75:29–40

Pu R, Ge S, Kelly NM, Gong P (2003) Spectral absorption features as indicators of water status in coast live oak (Quercus agrifolia) leaves. Int J Remote Sens 24:1799–1810

Pu R, Foschi L, Gong P (2004) Spectral feature analysis for assessment of water status and health level in coast live oak (Quercus agrifolia) leaves. Int J Remote Sens 25:4267–4286

Pu R, Kelly M, Anderson GL, Gong P (2008) Using CASI hyperspectral imagery to detect mortality and vegetation stress associated with a new hardwood forest disease. Photogramm Eng Remote Sens 74:65–75

Rasti B, Scheunders P, Ghamisi P, Licciardi G, Chanussot J (2018) Noise reduction in hyperspectral imagery: overview and application. Remote Sens 10:482

Rouse JW, Haas RH, Schell JA, Deering DW (1974) Monitoring vegetation systems in the Great Plains with ERTS. NASA Spec Publ 351:309

Santini A, Faccoli M (2015) Dutch elm disease and elm bark beetles: a century of association. iForest 8:126–134

Serbin SP, Singh A, McNeil BE, Kingdon CC, Townsend PA (2014) Spectroscopic determination of leaf morphological and biochemical traits for northern temperate and boreal tree species. Ecol Appl 24:1651–1669

Serbin SP, Singh A, Desai AR, Dubois SG, Jablonski AD, Kingdon CC, Kruger EL, Townsend PA (2015) Remotely estimating photosynthetic capacity, and its response to temperature, in vegetation canopies using imaging spectroscopy. Remote Sens Environ 167:78–87

Shaw GA, Burke HK (2003) Spectral imaging for remote sensing. Lincoln Lab J 14:3–28

Sims NC, Stone C, Coops NC, Ryan P (2007) Assessing the health of Pinus radiata plantations using remote sensing data and decision tree analysis. N Z J For Sci 37:57–80

Singh A, Serbin SP, McNeil BE, Kingdon CC, Townsend PA (2015) Imaging spectroscopy algorithms for mapping canopy foliar chemical and morphological traits and their uncertainties. Ecol Appl 25:2180–2197

Smigaj M, Gaulton R, Suárez JC, Barr SL (2019) Combined use of spectral and structural characteristics for improved red band needle blight detection in pine plantation stands. For Ecol Manag 434:213–223

Smith KL, Steven MD, Colls JJ (2004) Use of hyperspectral derivative tools in red-edge region to identify plant stress response to gas leaks. Remote Sens Environ 92:207–212

Stanosz GR, Blodgett JT, Smith DR, Kruger EL (2002) Water stress and Sphaeropsis sapinea as a latent pathogen of red pine seedlings. New Phytol 149:531–538

Stone C, Mohammed C (2017) Application of remote sensing technologies for assessing planted forests damaged by insect pests and fungal pathogens: a review. Curr For Rep 3:75–92

Stone C, Chisholm LA, McDonald S (2003) Spectral reflectance characteristics of Pinus radiata needles affected by dothistroma needle blight. Can J Bot 81:560–569

Thies WG (1998) Laminated root rot. In: Hansen EM, Lewis KJ (eds) Compendium of conifer diseases. APS Press, St. Paul, pp 14–15

Thomas S, Kuska MT, Bohnenkamp D, Brugger A, Alisaac E, Wahabzada M, Behmann J, Mahlein AK (2018) Benefits of hyperspectral imaging for plant disease detection and plant protection: a technical perspective. J Plant Dis Prot 125:5–20

Tibshirani R (2011) Regression shrinkage and selection via the Lasso: a retrospective. J Royal Stat Soc B 73:273–282

Uto K, Takabayashi Y, Kosugi Y (2008) Hyperspectral analysis of Japanese oak wilt to determine normalized wilt index. In: IEEE International Geoscience and Remote Sensing Symposium, Boston, MA. Piscataway, NJ, USA: Institute of Electrical and Electronics Engineers, pp II-295-II-298

Uto K, Kosugi Y, Ogata T (2009) Evaluation of oak wilt index based on genetic programming. In: First Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing, Grenoble. Piscataway, NJ, USA: Institute of Electrical and Electronics Engineers, pp 1–4

Uto K, Massaki T, Kosugi Y, Saito G, Ogata T (2011) Band selection for Japanese oak wilt extraction in autumnal tints of forest based on NWI. In: 3rd Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Lisbon. Piscataway, NJ, USA: Institute of Electrical and Electronics Engineers, pp 1–4

Vaughn NR, Asner GP, Brodrick PG, Martin RE, Heckler JW, Knapp DE, Hughes RF (2018) An approach for high-resolution mapping of Hawaiian Metrosideros forest mortality using laser-guided imaging spectroscopy. Remote Sens 10:502