Abstract

Background

Closing the gap between discoveries that could improve health and their widespread impact on routine health care practice requires greater attention to the factors that influence dissemination and implementation of evidence-based practices. Evidence synthesis projects (e.g., systematic reviews) could contribute to this effort by collecting and synthesizing data relevant to dissemination and implementation. Such an advance would facilitate the spread of high-value, effective, and sustainable interventions.

Objective

The objective of this paper is to evaluate the feasibility of extracting factors related to implementation during evidence synthesis in order to enhance the replicability of successful interventions in health care settings.

Design

Drawing on the implementation science literature, we suggest 10 established implementation measures that should be considered when conducting evidence synthesis projects. We describe opportunities to assess these constructs in current literature and illustrate these methods through an example of a systematic review.

Subjects

Twenty-nine studies of interventions aimed at improving clinician-patient communication in clinical settings.

Key Results

We identified acceptability, adoption, appropriateness, feasibility, fidelity, implementation cost, intervention complexity, penetration, reach, and sustainability as factors that are feasible and appropriate to extract during an evidence synthesis project.

Conclusions

To fully understand the potential value of a health care innovation, it is important to consider not only its effectiveness, but also the process, demands, and resource requirements involved in downstream implementation. While there is variation in the degree to which intervention studies currently report implementation factors, there is a growing demand for this information. Abstracting information about these factors may enhance the value of systematic reviews and other evidence synthesis efforts, improving the dissemination and adoption of interventions that are effective, feasible, and sustainable across different contexts.

INTRODUCTION

The field of implementation science aims to address the time lag and quality chasm between discoveries that could improve health, and the widespread dissemination and adoption of those findings.1,2,3,4 To close this gap, there is a need for greater attention to factors that influence dissemination and implementation of evidence-based practices. The field of implementation science provides guiding frameworks and methods to facilitate successful implementation and sustainment of interventions in the “real world.”1,2,3,4,5

Historically, intervention design and evaluation methods focused primarily on effectiveness outcomes without considering system-level factors such as implementation complexity and cost, impact on workflow, and sustainability. As a result, interventions that improve outcomes in efficacy trials are frequently abandoned due to their failure to achieve the same, or any, impact in a different setting.6 Often, adoption or sustainability efforts fail due to factors such as resource allocation, organizational structure, intervention adaptability, and lack of leadership support. Even interventions as basic as proper hand hygiene among providers can be subject to implementation barriers.7 Considering these factors early in the design and evaluation of interventions may promote the dissemination of interventions that are effective, feasible, and sustainable across different contexts.

Evidence synthesis projects (e.g., systematic reviews) typically focus on effectiveness outcomes, such as clinical and health services outcomes (quality of life, cost of care, etc.), an approach that does not provide the information necessary to understand potential implementation challenges and opportunities. The findings from these synthesis efforts often inform the design of future interventions, and this gap creates a cycle where important implementation considerations are ignored, preventing interventions from moving beyond an initial pilot stage. Incorporating implementation and dissemination measures into evidence synthesis efforts could help facilitate the spread of high-value, effective, and sustainable interventions.

The objective of this paper is to evaluate the feasibility of extracting factors related to implementation during evidence synthesis in order to enhance the replicability of successful interventions in health care settings. We describe opportunities to use established measures from the field of implementation science to abstract information from health care delivery intervention studies (clinical studies in which participants are assigned to groups that receive one or more intervention/treatment [or no intervention] so that researchers can evaluate the effects of the interventions on biomedical or health-related outcomes).8 We illustrate the application of these recommendations by presenting a systematic review of clinician-patient communication skills interventions.

DEFINING TERMINOLOGY

We reviewed landmark papers1,3,5,6,9,10,11,12 describing implementation measures to identify widely used, appropriate, and feasible constructs to abstract during literature reviews. Our goal in selecting measures was to codify previous interventions’ technical components and underlying social, contextual, and dynamic factors that may affect implementation, which are crucial to successful replication.6 Table 1 presents 10 identified factors: acceptability*, adoption*, appropriateness*, feasibility*, fidelity*, implementation cost*, intervention complexity, penetration*, reach, and sustainability*. Most of these factors (marked with an asterisk above) are outlined and defined in Proctor et al.1 The constructs of reach and adoption derive from the Reach, Effectiveness, Adoption, Implementation, and Maintenance (RE-AIM) model,9 a widely used framework for evaluating implementation outcomes. In addition, we included intervention complexity, a core construct in the Consolidated Framework for Implementation Research (CFIR)10 and other widely used implementation science frameworks.11

IMPLEMENTATION FACTORS AND ABSTRACTION RECOMMENDATIONS

Below we describe strategies for abstracting ten implementation factors from intervention studies during evidence synthesis. In cases where included studies cite previous papers that describe the intervention, these papers should also be reviewed. Table 1 presents a definition of each factor, examples of relevant information that can be abstracted from intervention studies, and the sections in which the relevant information is most often found.
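One way to picture the abstraction step is as a small structured form that a reviewer fills in for each study. The following is a minimal sketch in Python, not the instrument used in this paper: the class name `AbstractionRecord`, its methods, and the example study identifier are all hypothetical, and only the ten factor names come from the text.

```python
from dataclasses import dataclass, field

# The ten implementation factors named in the text.
FACTORS = (
    "acceptability", "adoption", "appropriateness", "feasibility",
    "fidelity", "implementation cost", "intervention complexity",
    "penetration", "reach", "sustainability",
)


@dataclass
class AbstractionRecord:
    """One reviewer's notes for one study (hypothetical structure)."""

    study_id: str
    # factor name -> (supporting excerpt, where it was found,
    # e.g. "Methods", "Table 1", or "previous publication")
    evidence: dict[str, tuple[str, str]] = field(default_factory=dict)

    def note(self, factor: str, excerpt: str, location: str) -> None:
        """Record supporting information for one factor."""
        if factor not in FACTORS:
            raise ValueError(f"unknown factor: {factor}")
        self.evidence[factor] = (excerpt, location)

    def described(self, factor: str) -> bool:
        """True if the reviewer found supporting information."""
        return factor in self.evidence

    def missing(self) -> list[str]:
        """Factors for which nothing has been abstracted yet."""
        return [f for f in FACTORS if f not in self.evidence]


# Illustrative use with made-up content.
record = AbstractionRecord("example-study")
record.note("reach", "baseline characteristics of participants", "Table 1")
print(record.described("reach"), len(record.missing()))
```

Recording the location alongside each excerpt reflects the point above that relevant information may sit in different sections or in earlier papers about the same intervention.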

Acceptability

During implementation, acceptability is the “perception among implementation stakeholders that a given treatment, service, practice, or innovation is agreeable, palatable, or satisfactory.”1 Acceptability of an intervention by the target population is strongly associated with likelihood of adoption, as described in seminal work by Everett Rogers.12 Acceptability is typically measured through a survey of stakeholders and often focuses on provider and/or patient satisfaction.13, 14 If acceptability is not assessed in an intervention trial, it might be inferred in part from retention of providers and patients throughout the study, as dropout rates may indicate that participants do not find the intervention to be worthwhile, satisfactory, or agreeable.15 Caution should be taken in extrapolating retention rates to acceptability, because of the multiple factors that influence study retention, and comparing retention rates across studies that place varying emphasis on retention (e.g., highly controlled studies vs. pragmatic studies) could be misleading. However, if dropouts are surveyed or interviewed, these reported findings might provide a source of information about acceptability.

Adoption

Adoption is the “intention, initial decision, or action to try or employ an innovation or evidence-based practice.”1 Adoption is often influenced by other implementation factors (e.g., acceptability, feasibility, implementation cost)11 and is an outcome measure of implementation. Adoption can be extrapolated from the proportion of eligible providers (or clinics) that participate in part or all of an intervention.
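The proportion described above can be made concrete with a short calculation. This is an illustrative sketch only: the function name `adoption_proportion` and the counts in the example are hypothetical, and separating partial from full participation is an assumption about how a reviewer might record "part or all of an intervention."

```python
def adoption_proportion(eligible: int, partial: int, full: int) -> dict[str, float]:
    """Estimate adoption from counts of eligible and participating providers.

    eligible: providers (or clinics) that could have taken part
    partial:  those who took part in only some of the intervention
    full:     those who took part in all of it
    """
    if eligible <= 0:
        raise ValueError("eligible must be positive")
    if partial < 0 or full < 0 or partial + full > eligible:
        raise ValueError("participant counts must fit within eligible")
    return {
        "any_participation": (partial + full) / eligible,
        "full_participation": full / eligible,
    }


# Hypothetical counts, for illustration only.
result = adoption_proportion(eligible=40, partial=6, full=24)
print(result)
```

Reporting both figures keeps the distinction visible when studies differ in how much of an intervention providers actually took up.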

Appropriateness

Appropriateness (sometimes referred to as compatibility)9 is the “perceived fit, relevance, or compatibility of an innovation or evidence-based practice for a given practice setting, provider, consumer, or problem.”1 Interventions perceived as more appropriate by stakeholders are more likely to be adopted.11, 16 Appropriateness can be determined through provider satisfaction surveys that measure provider opinions about intervention usefulness or impact on workflow, as well as pre-implementation considerations about fit (e.g., whether the hospital has the resources needed to implement the intervention, or whether the intervention makes sense for the population of a given setting).

Feasibility

Feasibility is the “extent to which a new treatment, or an innovation, can be successfully used or carried out within a given agency or setting.”1 Settings with adequate time and resources (both actual and perceived) to adopt and continue an intervention are more likely to initiate the use of that intervention.1, 11 Feasibility considerations mostly consist of demands put on a provider or a system, including the cost of the intervention and the time and staffing demands to properly implement the intervention. Papers may address feasibility by describing the training time and staffing required, start-up costs, materials needed for the intervention, and the need for systems-level changes for implementation.

Fidelity

Fidelity is the “degree to which an intervention was implemented as it was prescribed in the original protocol or as it was intended by the program developers.”1 Higher levels of fidelity are associated with greater improvement in clinical outcomes.16 Fidelity is most relevant when an intervention is tested in multiple trials. It is typically measured through a fidelity checklist or post-implementation assessment of the degree to which the intervention was implemented as planned. In an evidence synthesis project, fidelity can be evaluated by examining multiple studies of the same intervention to determine whether later versions of the intervention resemble the original intervention or whether adaptations were made (and if so, the reasons why).

Implementation Cost

Implementation cost is the “cost impact of an implementation effort”1 and is often influenced by intervention complexity, implementation strategy, and setting. High implementation costs are frequently a barrier to adoption.17 Implementation costs are specific to costs related to the implementation process and are distinct from cost (or cost-effectiveness) outcomes that result from an intervention. Implementation costs are rarely reported in efficacy studies but may be estimated by reviewing reports of personnel requirements (including the need for new staff, or time demands for training/participation) and technology or equipment.

Intervention Complexity

Intervention complexity is the “perceived difficulty of implementation, reflected by duration, scope, radicalness, disruptiveness, centrality, and intricacy and number of steps required to implement.”9 Complexity can also influence the reproducibility of an intervention and the external validity of trials the intervention undergoes.18 Intervention complexity can be assessed by examining the time period over which an intervention is carried out, the number of steps and personnel required to carry out the intervention, or the number of trainings required to teach an intervention.

Penetration

Penetration is the “integration of a practice within a service setting and its subsystems.”1 Interventions that have buy-in from an organization and/or greater organizational support have greater uptake.11, 19 Penetration shares elements with reach, but is a system-level measure of the quality and scope of reach and is defined by the proportion of providers who use an intervention out of the anticipated providers.20 Penetration can be observed through multi-site data (if available) or through a description of intervention integration within a system.

Reach

Reach examines “the absolute number, proportion, and representativeness of individuals who are willing to participate in a given initiative [or intervention].”9 The greater the population that an efficacious intervention reaches, the greater positive impact it will have on population health outcomes. Certain measures of reach could also ensure that underserved populations receive appropriate care and services. Demographics tables typically present the characteristics of the population that received an intervention. In some cases, flow diagrams may present information about the characteristics of patients who dropped out of a study. Combining these data with information about the target population can provide insight about the representativeness of the study population and the patients who received the full intervention.

Sustainability

Sustainability (or maintenance)9 is the “extent to which a newly implemented treatment is maintained or institutionalized within a service setting’s ongoing, stable operations.”1 Organizations with resources to sustain an intervention are more likely to adopt and continue an intervention.11 An intervention that is more easily sustainable will also have greater longevity. Sustainability is rarely described in early intervention studies, but the discussion section may consider characteristics of the intervention or implementation process that influence sustainability. In an evidence synthesis, follow-up studies or reports may provide information about whether an intervention was expanded or sustained after initial financial and workforce support ended.

SYSTEMATIC REVIEW

Methods

To illustrate the feasibility of abstracting the above implementation measures, we conducted an evidence synthesis of 29 studies of physician communication skills interventions published between 1997 and 2017 (see Appendix). To be included, studies had to be randomized controlled trials or controlled observational studies focused on an interpersonal intervention aimed at improving clinician communication skills, and had to report an outcome aligned with the Quadruple Aim of improving patient experience, improving provider experience, containing costs, and improving population health outcomes.21 The studies were a subset of articles from a systematic review that was conducted for a previous evidence synthesis project.22 For this review, we focused on studies of physician communication skills interventions to facilitate comparison of implementation factors across similar types of interventions.

For this proof-of-concept systematic review, our objective was to determine whether implementation factors could be reliably abstracted using the above guidelines. Two reviewers (AT, GP) independently abstracted data about implementation considerations from each article. For each of the 10 aforementioned factors, the reviewers determined whether the measure was described in the article text, tables, appendices, and (when cited) previous publications about the intervention. For a measure to be counted as “described,” both reviewers needed to identify supporting information in the paper. Discrepancies were resolved by consensus with a third reviewer (MH). Percent agreement was initially 69%; notably, one of the raters was trained in implementation science methods and the other rater was new to the material. Consensus ratings favored the reviewer with more implementation science experience in 70 of the 91 (77%) disagreements.
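The agreement figures reported above can be checked with simple arithmetic. The sketch below is in Python and rests on one assumption not stated in the text: that both reviewers rated every study-factor pair, giving 29 × 10 = 290 paired ratings. The helper `percent_agreement` is a hypothetical illustration of how such a figure could be computed from two reviewers' yes/no ratings; it is not the authors' code.

```python
def percent_agreement(rater_a: list[bool], rater_b: list[bool]) -> float:
    """Percentage of paired yes/no ratings on which two raters agree."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100 * matches / len(rater_a)


# Figures from the text, plus the assumed total of paired ratings.
N_STUDIES = 29
N_FACTORS = 10
TOTAL_RATINGS = N_STUDIES * N_FACTORS  # assumption: every pair was rated
DISAGREEMENTS = 91
FAVORED_EXPERIENCED = 70

initial_agreement = 100 * (TOTAL_RATINGS - DISAGREEMENTS) / TOTAL_RATINGS
favored_share = 100 * FAVORED_EXPERIENCED / DISAGREEMENTS

print(f"{initial_agreement:.1f}% initial agreement")
print(f"{favored_share:.1f}% of disagreements favored the experienced rater")
```

Under that assumption the calculation gives about 68.6% and 76.9%, which round to the 69% and 77% reported.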

Results

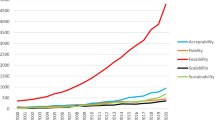

Among the 29 studies, there was variation in the proportion that reported information relevant to acceptability (48%), adoption (76%), appropriateness (66%), feasibility (62%), fidelity (66%), implementation cost (79%), intervention complexity (100%), penetration (76%), reach (86%), and sustainability (24%). Approximately two-thirds (19/29) of studies included a measure of fidelity. Only 1 (3%) study contained information related to all 10 implementation measures. Detailed data on the studies and their considerations can be found in Table 2 and the Appendix Table. Based on these results, the full systematic literature review incorporated a measure of intervention complexity (given that it was widely addressed). In doing so, the review revealed that interpersonal communication interventions with lower demands on provider time and effort were often as effective as those with higher demands.22
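Proportions like those above come from tallying, for each factor, how many studies described it. The following Python sketch shows one way such a tally could be done. The function names and the four-study data set are hypothetical and for illustration only; they are not the review's data. The final line checks the one count the text does give (19 of 29 studies for fidelity).

```python
from collections import Counter


def reporting_rates(studies: dict[str, set[str]], factors: list[str]) -> dict[str, float]:
    """Percentage of studies that describe each factor."""
    if not studies:
        raise ValueError("at least one study is required")
    counts = Counter(f for described in studies.values() for f in described)
    return {f: 100 * counts[f] / len(studies) for f in factors}


def complete_studies(studies: dict[str, set[str]], factors: list[str]) -> list[str]:
    """Studies that describe every factor in the list."""
    return [sid for sid, described in studies.items() if set(factors) <= described]


# Made-up example: which factors each study was judged to describe.
factors = ["reach", "fidelity", "intervention complexity", "sustainability"]
studies = {
    "study-A": {"reach", "fidelity", "intervention complexity"},
    "study-B": {"reach", "intervention complexity"},
    "study-C": {"intervention complexity"},
    "study-D": {"reach", "fidelity", "intervention complexity", "sustainability"},
}

print(reporting_rates(studies, factors))
print(complete_studies(studies, factors))

# Consistency check against the text: 19 of 29 studies is about 66%.
print(round(100 * 19 / 29))
```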

DISCUSSION

To fully understand the downstream potential of an intervention, it is important to consider its effectiveness and the process, demands, and resource requirements involved in implementation. While there is variation in the degree to which intervention studies currently report implementation factors, there is a growing demand for this information. As is evident from our systematic review, it is often feasible to extract implementation information during a literature review even though it might not be explicitly reported in analyzed studies. As intervention studies increasingly report implementation factors, evidence synthesis efforts should evolve to abstract and synthesize this information.

We illustrate that abstracting information about factors such as acceptability, adoption, appropriateness, feasibility, fidelity, implementation cost, intervention complexity, penetration, reach, and sustainability may enhance the value of systematic reviews. Including this information will inform clinicians, researchers, and health system leaders about stakeholder preferences, resource demands, and other factors that may influence implementation and dissemination success. In our proof-of-concept extraction, we found that all papers included some description of intervention complexity, and this information could be used to examine the association between intervention complexity and study outcomes.22 Such information could also provide justification for certain implementation strategies or inspire innovations to address implementation barriers. Standardizing the practice of incorporating implementation information could also improve intervention design methods, resulting in greater uptake and implementation of interventions with proven efficacy in improving outcomes of interest.

We found that few implementation measures are routinely reported or described, although several can be estimated from frequently reported information. Some factors were buried in unusual places in the text, requiring a comprehensive review of each article and potential review of previous publications that describe the intervention under investigation. However, all the studies reported information relevant to multiple implementation factors, although the authors of these studies might not have explicitly labeled them as such. This demonstrates that our method is appropriate and feasible with a diverse pool of studies. The time and resources required to abstract implementation factors are also likely to vary by topic and intervention complexity.

This study builds on a growing body of literature that describes recommendations for incorporating implementation science into intervention design, evaluation, and evidence synthesis.2, 3, 23,24,25,26 We advocate for the development and adoption of a more standardized structure for reporting implementation factors in intervention trials to facilitate integration of this information into evidence synthesis. For example, studies of new interventions should consider evaluating stakeholder perceptions (e.g., acceptability, appropriateness, feasibility). One mechanism to support this could be a new checklist for the Consolidated Standards of Reporting Trials (CONSORT), similar to the non-pharmacological treatment intervention extension, which already includes constructs related to appropriateness (tailoring for each participant) and fidelity.27 CONSORT is widely used in the medical literature, and the CONSORT statement provides checklists that help ensure transparent and complete reporting of randomized trials. The Quality Improvement Minimum Quality Criteria Set (QI-MQCS), which offers guidelines for evaluating quality improvement interventions, is another resource and incorporates domains such as fidelity, reach, and sustainability.28 Finally, augmenting the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) may be an effective avenue to encourage the inclusion of implementation factors in evidence reviews.29 More extensive adoption of certain existing reporting approaches (such as Standards for Reporting Implementation Studies [StaRI]) could also advance this goal.30

Similarly, it is important to publish information about the intervention and implementation processes (in a background paper or an appendix, if necessary) that can form the basis for understanding intervention complexity and implementation costs. Reach can usually be extrapolated from conventional demographics tables, but it is valuable to incorporate a discussion about the representativeness of the study population relative to the potential population that may benefit from an intervention. Other factors, such as fidelity and sustainability, may need to be reported in follow-up papers. Standardizing and explicitly labeling implementation factors will help reduce error in the abstraction process and reduce the time and effort needed to abstract and synthesize this information.

Importantly, the goal of this paper is to demonstrate the feasibility of abstracting information about implementation factors. The specific factors we chose will benefit from iteration and refinement, as well as expert review. A possible limitation of abstracting implementation data is the additional personnel time required to perform the extraction. For our systematic review, two reviewers completed implementation factor abstraction in just over 1 week. Another limitation is that previous experience with implementation science may be an important consideration in accurately identifying implementation factors, particularly when they are not explicitly defined in the text. Not all teams are familiar with implementation science, and additional training may be required. Finally, some implementation factors are qualitative in nature or lack standardized measures, so it may not be feasible to synthesize this information in meta-analyses. This may change given heightened interest in quantitative methodology in the field of implementation science. The qualitative nature of the process also creates the possibility of bias and misinterpretation of information presented in studies. Extra care should be taken before making inferences from data garnered from this abstraction method.

In summary, while there is variation in the degree to which intervention studies currently report implementation factors, there is a growing demand for this information. This paper aims to enhance the evidence synthesis process by providing researchers with potential implementation factors to aid in translation. While abstracting information surrounding these 10 factors does not guarantee replicability, it can reveal barriers to implementation. It can also highlight factors that were not addressed in previous studies that future implementers may explore. Recent recommendations by Powell et al. and the CFIR website also stress the importance of tailoring and adapting implementation and sustainment strategies. Abstracting information about these 10 factors could provide initial insight into strategies that may be helpful in similar settings.3, 10 Abstracting information about implementation factors may increase the value of evidence synthesis efforts, improving the dissemination and adoption of interventions that are effective, feasible, and sustainable across different contexts. In addition, enriching reviews with this information is likely to create a new avenue to increase the cumulative knowledge of implementation science among the scientific community, and support design efforts that facilitate dissemination.

Data Availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Proctor E, Silmere H, Raghavan R, et al. Outcomes for Implementation Research: Conceptual Distinctions, Measurement Challenges, and Research Agenda. Adm Policy Ment Heal Ment Heal Serv Res. 2011;38(2):65–76. https://doi.org/10.1007/s10488-010-0319-7

Proctor EK, Powell BJ, McMillen J. Implementation strategies: recommendations for specifying and reporting. Implementation Science. 2013;8:139.

Powell BJ, Waltz TJ, Chinman MJ, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implementation Science. 2015;10:21. https://doi.org/10.1186/s13012-015-0209-1

Glasgow RE, Vinson C, Chambers D, et al. National Institutes of Health approaches to dissemination and implementation science: current and future directions. Am J Public Health. 2012;102(7):1274–81.

Brownson RC, Colditz GA, Proctor EK. Dissemination and Implementation Research in Health: Translating Science to Practice. 2nd ed. New York: Oxford University Press; 2018.

Horton TJ, Illingworth JH, Warburton WH. Overcoming Challenges in Codifying and Replicating Complex Health Care Interventions. Health Affairs. 2018;37(2). https://doi.org/10.1377/hlthaff.2017.1161

Schwartz R, Sharek PJ. Changing the Game for Hand Hygiene Conversations. Hospital Pediatrics. 2018;8(3):168–9. https://doi.org/10.1542/hpeds.2017-0205

Glossary of Common Site Terms. Available at: https://clinicaltrials.gov/ct2/about-studies/glossary. Accessed October 6, 2019.

Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89(9):1322–7.

Center for Clinical Management Research. Consolidated framework for implementation research. Ann Arbor: Center for Clinical Management Research; 2014. Available from: http://cfirguide.org/. Accessed October 6, 2018.

Greenhalgh T, Robert G, Macfarlane F, et al. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q. 2004;82 (4):581–629.

Rogers EM. Diffusion of Innovations. 4th ed. New York: The Free Press; 1995.

Atkinson NL. Developing a Questionnaire to Measure Perceived Attributes of eHealth Innovations. Am J Health Behav. 2007;31 (6):612–621. https://doi.org/10.5993/ajhb.31.6.6

Aarons GA, Glisson C, Hoagwood K, et al. Psychometric properties and U.S. National norms of the Evidence-Based Practice Attitude Scale (EBPAS). Psychol Assess. 2010;22 (2):356–65.

Sekhon M, Cartwright M, Francis JJ. Acceptability of healthcare interventions: an overview of reviews and development of a theoretical framework. BMC Health Serv Res. 2017;17 (1):88. https://doi.org/10.1186/s12913-017-2031-8

Oxman TE, Schulberg HC, Greenberg RL, et al. A Fidelity Measure for Integrated Management of Depression in Primary Care. Med Care. 2006;44(11):1030–1037. https://doi.org/10.1097/01.mlr.0000233683.82254.63

Teplensky JD, Pauly MV, Kimberly JR, et al. Hospital adoption of medical technology: an empirical test of alternative models. Health Serv Res. 1995;30 (3):437–65.

Green LW, Nasser M. Furthering Dissemination and Implementation Research: The Need for More Attention to External Validity. In: Brownson RC, Colditz GA, Proctor EK, eds. Dissemination and Implementation Research in Health: Translating Science to Practice. 2nd ed. New York: Oxford University Press; 2018.

Rogal SS, Yakovchenko V, Waltz TJ, et al. The association between implementation strategy use and the uptake of hepatitis C treatment in a national sample. Implement Sci. 2017;12 (1). https://doi.org/10.1186/s13012-017-0588-6

Lewis CC, Proctor EK, Brownson RC. Measurement Issues in Dissemination and Implementation Research. In: Brownson RC, Colditz GA, Proctor EK, eds. Dissemination and Implementation Research in Health: Translating Science to Practice. 2nd ed. New York: Oxford University Press; 2018.

Bodenheimer T, Sinsky C. From Triple to Quadruple Aim: Care of the Patient Requires Care of the Provider. Ann Fam Med. 2014;12(6):573–576. https://doi.org/10.1370/afm.1713

Haverfield MC, Tierney A, Schwartz R, et al. Can Patient-Provider Interpersonal Interventions Achieve the Quadruple Aim of Health Care?: A Systematic Review. J Gen Internal Med. In press. https://doi.org/10.1007/s11606-019-05525-2

Chaudoir SR, Dugan AG, Barr CHI. Measuring factors affecting implementation of health innovations: a systematic review of structural, organizational, provider, patient, and innovation level measures. Implement Sci. 2013;8 (1). https://doi.org/10.1186/1748-5908-8-22

Pinnock H, Barwick M, Carpenter CR, et al. Standards for Reporting Implementation Studies (StaRI) Statement. BMJ. 2017;356:i6795. https://doi.org/10.1136/bmj.i6795

Powell BJ, Beidas RS, Lewis CC, et al. Methods to Improve the Selection and Tailoring of Implementation Strategies. J Behav Health Serv Res. 2017;44 (2):177–194.

Ugalde A, Caderyn JG, Rankin NM, et al. A systematic review of cancer caregiver interventions: Appraising the potential for implementation of evidence into practice. Psycho-Oncology. 2019 February 04;28 (4). https://doi.org/10.1002/pon.5018

Boutron I, Altman DG, Moher D, Schulz KF, Ravaud P. CONSORT Statement for Randomized Trials of Nonpharmacologic Treatments: A 2017 Update and a CONSORT Extension for Nonpharmacologic Trial Abstracts. Annals of Internal Medicine. 2017 Jul 4;167(1):40–7.

Hempel S, Shekelle PG, Liu JL, et al. Development of the Quality Improvement Minimum Quality Criteria Set (QI-MQCS): a tool for critical appraisal of quality improvement intervention publications. BMJ Qual Saf. 2015;24:796–804. https://doi.org/10.1136/bmjqs-2014-003151

Moher D, Liberati A, Tetzlaff J, Altman DG, The PRISMA Group. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. PLoS Med. 2009;6(7):e1000097. https://doi.org/10.1371/journal.pmed.1000097

Pinnock H, Barwick M, Carpenter CR, Eldridge S, Grandes G, Griffiths CJ, Rycroft-Malone J, Meissner P, Murray E, Patel A, Sheikh A, Taylor SJ; StaRI Group. Standards for Reporting Implementation Studies (StaRI) Statement. BMJ. 2017;356:i6795. https://doi.org/10.1136/bmj.i6795

Acknowledgments

We would like to thank Gabi Piccininni for her contribution to evidence abstraction in the proof-of-concept systematic review. We would also like to thank Rachel Schwartz, PhD; Michelle B. Bass, PhD, MSI, AHIP; Cati Brown Johnson, PhD; Nadia Safaeinili, MPH; Dani Zionts, MScPH; Jonathan G Shaw, MD, MS; Sonoo Thadaney, MBA; Karl A Lorenz, MD, MS; Steven M. Asch, MD, MPH; Abraham Verghese, MD; Laura Jacobson, MPH; Shreyas Bharadwaj; and Isabella Romero for their contribution to the overall systematic review that laid the initial groundwork for the proof-of-concept systematic review in this paper.

Funding

This study was funded by a grant from the Gordon and Betty Moore Foundation (#6382; PIs Donna Zulman and Abraham Verghese). Dr. Haverfield was supported by VA Palo Alto Center for Innovation to Implementation (Ci2i) HSR&D postdoctoral fellowship.

Ethics declarations

Conflict of Interest

The authors declare that they do not have a conflict of interest.

Disclaimer

The contents of this article do not represent the views of VA or the United States Government.

Electronic supplementary material

ESM 1

(PDF 362 kb)

About this article

Cite this article

Tierney, A.A., Haverfield, M.C., McGovern, M.P. et al. Advancing Evidence Synthesis from Effectiveness to Implementation: Integration of Implementation Measures into Evidence Reviews. J GEN INTERN MED 35, 1219–1226 (2020). https://doi.org/10.1007/s11606-019-05586-3

DOI: https://doi.org/10.1007/s11606-019-05586-3