Abstract

A convex envelope for the problem of finding the best approximation to a given matrix with a prescribed rank is constructed. This convex envelope allows the usage of traditional optimization techniques when additional constraints are added to the finite rank approximation problem. Expression for the dependence of the convex envelope on the singular values of the given matrix is derived and global minimization properties are derived. The corresponding proximity operator is also studied.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let \({\mathbb {M}}_{m,n}\) denote the Hilbert space of complex \(m\times n\)-matrices equipped with the Frobenius (Hilbert–Schmidt) norm. The Eckart–Young–Schmidt theorem [4, 14] provides a solution to the classical problem of approximating a matrix by another matrix with a prescribed rank, i.e.,

by means of a singular value decomposition of F and keeping only the K largest singular values. However, if additional constraints are added then there will typically not be an explicit expression for the best approximation.

Let \(g(A)=0\) describe the additional constraints (for instance imposing a certain matrix structure on A), and consider

The problem (1.2) can be reformulated as minimizing

For instance, if g describes the condition that A is a Hankel matrix and F is the Hankel matrix generated by some vector f, then the minimization problem above is related to that of approximating f by K exponential functions [9]. This particular case of (1.3) was for instance studied in [1].

Standard (e.g. gradient based) optimization techniques do no work on (1.3) due to the highly discontinuous behavior of the rank function. A popular approach is to relax the optimization problem by replacing the rank constraint with a nuclear norm penalty, i.e. to consider the problem

where \(\Vert A\Vert _*= \sum _j \sigma _j(A)\) and the parameter \(\mu _K\) is varied until the desired rank K is obtained.

In contrast to \({\mathcal {R}}_K(A)\) the nuclear norm \(\Vert A\Vert _*\) is a convex function, and hence (1.4) is much easier to solve than (1.3). In fact, the nuclear norm is the convex envelope of the rank function restricted to matrices with operator norm \(\le 1\) [5] which motivates the replacement of \({\mathcal {R}}_K(A)\) with \(\mu _K \Vert A\Vert _*\) (for a suitable choice of \(\mu _K\)).

However, the solutions obtained by solving this relaxed problem are different and exhibit bias and other undesirable side-effects (compared with the originally sought solution), because the contribution of the (convex) misfit term \(\Vert A-F\Vert ^2\) is not used. In [10, 11] it was suggested to incorporate the misfit term and work with the l.s.c. convex envelopes of

and

respectively for the problem of low-rank and fixed rank approximations, (where l.s.c. refers to lower semi-continuous). The superior performance of using this relaxation approach in comparison to the nuclear norm approach was verified for several examples in [10, 11], where also efficient optimization algorithms for the corresponding restricted minimization problems are presented. In [2] these functionals are studied in a common framework called the \(\mathcal {S}\)-transform. Grussler and Rantzer [6] consider optimization of (1.6) over non-negative matrices. They derive the Lagrange dual of the problem and show that the resulting relaxation can be optimized using semidefinite programming. Furthermore, they derive sufficient conditions (in terms of the dual variables) for the relaxation to be tight. The use of semidefinite programming does however limit the approach to moderate scale problems.

For the l.s.c convex envelope of (1.5) it turns out that there are simple explicit formulas acting on each of the singular values of F individually. In this paper we present explicit expressions for the l.s.c. convex envelope of (1.6) in terms of the singular values \((\alpha _j)_{j=1}^{\min (m,n)}\) of A, as well as detailed information about global minimizers. More precisely, in Theorem 1 we show that the l.s.c. convex envelope of (1.6) is given by

where \(k_*\) is a particular value between 1 and K [see (2.1)]. This article also contains further information on how the l.s.c. convex envelope can be used in optimization problems. Since (1.7) is finite at all points it is also continuous, so we will sometimes write “convex envelope” instead of “l.s.c. convex envelope”.

The second main result of this note is Theorem 2, where the global minimizers of (1.7) are found. In case the Kth singular value of F (denoted \(\phi _K\)) has multiplicity one, then the minimizer of (1.7) is unique and coincides with that of (1.6), given by the Eckart–Young–Schmidt theorem. If \(\phi _K\) has multiplicity M and is constant between sub-indices \(J\le K\le L\), it turns out that the singular values \(\alpha _j\) of global minimizers A, in the range \(J\le j\le L\) lie on a certain simplex in \({\mathbb {R}}^M\). We refer to Sect. 3, in particular (3.3), for further details.

Many optimization routines for solving the convex envelope counterpart of (1.3) involve computing the so called proximal operator, i.e. the operator

In Sect. 4 we investigate the properties of this operator. In particular we show that it is a contraction with respect to the Frobenius norm and show that the proximal operator coincides with the solution of (1.1) whenever F has a sufficient gap between the Kth and \(K+1\)th singular value.

Since the submission of this article the two related papers [8] and [7] have appeared. In [8] the convex envelope of (1.6) and its proximal operator are computed. In [7] these results are generalized to arbitrary unitarily invariant norms when \(F=0\).

2 Fenchel conjugates and the l.s.c. convex envelope

The Fenchel conjugate, also called the Legendre transform [13, Section 26], of a function f is defined by

Note that \({\mathbb {M}}_{m,n}\) becomes a real Hilbert space with the scalar product

and that for any function \(f:{\mathbb {M}}_{m,n}\rightarrow {\mathbb {R}}\) that only depends on the singular values, we have that the maximum of \(\langle A,B\rangle -f(A)\) with respect to A is achieved for a matrix A with the same Schmidt-vectors (singular vectors) as B, by von-Neumann’s inequality [12]. More precisely, denote the singular values of A, B by \(\alpha ,\beta \) and denote the singular value decomposition by \(A=U_A\Sigma _\alpha V_A^*\), where \(\Sigma _\alpha \) is a diagonal matrix of length \(N=\min (m,n)\). We then have:

Proposition 1

For any \(A,B\in {\mathbb {M}}_{m,n}\) we have \(\langle {A,B}\rangle \le \sum _{j=1}^{N} \alpha _j\beta _j\) with equality if and only if the singular vectors can be chosen such that \(U_A=U_B\) and \(V_A=V_B\).

See [3] for a discussion regarding the proof and the original formulation of von Neumann.

Proposition 2

Let \({\mathcal {I}}(A)={\mathcal {R}}_K(A)+\Vert A-F\Vert ^2\) [see (1.3)]. For its Fenchel conjugate it then holds that

Proof

\({\mathcal {I}}^*(B)\) is the supremum over A of the expression

If we fix the singular values of A, then the last three terms are independent of the singular vectors. By Proposition 1 it follows that the maximum value is attained for a matrix A which has the same singular vectors as \(F+\frac{B}{2}\). We denote \(\sigma _j\left( F+\frac{B}{2}\right) \) by \(\gamma _j\) and \(\sigma _j(A)\) by \(\alpha _j\), and write \({\mathcal {R}}_K(\alpha )\) in place of \({\mathcal {R}}_K(A)\) (since the singular vectors are irrelevant for this functional). Combining the above results gives

It is optimal to choose \(\alpha _j=\gamma _j\) for \(1\le j\le K\) and \(\alpha _j=0\) otherwise. Hence,

\(\square \)

The computation of \({\mathcal {I}}^{**}\) is a bit more involved.

Theorem 1

Given a positive non-increasing sequence \(\alpha =(\alpha _j)_{j=1}^N\), the sequence

is also non-increasing for \(k=1,\ldots ,K\). Let \(k_*\) be the largest value such that (2.1) is non-negative. The l.s.c. convex envelope of \({\mathcal {I}}(A)={\mathcal {R}}_K(A)+\Vert A-F\Vert ^2\) then equals

Note that \({\mathcal {I}}(A) \ge \Vert A-F\Vert ^2\) and \(\Vert A-F\Vert ^2\) is convex and continuous in A. Since \({\mathcal {I}}^{**}(A)\) is the largest l.s.c. convex lower bound on \({\mathcal {I}}(A)\) we therefore have \({\mathcal {I}}^{**}(A) \ge \Vert A-F\Vert ^2\) which shows that

Proof

We again employ the notation \(\sigma _j\left( F+\frac{B}{2}\right) =\gamma _j\). For the bi-conjugate it then holds that

where the third identity follows by Proposition 1 and analogous considerations as those in Proposition 2. Let the supremum be attained at the point \(\gamma ^*\). If we hold \(\gamma _K^*\) fixed and consider the supremum over the remaining variables, we get \(\gamma _j^*=\max \{\alpha _j,\gamma ^*_K\}.\) By inspection of the above expression it is also clear that \(\gamma _K^*\ge \alpha _K\) (in particular, \(\gamma _j^*=\gamma _K^*\) for \(j>K\)). Introducing

we conclude that

The function f is clearly differentiable with derivative

which is a non-increasing function of t. In particular, the sequence \((f'(\alpha _{K+1-k}))_{k=1}^{K}\) is non-increasing and up to a factor of 2 it equals (2.1), which proves the first claim in the theorem. Moreover \(f'(\alpha _K)=\sum _{j=K+1}^N\alpha _j\) and \(\lim _{t\rightarrow \infty }f'(t)=-\infty \), whereby it follows that f has a maximum in \((\alpha _K,\infty )\) at a point \(t_*\) where \(f'(t_*)=0\). It also follows that \(k_*\) is the largest integer k such that \(f'(\alpha _{K+1-k})\ge 0\), and hence \(t_*\) lies in the interval \([\alpha _{K+1-k_*},\alpha _{K-k_*})\), (with the convention \(\alpha _0=\infty \) in case \(k_*=K\)). In this interval we have

whereby it follows that

Moreover,

Returning to (2.4) we conclude that

which equals (2.2), and the proof is complete. \(\square \)

3 Global minimizers

We now consider global minimizers of \({\mathcal {I}}\) and \({\mathcal {I}}^{**}\). Given a sequence \((\phi _n)_{n=1}^N\) we recall that \(\Sigma _\phi \) denotes the corresponding diagonal matrix. We introduce the notation \(\tilde{\phi }\) for the sequence \(\phi \) truncated at K, i.e.

Recall the Eckart-Young-Schmidt theorem, which can be rephrased as follows;

The elements of \(\underset{A}{\mathrm {argmin~}} {\mathcal {I}}(A)\) are all matrices of the form \(A_*=U\Sigma _{\tilde{\phi }} V^*\), where \(U\Sigma _\phi V^*\) is any singular value decomposition of F. In particular, \(A_*\) is unique if and only if \(\phi _K \ne \phi _{K+1}\).

Obviously, a global minimizer of \({\mathcal {I}}\) is a global minimizer of \({\mathcal {I}}^{**}\), but the converse need not be true. It is not hard to see that, in case \(\phi _K\) has multiplicity one, the minimizer of \({\mathcal {I}}\) is also the (unique) minimizer of \({\mathcal {I}}^{**}\). The general situation is more complicated.

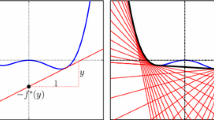

Illustration of the notation used in Theorem 2

Theorem 2

Let \(K\in {\mathbb {N}}\) be given, let F be a fixed matrix and let \(\phi \) be its singular values. Let \(\phi _J\) (respectively \(\phi _L\)) be the first (respectively last) singular value that equals \(\phi _K\), and set \(M=L+1-J\) (that is, the multiplicity of \(\phi _K\)). Finally set \(m=K+1-J\), (that is, the multiplicity of \(\tilde{\phi }_K\)). Figure 1 illustrates the setup.

The global minimum of \({\mathcal {I}}\) and \({\mathcal {I}}^{**}\) both equal \(\sum _{j>K}\phi _j^2\) and the elements of

are all matrices of the form \(A_*=U\Sigma _{\alpha } V^*\), where \(U\Sigma _\phi V^*\) is any singular value decomposition of F, and \(\alpha \) is a non-increasing sequence satisfying:

Also, the minimal rank of such an \(A_*\) is K and the maximal rank is L. In particular, \(A_*\) is unique if and only if \(\phi _K \ne \phi _{K+1}\).

Proof

The fact that the minimum value of \({\mathcal {I}}\) and \({\mathcal {I}}^{**}\) coincide follows immediately since \({\mathcal {I}}^{**}\) is the l.s.c. convex envelope of \({\mathcal {I}}\), and the fact that this value is \(\sum _{j>K}\phi _j^2\) follows by the Eckart-Young-Schmidt theorem.

Suppose first that \(A_*\) is as stated in (3.3). We first prove that \(k_*=m\). Evaluating the testing condition (2.1) for \(k=m+1\) gives

(where we use (3.3) in the last identity) so \(k_*\le m\). But on the other hand, the testing condition for \(k=m\) is

so we must have \(m=k_*\). With (3.3) in mind we get that \(\sum _{j> K-k_*}\alpha _j=\sum _{j=J}^N \alpha _j=m\phi _K\) and then

since \(m\phi _K^2=\sum _{j=J}^K\phi _j^2\). This proves that \(A_*\) is a solution to (3.2).

Conversely, let \(A_*\) be a solution to (3.2). The only part of the expression (2.2) for \({\mathcal {I}}^{**}(A_*)\) that depends on the singular vectors of \(A_*\) is \(\Vert A_*-F\Vert ^2\). By expanding \(\Vert A_*\Vert ^2-2\langle {A_*,F}\rangle +\Vert F\Vert ^2\) and invoking Proposition 1, it follows that we can choose matrices U and V such that \(A_*=U\Sigma _\alpha V^*\) and \(F=U\Sigma _\phi V^*\) are singular value decompositions of \(A_*\) and F respectively. Set \(\tilde{F}=U\Sigma _{\tilde{\phi }}V^*\) and note that \(\tilde{F}\) also is a minimizer of (3.2), by the first part of the proof. Since \({\mathcal {I}}^{**}\) is the l.s.c. convex envelope of \({\mathcal {I}}\), it follows that all matrices

are solutions of (3.2). Set

and note that \(A(t)=U\Sigma _{\tilde{\phi }+t\epsilon }V^*\), i.e. the singular values of A(t) equals \(\tilde{\phi }+t\epsilon =t\alpha +(1-t)\tilde{\phi }\) which is non-increasing for all \(t\in [0,1]\), being the weighted mean of two non-increasing sequences. Since \(\tilde{F}\) satisfies (3.3), it follows that A(t) satisfies (3.3) if and only if

This is independent of t, and hence it suffices to prove (3.3) for some fixed \(A(t_0)\) in order for \(A_*=A(1)\) to satisfy (3.3) as well. In other words we may assume that \(\epsilon \) in (3.5) is arbitrarily small [by redefining \(A_*\) to equal \(A(t_0)\)]. With this at hand, we evaluate the testing condition for \(k_*\) [recall (2.1)] at \(k=m+1\);

This expression is certainly strictly negative if \(\alpha =\tilde{\phi }\), [by the calculation (3.4)], and hence it is also strictly negative for \(\alpha \) sufficiently close to \(\tilde{\phi }\). Since we have already argued that it is no restriction to make this assumption, we conclude that \(k_*\le m\).

With this at hand, we have

Upon omitting the last term we get

Moreover, since \(\sum _{j>K-k_*}\phi _j^2=k_*\phi _K^2+\sum _{j=K+1}^N\phi _j^2\), this can be further simplified to

Since the right hand side equals the global minimum of \({\mathcal {I}}^{**}\), we must have equality in all the above inequalities. The first one in (3.6) is equal if and only if \(\alpha _j=\phi _j\) for \(j=1\ldots ,K-k_*\), the second one if and only if \(\alpha _j=0\) for \(j>L\), leading us to \(\sum _{j>K-k_*}\alpha _j=\sum _{j=K-k_*+1}^L\alpha _j\). Since we need inequality in (3.7) as well, this in turn gives \(\sum _{j=K-k_*+1}^L\alpha _j=k^*\phi _K\). As \(\alpha _j=\phi _j=\phi _K\) for \(j=J,\ldots , K-k_*\), this implies

To verify (3.3) it remains to verify that \(\alpha _j\le \phi _K\) for \(J\le j\le L\). If \(k_*<m\), this is immediate since \(\alpha \) by definition is a non-increasing sequence and \(\alpha _J=\phi _K\) in this case. Otherwise, by (2.1) for \(k_*=m\) we get

where we used (3.8) in the last identity. Thus \(\alpha _j\le \alpha _J\le \phi _K\) for \(J\le j\le L\), which concludes the converse part of the proof.

Finally, the uniqueness statement is immediate. Clearly we can pick \(\alpha _j\) in accordance with (3.3) to get L non-zero entries, but not more, so the maximal possible rank is L. In order to have as few non-zero entries as possible, the condition \(\sum _{j=J}^L\alpha _j =\phi _K m\) together with \(\alpha _j\le \phi _K\) for \(J\le j\le L\) clearly forces at least m non-zero entries in \(J\le j\le L\), so the minimal possible rank is \(J-1+m=K\). \(\square \)

4 The proximal operator

As argued in the introduction, many optimization routines for solving

i.e. the l.s.c. convex envelope counterpart of (1.3), require efficient computation of the proximal operator, which we now address.

Theorem 3

Let \(F=U \Sigma _\phi V^*\) be given. The solution of

is of the form \(A=U\Sigma _\alpha V^*\) where \(\alpha \) has the following structure; there exists natural numbers \(k_1 \le K\le k_2 \) and a real number \(s>\phi _{k_2}\) such that

The appropriate value of s is found by minimizing the convex function

in the interval \([\phi _K, (1+\rho )\phi _{K+1}]\). Given such an s, \(k_1\) is the smallest index \(\phi \) with \(\phi _{k_1}<s\) and \(k_2\) last index with \(\phi _{k_2}>\frac{s}{1+\rho }\). In particular, \(\alpha \) is a non-increasing sequence and \(\alpha \le \phi \). In other words, the proximal operator is a contraction.

The theorem can be deduced by working directly with the expression for \({\mathcal {I}}^{**}\), but it turns out that it is easier to follow the approach in [10] which is based on the minimax theorem and an analysis of the simpler functional \({\mathcal {I}}^*\). Note in particular that the proximal operator (given by Theorem 3) reduce to the “Eckart–Young approximation” (3.1) if \(\phi _K\ge (1+\rho )\phi _{K+1}\).

Proof

Note that \({\mathcal {I}}^{**}(0)+\rho \Vert 0-F\Vert ^2=(1+\rho )\Vert F\Vert ^2,\) and that \({\mathcal {I}}^{**}(A)+\rho \Vert A-F\Vert ^2\ge (1+\rho )\Vert F\Vert ^2\) whenever A is outside the compact convex set \({\mathcal {C}}=\{A:\Vert A-F\Vert \le \Vert F\Vert \}\) [recall (2.3)]. This combined with Proposition 2 and some algebraic simplifications shows that

where \(Z = F+\frac{B}{A}\). Let us denote the function in the last line by f(A, Z), and note that by construction it is convex in A and concave in Z. By Sion’s minimax theorem [15] the order of \(\max \) and \(\min \) can be switched (giving the relation \(A=((1+\rho )F-Z)/\rho \)), and the above \(\min \max \) thus equal

where \(\zeta _j=\sigma _j(Z)\). The maximum is clearly obtained at some finite matrix \(Z=Z_*\). Set

For these points it then holds

The latter inequality together with the previous calculations imply that \({\mathcal {I}}^{**}(A_*)+\rho \Vert A_*-F\Vert ^2\le {\mathcal {I}}^{**}(A)+\rho \Vert A-F\Vert ^2\). Thus \(A_*\) given by (4.5) solves the original problem (4.1) as long as \(Z_*\) is a maximizer of (4.4). By Proposition 1 it follows that the appropriate Z shares singular vectors with F, so the problem reduces to that of minimizing

The unconstrained minimization (i.e. ignoring that the singular values need to be non-increasing) of this is \(\zeta _j=\phi _j\) for \(j\le K\) and \(\zeta _j=(1+\rho )\phi _j\) for \(j>K\). It is not hard to see (see the appendix of [10] for more details) that the constrained minimization has the solution

where s is a parameter between \(\phi _K\) and \((1+\rho )\phi _{K+1}\). Inserting this in the previous expression gives (4.3) and the appropriate value of s is easily found. Let \(k_1\) resp. \(k_2\) be the first resp. last index where s shows up in \(\zeta \). Formula (4.2) is now an easy consequence of (4.6). \(\square \)

5 Conclusions

We have analyzed and derived expressions for how to compute the l.s.c. convex envelope corresponding to the problem of finding the best approximation to a given matrix with a prescribed rank. These expressions work directly on the singular values.

References

Andersson, F., Carlsson, M., Tourneret, J.-Y., Wendt, H.: A new frequency estimation method for equally and unequally spaced data. IEEE Trans. Signal Process. 62(21), 5761–5774 (2014)

Carlsson, M.: On convexification/optimization of functionals including an l2-misfit term. arXiv preprint arXiv:1609.09378 (2016)

de Sá, E.M.: Exposed faces and duality for symmetric and unitarily invariant norms. Linear Algebra Appl. 197, 429–450 (1994)

Eckart, C., Young, G.: The approximation of one matrix by another of lower rank. Psychometrika 1(3), 211–218 (1936)

Fazel, Maryam: Matrix rank minimization with applications. PhD thesis, Stanford University (2002)

Grussler, C., Rantzer, A.: On optimal low-rank approximation of non-negative matrices. In: 2015 54th IEEE Conference on Decision and Control (CDC), pp. 5278–5283 (2015)

Grussler, C., Giselsson, P.: Low-rank inducing norms with optimality interpretations. CoRR arXiv:1612.03186 (2016)

Grussler, C., Rantzer, A., Giselsson, P.: Low-rank optimization with convex constraints. CoRR arXiv:1606.01793 (2016)

Kronecker, L.: Zur Theorie der Elimination einer Variabeln aus zwei algebraischen Gleichungen. Königliche Akad. der Wissenschaften (1881)

Larsson, V., Olsson, C.: Convex envelopes for low rank approximation. In: Energy Minimization Methods in Computer Vision and Pattern Recognition, pp. 1–14. Springer, Berlin (2015)

Larsson, V., Olsson, C., Bylow, E., Kahl, F.: Rank minimization with structured data patterns. In: Computer Vision—ECCV 2014, pp. 250–265. Springer, Berlin (2014)

Mirsky, L.: A trace inequality of John von Neumann. Monatshefte für Mathematik 79(4), 303–306 (1975)

Rockafellar, R.T.: Convex Analysis. Princeton university press, Princeton (2015)

Schmidt, E.: Zur Theorie der linearen und nichtlinearen Integralgleichungen. III. Teil. Mathematische Annalen 65(3), 370–399 (1908)

Sion, M., et al.: On general minimax theorems. Pac. J. Math. 8(1), 171–176 (1958)

Acknowledgements

This research is partially supported by the Swedish Research Council, Grants Nos. 2011-5589, 2012-4213 and 2015-03780; and the Crafoord Foundation. We are also indebted to an anonymous referee for significantly improving the manuscript.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Andersson, F., Carlsson, M. & Olsson, C. Convex envelopes for fixed rank approximation. Optim Lett 11, 1783–1795 (2017). https://doi.org/10.1007/s11590-017-1146-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11590-017-1146-5