Abstract

The tendency of humans to shy away from using algorithms—even when algorithms observably outperform their human counterpart—has been referred to as algorithm aversion. We conduct an experiment with young adults to test for algorithm aversion in financial decision making. Participants acting as investors can tie their incentives to either a human fund manager or an investment algorithm. We find no sign of algorithm aversion: participants care about returns, but do not have strong preferences which financial intermediary obtains these returns. Contrary to what has been suggested, participants are neither quicker to lose confidence in the algorithm after seeing it err. However, we find that participants’ inability to separate skill and luck when evaluating intermediaries slows down their migration to the algorithm.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Increasing digitization and automation of processes in all parts of society have sparked a debate on whether people are willing to rely on algorithms. At the same time, algorithms offer opportunities for new business models that rely less on continuous human decision-making and human interaction, but more on pre-defined rules and processes. The financial industry, in particular, offers many applications for algorithms, be it in trading, asset management, or financial advice (Thakor 2020). For example, robo-advisors that operate based on algorithms are a recent competitor to traditional delegated investment with the help of human fund managers or investment advisors. In this study, we examine to what extent participants are willing to delegate to investment algorithms in an experimental investment task.

We use the term algorithm for any automated formula or decision rule that is used to process data. In a series of experiments, Dietvorst et al. (2015) show that participants prefer to rely on predictions made by a human rather than an algorithm, even if the latter performs better. Participants are quicker to lose confidence in the algorithm than the human once they observe an error. Dietvorst et al. (2015) explain this behavior with algorithm aversion. Unwarranted algorithm aversion is costly and could hamper the adoption of technology in many areas, including finance.

Our motivation to study delegated investments is twofold. First, there is accumulating evidence that human financial professionals such as fund managers and financial advisors perform poorly (Fama and French 2010; Linnainmaa et al. 2021). Oftentimes they do not reach passive benchmarks, which is the minimum an algorithm can do by directing investors to portfolios of passive products. Human intermediaries provide only limited customization in their asset allocation and are affected by their own preferences (Foerster et al. 2017). Pre-defined rules could link investor characteristics and preferences more tightly to suggested allocations. Second, due to economies of scale, algorithms can offer low-cost products and advice to retail clients. A pre-programmed algorithm can serve many people at a time, while fees for human financial advice are often prohibitive when investing small sums of money. Actively managed funds likewise charge much higher fees than rule-based passive products.

With the emergence and growth of robo-advisors, financial algorithms now directly interact with retail investors. Based on client’s inputs, they formulate investment advice without the intervention of a human. Robo-advisors like Betterment or Wealthfront already report assets under management worth several billion U.S. dollars.Footnote 1 New fintech companies heavily relying on technology enter the market for investments all over the world. Despite the growth and activity in the robo-advice segment, its overall market penetration is still low. Whether algorithm aversion is a factor in deciding for a financial intermediary thus remains an open question. It could determine whether new competitors to traditional financial intermediaries will remain in a niche market and cater the tech-savvy, or will gain wide acceptance in the general population. Our study therefore aims to answer two key questions: (1) Are experimental participants less likely to invest in a portfolio selected and “managed” by an investment algorithm than in a portfolio selected and managed by a human fund manager? and (2) Are they quicker to abandon the investment algorithm than the human fund manager if performance (absolute or relative) is poor?

To answer these questions, we conduct an online survey and a laboratory experiment with the same university student participants. The survey is administered several weeks prior to the experiment and elicits beliefs about the strengths and weaknesses of algorithms relative to human judgment in a financial context. It examines explanations for algorithm aversion suggested in the literature and is designed to contribute to our understanding of participants’ preference for either intermediary. We implement a distance in time between the survey and the main experiment to avoid a direct effect of the survey on experimental decisions.

In the laboratory experiment, we ask participants to choose between a human fund manager and an investment algorithm to invest for them. Both financial intermediaries then repeatedly decide to invest in either a risk-free bond or a risky stock. The stock can be in a good state or a bad state, which is gradually revealed by its performance (the design is adapted from Kuhnen 2015). The pre-programmed algorithm strictly applies Bayes’ Law and decides accordingly, while the human fund manager has complete discretion over the decisions to make. In each experimental session, the participant with the best financial literacy and numeracy skills takes over the role of the human fund manager. The incentives of participants depend on the payoffs generated by their selected intermediary.

Importantly, the selection of the financial intermediary is repeated ten times. Besides initial preferences without much information, this allows us to study the reaction to the outcomes the intermediaries produce. The experimental design gives rise to frequent (ex-post) errors that occur even if an optimal strategy is applied. This feature reflects the partially random nature of real financial markets. We can thus examine the consequences of such errors on the preferences of participants. To determine the strengths of these preferences, we apply several different fee schemes, which render one intermediary more expensive than the other.

We find no evidence for algorithm aversion. In the initial choice of an intermediary (with equal fees), 56% of participants decide to invest with the algorithm. If fees differ between the intermediaries, participants mostly (\(>80\%\)) choose the intermediary with the lower fee. They apparently do not believe that one will outperform the other by a high enough margin to justify a higher fee. Indeed, human fund managers perform quite well and register only slight underperformance relative to the Bayesian algorithm. They can win over a few participants early on, but this trend reverses and in the final decision 63% choose the algorithm.

There is no particularly strong attachment to either intermediary. Once participants learn about investment choices and outcomes of the human fund manager and the algorithm, they focus on performance. Choices are strongly influenced by cumulative past performance whereby the highest weight is given to the most recent performance. In their reaction to performance, participants do not discriminate between intermediaries. In particular, they do not respond differently to (ex-post) mistakes by the intermediaries, rejecting the idea of trust in an algorithm eroding more quickly. We thus do not find support for the two major predictions of algorithm aversion—a general preference for human judgment and an adverse response to errors by an algorithm—in the domain of financial decision making.

The survey provides some insights into the reasons for this result. Participants have prior perceptions about the strengths and weaknesses of a financial algorithm relative to a human decision-maker. In aggregation, these perceptions help to explain initial choices in the experiment and remain significant even after participants have observed investment performance. Of the individual attributes we elicit, only a belief in the ability of an algorithm to generate higher returns has explanatory power for observed choices in the experiment. The survey further reveals that participants view algorithms as an aid rather than a competitor to human fund managers.

We further establish that in focusing mainly on returns, participants fail to distinguish between skill and luck. They take into account the outcome of an investment but not whether an investment decision was reasonable ex ante (outcome bias). They will thus be slow in recognizing true skill, which might explain the only gradual migration towards the algorithm over time. The random component in outcomes introduces noise, which prevents small performance differences to be noticeable by participants (consistent with Heuer et al. 2017).

We corroborate the results by using data from a representative survey of the German population. Only about 30% of participants are familiar with financial algorithms or robo-advisors. In this subsample, we find similar perceptions about algorithms, including a belief in their outperformance and the view that they are an aid to human fund managers instead of a competitor. In a hypothetical investment task, about half would invest with the algorithm. We detect an age effect in the population survey, but consider it as not strong enough to undermine the generalizability of the experimental findings with a student sample.

Our results have several implications for the financial industry. First, algorithm aversion is absent among experimental participants, which suggests that products based on algorithmic strategies are generally accepted by young adults. Second, however, preferences can be quite sticky, as the investment proportions in our experiment do not change much. It might need a long performance history or large performance difference to convince people who are initially in favor of a human fund manager. Third, lower fees are very effective in the experiment to attract participants that would otherwise not choose the algorithm. To market algorithms as the cheaper alternative to human fund managers could be promising. Fourth, the view of algorithms serving as an aid expressed in both studied samples suggests that the most preferred intermediary could be a human manager assisted by an algorithm. Even though people are forgiving in case of errors, they might view human intervention in extreme scenarios favorably.

2 Literature and hypotheses

Algorithm aversion is neither a new concept, nor limited to a particular domain. Researchers as early as Meehl (1954) discuss the superior performance of algorithms in various prediction tasks, while noting the scepticism of clinicians against them. Dawes (1979) confirms the superiority of even improper specified algorithms and reports common objections against their use. These include technical concerns about the particular methodology applied, psychological misperceptions of performance, and ethical problems with algorithms deciding in sensitive areas. We borrow a definition by Jussupow et al. (2020), who refer to algorithm aversion as “biased assessment of an algorithm which manifests in negative behaviours and attitudes towards the algorithm compared to a human agent”.

The tendency to discount algorithms has been documented in a variety of domains. In medicine, recommendations coming from a physician are rated higher than recommendations from a computer system or from a physician aided by a computer system (Promberger and Baron 2006; Shaffer et al. 2013; Longoni et al. 2019). In matters of personal taste, Yeomans et al. (2019) provide evidence that although an algorithm outperforms humans at recommending jokes that participants rate funny, they still prefer to receive joke recommendations from other humans. In the financial domain, Önkal et al. (2009) show in an experiment that stock price forecasts provided by a statistical forecasting method are more severely discounted than forecasts by a human expert. Based on fund flow data, Harvey et al. (2017) report that algorithm-based (“systematic”) hedge funds receive less inflows than actively managed (“discretionary”) hedge funds. However, they do not find a performance gap justifying this aversion towards algorithm-based hedge funds.

A first hypothesis emerging from this literature is that people assess algorithms negatively and tend to prefer a human expert, which potentially extends to financial decisions:

- Hypothesis 1::

-

A larger proportion of participants will initially select to invest with a human fund manager than with an investment algorithm.

- Hypothesis 1a::

-

Participants’ willingness-to-pay for the human fund manager (i.e., fees) will initially be higher than their willingness-to-pay for the algorithm.

Hypothesis 1a is added as a measure for the strength of preference for a financial intermediary. By attaching a price to delegated investing, we are able to determine at what price people are indifferent between investing with the human fund manager and the algorithm. For both hypotheses, we test \(H_0\) that there is no difference between intermediaries.

Dietvorst et al. (2015) analyze algorithm aversion in a systematic way. Participants in several experiments observe predictions of human judges and algorithms in domains such as MBA student performance or U.S. air traffic. They can either tie their incentives to the performance of the algorithm or human (which is in some experiments themselves and in other experiments another participant). In several conditions, the amount of performance feedback participants receive is varied. Dietvorst et al. (2015) observe that participants turn away from the algorithm after seeing it perform, even if this performance is superior to the human judge. Performance, despite superior, is not perfect, and observing it negatively affects beliefs about the accuracy of the algorithm. Participants are particularly troubled by seeing the algorithm err and abandon it in response.

We can thus formulate hypotheses on the expected reaction to seeing an investment algorithm perform and to mistakes that it makes:

- Hypothesis 2::

-

Participants will disregard higher performance of an algorithm and continue to favor a human fund manager after outcomes are observed.

- Hypothesis 3::

-

After mistakes by the algorithm, participants will be more prone to switching from the algorithm to the human fund manager than vice versa.

We note that not all prior literature is in agreement with these hypotheses. Dietvorst et al. (2015) do not find initial algorithm aversion as proposed in Hypothesis 1, but only in response to observing imperfect performance. Logg et al. (2019) even find algorithm appreciation using a variety of non-financial tasks and prior to receiving performance feedback. Castelo et al. (2019) find stronger algorithm aversion for tasks perceived as subjective (such as recommending jokes) than for tasks perceived as objective (such as buying stocks). Consistently, when solving logical problems, participants rely more on algorithms than on other people or themselves (Dijkstra et al. 1998; Dijkstra 1999).

Logg (2017) identifies confounding factors in existing studies that make it difficult to establish a clear case for or against algorithm aversion. Studies differ in whether the alternative to using an algorithm is a human expert or oneself. In the latter case, overconfidence and illusion of control might decrease the reliance on an algorithm. Studies also differ in how humans and algorithms are labeled and described. Making strong references to their expertise or rationality might affect outcomes. Further confounding factors are task-subjectivity and whether situations prior to or after performance feedback are analyzed.

The very active recent literature on algorithm aversion has turned to its underlying causes and how to overcome it (see Burton et al. 2020; Jussupow et al. 2020 for reviews). A majority of studies finds evidence for algorithm aversion, supporting the predictions stated in Hypotheses 1–3. However, a considerable number of studies finds inconclusive results or algorithm appreciation. Restricting attention to a subset of the literature concerned with financial decisions, a similar picture emerges. Table 1 summarizes methodology, population, and results of relevant publications and working papers. Most of this work is contemporaneous and was unavailable to derive hypotheses and design of our study. Önkal et al. (2009), Harvey et al. (2017), Litterscheidt and Streich (2020), Niszczota and Kaszás (2020) and Filiz et al. (2021) find algorithm aversion. Hodge et al. (2021), David and Sade (2018) and Rossi and Utkus (2020) report inconclusive results that depend on experimental condition or participant demographics, while Holzmeister et al. (2022) find algorithm appreciation. We will return to these studies in Sect. 5, when we discuss potential reasons for differences in results in comparison to our own findings.

Our study contributes to the emerging literature on the presence (or absence) of algorithm aversion in financial decision making in multiple ways. To our knowledge, we are the first to use an experimental setting in which both investment algorithm and human fund manager act and are observed to act. Due to the straightforward design, we are able to address confounding factors that make conclusions about algorithm aversion often difficult. By presenting decisions and investment outcomes to participants, we generate rich data on how they respond to performance and to mistakes, which has been described as key element of algorithm aversion. Finally, we explore the underlying beliefs that shape people’s preferences for an intermediary.

3 Experimental design and participants

We design an experiment that consists of two parts, an online survey and a laboratory experiment. We separate the parts to avoid spill-over effects from the survey to the experiment or vice versa. As for practical reasons payment of participants takes place at the laboratory stage, the survey is run beforehand. A survey link is sent out to participants about four weeks before the scheduled experiment and the survey closes three weeks before. Upon completion of the survey, participants receive an individual code to partake in the laboratory experiment.

3.1 Online survey

The aim of the survey is to understand perceptions of algorithms and human managers that may affect algorithm aversion in financial decision making. There are several aspects of decision making and data processing for which either an algorithm or a human might be better equipped. We draw on the literature to identify relevant dimensions for which we measure participants’ perceptions. Table 2 lists statements that suggest one intermediary is better than the other in a particular dimension. Participants express their agreement on a five-point Likert scale ranging from “strongly disagree” to “strongly agree.” To avoid acquiescence bias, we create an inverted version of each statement and one is presented at random (Weijters and Baumgartner 2012).

A straightforward question is whether participants expect an investment fund run by an algorithm or a human fund manager to achieve higher returns (statement one). One objection against algorithms is their supposed inability to learn or to improve through experience (Dawes 1979; Highhouse 2008), which we capture in statement two. It has been suggested that algorithms are unable to incorporate qualitative data and to react to unexpected events or outliers (Grove and Meehl 1996), which we address in statements three and six. There also might be different perception on intermediaries’ ability to identify relevant factors and to integrate this data (statements four and five, Dawes 1979). Of specific interest to the industry might be whether algorithms are expected to compete with (and probably replace) human fund managers, or whether they are perceived as an aid to human managers (statement seven). It is unclear whether a combination of the two intermediaries is considered superior to a single one (Shaffer et al. 2013).

In addition, we elicit self-reported measures for trust and risk aversion (Falk et al. 2018), and self-assessed economic knowledge. These factors might interact with algorithm aversion, as for example more sophisticated investors have been suggested to rely more on algorithms (D’Acunto et al. 2019). The impact of trust and risk-aversion will depend on which intermediary is considered to be more trustworthy and less risky.

3.2 Laboratory experiment

In the laboratory experiment, we simulate financial decisions in the context of delegated investments. This provides a simple setup in which an algorithm can directly compete with a human fund manager. Dietvorst et al. (2015, p. 114) define the term algorithm to “encompass any evidence-based forecasting formula or rule. Thus, the term includes statistical methods, decision rules, and all other mechanical procedures that can be used for forecasting.” For an investment context, we derive the following criteria for the algorithm: (1) once constructed, it must act independently of a human, (2) it must be strictly rule-based, and (3) its recommended actions must be executed automatically.

There are two roles in the experiment, that of an investor and that of a fund manager. Investors decide whether they want to invest with the human fund manager or an investment algorithm. This choice is repeated ten times for ten ‘blocks’ of investments. Each block of investment consists of six ‘trials’, for which financial intermediaries make decisions that the investors can observe. The investment decisions made by the financial intermediaries are repeated choices between a risk-less bond and a risky stock. Our experimental design follows the gain condition in Kuhnen (2015). There are two securities on a market, one of which is a bond paying 3€ for certain. The other is a stock paying either 5€ or 1€. The probability for the high payoff is either 70% (good state) or 30% (bad state). Whether the stock happens to be in a good or bad state is randomly determined with equal probability at the beginning of a block. The state of the stock stays fixed for a block of six trials and is not revealed to participants. A trial hereby represents one realization of payoffs for the two securities.

An important difference to the original design is that participants do not decide themselves in which security to invest, but instead choose the intermediary they want to invest with. Intermediaries are presented as investment funds managed either by a human fund manager or by an investment algorithm. The algorithm is programmed to maximize expected return following Bayes’ law. In case expected returns are equal for both securities, it chooses randomly. The algorithms’ goal of maximizing expected return is disclosed to participants. The exact mechanism, however, is not disclosed (see Online Appendix A for the experimental instructions). Likewise, the literature on algorithm aversion usually does not explain to participants how algorithms work exactly or whether they are optimal. In reality, the mechanics of an algorithm would typically not be disclosed by fund companies either. While a concern is that participants believe an algorithm constructed by the experimenters must be superior, prior research finds algorithm aversion despite this fact (Dietvorst et al. 2015).Footnote 2

The human fund manager represents an actual human being selected from participants. This avoids simulating human decisions, which would make them appear similar to an algorithmic decision. Participants complete a set of eight advanced financial literacy questions (van Rooij et al. 2011) and a four-question numeracy test (Berlin Numeracy Test, see Cokely et al. 2012). Known to participants, the participant with the highest score is anonymously appointed human fund manager. This is to ensure that the other participants view this individual as financially competent, even though he or she is not a professional fund manager. In case of ties for highest score, one of the tied participants is selected randomly.

After the role of the fund manager is assigned, participants decide whether to tie their incentives for the first block of trials to the human or to the algorithm. Their decision is fixed for this block and can be revised only after the block ends. They then observe choices and outcomes of both intermediaries. In each trial, the human and the algorithm invest in the stock or the bond and observe outcomes of both securities. After a block ends, a new state for the stock is drawn and participants can change their preferred intermediary. They are shown a summary of the aggregated payoffs of both intermediaries for all previous blocks. This is repeated for a total of ten blocks. For an overview of the experimental design see Fig. 1.

Illustration of the experimental design. The figure shows from left to right the progress of the experiment. Grey rectangles represent activity by participants in the role of investors, white rectangles activity by the participant in the role of the fund manager. Shaded rectangles represent activity by all participants

The experimental design allows for (ex-post) mistakes by the human manager and the algorithm, as even perfect information will result in the selection of the asset with the inferior payoff in 30% of the cases. The design thus enables us to study how participants react to mistakes by the intermediaries. It further avoids several of the confounding effects identified by Logg (2017): we study pure delegation and do not allow for self-directed investments by participants in the role of investors, we study both decisions prior to and after receiving performance feedback, and we study a task in which errors can be objectively identified.

In addition to the decision for one of the intermediaries, we also measure the strength of participants’ preferences. Investing with the human fund manager costs a fixed fee of 2€ in each block. Investing with the algorithm costs a fee of either 0, 1, 2, 3, or 4€. For each of the five possible fee-combinations we ask which intermediary a participant would prefer (see Online Appendix B for screenshots of the experiment). Participants can thus express a preference for either intermediary in the range from \(-2\)€ to +2€. Directly afterwards, one fee combination is randomly drawn and the actual decision for this combination is used. Participants thus know at the beginning of a block, which intermediary determines their payoff. Fees are not transferred to the human fund manager (or the algorithm) to avoid choices being influenced by reciprocity or inequity aversion.

All participants are incentivized based on the outcomes of their decisions. Participants acting as investors receive the payoff generated by their chosen intermediary minus fees. Fees are deducted from investment proceeds just as management fees in reality. As the minimum investment outcome in a block is 6€ (six trials with low stock outcome), participants can always pay fees, which rules out bankruptcy. Only one blocks is randomly drawn for payment to avoid wealth effects. Participants acting as fund manager receive the gross payoff they achieved in a random block. This also provides incentive to become fund manager.Footnote 3 The laboratory experiment concludes with asking participants how they rate the human fund manager’s and the algorithm’s investing capability, and an open-ended question regarding participants’ primary motivation when choosing between both intermediaries.

3.3 Participants

The laboratory experiment was implemented using z-tree (Fischbacher 2007), and the survey was run on the research platform SoSci survey. Participants were invited to the Mlab of the University of Mannheim via the recruiting software ORSEE (Greiner 2015). In total, 114 participants took part in the laboratory experiment, 107 of which could be matched to survey data. To preserve anonymity, matching was done via an individual code generated in the survey, which some participants could not recall. We nevertheless allowed these participants to enter the main experiment. We aimed for twelve sessions of ten participants, determined by available funding. Due to no-shows, some sessions had fewer but never less than eight participants. This means that we ended up with 12 unique human fund managers (one per session) with seven to nine investors each. The small sessions were intended to generate more variation in human fund managers, which implies more independent clusters and reduced risk that results might be driven by extreme strategies of one particular fund manager.

Participants were 22.8 years old on average, were predominantly female (58%), and a quarter had already invested in stocks (24.3%). The average payoff from the experiment was 16.79€ for participants in the role of investors and 18.83€ for participants in the role of fund managers. The payoff range was between 4€ and 30€. Considering an average experiment duration of approximately 40 min (and an additional 5–10 min for the survey), the payoff for participation was substantially higher than the laboratory average and German minimum wage.

4 Results

4.1 Survey results

We begin with the analysis of the survey responses on how algorithms are perceived in a financial context. As two reversed versions of each statement are randomly presented, we re-scale all answers such that a value of 5 expresses the algorithm is strongly favored, and a value of 1 that the human is strongly favored. A value of 3 indicates a neutral perception. We do find a (sometimes significant) effect of the version of the question shown to participants (acquiescence bias), which we eliminate by counterbalancing versions. As intended, the statements capture different dimensions: Answers show low and mostly insignificant correlations.

Table 3 summarizes participants’ perceptions along the measured dimensions. On average, investment algorithms are expected to deliver better investment performance than human fund managers. In addition, investment algorithms are viewed to be slightly better at adapting their investment approach. Not surprisingly, however, human fund managers are perceived to make better use of qualitative data. No difference is found for both data aggregation and data weighting. When it comes do dealing with outliers, such as financial crises, human fund managers are again viewed more capable. Excluding neutral responses of three, a majority favors the algorithm in four out of six criteria.

Overall, participants’ perceptions of algorithms in finance appear quite reasonable. Some correspond to the views expressed in the literature such as dealing with qualitative data and with outliers. In the domains probably most relevant for the laboratory experiment, expected return and the ability to learn, participants view algorithms as superior to humans. This means their perceptions do not unambiguously support all of the proposed reasons for algorithm aversion. We further find that young adults view algorithms as an aid to instead of a competitor of fund managers. In a similar vein, Rossi and Utkus (2020) report that even robo-advised clients value the possibility to reach out and interact with humans.

Lastly, participants are somewhat inclined to take financial risks (5.4 out of 10, SD = 2.0) and report to have an average level of general trust (4.7 out of 10, SD = 2.3). As many of the recruited participants are students of business or economics, they rate their economic knowledge above average (4.5 out of 7, SD = 1.1). As investors in stocks and mutual funds typically represent an economically rather sophisticated group, the participant group should be a relevant one even though they lack investment experience.

4.2 Investment decisions by financial intermediaries

In this section, we will outline how the algorithm and the human fund manager invest, as a background to better understand participants’ delegation decisions. We mainly focus on the rationality and similarity of their choices, their relative performance, and their risk taking. The algorithm maximizes returns following Bayes’ law, which is relatively simple in the experimental setting. In the first trial without any information, it selects the stock or the bond at random. In any later trial, it selects the security that cumulatively outperformed in the preceding trials. In case of equal performance, the algorithm again selects at random.

Six trials usually provide enough information to identify the true state of the stock with high probability. In the final investment decision, the choice of the algorithm is in line with the true state in 87% of the cases. We refer to such a decision as an ex ante correct decision, because the intermediary correctly selects the asset that is expected to perform better according to the underlying probabilities. Figure 2 shows how the fraction of ex ante correct decisions by the algorithm rises over the course of the trials. However, the fraction of ex post correct decisions, meaning that the selected asset outperforms the other asset in the following trial, is lower, as it is subject to chance. The upper limit for ex post correct decisions is 70% even for a perfectly informed investor. As the figure shows, the algorithm remains below this limit even in the final trials.

Investment decisions by intermediary. The figure shows the fractions of ex ante and ex post optimal decisions by the algorithm and the human fund manager. An ex ante optimal decision is one that matches the true state of the stock (selecting the bond in the bad state and the stock in the good state). An ex post optimal decision is one that matches the highest outcome in a trial. Fractions are averaged over all ten blocks of investing

The expected investment outcome of a perfectly informed investor would be 22.80€ in the good state (always selecting the stock) and 18.00€ in the bad state (always selecting the bond). The algorithm on average reaches 21.61€ (good state) and 16.64€ (bad state). These values provide a benchmark for human fund managers. As the algorithm uses the best available strategy, human fund managers will most likely underperform. This is consistent with the literature on algorithm aversion in which the algorithm usually outperforms the human. By appointing the most financially sophisticated participant, the human fund manager should be well-equipped to make good investment decisions and might come close to the optimal strategy of the algorithm. Participants on average answer 8.3 out of 12 questions correctly in the financial literacy and numeracy task. As intended, participants selected as fund managers perform substantially better with an almost perfect score of 11.7.

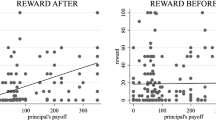

Human fund managers’ knowledge and abilities translate into investment decisions. The dashed lines in Fig. 2 show the fractions of their ex-ante and ex-post correct decisions. From trial two onward, they are below those of the algorithm but only by 11 (ex ante) and 6 (ex post) percentage points. On average, the algorithm outperforms human fund managers by on average 0.58€ per block.Footnote 4 As depicted in Fig. 3, payoff differences between algorithm and human fund manager are skewed to the right. By construction, it is difficult to outperform the algorithm by more than 2€. However, algorithms sometimes outperform the human fund manager by a large margin.

A majority of outcomes differing by no more than 2€ suggests that, by and large, human fund managers and algorithms adopt a similar investment strategy. However, there is enough variation to study investor responses to underperformance, equal performance and outperformance by the algorithm. In a block of six trials, on average both intermediaries choose the same asset in 4.1 trials. Even two Bayesian actors might differ in their choices and outcomes as some choices are ambiguous (expected returns are the same for both assets). Figure 4 shows how the number of identical decisions is distributed. Identical choices for all trials are observed in 20% of all blocks, which is a special case which we will examine.

Human fund managers can only deviate substantially from the algorithm if they do not follow Bayesian logic. For example, they may falsely identify trends and patterns in outcome data (Schwaiger et al. 2020). On average, human fund managers make Bayesian investment choices in 82% of all trials.Footnote 5 As shown in Fig. 4, human fund managers make Bayesian decisions in every trial in about 50% of all blocks. One reason for deviating from Bayes rule might be risk preferences. Human fund managers behave risk-neutrally on average, as they invest into the stock 53% of the time. However, there is great heterogeneity across individual human fund managers, as some act risk averse and some act risk seeking. This rules out the possibility that they all follow a common strategy that specifically appeals to human participants. They also receive no information to tailor their risk-taking to investors in their experimental session and have no incentive to do so. It is thus unlikely that fund manager risk preferences will drive our overall results. We will test, though, whether specific levels of risk-taking make the human fund manager more (or less) attractive to participants.

4.3 Investors’ initial choice of intermediary

When analyzing the choices of investors, we distinguish between the first decision and all subsequent decisions. Entering the first block of investments, participants have to rely on their general perception of the intermediaries. There is no information yet available on their performance in the investment task, and decisions might differ from those after seeing the intermediaries perform. In absence of information, some participants might be indifferent and randomize. However, those who harbor strong algorithm aversion would presumably not be indifferent, but choose the human fund manager. To test Hypotheses 1 and 1a, we thus examine investors’ initial choice of an intermediary before the first block of investments.

The aim of the algorithm to maximize expected return is stated in the experimental instructions. Similar to what is observed in reality, the exact mechanism of the investment algorithm is not disclosed. We avoid any particular reference to its quality.Footnote 6 It is common knowledge to participants that human fund managers are selected based on financial sophistication and that they are incentivized based on investment performance. It is thus reasonable for participants to assume that they aim at maximizing performance as well. Although, as reported, human managers act rather risk neutrally, investors might initially believe that they invest more closely to human investors’ (potentially risk-averse) preferences.

When participants first select an intermediary, we find no evidence for algorithm aversion. Our baseline is the choice situation with equal fees, in which 56% of investors choose the algorithm. While this is a slight majority, the proportion is not significantly different from 50% (\(p=0.23\), non-parametric test of proportions). To reject the presence of algorithm aversion, however, we do not need to show algorithm appreciation. The 95%-confidence interval for participants’ choices is [0.46, 0.66], which makes it unlikely that our data occur in presence of strong algorithm aversion. We interpret the result as evidence against Hypothesis 1.

Under Hypothesis 1a, we should further find a higher willingness-to-pay (WTP) for the human fund manager when fees are unequal. This means, we should observe relatively more choices in favor of the human fund manager than the algorithm if their respective fees are higher. However, the observed distribution of choices is almost symmetric. If the human fund manager costs 1€ (2€) more than the investment algorithm, 13% (6%) of investors still prefer the human manager in the initial choice. If the investment algorithm costs 1€ (2€) more than the human fund manager, 15% (5%) of investors prefer the algorithm. Revealed preferences imply an on average 9 cents higher WTP for the algorithm.Footnote 7 We therefore cannot confirm Hypothesis 1a.

The low fraction of participants selecting a more expensive intermediary suggests that they do not believe any intermediary will outperform by a Euro or more. This is interesting, as just one (ex-post) mistake per block loses 2€ relative to the other intermediary. Fee intervals are admittedly too coarse to pick up small differences in WTP. However, given the symmetry of choices for high fee levels and the slight preference for the algorithm at equal fees, we do not expect a strong asymmetry of WTP for low fee levels. Open-ended feedback at the end of the experiment supports the importance of fees: out of 95 participants who state their motivation for choosing between intermediaries, 75 (79%) mention costs. We will return to the stability of WTP, when we analyze choices after seeing intermediaries perform.

For participants in the role of investors with a matching survey (n = 95), we regress their initial choice for an intermediary on their perceptions of algorithms, demographics and controls. Table 4 shows marginal effects of probit regressions. In a first step, we aggregate the perceptions towards the algorithm by taking the mean of questions 1 to 6 as reported in Table 2.Footnote 8 We find that the general perception of the algorithm is positively correlated with choosing the algorithm in the equal fee condition (column 1). One step on the five-point scale makes it 26.5% more likely to select the algorithm. This speaks against participants just randomizing in the absence of information, as their general perceptions of financial algorithms are predictive for their first block choices.

Effects for individual perceptions (columns 2 and 3) are all positive, but only the belief that the algorithm is able to generate higher returns attains significance. This is consistent with participants viewing this ability as the most important attribute in the experimental task. Of the control variables, being male and having invested in stocks have a negative effect. This might come as a surprise, as men are widely found to adopt financially technology more eagerly (Chen et al. 2021). On the other hand, male and active investors are prone to overconfidence (Barber and Odean 2001) and may believe that the human fund manager can beat the algorithm. Risk tolerance has a positive effect, suggesting that the algorithm is perceived as the riskier alternative or that human fund managers are expected to behave risk averse. In the verbal statements, six participants mention risk considerations. When fees are unequal (columns 4 and 5), we find only few effects of the independent variables on the choice of intermediary. As assumed before, decisions in these cases seem to be mostly driven by fees.

4.4 Investors’ choices after seeing intermediaries perform

We first consider descriptive evidence whether algorithm aversion arises in response to seeing the algorithm perform. Figure 5 shows the fractions of participants choosing to invest with the algorithm over the course of the experiment at different fee levels. At equal fees, the fraction drops from an initial 56% to a low of 44% in investment blocks 4 and 5. Possibly, participants are disappointed that the algorithm is not perfect and makes mistakes. However, afterwards we observe a strong recovery to 63% in the final two blocks (95%-confidence interval [0.57, 0.70]). With accumulating evidence apparently the outperformance of the algorithm becomes harder to ignore. The average over all investment blocks is 52% [0.49, 0.55] in favor of the algorithm, which speaks against a general presence of algorithm aversion. The experiment is sufficiently powered to detect an effect size of about 10%-points relative to the \(H_0\) of equal proportions. Comparable studies such as Litterscheidt and Streich (2020) and Holzmeister et al. (2022) find effect sizes of 15%-points and 12%-points, respectively.

The figure further shows that almost all participants choose the algorithm if it is offered for a lower fee, while few participants choose it for a higher fee. There is an uptick in the fraction of participants who choose the algorithm for a 1€ lower fee in the second half of the experiment similar to the uptick observed for equal fees. The other fractions remain very stable over the entire experiment. We conclude that, even if algorithm aversion may be a reason for some to avoid the algorithm at equal fees, almost no one does so at lower fees. Contrary to Hypothesis 1a, there is no higher WTP for the human fund manager.

To examine Hypothesis 2 more closely, we will mainly focus on investors’ choices when fees are equal, as they are more sensitive to developments in the experiment. For a regression analysis, we treat repeated choices by participants as panel data with investment blocks as time dimension. Rational investors should learn from observing decisions of intermediaries and their performance. At the end of each block, accumulated payoffs of both intermediaries are prominently displayed (including all previous blocks). We investigate how investors respond to cumulative performance of both intermediaries as well as their performance in previous blocks. The dependent variable is whether a participant chooses to invest with the algorithm for the next block. We estimate panel logistic regressions with bootstrapped standard errors and report marginal effects.

As displayed in Panel A of Table 5, investors react to cumulative performance of both intermediaries in the expected direction. The higher the past payoff of the algorithm, the more likely are investors to choose the algorithm in the current block. On the contrary, the higher the payoff of the human fund manager, the less likely are they to choose the algorithm. An increase of 1€ in performance of the algorithm implies an about 3.3% increase in the probability of choosing the algorithm. The magnitude of coefficients is very similar for both intermediaries. We cannot reject the null hypothesis that coefficients are of equal size in any of the regression specifications. Hence, we do not find that investors show different sensitivity to the performance of the algorithm.

In further specifications, we add the choice in the previous block, which suggests that having chosen the algorithm in t − 1 makes it about 14% more likely to choose the algorithm again (column 2). We interact this variable with past performance to determine whether investors pay different attention to outcomes depending on the intermediary they invested with (column 3). We do not find an interaction effect.Footnote 9 To examine whether risk-taking behavior of the human fund manager has an effect on its attractiveness, we include indicator variables for whether a fund manager behaves risk averse or risk seeking (risk neutrality is the omitted category). We define fund managers as risk averse if they choose the risky option (the stock) significantly less often than the risk-neutral algorithm, while risk seeking fund managers choose it significantly more often. In univariate results, we find that participants are more likely to invest with human fund managers, who behave risk averse. As column 4 shows, once controlled for performance, the effect disappears.

It is possible that the effect of human fund manager risk-taking is ambiguous, because participants in the role of investors differ in their risk preferences. We use risk tolerance of participants elicited in the survey to construct an indicator whether it matches the risk-taking behavior of the fund manager in their session.Footnote 10 Investors should appreciate a fund manager that behaves in line with their risk preferences and invest less with the algorithm. However, results in column (5) show that the coefficient for the indicator is positive and insignificant. Results for risk preferences as such which are included in the control variables are also insignificant. We conclude that neither risk-taking behavior of human fund managers nor risk preferences of investors drive our results.

We further control in column (5) for the aggregated perception of the algorithm expressed in the survey and find a significant coefficient of almost the same magnitude as in the regression for the initial choice (cp. Table 4). This means that participants’ prior perceptions are not easily overturned by observing the intermediaries perform. A one-standard deviation change in the perception of the algorithm has an effect of 10.5% (\(0.44\cdot 0.24\)) on the likelihood to choose the algorithm even after seeing it perform. When we restrict the regression to the second half of the experiment, the respective coefficient is reduced to 0.18, and to 0.06 for the final block. It thus takes time until a predisposition for an intermediary makes way for an evaluation based on data.

Panel B of Table 5 reports results for individual lagged payoffs of both intermediaries. Their economic and statistical significance is slightly weaker than that of the cumulative payoffs, as they reflect only part of the observed performance history. There is evidence that more recent payoffs matter more, with the strongest effect of blocks t − 1 to t − 3. We find mixed evidence on coefficient size, with mostly larger effects of the algorithm’s performance (not significant). In sum, we cannot confirm Hypothesis 2 that participants disregard the performance of the algorithm. We find similar results for the decisions with unequal fees, but the sensitivity to performance is generally lower. For example, coefficients for cumulative performance are about two-thirds as large as in the regression with equal fees.

Figure 6 illustrates the almost monotonous effect of a payoff difference between intermediaries in the previous block on the propensity to invest with the algorithm. One can interpret this effect as trade-off between algorithm aversion and its cost. With rising costs (underperformance by the human), participants who are averse to algorithms overrule their aversion. The trade-off with performance is less strong than with fees, as for a two Euro fee difference almost all participants migrate to the algorithm. A plausible reason is that while fees are certain, it is uncertain whether an intermediary will be able to repeat prior outperformance. One could view choices at a zero payoff difference as a baseline for algorithm aversion. At a zero payoff difference for block t − 1, 48.7% choose the algorithm, and 50% do at a zero cumulative payoff difference. If intermediaries selected identical assets for all trials in block t − 1, 51.6% choose the algorithm. There seems to be neither algorithm aversion nor algorithm appreciation after equal performance.

We next examine the possibility that participants punish the algorithm more severely for bad performance. As the regression results do not condition on good or bad outcomes, the prediction of Hypothesis 3 might still be valid. Table 6 shows results of several regression models testing for this possibility. With the choice of the algorithm as dependent variable, we now split past payoff differences into cases when the algorithm outperforms the human fund manager and vice versa (cumulative payoffs in columns 1 and 2, and previous payoffs in columns 3 and 4). Coefficients are larger when the algorithm underperforms, suggesting a stronger sensitivity to bad outcomes by the algorithm. The effect size is between 20% and 60% larger than after good outcomes, but does not attain statistical significance.

We earlier defined an ex-post error as choosing the asset that then delivers the lower payoff in a given trial. Counting the number of such errors per block for both intermediaries is another way to test whether investors are quicker to abandon the investment algorithm. Participants’ sensitivity to errors by the algorithm is somewhat higher than to errors by the human manager (see columns 5 and 6; not statistically significant). However, human fund managers make more errors, which renders it quite natural that a single error bears less significance for judging them. A similar argument holds for payoff differences in favor of the human, which are less frequent and on average smaller justifying a stronger reaction on a per Euro basis. These statistics also explain why the found asymmetry does not produce algorithm aversion in the long-run. As the algorithm is the better intermediary, frequency and magnitude of outperformance more than compensate for the slightly lower sensitivity. We thus do not find evidence for Hypothesis 3.

So far, we treated repeated decisions for an intermediary the same way as a switch between intermediaries. Arguably, a switch has special significance in determining what considerations govern participants’ choices. We observe 105 switches to the algorithm and 98 switches to the human fund managers (at equal fees). On average, investors switch intermediaries two times. 30 participants never revise their initial choice, 19 switch once, 15 twice, and 38 switch three or more times. An optimal switching point for a Bayesian is once the chosen intermediary is overtaken by the non-chosen one in terms of accumulated payoffs.Footnote 11 We identify 109 such situations, which means that participants switch about twice as often as a Bayesian would. However, they seize 57% of the optimal switching opportunities.

In a logistic panel regression with observed switches as dependent variable, we confirm that optimal switching points have strong explanatory power (see Panel A of Table 7, column 1). When switching is optimal, we are 29% more likely to observe an actual switch. Participants might either look at the performance of the intermediary they currently invest with (own) or the one they might switch to (target). We find no conclusive evidence in that regard, whether we look at cumulative payoffs, last block payoffs, or number of errors (columns 2–4). Higher own performance always reduces the propensity to switch, while higher target performance increases it, both with very similar effect size. Last block results play a relatively large role for switching, consistent with the idea that new information is the strongest trigger of a switch. More important for algorithm aversion is switching behavior by intermediary type in Panel B. The regressions only include participants who have chosen the respective other intermediary in the previous block. When participants switch to the algorithm, they consider its performance and the performance of the human fund manager about equally. Likewise, switching to the human fund manager is informed almost symmetrically by the performance of the algorithm and human. We do not find any evidence for more pronounced switching behavior after errors by the algorithm. In fact, errors by the algorithm matter slightly less for switching. We thus do not find support for Hypothesis 3 from switching behavior.

4.5 Skill vs. luck

We presented evidence that participants strongly consider performance when selecting a financial intermediary. However, in the experimental setting as well as in reality, performance is only a noisy signal of true skill (Heuer et al. 2017). Participants in the experiment observe both decisions and outcomes of intermediaries. Decisions are the cleaner signal for skill, as outcomes depend on a random draw. Even in the good state, the stock can perform poorly and vice versa. We thus break down total performance of intermediaries into a component of skill and a component of luck. We examine to what extent participants are able to identify skill or confound skill with luck. The analysis serves to illustrate why a switch to a more skillful intermediary takes time and only occurs towards the end of the experiment.

Skill in the experiment can be defined as making an optimal decision conditional on the information available at each point in time. We calculate expected returns for each decision based on updated probabilities that the stock is in the good or bad state. Decisions based on Bayesian posteriors maximize expected returns. Selecting the asset with the higher expected return signals skill, but will not always be rewarded by a higher realized outcome. Participants should thus focus on decisions not outcomes, when evaluating skill. Positive or negative deviations from expected returns are the luck component in performance, as they are unpredictable at the time of the decision. For bond investments, luck is therefore always zero. For stock investments, luck can either be positive (outcome > expected outcome) or negative (outcome < expected outcome).Footnote 12

Table 8 summarizes luck and skill for both intermediaries aggregated by investment block. Average luck is not significantly different from zero for both intermediaries. This finding is not surprising, as consistent luck would defy the random nature of outcomes. The difference in total payoffs of 58 cents per block in favor of the algorithm is almost entirely due to skill. However, luck or bad luck in individual blocks can be large. When either intermediary outperforms the other within a block, luck drives this outperformance in 65% of the cases (due to its larger standard deviation). The table also details the average number of errors both intermediaries make within an investment block. While human fund managers make more errors of all types, algorithms also make 2.5 ex-post errors per block, i.e., they choose the asset that yields the lower payoff in the next trial.

To disentangle whether skill or luck is appreciated by investors, we include skill and luck as variables in a regression of investor choice (Table 9). As already established, participants respond to the payoff difference between intermediaries in block t − 1 (column 1). However, the effect of the payoff component produced by skill remains insignificant (column 2). In contrast, participants strongly react to luck, which also is the only relevant payoff component when we include both components simultaneously (columns 3 and 4). Although the assets in the experiment have simple payoff structures and investors possess the same information as intermediaries, they are unable to draw additional inferences from choices. They concentrate on outcomes in line with an outcome bias (Baron and Hershey 1988).

To corroborate this finding, we look at ex-post and ex-ante errors as defined in Sect. 4.2. Few ex-ante errors are a better signal of skill, as they measure how often an intermediary did not identify the correct state of the stock (good or bad). In contrast, ex-post errors include a major luck component as they depend on the outcome of the payoff draw. Indeed, participants react as expected to both types of errors, with a stronger effect of ex-post errors (columns 5 and 6). However, ex-ante and ex-post errors often coincide. Including both types of errors simultaneously reveals that ex-post errors crowd out the effect of ex-ante errors (column 7). We conclude that participants are unable to distinguish skill and luck in the experimental setting. Contrary to Hypothesis 3, they do not respond more strongly to errors by the algorithm using different types of error definitions.

5 Discussion

It can be seen as a limitation of the experiment that participants are university students. A young and educated participant population could have a greater inclination to invest with an algorithm than the general population. This is not entirely obvious, as earlier experiments find algorithm aversion for student populations even in the financial domain (Önkal et al. 2009). In this discussion, we will first point out why we think young adults are a relevant population to study. We will then review the finance literature on algorithm aversion to take stock of the results found for different investor populations. Finally, we will present results from a representative survey of German adults that uses questions from our survey.

Our student sample is similar in financial and technological sophistication to young professionals, often suggested to be a target customer base for financial service providers (Schwinn and Teo 2018; Oehler et al. 2021; Isaia and Oggero 2022). We believe that the behavior of young adults is interesting in its own right, even if other segments of the population showed higher algorithm aversion.Footnote 13 Of course, feedback effects exist between who is most open to financial technology and who fintech companies target as their customer base. For this reason, it is difficult to draw conclusions from observed demographics of robo-advisor clients. Empirically, D’Acunto et al. (2019) find no differences in age between adopters and non-adopters of robo-advisors, while Loos et al. (2020) find that robo-advised investors are younger than human-advised investors, but about the same age as self-directed investors.

Due to the challenges in identification, many studies on algorithm aversion use controlled experiments. For the following discussion, we refer again to Table 1, which summarizes relevant papers in finance that study algorithm aversion. The majority of papers finds evidence in support of algorithm aversion for different populations (students, crowd workers, and investors). An exception are Holzmeister et al. (2022), who find evidence for relative algorithm appreciation at low levels of delegation. Differences in experimental design can contribute to the variation in outcomes. For example, Önkal et al. (2009) and Filiz et al. (2021) study stock price forecasting, which is closest to the forecasting tasks dominating the general algorithm aversion literature. Participants do not know the return generating process of the stocks, and it remains uncertain how the algorithm works. A high level of uncertainty might contribute to high levels of algorithm aversion (Dietvorst and Bharti 2020). Several papers study adoption of intermediaries an as alternative to self-directed investing (David and Sade 2018; Litterscheidt and Streich 2020; Filiz et al. 2021; Holzmeister et al. 2022). This might decrease reliance on intermediaries due to overconfidence and illusion of control (Logg 2017). Finally, Niszczota and Kaszás (2020) use a subjective task that is morally contentious, which has also been shown to increase algorithm aversion (Castelo et al. 2019; Dietvorst and Bartels 2022).

We believe that these design features can at least partly explain the higher share of participants opting for the algorithm in our experiment. Like Holzmeister et al. (2022), we provide a lot of information to participants including the payoff structure of available assets and underlying probabilities. Participants further observe choices of the intermediaries in each trial, which allows them to make sense of their decisions and reduces uncertainty. We avoid a moral dimensions to the decisions, and subjectivity is limited to potential differences in risk preferences. Perhaps most importantly, we do not allow participants in the role of investors to invest themselves.

We use data from a representative survey of German adults (N = 2061) conducted with polling institute YouGov in 2019 to provide some evidence whether the presented results generalize (compare Merkle 2020). A filter question asks participants about their knowledge of financial algorithms: “For private investors who do not wish to invest directly in securities themselves, alternatives exist in the form of human fund managers or computer-based algorithms. Such algorithms are typically used by robo-advisors, for example. Have you ever heard of such investment algorithms?” 596 (29%) answer the question affirmatively. We use this sample for the analysis, as unlike in the experiment, there is no opportunity to learn about how an algorithm invests. We first show in Online Appendix Table C.1 that the subsample differs in multiple dimensions from the full sample. There is a higher share of male, educated, and higher-income people, who have an idea about algorithms and robo-advisors. We do not interpret this selection effect as algorithm aversion but rather as algorithm ignorance, which shows that the fintech industry still needs to raise awareness on how technological alternatives to traditional delegated investment work.

We next look into the perception of investment algorithms in the subsample of people who know algorithms. They answer the same questions as the student sample (see Table 2). On average participants have a favorable perception of algorithms on all dimensions with highest ratings for data aggregation and data weighting (see Online Appendix Table C.2). They are less convinced than students that algorithms will earn higher returns, but still view them positively in this dimension. They share the opinion that algorithms are rather an aid to humans than a competitor. Correlations between responses to individual questions are all below 0.4, alleviating concerns that participants just give similar ratings across the board.

Most interesting for the question of generalizability is to what extent demographics affect perceptions of algorithms and delegating investments to algorithms. We construct a variable for the aggregated perception of the algorithm from the questions discussed before, in the same way as we did for the student sample. We further use an indicator variable, whether people actually invest with a robo-advisor (self-reported). The overall percentage who do so is 7%, which reflects the quite low market penetration of robo-advisors. For comparison, 22% report to invest with a human fund manager and 37% with a human bank advisor (see also the empirical data for the German market in Loos et al. 2020). Finally, we ask a hypothetical question on how people would invest 20,000€ when they can only select between a human fund manager and an algorithm. This last question is probably closest to the initial choice in the experiment, even though the experiment provides much more structure to the investment decision. 48% decide for the algorithm in the hypothetical investment question.

The young age of student participants that potentially comes with greater openness to technology is a limitation of the experiment. We find that in the population survey, the age group between 18 and 24 is most likely to invest with the algorithm in the hypothetical survey question (60%) as well as with a robo-advisor in reality (12.5%). In fact the 60% are in the same range as the 56% first block choices in favor of the algorithm in the experiment. Among the oldest cohort in the survey (age 55 an over), 46% choose the algorithm in the hypothetical question. This is less than the youngest group (p = 0.07), but not significantly below 50%. In the intermediate age groups, the fraction investing with the algorithm is even closer to 50%. Real investment with robo-advisors is also least prevalent in the oldest cohort (4%). The univariate results suggest that older participants are less inclined to delegate investments to algorithms, but that there is no prevalent algorithm aversion in any age group. Age effects appear less strong than in Rossi and Utkus (2020), but their age groups are more extreme (with an average age of 74 in their oldest cohort).

To analyze this more formally, we run multivariate regressions with the three introduced variables as dependent variables (see Online Appendix Table C.3). Column 1 shows results for the aggregated perception of an investment algorithm. There are no strong correlations between demographic variables and how people view the algorithm (except for a positive effect of the number of children). Columns 2 to 4 show the results for the hypothetical investment. The aggregated perception of the algorithm has a positive but insignificant effect. Among the individual ratings, the belief that algorithms achieve higher returns has a strongly positive effect. This is consistent with the results in the experiment, in which likewise only return beliefs were significant. For actual investments with a robo-advisor (columns 5 to 7), the perceptions expressed in the survey have a much weaker relevance. Among the demographics, we find a negative effect of age and a positive effect of household income.

We conclude that in the population survey demographic variables do not explain much of the variation in algorithm aversion (or appreciation). Our interpretation is that inviting more diverse participants is unlikely to fundamentally change results in the experimental setting. However, there are several insights that matter for external validity. First, there are information costs that need to be overcome in a real world setting, which result in less than a third of a representative population sample to be acquainted with investment algorithms. Second, demographics such as age can have a stronger effect on actual investments than hypothetical investments, as actual investments are path dependent. Older investors started investing before robo-advisors existed and use established investment channels, while younger investors are more likely to look for new investment opportunities. We find a strong and reversed age effect for investing with a bank advisor. We do not necessarily view this as a manifestation of algorithm aversion, but this remains open for debate.

6 Conclusion

While the term “algorithm aversion” has been introduced only recently, there already exist numerous studies on human preferences for or against using algorithms. However, the literature is still undecided on the general prevalence of algorithm aversion. Partly this is due to the different contexts in which algorithm aversion is tested, and partly this is due to the different methodologies by which algorithm aversion is identified. As Logg (2017) points out, it is difficult to assess when and how algorithm aversion matters.

The aim of this study is to provide insights for financial decisions, as in finance the use of algorithms is not only theoretically promising, but also practically important. We test algorithm aversion in an experimental setting that is (necessarily) simplified, but that contains many features of real-world investment delegation. In particular, a real human fund manager selected by financial knowledge competes with a rule-based investment algorithm. They act as financial intermediaries for investors just as mutual funds or robo-advisors would. They operate in a financial market that reveals useful information, but at the same time is driven by chance. This means that they have opportunities to show their skill, but also inevitably will make errors. Experimental participants observe the performance and choices of the intermediaries and can react by changing their choice of intermediary.

Under these premises, we find no wide-spread algorithm aversion in the studied group of young adults. Participants acting as investors have a slight preference for the algorithm initially. After observing outcomes, they strongly favor the outperforming intermediary, but independent of intermediary type. We do not find support for the assumption that participants abandon algorithms after seeing them err. Instead, superior performance over time convinces them to switch to the algorithm. However, participants do not discern luck and skill and mostly rely on investment outcomes without considering the skill revealed by choices.

There are certain ways in which financial decisions differ from decisions typically studied in the algorithm aversion literature. Two prominent examples are university admissions and medical decisions, which are likely perceived as contexts in which human intuition or even human empathy should play a greater role. In these contexts, prospects of academic success and health of humans are judged, while in finance the prospects of (inanimate) financial assets are judged. The contexts might further differ in the weight people place on “soft factors” such as interviews and other direct communication, as opposed to quantitative strategies based on data (Capon et al. 1996). Finally, there is a moral dimension which deters people from allowing algorithms to make important life decisions on career or health that is presumably less pronounced for asset allocation. These considerations are in line with lower or absent algorithm aversion as we observe in the experiment.

Several practical implications for these service providers follow from our experiment. In the online survey, participants state they believe human fund managers to be better able to deal with outlier events (e.g., a financial crisis or pandemic). They also state they rather view algorithms as an aid to human fund managers than a competitor. These statements suggest that robo-advisors or rule-based funds are perceived to benefit from human experts. Financial intermediaries could adopt a hybrid approach in which human experts monitor algorithms and are able to intervene in case of extreme events. In private wealth management, traditionally relying more strongly on human interaction, hybrid approaches are tested in advice settings. Another way to reduce potential algorithm aversion is to communicate that any algorithm was ultimately programmed by humans. Robo-advisors such as Betterment and Wealthfront frame their services as being delivered by their team of experts (emphasizing the personal pronoun “we”).Footnote 14

As we find that performance but not skill in asset selection is rewarded, robo-advisors and algorithm-based funds may need relatively long time periods to establish any superiority they might possess. As with any investment, runs of bad luck might not be forgiven by investors. At least, we show that experimental participants do not punish algorithms more than human fund managers for bad returns. Still, financial service providers employing algorithms may want to highlight factors that contribute to performance but are not subject to luck. One such factor are lower fees, which prove to be very important in the experiment. Another example are algorithms designed to optimally exploit tax-loss harvesting.

Data availability

Experimental data will be made available in a data repository upon acceptance.

Notes

US-$26.8bn AUM for Betterment and US-$21.4bn for Wealthfront. Data retrieved from www.forbes.com/advisor/investing/top-robo-advisors-by-aum as of February 4, 2022.

Dietvorst et al. (2015) use light deception and tell participants that “the admissions office had created a statistical model that was designed to forecast student performance”.

There might be concerns that participants do not want to stand out and become fund manager. We make it clear in the instructions that the fund manager is appointed anonymously and not revealed to anyone. From the results in the literacy and numeracy tests, we conclude that participants spend effort on the tasks.

The difference in payoffs is statistically significant over all 120 blocks (12 human fund managers \(\times\) 10 blocks, \(p<0.05\)). However, participants observe only one pair of intermediaries. For them inference is much harder.

We generously count any decision as Bayesian in cases where the Bayesian decision is ambiguous (i.e., the algorithm randomizes). If we exclude such decisions, the fraction of Bayesian decisions by human fund managers is reduced to 76%.

Unlike Dietvorst et al. (2015), who explain to participants “that the model was sophisticated, put together by thoughtful analysts (p. 117)”. If anything, we should observe stronger algorithm aversion in presence of quality uncertainty.

This is an approximation as exact switching points (maximum WTP) cannot be identified. We instead use the mid-point of the fee interval, at which participants switch.

The simple mean is highly correlated with the first component of a principal component analysis. We exclude the question on perceiving the algorithm as a competitor or an aid, as the direction of this item is unclear.

As interactions in logistic regressions can be misleading, we estimate a linear probability model for robustness. While the main effects are very similar, we do not find an interaction either.

The matching is not perfect, as the risk tolerance score by Falk et al. (2018) does not classify investors neatly into risk averse, risk neutral, and risk tolerant. We define those who respond 4–6 on the 0 to 10 scale as risk neutral, 0–3 as risk averse and 7–10 as risk seeking.

This rule can be refined by considering decisions and not just outcomes. For the current purpose, optimality based on outcomes seems sufficient (see also Sect. 4.5).

Example: After three positive outcomes of the stock, the posterior probability for the good state is 0.927 and the expected return of the stock for trial 4 is $3.68 [\(=0.927(0.7\cdot \$5+0.3\cdot \$1)+0.073(0.3\cdot \$5+0.7\cdot \$1)\)]. Of a realized outcome of $5, we thus attribute $3.68 to skill and $1.32 to luck.

Age effects might be generation effects that will not repeat when the currently young generation ages (Carlin et al. 2017). In this interpretation, financial technology adoption is linked to growing up in the digital age.

See their web presence. For Betterment: “We’ll learn a bit about you.”, “We’ll build you a portfolio.”, or “We’re on a mission to help you make the most of your money.” For Wealthfront: “Live the life you want. We’ve got your back.”, “Financial planning and investing with Wealthfront couldn’t be easier. We do it for you.” Examples as of August 8, 2018.

References

Barber BM, Odean T (2001) Boys will be boys: gender, overconfidence, and common stock investment. Q J Econ 116:261–292

Baron J, Hershey JC (1988) Outcome bias in decision evaluation. J Pers Soc Psychol 54:569–579

Burton JW, Stein M-K, Jensen TB (2020) A systematic review of algorithm aversion in augmented decision making. J Behav Decis Mak 33:220–239

Capon N, Fitzsimons GJ, Prince RA (1996) An individual level analysis of the mutual fund investment decision. J Financ Serv Res 10:59–82

Carlin B, Olafsson A, Pagel M (2017) Fintech adoption across generations: financial fitness in the information age, NBER working paper

Castelo N, Bos MW, Lehmann DR (2019) Task-dependent algorithm aversion. J Mark Res 56:809–825

Chen S, Doerr S, Frost J, Gambacorta L, Shin HS (2021) The fintech gender gap, CEPR Discussion Paper No. DP16270

Cokely ET, Galesic M, Schulz E, Ghazal S, Garcia-Retamero R (2012) Measuring risk literacy: the Berlin numeracy test. Judgm Decis Mak 7:25–47