Abstract

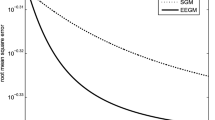

The chaos injection-based gradient method (CIBGM), which adds a chaotic sequence to the weight update during neural network training, has been shown to outperform the standard backpropagation algorithm. This paper presents a theoretical convergence analysis of CIBGM for training feedforward neural networks. We consider both batch learning and online learning. Under mild conditions, we prove weak convergence: the training error tends to a constant and the gradient of the error function tends to zero. Under an additional condition, we also obtain strong convergence of CIBGM. The theoretical results are substantiated by a simulation example.
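The idea of injecting a chaotic sequence into the weight update can be illustrated with a minimal sketch. It is not the paper's algorithm or experiment: the logistic map as the chaos source, the amplitude schedule `beta0 / k**2`, the single sigmoid neuron, and the names `logistic_map` and `train_with_chaos` are all assumptions chosen for illustration. The sketch runs batch gradient descent and records the two quantities that weak convergence refers to, the training error and the gradient norm.

```python
import math


def logistic_map(z, r=4.0):
    """One step of the logistic map (assumed chaos source; chaotic for r = 4)."""
    return r * z * (1.0 - z)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def train_with_chaos(samples, w, eta=1.0, beta0=0.1, z0=0.3, epochs=5000):
    """Batch gradient descent on one sigmoid neuron with a decaying chaotic term.

    samples: list of (input_vector, target) pairs.
    Returns the final weights, the error per epoch, and the last gradient norm.
    """
    z = z0
    errors = []
    grad_norm = float("inf")
    for k in range(1, epochs + 1):
        # Accumulate the squared error and its gradient over the whole batch.
        grad = [0.0] * len(w)
        err = 0.0
        for x, t in samples:
            y = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
            err += 0.5 * (y - t) ** 2
            delta = (y - t) * y * (1.0 - y)
            for i, xi in enumerate(x):
                grad[i] += delta * xi
        errors.append(err)
        grad_norm = math.sqrt(sum(g * g for g in grad))

        # Assumed schedule: the chaos amplitude shrinks so its sum stays finite.
        beta = beta0 / k ** 2
        new_w = []
        for wi, gi in zip(w, grad):
            z = logistic_map(z)
            # Gradient step plus a zero-centred chaotic perturbation.
            new_w.append(wi - eta * gi + beta * (z - 0.5))
        w = new_w
    return w, errors, grad_norm


if __name__ == "__main__":
    # Toy data: first input component is a constant bias term.
    data = [((1.0, 0.0), 0.2), ((1.0, 1.0), 0.8)]
    weights, errs, gnorm = train_with_chaos(data, [0.0, 0.0])
    print("final error:", errs[-1], "gradient norm:", gnorm)
```

On this toy problem the perturbation is largest in the first few epochs and fades quickly, so late iterations behave like plain gradient descent. This is one simple way to make the error settle and the gradient shrink; the conditions actually required by the analysis are those stated in the paper.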

Acknowledgments

This work is partly supported by the National Natural Science Foundation of China (Nos. 61101228, 61301202, 61402071), the China Postdoctoral Science Foundation (No. 2012M520623), and the Research Fund for the Doctoral Program of Higher Education of China (No. 20122304120028).

About this article

Cite this article

Zhang, H., Zhang, Y., Xu, D. et al. Deterministic convergence of chaos injection-based gradient method for training feedforward neural networks. Cogn Neurodyn 9, 331–340 (2015). https://doi.org/10.1007/s11571-014-9323-z

DOI: https://doi.org/10.1007/s11571-014-9323-z