Abstract

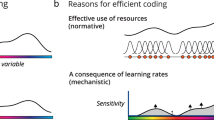

In this study, taking the viewpoint of statistical inference, we investigate the robustness of neural codes, i.e., the sensitivity of neural responses to noise, and its implications for the construction of neural codes. We first identify the key factors that influence the sensitivity of neural responses and find that the overlap between neural receptive fields plays a critical role. We then construct a robust coding scheme that requires neural responses not only to encode external inputs well but also to have small variability. Under this scheme, the optimal basis functions for encoding natural images resemble the receptive fields of simple cells in the striate cortex. We also apply the scheme to identify the important features in the representation of face images and Chinese characters.

Notes

Here we consider only unbiased estimators. A biased estimator may achieve lower inferential sensitivity, but only at the expense of a biased estimate.

Indeed, this knowledge can only be obtained after the basis functions have been optimized. For instance, if the basis functions turn out to be localized and oriented, this tells us that bar- or edge-like features are important in the representation of natural images, and that other elements are relatively unimportant and can be regarded as noise.

We follow the recommendation of Modern Chinese Language Frequently Used Characters.

References

Atick JJ (1992) Could information theory provide an ecological theory of sensory processing? Network-Comp Neural 3:213–251

Attneave F (1954) Some informational aspects of visual perception. Psychol Rev 61:183–193

Barlow HB (1961) Possible principles underlying the transformation of sensory messages. In: Rosenblith WA (ed) Sensory communication. MIT Press, Cambridge, MA

Barlow HB (1989) Unsupervised learning. Neural Comput 1:295–311

Becker S (1993) Learning to categorize objects using temporal coherence. In: Hanson SJ, Cowan JD, Giles CL (eds) Advances in neural information processing systems 5. Morgan Kaufmann, San Mateo, CA

Bell AJ, Sejnowski TJ (1997) The independent components of natural scenes are edge filters. Vision Res 37:3327–3338

Bishop CM (1996) Neural networks for pattern recognition. Oxford University Press

Field DJ (1994) What is the goal of sensory coding? Neural Comput 6:559–601

Földiák P (1991) Learning invariance from transformation sequences. Neural Comput 3:194–200

Hildebrandt TH, Liu WT (1993) Optical recognition of handwritten Chinese characters: Advances since 1980. Pattern Recognit 26:205–225

Hubel DH, Wiesel TN (1968) Receptive fields and functional architecture of monkey striate cortex. J Physiol 195:215–243

Hurri J, Hyvärinen A (2003) Simple-cell-like receptive fields maximize temporal coherence in natural video. Neural Comput 15:663–691

Hyvärinen A (1999) Sparse code shrinkage: denoising of nongaussian data by maximum likelihood estimation. Neural Comput 11:1739–1768

Laughlin SB (1981) A simple coding procedure enhances a neuron’s information capacity. Z Naturforsch C 36:910–912

Lewicki MS, Olshausen BA (1999) Probabilistic framework for the adaptation and comparison of image codes. J Opt Soc Am A 16:1587–1601

Li S, Wu S (2005) On the variability of cortical neural responses: a statistical interpretation. Neurocomputing 65–66:409–414

Li Z, Atick JJ (1994) Toward a theory of the striate cortex. Neural Comput 6:127–146

Olshausen BA, Field DJ (1996) Emergence of simple-cell receptive field properties by learning a sparse code for natural images. Nature 381:607–609

Olshausen BA, Field DJ (1997) Sparse coding with an overcomplete basis set: a strategy employed by V1? Vision Res 37:3311–3325

Palmer SE (1999) Vision science: photons to phenomenology. MIT Press, Cambridge, MA

Parzen E (1962) On estimation of a probability density function and mode. Ann Math Stat 33:1065–1076

Peng D, Ding G, Perry C, Xu D, Jin Z, Luo Q, Zhang L, Deng Y (2004) fMRI evidence for the automatic phonological activation of briefly presented words. Cognitive Brain Res 20:156–164

Principe JC, Xu D, Fisher JW (2000) Information-theoretic learning. In: Haykin S (ed) Unsupervised adaptive filtering, vol 1: Blind Source Separation. Wiley

Renyi A (1976) Some fundamental questions of information theory. In: Turan P (ed) Selected papers of Alfred Renyi, vol 2. Akademiai Kiado, Budapest

Salinas E (2006) How behavioral constraints may determine optimal sensory representations. PLoS Biol 4(12):e387

Schölkopf B, Smola AJ (2001) Learning with kernels: Support vector machines, regularization, optimization, and beyond. MIT Press, Cambridge, MA

Simoncelli EP, Olshausen BA (2001) Natural image statistics and neural representation. Annu Rev Neurosci 24:1193–1216

Stone JV (1996) Learning perceptually salient visual parameters using spatiotemporal smoothness constraints. Neural Comput 8:1463–1492

van Hateren JH, van der Schaaf A (1998) Independent component filters of natural images compared with simple cells in primary visual cortex. Proc R Soc Lond B 265:359–366

Vincent BT, Baddeley RJ (2003) Synaptic energy efficiency in retinal processing. Vision Res 43:1283–1290

Wiskott L, Sejnowski TJ (2002) Slow feature analysis: unsupervised learning of invariances. Neural Comput 14:715–770

Acknowledgements

We are very grateful to Peter Dayan. Without his instructive and inspirational discussions, the paper would exist in a rather different form. We also acknowledge valuable comments from Kingsley Sage and Jim Stone.

Appendices

Appendix A: The Fisher information and the performance of LSE

The Fisher information

Since the noise is independent and Gaussian, the conditional probability of observing the data \(I(x)\) given \(\mathbf{a}\) is written as

where \(N\) is the number of data points and \(\sigma^{2}\) is the noise strength.

The Fisher information matrix F is calculated to be

where \(F_{mn}\) is the element in the \(m\)th row and \(n\)th column of \(\mathbf{F}\).
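For concreteness, assume the two-component linear model \(I(x_{i})=a_{1}\phi_{1}(x_{i})+a_{2}\phi_{2}(x_{i})+\epsilon_{i}\), where the basis functions \(\phi_{1},\phi_{2}\) and the sample points \(x_{i}\) are notation assumed for this sketch. Independent Gaussian noise then gives the standard likelihood and Fisher information:

\[
p(I|\mathbf{a})=\prod_{i=1}^{N}\frac{1}{\sqrt{2\pi}\,\sigma}\exp\left[-\frac{\left(I(x_{i})-a_{1}\phi_{1}(x_{i})-a_{2}\phi_{2}(x_{i})\right)^{2}}{2\sigma^{2}}\right],
\]

\[
F_{mn}=-\left\langle \frac{\partial^{2}\ln p(I|\mathbf{a})}{\partial a_{m}\,\partial a_{n}}\right\rangle=\frac{1}{\sigma^{2}}\sum_{i=1}^{N}\phi_{m}(x_{i})\,\phi_{n}(x_{i}).
\]

Under this assumption the off-diagonal element \(F_{12}\) is proportional to the overlap between the two basis functions, which is how receptive-field overlap enters the sensitivity analysis.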

It is straightforward to check that

According to the Cramér-Rao bound, the inverse of the Fisher information defines the lower bound for the decoding errors of unbiased estimators. Suppose the covariance matrix of the estimation errors of an unbiased estimator is \(\Omega_{mn}=\langle (\hat{a}_{m}-a_{m}) (\hat{a}_{n}-a_{n})\rangle\). The Cramér-Rao bound states that \(\Omega \ge \mathbf{F}^{-1}\), or more exactly, that the matrix \((\Omega-\mathbf{F}^{-1})\) is positive semidefinite. Intuitively, this means that the inverse of the Fisher information quantifies the minimum inferential sensitivity of unbiased estimators.

The asymptotic performance of LSE

It is straightforward to check that, for independent Gaussian noise, LSE is equivalent to maximum likelihood inference, i.e., its solution is obtained by maximizing the log likelihood \(\ln p(I|\mathbf{a})\) (compare Eq. (3) with Eq. (17)). This implies that the LSE solution satisfies the condition

When \(\hat{\mathbf{a}}\) is sufficiently close to the true value \(\mathbf{a}\), the above equations can be approximated by their first-order Taylor expansion at \(\mathbf{a}\):

By using Eq. (17), the above equations can be simplified as

Since the noise is independent and Gaussian, we have

where \(\epsilon_{i}\), for \(i=1,\ldots,N\), are independent Gaussian random variables with zero mean and variance \(\sigma^{2}\).

Combining Eqs. (22) and (23), we obtain the estimation error of LSE. Here, we only show the result for \(\hat{a}_{1}\) (the case for \(\hat{a}_{2}\) is similar), which is given by,

It is easy to check that LSE is unbiased, i.e.,

The variance of \(\hat{a}_{1}\) is calculated to be

According to the Central Limit Theorem, when the number of data points \(N\) is sufficiently large, the random variable \((\hat{a}_{1}-a_{1})\) follows a normal distribution with the variance given by Eq. (26).
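As a worked illustration, assume the linear model \(I(x_{i})=a_{1}\phi_{1}(x_{i})+a_{2}\phi_{2}(x_{i})+\epsilon_{i}\) and write \(S_{mn}=\sum_{i}\phi_{m}(x_{i})\phi_{n}(x_{i})\) for the overlaps (both the model and the symbol \(S_{mn}\) are assumptions of this sketch). The least-squares solution can then be written explicitly, which gives the error in \(\hat{a}_{1}\) and its variance as

\[
\hat{a}_{1}-a_{1}=\frac{\sum_{i=1}^{N}\left[S_{22}\,\phi_{1}(x_{i})-S_{12}\,\phi_{2}(x_{i})\right]\epsilon_{i}}{S_{11}S_{22}-S_{12}^{2}},
\qquad
\left\langle(\hat{a}_{1}-a_{1})^{2}\right\rangle=\frac{\sigma^{2}S_{22}}{S_{11}S_{22}-S_{12}^{2}}.
\]

The error is a weighted sum of zero-mean noise terms, so its mean vanishes. The variance grows without bound as \(S_{12}^{2}\) approaches \(S_{11}S_{22}\), that is, as the two basis functions become nearly proportional to each other.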

The covariance between the estimation errors of the two components can also be calculated, which is given by

It is straightforward to check that the covariance matrix of the estimation errors of LSE, given by \(\Omega_{mn}=\langle (\hat{a}_{m}-a_{m})(\hat{a}_{n}-a_{n}) \rangle\), for \(m,n=1,2\), is the inverse of the Fisher information matrix \(\mathbf{F}\), i.e., \(\Omega\mathbf{F}=\mathbf{1}\), where \(\mathbf{1}\) is the identity matrix. This implies that LSE is asymptotically efficient.
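The efficiency claim can be checked numerically. The sketch below is illustrative only: it assumes a linear model with two Gaussian-bump basis functions (the helper names `gaussian_basis`, `fisher_information` and `lse_error_covariance`, the grid, and all parameter values are hypothetical choices, not taken from the paper) and compares the Monte Carlo covariance of the least-squares errors with the inverse Fisher information.

```python
import numpy as np

def gaussian_basis(x, centers, width):
    """Assumed basis: Gaussian bumps phi_m(x); returns an array of shape (N, M)."""
    centers = np.asarray(centers, dtype=float)
    return np.exp(-(x[:, None] - centers[None, :]) ** 2 / (2.0 * width ** 2))

def fisher_information(Phi, sigma):
    """F_mn = (1/sigma^2) * sum_i phi_m(x_i) phi_n(x_i) for the assumed linear model."""
    return Phi.T @ Phi / sigma ** 2

def lse_error_covariance(Phi, a_true, sigma, n_trials=20000, seed=0):
    """Monte Carlo estimate of Omega_mn = <(a_hat_m - a_m)(a_hat_n - a_n)>."""
    rng = np.random.default_rng(seed)
    noise = rng.normal(0.0, sigma, size=(Phi.shape[0], n_trials))
    data = (Phi @ a_true)[:, None] + noise            # one noisy image per column
    a_hat, *_ = np.linalg.lstsq(Phi, data, rcond=None)  # least-squares fit per column
    err = a_hat - a_true[:, None]
    return err @ err.T / n_trials

x = np.linspace(-5.0, 5.0, 101)
a_true = np.array([1.0, -0.5])
sigma = 0.3
for separation in (4.0, 1.0):  # weakly vs. strongly overlapping basis functions
    Phi = gaussian_basis(x, [-separation / 2, separation / 2], width=1.0)
    F_inv = np.linalg.inv(fisher_information(Phi, sigma))
    Omega = lse_error_covariance(Phi, a_true, sigma)
    print(f"separation={separation}: var(a1) simulated={Omega[0, 0]:.5f}, "
          f"Cramer-Rao={F_inv[0, 0]:.5f}")
```

In this toy setting the simulated variances should track the Cramér-Rao values closely, and both should be larger for the strongly overlapping pair, in line with the role of receptive-field overlap described in the abstract.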

Appendix B: Optimizing the basis functions of robust coding

The sensitivity measure H(a)

We choose Rényi's quadratic entropy to measure the variability of neural responses when natural images are presented, which is given by

Here, for simplicity, we write \(a\) in place of \(a_{l}\), for \(l=1,\ldots,M\).

Suppose we have \(K\) sampled values of \(a\), obtained when \(K\) natural images are presented. Then, according to the Parzen window approximation (with a Gaussian kernel), \(p(a|\mathbf{I})\) can be approximated as

where \(\{a^{k}\}\), for \(k=1,\ldots,K\), represents the sampled values, and \(d\) is the width of the Gaussian kernel.
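For reference, the standard forms of Rényi's quadratic entropy and of the Gaussian Parzen window estimate are sketched below. The kernel symbol \(G(u,s^{2})\), a zero-mean Gaussian density with variance \(s^{2}\), is notation assumed here.

\[
H(a)=-\ln\int p(a|\mathbf{I})^{2}\,da,
\qquad
p(a|\mathbf{I})\approx\frac{1}{K}\sum_{k=1}^{K}G\left(a-a^{k},d^{2}\right),
\qquad
G(u,s^{2})=\frac{1}{\sqrt{2\pi s^{2}}}\exp\left(-\frac{u^{2}}{2s^{2}}\right).
\]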

Note that

Thus, we have

which depends only on the sampled values.
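A minimal sketch of this sample-based estimate follows. It relies on the standard identity that the integral of the product of two Gaussian kernels of variance \(d^{2}\) centred at \(a^{j}\) and \(a^{k}\) is a Gaussian of variance \(2d^{2}\) evaluated at \(a^{j}-a^{k}\), so that the entropy reduces to a double sum over sample pairs. The function name `renyi_quadratic_entropy` and the test data are hypothetical.

```python
import numpy as np

def renyi_quadratic_entropy(samples, d):
    """Parzen-window estimate of Renyi's quadratic entropy for 1-D samples.

    H = -ln[(1/K^2) * sum_j sum_k G(a^j - a^k, 2 d^2)], where G(u, s^2) is a
    zero-mean Gaussian density with variance s^2 and d is the kernel width.
    """
    a = np.asarray(samples, dtype=float)
    K = a.size
    diff = a[:, None] - a[None, :]   # all pairwise differences a^j - a^k
    var = 2.0 * d ** 2               # variance of the convolved kernel
    kernel = np.exp(-diff ** 2 / (2.0 * var)) / np.sqrt(2.0 * np.pi * var)
    return -np.log(kernel.sum() / K ** 2)

rng = np.random.default_rng(0)
narrow = rng.normal(0.0, 0.5, size=500)   # responses with small variability
wide = rng.normal(0.0, 2.0, size=500)     # responses with large variability
print("H(narrow) =", renyi_quadratic_entropy(narrow, d=0.3))
print("H(wide)   =", renyi_quadratic_entropy(wide, d=0.3))
```

Responses that cluster tightly give a lower entropy than widely scattered ones, which is why the quantity can serve as a variability measure.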

The training procedure

Eq. (15) is minimized by gradient descent in two alternating steps, namely, (1) updating a while fixing ϕ and (2) updating ϕ while fixing a.

(1) Updating a

To apply the gradient descent method, the key is to calculate the gradient of E with respect to \(a_{l}^{k}\), for \(l=1,\ldots,M\) and k = 1,...,K.

For the first term in Eq. (15), we have

For the second term, we have

Combining Eqs. (32) and (33), we obtain the update rule for a:

where η is the learning rate, and \(\Delta a_{l}^{k}\) is given by

(2) Updating ϕ

Similarly, the update rule for ϕ is given by
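The alternating procedure can be sketched as follows. Eq. (15) is not reproduced in this section, so the sketch assumes a cost of the form \(E=\sum_{k}\|I^{k}-\sum_{l}a_{l}^{k}\phi_{l}\|^{2}+\lambda\sum_{l}H(a_{l})\), with \(H\) estimated by the Gaussian Parzen window. The weight \(\lambda\) (`lam`), the function names, the initialization and all parameter values are hypothetical, and the authors' actual update rules may differ.

```python
import numpy as np

def entropy_and_grad(a, d):
    """Parzen estimate of Renyi's quadratic entropy of samples a (K,) and dH/da."""
    K = a.size
    diff = a[:, None] - a[None, :]
    G = np.exp(-diff ** 2 / (4.0 * d ** 2)) / np.sqrt(4.0 * np.pi * d ** 2)
    V = G.sum() / K ** 2                       # estimate of the integral of p(a)^2
    grad = (G * diff).sum(axis=1) / (V * K ** 2 * d ** 2)
    return -np.log(V), grad

def objective_and_grads(images, A, Phi, lam, d):
    """ASSUMED cost: squared reconstruction error + lam * sum_l H(a_l).

    images: (K, P) inputs; A: (K, M) responses; Phi: (M, P) basis functions.
    """
    R = images - A @ Phi                       # reconstruction residuals
    E = (R ** 2).sum()
    gA = -2.0 * R @ Phi.T                      # dE/dA from the first term
    gPhi = -2.0 * A.T @ R                      # dE/dPhi (entropy does not depend on Phi)
    for l in range(A.shape[1]):
        H, gH = entropy_and_grad(A[:, l], d)
        E += lam * H
        gA[:, l] += lam * gH                   # dE/dA from the second term
    return E, gA, gPhi

def train(images, M, lam=0.1, d=1.0, eta=0.005, n_iter=300, seed=0):
    """Alternate gradient steps on A (Phi fixed) and on Phi (A fixed)."""
    rng = np.random.default_rng(seed)
    K, P = images.shape
    A = rng.normal(0.0, 0.1, size=(K, M))
    Phi = rng.normal(0.0, 0.1, size=(M, P))
    history = []
    for _ in range(n_iter):
        E, gA, _ = objective_and_grads(images, A, Phi, lam, d)
        A = A - eta * gA                       # step (1): update a, Phi fixed
        _, _, gPhi = objective_and_grads(images, A, Phi, lam, d)
        Phi = Phi - eta * gPhi                 # step (2): update Phi, a fixed
        history.append(E)
    return A, Phi, history

rng = np.random.default_rng(1)
toy_images = rng.normal(size=(20, 9))          # stand-in for image patches
A_fit, Phi_fit, history = train(toy_images, M=3)
print("cost at start:", history[0], "cost at end:", history[-1])
```

The sketch leaves the scale of the basis functions unconstrained. In practice a norm constraint on each basis function would likely be needed, since otherwise the responses can shrink (lowering the entropy term) while the basis functions grow to compensate.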

Cite this article

Li, S., Wu, S. Robustness of neural codes and its implication on natural image processing. Cogn Neurodyn 1, 261–272 (2007). https://doi.org/10.1007/s11571-007-9021-1
