Abstract

Purpose

Thorough training of novice surgeons is crucial to ensure that surgical interventions are effective and safe. One important aspect is the teaching of technical skills for minimally invasive or robot-assisted procedures, which includes the objective and preferably automatic assessment of surgical skill. Recent studies reported good results for automatic, objective skill evaluation based on collecting and analyzing motion data such as trajectories of surgical instruments. However, obtaining motion data generally requires additional equipment for instrument tracking or access to a robotic surgery system that captures kinematic data. In contrast, we investigate a method for automatic, objective skill assessment that requires video data only. This has the advantage that video can be collected effortlessly during minimally invasive and robot-assisted training scenarios.

Methods

Our method builds on recent advances in deep learning-based video classification. Specifically, we propose to use an inflated 3D ConvNet to classify snippets, i.e., stacks of a few consecutive frames, extracted from surgical video. The network is extended into a temporal segment network during training.
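The snippet-based scheme described above can be illustrated with a minimal sketch. It is not the authors' implementation: the number of segments, the snippet length, the averaging consensus, and the helper names (`sample_snippet_starts`, `segment_consensus`, `classify_video`) are assumptions chosen for illustration, and `score_snippet` is a hypothetical stand-in for the 3D ConvNet that maps one snippet to per-class scores.

```python
import random
from typing import Callable, List, Sequence, Tuple


def sample_snippet_starts(num_frames: int, num_segments: int,
                          snippet_len: int, rng: random.Random) -> List[int]:
    """Split the valid start positions into equal segments and draw one per segment."""
    if num_frames < snippet_len:
        raise ValueError("video is shorter than one snippet")
    num_starts = num_frames - snippet_len + 1  # count of valid start indices
    starts = []
    for k in range(num_segments):
        lo = (k * num_starts) // num_segments
        hi = ((k + 1) * num_starts) // num_segments - 1
        hi = max(hi, lo)  # guard against empty segments in very short videos
        starts.append(rng.randint(lo, hi))
    return starts


def segment_consensus(snippet_scores: Sequence[Sequence[float]]) -> List[float]:
    """Average the per-class scores over all snippets (assumed consensus function)."""
    num_classes = len(snippet_scores[0])
    return [sum(s[c] for s in snippet_scores) / len(snippet_scores)
            for c in range(num_classes)]


def classify_video(frames: Sequence,
                   score_snippet: Callable[[Sequence], Sequence[float]],
                   num_segments: int = 3, snippet_len: int = 4,
                   seed: int = 0) -> Tuple[int, List[int]]:
    """Score one snippet per segment and return the class with the highest mean score."""
    rng = random.Random(seed)
    starts = sample_snippet_starts(len(frames), num_segments, snippet_len, rng)
    scores = [score_snippet(frames[s:s + snippet_len]) for s in starts]
    consensus = segment_consensus(scores)
    label = max(range(len(consensus)), key=consensus.__getitem__)
    return label, starts


if __name__ == "__main__":
    # Toy "video": each frame is a single number; the toy scorer replaces the network.
    toy_frames = [0.9] * 100
    toy_scorer = lambda snip: [sum(snip) / len(snip), 1 - sum(snip) / len(snip)]
    print(classify_video(toy_frames, toy_scorer))
```

Drawing one snippet from each segment lets a single training step see evidence from the whole recording, while each forward pass still processes only a few consecutive frames.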

Results

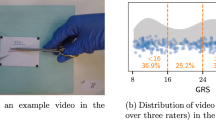

We evaluate the method on the publicly available JIGSAWS dataset, which consists of recordings of basic robot-assisted surgery tasks performed on a dry lab bench-top model. Our approach achieves high skill classification accuracies ranging from 95.1% to 100.0%.

Conclusions

Our results demonstrate the feasibility of deep learning-based assessment of technical skill from surgical video. Notably, the 3D ConvNet is able to learn meaningful patterns directly from the data, alleviating the need for manual feature engineering. Further evaluation will require more annotated data for training and testing.

Acknowledgements

The authors would like to thank the Helmholtz-Zentrum Dresden-Rossendorf (HZDR) for granting access to their GPU cluster for running additional experiments during paper revision.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human or animal subjects performed by any of the authors.

Informed consent

This article does not contain patient data.

About this article

Cite this article

Funke, I., Mees, S.T., Weitz, J. et al. Video-based surgical skill assessment using 3D convolutional neural networks. Int J CARS 14, 1217–1225 (2019). https://doi.org/10.1007/s11548-019-01995-1

DOI: https://doi.org/10.1007/s11548-019-01995-1