Abstract

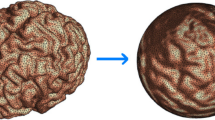

Nonnegativity has been shown to be a powerful principle in linear matrix decompositions, leading to sparse component matrices in feature analysis and data compression. The classical method is Lee and Seung’s Nonnegative Matrix Factorization. A standard way to form learning rules is by multiplicative updates, maintaining nonnegativity. Here, a generic principle is presented for forming multiplicative update rules, which integrate an orthonormality constraint into nonnegative learning. The principle, called Orthogonal Nonnegative Learning (ONL), is rigorously derived from the Lagrangian technique. As examples, the proposed method is applied for transforming Nonnegative Matrix Factorization (NMF) and its variant, Projective Nonnegative Matrix Factorization (PNMF), into their orthogonal versions. In general, it is well-known that orthogonal nonnegative learning can give very useful approximative solutions for problems involving non-vectorial data, for example, binary solutions. Combinatorial optimization is replaced by continuous-space gradient optimization which is often computationally lighter. It is shown how the multiplicative updates rules obtained by using the proposed ONL principle can find a nonnegative and highly orthogonal matrix for an approximated graph partitioning problem. The empirical results on various graphs indicate that our nonnegative learning algorithms not only outperform those without the orthogonality condition, but also surpass other existing partitioning approaches.

Similar content being viewed by others

References

Paatero P, Tapper U. Positive matrix factorization: A non-negative factor model with optimal utilization of error estimates of data values. Environmetrics, 1994, 5(2): 111–126

Lee D D, Seung H S. Learning the parts of objects by nonnegative matrix factorization. Nature, 1999, 401(6755): 788–791

Cichocki A, Zdunek R, Phan A H, Amari S-I. Nonnegative Matrix and Tensor Factorizations. Singapore: Wiley, 2009

Ding C, Li T, Peng W, Park H. Orthogonal nonnegative matrix t-factorizations for clustering. In: Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. 2006, 126–135

Yang Z, Laaksonen J. Multiplicative updates for non-negative projections. Neurocomputing, 2007, 71(1–3): 363–373

Choi S. Algorithms for orthogonal nonnegative matrix factorization. In: Proceedings of IEEE International Joint Conference on Neural Networks. 2008, 1828–1832

Ding C, He X, Simon H D. On the equivalence of nonnegative matrix factorization and spectral clustering. In: Proceedings of SIAM International Conference of Data Mining. 2005, 606–610

Yuan Z, Oja E. Projective nonnegative matrix factorization for image compression and feature extraction. In: Proceedings of the 14th Scandinavian Conference on Image Analysis (SCIA 2005). Joensuu, Finland, 2005, 333–342

Oja E. Principal components, minor components, and linear neural networks. Neural Networks, 1992, 5(6): 927–935

Dhillon I, Guan Y, Kulis B. Kernel k-means, spectral clustering and normalized cuts. In: Proceedings of the 10th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. Seattle, WA, USA, 2004, 551–556

Yu S X, Shi J. Multiclass spectral clustering. In: Proceedings of the 9th IEEE International Conference on Computer Vision. 2003, 2: 313–319

Jolliffe I T. Principal Component Analysis. Berlin: Springer-Verlag, 2002

Diamantaras K, Kung S Y. Principal Component Neural Networks. New York: Wiley, 1996

Oja E. Subspace Methods of Pattern Recognition. Letchworth: Research Studies Press, 1983

Yang Z, Oja E. Linear and nonlinear projective nonnegative matrix factorization. IEEE Transactions on Neural Networks, 2009, accepted, to appear

Lloyd S P. Least square quantization in PCM. IEEE Transactions on Information Theory, 1982, 28(2): 129–137

Lee D D, Seung H S. Algorithms for non-negative matrix factorization. In: Proceedings of the Conference on Advances in Neural information Processing Systems. 2000, 556–562

Hoyer P O. Non-negative matrix factorization with sparseness constraints. Journal of Machine Learning Research, 2004, 5: 1457–1469

Xu W, Liu X, Gong Y. Document clustering based on non-negative matrix factorization. In: Proceedings of the 26th Annual International ACM SIGIR Conference on Research and Development in Informaion Retrieval. 2003, 267–273

Févotte C, Bertin N, Durrieu J-L. Nonnegative matrix factorization with the itakura-saito divergence: With application to music analysis. Neural Computation, 2009, 21(3): 793–830

Drakakis K, Rickard S, de Fréin R, Cichocki A. Analysis of financial data using non-negative matrix factorization. International Mathematical Forum, 2008, 3: 1853–1870

Young S S, Fogel P, Hawkins D M. Clustering scotch whiskies using non-negative matrix factorization. Joint Newsletter for the Section on Physical and Engineering Sciences and the Quality and Productivity Section of the American Statistical Association, 2006, 14(1): 11–13

Brunet J-P, Tamayo P, Golub T R, Mesirov J P. Metagenes and molecular pattern discovery using matrix factorization. Proceedings of the National Academy of Sciences of the United States of America, 2004, 101(12): 4164–4169

Ding C, Li T, Jordan M I. Convex and semi-nonnegative matrix factorizations. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2010, 32(1): 45–55

Sha F, Saul L K, Lee D D. Multiplicative updates for large margin classifiers. In: Proceedings of the 16th Annual Conference on Learning Theory and the 7th Kernel Workshop, COLT. 2003, 188–202

Cichocki A, Lee H, Kim Y-D, Choi S. Non-negative matrix factorization with α-divergence. Pattern Recognition Letters, 2008, 29(9): 1433–1440

Kivinen J, Warmuth M K. Exponentiated gradient versus gradient descent for linear predictors. Information and Computation, 1997, 132(1): 1–63

Yang Z, Yuan Z, Laaksonen J. Projective non-negative matrix factorization with applications to facial image processing. International Journal of Pattern Recognition and Artificial Intelligence, 2007, 21(8): 1353–1362

Chung F R K. Spectral Graph Theory. American Methematical Society, 1997

Newman M E J. Finding community structure in networks using the eigenvectors of matrices. Physical Review E, 2006, 74(3): 036104

Leicht E A, Holme P, Newman M E J. Vertex similarity in networks. Physical Review E, 2006, 73(2): 026120

Ye J, Zhao Z, Wu M. Discriminative K-means for clustering. In: Proceedings of the Conference on Advances in Neural Information Processing Systems. 2007, 1649–1656

Author information

Authors and Affiliations

Corresponding author

Additional information

Erkki Oja is Director of the Adaptive Informatics Research Centre and Professor of Computer Science at the Department of Information and Computer Science, Aalto University, Finland. He received his Dr. Sc. degree in 1977 and a honorary doctorate from Uppsala University, Sweden, in 2008. He has been post-doctoral research associate at Brown University, Providence, RI, and visiting professor at Tokyo Institute of Technology and Universite Paris I — Pantheon Sorbonne. Dr. Oja is the author or coauthor of more than 300 articles and book chapters on pattern recognition, image and signal processing, and machine learning, as well as three books: Subspace Methods of Pattern Recognition (RSP and J. Wiley, 1983), which has been translated into Chinese and Japanese, Kohonen Maps (Elsevier, 1999), and Independent Component Analysis (J. Wiley, 2001; Japanese translation, 2005; Chinese translation, 2006).

Dr. Oja’s research interests are in the study of statistical signal processing, pattern recognition, and data analysis. He is member of the editorial boards of several journals and program committees of several recent and upcoming conferences including ICANN, IJCNN, NIPS, and ICONIP. He is Chairman of the Research Council for Natural Sciences and Engineering of the Academy of Finland, member of the Finnish Academy of Sciences, Fellow of the IEEE, Founding Fellow of the International Association of Pattern Recognition (IAPR), Fellow of the INNS, and Past President of the European Neural Network Society (ENNS). He was recipient of the 2004 IAPR Pierre Devijver Award and the 2006 IEEE Computational Intelligence Society Pioneer Award.

About this article

Cite this article

Oja, E., Yang, Z. Orthogonal nonnegative learning for sparse feature extraction and approximate combinatorial optimization. Front. Electr. Electron. Eng. China 5, 261–273 (2010). https://doi.org/10.1007/s11460-010-0106-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11460-010-0106-y