Abstract

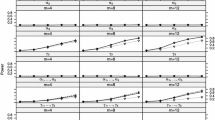

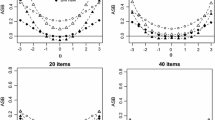

Standardized tests are frequently used for selection decisions, and the validation of test scores remains an important area of research. This paper builds upon prior literature about the effect of nonlinearity and heteroscedasticity on the accuracy of standard formulas for correcting correlations in restricted samples. Existing formulas for direct range restriction require three assumptions: (1) the criterion variable is missing at random; (2) a linear relationship between independent and dependent variables; and (3) constant error variance or homoscedasticity. The results in this paper demonstrate that the standard approach for correcting restricted correlations is severely biased in cases of extreme monotone quadratic nonlinearity and heteroscedasticity. This paper offers at least three significant contributions to the existing literature. First, a method from the econometrics literature is adapted to provide more accurate estimates of unrestricted correlations. Second, derivations establish bounds on the degree of bias attributed to quadratic functions under the assumption of a monotonic relationship between test scores and criterion measurements. New results are presented on the bias associated with using the standard range restriction correction formula, and the results show that the standard correction formula yields estimates of unrestricted correlations that deviate by as much as 0.2 for high to moderate selectivity. Third, Monte Carlo simulation results demonstrate that the new procedure for correcting restricted correlations provides more accurate estimates in the presence of quadratic and heteroscedastic test score and criterion relationships.

References

Aguinis, H., & Whitehead, R. (1997). Sampling variance in the correlation coefficient under indirect range restriction: Implications for validity generalization. Journal of Applied Psychology, 82(4), 528–538.

Alexander, R. A., Alliger, G. M., & Hanges, P. J. (1984). Correcting for range restriction when the population variance is unknown. Applied Psychological Measurement, 8(4), 431–437.

Arneson, J. J., Sackett, P. R., & Beatty, A. S. (2011). Ability-performance relationships in education and employment settings: Critical tests of the more-is-better and the good-enough hypotheses. Psychological Science, 22(10), 1336–1342.

Berry, C. M., Sackett, P. R., & Sund, A. (2013). The role of range restriction and criterion contamination in assessing differential validity by race/ethnicity. Journal of Business and Psychology, 28, 345–359.

Brillinger, D. (1969). The calculation of cumulants via conditioning. Annals of the Institute of Statistical Mathematics, 21, 215–218.

Chan, W., & Chan, D. (2004). Bootstrap standard error and confidence intervals for the correlation corrected for range restriction: A simulation study. Psychological Methods, 9, 369–385.

Chernyshenko, O. S., & Ones, D. S. (1999). How selective are psychology graduate programs? The effect of the selection ratio on GRE score validity. Educational and Psychological Measurement, 59(6), 951–961.

Coward, W. M., & Sackett, P. R. (1990). Linearity of ability-performance relationships: A reconfirmation. Journal of Applied Psychology, 75(3), 297–300.

Cullen, M. J., Hardison, C. M., & Sackett, P. R. (2004). Using SAT-grade and ability-job performance relationships to test predictions derived from stereotype threat theory. Journal of Applied Psychology, 89(2), 220–230.

Culpepper, S. A. (2010). Studying individual differences in predictability with gamma regression and nonlinear multilevel models. Multivariate Behavioral Research, 45(1), 153–185.

Dobson, P. (1988). The correction of correlation coefficients for restriction of range when restriction results from the truncation of a normally distributed variable. British Journal of Mathematical and Statistical Psychology, 41(2), 227–234.

Fife, D. A., Mendoza, J. L., & Terry, R. (2013). Revisiting case IV: A reassessment of bias and standard errors of case IV under range restriction. British Journal of Mathematical and Statistical Psychology, 66(3), 521–542.

Greener, J., & Osburn, H. (1979). An empirical study of the accuracy of corrections for restriction in range due to explicit selection. Applied Psychological Measurement, 3, 31–41.

Greener, J., & Osburn, H. (1980). Accuracy of corrections for restriction in range due to explicit selection in heteroscedastic and nonlinear distributions. Educational and Psychological Measurement, 40, 337–346.

Gross, A. (1982). Relaxing the assumptions underlying corrections for restriction of range. Educational and Psychological Measurement, 42(3), 795–801.

Gross, A. (1990). A maximum likelihood approach to test validation with missing and censored dependent variables. Psychometrika, 55(3), 533–549.

Gross, A. (1997). Interval estimation of bivariate correlations with missing data on both variables: A Bayesian approach. Journal of Educational and Behavioral Statistics, 22(4), 407–424.

Gross, A. (2000). Bayesian interval estimation of multiple correlations with missing data: A Gibbs sampling approach. Multivariate Behavioral Research, 35(2), 201–227.

Gross, A., & Fleischman, L. (1983). Restriction of range corrections when both distribution and selection assumptions are violated. Applied Psychological Measurement, 7, 227–237.

Gross, A., & Fleischman, L. (1987). The correction for restriction of range and nonlinear regressions: An analytic study. Applied Psychological Measurement, 11, 211–217.

Gross, A., & Perry, P. (1983). Validating a selection test, a predictive probability approach. Psychometrika, 48(1), 113–127.

Gross, A., & Torres-Quevedo, R. (1995). Estimating correlations with missing data, a Bayesian approach. Psychometrika, 60(3), 341–354.

Hagglund, G., & Larsson, R. (2006). Estimation of the correlation coefficient based on selected data. Journal of Educational and Behavioral Statistics, 31, 377–411.

Harvey, A. (1976). Estimating regression models with multiplicative heteroscedasticity. Econometrica, 44, 461–465.

Held, J., & Foley, P. (1994). Explanations for accuracy of the general multivariate formulas in correcting for range restriction. Applied Psychological Measurement, 18, 355–367.

Holmes, D. (1990). The robustness of the usual correction for restriction in range due to explicit selection. Psychometrika, 55, 19–32.

Hsu, J. (1995). Sampling behaviour in estimating predictive validity in the context of selection and latent variable modelling: A Monte Carlo study. British Journal of Mathematical and Statistical Psychology, 48, 75–97.

Hunter, J., Schmidt, F., & Le, H. (2006). Implications of direct and indirect range restriction for meta-analysis methods and findings. Journal of Applied Psychology, 91, 594–612.

Kobrin, J., Sinharay, S., Haberman, S., & Chajewski, M. (2011). An investigation of the fit of linear regression models to data from an SAT validity study. Tech. Rep. 2011–3, College Board Research Report.

Lawley, D. (1944). A note on Karl Pearson’s selection formulæ. Proceedings of the Royal Society of Edinburgh Section A Mathematical and Physical Sciences, 62(01), 28–30.

Le, H., Oh, I., Robbins, S., Ilies, R., Holland, E., & Westrick, P. (2011). Too much of a good thing: Curvilinear relationships between personality traits and job performance. Journal of Applied Psychology, 96, 113–133.

Lee, R., & Foley, P. (1986). Is the validity of a test constant throughout the test score range? Journal of Applied Psychology, 71, 641–644.

Li, J., Chan, W., & Cui, Y. (2011). Bootstrap standard error and confidence intervals for the correlations corrected for indirect range restriction. British Journal of Mathematical and Statistical Psychology, 64(3), 367–387.

Li, J., Cui, Y., & Chan, W. (2013). Bootstrap confidence intervals for the mean correlation corrected for case IV range restriction: A more adequate procedure for meta-analysis. Journal of Applied Psychology, 98(1), 183–193.

Linn, R. (1983). Pearson selection formulas: Implications for studies of predictive bias and estimates of educational effects in selected samples. Journal of Educational Measurement, 20, 1–15.

Linn, R. (1984). Selection bias: Multiple meanings. Journal of Educational Measurement, 21, 33–47.

Little, R. J., & Rubin, D. B. (2014). Statistical analysis with missing data. Hoboken, NJ: John Wiley & Sons Inc.

Mendoza, J. (1993). Fisher transformations for correlations corrected for selection and missing data. Psychometrika, 58(4), 601–615.

Mendoza, J., Bard, D. E., Mumford, M., & Ang, S. C. (2004). Criterion-related validity in multiple-hurdle designs: Estimation and bias. Organizational Research Methods, 7(4), 418–441.

Mendoza, J., & Mumford, M. (1987). Corrections for attenuation and range restriction on the predictor. Journal of Educational Statistics, 12, 282–293.

Mendoza, J., Stafford, K. L., & Stauffer, J. M. (2000). Large-sample confidence intervals for validity and reliability coefficients. Psychological Methods, 5(3), 356–369.

Muthen, B., & Hsu, J. (1993). Selection and predictive validity with latent variable structures. British Journal of Mathematical and Statistical Psychology, 46, 255–271.

Muthen, B., Kaplan, D., & Hollis, M. (1987). On structural equation modeling with data that are not missing completely at random. Psychometrika, 52, 431–462.

Raju, N. S., & Brand, P. A. (2003). Determining the significance of correlations corrected for unreliability and range restriction. Applied Psychological Measurement, 27(1), 52–71.

Raju, N. S., Lezotte, D. V., Fearing, B. K., & Oshima, T. (2006). A note on correlations corrected for unreliability and range restriction. Applied Psychological Measurement, 30(2), 145–149.

Robie, C., & Ryan, A. (1999). Effect of nonlinearity and heteroscedasticity on the validity of conscientiousness in predicting overall job performance. International Journal of Selection and Assessment, 7, 157–169.

Sackett, P., & Yang, H. (2000). Correction for range restriction: An expanded typology. Journal of Applied Psychology, 85, 112–118.

Stauffer, J., & Mendoza, J. (2001). The proper sequence for correcting correlation coefficients for range restriction and unreliability. Psychometrika, 66, 63–68.

Yang, H., Sackett, P., & Nho, Y. (2004). Developing a procedure to correct for range restriction that involves both institutional selection and applicants’ rejection of job offers. Organizational Research Methods, 7, 442–455.

Appendix: Upper-Bound for \(\left| \rho -\rho _\mathrm{{c}}\right| \) in Educational and Employment Selection

This Appendix derives an expression for the most extreme quadratic polynomial for selection decisions, which is used to establish an upper-bound for \(\left| \rho -\rho _\mathrm{{c}}\right| \) attributed to a quadratic relationship. It addresses three issues. The first subsection identifies upper-bound equations for quadratic polynomials that satisfy a positive monotonicity constraint on a fixed interval. The second subsection presents equations for deriving \(\rho _\mathrm{{c}}\) based upon information about the relationship between \(X\) and \(Y\) in \(\pi \), the moments of \(X\) in \(\pi \) and \(\pi _s\), and the nature of selection on \(X\). The third subsection complements the simulation study by examining the upper-bounds for \(\left| \rho -\rho _\mathrm{{c}}\right| \) in cases of extreme quadratic relationships when \(X\sim N\left( 0,1\right) \) and the distribution of \(Y|X\) is modeled as normal, but heteroscedastic.

1.1 Upper-Bound for Monotone Quadratic Functions

A foundational assumption employed in educational and psychological selection is that the relationship between \(X\) and \(Y\) should be monotonically increasing on a subinterval \(\Omega _\mathrm{{c}} = \left\{ X:X \in \left( -c,c\right) \right\} \) of the support for \(X\) (Arneson et al., 2011). In accordance with the available empirical research, let \(g\left( X\right) \) denote a monotonically increasing quadratic polynomial defined on \(\Omega _\mathrm{{c}}\) such that \(g\left( X\right) = \sum _{j=0}^2 \beta _j X^j\) and \(g^\prime \left( X\right) > 0\) for all \(X \in \Omega _\mathrm{{c}}\).

The variance of slopes for a linear function across the support of \(X\) is zero, so one measure for the degree of deviation from linearity is the variance in the slope of \(g\left( X\right) \) on the support of \(X\). Let the moments of the distribution for \(X\) be denoted as \(\mu _j = E\left( X^j\right) \). Because \(g^\prime \left( X\right) = \beta _1 + 2 \beta _2 X\), the variance of the slopes for the quadratic polynomial \(g\left( X\right) \) is

\[ V\left[ g^\prime \left( X\right) \right] = 4 \beta _2^2 \left( \mu _2-\mu _1^2\right) . \tag{8} \]

Equation 8 implies that the variance in slopes (and the deviation from linearity) increases as \(\beta _2\) increases in absolute value. Larger values of \(\left| \beta _2\right| \) imply a stronger quadratic relationship, and the following theorem establishes necessary bounds on \(\beta _2\) and \(V\left[ g^\prime \left( X\right) \right] \) to ensure that a quadratic polynomial monotonically increases on \(\Omega _\mathrm{{c}}\).

Theorem 1

If \(g\left( X\right) \) satisfies positive monotonicity on \(\Omega _\mathrm{{c}}\), the coefficient for the quadratic term \(\beta _2\) is bounded as

\[ -\frac{\beta _1}{2c} \le \beta _2 \le \frac{\beta _1}{2c}. \tag{9} \]

Proof

For quadratic polynomials, the slope relating \(X\) to \(Y\) changes linearly as \(X\) changes. That is, \(g^\prime \left( X\right) = \beta _1 + 2 \beta _2 X\). Let \(c_0\) be an arbitrary value such that \(c_0 \not \in \Omega _\mathrm{{c}}\). A quadratic polynomial is monotone on the subinterval \(\left( -c_0,c_0\right) \) if \(g^\prime \left( X\right) =0\) at \(X=-c_0\) or at \(X=c_0\), which occurs when

\[ \beta _2 = \pm \frac{\beta _1}{2c_0}. \tag{10} \]

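Clearly, \(\left| \beta _2\right| \) declines as \(c_0\) increases in absolute value, so the largest possible value for \(\left| \beta _2\right| \) is achieved when \(c_0 = c\) at the boundary of \(\Omega _\mathrm{{c}}\). Substituting \(\left| \beta _2\right| = \beta _1/\left( 2c\right) \) into the variance of the slopes, \(V\left[ g^\prime \left( X\right) \right] = 4\beta _2^2\left( \mu _2-\mu _1^2\right) \), implies that \(V\left[ g^\prime \left( X\right) \right] \le \beta _1^2 \left( \mu _2-\mu _1^2\right) /c^2\). \(\square \)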
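The boundary in Theorem 1 can be checked numerically. The following is a minimal sketch, assuming illustrative values \(\beta _1 = 1\) and \(c = 3\); the function names `slope` and `is_increasing_on` are hypothetical helpers introduced only for this illustration.

```python
import numpy as np

def slope(x, beta1, beta2):
    """Derivative g'(x) = beta1 + 2*beta2*x of the quadratic g."""
    return beta1 + 2.0 * beta2 * x

def is_increasing_on(c, beta1, beta2, n=2001):
    """True if g'(x) > 0 at every grid point strictly inside (-c, c)."""
    x = np.linspace(-c, c, n)[1:-1]  # drop the endpoints: the interval is open
    return bool(np.all(slope(x, beta1, beta2) > 0))

# Illustrative (assumed) values, not estimates from any data set.
beta1, c = 1.0, 3.0
bound = beta1 / (2.0 * c)  # largest |beta2| compatible with monotonicity

at_bound = is_increasing_on(c, beta1, bound)        # slope hits zero only at x = -c
concave = is_increasing_on(c, beta1, -bound)        # mirror case, zero slope at x = +c
beyond = is_increasing_on(c, beta1, 1.05 * bound)   # slope turns negative inside (-c, c)

print(at_bound, concave, beyond)
```

At the bound the slope reaches zero only at an endpoint of \(\Omega _\mathrm{{c}}\), whereas a coefficient 5 % beyond the bound produces a negative slope inside the interval.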

1.2 Deriving \(\rho _\mathrm{{c}}\) for Nonlinear and Heteroscedastic Relationships

Holmes (1990) presented a framework for assessing the effect that nonlinearity and heteroscedasticity have on corrections for range restriction. In particular, the probability density for \(X\) and \(Y\) in the unrestricted population \(\pi \) is

\[ p\left( X,Y\right) = p\left( Y|X\right) p\left( X\right) . \tag{11} \]

As noted by Holmes, selection on test scores only alters the marginal density of \(X\). Consequently, the joint density for the predictor and criterion in the restricted population \(\pi _s\) is

\[ p_s\left( X,Y\right) = p\left( Y|X\right) p_s\left( X\right) , \tag{12} \]

where \(s\) is included in the subscript to denote that \(p_s\left( X\right) \) is the density of \(X\) in \(\pi _s\).

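Let \(E\) and \(V\) denote the expected value and variance in the unrestricted population and \(E_s\) and \(V_s\) the corresponding moments in the restricted population. Holmes (1990) showed how the Laws of Total Variance and Covariance (Brillinger, 1969) can be used to represent the covariance between \(X\) and \(Y\) and the criterion variance in the restricted population (i.e., \(\sigma _{xys}\) and \(\sigma _{ys}^2\)). Because the conditional covariance between \(X\) and \(Y\) given \(X\) is zero,

\[ \sigma _{xys} = \mathrm {Cov}_s\left[ X, E\left( Y|X\right) \right] , \tag{13} \]

\[ \sigma _{ys}^2 = V_s\left[ E\left( Y|X\right) \right] + E_s\left[ V\left( Y|X\right) \right] . \tag{14} \]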

Note that Equations 13 and 14 are used to compute the restricted correlation,

\[ \rho _s = \frac{\sigma _{xys}}{\sigma _{xs} \sigma _{ys}}, \tag{15} \]

which is then corrected for selection with Equation 1.

The derivations in this paper specify the following relationship between \(X\) and \(Y\) in \(\pi \):

\[ Y = g\left( X\right) + h\left( X\right) u, \tag{16} \]

where \(g\left( X\right) \) is a nonlinear function describing how \(E\left( Y|X\right) \) changes as a function of \(X\), \(u\) is a random error that is unrelated to \(X\) and has a mean of zero and variance of \(\sigma _u^2\), and \(h\left( X\right) \) is a function used to describe the pattern of nonconstant error variance as a function of \(X\). Note that \(V\left( Y|X\right) = \left( h\left( X\right) \right) ^2\sigma _u^2\). The Law of Total Variance implies that the proportion of variance that \(g\left( X\right) \) accounts for in \(Y\) in \(\pi \) is

\[ \rho ^2 = \frac{V\left[ g\left( X\right) \right] }{V\left[ g\left( X\right) \right] + \sigma _u^2 E\left[ h\left( X\right) ^2\right] }. \tag{17} \]

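Recall that prior empirical research in educational and employment selection found evidence that the order of \(g\left( X\right) \) does not exceed a quadratic polynomial, which implies that \(V \left[ g\left( X\right) \right] \) is

\[ V\left[ g\left( X\right) \right] = \beta _1^2\left( \mu _2-\mu _1^2\right) + 2\beta _1\beta _2\left( \mu _3-\mu _1\mu _2\right) + \beta _2^2\left( \mu _4-\mu _2^2\right) = \beta _1^2\left[ \left( \mu _2-\mu _1^2\right) + sgn\left( \beta _2\right) \frac{\mu _3-\mu _1\mu _2}{c} + \frac{\mu _4-\mu _2^2}{4c^2}\right] , \tag{18} \]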

where the last equality was obtained by substituting the upper-bound value for \(\beta _2\) and \(sgn\left( \beta _2\right) \) denotes the sign of \(\beta _2\) where \(\beta _2<0\) implies a concave function and \(\beta _2>0\) indicates a convex function.
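As a worked illustration, assume \(X\sim N\left( 0,1\right) \), so that \(\mu _1 = 0\), \(\mu _2 = 1\), \(\mu _3 = 0\), and \(\mu _4 = 3\), and take the upper-bound value \(\beta _2 = \pm \beta _1/\left( 2c\right) \). Under these assumptions the variance of a quadratic \(g\left( X\right) \) reduces to

\[ V\left[ g\left( X\right) \right] = \beta _1^2\left( \mu _2-\mu _1^2\right) + 2\beta _1\beta _2\left( \mu _3-\mu _1\mu _2\right) + \beta _2^2\left( \mu _4-\mu _2^2\right) = \beta _1^2 + 2\beta _2^2 = \beta _1^2\left( 1+\frac{1}{2c^2}\right) . \]

The sign of \(\beta _2\) drops out because \(\mu _3-\mu _1\mu _2 = 0\) for a symmetric distribution centered at zero. With \(c = 3\), for example, \(V\left[ g\left( X\right) \right] = \left( 19/18\right) \beta _1^2 \approx 1.056\,\beta _1^2\), so the most extreme monotone quadratic adds roughly 5.6 % to the variance explained by the linear term alone.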

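The proportion of variance accounted for by \(g\left( X\right) \) in the restricted population is

\[ \frac{V_s\left[ g\left( X\right) \right] }{V_s\left[ g\left( X\right) \right] + \sigma _u^2 E_s\left[ h\left( X\right) ^2\right] }. \tag{19} \]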

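Similar to Equation 18, \(V_s \left[ g\left( X\right) \right] \) for the most extreme quadratic and monotone polynomial is defined as

\[ V_s\left[ g\left( X\right) \right] = \beta _1^2\left[ \left( \mu _{2s}-\mu _{1s}^2\right) + sgn\left( \beta _2\right) \frac{\mu _{3s}-\mu _{1s}\mu _{2s}}{c} + \frac{\mu _{4s}-\mu _{2s}^2}{4c^2}\right] , \tag{20} \]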

where \(\mu _{js} = E_s\left( X^j\right) \) are moments in \(\pi _s\).

Let the restricted correlation for the upper-bound value of \(\beta _2\) for a given heteroscedastic pattern be denoted as \(\rho _s\left( \beta _2\right) \) and define the corrected correlation that uses \(\rho _s\left( \beta _2\right) \) with Equation 1 as \(\rho _\mathrm{{c}}\left( \beta _2\right) \). Note that \(\rho _\mathrm{{c}}\left( \beta _2\right) \) represents the corrected correlation when linearity and homoscedasticity are violated. Consequently, the bias of the corrected correlation attributed to the upper-bound quadratic relationship is

\[ \rho -\rho _\mathrm{{c}}\left( \beta _2\right) . \tag{21} \]

1.3 Examination of Upper-Bound for \(\rho -\rho _\mathrm{{c}}\) for \(X\sim N\left( 0,1\right) \) Case

This subsection presents specific results on the bias of \(\rho _\mathrm{{c}}\) when the relationship between \(X\) and \(Y\) is nonlinear and heteroscedastic and \(X\sim N\left( 0,1\right) \). The discussion proceeds by deriving an expression for \(\rho -\rho _\mathrm{{c}}\). The results in this subsection use the following definitions for the marginal and conditional distributions (Harvey, 1976),

\[ p\left( X\right) = \phi \left( 0,1\right) , \tag{22} \]

\[ p\left( Y|X\right) = \phi \left( g\left( X\right) , h\left( X\right) ^2\sigma _u^2\right) , \tag{23} \]

where \(\phi \) denotes the normal density. It is important to note that \(p\left( Y|X\right) \) in Equation 23 assumes that the errors around \(g\left( X\right) \) are normally distributed, but that the error variance differs across values of \(X\). This subsection considers the case where decision-makers use cut scores to select applicants. For instance, only applicants with \(X>x^*\) are selected, which implies that \(p_s\left( X\right) \) is a normal distribution truncated at \(x^*\). Furthermore, the following equation for \(h\left( X\right) \) is used:

\[ h\left( X\right) = \exp \left( \frac{\gamma _0+\gamma _1 X}{2}\right) . \tag{24} \]

Equation 24 is a nonnegative function and has accordingly been used in the literature to model nonconstant error variance that either increases or decreases with \(X\). Note that \(\gamma _1<0\) in Equation 24 is consistent with prior research (Held & Foley, 1994; Kobrin et al., 2011; Lee & Foley, 1986).

It is important to note several properties of the normal distribution. Namely, the first four moments of the standard normal distribution are defined as \(\mu _1 = 0\), \(\mu _2 = 1\), \(\mu _3 = 0\), and \(\mu _4 = 3\). The moments can be used to find the covariances among different powers of \(X\). For instance, \(\sigma _{x,x^2} = \mu _3 - \mu _1 \mu _2 = 0\) and \(\sigma _{x^2}^2=\mu _4 - \mu _2^2=2\).
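The moment identities above can be reproduced numerically. The sketch below uses Gauss-Hermite quadrature (probabilists' form) from NumPy to recover the first four standard normal moments, and then evaluates the variance of a quadratic \(g\left( X\right) \) from the covariance matrix of \(\left( X, X^2\right) \). The coefficient values (\(\beta _1 = 1\), \(\beta _2 = \beta _1/6\)) are assumptions chosen only for illustration, and the intercept is omitted because a constant contributes no variance.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

# Quadrature nodes/weights for the weight function exp(-x^2 / 2);
# dividing by sqrt(2*pi) turns the weights into standard normal probabilities.
nodes, weights = hermegauss(20)
weights = weights / np.sqrt(2.0 * np.pi)

# Raw moments mu_j = E(X^j) for j = 1, ..., 4.
mu = {j: float(np.sum(weights * nodes**j)) for j in range(1, 5)}

cov_x_x2 = mu[3] - mu[1] * mu[2]   # covariance between X and X^2
var_x2 = mu[4] - mu[2] ** 2        # variance of X^2

# Covariance matrix among (X, X^2).
Sigma = np.array([[mu[2] - mu[1] ** 2, cov_x_x2],
                  [cov_x_x2, var_x2]])

# Assumed illustrative coefficients: beta1 = 1 and beta2 = beta1 / (2c) with c = 3.
beta = np.array([1.0, 1.0 / 6.0])
var_g = float(beta @ Sigma @ beta)  # V[g(X)] = beta' Sigma beta

print(mu, cov_x_x2, var_x2, var_g)
```

Because the quadrature rule is exact for polynomials of this degree, the computed moments agree with the closed-form values up to floating-point error.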

Let \({\varvec{\Sigma }}\) denote the variance–covariance matrix among the powers of \(X\) in \(\pi \) and \({\varvec{\beta }} = \left( \beta _0\;\beta _1\; \beta _2\right) ^\prime \) be the vector of coefficients and note that \(V\left[ g\left( X\right) \right] = {\varvec{\beta }}^\prime {\varvec{\Sigma }} \varvec{\beta }\). An expression for \(E\left[ h\left( X\right) ^2\right] \) can be found using the moment generating function (MGF) for the normal distribution. More precisely, the MGF for a \(\phi \left( \mu , \sigma ^2\right) \) distribution in \(\pi \) is

\[ {\mathcal {M}}\left( t\right) = \exp \left( \mu t + \frac{\sigma ^2 t^2}{2}\right) , \tag{25} \]

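which implies that

\[ E\left[ h\left( X\right) ^2\right] = e^{\gamma _0} {\mathcal {M}}\left( t=\gamma _1\right) = \exp \left( \gamma _0 + \mu \gamma _1 + \frac{\sigma ^2\gamma _1^2}{2}\right) . \tag{26} \]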

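For the case where \(X\) is standard normal, the aforementioned results imply that \(\rho ^2\) is defined as

\[ \rho ^2 = \frac{{\varvec{\beta }}^\prime {\varvec{\Sigma }} {\varvec{\beta }}}{{\varvec{\beta }}^\prime {\varvec{\Sigma }} {\varvec{\beta }} + \sigma _u^2 \exp \left( \gamma _0 + \gamma _1^2/2\right) }. \tag{27} \]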

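Recall that \(\rho _s^2\) is the validity coefficient in \(\pi _s\) that assumes a linear relationship between \(X\) and \(Y\). An expression for \(\rho _s^2\) is a function of \(\sigma _{xys}\), \(\sigma _{xs}^2\), and \(\sigma _{ys}^2\), which are available from the MGF of the truncated normal distribution. The MGF for the normal distribution truncated from below at \(x^*\) is

\[ {\mathcal {M}}_s\left( t\right) = \exp \left( \mu t + \frac{\sigma ^2 t^2}{2}\right) \frac{1-\Phi \left( \alpha -\sigma t\right) }{1-\Phi \left( \alpha \right) }, \tag{28} \]

where \(\Phi \) denotes the standard normal distribution function and \(\alpha = \left( x^*-\mu \right) \sigma ^{-1}\). Accordingly, \(\mu _{js} = E_s\left( X^j\right) ={\mathcal {M}}_s^{(j)}\left( t=0\right) \) and \(E_s\left[ h\left( X\right) ^2\right] = e^{\gamma _0} {\mathcal {M}}_s \left( t=\gamma _1\right) \). For this example, Equation 13 implies that \(\sigma _{xys}\) is defined as

\[ \sigma _{xys} = \beta _1 \sigma _{xs}^2 + \beta _2\left( \mu _{3s}-\mu _{1s}\mu _{2s}\right) . \tag{29} \]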

Also, note that \(\sigma _{xs}^2=\mu _{2s}-\mu _{1s}^2\). Let \({\varvec{\Sigma }}_s\) denote the variance–covariance matrix among the powers of \(X\) in \(\pi _s\) and note that \(V_s\left[ g\left( X\right) \right] = {\varvec{\beta }}^\prime {\varvec{\Sigma }}_s {\varvec{\beta }}\). Equation 14 implies that \(\sigma _{ys}^2\) is defined as

\[ \sigma _{ys}^2 = {\varvec{\beta }}^\prime {\varvec{\Sigma }}_s {\varvec{\beta }} + \sigma _u^2 e^{\gamma _0} {\mathcal {M}}_s\left( t=\gamma _1\right) . \tag{30} \]

The squared correlation that assumes a linear relationship in \(\pi _s\) is

\[ \rho _s^2 = \frac{\sigma _{xys}^2}{\sigma _{xs}^2 \sigma _{ys}^2}. \tag{31} \]

Note that \(c = 3\) was chosen for the boundaries in \(\Omega _\mathrm{{c}}\) given that a standard normal distribution for \(X\) was examined, which implies that the quadratic polynomials were defined as \(\beta _2 = \pm \beta _1/6\) depending upon whether the relationship is concave (i.e., \(\beta _2<0\)) or convex (i.e., \(\beta _2>0\)). That is, the model assumes the quadratic maximum/minimum occurs at the extremes of the test score distribution; recall that 99.7% of observations fall between \(\pm 3\) for the standard normal distribution. Prior empirical research has estimated range-restriction-corrected validity coefficients in the range between 0.4 and 0.8 (Berry, Sackett, & Sund, 2013; Chernyshenko & Ones, 1999), and it is possible to find values of \(\beta _1\) that are consistent with prior research. In the case where \(X\sim N\left( 0,1\right) \), \(\beta _2 = \pm \beta _1/\left( 2 c\right) \), \(\gamma _0=0\), \(\gamma _1=0\), and \(\sigma _u^2=1\), \(\rho ^2 = \beta _1^2\left( \beta _1^2 + \frac{2c^2}{1+2c^2}\right) ^{-1}\), which implies that \(\beta _1 = \left( \frac{\rho ^2}{1-\rho ^2} \frac{2c^2}{1+2c^2}\right) ^{1/2}\). With \(c=3\), \(\rho = 0.4\) when \(\beta _1 = 0.425\) and \(\rho =0.8\) when \(\beta _1 = 1.298\). Consequently, the simulation study examines the bias of \(\rho _\mathrm{{c}}\) for \(\beta _1 \in \left[ 0.4,1.4\right] \), which yields values of \(\rho \) that are representative of estimates observed by applied researchers.
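The quantities in this subsection can be assembled into a short numerical sketch. The code below inverts the expression for \(\rho ^2\) to obtain \(\beta _1\), computes truncated standard normal moments with the recursion \(\mu _{ks} = \left( k-1\right) \mu _{k-2,s} + \left( x^*\right) ^{k-1}\lambda \), where \(\lambda = \phi \left( x^*\right) /\left[ 1-\Phi \left( x^*\right) \right] \), and then evaluates \(\rho \), \(\rho _s\), and \(\rho _\mathrm{{c}}\). Two points are assumptions rather than material from the text: Equation 1 is not reproduced in this Appendix, so the familiar direct range restriction formula \(\rho _\mathrm{{c}} = \rho _s k/\sqrt{1-\rho _s^2+\rho _s^2 k^2}\) with \(k=\sigma _x/\sigma _{xs}\) is used in its place, and all function and argument names are hypothetical.

```python
import math

def norm_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def norm_sf(z):
    """Upper tail probability 1 - Phi(z) of the standard normal."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))

def beta1_from_rho(rho, c):
    """Invert rho^2 = beta1^2 / (beta1^2 + 2c^2/(1+2c^2)); assumes gamma0 = gamma1 = 0, sigma_u^2 = 1."""
    k = 2.0 * c * c / (1.0 + 2.0 * c * c)
    return math.sqrt(rho**2 / (1.0 - rho**2) * k)

def truncated_moments(x_star):
    """Raw moments m[j] = E_s(X^j), j = 0..4, of N(0,1) truncated below at x_star."""
    lam = norm_pdf(x_star) / norm_sf(x_star)
    m = [1.0, lam]
    for k in range(2, 5):
        m.append((k - 1) * m[k - 2] + x_star ** (k - 1) * lam)
    return m

def var_g(m, beta1, beta2):
    """Variance of beta1*X + beta2*X^2 given raw moments m of X."""
    return (beta1**2 * (m[2] - m[1] ** 2)
            + 2.0 * beta1 * beta2 * (m[3] - m[1] * m[2])
            + beta2**2 * (m[4] - m[2] ** 2))

def correlations(beta1, beta2, x_star, gamma0=0.0, gamma1=0.0, sigma_u2=1.0):
    """Return (rho, rho_s, rho_c) for X ~ N(0,1) and selection on X > x_star."""
    # Unrestricted population: moments of N(0,1) and E[h(X)^2] from the normal MGF.
    m = [1.0, 0.0, 1.0, 0.0, 3.0]
    eh2 = math.exp(gamma0 + 0.5 * gamma1**2)
    vg = var_g(m, beta1, beta2)
    rho = math.sqrt(vg / (vg + sigma_u2 * eh2))
    # Restricted population: truncated moments and E_s[h(X)^2] from the truncated MGF.
    ms = truncated_moments(x_star)
    var_xs = ms[2] - ms[1] ** 2
    cov_xys = beta1 * var_xs + beta2 * (ms[3] - ms[1] * ms[2])
    eh2_s = eh2 * norm_sf(x_star - gamma1) / norm_sf(x_star)
    var_ys = var_g(ms, beta1, beta2) + sigma_u2 * eh2_s
    rho_s = cov_xys / math.sqrt(var_xs * var_ys)
    # ASSUMPTION: standard direct range restriction correction stands in for Equation 1.
    k = 1.0 / math.sqrt(var_xs)  # sigma_x / sigma_xs with sigma_x = 1
    rho_c = rho_s * k / math.sqrt(1.0 - rho_s**2 + rho_s**2 * k**2)
    return rho, rho_s, rho_c

c = 3.0
b1 = beta1_from_rho(0.4, c)
rho, rho_s, rho_c = correlations(b1, -b1 / (2.0 * c), x_star=0.0, gamma1=-0.5)
print(round(b1, 3), rho, rho_s, rho_c, rho - rho_c)
```

When \(\beta _2 = 0\) and \(\gamma _1 = 0\), the corrected value returned by `correlations` equals \(\rho \) exactly, which is a useful sanity check before exploring concave, convex, or heteroscedastic settings.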

Cite this article

Culpepper, S.A. An Improved Correction for Range Restricted Correlations Under Extreme, Monotonic Quadratic Nonlinearity and Heteroscedasticity. Psychometrika 81, 550–564 (2016). https://doi.org/10.1007/s11336-015-9466-9

DOI: https://doi.org/10.1007/s11336-015-9466-9