Abstract

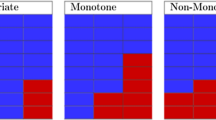

Missing data occur in many real-world studies. Knowing the type of missing-data mechanism is important for adopting an appropriate statistical analysis procedure. Many statistical methods assume that data are missing completely at random (MCAR) because of its simplicity. Therefore, it is necessary to test whether this assumption is satisfied before applying those procedures. In the literature, most procedures for testing MCAR were developed under the normality assumption, which is sometimes difficult to justify in practice. In this paper, we propose a nonparametric test of MCAR for incomplete multivariate data that does not require distributional assumptions. The proposed test is carried out by comparing the distributions of the observed data across different missing-pattern groups. We prove that the proposed test is consistent against any distributional differences in the observed data. Simulation results show that the proposed procedure keeps the Type I error well controlled at the nominal level for testing MCAR and also has good power against a variety of non-MCAR alternatives.

References

Chen, H. Y., & Little, R. (1999). A test of missing completely at random for generalised estimating equations with missing data. Biometrika, 86, 1–13.

Davison, A. C., & Hinkley, D. V. (1997). Bootstrap methods and their application. Cambridge: Cambridge University Press.

Efron, B., & Tibshirani, R. (1993). An introduction to the bootstrap. London: Chapman & Hall.

Fuchs, C. (1982). Maximum likelihood estimation and model selection in contingency tables with missing data. Journal of the American Statistical Association, 77, 270–278.

Jamshidian, M., & Jalal, S. (2010). Tests of homoscedasticity, normality and missing completely at random for incomplete multivariate data. Psychometrika, 75, 649–674.

Kim, K. H., & Bentler, P. M. (2002). Tests of homogeneity of means and covariance matrices for multivariate incomplete data. Psychometrika, 67, 609–624.

Little, R. J. A. (1988). A test of missing completely at random for multivariate data with missing values. Journal of the American Statistical Association, 83, 1198–1202.

Little, R. J. A., & Rubin, D. B. (2002). Statistical analysis with missing data (2nd ed.). New York: Wiley.

Qu, A., & Song, P. X. K. (2002). Testing ignorable missingness in estimating equation approaches for longitudinal data. Biometrika, 89, 841–850.

Rizzo, M. L., & Székely, G. J. (2010). DISCO analysis: A nonparametric extension of analysis of variance. The Annals of Applied Statistics, 4, 1034–1055.

Rubin, D. B. (1976). Inference and missing data. Biometrika, 63, 581–592.

Székely, G. J., & Rizzo, M. L. (2005). A new test for multivariate normality. Journal of Multivariate Analysis, 93, 58–80.

Acknowledgments

The authors thank the Editor, the Associate Editor, and the referees for their thoughtful and constructive comments, which have helped improve our article. This research has been supported in part by Institute of Education Sciences Grant R305D090019.

Appendix

Proof of Proposition 1

Denote the vector of the \(p\) random variables by \({\varvec{y}}\), the vector of missing indicators for these \(p\) variables by \({\varvec{r}}\), and the joint density function of \({\varvec{y}}\) and \({\varvec{r}}\) by \(f({\varvec{y}},{\varvec{r}})\). Further, let \({\varvec{r}}_i\) be the vector of missing indicators for the \(i\)th missing-pattern group, and let \(f_{i}({\varvec{y}})\) be the joint density function of all \(p\) variables (both observed and missing) in the \(i\)th missing-pattern group, \(i=1,\ldots ,s\), with \(F_i\) the corresponding distribution function. Then \(f_{i}({\varvec{y}})=f({\varvec{y}}|{\varvec{r}}_i)\).
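The missing-indicator vector \({\varvec{r}}\) and the missing-pattern groups defined above can be formed directly from a data matrix. The following is a minimal NumPy sketch, assuming missing entries are coded as NaN. The helper names `missing_pattern_groups` and `common_observed` are hypothetical and not part of the paper. Each group is returned with its indicator vector \({\varvec{r}}_i\), its rows, and its observed variables \(\mathbf{o}_i\). `common_observed` gives the shared set \(\mathbf{o}_{ij}\) that appears in the proof of Theorem 1.

```python
import numpy as np

def missing_pattern_groups(Y):
    """Group the rows of an n x p array Y (NaN = missing) by missing pattern.

    Returns a list of (r_i, rows_i, o_i): the 0/1 missing-indicator vector
    r_i of the group (1 = missing), the indices of the rows in the group,
    and the indices o_i of the variables observed in the group.
    """
    R = np.isnan(np.asarray(Y, dtype=float)).astype(np.int8)  # r for every row
    patterns, labels = np.unique(R, axis=0, return_inverse=True)
    labels = labels.reshape(-1)  # guard against version-dependent inverse shape
    groups = []
    for k, r_k in enumerate(patterns):
        rows_k = np.flatnonzero(labels == k)
        o_k = np.flatnonzero(r_k == 0)  # observed variables have indicator 0
        groups.append((r_k, rows_k, o_k))
    return groups

def common_observed(o_i, o_j):
    """o_ij: indices of the variables observed in both groups i and j."""
    return np.intersect1d(o_i, o_j)

# Usage with a small hypothetical data set (s = 3 missing-pattern groups).
Y = np.array([[1.0, 2.0, 3.0],
              [4.0, np.nan, 6.0],
              [7.0, 8.0, 9.0],
              [np.nan, np.nan, 1.0]])
for r_i, rows_i, o_i in missing_pattern_groups(Y):
    print(r_i, rows_i, o_i)
```

`np.unique` with `axis=0` returns the distinct patterns in sorted order. The group numbering is therefore arbitrary but fixed, which is all the test requires.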

We first prove that, if the missingness is MCAR, \(F_1=\cdots =F_s\). Based on the definition of MCAR, the missingness does not depend on the data, which implies \(f({\varvec{r}}|{\varvec{y}})=f({\varvec{r}})\). Therefore, \(f({\varvec{y}},{\varvec{r}})=f({\varvec{r}}|{\varvec{y}}) f({\varvec{y}})=f({\varvec{r}})f({\varvec{y}})\). Since \(f({\varvec{y}},{\varvec{r}})=f({\varvec{y}}|{\varvec{r}})f({\varvec{r}})\), we have \(f({\varvec{y}}|{\varvec{r}})=f({\varvec{y}})\). This further implies that \(f_{1}({\varvec{y}})=\cdots =f_{s}({\varvec{y}})\). In other words, \(F_1=\cdots =F_s\).

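Next, we prove that, if \(F_1=\cdots =F_s\), the missingness is MCAR. If \(F_1=\cdots =F_s\), then \(f_{1}({\varvec{y}})=\cdots =f_{s}({\varvec{y}})\), i.e., \(f({\varvec{y}}|{\varvec{r}}_1)=\cdots =f({\varvec{y}}|{\varvec{r}}_s)\). Because \({\varvec{r}}\) takes only the values \({\varvec{r}}_1,\ldots ,{\varvec{r}}_s\), we have \(f({\varvec{y}})=\sum _{i=1}^{s} f({\varvec{y}}|{\varvec{r}}_i)P({\varvec{r}}={\varvec{r}}_i)=f({\varvec{y}}|{\varvec{r}}_1)\), so \(f({\varvec{y}}|{\varvec{r}}_i)=f({\varvec{y}})\) for every \(i\). Therefore,

\[ f({\varvec{r}}|{\varvec{y}})=\frac{f({\varvec{y}}|{\varvec{r}})f({\varvec{r}})}{f({\varvec{y}})}=f({\varvec{r}}), \]

which shows that the missingness is independent of the data. Therefore, the missingness is MCAR.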
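Proposition 1 can be illustrated with a small simulation. This is a sketch under assumed settings, not part of the paper's study. In this hypothetical bivariate normal example, only \(y_2\) can be missing, so there are two missing-pattern groups and \(y_1\) is observed in both. Under MCAR the distribution of \(y_1\) should be the same in the two groups. When the chance of missingness depends on \(y_1\), it should not. The sample size, the correlation, and the two missingness models are assumptions chosen for illustration, and only group means are compared.

```python
import numpy as np

rng = np.random.default_rng(2015)
n = 20_000

# Hypothetical complete data: y1 is always observed; y2 is the variable
# that may go missing (it is generated only to make the setting concrete).
y1 = rng.standard_normal(n)
y2 = 0.6 * y1 + 0.8 * rng.standard_normal(n)

def y1_gap(y2_missing):
    """Absolute difference in the mean of y1 between the complete-case
    group and the group in which y2 is missing."""
    return abs(y1[~y2_missing].mean() - y1[y2_missing].mean())

# MCAR: y2 is missing with probability 0.3, independently of the data.
mcar_missing = rng.random(n) < 0.3
# Not MCAR: the chance that y2 is missing increases with y1 (an MAR mechanism).
mar_missing = rng.random(n) < 1.0 / (1.0 + np.exp(-2.0 * y1))

mcar_gap, mar_gap = y1_gap(mcar_missing), y1_gap(mar_missing)
print(f"MCAR gap: {mcar_gap:.3f}  MAR gap: {mar_gap:.3f}")
```

Under MCAR the gap should be close to zero up to sampling error. The data-dependent mechanism should produce a clearly nonzero gap. Comparing means checks only one feature of the distributions, whereas the proposed test compares whole distributions.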

Proof of Theorem 1

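Suppose the null hypothesis is false, say \(F_{k,\mathbf{o}_{kl}} \ne F_{l,\mathbf{o}_{kl}}\) for some \(k \ne l \in \{1,\ldots ,s\}\) and \(\mathbf{o}_{kl} \ne \emptyset \). Since \(Q=\frac{B/(s-1)}{W/(n-s)},\, B=\sum _{\begin{array}{c} 1 \le i<j\le s \\ \mathbf{o}_{ij} \ne \emptyset \end{array}} (\frac{n_in_j}{2n})d (\mathbb {Y}_{i,\mathbf{o}_{ij}},\mathbb {Y}_{j,\mathbf{o}_{ij}})\), and \(d(\mathbb {Y}_{i,\mathbf{o}_{ij}},\mathbb {Y}_{j,\mathbf{o}_{ij}})\) is always nonnegative, we have

\[ B \ge \frac{n_kn_l}{2n}\, d(\mathbb {Y}_{k,\mathbf{o}_{kl}},\mathbb {Y}_{l,\mathbf{o}_{kl}}). \]

Therefore,

\[ P(Q>c_{\alpha }) \ge P\left( \frac{n_kn_l}{2n(s-1)}\, d(\mathbb {Y}_{k,\mathbf{o}_{kl}},\mathbb {Y}_{l,\mathbf{o}_{kl}}) > \frac{c_{\alpha }W}{n-s}\right) = P\left( d(\mathbb {Y}_{k,\mathbf{o}_{kl}},\mathbb {Y}_{l,\mathbf{o}_{kl}}) > \frac{2n(s-1)c_{\alpha }W}{n_kn_l(n-s)}\right) . \]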

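Since \(W=\sum _{i=1}^{s} n_{i}g(\mathbb {Y}_{i,\mathbf{o}_i},\mathbb {Y}_{i,\mathbf{o}_i})/2\) and \(n_ig(\mathbb {Y}_{i,\mathbf{o}_i},\mathbb {Y}_{i,\mathbf{o}_i})/(n_i-1)\) is a \(U\)-statistic, the properties of \(U\)-statistics give

\[ \frac{n_i\, g(\mathbb {Y}_{i,\mathbf{o}_i},\mathbb {Y}_{i,\mathbf{o}_i})}{n_i-1} \xrightarrow{p} \eta _i, \quad i=1,\ldots ,s, \]

where \(\eta _{i}\) is a constant. This implies that

\[ \frac{W}{n-s}=\sum _{i=1}^{s}\frac{n_i-1}{2(n-s)}\cdot \frac{n_i\, g(\mathbb {Y}_{i,\mathbf{o}_i},\mathbb {Y}_{i,\mathbf{o}_i})}{n_i-1} \xrightarrow{p} \frac{1}{2}\sum _{i=1}^{s}\lambda _i\eta _i, \]

where \(\lambda _i=\lim _{n\rightarrow \infty }\frac{n_i}{n_{1}+\cdots +n_{s}}\). Therefore,

\[ P(Q>c_{\alpha }) \ge P\left( d(\mathbb {Y}_{k,\mathbf{o}_{kl}},\mathbb {Y}_{l,\mathbf{o}_{kl}}) > \frac{c_{\alpha }(s-1)}{n}\cdot \frac{\sum _{i=1}^{s}\lambda _i\eta _i+o_p(1)}{\lambda _k\lambda _l+o(1)}\right) . \tag{4} \]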

Next we show that \(c_{\alpha }\) is bounded above by a constant which does not depend on \(n\). Recall that \(Q=\frac{B/(s-1)}{W/(n-s)},\, B=\sum _{\begin{array}{c} 1 \le i<j\le s \\ \mathbf{o}_{ij} \ne \emptyset \end{array}} (\frac{n_in_j}{2n})d(\mathbb {Y}_{i,\mathbf{o}_{ij}},\mathbb {Y}_{j,\mathbf{o}_{ij}})\). Denote the number of the pairs \((i,j)\) satisfying \(1 \le i<j\le s \) and \( \mathbf{o}_{ij} \ne \emptyset \) by \(t\). In other words, there are \(t\) terms in \(B\). Clearly, \(t \le s(s-1)/2\). Therefore, for any \(k\),

and

Based on Székely and Rizzo (2005), under the null hypothesis of equal distributions, \(n_in_jd(\mathbb {Y}_{i,\mathbf{o}_{ij}},\mathbb {Y}_{j,\mathbf{o}_{ij}})/(n_i+n_j)\) converges in distribution to a quadratic form

\[ \sum _{l=1}^{\infty }\omega _{l}Z_{l}^{2}, \]

where the \(Z_l\) are independent standard normal random variables, and the \(\omega _{l}\) are positive constants that do not depend on \(n\). Therefore, we can choose \(k=k_{\alpha }\), a constant that does not depend on \(n\), such that

For such a \(k_{\alpha }\), we have \(\lim _{n\rightarrow \infty }P(Q>k_{\alpha }) \le \alpha \) under \(H_0\) based on (5). Since \(\lim _{n\rightarrow \infty }P(Q>c_{\alpha })=\alpha \) under \(H_0\), it follows that \(\lim _{n\rightarrow \infty } c_{\alpha } \le k_{\alpha }\). Therefore, \(c_{\alpha }\) is bounded above by \(k_{\alpha }\), a constant that does not depend on \(n\).
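The argument that \(c_{\alpha }\) stays bounded relies on the scaled statistic \(n_in_jd(\mathbb {Y}_{i,\mathbf{o}_{ij}},\mathbb {Y}_{j,\mathbf{o}_{ij}})/(n_i+n_j)\) having a null distribution that settles down as \(n\) grows. A small Monte Carlo sketch can make this visible. It rests on the following assumptions:

- \(g(A,B)\) is the mean Euclidean distance between the rows of \(A\) and \(B\).
- \(d(A,B)=2g(A,B)-g(A,A)-g(B,B)\), the energy-distance form used in DISCO analysis (Rizzo and Székely 2010).
- Both groups are hypothetical bivariate standard normal samples of equal size \(n\), so the null hypothesis holds.

The helper `null_quantile` is hypothetical.

```python
import numpy as np

def g(A, B):
    """Mean Euclidean distance between the rows of A and the rows of B."""
    diff = A[:, None, :] - B[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1)).mean()

def d(A, B):
    """Assumed energy-distance form: 2g(A, B) - g(A, A) - g(B, B)."""
    return 2 * g(A, B) - g(A, A) - g(B, B)

def null_quantile(n, reps=200, q=0.95, seed=0):
    """Monte Carlo q-quantile of n_i n_j d / (n_i + n_j), n_i = n_j = n, under H0."""
    rng = np.random.default_rng(seed)
    stats = np.empty(reps)
    for r in range(reps):
        A = rng.standard_normal((n, 2))  # both groups come from the same
        B = rng.standard_normal((n, 2))  # distribution, so H0 holds
        stats[r] = n * n * d(A, B) / (n + n)
    return np.quantile(stats, q)

quantiles = {n: null_quantile(n) for n in (25, 100, 300)}
for n, value in quantiles.items():
    print(f"n = {n:4d}: 95% quantile = {value:.3f}")
```

If the sketch behaves as expected, the printed 95% quantiles change little across sample sizes. This is consistent with choosing \(k_{\alpha }\) independently of \(n\). Under these assumptions, the mean of the scaled statistic equals \(E\|X-X'\|\) for every \(n\). The reason is that the zero diagonal terms give \(E\,g(A,A)=(n-1)E\|X-X'\|/n\).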

Applying this bound on \(c_{\alpha }\) to (4), we have \(c_{\alpha }(s-1) \sum _{i=1}^s \lambda _i\eta _i/(n\lambda _k\lambda _l) \rightarrow 0\) as \(n \rightarrow \infty \). Since \(d(\mathbb {Y}_{k,\mathbf{o}_{kl}},\mathbb {Y}_{l,\mathbf{o}_{kl}})\) is a V-statistic, it converges in probability to \(0\) if \(F_{k,\mathbf{o}_{kl}}= F_{l,\mathbf{o}_{kl}}\), and to a positive constant if \(F_{k,\mathbf{o}_{kl}} \ne F_{l,\mathbf{o}_{kl}}\). Therefore, under the alternative,

\[ \lim _{n\rightarrow \infty }P\left( d(\mathbb {Y}_{k,\mathbf{o}_{kl}},\mathbb {Y}_{l,\mathbf{o}_{kl}}) > \frac{2n(s-1)c_{\alpha }W}{n_kn_l(n-s)}\right) =1, \]

which implies that \(\lim _{n\rightarrow \infty }P(Q>c_{\alpha })=1\). As a result, our \(Q\) test is consistent. This completes the proof.
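The statistic \(Q\) analyzed in this proof can be computed directly from an incomplete data matrix. The following is a minimal NumPy sketch under stated assumptions, not the authors' code:

- Missing values are coded as NaN.
- Rows with every variable missing have been removed.
- There are at least two missing-pattern groups.
- \(g\) and \(d\) take the energy-distance forms of DISCO analysis (Rizzo and Székely 2010). Here \(g(A,B)\) is the mean Euclidean distance between rows and \(d(A,B)=2g(A,B)-g(A,A)-g(B,B)\).

The function name `q_statistic` and the simulated data are hypothetical. The critical value \(c_{\alpha }\) is not computed here.

```python
import numpy as np

def g(A, B):
    """Mean Euclidean distance between the rows of A and the rows of B."""
    diff = A[:, None, :] - B[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1)).mean()

def q_statistic(Y):
    """Q = [B/(s-1)] / [W/(n-s)] for an n x p array Y with NaN for missing values."""
    Y = np.asarray(Y, dtype=float)
    R = np.isnan(Y).astype(np.int8)
    patterns, labels = np.unique(R, axis=0, return_inverse=True)
    labels = labels.reshape(-1)
    # One entry per missing-pattern group: (rows of the group, observed mask o_i).
    groups = [(Y[labels == k], patterns[k] == 0) for k in range(len(patterns))]
    n, s = Y.shape[0], len(groups)

    # W: within-group term, each group measured on its own observed variables o_i.
    W = sum(len(Yi) * g(Yi[:, oi], Yi[:, oi]) / 2 for Yi, oi in groups)

    # B: between-group term over pairs whose common observed set o_ij is nonempty.
    B = 0.0
    for i in range(s):
        for j in range(i + 1, s):
            (Yi, oi), (Yj, oj) = groups[i], groups[j]
            oij = oi & oj
            if not oij.any():
                continue
            Ai, Aj = Yi[:, oij], Yj[:, oij]
            d_ij = 2 * g(Ai, Aj) - g(Ai, Ai) - g(Aj, Aj)  # assumed form of d
            B += len(Yi) * len(Yj) / (2 * n) * d_ij
    return (B / (s - 1)) / (W / (n - s))

# Hypothetical data: y1 always observed, y2 subject to missingness.
rng = np.random.default_rng(7)
n = 300
y1 = rng.standard_normal(n)
y2 = 0.6 * y1 + 0.8 * rng.standard_normal(n)
Y_mcar = np.column_stack([y1, y2])
Y_mar = Y_mcar.copy()
Y_mcar[rng.random(n) < 0.3, 1] = np.nan                             # MCAR
Y_mar[rng.random(n) < 1.0 / (1.0 + np.exp(-2.0 * y1)), 1] = np.nan  # depends on y1

q_mcar, q_mar = q_statistic(Y_mcar), q_statistic(Y_mar)
print(f"Q under MCAR: {q_mcar:.2f}  Q under a y1-dependent mechanism: {q_mar:.2f}")
```

With these settings, the data-dependent mechanism should give a much larger \(Q\) than the MCAR mechanism, in line with the consistency result. Once \(d(\mathbb {Y}_{k,\mathbf{o}_{kl}},\mathbb {Y}_{l,\mathbf{o}_{kl}})\) converges to a positive constant, \(B\) grows linearly in \(n\) while \(W/(n-s)\) stays bounded.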

Cite this article

Li, J., Yu, Y. A Nonparametric Test of Missing Completely at Random for Incomplete Multivariate Data. Psychometrika 80, 707–726 (2015). https://doi.org/10.1007/s11336-014-9410-4
