Abstract

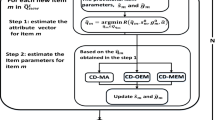

Item replenishing is essential for item bank maintenance in cognitive diagnostic computerized adaptive testing (CD-CAT). In regular CAT, online calibration is commonly used to calibrate new items continuously. However, until now no publicly available study has addressed online calibration for CD-CAT. This study therefore investigates the possibility of extending some current CAT strategies to CD-CAT. Three representative online calibration methods were investigated: Method A (Stocking, 1988), marginal maximum likelihood estimation with one EM cycle (OEM) (Wainer & Mislevy, 1990), and marginal maximum likelihood estimation with multiple EM cycles (MEM) (Ban, Hanson, Wang, Yi, & Harris, 2001). The objective of the current paper is to generalize these methods to the CD-CAT context under certain theoretical justifications; the new methods are denoted CD-Method A, CD-OEM, and CD-MEM, respectively. Simulation studies were conducted to compare the three methods in terms of item-parameter recovery. The results show that all three methods recover item parameters accurately and that CD-Method A performs best when the items have smaller slipping and guessing parameters. This research is a starting point for introducing online calibration in CD-CAT, and further studies are proposed to investigate factors such as different sample sizes, cognitive diagnostic models, and attribute hierarchical structures.


References

Ban, J.-C., Hanson, B.H., Wang, T., Yi, Q., & Harris, D.J. (2001). A comparative study of on-line pretest item-calibration/scaling methods in computerized adaptive testing. Journal of Educational Measurement, 38, 191–212.

Ban, J.-C., Hanson, B.H., Yi, Q., & Harris, D.J. (2002). Data sparseness and online pretest item calibration/scaling methods in CAT (ACT Research Report 02-01). Iowa City, IA: ACT, Inc. Available at http://www.eric.ed.gov/ERICDocs/data/ericdocs2sql/content_storage_01/0000019b/80/19/da/e9.pdf.

Chang, Y.-C.I., & Lu, H. (2010). Online calibration via variable length computerized adaptive testing. Psychometrika, 75, 140–157.

Cheng, Y. (2009). When cognitive diagnosis meets computerized adaptive testing. Psychometrika, 74, 619–632.

Cheng, Y., & Chang, H. (2007). The modified maximum global discrimination index method for cognitive diagnostic computerized adaptive testing. Paper presented at the 2007 GMAC Conference on Computerized Adaptive Testing, McLean, USA, June.

DiBello, L.V., Stout, W.F., & Roussos, L.A. (1995). Unified cognitive/psychometric diagnostic assessment likelihood-based classification techniques. In P. Nichols, S. Chipman, & R. Brennan (Eds.), Cognitively diagnostic assessments (pp. 361–389). Hillsdale: Erlbaum.

de la Torre, J. (2009). DINA model and parameter estimation: a didactic. Journal of Educational and Behavioral Statistics, 34, 115–130.

de la Torre, J., & Douglas, J.A. (2004). Higher-order latent trait models for cognitive diagnosis. Psychometrika, 69, 333–353.

Doignon, J.P., & Falmagne, J.C. (1999). Knowledge spaces. New York: Springer.

Embretson, S. (1984). A general latent trait model for response processes. Psychometrika, 49, 175–186.

Embretson, S., & Reise, S. (2000). Item response theory for psychologists. Mahwah: Erlbaum.

Fedorov, V.V. (1972). Theory of optimal design. New York: Academic Press.

Haertel, E.H. (1989). Using restricted latent class models to map the skill structure of achievement items. Journal of Educational Measurement, 26, 333–352.

Hartz, S.M. (2002). A Bayesian framework for the unified model for assessing cognitive abilities: Blending theory with practicality (Unpublished doctoral dissertation). University of Illinois at Urbana-Champaign, Urbana-Champaign, IL.

Junker, B.W., & Sijtsma, K. (2001). Cognitive assessment models with few assumptions, and connections with nonparametric item response theory. Applied Psychological Measurement, 25, 258–272.

Leighton, J.P., Gierl, M.J., & Hunka, S.M. (2004). The attribute hierarchy method for cognitive assessment: a variation on Tatsuoka’s rule-space approach. Journal of Educational Measurement, 41, 205–237.

Liu, H., You, X., Wang, W., Ding, S., & Chang, H. (2010). Large-scale applications of cognitive diagnostic computerized adaptive testing in China. Paper presented at the annual meeting of the National Council on Measurement in Education, Denver, CO, April.

Macready, G.B., & Dayton, C.M. (1977). The use of probabilistic models in the assessment of mastery. Journal of Educational Statistics, 2, 99–120.

Makransky, G. (2009). An automatic online calibration design in adaptive testing. Paper presented at the 2007 GMAC Conference on Computerized Adaptive Testing, McLean, USA, June.

Maris, E. (1999). Estimating multiple classification latent class models. Psychometrika, 64, 187–212.

McGlohen, M.K. (2004). The application of cognitive diagnosis and computerized adaptive testing to a large-scale assessment (Unpublished doctoral dissertation). University of Texas at Austin, Austin, TX.

McGlohen, M.K., & Chang, H. (2008). Combining computer adaptive testing technology with cognitively diagnostic assessment. Behavior Research Methods, 40, 808–821.

Rupp, A., & Templin, J. (2008). The effects of Q-matrix misspecification on parameter estimates and classification accuracy in the DINA model. Educational and Psychological Measurement, 68, 78–96.

Silvey, S.D. (1980). Optimal design. London: Chapman and Hall.

Stocking, M.L. (1988). Scale drift in on-line calibration (Research Rep. 88-28). Princeton, NJ: ETS.

Tatsuoka, K.K. (1995). Architecture of knowledge structures and cognitive diagnosis: a statistical pattern classification approach. In P. Nichols, S. Chipman, & R. Brennan (Eds.), Cognitively diagnostic assessments (pp. 327–359). Hillsdale: Erlbaum.

Tatsuoka, C. (2002). Data analytic methods for latent partially ordered classification models. Journal of the Royal Statistical Society. Series C, Applied Statistics, 51, 337–350.

Tatsuoka, K.K., & Tatsuoka, M.M. (1997). Computerized cognitive diagnostic adaptive testing: effect on remedial instruction as empirical validation. Journal of Educational Measurement, 34, 3–20.

Templin, J., & Henson, R. (2006). Measurement of psychological disorders using cognitive diagnosis models. Psychological Methods, 11, 287–305.

Wainer, H. (1990). Computerized adaptive testing: A primer. Hillsdale: Erlbaum.

Wainer, H., & Mislevy, R.J. (1990). Item response theory, item calibration, and proficiency estimation. In H. Wainer (Ed.), Computerized adaptive testing: A primer (pp. 65–102). Hillsdale: Erlbaum.

Weiss, D.J. (1982). Improving measurement quality and efficiency with adaptive testing. Applied Psychological Measurement, 6, 473–492.

Xu, X., Chang, H., & Douglas, J. (2003). A simulation study to compare CAT strategies for cognitive diagnosis. Paper presented at the annual meeting of the National Council on Measurement in Education, Chicago, IL, April.

Acknowledgements

This study was conducted while the first author was a visiting scholar at the University of Illinois at Urbana-Champaign (UIUC). He would like to thank the China Scholarship Council (CSC) for this opportunity and for one year of financial support. Part of this paper was originally presented at the 2010 annual meeting of the Psychometric Society, Athens, GA. The authors are indebted to the editor, the associate editor, and three anonymous reviewers for their constructive suggestions and comments on an earlier version of the manuscript.


Appendices

Appendix A. CD-Method A Online Calibration Method for the DINA Model in CD-CAT

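To obtain the conditional maximum likelihood estimates of \(g_{j}\) and \(s_{j}\), we take the derivatives of \(\ln L_{j}\) with respect to \(g_{j}\) and \(s_{j}\):

\[ \frac{\partial \ln L_{j}}{\partial g_{j}} = \sum_{i} (1 - \hat{\eta}_{ij}) \left[ \frac{u_{ij}}{g_{j}} - \frac{1 - u_{ij}}{1 - g_{j}} \right], \qquad \text{(A.1)} \]

\[ \frac{\partial \ln L_{j}}{\partial s_{j}} = \sum_{i} \hat{\eta}_{ij} \left[ \frac{1 - u_{ij}}{s_{j}} - \frac{u_{ij}}{1 - s_{j}} \right], \qquad \text{(A.2)} \]

where the sums run over the examinees who take the new item j.

Equations (A.1) and (A.2) can be simplified as follows:

\[ \frac{\partial \ln L_{j}}{\partial g_{j}} = \sum_{i} (1 - \hat{\eta}_{ij}) \frac{u_{ij} - g_{j}}{g_{j}(1 - g_{j})}, \qquad \text{(A.3)} \]

\[ \frac{\partial \ln L_{j}}{\partial s_{j}} = \sum_{i} \hat{\eta}_{ij} \frac{1 - s_{j} - u_{ij}}{s_{j}(1 - s_{j})}. \qquad \text{(A.4)} \]

Thus, maximizing \(\ln L_{j}\) with respect to \(g_{j}\) and \(s_{j}\) is equivalent to solving the following equations for \(g_{j}\) and \(s_{j}\):

\[ \sum_{i} (1 - \hat{\eta}_{ij}) (u_{ij} - g_{j}) = 0, \qquad \text{(A.5)} \]

\[ \sum_{i} \hat{\eta}_{ij} (1 - s_{j} - u_{ij}) = 0. \qquad \text{(A.6)} \]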

Equation (A.5) gives the estimator \(\hat{g}_{j} = \frac{n_{2}}{n_{1} + n_{2}}\), where \(n_{1}\) is the number of examinees who take the new item j and satisfy the condition “\(\hat{\eta}_{ij} = 0\) and \(u_{ij} = 0\)”, and \(n_{2}\) is the number of examinees who take the new item j and satisfy the condition “\(\hat{\eta}_{ij} = 0\) and \(u_{ij} = 1\)”. Similarly, Equation (A.6) gives the estimator \(\hat{s}_{j} = \frac{n_{3}}{n_{3} + n_{4}}\), where \(n_{3}\) is the number of examinees who take the new item j and satisfy the condition “\(\hat{\eta}_{ij} = 1\) and \(u_{ij} = 0\)”, and \(n_{4}\) is the number of examinees who take the new item j and satisfy the condition “\(\hat{\eta}_{ij} = 1\) and \(u_{ij} = 1\)”. Note that if \(n_{1} + n_{2} = 0\) or \(n_{3} + n_{4} = 0\), we set \(\hat{g}_{j}\) or \(\hat{s}_{j}\), respectively, to \(\frac{\mathit{Lower}\_\mathit{Bound} + \mathit{Upper}\_\mathit{Bound}}{2}\).

Therefore, for every new item, once the values of \(n_{1}\), \(n_{2}\), \(n_{3}\) and \(n_{4}\) are calculated, the estimators \(\hat{g}_{j}\) and \(\hat{s}_{j}\) can be easily computed.
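The counting estimator described above can be sketched in Python as follows. This is a minimal illustration, not the authors' code: it assumes the ideal responses \(\hat{\eta}_{ij}\) (0/1) of the examinees who took new item j have already been derived from their estimated KSs, and the function and argument names (`cd_method_a`, `lower_bound`, `upper_bound`) are hypothetical. The bounds are supplied by the caller because their values are fixed elsewhere in the study.

```python
def cd_method_a(eta_hat, responses, lower_bound, upper_bound):
    """Sketch of CD-Method A estimates (g_hat, s_hat) for one new DINA item.

    eta_hat     -- 0/1 ideal responses of the examinees who took item j,
                   computed from their *estimated* knowledge states
    responses   -- 0/1 observed responses of the same examinees to item j
    lower_bound, upper_bound -- hypothetical names for the parameter bounds;
                   their midpoint is used when a group is empty
    """
    if len(eta_hat) != len(responses):
        raise ValueError("eta_hat and responses must have the same length")
    n1 = n2 = n3 = n4 = 0
    for eta, u in zip(eta_hat, responses):
        if eta not in (0, 1) or u not in (0, 1):
            raise ValueError("eta_hat and responses must contain only 0/1")
        if eta == 0 and u == 0:
            n1 += 1  # lacks a required attribute, answers incorrectly
        elif eta == 0:
            n2 += 1  # lacks a required attribute, answers correctly (guess)
        elif u == 0:
            n3 += 1  # masters all required attributes, answers incorrectly (slip)
        else:
            n4 += 1  # masters all required attributes, answers correctly
    midpoint = (lower_bound + upper_bound) / 2
    g_hat = n2 / (n1 + n2) if n1 + n2 > 0 else midpoint
    s_hat = n3 / (n3 + n4) if n3 + n4 > 0 else midpoint
    return g_hat, s_hat
```

For example, `cd_method_a([0, 0, 0, 1, 1, 1, 1, 0], [0, 1, 1, 1, 1, 0, 1, 0], 0.0, 0.5)` counts \(n_1 = 2\), \(n_2 = 2\), \(n_3 = 1\), \(n_4 = 3\) and returns `(0.5, 0.25)`.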

Appendix B. CD-OEM Online Calibration Method for the DINA Model in CD-CAT

To obtain the estimates of \(g_{j}\) and \(s_{j}\), we take the derivative of \(l_{j}(\mathbf{u}_{j})\) with respect to \(\Delta_{j}\) (\(\Delta_{j} = g_{j}, s_{j}\)):

Because

Equation (B.1) becomes

where \(I_{jl}\) is the expected number of examinees who take the new item j and have the KS \(\boldsymbol{\alpha}_{l}\), and \(R_{jl}\) is the expected number of examinees who correctly answer the new item j and have the KS \(\boldsymbol{\alpha}_{l}\).
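In an EM-style calibration, the per-KS expected counts just described (written \(I_{jl}\) and \(R_{jl}\) here) are sums of posterior KS probabilities. The NumPy sketch below assumes a posterior matrix whose row i holds \(P(\boldsymbol{\alpha}_{l} \mid \mathbf{u}_{i})\) for each examinee i who took new item j; how that posterior is obtained (which items and which provisional parameters enter it) is not shown, and the function name `expected_counts_per_ks` is hypothetical.

```python
import numpy as np


def expected_counts_per_ks(posterior, responses):
    """Per-KS expected counts for one new item j (illustrative sketch).

    posterior -- array of shape (n_j, L); row i is the posterior distribution
                 P(alpha_l | u_i) over the L knowledge states for examinee i
    responses -- array of shape (n_j,); 0/1 responses of those examinees to item j
    Returns (I, R): I[l] is the expected number of examinees with KS alpha_l,
    R[l] is the expected number of them who answered item j correctly.
    """
    posterior = np.asarray(posterior, dtype=float)
    responses = np.asarray(responses, dtype=float)
    if posterior.shape[0] != responses.shape[0]:
        raise ValueError("one posterior row is needed per response")
    I = posterior.sum(axis=0)   # sum_i P(alpha_l | u_i)
    R = responses @ posterior   # sum_i u_ij * P(alpha_l | u_i)
    return I, R
```

Because each posterior row sums to 1, the entries of `I` add up to the number of examinees who took the item.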

In addition, the new item j divides all possible KSs into two categories: the first category is \(\eta_{lj} = 0\) (examinees whose KS \(\boldsymbol{\alpha}_{l}\) does not include mastery of all the required attributes of the new item j), and the second is \(\eta_{lj} = 1\) (examinees whose KS \(\boldsymbol{\alpha}_{l}\) includes mastery of all the required attributes of the new item j). Consequently, Equation (B.3) turns into

Since

Equation (B.4) changes into

where \(I_{j}^{(0)}\) is the expected number of examinees who take the new item j and lack mastery of at least one of its required attributes, and \(R_{j}^{(0)}\) is the expected number of examinees who correctly answer the new item j and lack mastery of at least one of its required attributes. Similarly, \(I_{j}^{(1)}\) and \(R_{j}^{(1)}\) are the corresponding expected numbers for the examinees who have mastered all the required attributes of the new item j. The equation \(I_{j}^{(0)} + I_{j}^{(1)} = n_{j}\) (\(j = 1, 2, \ldots, m\)) holds for every new item, where \(n_{j}\) is the number of examinees who take the new item j.
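Which KSs fall into the mastery group for a new item depends only on the item's required attributes (its row of the Q-matrix). Because the attributes are treated as independent in this setting, all \(2^K\) KSs are candidates. The short Python sketch below enumerates them and applies the DINA conjunctive rule; the function name `split_knowledge_states` and the q-vector input format are assumptions for illustration.

```python
from itertools import product


def split_knowledge_states(q_vector):
    """Enumerate all 2**K knowledge states and split them by one new item.

    q_vector -- 0/1 list of length K; q_vector[k] == 1 means attribute k is
                required by the item (hypothetical input format)
    Returns (non_masters, masters): KSs whose DINA ideal response to the item
    is 0 and 1, respectively, in the order produced by itertools.product.
    """
    non_masters, masters = [], []
    for alpha in product((0, 1), repeat=len(q_vector)):
        # DINA ideal response: 1 only if every required attribute is mastered
        eta = all(a == 1 for a, q in zip(alpha, q_vector) if q == 1)
        (masters if eta else non_masters).append(alpha)
    return non_masters, masters
```

For a three-attribute item requiring attributes 1 and 3 (`[1, 0, 1]`), only `(1, 0, 1)` and `(1, 1, 1)` fall in the mastery group; the other six KSs do not.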

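Equation (B.6) results in the estimators of \(g_{j}\) and \(s_{j}\):

\[ \hat{g}_{j} = \frac{R_{j}^{(0)}}{I_{j}^{(0)}}, \qquad \hat{s}_{j} = \frac{I_{j}^{(1)} - R_{j}^{(1)}}{I_{j}^{(1)}}. \]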

Therefore, for the new item j, once the values of \(I_{j}^{(0)}\), \(R_{j}^{(0)}\), \(I_{j}^{(1)}\) and \(R_{j}^{(1)}\) are calculated, the estimators \(\hat{g}_{j}\) and \(\hat{s}_{j}\) can be easily computed. Note, however, that calculating these four values requires the item parameters of the new items. Consequently, this study sets the initial guessing and slipping parameters of all new items to \(\frac{\mathit{Lower}\_\mathit{Bound} + \mathit{Upper}\_\mathit{Bound}}{2}\).
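Given per-KS expected counts and each KS's ideal response to new item j, the four aggregated counts and the estimators \(\hat{g}_{j} = R_{j}^{(0)}/I_{j}^{(0)}\) and \(\hat{s}_{j} = (I_{j}^{(1)} - R_{j}^{(1)})/I_{j}^{(1)}\) (the standard DINA M-step solutions) follow directly. The sketch below is illustrative only: the function name `cd_oem_m_step` is hypothetical, the E-step that produces the counts is not shown, and falling back to the midpoint of the bounds when a group has zero expected size is an assumption borrowed from the CD-Method A rule rather than something stated here for CD-OEM.

```python
def cd_oem_m_step(I, R, eta, lower_bound, upper_bound):
    """One M-step for a new DINA item from per-KS expected counts (sketch).

    I, R  -- per-KS expected counts: I[l] examinees with KS l who took the
             item, R[l] of those expected to answer it correctly
    eta   -- 0/1 ideal response of each KS to the item (same order as I, R)
    lower_bound, upper_bound -- hypothetical bounds; their midpoint is an
             assumed fallback when a group has zero expected size
    """
    if not (len(I) == len(R) == len(eta)):
        raise ValueError("I, R and eta must have the same length")
    I0 = sum(i for i, e in zip(I, eta) if e == 0)  # expected non-masters
    R0 = sum(r for r, e in zip(R, eta) if e == 0)  # ... answering correctly
    I1 = sum(i for i, e in zip(I, eta) if e == 1)  # expected masters
    R1 = sum(r for r, e in zip(R, eta) if e == 1)  # ... answering correctly
    midpoint = (lower_bound + upper_bound) / 2
    g_hat = R0 / I0 if I0 > 0 else midpoint
    s_hat = (I1 - R1) / I1 if I1 > 0 else midpoint
    return g_hat, s_hat
```

In an OEM-style procedure this step is run once per new item; an MEM-style procedure would alternate it with a fresh E-step until the estimates stabilize.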

Appendix C. Attributes for the Level-2 English Proficiency Test


Cite this article

Chen, P., Xin, T., Wang, C. et al. Online Calibration Methods for the DINA Model with Independent Attributes in CD-CAT. Psychometrika 77, 201–222 (2012). https://doi.org/10.1007/s11336-012-9255-7


DOI: https://doi.org/10.1007/s11336-012-9255-7